Contents

- Named Entity Recognition

- Relation Extraction

- Other IE Tasks

- Conclusion

- information extraction

- Given this: “Brasilia, the Brazilian capital, was founded in 1960.”

- Obtain this:

- capital(Brazil, Brasilia)

- founded(Brasilia, 1960)

- Main goal: turn text into structured data

- applications

- Stock analysis

- Gather information from news and social media

- Summarise texts into a structured format

- Decide whether to buy/sell at current stock price

- Medical research

- Obtain information from articles about diseases and treatments

- Decide which treatment to apply for new patient

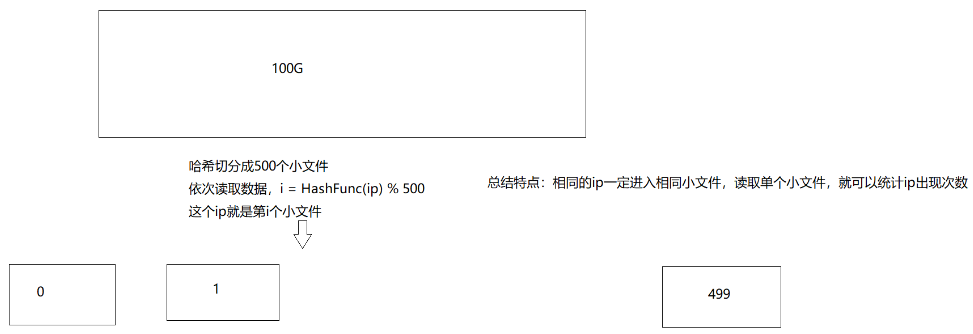

- how

- Two steps:

- Named Entity Recognition (NER): find out entities such as “Brasilia” and “1960”

- Relation Extraction: use context to find the relation between “Brasilia” and “1960” (“founded”)

- machine learning in IE

- Named Entity Recognition (NER): sequence models such as RNNs, HMMs or CRFs.

- Relation Extraction: mostly classifiers, either binary or multi-class.

- This lecture: how to frame these two tasks in order to apply sequence labellers and classifiers.

Named Entity Recognition

- typical entity tags (which tags to use depends on the domain)

- PER (person): people, characters

- ORG (organisation): companies, sports teams

- LOC (natural location): regions, mountains, seas

- GPE (geo-political entity, i.e. man-made location): countries, states, provinces (some tagsets label these as LOC)

- FAC (facility): bridges, buildings, airports

- VEH (vehicle): planes, trains, cars

- The tag set is application-dependent: some domains deal with specific entities, e.g. proteins and genes

- NER as sequence labelling

- NE tags can be ambiguous:

- “Washington” can be a person, a location or a political entity

- A similar problem arises in POS tagging

- Possible solution: incorporate context

- Can we use a sequence tagger for this (e.g. an HMM)?

- Not directly, as entities can span multiple tokens (multiple words)

- Solution: modify the tag set

- IO (inside, outside) tagging

- [ORG American Airlines], a unit of [ORG AMR Corp.], immediately matched the move, spokesman [PER Tim Wagner] said.

- ‘I-ORG’ represents a token that is inside an entity (ORG in this case).

- All tokens that are not part of an entity get the ‘O’ tag (for outside).

- Cannot differentiate between:

- a single entity with multiple tokens

- multiple adjacent entities with single tokens
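A minimal sketch of the limitation above, assuming entities are given as `(start, end, type)` token spans with an exclusive end (a representation chosen here for illustration; `io_tags` is a hypothetical helper). One two-token entity and two adjacent one-token entities end up with identical IO tags:

```python
def io_tags(n_tokens, spans):
    """Convert (start, end, type) token spans (end exclusive) to IO tags."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        for i in range(start, end):
            tags[i] = f"I-{label}"
    return tags


tokens = ["spokesman", "Tim", "Wagner", "said"]
one_entity = io_tags(len(tokens), [(1, 3, "PER")])                  # "Tim Wagner"
two_entities = io_tags(len(tokens), [(1, 2, "PER"), (2, 3, "PER")])  # "Tim", "Wagner"

print(one_entity)                  # ['O', 'I-PER', 'I-PER', 'O']
print(one_entity == two_entities)  # True: the IO tags cannot tell them apart
```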

- IOB (inside, outside, beginning) tagging

- [ORG American Airlines], a unit of [ORG AMR Corp.], immediately matched the move, spokesman [PER Tim Wagner] said.

- B-ORG represents the beginning of an ORG entity.

- If the entity has more than one token, the subsequent tokens are tagged I-ORG.

- Example: annotate the following sentence with NER tags (IOB)

- Steve Jobs founded Apple Inc. in 1976. Tagset: PER, ORG, LOC, TIME

- [B-PER Steve] [I-PER Jobs] [O founded] [B-ORG Apple] [I-ORG Inc.] [O in] [B-TIME 1976]
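The Steve Jobs example can be encoded and decoded programmatically. This is a sketch under the same assumed `(start, end, type)` span representation; `iob_tags` and `iob_to_spans` are illustrative names, and treating a stray `I-` tag (one with no preceding `B-`) as the start of a new entity is one common convention, not the only one:

```python
def iob_tags(n_tokens, spans):
    """Convert (start, end, type) token spans (end exclusive) to IOB tags."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


def iob_to_spans(tags):
    """Recover (start, end, type) spans from a list of IOB tags."""
    spans = []
    start, label = None, None
    for i, tag in enumerate(tags + ["O"]):  # a final "O" closes any open entity
        continues = tag.startswith("I-") and tag[2:] == label
        if start is not None and not continues:
            spans.append((start, i, label))  # the open entity ends before token i
            start, label = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and not continues):
            start, label = i, tag[2:]  # a stray I- without a B- also opens an entity
    return spans


tokens = ["Steve", "Jobs", "founded", "Apple", "Inc.", "in", "1976"]
spans = [(0, 2, "PER"), (3, 5, "ORG"), (6, 7, "TIME")]
tags = iob_tags(len(tokens), spans)
print(tags)  # ['B-PER', 'I-PER', 'O', 'B-ORG', 'I-ORG', 'O', 'B-TIME']
print(iob_to_spans(tags) == spans)  # True

# Unlike IO, adjacent entities now get different tags from one longer entity
print(iob_tags(4, [(1, 2, "PER"), (2, 3, "PER")]))  # ['O', 'B-PER', 'B-PER', 'O']
print(iob_tags(4, [(1, 3, "PER")]))                 # ['O', 'B-PER', 'I-PER', 'O']
```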

- NER as sequence labelling

- Given such a tagging scheme, we can train any sequence labelling model

- In theory, HMMs can be used, but discriminative models such as CRFs are preferred (HMMs cannot easily incorporate rich, overlapping features of the input)
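To make "sequence labelling over IOB tags" concrete, here is a minimal sketch of HMM decoding with the Viterbi algorithm. All probabilities are invented toy numbers, not estimated from any corpus, and the names (`start_p`, `trans_p`, `emit_p`, `viterbi`) are hypothetical. Transitions such as O → I-PER get probability zero, so the decoder can never output an invalid IOB sequence:

```python
import math

TAGS = ["O", "B-PER", "I-PER"]


def log(p):
    """Log-probability, with log(0) = -inf for impossible events."""
    return math.log(p) if p > 0 else float("-inf")


# Toy HMM parameters: invented numbers, not estimated from any corpus
start_p = {"O": 0.6, "B-PER": 0.4, "I-PER": 0.0}  # a sentence cannot start with I-
trans_p = {
    "O": {"O": 0.7, "B-PER": 0.3, "I-PER": 0.0},  # O -> I-PER is invalid in IOB
    "B-PER": {"O": 0.4, "B-PER": 0.1, "I-PER": 0.5},
    "I-PER": {"O": 0.6, "B-PER": 0.1, "I-PER": 0.3},
}
emit_p = {  # P(word | tag); unseen words get probability 0 in this toy model
    "O": {"spokesman": 0.5, "said": 0.5},
    "B-PER": {"Tim": 0.9, "Wagner": 0.1},
    "I-PER": {"Tim": 0.1, "Wagner": 0.9},
}


def viterbi(words):
    """Return the most likely IOB tag sequence for `words` under the toy HMM."""
    # best[i][t]: log-prob of the best tag path for words[:i+1] that ends in tag t
    best = [{t: log(start_p[t]) + log(emit_p[t].get(words[0], 0.0)) for t in TAGS}]
    back = [{}]  # back[i][t]: previous tag on that best path
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in TAGS:
            prev = max(TAGS, key=lambda s: best[i - 1][s] + log(trans_p[s][t]))
            best[i][t] = (best[i - 1][prev] + log(trans_p[prev][t])
                          + log(emit_p[t].get(words[i], 0.0)))
            back[i][t] = prev
    # Start from the best final tag and follow the back-pointers
    path = [max(TAGS, key=lambda t: best[-1][t])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]


print(viterbi(["spokesman", "Tim", "Wagner", "said"]))  # ['O', 'B-PER', 'I-PER', 'O']
```

A linear-chain CRF replaces these generative probabilities with weighted feature scores, but decodes with the same dynamic programme.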

- NER features

- Example: L’Occitane

- Prefix/suffix:

- L / L’ / L’O / L’Oc / …

- e / ne / ane / tane / …

- Word shape:

- X’Xxxxxxxx / X’Xx

- XXXX-XX-XX (date!)

- POS tags / syntactic chunks: many entities are nouns or noun phrases.

- Presence in a gazetteer: lists of entities, such as place names, people’s first names and surnames, etc.
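The features above can be computed per token as a dictionary, a common input format for feature-based taggers. This is an illustrative sketch, not a fixed feature set: `word_shape` and `token_features` are hypothetical helpers, the gazetteer is a made-up two-entry set, POS features are omitted because they need a separate tagger, and digits are mapped to `d` (a common convention), so a date such as 1960-01-01 gets the shape `dddd-dd-dd`:

```python
def word_shape(word, short=False):
    """Uppercase -> X, lowercase -> x, digit -> d; other characters are kept.

    With short=True, consecutive characters of the same class are collapsed.
    """
    shape = []
    for ch in word:
        if ch.isupper():
            c = "X"
        elif ch.islower():
            c = "x"
        elif ch.isdigit():
            c = "d"
        else:
            c = ch
        if not (short and shape and shape[-1] == c):
            shape.append(c)
    return "".join(shape)


def token_features(tokens, i, gazetteer):
    """An illustrative (not exhaustive) feature dictionary for tokens[i]."""
    w = tokens[i]
    feats = {
        "lower": w.lower(),
        "shape": word_shape(w),
        "short_shape": word_shape(w, short=True),
        "in_gazetteer": w in gazetteer,
        # Neighbouring words give the context needed for ambiguous names
        "prev_lower": tokens[i - 1].lower() if i > 0 else "<s>",
        "next_lower": tokens[i + 1].lower() if i + 1 < len(tokens) else "</s>",
    }
    for k in range(1, 5):  # prefixes and suffixes of length 1 to 4
        feats[f"prefix{k}"] = w[:k]
        feats[f"suffix{k}"] = w[-k:]
    return feats


gazetteer = {"L’Occitane", "Brasilia"}  # hypothetical gazetteer entries
tokens = ["She", "works", "at", "L’Occitane"]
feats = token_features(tokens, 3, gazetteer)
print(feats["short_shape"])                # X’Xx
print(feats["prefix3"], feats["suffix3"])  # L’O ane
```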

- classifier

- deep learning for NER

- A state-of-the-art approach (as of 2016) combines a bidirectional LSTM and a CRF output layer over character and word embeddings (Lample et al. 2016)

Relation Extraction

- relation extraction

- [ORG American Airlines], a unit of [ORG AMR Corp.], immediately matched the move, spokesman [PER Tim Wagner] said.

- Traditionally framed as triple extraction (a relation and two entities):

- unit(American Airlines, AMR Corp.)

- spokesman(Tim Wagner, American Airlines)

- Key question: do we know all the possible relations?

- map relations to a closed set of relations

- unit(American Airlines, AMR Corp.) → subsidiary

- spokesman(Tim Wagner, American Airlines) → employment

- methods

- If we have access to a fixed relation database:

- Rule-based

- Supervised

- Semi-supervised

- Distant supervision

- If there are no restrictions on relations:

- Unsupervised

- Sometimes referred to as “OpenIE”

- rule-based relation extraction

- “Agar is a substance prepared from a mixture of red algae such as Gelidium, for laboratory or industrial use.”

- Identify linguistic patterns in the sentence

- [NP red algae] such as [NP Gelidium]

- NP0 such as NP1 → hyponym(NP1, NP0)

- hyponym(Gelidium, red algae)

- Lexico-syntactic patterns: high precision, but low recall (there are so many linguistic patterns that hand-written rules are unlikely to cover them all), and manual effort is required

- more rules
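A rule such as "NP0 such as NP1 → hyponym(NP1, NP0)" can be sketched as a regular expression over text whose noun phrases are already bracketed as in the notes. The `[NP ...]` input format, the `RULES` list and the second "and other" rule are illustrative assumptions; a real system would obtain the noun phrases from a chunker or parser:

```python
import re

# Each rule: a regex over NP-bracketed text, and a function mapping the match
# to (hyponym, hypernym).
NP = r"\[NP ([^\]]+)\]"
RULES = [
    # NP0 such as NP1 -> hyponym(NP1, NP0)
    (re.compile(NP + r" such as " + NP), lambda m: (m.group(2), m.group(1))),
    # NP1 and other NP0 -> hyponym(NP1, NP0)  (an extra rule, for illustration)
    (re.compile(NP + r" and other " + NP), lambda m: (m.group(1), m.group(2))),
]


def extract_hyponyms(chunked):
    """Apply every lexico-syntactic rule and collect hyponym triples."""
    triples = []
    for pattern, to_args in RULES:
        for m in pattern.finditer(chunked):
            triples.append(("hyponym",) + to_args(m))
    return triples


sentence = ("[NP Agar] is [NP a substance] prepared from [NP a mixture] of "
            "[NP red algae] such as [NP Gelidium], for [NP laboratory or industrial use].")
print(extract_hyponyms(sentence))  # [('hyponym', 'Gelidium', 'red algae')]
```

Each new rule raises recall a little, but every rule has to be written (and checked for precision) by hand.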

- supervised relation extraction

- Assume a corpus with annotated relations

- Two steps (with a single multi-class step there is a class imbalance problem: most entity pairs have no relation!)

- First, find if an entity pair is related or not (binary classification)

- For each sentence, gather all possible entity pairs

- Annotated pairs are considered positive examples

- Non-annotated pairs are taken as negative examples

- Second, for pairs predicted as positive, use a multiclass classifier (e.g. SVM) to obtain the relation

- example

- [ORG American Airlines], a unit of [ORG AMR Corp.], immediately matched the move, spokesman [PER Tim Wagner] said.

- First:

- (American Airlines, AMR Corp.) → positive

- (American Airlines, Tim Wagner) → positive

- (AMR Corp., Tim Wagner) → negative

- Second:

- (American Airlines, AMR Corp.) → subsidiary

- (American Airlines, Tim Wagner) → employment
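The data preparation for the two steps above can be sketched as follows. `candidate_pairs` is a hypothetical helper, relation direction is ignored for simplicity (an assumption; real relations are directed), and the classifiers themselves (e.g. an SVM) are left out:

```python
from itertools import combinations


def candidate_pairs(entities, gold):
    """Step 1 data: every entity pair in a sentence, labelled related (True) or not.

    `gold` maps an unordered entity pair (a frozenset) to its relation label.
    """
    return [((e1, e2), frozenset((e1, e2)) in gold)
            for e1, e2 in combinations(entities, 2)]


entities = ["American Airlines", "AMR Corp.", "Tim Wagner"]
gold = {
    frozenset(("American Airlines", "AMR Corp.")): "subsidiary",
    frozenset(("American Airlines", "Tim Wagner")): "employment",
}

step1 = candidate_pairs(entities, gold)
# Step 2 data: only the related pairs, now labelled with their relation type
step2 = [(pair, gold[frozenset(pair)]) for pair, related in step1 if related]

for pair, related in step1:
    print(pair, "positive" if related else "negative")
print(step2)
```

With n entities in a sentence there are n(n-1)/2 candidate pairs, which is why negatives quickly dominate and the binary filtering step helps.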

- features

- [ORG American Airlines], a unit of [ORG AMR Corp.], immediately matched the move, spokesman [PER Tim Wagner] said.

- (American Airlines, Tim Wagner) → employment
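The notes do not list the features themselves; the sketch below assumes a few that are commonly used for this step (entity types, head words, and the words between the two mentions). The feature names, the whitespace tokenisation and taking the last token as the head word are all simplifying assumptions:

```python
def pair_features(tokens, e1, e2):
    """Features for an entity pair; e1 and e2 are (start, end, type) spans, end exclusive.

    The feature set is an illustrative choice, not a fixed standard.
    """
    (s1, end1, t1), (s2, end2, t2) = sorted([e1, e2])  # order mentions by position
    between = tokens[end1:s2]
    return {
        "types": f"{t1}-{t2}",
        "head1": tokens[end1 - 1].lower(),  # last token as a crude head word
        "head2": tokens[end2 - 1].lower(),
        "n_between": len(between),
        "bow_between": sorted({w.lower() for w in between if w.isalpha()}),
    }


tokens = ("American Airlines , a unit of AMR Corp. , immediately matched "
          "the move , spokesman Tim Wagner said .").split()
american, wagner = (0, 2, "ORG"), (15, 17, "PER")
feats = pair_features(tokens, american, wagner)
print(feats["types"], feats["n_between"])  # ORG-PER 13
print(feats["bow_between"])
```

A word such as "spokesman" appearing between an ORG and a PER mention is the kind of cue a classifier can learn to associate with the employment relation.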

- semi-supervised relation extraction

- Annotated corpora are very expensive to create

- Use seed tuples to bootstrap a classifier (the seeds are used to find more training data)

- steps:

- Given a seed tuple: hub(Ryanair, Charleroi)

- Find sentences containing both terms of a seed tuple

- Budget airline Ryanair, which uses Charleroi as a hub, scrapped all weekend flights out of the airport

- Extract general patterns

- [ORG], which uses [LOC] as a hub

- Find new tuples with these patterns

- hub(Jetstar, Avalon)

- Add these new tuples to existing tuples and repeat step 2
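The loop above can be sketched with plain string patterns. This is a deliberately simple version under several assumptions: a pattern is the text between the two arguments plus the text after the second one (up to a comma or full stop), "entities" are runs of capitalised words rather than real NER output, and the second and third sentences are invented (only Jetstar/Avalon comes from the notes). `extract_patterns` and `bootstrap` are hypothetical names:

```python
import re

corpus = [
    "Budget airline Ryanair, which uses Charleroi as a hub, scrapped all weekend flights.",
    "Low-cost carrier Jetstar, which uses Avalon as a hub, added new routes.",
    "Flybe, which uses Exeter as a hub, cut fares.",
]

# Crude stand-in for NER: an entity is a run of capitalised words
ENTITY = r"\b([A-Z][\w-]*(?: [A-Z][\w-]*)*)"


def extract_patterns(tuples, sentences):
    """For each sentence containing both arguments of a known tuple, keep the text
    between them and the text after the second argument (up to , or .) as a pattern."""
    patterns = set()
    for arg1, arg2 in tuples:
        for s in sentences:
            m = re.search(re.escape(arg1) + r"(.{1,40}?)" + re.escape(arg2) + r"([^,.]*)", s)
            if m:
                patterns.add((m.group(1), m.group(2)))  # e.g. (", which uses ", " as a hub")
    return patterns


def bootstrap(seeds, sentences, iterations=2):
    """Alternate between extracting patterns from known tuples and new tuples from patterns.

    No confidence filtering is done here, so on real data this is prone to semantic drift.
    """
    tuples = set(seeds)
    for _ in range(iterations):
        for middle, suffix in extract_patterns(tuples, sentences):
            regex = ENTITY + re.escape(middle) + ENTITY + re.escape(suffix)
            for s in sentences:
                tuples.update(re.findall(regex, s))
    return tuples


print(sorted(bootstrap({("Ryanair", "Charleroi")}, corpus)))
# [('Flybe', 'Exeter'), ('Jetstar', 'Avalon'), ('Ryanair', 'Charleroi')]
```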

- issues

- Extracted tuples deviate from the original relation over time

- Semantic drift (deviation from the original relation)

- Pattern: [NP] has a {NP}* hub at [LOC]

- Sydney has a ferry hub at Circular Quay

- hub(Sydney, Circular Quay)

- More erroneous patterns are then extracted from this tuple…

- Should only accept patterns with high confidence

- Difficult to evaluate (no labels for newly extracted tuples)

- Extracted general patterns tend to be very noisy

- distant supervision

- Semi-supervised methods assume the existence of seed tuples to mine new tuples

- Can we mine new tuples directly?

- Distant supervision obtains tuples from existing knowledge bases, such as:

- DBpedia

- Freebase

- Generates massive training sets, enabling the use of richer features, with no risk of semantic drift
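The labelling heuristic behind distant supervision can be sketched as: every sentence that mentions both entities of a knowledge-base pair becomes a training example for that relation. The `KB` dictionary is a tiny hypothetical stand-in for DBpedia or Freebase, and the sentences other than the Ryanair one are invented. Note that the last sentence gets a `hub` label although it does not express that relation; such label noise is a known weakness of the approach:

```python
import re

# Tiny stand-in for a knowledge base such as DBpedia or Freebase (hypothetical triples)
KB = {
    ("Ryanair", "Charleroi"): "hub",
    ("Brasilia", "Brazil"): "capital_of",
}

sentences = [
    "Budget airline Ryanair, which uses Charleroi as a hub, scrapped all weekend flights.",
    "Brasilia, the Brazilian capital, was founded in 1960.",
    "Brasilia replaced Rio de Janeiro as the capital of Brazil.",
    "Ryanair cancelled several flights from Charleroi on Monday.",
]


def mentions(entity, sentence):
    """Whole-word match, so that "Brazil" does not match inside "Brazilian"."""
    return re.search(r"\b" + re.escape(entity) + r"\b", sentence) is not None


def distant_labels(kb, sentences):
    """Label every sentence mentioning both entities of a KB pair with the KB relation."""
    return [(s, e1, e2, rel)
            for (e1, e2), rel in kb.items()
            for s in sentences
            if mentions(e1, s) and mentions(e2, s)]


for example in distant_labels(KB, sentences):
    print(example[3], "<-", example[0])
```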

- unsupervised relation extraction

- No fixed or closed set of relations

- Relations are sub-sentence phrases, usually containing a verb

- “United has a hub in Chicago, which is the headquarters of United Continental Holdings.”

- “has a hub in”(United, Chicago)

- “is the headquarters of”(Chicago, United Continental Holdings)

- Main problem: the same relation has many surface forms, so relations must be mapped into canonical forms
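A simplified heuristic for the example above, loosely in the spirit of verb-based relation phrases: between two consecutive entities, the relation phrase runs from the first verb up to the second entity. POS tags (Penn Treebank) and entity spans are hand-written here as assumptions; a real system would get them from a tagger and a chunker or NER model, and would add further constraints:

```python
def open_ie(tagged, entities):
    """Extract (relation phrase, arg1, arg2) triples between consecutive entities.

    tagged: list of (word, Penn Treebank POS tag) pairs.
    entities: (start, end) token spans sorted by position, end exclusive.
    Pairs with no verb between them yield nothing.
    """
    def text(span):
        return " ".join(word for word, _ in span)

    triples = []
    for (s1, e1), (s2, e2) in zip(entities, entities[1:]):
        between = tagged[e1:s2]
        verbs = [i for i, (_, pos) in enumerate(between) if pos.startswith("VB")]
        if verbs:  # the phrase starts at the first verb, dropping e.g. ", which"
            triples.append((text(between[verbs[0]:]), text(tagged[s1:e1]), text(tagged[s2:e2])))
    return triples


words = ("United has a hub in Chicago , which is the headquarters of "
         "United Continental Holdings .").split()
tags = "NNP VBZ DT NN IN NNP , WDT VBZ DT NN IN NNP NNP NNP .".split()
entities = [(0, 1), (5, 6), (12, 15)]  # United, Chicago, United Continental Holdings
for triple in open_ie(list(zip(words, tags)), entities):
    print(triple)
# ('has a hub in', 'United', 'Chicago')
# ('is the headquarters of', 'Chicago', 'United Continental Holdings')
```

The output still shows the canonicalisation problem: nothing here would recognise that "has a hub in" and "uses ... as a hub" express the same relation.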

- evaluation

- NER: F1-measure at the entity level.

- Relation Extraction with known relation set: F1-measure

- Relation Extraction with unknown relations: much harder to evaluate

- Usually need some human evaluation

- Massive datasets used in these settings are impractical to evaluate manually (evaluate a sample instead)

- Can only obtain (approximate) precision, not recall (too many possible relations!)
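Entity-level F1 means a predicted entity only counts if its boundaries and type both match a gold entity exactly; partially correct spans earn nothing. A minimal sketch, assuming `(start, end, type)` token spans; the token offsets and the system output are hypothetical:

```python
def entity_f1(gold, pred):
    """Entity-level precision, recall and F1 over sets of (start, end, type) spans."""
    gold, pred = set(gold), set(pred)
    tp = len(gold & pred)  # exact matches of boundaries and type
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# "American Airlines", "AMR Corp." and "Tim Wagner" (token offsets are illustrative)
gold = {(0, 2, "ORG"), (6, 8, "ORG"), (15, 17, "PER")}
# Hypothetical system output that truncates "AMR Corp." to "AMR"
pred = {(0, 2, "ORG"), (6, 7, "ORG"), (15, 17, "PER")}
print(entity_f1(gold, pred))  # P = R = F1 = 2/3
```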

Other IE Tasks

- temporal expression extraction

“[TIME July 2, 2007]: A fare increase initiated [TIME last week] by UAL Corp’s United Airlines was matched by competitors over [TIME the weekend], marking the second successful fare increase in [TIME two weeks].”

- Anchoring: when is “last week”?

- “last week” → 2007-W26

- Normalisation: mapping expressions to canonical forms.

- July 2, 2007 → 2007-07-02

- Mostly rule-based approaches
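A minimal rule-based sketch covering only the two expressions discussed above. It assumes the anchor is the document date (July 2, 2007) and that "last week" means the ISO 8601 week before the anchor's week, which is one possible convention; `normalise` is a hypothetical helper:

```python
import re
from datetime import date, timedelta

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]
ABSOLUTE = re.compile(r"(" + "|".join(MONTHS) + r") (\d{1,2}), (\d{4})")


def normalise(expr, anchor):
    """Map a temporal expression to an ISO 8601 string, or None if no rule applies.

    `anchor` is the document creation date, used for relative expressions.
    """
    m = ABSOLUTE.fullmatch(expr)
    if m:  # absolute date, e.g. "July 2, 2007"
        month = MONTHS.index(m.group(1)) + 1
        return date(int(m.group(3)), month, int(m.group(2))).isoformat()
    if expr == "last week":  # assumed: the ISO week before the anchor's week
        year, week, _ = (anchor - timedelta(weeks=1)).isocalendar()
        return f"{year}-W{week:02d}"
    return None  # real systems need many more rules ("the weekend", "two weeks", ...)


anchor = date(2007, 7, 2)  # date of the news article
print(normalise("July 2, 2007", anchor))  # 2007-07-02
print(normalise("last week", anchor))     # 2007-W26
```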

- event extraction

- “American Airlines, a unit of AMR Corp., immediately [EVENT matched] [EVENT the move], spokesman Tim Wagner [EVENT said].”

- Very similar to NER, including the annotation and learning methods, but with different tags.

- Event ordering: detect the order in which a set of events happened on a timeline.

- Involves both event extraction and temporal expression extraction.

Conclusion

- Information Extraction is a vast field with many different tasks and applications

- Named Entity Recognition

- Relation Extraction

- Event Extraction

- Machine learning methods involve classifiers and sequence labelling models.