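I. Overview

PVC stands for PersistentVolumeClaim. A PVC is a user's request for storage. It is similar to a Pod: a Pod consumes node resources, while a PVC consumes PV resources; a Pod can request CPU and memory, while a PVC can request a specific storage size and access mode. Users who consume storage do not need to care about the underlying storage implementation; they only need to use a PVC.

As a user's request for storage resources, a PVC mainly specifies the requested storage size, the access modes, the PV selection criteria, and the storage class.

II. Parameters

Create a PVC as follows: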

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs
  volumeMode: Filesystem

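- accessModes: access modes. A PVC also declares access modes, which describe how the user's application may access the storage. The available values are the same as for a PV.

- resources: resource request. Describes the amount of storage requested; the size is set through the resources.requests.storage field.

- volumeMode: volume mode. Describes the mode of the PV volume the user wants, either a filesystem (Filesystem) or a block device (Block); the values are the same as for a PV.

- storageClassName: storage class. When creating a PVC, specify the backend storage class so that the claim does not depend on the details of the backend storage.

- If the cluster has a default StorageClass, storageClassName can be omitted when creating a PVC, and the system uses the default class automatically.

- If several StorageClasses are marked as default, the default is no longer unique and the system cannot create the PVC. (This describes older Kubernetes releases; newer releases instead pick the most recently created default class, so check the documentation for your version.)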
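To illustrate the default-class behavior described in the list above, here is a minimal sketch of a StorageClass marked as the cluster default through the storageclass.kubernetes.io/is-default-class annotation, followed by a PVC that omits storageClassName. The names example-default and example-pvc and the provisioner example.com/nfs are placeholders, not taken from the setup in this article; substitute the provisioner actually installed in your cluster.

```yaml
# Hypothetical StorageClass; the name and the provisioner are placeholders.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-default
  annotations:
    # This annotation marks the class as the cluster default.
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: example.com/nfs
reclaimPolicy: Delete
---
# PVC without storageClassName: the default class is applied on creation.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Running kubectl get storageclass shows which class is currently the default; it is listed with (default) after its name.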

III. Using a PVC in a Pod

Edit the manifest as follows:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs
  volumeMode: Filesystem
---
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: myfrontend
      image: docker.io/library/centos:7
      command: ["init"]
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - mountPath: /data
          name: test-path
  volumes:
    - name: test-path
      persistentVolumeClaim:
        claimName: test-pvc

Create the resources with the following command:

kubectl apply -f pod.yaml

[root@node1 yaml]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod 1/1 Running 0 4s 10.233.96.10 node2 <none> <none>

Enter the Pod and check the mounted directory:

[root@node1 yaml]# kubectl exec -it mypod -- bash

[root@mypod /]# ls

anaconda-post.log bin data dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

[root@mypod /]# ll

total 12

-rw-r--r-- 1 root root 12114 Nov 13 2020 anaconda-post.log

lrwxrwxrwx 1 root root 7 Nov 13 2020 bin -> usr/bin

drwxrwxrwx 2 root root 6 Jun 5 06:27 data #### the directory is mounted automatically

drwxr-xr-x 5 root root 360 Jun 5 06:34 dev

drwxr-xr-x 1 root root 41 Jun 5 06:34 etc

drwxr-xr-x 2 root root 6 Apr 11 2018 home

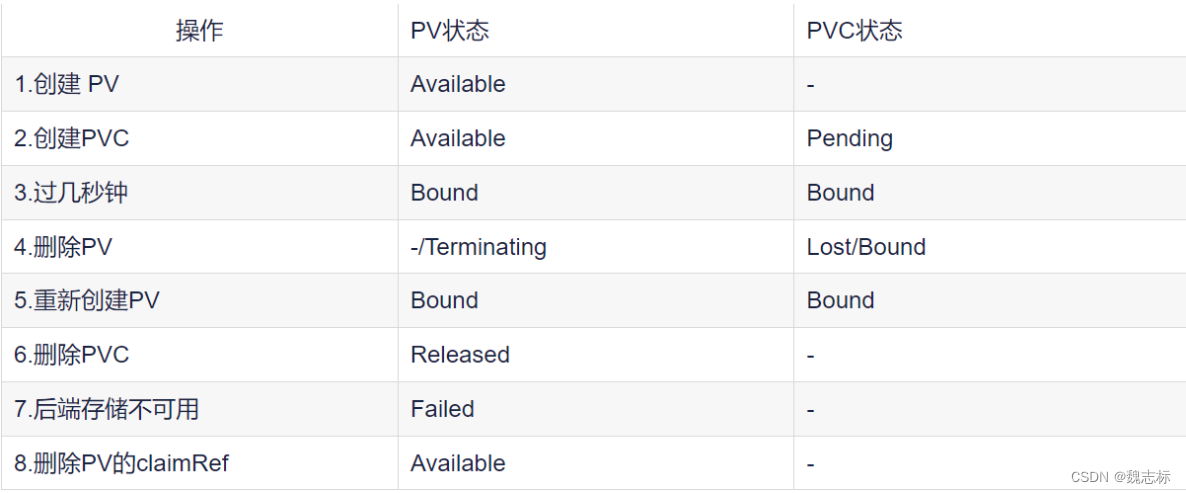

IV. PV and PVC status changes

The status of a PV and a PVC changes in different situations, as follows.

The detailed steps are:

#############################

1: Create the PV

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 2Gi
  nfs:
    path: /data/
    server: 192.168.5.79
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  volumeMode: Filesystem

[root@node1 yaml]# kubectl apply -f nfs-pv.yaml

persistentvolume/pv-nfs created

[root@node1 yaml]#

[root@node1 yaml]# kubectl get pv | grep pv-nfs

pv-nfs 2Gi RWO Retain Available nfs 23s

[root@node1 yaml]#

At this point the PV status is Available.

##############################

2: Create the PVC, as follows:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs
  volumeMode: Filesystem

[root@node1 yaml]# kubectl apply -f pvc.yaml

persistentvolumeclaim/pvc-nfs created

[root@node1 yaml]# kubectl get pvc ### normally the status is Pending first, then Bound

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs Bound pv-nfs 2Gi RWO nfs 7s
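The claim above bound to pv-nfs because the storage class, access mode and requested size all matched. When several PVs would qualify, a label selector on the PVC can narrow the choice. The following is a sketch only: the label usage: test is an assumption for illustration and is not part of the manifests used in this walkthrough.

```yaml
# PV carrying an illustrative label (the label key and value are assumptions).
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs
  labels:
    usage: test
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 2Gi
  nfs:
    path: /data/
    server: 192.168.5.79
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  volumeMode: Filesystem
---
# PVC that only binds to PVs carrying the label above.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs
  volumeMode: Filesystem
  selector:
    matchLabels:
      usage: test
```

With a selector set, the claim stays Pending until a PV with matching labels, class, access mode and sufficient capacity is available.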

############################

3: Delete the PV and check the PVC status

[root@node1 yaml]# kubectl delete pv pv-nfs

persistentvolume "pv-nfs" deleted #### the command hangs here

Open a new terminal; the PV status is already Terminating:

[root@node1 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs 2Gi RWO Retain Terminating default/pvc-nfs nfs 11m

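The deletion hangs mainly because the PV is still bound to a PVC and is protected by the kubernetes.io/pv-protection finalizer. Edit the PV with kubectl edit pv pv-nfs and remove this finalizer, as follows: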

apiVersion: v1
kind: PersistentVolume
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolume","metadata":{"annotations":{},"name":"pv-nfs"},"spec":{"accessModes":["ReadWriteOnce"],"capacity":{"storage":"2Gi"},"nfs":{"path":"/data/","server":"192.168.5.79"},"persistentVolumeReclaimPolicy":"Retain","storageClassName":"nfs","volumeMode":"Filesystem"}}
    pv.kubernetes.io/bound-by-controller: "yes"
  creationTimestamp: "2023-06-05T08:12:28Z"
  deletionGracePeriodSeconds: 0
  deletionTimestamp: "2023-06-05T08:23:27Z"
  # finalizers:                      #### delete these two lines
  # - kubernetes.io/pv-protection
  name: pv-nfs
  resourceVersion: "9420641"
  uid: cb3b7c54-b8e9-4132-b273-16e9415ae643
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 2Gi
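The same finalizer removal can also be written as a small patch file, which is easier to review and repeat than an interactive edit. This is a sketch rather than the procedure used in the run shown here: the file name remove-finalizer.yaml is made up, and it assumes a kubectl version that supports the --patch-file option of kubectl patch. Removing the finalizer bypasses the in-use protection, so it should only be done when losing the PV object is acceptable.

```yaml
# remove-finalizer.yaml (hypothetical file name)
# Assumed usage:
#   kubectl patch pv pv-nfs --type merge --patch-file remove-finalizer.yaml
# In a merge patch, setting a field to null removes it from the object.
metadata:
  finalizers: null
```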

Checking again shows that the PV has been deleted; the PVC status is now Lost:

[root@node1 yaml]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs Lost pv-nfs 0 nfs 13m

To recover, re-create the deleted PV; both the PVC and the PV then return to Bound:

[root@node1 yaml]# kubectl apply -f nfs-pv.yaml

persistentvolume/pv-nfs created

[root@node1 yaml]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs 2Gi RWO Retain Bound default/pvc-nfs nfs 4s

[root@node1 yaml]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs Bound pv-nfs 2Gi RWO nfs 15m

############################

4: Delete the PVC and check how the PV status changes:

[root@node1 yaml]# kubectl delete pvc pvc-nfs

persistentvolumeclaim "pvc-nfs" deleted

[root@node1 yaml]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs 2Gi RWO Retain Released default/pvc-nfs nfs 84s

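The PV is now in the Released state. Can a newly created PVC bind to this PV? No: a PVC can only bind to a PV in the Available state.

While the PV is Released, its claimRef field still holds the binding information of the deleted PVC: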

[root@node1 yaml]# kubectl get pv pv-nfs -o yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolume","metadata":{"annotations":{},"name":"pv-nfs"},"spec":{"accessModes":["ReadWriteOnce"],"capacity":{"storage":"2Gi"},"nfs":{"path":"/data/","server":"192.168.5.79"},"persistentVolumeReclaimPolicy":"Retain","storageClassName":"nfs","volumeMode":"Filesystem"}}
    pv.kubernetes.io/bound-by-controller: "yes"
  creationTimestamp: "2023-06-05T08:29:29Z"
  finalizers:
  - kubernetes.io/pv-protection
  name: pv-nfs
  resourceVersion: "9421489"
  uid: 09c62907-7fa3-491f-bb48-656003581c8f
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 2Gi
  claimRef:      #### note: the PVC binding information is still kept here
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: pvc-nfs
    namespace: default
    resourceVersion: "9421099"
    uid: 15935386-4b7b-4b97-88b3-2bece1ed6ea9
  nfs:
    path: /data/
    server: 192.168.5.79
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  volumeMode: Filesystem
status:
  phase: Released

[root@node1 yaml]#

The fix is to export the PV definition as a backup and then delete the claimRef section, as follows:

[root@node1 yaml]# kubectl get pv pv-nfs -o yaml > pv.yaml

[root@node1 yaml]# kubectl edit pv pv-nfs

---
apiVersion: v1
kind: PersistentVolume
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"PersistentVolume","metadata":{"annotations":{},"name":"pv-nfs"},"spec":{"accessModes":["ReadWriteOnce"],"capacity":{"storage":"2Gi"},"nfs":{"path":"/data/","server":"192.168.5.79"},"persistentVolumeReclaimPolicy":"Retain","storageClassName":"nfs","volumeMode":"Filesystem"}}
    pv.kubernetes.io/bound-by-controller: "yes"
  creationTimestamp: "2023-06-05T08:29:29Z"
  finalizers:
  - kubernetes.io/pv-protection
  name: pv-nfs
  resourceVersion: "9421489"
  uid: 09c62907-7fa3-491f-bb48-656003581c8f
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 2Gi
  nfs:
    path: /data/
    server: 192.168.5.79
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  volumeMode: Filesystem
status:
  phase: Released

persistentvolume/pv-nfs edited

[root@node1 yaml]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs 2Gi RWO Retain Available nfs 10m

The PV status is now Available again. Create the PVC once more, and both the PV and the PVC become Bound:

[root@node1 yaml]# kubectl apply -f pvc.yaml

persistentvolumeclaim/pvc-nfs created

[root@node1 yaml]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-nfs 2Gi RWO Retain Bound default/pvc-nfs nfs 12m

[root@node1 yaml]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-nfs Bound pv-nfs 2Gi RWO nfs 7s
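The claimRef removal done with kubectl edit in step 4 can also be expressed as a patch file. As before, this is a sketch under assumptions: the file name clear-claimref.yaml is hypothetical, and the usage line assumes a kubectl version that supports --patch-file.

```yaml
# clear-claimref.yaml (hypothetical file name)
# Assumed usage:
#   kubectl patch pv pv-nfs --type merge --patch-file clear-claimref.yaml
# A null value in a merge patch deletes spec.claimRef, so the Released PV
# goes back to Available and can be bound by a new claim.
spec:
  claimRef: null
```

Because the reclaim policy is Retain, the data left on the NFS path by the previous claim is still there when a new claim binds, so clean it up first if the new consumer should start empty.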