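Install a Kubernetes Cluster on Rocky Linux 8

Kubernetes continues to change how we deploy and manage business applications at scale. Whether applications are deployed manually or through a CI/CD pipeline, Kubernetes remains a leading choice for orchestrating, managing, and scaling them.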

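For those who have not heard, Kubernetes has deprecated dockershim, which means Docker is no longer supported as a container runtime. For that reason we will use the CRI-O container runtime, a lightweight alternative to Docker as the Kubernetes runtime. Before you get upset, note that CRI-O works much like Docker, and the way developers build and deploy application images is unaffected.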

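What is CRI-O?

CRI-O is an implementation of the Kubernetes CRI (Container Runtime Interface) that enables the use of OCI (Open Container Initiative) compatible runtimes. It lets Kubernetes use any OCI-compliant runtime as the container runtime for running Pods.

CRI-O supports OCI container images and can pull from any container registry. It is a lightweight alternative to using Docker, Moby, or rkt as the runtime for Kubernetes.

CRI-O architecture, taken from the official project website: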

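My lab environment setup

I will deploy a Kubernetes cluster on a set of Rocky Linux 8 nodes, as outlined below:

- 3 control plane nodes (masters)

- 4 worker node machines (data plane)

- Ansible version 2.10+ is required

That said, you can also set up a Kubernetes cluster with a single master node using the process demonstrated in this guide.

Virtual machines and specs

We can list all running nodes in my lab environment with the virsh command, since it is powered by vanilla KVM: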

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@kvm-private-lab ~]# virsh list

Id Name State

--------------------------------------

4 k8s-bastion-server running

17 k8s-master-01-server running

22 k8s-master-02-server running

26 k8s-master-03-server running

31 k8s-worker-01-server running

36 k8s-worker-02-server running

38 k8s-worker-03-server running

41 k8s-worker-04-server running</code></span></span>

The first machine in the list will be our bastion/workstation machine. This is where we will run the Kubernetes cluster installation against the Rocky Linux 8 servers.

List of my servers with hostnames and IP addresses:

| Hostname | IP address | Cluster role |
|---|---|---|
| k8s-bastion.example.com | 192.168.200.9 | Bastion host |
| k8s-master-01.example.com | 192.168.200.10 | Master node |
| k8s-master-02.example.com | 192.168.200.11 | Master node |
| k8s-master-03.example.com | 192.168.200.12 | Master node |
| k8s-worker-01.example.com | 192.168.200.13 | Worker node |
| k8s-worker-02.example.com | 192.168.200.14 | Worker node |
| k8s-worker-03.example.com | 192.168.200.15 | Worker node |
| k8s-worker-04.example.com | 192.168.200.16 | Worker node |

Kubernetes cluster machines

Each machine in my cluster has the following hardware specs:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em># Memory

</em>$ free -h

total used free shared buff/cache available

Mem: 7.5Gi 169Mi 7.0Gi 8.0Mi 285Mi 7.1Gi

<em># Disk space

</em>$ df -hT /

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda4 xfs 39G 2.0G 37G 5% /

<em># CPU Cores

</em>$ egrep ^processor /proc/cpuinfo | wc -l

2</code></span></span>

DNS settings

Set A records for the hostnames in your DNS server, and optionally add matching entries to the /etc/hosts file on each cluster node in case DNS resolution fails.
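If you go the /etc/hosts route, the entries can be generated rather than typed by hand. The following is a minimal sketch, assuming the node names, IP addresses, and example.com domain from the server table in this guide; the `gen_hosts_entries` helper is hypothetical and not part of any tool used here.

```shell
#!/bin/sh
# Sketch: print /etc/hosts entries for the lab nodes.
# The domain and the name=IP pairs mirror the server table in this guide;
# change them to match your own environment.
domain="example.com"

# gen_hosts_entries is a hypothetical helper.
# Each argument is "shortname=ip"; each output line is "ip fqdn shortname".
gen_hosts_entries() {
  for pair in "$@"; do
    name="${pair%%=*}"   # text before the first "="
    ip="${pair#*=}"      # text after the first "="
    printf '%s %s.%s %s\n' "$ip" "$name" "$domain" "$name"
  done
}

gen_hosts_entries \
  k8s-master-01=192.168.200.10 \
  k8s-master-02=192.168.200.11 \
  k8s-master-03=192.168.200.12 \
  k8s-worker-01=192.168.200.13 \
  k8s-worker-02=192.168.200.14 \
  k8s-worker-03=192.168.200.15 \
  k8s-worker-04=192.168.200.16
```

To apply the result, the output could be piped to `sudo tee -a /etc/hosts` on each node after reviewing it.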

Example DNS BIND records:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>; Create entries for the master nodes

k8s-master-01 IN A 192.168.200.10

k8s-master-02 IN A 192.168.200.11

k8s-master-03 IN A 192.168.200.12

; Create entries for the worker nodes

k8s-worker-01 IN A 192.168.200.13

k8s-worker-02 IN A 192.168.200.14

k8s-worker-03 IN A 192.168.200.15

k8s-worker-04 IN A 192.168.200.16

;

; The Kubernetes cluster <strong>ControlPlaneEndpoint</strong> point these to the IP of the masters

k8s-endpoint IN A 192.168.200.10

k8s-endpoint IN A 192.168.200.11

k8s-endpoint IN A 192.168.200.12</code></span></span>

Step 1: Prepare the bastion server for the Kubernetes installation

Install the basic tools required for the setup on the Bastion / Workstation system:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em>### Ubuntu / Debian ###

</em>sudo apt update

sudo apt install git wget curl vim bash-completion

<em>### CentOS / RHEL / Fedora / Rocky Linux ###

</em>sudo yum -y install git wget curl vim bash-completion</code></span></span>

Install Ansible configuration management

With Python 3:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

python3 get-pip.py --user</code></span></span>

With Python 2 (the pip/2.7 path serves the last get-pip.py release that still supports Python 2):

<span style="background-color:#051e30"><span style="color:#ffffff"><code>curl https://bootstrap.pypa.io/pip/2.7/get-pip.py -o get-pip.py

python get-pip.py --user</code></span></span>

Install Ansible using pip:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em># Python 3

</em>python3 -m pip install ansible --user

<em># Python </em>2

python -m pip install ansible --user</code></span></span>

Check the Ansible version after installation:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">ansible --version</span>

ansible [core 2.11.5]

config file = None

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/local/lib/python3.9/site-packages/ansible

ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections

executable location = /usr/local/bin/ansible

python version = 3.9.7 (default, Aug 30 2021, 00:00:00) [GCC 11.2.1 20210728 (Red Hat 11.2.1-1)]

jinja version = 3.0.1

libyaml = True</code></span></span>

Update the /etc/hosts file on the Bastion machine:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">sudo vim /etc/hosts</span>

192.168.200.9 k8s-bastion.example.com k8s-bastion

192.168.200.10 k8s-master-01.example.com k8s-master-01

192.168.200.11 k8s-master-02.example.com k8s-master-02

192.168.200.12 k8s-master-03.example.com k8s-master-03

192.168.200.13 k8s-worker-01.example.com k8s-worker-01

192.168.200.14 k8s-worker-02.example.com k8s-worker-02

192.168.200.15 k8s-worker-03.example.com k8s-worker-03

192.168.200.16 k8s-worker-04.example.com k8s-worker-04</code></span></span>

Generate SSH keys:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">ssh-keygen -t rsa -b 4096 -N ''</span>

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:LwgX4oCWENqWAyW9oywAv9jTK+BEk4+XShgX0galBqE root@k8s-master-01.example.com

The key's randomart image is:

+---[RSA 4096]----+

|OOo |

|B**. |

|EBBo. . |

|===+ . . |

|=*+++ . S |

|*=++.o . . |

|=.o. .. . . |

| o. . . |

| . |

+----[SHA256]-----+</code></span></span>

Create an SSH client configuration file:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ vim ~/.ssh/config

Host *

UserKnownHostsFile /dev/null

StrictHostKeyChecking no

IdentitiesOnly yes

ConnectTimeout 0

ServerAliveInterval 30</code></span></span>

Copy the SSH key to all Kubernetes cluster nodes:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em># Master Nodes

</em>for host in k8s-master-0{1..3}; do

ssh-copy-id root@$host

done

<em># Worker Nodes

</em>for host in k8s-worker-0{1..4}; do

ssh-copy-id root@$host

done</code></span></span>

Step 2: Set the correct hostname on all nodes

Log in to each node in the cluster and configure the correct hostname:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><strong># Examples

</strong><em># Master Node 01

</em>sudo hostnamectl set-hostname k8s-master-01.example.com

<em># Worker Node 01

</em>sudo hostnamectl set-hostname k8s-worker-01.example.com</code></span></span>

Log out and back in to confirm the hostname is set correctly:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ hostnamectl

Static hostname: <em>k8s-master-01.example.com</em>

Icon name: computer-vm

Chassis: vm

Machine ID: 7a7841970fc6fab913a02ca8ae57fe18

Boot ID: 4388978e190a4be69eb640b31e12a63e

Virtualization: kvm

Operating System: Rocky Linux 8.4 (Green Obsidian)

CPE OS Name: cpe:/o:rocky:rocky:8.4:GA

Kernel: Linux 4.18.0-305.19.1.el8_4.x86_64

Architecture: x86-64</code></span></span>

For cloud instances that use cloud-init, see the following guide:

- Set the server hostname in EC2|OpenStack|DigitalOcean|Azure instances

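Step 3: Prepare the Rocky Linux 8 servers for Kubernetes (prerequisites setup)

I wrote an Ansible role that performs the standard Kubernetes node preparation. The role contains tasks to:

- Install the standard packages required to manage the nodes

- Configure standard system requirements: disable swap, modify sysctl, disable SELinux

- Install and configure the container runtime of your choice: CRI-O, Docker, or containerd

- Install the Kubernetes packages: kubelet, kubeadm, and kubectl

- Configure firewalld on the Kubernetes master and worker nodes, opening all required ports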
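For readers curious about what the system-requirements task amounts to on a single node, here is a rough manual equivalent. It is a sketch under assumptions, not the role's actual implementation: the helper names and the /etc/sysctl.d/99-kubernetes.conf file name are made up for illustration, and the three kernel parameters are the ones the verification step later in this guide prints with `sysctl -p`.

```shell
#!/bin/sh
# Sketch of the node prerequisites, done by hand instead of through the role.

# Print the kernel parameters Kubernetes networking expects.
k8s_sysctl_conf() {
  cat <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Hypothetical helper: apply the prerequisites. Must run as root on a node.
# It is defined here but deliberately not called.
apply_node_prereqs() {
  swapoff -a                          # turn swap off for the running system
  # Comment out active swap entries so swap stays off after a reboot.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' /etc/fstab
  modprobe br_netfilter               # provides the net.bridge.* sysctls
  # The file name below is an assumption; any file under /etc/sysctl.d works.
  k8s_sysctl_conf > /etc/sysctl.d/99-kubernetes.conf
  sysctl --system                     # reload all sysctl configuration files
  setenforce 0 || true                # permissive now (fails if SELinux is disabled)
  sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config
}

# Show the parameters that would be written.
k8s_sysctl_conf
```

Running `apply_node_prereqs` as root on each node would apply the changes; the Ansible role remains the more repeatable way to do this across seven machines.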

Clone my Ansible role to your Bastion machine. We will use it to set up the Kubernetes prerequisites before running kubeadm init:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>git clone https://github.com/jmutai/k8s-pre-bootstrap.git</code></span></span>

Switch to the k8s-pre-bootstrap directory created by the clone:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>cd k8s-pre-bootstrap</code></span></span>

Set up the hosts inventory correctly with your Kubernetes nodes. Here is my inventory list:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ vim hosts

[<em>k8snodes</em>]

k8s-master-01

k8s-master-02

k8s-master-03

k8s-worker-01

k8s-worker-02

k8s-worker-03

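k8s-worker-04</code></span></span>

You should also update the variables in the playbook file. The most important are:

- Kubernetes version: k8s_version

- Your timezone: timezone

- Kubernetes CNI to use: k8s_cni

- Container runtime: container_runtime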

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ vim k8s-prep.yml

---
- name: Setup Proxy
  hosts: k8snodes
  remote_user: root
  become: yes
  become_method: sudo
  #gather_facts: no
  vars:
    k8s_version: "1.21" # Kubernetes version to be installed
    selinux_state: permissive # SELinux state to be set on k8s nodes
    timezone: "Africa/Nairobi" # Timezone to set on all nodes
    k8s_cni: calico # calico, flannel
    container_runtime: cri-o # docker, cri-o, containerd
    configure_firewalld: true # true / false
    # Docker proxy support
    setup_proxy: false # Set to true to configure proxy
    proxy_server: "proxy.example.com:8080" # Proxy server address and port
    docker_proxy_exclude: "localhost,127.0.0.1" # Addresses to exclude from proxy
  roles:
    - kubernetes-bootstrap</code></span></span>

Check the playbook syntax:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ ansible-playbook --syntax-check -i hosts k8s-prep.yml

playbook: k8s-prep.yml</code></span></span>

If your SSH private key has a passphrase, add it to the SSH agent so you are not prompted while the playbook runs:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>eval "$(ssh-agent -s)" && ssh-add</code></span></span>

Run the playbook to prepare your nodes:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ ansible-playbook -i hosts k8s-prep.yml</code></span></span>

If the servers are reachable, execution should start immediately:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>PLAY [Setup Proxy] ***********************************************************************************************************************************************************************************

TASK [Gathering Facts] *******************************************************************************************************************************************************************************

ok: [k8s-worker-02]

ok: [k8s-worker-01]

ok: [k8s-master-03]

ok: [k8s-master-01]

ok: [k8s-worker-03]

ok: [k8s-master-02]

ok: [k8s-worker-04]

TASK [kubernetes-bootstrap : Add the OS specific variables] ******************************************************************************************************************************************

ok: [k8s-master-01] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-master-02] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-master-03] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-worker-01] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-worker-02] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-worker-03] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

ok: [k8s-worker-04] => (item=/root/k8s-pre-bootstrap/roles/kubernetes-bootstrap/vars/RedHat8.yml)

TASK [kubernetes-bootstrap : Put SELinux in permissive mode] *****************************************************************************************************************************************

changed: [k8s-master-01]

changed: [k8s-worker-01]

changed: [k8s-master-03]

changed: [k8s-master-02]

changed: [k8s-worker-02]

changed: [k8s-worker-03]

changed: [k8s-worker-04]

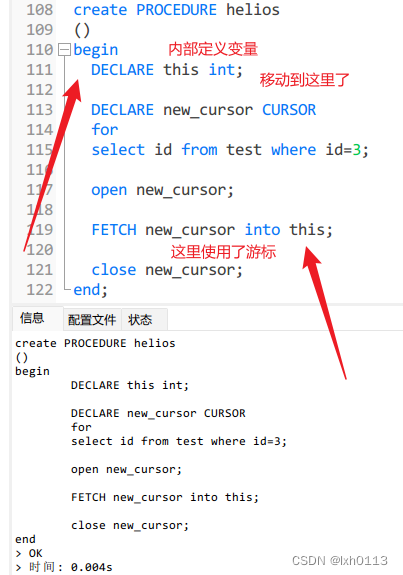

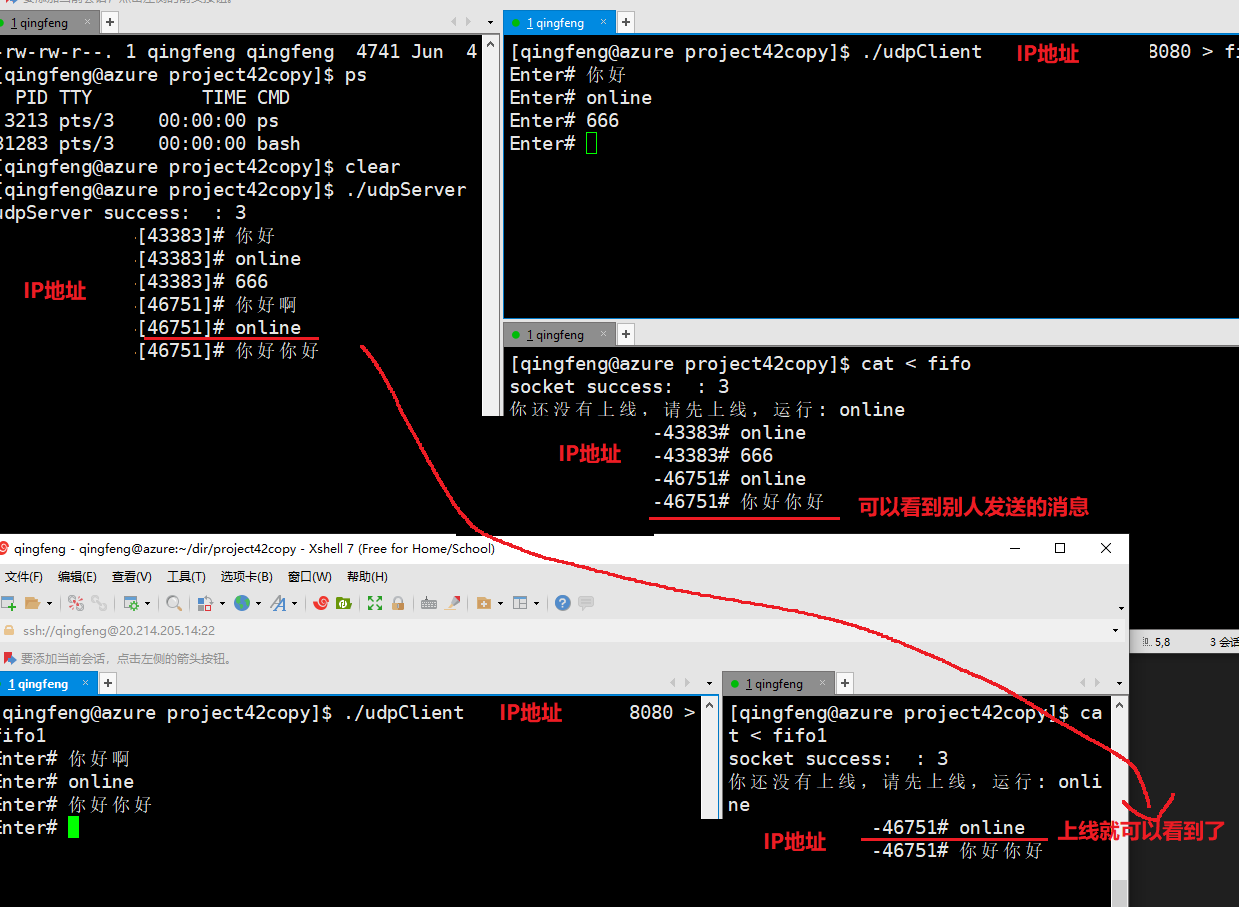

TASK [kubernetes-bootstrap : Update system packages] *************************************************************************************************************************************************</code></span></span>这是启动剧本执行的屏幕截图:

设置确认后输出没有错误:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>...output omitted...

PLAY RECAP *******************************************************************************************************************************************************************************************

k8s-master-01 : ok=28 changed=20 unreachable=0 failed=0 skipped=12 rescued=0 ignored=0

k8s-master-02 : ok=28 changed=20 unreachable=0 failed=0 skipped=12 rescued=0 ignored=0

k8s-master-03 : ok=28 changed=20 unreachable=0 failed=0 skipped=12 rescued=0 ignored=0

k8s-worker-01 : ok=27 changed=19 unreachable=0 failed=0 skipped=13 rescued=0 ignored=0

k8s-worker-02 : ok=27 changed=19 unreachable=0 failed=0 skipped=13 rescued=0 ignored=0

k8s-worker-03 : ok=27 changed=19 unreachable=0 failed=0 skipped=13 rescued=0 ignored=0

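k8s-worker-04 : ok=27 changed=19 unreachable=0 failed=0 skipped=13 rescued=0 ignored=0</code></span></span>

If you run into any errors while running the playbook, reach out through the comments section and we will be happy to help.

Log in to one of the nodes and verify the following settings:

- Configured /etc/hosts file contents: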

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.200.10 k8s-master-01.example.com k8s-master-01

192.168.200.11 k8s-master-02.example.com k8s-master-02

192.168.200.12 k8s-master-03.example.com k8s-master-03

192.168.200.13 k8s-worker-01.example.com k8s-worker-01

192.168.200.14 k8s-worker-02.example.com k8s-worker-02

192.168.200.15 k8s-worker-03.example.com k8s-worker-03

192.168.200.16 k8s-worker-04.example.com k8s-worker-04</code></span></span>

- CRI-O service status:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">systemctl status crio</span>

<span style="color:var(--wp--preset--color--light-green-cyan) !important">●</span> crio.service - Container Runtime Interface for OCI (CRI-O)

Loaded: loaded (/usr/lib/systemd/system/crio.service; enabled; vendor preset: disabled)

Active: <span style="color:var(--wp--preset--color--vivid-green-cyan) !important">active (running)</span> since Fri 2021-09-24 18:06:53 EAT; 11min ago

Docs: https://github.com/cri-o/cri-o

Main PID: 13445 (crio)

Tasks: 10

Memory: 41.9M

CGroup: /system.slice/crio.service

└─13445 /usr/bin/crio

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.052576977+03:00" level=info msg="Using default capabilities: CAP_CHOWN, CAP_DAC_OVERRIDE, CAP_FSETID, CAP_>

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.111352936+03:00" level=info msg="Conmon does support the --sync option"

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.111623836+03:00" level=info msg="No seccomp profile specified, using the internal default"

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.111638473+03:00" level=info msg="AppArmor is disabled by the system or at CRI-O build-time"

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.117006450+03:00" level=info msg="Found CNI network crio (type=bridge) at /etc/cni/net.d/100-crio-bridge.co>

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.120722070+03:00" level=info msg="Found CNI network 200-loopback.conf (type=loopback) at /etc/cni/net.d/200>

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: time="2021-09-24 18:06:53.120752984+03:00" level=info msg="Updated default CNI network name to crio"

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: W0924 18:06:53.126936 13445 hostport_manager.go:71] The binary conntrack is not installed, this can cause failures in network conn>

Sep 24 18:06:53 k8s-master-01.example.com crio[13445]: W0924 18:06:53.130986 13445 hostport_manager.go:71] The binary conntrack is not installed, this can cause failures in network conn>

Sep 24 18:06:53 k8s-master-01.example.com systemd[1]: Started Container Runtime Interface for OCI (CRI-O).</code></span></span>

- Configured sysctl kernel parameters:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1</code></span></span>

- Ports opened in firewalld:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: enp1s0

sources:

services: cockpit dhcpv6-client ssh

ports: 22/tcp 80/tcp 443/tcp 6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 30000-32767/tcp 4789/udp 5473/tcp 179/tcp

protocols:

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:</code></span></span>

Step 4: Bootstrap the Kubernetes control plane (single / multi-node)

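We will use the kubeadm init command to initialize the Kubernetes control plane node. It first runs a series of pre-flight checks to validate the system state before making any changes.

These are the key options you should be aware of:

- --apiserver-advertise-address: the IP address the API server will advertise it is listening on. If not set, the default network interface is used.

- --apiserver-bind-port: the port the API server binds to; defaults to 6443

- --control-plane-endpoint: a stable IP address or DNS name for the control plane.

- --cri-socket: path to the CRI socket to connect to. If empty, kubeadm tries to auto-detect this value; use the option only if you have more than one CRI installed or a non-standard CRI socket.

- --dry-run: do not apply any changes; only output what would be done

- --image-repository: the container registry to pull control plane images from; default: "k8s.gcr.io"

- --kubernetes-version: a specific Kubernetes version for the control plane.

- --pod-network-cidr: the range of IP addresses for the pod network. If set, the control plane automatically allocates CIDRs for every node.

- --service-cidr: an alternative range of IP addresses for service VIPs. Default: "10.96.0.0/12"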
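To see how several of these options combine, the sketch below assembles a kubeadm init command line with --dry-run appended, so the result can be reviewed before anything is changed. The `build_kubeadm_init` helper is hypothetical; the endpoint, pod CIDR, and socket path are the values used elsewhere in this guide and should be treated as placeholders for your own.

```shell
#!/bin/sh
# Sketch: compose a kubeadm init invocation from a few of the options above.
# build_kubeadm_init is a hypothetical helper; it only prints the command.
build_kubeadm_init() {
  endpoint="$1"     # value for --control-plane-endpoint
  pod_cidr="$2"     # value for --pod-network-cidr
  cri_socket="$3"   # value for --cri-socket
  printf 'kubeadm init --control-plane-endpoint=%s --pod-network-cidr=%s --cri-socket=%s --upload-certs --dry-run\n' \
    "$endpoint" "$pod_cidr" "$cri_socket"
}

# Print the command for this guide's lab values.
build_kubeadm_init k8s-endpoint.example.com 192.168.0.0/16 /var/run/crio/crio.sock
```

Dropping --dry-run from the printed command gives the real initialization; keeping it lets you inspect what kubeadm would do first.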

The table below lists container runtimes and their associated socket paths:

| Runtime | Path to Unix domain socket |
|---|---|
| Docker | /var/run/dockershim.sock |
| containerd | /run/containerd/containerd.sock |
| CRI-O | /var/run/crio/crio.sock |
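When it is unclear which runtime a node actually has, checking which of these sockets exists is a quick test. This is a minimal sketch; `detect_cri_socket` is a hypothetical helper that prints the first candidate path that is a Unix socket.

```shell
#!/bin/sh
# Sketch: report the first existing CRI socket among the given candidates.
# detect_cri_socket is a hypothetical helper, not a kubeadm feature.
detect_cri_socket() {
  for sock in "$@"; do
    if [ -S "$sock" ]; then       # -S is true only for Unix domain sockets
      printf '%s\n' "$sock"
      return 0
    fi
  done
  return 1                        # none of the candidates exists
}

# Try the paths from the table above, CRI-O first.
detect_cri_socket \
  /var/run/crio/crio.sock \
  /run/containerd/containerd.sock \
  /var/run/dockershim.sock \
  || echo "no CRI socket found" >&2
```

The printed path is what you would pass to --cri-socket if kubeadm's auto-detection is not enough.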

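Check the Kubernetes release notes

Release notes can be found by reading the changelog that matches your Kubernetes version.

Option 1: Bootstrap a single-node control plane Kubernetes cluster

If you plan to upgrade a single control-plane kubeadm cluster to high availability later, you should specify --control-plane-endpoint to set a shared endpoint for all control-plane nodes.

However, if this is a test environment with a single-node control plane, you can omit the --control-plane-endpoint option.

Log in to the master node: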

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-bastion ~]# ssh k8s-master-01

Warning: Permanently added 'k8s-master-01' (ED25519) to the list of known hosts.

Last login: Fri Sep 24 18:07:55 2021 from 192.168.200.9

[root@k8s-master-01 ~]#</code></span></span>

Then initialize the control plane:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>sudo kubeadm init --pod-network-cidr=192.168.0.0/16</code></span></span>

Deploy the Calico pod network to the cluster

Deploy the Calico pod network add-on with the following commands:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

kubectl create -f https://docs.projectcalico.org/manifests/custom-resources.yaml</code></span></span>

Check the pod status with the following command:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">kubectl get pods -n calico-system</span>

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-bdd5f97c5-6856t 1/1 Running 0 60s

calico-node-vnlkf 1/1 Running 0 60s

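calico-typha-5f857549c5-hkbwq 1/1 Running 0 60s</code></span></span>

Option 2: Bootstrap a multi-node control plane Kubernetes cluster

The --control-plane-endpoint option can be used to set a shared endpoint for all control-plane nodes. It accepts both IP addresses and DNS names that map to IP addresses.

Example A records in a BIND DNS server: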

<span style="background-color:#051e30"><span style="color:#ffffff"><code>; Create entries for the master nodes

k8s-master-01 IN A 192.168.200.10

k8s-master-02 IN A 192.168.200.11

k8s-master-03 IN A 192.168.200.12

;

; The Kubernetes cluster <strong>ControlPlaneEndpoint</strong> point these to the IP of the masters

k8s-endpoint IN A 192.168.200.10

k8s-endpoint IN A 192.168.200.11

k8s-endpoint IN A 192.168.200.12</code></span></span>

Example entries in the /etc/hosts file:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ sudo vim /etc/hosts

192.168.200.10 k8s-master-01.example.com k8s-master-01

192.168.200.11 k8s-master-02.example.com k8s-master-02

192.168.200.12 k8s-master-03.example.com k8s-master-03

## Kubernetes cluster ControlPlaneEndpoint Entries ###

192.168.200.10 k8s-endpoint.example.com k8s-endpoint

#192.168.200.11 k8s-endpoint.example.com k8s-endpoint

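#192.168.200.12 k8s-endpoint.example.com k8s-endpoint</code></span></span>

Using a load balancer IP for the ControlPlaneEndpoint

The ideal approach for an HA setup is to map the ControlPlane endpoint to a load balancer IP. The load balancer then forwards traffic to the control plane nodes, using some form of health check to decide which ones are available.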

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em># Entry in Bind DNS Server

</em>k8s-endpoint IN A 192.168.200.8

<em># Entry in /etc/hosts file

</em>192.168.200.8 k8s-endpoint.example.com k8s-endpoint</code></span></span>

Bootstrap the multi-node control plane Kubernetes cluster

Log in to master node 01 from the bastion server or your workstation machine:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-bastion ~]# ssh k8s-master-01

Warning: Permanently added 'k8s-master-01' (ED25519) to the list of known hosts.

Last login: Fri Sep 24 18:07:55 2021 from 192.168.200.9

[root@k8s-master-01 ~]#</code></span></span>

Update the /etc/hosts file with this node's IP address and a custom DNS name that maps to it:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# vim /etc/hosts

192.168.200.10 k8s-endpoint.example.com k8s-endpoint</code></span></span>

To initialize the control plane node, run:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# kubeadm init \

--pod-network-cidr=192.168.0.0/16 \

--control-plane-endpoint=k8s-endpoint.example.com \

--cri-socket=/var/run/crio/crio.sock \

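--upload-certs</code></span></span>

Where:

- k8s-endpoint.example.com is a valid DNS name configured for the ControlPlane endpoint

- /var/run/crio/crio.sock is the CRI-O runtime socket file

- 192.168.0.0/16 is the pod network to use in Kubernetes. Note that this range contains the 192.168.200.0/24 lab network used here; pick a non-overlapping range if that matters in your environment

- --upload-certs uploads the certificates that should be shared across all control-plane instances to the cluster

If successful, you will get output similar to the following: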

<span style="background-color:#051e30"><span style="color:#ffffff"><code>...output omitted...

[mark-control-plane] Marking the node k8s-master-01.example.com as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: p11op9.eq9vr8gq9te195b9

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join k8s-endpoint.example.com:6443 --token 78oyk4.ds1hpo2vnwg3yykt \

--discovery-token-ca-cert-hash sha256:4fbb0d45a1989cf63624736a005dc00ce6068eb7543ca4ae720c7b99a0e86aca \

--control-plane --certificate-key 999110f4a07d3c430d19ca0019242f392e160216f3b91f421da1a91f1a863bba

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-endpoint.example.com:6443 --token 78oyk4.ds1hpo2vnwg3yykt \

--discovery-token-ca-cert-hash sha256:4fbb0d45a1989cf63624736a005dc00ce6068eb7543ca4ae720c7b99a0e86aca</code></span></span>

Configure kubectl as shown in the output:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config</code></span></span>

Test by checking the active nodes:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01.example.com Ready control-plane,master 4m3s v1.26.2</code></span></span>

Deploy the Calico pod network to the cluster

Deploy the Calico pod network add-on with the following commands:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

kubectl create -f https://docs.projectcalico.org/manifests/custom-resources.yaml</code></span></span>

Check the pod status with the following command:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">kubectl get pods -n calico-system -w</span>

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-bdd5f97c5-6856t 1/1 Running 0 60s

calico-node-vnlkf 1/1 Running 0 60s

calico-typha-5f857549c5-hkbwq 1/1 Running 0 60s</code></span></span>

Add the other control plane nodes

Add master node 02

Log in to k8s-master-02:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-bastion ~]#<span style="color:var(--wp--preset--color--luminous-vivid-amber) !important"> ssh k8s-master-02</span>

Warning: Permanently added 'k8s-master-02' (ED25519) to the list of known hosts.

Last login: Sat Sep 25 01:49:15 2021 from 192.168.200.9

[root@k8s-master-02 ~]#</code></span></span>

Update the /etc/hosts file by setting the ControlPlaneEndpoint to the first control node, where the bootstrap process was initiated:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-02 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">vim /etc/hosts</span>

192.168.200.10 k8s-endpoint.example.com k8s-endpoint

#192.168.200.11 k8s-endpoint.example.com k8s-endpoint

#192.168.200.12 k8s-endpoint.example.com k8s-endpoint</code></span></span>

I will use the command printed after the successful initialization:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubeadm join k8s-endpoint.example.com:6443 --token 78oyk4.ds1hpo2vnwg3yykt \

--discovery-token-ca-cert-hash sha256:4fbb0d45a1989cf63624736a005dc00ce6068eb7543ca4ae720c7b99a0e86aca \

--control-plane --certificate-key 999110f4a07d3c430d19ca0019242f392e160216f3b91f421da1a91f1a863bba</code></span></span>

Add master node 03

Log in to k8s-master-03:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-bastion ~]#<span style="color:var(--wp--preset--color--luminous-vivid-amber) !important"> ssh k8s-master-03</span>

Warning: Permanently added 'k8s-master-03' (ED25519) to the list of known hosts.

Last login: Sat Sep 25 01:55:11 2021 from 192.168.200.9

[root@k8s-master-03 ~]#</code></span></span>

Update the /etc/hosts file by setting the ControlPlaneEndpoint to the first control node, where the bootstrap process was initiated:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-03 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">vim /etc/hosts</span>

192.168.200.10 k8s-endpoint.example.com k8s-endpoint

#192.168.200.11 k8s-endpoint.example.com k8s-endpoint

#192.168.200.12 k8s-endpoint.example.com k8s-endpoint</code></span></span>

I will use the command printed after the successful initialization:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubeadm join k8s-endpoint.example.com:6443 --token 78oyk4.ds1hpo2vnwg3yykt \

--discovery-token-ca-cert-hash sha256:4fbb0d45a1989cf63624736a005dc00ce6068eb7543ca4ae720c7b99a0e86aca \

--control-plane --certificate-key 999110f4a07d3c430d19ca0019242f392e160216f3b91f421da1a91f1a863bba</code></span></span>

Check the control plane node list

From one of the master nodes with kubectl configured, check the list of nodes:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-03 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01.example.com Ready control-plane,master 11m v1.26.2

k8s-master-02.example.com Ready control-plane,master 5m v1.26.2

k8s-master-03.example.com Ready control-plane,master 32s v1.26.2</code></span></span>

If you are not using a load balancer IP, you can now uncomment the other lines in the /etc/hosts file on each control node:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><strong><em># Perform on all control plane nodes

</em></strong>[root@k8s-master-03 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">vim /etc/hosts</span>

### Kubernetes cluster ControlPlaneEndpoint Entries ###

192.168.200.10 k8s-endpoint.example.com k8s-endpoint

192.168.200.11 k8s-endpoint.example.com k8s-endpoint

192.168.200.12 k8s-endpoint.example.com k8s-endpoint</code></span></span>

Step 5: Add worker nodes to the Kubernetes cluster

Log in to each worker machine using ssh:

<span style="background-color:#051e30"><span style="color:#ffffff"><code><em><strong>### Example ###

</strong></em>[root@k8s-bastion ~]# <span style="color:var(--wp--preset--color--pale-pink) !important">ssh root@k8s-worker-01</span>

Warning: Permanently added 'k8s-worker-01' (ED25519) to the list of known hosts.

Enter passphrase for key '/root/.ssh/id_rsa':

Last login: Sat Sep 25 04:27:42 2021 from 192.168.200.9

[root@k8s-worker-01 ~]#</code></span></span>

If there is no DNS, update the /etc/hosts file on each node with the master and worker node hostnames and IP addresses:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-worker-01 ~]# sudo vim /etc/hosts

<em>### Also add Kubernetes cluster ControlPlaneEndpoint Entries for multiple control plane nodes(masters) ###

</em>192.168.200.10 k8s-endpoint.example.com k8s-endpoint

192.168.200.11 k8s-endpoint.example.com k8s-endpoint

192.168.200.12 k8s-endpoint.example.com k8s-endpoint</code></span></span>

Join your worker machines to the cluster using the command given earlier:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubeadm join k8s-endpoint.example.com:6443 \

--token 78oyk4.ds1hpo2vnwg3yykt \

--discovery-token-ca-cert-hash sha256:4fbb0d45a1989cf63624736a005dc00ce6068eb7543ca4ae720c7b99a0e86aca</code></span></span>

When done, run kubectl get nodes on the control plane to see the nodes that have joined the cluster:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-02 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-01.example.com Ready control-plane,master 23m v1.26.2

k8s-master-02.example.com Ready control-plane,master 16m v1.26.2

k8s-master-03.example.com Ready control-plane,master 12m v1.26.2

k8s-worker-01.example.com Ready <none> 3m25s v1.26.2

k8s-worker-02.example.com Ready <none> 2m53s v1.26.2

k8s-worker-03.example.com Ready <none> 2m31s v1.26.2

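k8s-worker-04.example.com Ready <none> 2m12s v1.26.2</code></span></span>

Step 6: Deploy a test application on the cluster

We need to validate that the cluster works by deploying an application. We will use the Guestbook application.

For single-node clusters, see our guide on how to run pods on control plane nodes:

- Scheduling Pods on Kubernetes control plane (master) nodes

Create a temporary namespace: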

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--pale-pink) !important">kubectl create namespace temp</span>

namespace/temp created</code></span></span>

Deploy the guestbook application in the temporary namespace you created:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/redis-leader-deployment.yaml

kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/redis-leader-service.yaml

kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/redis-follower-deployment.yaml

kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/redis-follower-service.yaml

kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/frontend-deployment.yaml

kubectl -n temp apply -f https://k8s.io/examples/application/guestbook/frontend-service.yaml</code></span></span>

After a few minutes, list the resources in the namespace to verify that the Pods are running:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--pale-pink) !important"><em>kubectl get all -n temp</em></span>

NAME READY STATUS RESTARTS AGE

pod/frontend-85595f5bf9-j9xlp 1/1 Running 0 81s

pod/frontend-85595f5bf9-m6lsl 1/1 Running 0 81s

pod/frontend-85595f5bf9-tht82 1/1 Running 0 81s

pod/redis-follower-dddfbdcc9-hjjf6 1/1 Running 0 83s

pod/redis-follower-dddfbdcc9-vg4sf 1/1 Running 0 83s

pod/redis-leader-fb76b4755-82xlp 1/1 Running 0 7m34s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/frontend ClusterIP 10.101.239.74 <none> 80/TCP 7s

service/redis-follower ClusterIP 10.109.129.97 <none> 6379/TCP 83s

service/redis-leader ClusterIP 10.101.73.117 <none> 6379/TCP 84s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/frontend 3/3 3 3 81s

deployment.apps/redis-follower 2/2 2 2 83s

deployment.apps/redis-leader 1/1 1 1 7m34s

NAME DESIRED CURRENT READY AGE

replicaset.apps/frontend-85595f5bf9 3 3 3 81s

replicaset.apps/redis-follower-dddfbdcc9 2 2 2 83s

replicaset.apps/redis-leader-fb76b4755 1 1 1 7m34s</code></span></span>

Run the following command to forward port 8080 on your local machine to port 80 on the service:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>$ <span style="color:var(--wp--preset--color--pale-pink) !important"><em>kubectl -n temp port-forward svc/frontend 8080:80</em></span>

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80</code></span></span>

Now load http://localhost:8080 in your browser to view the guestbook.
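If the page does not load, one thing worth checking is whether every Guestbook Pod is fully ready. The sketch below is an illustration only: the helper name unready_pods is hypothetical, and it assumes the default kubectl get pods layout, where the second column is READY in the form ready/total.

```shell
# Print each Pod whose READY column (ready/total containers) is not full.
# Reads `kubectl -n temp get pods --no-headers` output on stdin.
unready_pods() {
    awk 'NF > 0 {
        split($2, r, "/")            # "1/1" -> r[1]="1", r[2]="1"
        if (r[1] != r[2]) print $1   # e.g. "0/1" is reported
    }'
}
```

Run it as `kubectl -n temp get pods --no-headers | unready_pods`. Any name it prints is a Pod to inspect further, for example with kubectl -n temp describe pod.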

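Step 7: Install Metrics Server (for checking Pod and node resource usage)

Metrics Server is a cluster-wide aggregator of resource usage data. It collects metrics from the Summary API exposed by the Kubelet on each node. Use our guide below to deploy it:

- How to deploy Metrics Server to a Kubernetes cluster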
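Once Metrics Server is running, kubectl top nodes and kubectl top pods report current usage. As a rough sketch of how that output might be used, the helper below flags nodes with high memory usage. The name busy_nodes and the default threshold of 80 are assumptions for illustration, and the code assumes the usual column order NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%.

```shell
# Print nodes whose MEMORY% (5th column) is at or above a threshold.
# Reads `kubectl top nodes --no-headers` output on stdin.
# $1 is the threshold in percent (defaults to 80).
busy_nodes() {
    awk -v limit="${1:-80}" 'NF >= 5 {
        pct = $5
        sub(/%$/, "", pct)                      # "91%" -> "91"
        # Skip non-numeric values such as "<unknown>".
        if (pct ~ /^[0-9]+$/ && pct + 0 >= limit + 0) print $1, $5
    }'
}
```

Run it as `kubectl top nodes --no-headers | busy_nodes 80`. Nodes for which metrics are not yet available are skipped rather than reported.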

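Step 8: Install an Ingress controller

You can also install an Ingress controller for your Kubernetes workloads. Use one of our installation guides:

- Deploy Nginx Ingress Controller on Kubernetes using Helm Chart

- Install and configure Traefik Ingress Controller on a Kubernetes cluster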

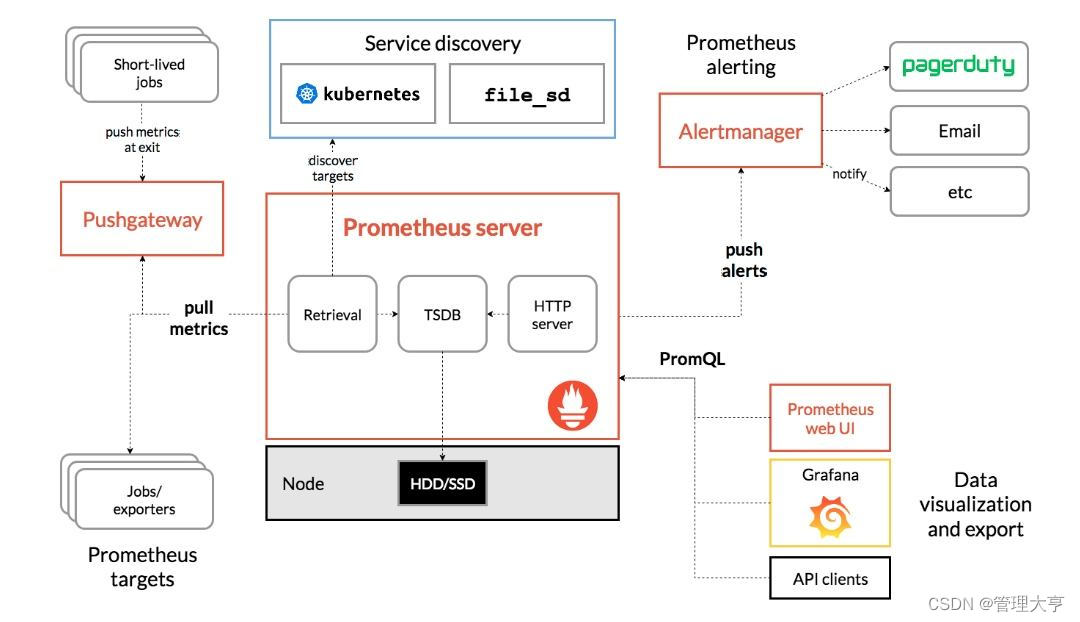

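Step 9: Deploy Prometheus/Grafana monitoring

Prometheus is a mature solution that gives you access to advanced metrics capabilities in a Kubernetes cluster. Grafana is used for analysis and interactive visualization of the metrics collected and stored in the Prometheus database. We have a complete guide on setting up a full monitoring stack on a Kubernetes cluster:

- Set up Prometheus and Grafana on Kubernetes using prometheus-operator

Step 10: Deploy the Kubernetes Dashboard (optional)

The Kubernetes Dashboard can be used to deploy containerized applications to a Kubernetes cluster, troubleshoot them, and manage cluster resources.

See our installation guide:

- How to install the Kubernetes Dashboard with NodePort

Step 11: Persistent storage configuration ideas (optional)

If you are also looking for a persistent storage solution for your Kubernetes cluster, check out:

- Configure NFS as Kubernetes persistent volume storage

- How to deploy Rook Ceph storage on a Kubernetes cluster

- Ceph persistent storage for Kubernetes with Cephfs

- Persistent storage for Kubernetes with Ceph RBD

- How to configure Kubernetes dynamic volume provisioning with Heketi and GlusterFS

Step 12: Deploy MetalLB on Kubernetes

Follow the guide below to install and configure MetalLB on Kubernetes:

- How to deploy the MetalLB load balancer on a Kubernetes cluster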

Step 13: Basic CRI-O management with crictl (bonus)
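crictl needs to know which runtime socket to talk to. If it warns about a missing runtime endpoint on your nodes, one possible fix is to point it at the CRI-O socket in its configuration file. The sketch below assumes CRI-O listens on its default socket, /var/run/crio/crio.sock, and that crictl reads /etc/crictl.yaml. The helper name write_crictl_config and the timeout value are illustrative assumptions. Your packages may already ship this file, in which case nothing here is needed.

```shell
# Write a minimal crictl configuration pointing at the CRI-O socket.
# $1 is the file to write (defaults to /etc/crictl.yaml, which needs root).
write_crictl_config() {
    target="${1:-/etc/crictl.yaml}"
    cat > "$target" <<'EOF'
runtime-endpoint: unix:///var/run/crio/crio.sock
image-endpoint: unix:///var/run/crio/crio.sock
timeout: 10
EOF
}
```

Running `write_crictl_config` as root writes the default file. Passing a different path lets you inspect the result first.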

Display information about the container runtime:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">sudo crictl info</span>

{

"status": {

"conditions": [

{

"type": "RuntimeReady",

"status": true,

"reason": "",

"message": ""

},

{

"type": "NetworkReady",

"status": true,

"reason": "",

"message": ""

}

]

}

}</code></span></span>

List the images that have been pulled on the node:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>sudo crictl images</code></span></span>

List the Pods running on the node:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">sudo crictl pods</span>

POD ID CREATED STATE NAME NAMESPACE ATTEMPT RUNTIME

4f9630e87f62f 45 hours ago Ready calico-apiserver-77dffffcdf-fvkp6 calico-apiserver 0 (default)

cbb3e8f3e027f 45 hours ago Ready calico-kube-controllers-bdd5f97c5-thmhs calico-system 0 (default)

e54575c66d1f4 45 hours ago Ready coredns-78fcd69978-wnmdw kube-system 0 (default)

c4d03ba28658e 45 hours ago Ready coredns-78fcd69978-w25zj kube-system 0 (default)

350967fe5a9ae 45 hours ago Ready calico-node-24bff calico-system 0 (default)

a05fe07cac170 45 hours ago Ready calico-typha-849b9f85b9-l6sth calico-system 0 (default)

813176f56c107 45 hours ago Ready tigera-operator-76bbbcbc85-x6kzt tigera-operator 0 (default)

f2ff65cae5ff9 45 hours ago Ready kube-proxy-bpqf8 kube-system 0 (default)

defdbef7e8f3f 45 hours ago Ready kube-apiserver-k8s-master-01.example.com kube-system 0 (default)

9a165c4313dc9 45 hours ago Ready kube-scheduler-k8s-master-01.example.com kube-system 0 (default)

b2fd905625b90 45 hours ago Ready kube-controller-manager-k8s-master-01.example.com kube-system 0 (default)

d23524b1b3345 45 hours ago Ready etcd-k8s-master-01.example.com kube-system 0 (default)</code></span></span>

List running containers with crictl:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">sudo crictl ps</span>

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

7dbb7957f9e46 98e04bee275750acf8b94e3e7dec47336ade7efda240556cd39273211d090f74 45 hours ago Running tigera-operator 1 813176f56c107

22a2a949184bf b51ddc1014b04295e85be898dac2cd4c053433bfe7e702d7e9d6008f3779609b 45 hours ago Running kube-scheduler 5 9a165c4313dc9

3db88f5f14181 5425bcbd23c54270d9de028c09634f8e9a014e9351387160c133ccf3a53ab3dc 45 hours ago Running kube-controller-manager 5 b2fd905625b90

b684808843527 4e7da027faaa7b281f076bccb81e94da98e6394d48efe1f46517dcf8b6b05b74 45 hours ago Running calico-apiserver 0 4f9630e87f62f

43ef02d79f68e 5df320a38f63a072dac00e0556ff1fba5bb044b12cb24cd864c03b2fee089a1e 45 hours ago Running calico-kube-controllers 0 cbb3e8f3e027f

f488d1d1957ff 8d147537fb7d1ac8895da4d55a5e53621949981e2e6460976dae812f83d84a44 45 hours ago Running coredns 0 e54575c66d1f4

db2310c6e2bc7 8d147537fb7d1ac8895da4d55a5e53621949981e2e6460976dae812f83d84a44 45 hours ago Running coredns 0 c4d03ba28658e

823b9d049c8f3 355c1ee44040be5aabadad8a0ca367fbadf915c50a6ddcf05b95134a1574c516 45 hours ago Running calico-node 0 350967fe5a9ae

5942ea3535b3c 8473ae43d01b845e72237bf897fda02b7e28594c9aa8bcfdfa2c9a55798a3889 45 hours ago Running calico-typha 0 a05fe07cac170

9072655f275b1 873127efbc8a791d06e85271d9a2ec4c5d58afdf612d490e24fb3ec68e891c8d 45 hours ago Running kube-proxy 0 f2ff65cae5ff9

3855de8a093c1 0048118155842e4c91f0498dd298b8e93dc3aecc7052d9882b76f48e311a76ba 45 hours ago Running etcd 4 d23524b1b3345

87f03873cf9c4 e64579b7d8862eff8418d27bf67011e348a5d926fa80494a6475b3dc959777f5 45 hours ago Running kube-apiserver 4 defdbef7e8f3f</code></span></span>

List resource usage statistics for the containers on a Kubernetes node:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# <span style="color:var(--wp--preset--color--luminous-vivid-amber) !important">sudo crictl stats</span>

CONTAINER CPU % MEM DISK INODES

22a2a949184bf 0.89 27.51MB 232B 13

3855de8a093c1 1.85 126.8MB 297B 17

3db88f5f14181 0.75 68.62MB 404B 21

43ef02d79f68e 0.00 24.32MB 437B 18

5942ea3535b3c 0.09 26.72MB 378B 20

7dbb7957f9e46 0.19 30.97MB 368B 20

823b9d049c8f3 1.55 129.5MB 12.11kB 93

87f03873cf9c4 3.98 475.7MB 244B 14

9072655f275b1 0.00 20.13MB 2.71kB 23

b684808843527 0.71 36.68MB 405B 21

db2310c6e2bc7 0.10 22.51MB 316B 17

f488d1d1957ff 0.09 21.5MB 316B 17</code></span></span>

Get the logs of a container:

<span style="background-color:#051e30"><span style="color:#ffffff"><code>[root@k8s-master-01 ~]# sudo crictl ps

[root@k8s-master-01 ~]# sudo crictl logs <containerid>

<strong># Example</strong>

[root@k8s-master-01 ~]# sudo crictl logs 9072655f275b1

I0924 18:06:37.800801 1 proxier.go:659] "Failed to load kernel module with modprobe. You can ignore this message when kube-proxy is running inside container without mounting /lib/modules" moduleName="nf_conntrack_ipv4"

I0924 18:06:37.815013 1 node.go:172] Successfully retrieved node IP: 192.168.200.10

I0924 18:06:37.815040 1 server_others.go:140] Detected node IP 192.168.200.10

W0924 18:06:37.815055 1 server_others.go:565] Unknown proxy mode "", assuming iptables proxy

I0924 18:06:37.833413 1 server_others.go:206] kube-proxy running in dual-stack mode, IPv4-primary

I0924 18:06:37.833459 1 server_others.go:212] Using iptables Proxier.

I0924 18:06:37.833469 1 server_others.go:219] creating dualStackProxier for iptables.

W0924 18:06:37.833487 1 server_others.go:495] detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6

I0924 18:06:37.833761 1 server.go:649] Version: v1.26.2

I0924 18:06:37.837601 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0924 18:06:37.837912 1 config.go:315] Starting service config controller

I0924 18:06:37.837921 1 shared_informer.go:240] Waiting for caches to sync for service config

I0924 18:06:37.838003 1 config.go:224] Starting endpoint slice config controller

I0924 18:06:37.838007 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

E0924 18:06:37.843521 1 event_broadcaster.go:253] Server rejected event '&v1.Event{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"k8s-master-01.example.com.16a7d4488288392d", GenerateName:"", Namespace:"default", SelfLink:"", UID:"", ResourceVersion:"", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string(nil), OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ClusterName:"", ManagedFields:[]v1.ManagedFieldsEntry(nil)}, EventTime:v1.MicroTime{Time:time.Time{wall:0xc04ba2cb71ef9faf, ext:72545250, loc:(*time.Location)(0x2d81340)}}, Series:(*v1.EventSeries)(nil), ReportingController:"kube-proxy", ReportingInstance:"kube-proxy-k8s-master-01.example.com", Action:"StartKubeProxy", Reason:"Starting", Regarding:v1.ObjectReference{Kind:"Node", Namespace:"", Name:"k8s-master-01.example.com", UID:"k8s-master-01.example.com", APIVersion:"", ResourceVersion:"", FieldPath:""}, Related:(*v1.ObjectReference)(nil), Note:"", Type:"Normal", DeprecatedSource:v1.EventSource{Component:"", Host:""}, DeprecatedFirstTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeprecatedLastTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeprecatedCount:0}': 'Event "k8s-master-01.example.com.16a7d4488288392d" is invalid: involvedObject.namespace: Invalid value: "": does not match event.namespace' (will not retry!)

I0924 18:06:37.938785 1 shared_informer.go:247] Caches are synced for endpoint slice config

I0924 18:06:37.938858 1 shared_informer.go:247] Caches are synced for service config</code></span></span>

Conclusion

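You have now deployed a working multi-node Kubernetes cluster on Rocky Linux 8 servers. The cluster has three control plane nodes, which can provide high availability when placed behind a load balancer that performs node health checks. In this guide we used CRI-O as a lightweight alternative to the Docker container runtime for our Kubernetes setup, and we chose the Calico plugin for Pod networking.

You are now ready to deploy containerized applications on the cluster or join more worker nodes to it. We have previously written other articles to help you get started on your Kubernetes journey: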