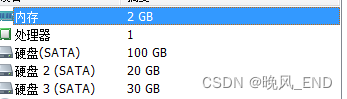

Notes

- The installation may fail if the machines do not have enough free disk space.
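A quick pre-flight check on each machine can catch the disk-space problem before kk runs.

This is a minimal sketch. `check_free_gb` is a hypothetical helper and is not part of KubeKey. It reads the available space from `df -Pk`. The threshold you pass in is your own choice, not a documented KubeSphere minimum.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of KubeKey):
# succeed only if the filesystem holding PATH has at least MIN_GB GiB free.
check_free_gb() {
  local path="$1" min_gb="$2" avail_kb avail_gb
  # -P forces one output line per filesystem; column 4 is available space in 1 KiB blocks
  avail_kb=$(df -Pk "$path" | awk 'NR==2 {print $4}')
  [ -n "$avail_kb" ] || return 2   # df failed, e.g. PATH does not exist
  avail_gb=$(( avail_kb / 1024 / 1024 ))
  if (( avail_gb >= min_gb )); then
    echo "OK: ${avail_gb} GiB free on ${path}"
  else
    echo "Not enough space on ${path}: ${avail_gb} GiB free, ${min_gb} GiB wanted" >&2
    return 1
  fi
}
# Usage on every node (40 is only an example threshold):
#   check_free_gb /var 40
```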

Environment

- master 192.168.1.108

- node1 192.168.1.106

- node2 192.168.1.102

Root SSH login (all machines)

sudo passwd root

sudo apt install openssh-server

# In vim, search with /PermitRootLogin and add the line: PermitRootLogin yes

# Comment out the existing lines: PermitRootLogin prohibit-password and StrictModes yes

sudo vim /etc/ssh/sshd_config

sudo service ssh restart
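The two `sshd_config` edits can also be scripted instead of made by hand in vim.

This is a sketch only. `enable_root_ssh_login` is a hypothetical helper. It assumes the end of the file is not inside an active `Match` block.

sshd uses the first value it reads for each keyword. On releases whose `sshd_config` begins with an `Include` of `sshd_config.d/*.conf`, a `PermitRootLogin` set in one of those files therefore takes precedence over the line appended here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the sshd_config edits above without opening vim.
# Assumes the end of the file is not inside an active "Match" block.
enable_root_ssh_login() {
  local cfg="${1:-/etc/ssh/sshd_config}"
  # Comment out every active PermitRootLogin / StrictModes line ("&" is the matched text)
  sed -i -E 's/^[[:space:]]*(PermitRootLogin|StrictModes)[[:space:]]/#&/' "$cfg"
  # Then allow root to log in with a password
  echo 'PermitRootLogin yes' >> "$cfg"
}
# Usage from a root shell (e.g. after sudo -i):
#   enable_root_ssh_login && sshd -t && service ssh restart
```

Running `sshd -t` before the restart validates the edited file, so a typo does not lock you out of SSH.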

Hostnames

master

hostnamectl set-hostname master

node1

hostnamectl set-hostname node1

node2

hostnamectl set-hostname node2
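If you prefer one command to paste on every machine, the IP-to-hostname plan from the Environment list can be encoded in a small lookup.

This is a sketch. `hostname_for_ip` is a hypothetical helper. The usage line assumes the first address printed by `hostname -I` is the machine's 192.168.1.x address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return the hostname planned for an IP in the Environment list above.
hostname_for_ip() {
  case "$1" in
    192.168.1.108) echo master ;;
    192.168.1.106) echo node1 ;;
    192.168.1.102) echo node2 ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
}
# Usage on each machine, as root (assumes the first address from `hostname -I` is the 192.168.1.x one):
#   name=$(hostname_for_ip "$(hostname -I | awk '{print $1}')") && hostnamectl set-hostname "$name"
```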

KubeKey (kk)

master

# Download KubeKey from the China mirror; keep KKZONE=cn set in the shell that later runs ./kk

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -

chmod +x kk

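# Generate a config file; on success, config-sample.yaml is created in the current directory

./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

# Edit it (the fields to change are listed below)

vim config-sample.yaml

# Start the installation

./kk create cluster -f config-sample.yaml

# Follow the installation log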

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

Fields to change in config-sample.yaml

spec:
  hosts:  # name is the hostname; address and internalAddress are the node's internal IP; user/password are the node's login user and password
  - {name: master, address: 192.168.1.108, internalAddress: 192.168.1.108, user: root, password: 12345678}
  - {name: node1, address: 192.168.1.106, internalAddress: 192.168.1.106, user: root, password: 12345678}
  - {name: node2, address: 192.168.1.102, internalAddress: 192.168.1.102, user: root, password: 12345678}
  roleGroups:
    etcd:    # etcd runs on master
    - master
    master:  # master node(s), listed by hostname
    - master
    worker:  # worker nodes, listed by hostname
    - node1
    - node2
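With more nodes, typing the `hosts` entries by hand gets error-prone. They can be generated from name/IP pairs instead.

This is a sketch. `print_kk_hosts` is a hypothetical helper. Like the example above, it assumes `address` equals `internalAddress` and that every node uses the same login user and password.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print spec.hosts entries for config-sample.yaml.
# Usage: print_kk_hosts USER PASSWORD NAME1 IP1 [NAME2 IP2 ...]
# Assumes address == internalAddress (all nodes on one internal network).
print_kk_hosts() {
  local user="$1" password="$2"
  shift 2
  # Consume the remaining arguments two at a time: hostname, IP
  while (( $# >= 2 )); do
    printf '  - {name: %s, address: %s, internalAddress: %s, user: %s, password: %s}\n' \
      "$1" "$2" "$2" "$user" "$password"
    shift 2
  done
}
# Example:
#   print_kk_hosts root 12345678 master 192.168.1.108 node1 192.168.1.106 node2 192.168.1.102
```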

Output when the installation finishes

Console: http://192.168.1.108:30880

Account: admin

Password: P@88w0rd

# Wait until all pods are Running

kubectl get pod -A
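Rather than rerunning `kubectl get pod -A` by eye, you can poll until every pod is Running (or Completed, for finished jobs).

This is a sketch. `not_ready_pods` is a hypothetical helper that parses the STATUS column of `kubectl get pod -A --no-headers`. It does not look at the READY column, so a Running pod that is still 0/1 ready is not reported.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -A --no-headers` output on stdin,
# print every pod whose STATUS (4th column) is neither Running nor Completed,
# and return 1 if there was at least one. The READY column is not checked.
not_ready_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4; bad = 1 }
       END { exit bad }'
}
# Usage on master (polls every 15 seconds):
#   until kubectl get pod -A --no-headers | not_ready_pods; do sleep 15; done
```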

Then open http://192.168.1.108:30880 and log in with the account and password above.

If you need NFS storage, continue with the steps below.

NFS

master

sudo apt install nfs-kernel-server

# Note: to uninstall the server, run apt remove nfs-kernel-server

# Note: to unmount the share on a node, run umount -f -l /nfs/data
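The master steps install the NFS server but do not show /nfs/data being exported. The nodes' `showmount -e` and `mount` commands rely on that export.

The following is a minimal sketch of the missing step. `add_nfs_export` is a hypothetical helper. The default export options are assumptions: read-write for the 192.168.1.0/24 subnet, plus `no_root_squash`, which many guides use with this provisioner. Adjust them to your own network and security needs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create DIR and add "DIR OPTIONS" to an exports file,
# unless an entry for DIR is already present.
# The default options are an assumption (192.168.1.0/24 subnet, no_root_squash).
add_nfs_export() {
  local dir="$1" exports_file="${2:-/etc/exports}"
  local opts="${3:-192.168.1.0/24(rw,sync,no_subtree_check,no_root_squash)}"
  mkdir -p "$dir"
  # -s: stay quiet if the exports file does not exist yet
  if ! grep -qs "^${dir}[[:space:]]" "$exports_file"; then
    printf '%s %s\n' "$dir" "$opts" >> "$exports_file"
  fi
}
# Usage on master, as root:
#   add_nfs_export /nfs/data && exportfs -ra && showmount -e localhost
```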

node1

sudo apt install nfs-common

showmount -e 192.168.1.108

mkdir -p /nfs/data

mount -t nfs 192.168.1.108:/nfs/data /nfs/data

node2

sudo apt install nfs-common

showmount -e 192.168.1.108

mkdir -p /nfs/data

mount -t nfs 192.168.1.108:/nfs/data /nfs/data
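The `mount` commands above do not survive a reboot. One common way to make the mount persistent is an `/etc/fstab` entry.

This is a sketch. `add_nfs_fstab_entry` is a hypothetical helper. The `defaults,_netdev` options are a typical choice, not something the original steps specify.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an NFS entry to an fstab-style file (default /etc/fstab)
# unless some uncommented line already uses MOUNT_POINT as its mount point.
# _netdev marks it as a network filesystem, mounted only after networking is up.
add_nfs_fstab_entry() {
  local server="$1" export_path="$2" mount_point="$3" fstab="${4:-/etc/fstab}"
  if ! awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' \
       "$fstab" 2>/dev/null; then
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$export_path" "$mount_point" >> "$fstab"
  fi
}
# Usage on node1 and node2, as root:
#   add_nfs_fstab_entry 192.168.1.108 /nfs/data /nfs/data && mount -a
```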

Default StorageClass

master

vim nfs-storage.yaml

kubectl apply -f nfs-storage.yaml
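After applying the file, you can check that the provisioner really creates volumes by submitting a small test claim against `nfs-storage`.

This is a sketch. `nfs_test_pvc` is a hypothetical helper, and the claim name and size are example values. It sets `storageClassName` explicitly for a reason. KubeKey usually also installs an OpenEBS `local` class marked as default. On this Kubernetes version, a PVC without a class may be rejected while two classes are marked `(default)` in `kubectl get storageclass`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a small PersistentVolumeClaim that asks the
# nfs-storage StorageClass for a volume. NAME and SIZE default to example values.
nfs_test_pvc() {
  local name="${1:-nfs-test-pvc}" size="${2:-200Mi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}
# Usage on master:
#   nfs_test_pvc | kubectl apply -f -
#   kubectl get pvc nfs-test-pvc      # STATUS should become Bound
#   ls /nfs/data                      # a directory for the claim should appear
#   nfs_test_pvc | kubectl delete -f -
```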

nfs-storage.yaml

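- Change the two IP addresses below (the NFS_SERVER value and the nfs volume's server) to your NFS server's address.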

## Creates a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive (keep a copy of) the PV's data when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #    limits:
          #      cpu: 10m
          #    requests:
          #      cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.1.108 ## your NFS server's address
            - name: NFS_PATH
              value: /nfs/data  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.108
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
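Instead of editing the two NFS server IPs in vim before running `kubectl apply`, you can substitute them with sed.

This is a sketch. `set_nfs_server` is a hypothetical helper. Its default old address is the 192.168.1.108 used in this guide, and 192.168.1.200 in the usage line is only an example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the NFS server IP in nfs-storage.yaml (the NFS_SERVER
# value and the nfs volume's server). OLD_IP defaults to the address used in this guide.
# Prints how many lines contain NEW_IP afterwards (2 for the file above).
set_nfs_server() {
  local file="$1" new_ip="$2" old_ip="${3:-192.168.1.108}" old_re
  # Escape the dots so sed matches the old IP literally instead of "any character"
  old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
  sed -i "s/${old_re}/${new_ip}/g" "$file"
  grep -cF "$new_ip" "$file"
}
# Usage on master:
#   set_nfs_server nfs-storage.yaml 192.168.1.200 && kubectl apply -f nfs-storage.yaml
```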
