The model architecture and training code come from this post: https://blog.csdn.net/weixin_41477928/article/details/123385000

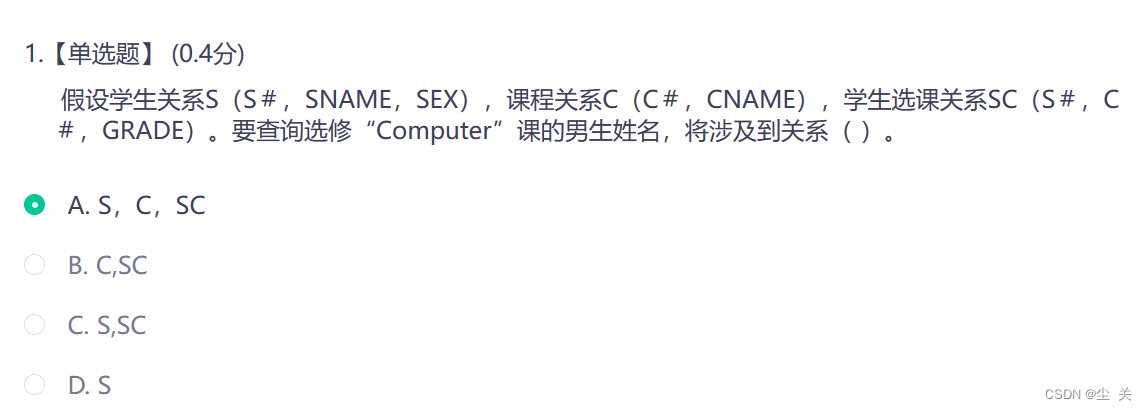

I added code for offline testing on top of it:

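- The first run needs network access, because the code downloads the open-source MNIST dataset.

- During training, the model from each of the 10 epochs is saved under ./models; you may need to create the models folder yourself beforehand. The output during training looks like this: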

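      [1, 300] loss:0.257
      [1, 600] loss:0.078
      [1, 900] loss:0.060
      Accuracy on test set:98 %
      ...
      [10, 300] loss:0.002
      [10, 600] loss:0.003
      [10, 900] loss:0.004
      Accuracy on test set:99 %

- To load a saved model file and run inference on a handwritten digit image to see the prediction, go to the main block at the very bottom, comment out the first of the two function calls, and uncomment the second.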

For example, testing with the following image:


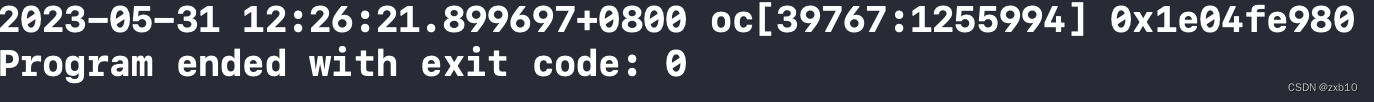

Output:

The predicted digit is 5
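
As mentioned above, the script saves its checkpoints into a models folder that may not exist yet. Here is a minimal sketch of creating it from Python instead of by hand, using only the standard library. The helper name ensure_dir is made up for illustration and is not part of the original script, and the demo runs inside a temporary directory so it does not touch the project:

```python
import os
import tempfile


def ensure_dir(path):
    """Create `path` (and any missing parents) if it does not exist yet."""
    os.makedirs(path, exist_ok=True)
    return path


# Demonstrate in a temporary directory so nothing is written to the project.
with tempfile.TemporaryDirectory() as tmp:
    models_dir = ensure_dir(os.path.join(tmp, "models"))
    created = os.path.isdir(models_dir)
    ensure_dir(models_dir)  # calling it a second time is harmless
```

In the real script, something like ensure_dir("models") before the first torch.save call would presumably be enough.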


    import torch
    from torchvision import transforms  # commonly used image transforms
    from torchvision import datasets
    from torch.utils.data import DataLoader
    import torch.nn.functional as F
    import cv2

    # Use the GPU if one is available, otherwise fall back to the CPU. cuda:0 is the first GPU.
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Batch size and preprocessing
    batch_size = 64
    transform = transforms.Compose(
        [
            transforms.ToTensor(),  # convert the image to a tensor scaled to [0, 1]
            transforms.Normalize((0.1307,), (0.3081,)),  # 0.1307 is the mean, 0.3081 the standard deviation
        ]
    )

    # Training and test sets (downloaded into root on the first run)
    train_dataset = datasets.MNIST(root='./data/',
                                   train=True,
                                   download=True,
                                   transform=transform)
    train_loader = DataLoader(train_dataset,
                              shuffle=True,
                              batch_size=batch_size)
    test_dataset = datasets.MNIST(root='./data/',
                                  train=False,
                                  download=True,
                                  transform=transform)
    test_loader = DataLoader(test_dataset,
                             shuffle=False,  # order does not affect accuracy, so no need to shuffle
                             batch_size=batch_size)

    # Define the neural network
    class MyNet(torch.nn.Module):
        def __init__(self):
            super(MyNet, self).__init__()
            self.layer1 = torch.nn.Sequential(
                torch.nn.Conv2d(1, 25, kernel_size=3),
                torch.nn.BatchNorm2d(25),
                torch.nn.ReLU(inplace=True)
            )
            self.layer2 = torch.nn.Sequential(
                torch.nn.MaxPool2d(kernel_size=2, stride=2)
            )
            self.layer3 = torch.nn.Sequential(
                torch.nn.Conv2d(25, 50, kernel_size=3),
                torch.nn.BatchNorm2d(50),
                torch.nn.ReLU(inplace=True)
            )
            self.layer4 = torch.nn.Sequential(
                torch.nn.MaxPool2d(kernel_size=2, stride=2)
            )
            self.fc = torch.nn.Sequential(
                torch.nn.Linear(50 * 5 * 5, 1024),
                torch.nn.ReLU(inplace=True),
                torch.nn.Linear(1024, 128),
                torch.nn.ReLU(inplace=True),
                torch.nn.Linear(128, 10)
            )

        def forward(self, x):
            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)
            x = x.view(x.size(0), -1)  # flatten before the fully connected layers
            x = self.fc(x)
            return x
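
The 50 * 5 * 5 in the first Linear layer is the flattened size of the feature map that comes out of layer4. Here is a quick sketch of where that number comes from, assuming the standard 28x28 MNIST input and the default stride 1 and padding 0 of the two Conv2d layers. conv_out is a hypothetical helper written for this note, not a PyTorch function:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a conv or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                   # MNIST images are 28x28
size = conv_out(size, kernel=3)             # layer1: 3x3 conv
size = conv_out(size, kernel=2, stride=2)   # layer2: 2x2 max pool
size = conv_out(size, kernel=3)             # layer3: 3x3 conv
size = conv_out(size, kernel=2, stride=2)   # layer4: 2x2 max pool

flat_features = 50 * size * size            # 50 channels after layer3
print(size, flat_features)
```

If the kernel sizes or the input resolution change, the first Linear layer has to be resized to match.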

    # Instantiate the network
    model = MyNet()
    model.to(device)  # move the model's parameters to the selected device

    def train(epochs):
        # `epochs` is the zero-based index of the current epoch
        model.train()  # BatchNorm uses batch statistics while training
        criterion = torch.nn.CrossEntropyLoss()  # cross-entropy loss
        optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.5)  # momentum helps push past shallow local minima
        running_loss = 0.0
        for batch_idx, data in enumerate(train_loader, 0):
            inputs, target = data
            inputs, target = inputs.to(device), target.to(device)  # move the batch to the selected device
            optimizer.zero_grad()
            # forward + backward + update
            outputs = model(inputs)
            loss = criterion(outputs, target)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
            if batch_idx % 300 == 299:  # print once every 300 mini-batches instead of every iteration
                print('[%d,%5d] loss:%.3f' % (epochs + 1, batch_idx + 1, running_loss / 300))
                running_loss = 0.0
        # save the weights once at the end of each epoch
        torch.save(model.state_dict(), 'models/model_{}.pth'.format(epochs))

    def test():
        model.eval()  # BatchNorm uses its running statistics while evaluating
        correct = 0
        total = 0
        with torch.no_grad():  # no gradients are computed inside this block
            for data in test_loader:
                inputs, target = data
                inputs, target = inputs.to(device), target.to(device)  # move the batch to the selected device
                outputs = model(inputs)
                _, predicted = torch.max(outputs, dim=1)  # _ is each row's max value, predicted is its index
                total += target.size(0)
                correct += (predicted == target).sum().item()
        print('Accuracy on test set:%d %%' % (100 * correct / total))

    # Option 1: train and test
    def train_test():
        for epoch in range(10):
            train(epoch)
            test()

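    # Option 2: load the weights saved locally, then run inference to get a prediction
    def load_model_test():
        # map_location lets weights saved on a GPU load on a CPU-only machine
        model.load_state_dict(torch.load("models/model_9.pth", map_location=device))
        model.eval()

        # Preprocess a local handwritten-digit image with OpenCV
        img = cv2.imread('data/5-1.png', cv2.IMREAD_GRAYSCALE)
        if img is None:
            raise FileNotFoundError('could not read data/5-1.png')
        img = cv2.resize(img, (28, 28))
        img = img / 255.0
        img = (img - 0.1307) / 0.3081  # same normalization as the training transform
        img = torch.from_numpy(img).float().unsqueeze(0).unsqueeze(0)  # shape (1, 1, 28, 28)
        img = img.to(device)

        with torch.no_grad():
            output = model(img)  # run inference

        # Export the model to ONNX
        torch.onnx.export(model,
                          img,
                          "./models/model_9.onnx",
                          input_names=['i0'],  # name of the input tensor
                          export_params=True,  # store the trained weights in the file
                          opset_version=11,  # ONNX opset version to target
                          do_constant_folding=True,  # apply constant-folding optimization
                          operator_export_type=torch.onnx.OperatorExportTypes.ONNX_ATEN_FALLBACK  # fall back to ATen ops where no ONNX op exists
                          )

        pred = torch.argmax(output, dim=1)
        print(f'The predicted digit is {pred.item()}')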
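
One thing to watch when feeding in your own image: training normalizes the inputs with mean 0.1307 and standard deviation 0.3081, and MNIST digits are light strokes on a dark background, so a dark-on-light photo probably needs inverting first. Below is a rough pure-Python sketch of both steps, assuming 8-bit grayscale pixel values in a nested list. The function name preprocess and the invert flag are hypothetical and not part of the script:

```python
MNIST_MEAN = 0.1307  # mean used by the training transform
MNIST_STD = 0.3081   # standard deviation used by the training transform


def preprocess(pixels, invert=False):
    """Scale 0..255 grayscale pixels to [0, 1], then normalize like training.

    pixels: 2-D list of ints; invert=True flips dark-on-light images so the
    strokes end up light on a dark background, as in MNIST.
    """
    result = []
    for row in pixels:
        new_row = []
        for p in row:
            if invert:
                p = 255 - p
            new_row.append((p / 255.0 - MNIST_MEAN) / MNIST_STD)
        result.append(new_row)
    return result


# A white pixel from a dark-on-light photo maps to the same value
# as a black MNIST background pixel once it is inverted.
background = preprocess([[0]])[0][0]
inverted_paper = preprocess([[255]], invert=True)[0][0]
```

With NumPy arrays the same thing is a one-liner per step; the loop version is only here to keep the sketch dependency-free.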

    if __name__ == '__main__':
        train_test()  # train, test, and save the trained model
        # load_model_test()  # load the saved weights and run inference
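
Instead of commenting and uncommenting the two calls, a command-line flag could pick the mode. Here is a minimal sketch using argparse from the standard library. The --mode flag and the parse_mode and run helpers are hypothetical, and two stand-in functions take the place of train_test and load_model_test so the snippet runs on its own:

```python
import argparse


def parse_mode(argv=None):
    """Read a hypothetical --mode flag; defaults to training."""
    parser = argparse.ArgumentParser(description="MNIST demo entry point")
    parser.add_argument("--mode", choices=["train", "predict"], default="train")
    return parser.parse_args(argv).mode


def run(mode, train_fn, predict_fn):
    """Call the function that matches the selected mode."""
    return train_fn() if mode == "train" else predict_fn()


# Stand-ins for train_test() and load_model_test() from the script.
def fake_train_test():
    return "trained"


def fake_load_model_test():
    return "predicted"


result = run(parse_mode(["--mode", "predict"]), fake_train_test, fake_load_model_test)
print(result)
```

In the script itself, the call would presumably become run(parse_mode(), train_test, load_model_test) inside the main block, so that running with --mode predict skips training.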