从零开始进行高精度手眼标定 eye in hand(小白向)2 Tsai轴角法与四元数法编程实现

- 前言

- Tsai标定方法原理推导

- 轴角方法

- 原理

- matlab编程实现

- 四元数方法

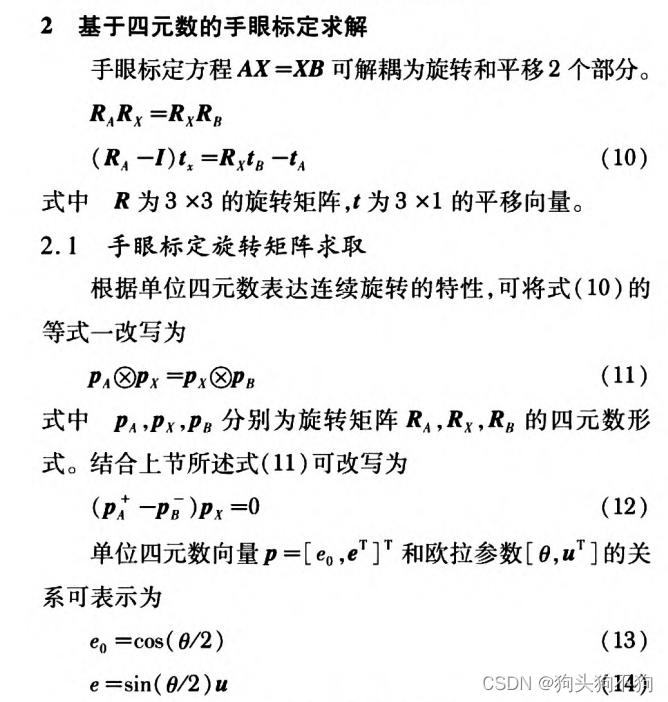

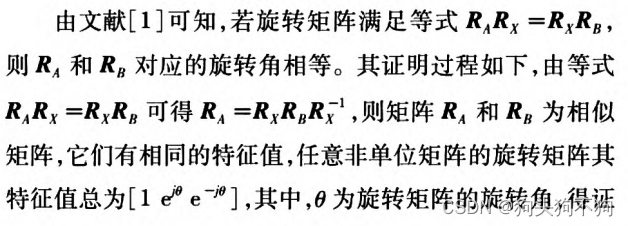

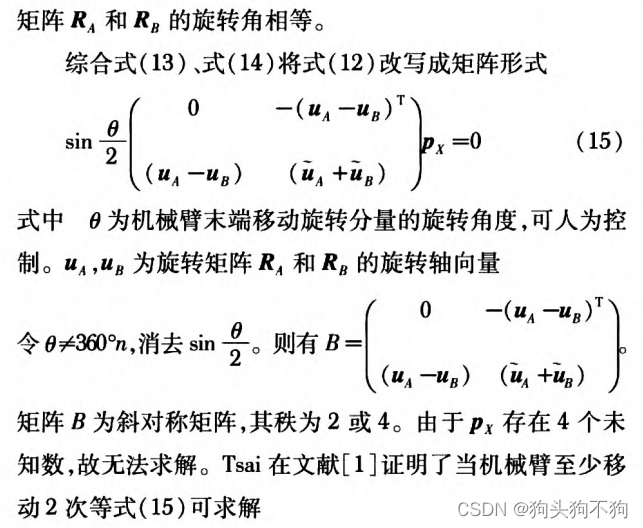

- 原理

- matlab编程实现

前言

最近由于组内的相关工作需求,需要进行机器人的高精度标定。原始的标定精度在6mm左右,虽然听起来是非常微小的偏差,但是由于研究方向手术机器人对精度要求极高。且在运动过程中,深度信息误差、畸变误差、机械误差、坐标系转换等一系列误差累积环节,将导致误差放大,因此远不能达到要求。

在经过了为其两周的研究和编程探索,最后成功将标定精度提升到0.5mm,达到了任务要求。

在研究和标定中,查阅了很多博客和相关资料,也踩了很多坑,作为也是小白的自己在研究和学习中发现当前的研究文献和博客最重要的问题是,没有从Tsai方法中AX=XB中,对A矩阵和B矩阵进行详细描述,也没有给出具体的计算代码和过程。默认读者已经搞清楚矩阵AB的物理意义和计算方式,对小白并不友好,笔者在进行手眼标定的过程中也经常由于计算错误A矩阵和B矩阵的左乘或右乘,得到错误的AB矩阵的输入数据,耽误了一些时间,因此在此记录

本系列的重点有三个:

- 原理中A,B矩阵的推导和代码计算

- Tsai轴角方法和四元数等二步计算方法的实现

- 基于非线性优化的高精度手眼标定方法的原理与代码实现

Tsai标定方法原理推导

传送门:

轴角方法

原理

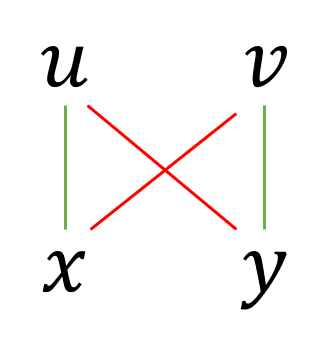

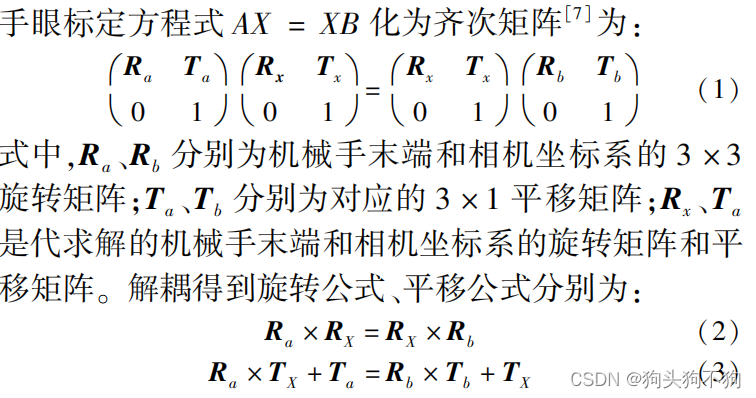

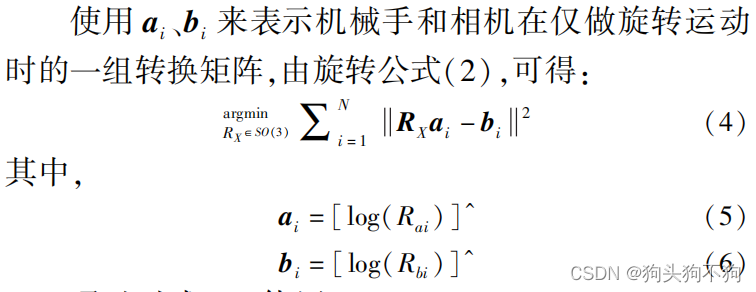

以旋转矩阵进行公式表示可以得到分别关于旋转矩阵和平移矩阵的等式。对等式进行解耦可以分别的得到关于手眼变换矩阵的旋转公式和平移公式。

通过轴角法对tsai进行求解首先要通过机械手和相机在旋转过程中计算旋转轴向向量和旋转角度,通过两方进行log处理可以使用SVD方法求解旋转矩阵

最后通过,旋转矩阵与平移矩阵的关系即通过公式(3)进行求解,得到平移矩阵T的值

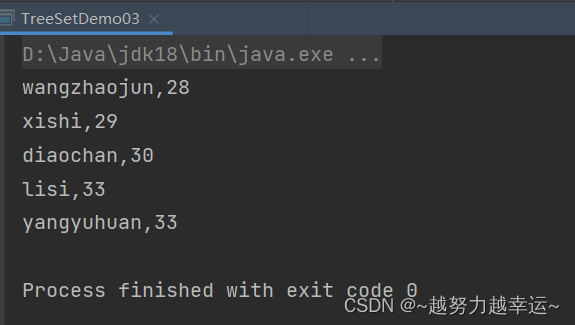

matlab编程实现

% clc;

%% 通过四元数方法求解手眼变换矩阵

img_num =44;

%% RobotEffectorPose; %机器人末端位姿导入

x = load("E:\研究生学习\手眼标定\IMG_20230516_3\pos.txt");

pos = zeros(4,4,img_num);

% 各个点机械臂位姿变化矩阵

for i=1:1:img_num

pos(:,:,i) = x(((4*(i-1)+1):4*i),:);

end

% 整合到连续矩阵中

REs = [];

for i=1:1:img_num

REs = [REs;pos(:,:,i)];

end

%% 标定数据导入

load('calibrationSession0516_3.mat')

TE = calibrationSession.EstimationErrors.ExtrinsicsErrors.TranslationVectorsError;

% TE = estimationErrors.ExtrinsicsErrors.TranslationVectorsError;

Tex = mean(TE(:,1)); Tey = mean(TE(:,2)); Tez = mean(TE(:,3));

errorT = [Tex,Tey,Tez];

%% 求末端和摄像机转轴kl, kr.

kl=[]; kr=[]; theta_r=[]; theta_l=[];

for i = 1:img_num-2

%% 求摄像机转轴Kr

Rr1 = calibrationSession.CameraParameters.RotationMatrices(:,:,i);

Rr2 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+1);

Rr3 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+2);

% Tr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)+errorT;

% Tr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)+errorT;

% Tr3 = calibrationSession.CameraParameters.TranslationVectors(i+2,:)+errorT;

Tr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)+errorT;

Tr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)+errorT;

Tr3 = calibrationSession.CameraParameters.TranslationVectors(i+2,:)+errorT;

% Rrt1 = [Rr1' (Tr1/1000)';0,0,0,1]/[Rr2' (Tr2/1000)';0,0,0,1];

% Rrt2 = [Rr2' (Tr2/1000)';0,0,0,1]/[Rr3' (Tr3/1000)';0,0,0,1];

Rrt1 = [Rr1' (Tr1/1000)';0,0,0,1]/[Rr2' (Tr2/1000)';0,0,0,1];

Rrt2 = [Rr2' (Tr2/1000)';0,0,0,1]/[Rr3' (Tr3/1000)';0,0,0,1];

[fr1, thetar1] = InvRot(Rrt1);

[fr2, thetar2] = InvRot(Rrt2);

kr = [kr,fr1,fr2,cross(fr1,fr2)];

theta_r = [theta_r;thetar1;thetar2];

%% 求末端转轴Kl

Re1 = REs(4*i-3:4*i,:);

Re2 = REs(4*(i+1)-3:4*(i+1),:);

Re3 = REs(4*(i+2)-3:4*(i+2),:);

Ret1 = Re1\Re2;

Ret2 = Re2\Re3;

Rlt1 = Ret1(1:3,1:3);

Rlt2 = Ret2(1:3,1:3);

[fl1, thetal1] = InvRot(Rlt1);

[fl2, thetal2] = InvRot(Rlt2);

kl = [kl,fl1,fl2,cross(fl1,fl2)];

theta_l = [theta_l;thetal1;thetal2];

end

%% 求手眼矩阵旋转关系

Rm = kl*pinv(kr); %-----------------------------------------

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%% 求手眼关系平移向量

Prs=[]; Pls=[];

for i = 1:img_num-1

%% (E-Rli)*Pm = Pli-Rm*Pri ----------构造右端

%% 末端平移向量

TRl1 = REs(4*i-3:4*i,:);

TRl2 = REs(4*(i+1)-3:4*(i+1),:);

Rl = TRl1\TRl2;

Pli = Rl(1:3,4); %--------------Pli

%% 相机外参平移向量

TRr1 = calibrationSession.CameraParameters.RotationMatrices(:,:,i);

TRr2 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+1);

TTr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)-errorT;

TTr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)-errorT;

% TTr1 = calibrationSession.CameraParameters.TranslationVectors(i,:);

% TTr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:);

TRlt = [TRr1' (TTr1/1000)';0,0,0,1]/[TRr2' (TTr2/1000)';0,0,0,1];

Pri = TRlt(1:3,4);

Pr = Pli - Rm*Pri;

Prs = [Prs;Pr]; %--------------存储

%% (E-Rli)*Pm = Pli-Rm*Pri ----------构造左端

E=[1,0,0;0,1,0;0,0,1];

Rli = Rl(1:3,1:3);

Pl = E - Rli;

Pls = [Pls;Pl]; %--------------存储

end

%% 求手眼矩阵平移向量

Pm = pinv(Pls)*Prs; %-----------------------------------------

H_E = [Rm,Pm;0,0,0,1]

%clearvars -except cameraParams estimationErrors H_E ;

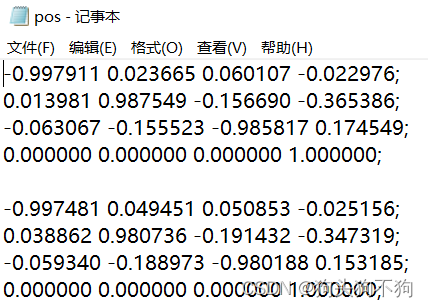

在上述代码中需要注意的两点是数据的输入格式

其中矩阵A的输入为:

x = load("E:\研究生学习\手眼标定\IMG_20230516_3\pos.txt");

pos.tx文件中储存的是机械臂各个位姿点,由机器人基座标系到末端坐标系的变换矩阵

矩阵B的输入为:

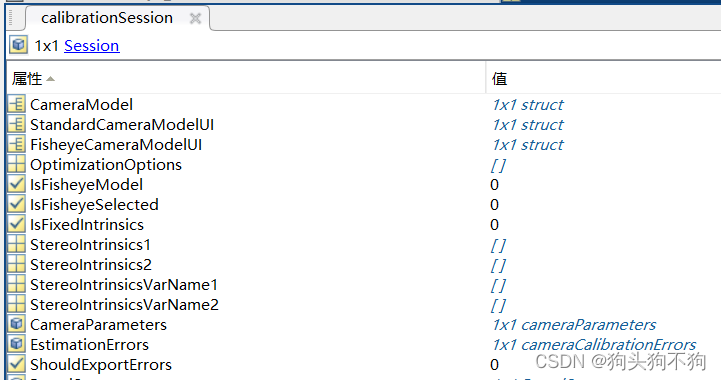

load('calibrationSession0516_3.mat')

其中calibrationSession0516_3.mat是通过相机标定工具箱保存在代码同一文件夹下的标定参数

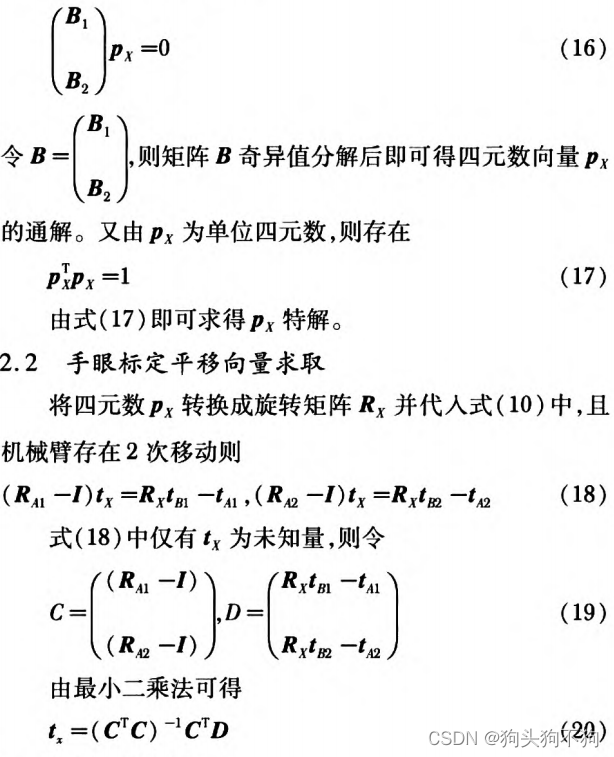

四元数方法

原理

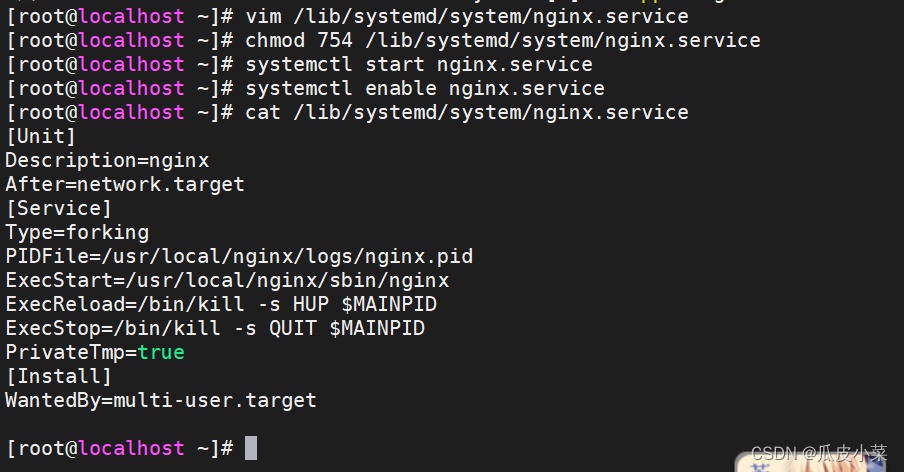

matlab编程实现

% clc;

%% 通过四元数方法求解手眼变换矩阵

img_num =46;

%% RobotEffectorPose; %机器人末端位姿导入

x = load("E:\研究生学习\手眼标定\IMG_20230516_3\pos.txt");

pos = zeros(4,4,img_num);

% 各个点机械臂位姿变化矩阵

for i=1:1:img_num

pos(:,:,i) = x(((4*(i-1)+1):4*i),:);

end

% 整合到连续矩阵中

REs = [];

for i=1:1:img_num

REs = [REs;pos(:,:,i)];

end

%% 标定数据导入

load('calibrationSession0516_3.mat')

TE = calibrationSession.EstimationErrors.ExtrinsicsErrors.TranslationVectorsError;

% TE = estimationErrors.ExtrinsicsErrors.TranslationVectorsError;

Tex = mean(TE(:,1)); Tey = mean(TE(:,2)); Tez = mean(TE(:,3));

errorT = [Tex,Tey,Tez];

%% 求末端和摄像机转轴kl, kr.

kl=[]; kr=[]; theta_r=[]; theta_l=[];

for i = 1:img_num-2

%% 求摄像机转轴Kr

Rr1 = calibrationSession.CameraParameters.RotationMatrices(:,:,i);

Rr2 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+1);

Rr3 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+2);

% Tr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)+errorT;

% Tr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)+errorT;

% Tr3 = calibrationSession.CameraParameters.TranslationVectors(i+2,:)+errorT;

Tr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)+errorT;

Tr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)+errorT;

Tr3 = calibrationSession.CameraParameters.TranslationVectors(i+2,:)+errorT;

% Rrt1 = [Rr1' (Tr1/1000)';0,0,0,1]/[Rr2' (Tr2/1000)';0,0,0,1];

% Rrt2 = [Rr2' (Tr2/1000)';0,0,0,1]/[Rr3' (Tr3/1000)';0,0,0,1];

Rrt1 = [Rr1' (Tr1/1000)';0,0,0,1]/[Rr2' (Tr2/1000)';0,0,0,1];

Rrt2 = [Rr2' (Tr2/1000)';0,0,0,1]/[Rr3' (Tr3/1000)';0,0,0,1];

[fr1, thetar1] = InvRot(Rrt1);

[fr2, thetar2] = InvRot(Rrt2);

kr = [kr,fr1,fr2,cross(fr1,fr2)];

theta_r = [theta_r;thetar1;thetar2];

%% 求末端转轴Kl

Re1 = REs(4*i-3:4*i,:);

Re2 = REs(4*(i+1)-3:4*(i+1),:);

Re3 = REs(4*(i+2)-3:4*(i+2),:);

Ret1 = Re1\Re2;

Ret2 = Re2\Re3;

Rlt1 = Ret1(1:3,1:3);

Rlt2 = Ret2(1:3,1:3);

[fl1, thetal1] = InvRot(Rlt1);

[fl2, thetal2] = InvRot(Rlt2);

kl = [kl,fl1,fl2,cross(fl1,fl2)];

theta_l = [theta_l;thetal1;thetal2];

end

Rm = kl*pinv(kr); %-----------------------------------------

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%% 求手眼关系平移向量

Prs=[]; Pls=[];

C=[];D=[];

for i = 1:img_num-1

%% tx = (C'C)^(-1)*C'*D ----------构造C

%% 末端平移向量

TRl1 = REs(4*i-3:4*i,:);

TRl2 = REs(4*(i+1)-3:4*(i+1),:);

Rl = TRl1\TRl2;

E=[1,0,0;0,1,0;0,0,1];

C = [C;E-Rl(1:3,1:3)]; %--------------Pli

%% 相机外参平移向量

TRr1 = calibrationSession.CameraParameters.RotationMatrices(:,:,i);

TRr2 = calibrationSession.CameraParameters.RotationMatrices(:,:,i+1);

TTr1 = calibrationSession.CameraParameters.TranslationVectors(i,:)-errorT;

TTr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:)-errorT;

% TTr1 = calibrationSession.CameraParameters.TranslationVectors(i,:);

% TTr2 = calibrationSession.CameraParameters.TranslationVectors(i+1,:);

%% tx = (C'C)^(-1)*C'*D ----------构造D

TRlt = [TRr1' (TTr1/1000)';0,0,0,1]/[TRr2' (TTr2/1000)';0,0,0,1];% 摄像头变换矩阵

Tb = TRlt(1:3,4); %摄像头

Rx = Rl(1:3,1:3);

Ta = Rl(1:3,4); %末端

D = [D;Rm*Tb-Ta];

end

Pm = pinv(C'*C)*C'*D;

%% 求手眼矩阵平移向量

% Pm = pinv(Pls)*Prs; %-----------------------------------------

H_E = [Rm,Pm;0,0,0,1]

%clearvars -except cameraParams estimationErrors H_E ;

传送门:

1.【从零开始进行高精度手眼标定 eye in hand(小白向)1 原理推导】

2.【从零开始进行高精度手眼标定 eye in hand(小白向)2 Tsai轴角法与四元数法编程实现】

3.【从零开始进行高精度手眼标定 eye in hand(小白向)3 非线性高精度标定法编程实现】