然后是model的搭建:

在creat_model函数中:

import torch

def create_model(opt):

if opt.model == 'pix2pixHD':

from .pix2pixHD_model import Pix2PixHDModel, InferenceModel

if opt.isTrain:

model = Pix2PixHDModel()

else:

model = InferenceModel()

else:

from .ui_model import UIModel

model = UIModel()

model.initialize(opt)

if opt.verbose:

print("model [%s] was created" % (model.name()))

if opt.isTrain and len(opt.gpu_ids) and not opt.fp16:

model = torch.nn.DataParallel(model, device_ids=opt.gpu_ids)

return model

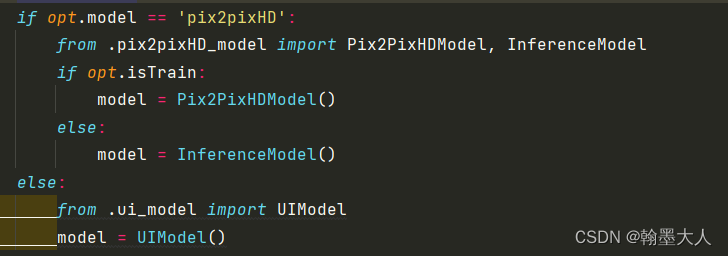

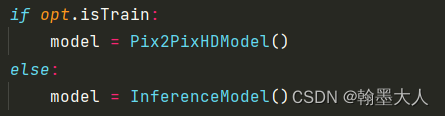

首先判断model:

train的时候用第一个,否则用第二个:

先看第一个:

import numpy as np

import torch

import os

from torch.autograd import Variable

from util.image_pool import ImagePool

from .base_model import BaseModel

from . import networks

class Pix2PixHDModel(BaseModel):

def name(self):

return 'Pix2PixHDModel'

def init_loss_filter(self, use_gan_feat_loss, use_vgg_loss):

flags = (True, use_gan_feat_loss, use_vgg_loss, True, True)

def loss_filter(g_gan, g_gan_feat, g_vgg, d_real, d_fake):

return [l for (l,f) in zip((g_gan,g_gan_feat,g_vgg,d_real,d_fake),flags) if f]

return loss_filter

def initialize(self, opt):

BaseModel.initialize(self, opt)

if opt.resize_or_crop != 'none' or not opt.isTrain: # when training at full res this causes OOM

torch.backends.cudnn.benchmark = True

self.isTrain = opt.isTrain

self.use_features = opt.instance_feat or opt.label_feat

self.gen_features = self.use_features and not self.opt.load_features

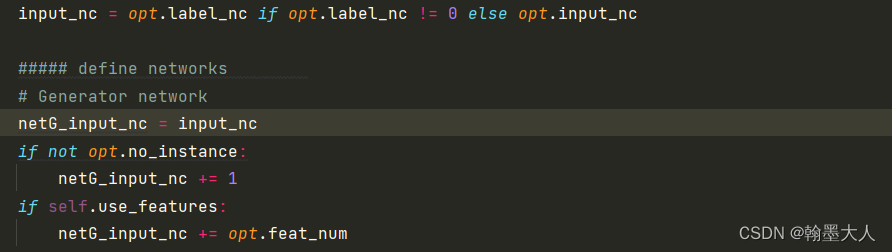

input_nc = opt.label_nc if opt.label_nc != 0 else opt.input_nc

##### define networks

# Generator network

netG_input_nc = input_nc

if not opt.no_instance:

netG_input_nc += 1

if self.use_features:

netG_input_nc += opt.feat_num

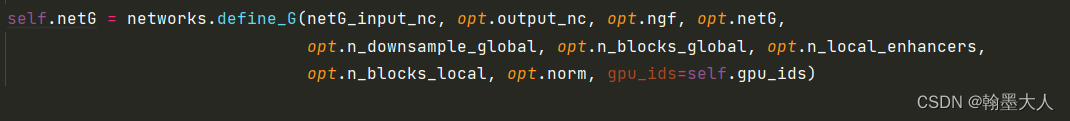

self.netG = networks.define_G(netG_input_nc, opt.output_nc, opt.ngf, opt.netG,

opt.n_downsample_global, opt.n_blocks_global, opt.n_local_enhancers,

opt.n_blocks_local, opt.norm, gpu_ids=self.gpu_ids)

# Discriminator network

if self.isTrain:

use_sigmoid = opt.no_lsgan

netD_input_nc = input_nc + opt.output_nc

if not opt.no_instance:

netD_input_nc += 1

self.netD = networks.define_D(netD_input_nc, opt.ndf, opt.n_layers_D, opt.norm, use_sigmoid,

opt.num_D, not opt.no_ganFeat_loss, gpu_ids=self.gpu_ids)

### Encoder network

if self.gen_features:

self.netE = networks.define_G(opt.output_nc, opt.feat_num, opt.nef, 'encoder',

opt.n_downsample_E, norm=opt.norm, gpu_ids=self.gpu_ids)

if self.opt.verbose:

print('---------- Networks initialized -------------')

# load networks

if not self.isTrain or opt.continue_train or opt.load_pretrain:

pretrained_path = '' if not self.isTrain else opt.load_pretrain

self.load_network(self.netG, 'G', opt.which_epoch, pretrained_path)

if self.isTrain:

self.load_network(self.netD, 'D', opt.which_epoch, pretrained_path)

if self.gen_features:

self.load_network(self.netE, 'E', opt.which_epoch, pretrained_path)

# set loss functions and optimizers

if self.isTrain:

if opt.pool_size > 0 and (len(self.gpu_ids)) > 1:

raise NotImplementedError("Fake Pool Not Implemented for MultiGPU")

self.fake_pool = ImagePool(opt.pool_size)

self.old_lr = opt.lr

# define loss functions

self.loss_filter = self.init_loss_filter(not opt.no_ganFeat_loss, not opt.no_vgg_loss)

self.criterionGAN = networks.GANLoss(use_lsgan=not opt.no_lsgan, tensor=self.Tensor)

self.criterionFeat = torch.nn.L1Loss()

if not opt.no_vgg_loss:

self.criterionVGG = networks.VGGLoss(self.gpu_ids)

# Names so we can breakout loss

self.loss_names = self.loss_filter('G_GAN','G_GAN_Feat','G_VGG','D_real', 'D_fake')

# initialize optimizers

# optimizer G

if opt.niter_fix_global > 0:

import sys

if sys.version_info >= (3,0):

finetune_list = set()

else:

from sets import Set

finetune_list = Set()

params_dict = dict(self.netG.named_parameters())

params = []

for key, value in params_dict.items():

if key.startswith('model' + str(opt.n_local_enhancers)):

params += [value]

finetune_list.add(key.split('.')[0])

print('------------- Only training the local enhancer network (for %d epochs) ------------' % opt.niter_fix_global)

print('The layers that are finetuned are ', sorted(finetune_list))

else:

params = list(self.netG.parameters())

if self.gen_features:

params += list(self.netE.parameters())

self.optimizer_G = torch.optim.Adam(params, lr=opt.lr, betas=(opt.beta1, 0.999))

# optimizer D

params = list(self.netD.parameters())

self.optimizer_D = torch.optim.Adam(params, lr=opt.lr, betas=(opt.beta1, 0.999))

def encode_input(self, label_map, inst_map=None, real_image=None, feat_map=None, infer=False):

if self.opt.label_nc == 0:

input_label = label_map.data.cuda()

else:

# create one-hot vector for label map

size = label_map.size()

oneHot_size = (size[0], self.opt.label_nc, size[2], size[3])

input_label = torch.cuda.FloatTensor(torch.Size(oneHot_size)).zero_()

input_label = input_label.scatter_(1, label_map.data.long().cuda(), 1.0)

if self.opt.data_type == 16:

input_label = input_label.half()

# get edges from instance map

if not self.opt.no_instance:

inst_map = inst_map.data.cuda()

edge_map = self.get_edges(inst_map)

input_label = torch.cat((input_label, edge_map), dim=1)

input_label = Variable(input_label, volatile=infer)

# real images for training

if real_image is not None:

real_image = Variable(real_image.data.cuda())

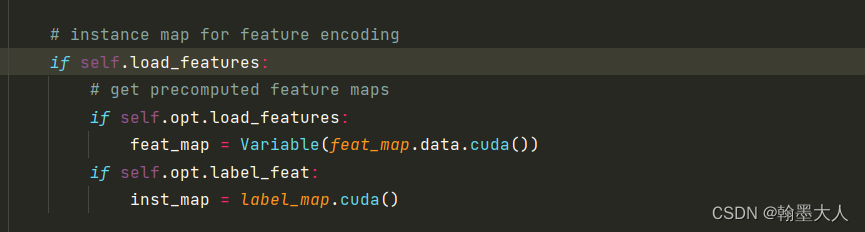

# instance map for feature encoding

if self.use_features:

# get precomputed feature maps

if self.opt.load_features:

feat_map = Variable(feat_map.data.cuda())

if self.opt.label_feat:

inst_map = label_map.cuda()

return input_label, inst_map, real_image, feat_map

def discriminate(self, input_label, test_image, use_pool=False):

input_concat = torch.cat((input_label, test_image.detach()), dim=1)

if use_pool:

fake_query = self.fake_pool.query(input_concat)

return self.netD.forward(fake_query)

else:

return self.netD.forward(input_concat)

def forward(self, label, inst, image, feat, infer=False):

# Encode Inputs

input_label, inst_map, real_image, feat_map = self.encode_input(label, inst, image, feat)

# Fake Generation

if self.use_features:

if not self.opt.load_features:

feat_map = self.netE.forward(real_image, inst_map)

input_concat = torch.cat((input_label, feat_map), dim=1)

else:

input_concat = input_label

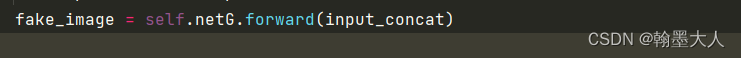

fake_image = self.netG.forward(input_concat)

# Fake Detection and Loss

pred_fake_pool = self.discriminate(input_label, fake_image, use_pool=True)

loss_D_fake = self.criterionGAN(pred_fake_pool, False)

# Real Detection and Loss

pred_real = self.discriminate(input_label, real_image)

loss_D_real = self.criterionGAN(pred_real, True)

# GAN loss (Fake Passability Loss)

pred_fake = self.netD.forward(torch.cat((input_label, fake_image), dim=1))

loss_G_GAN = self.criterionGAN(pred_fake, True)

# GAN feature matching loss

loss_G_GAN_Feat = 0

if not self.opt.no_ganFeat_loss:

feat_weights = 4.0 / (self.opt.n_layers_D + 1)

D_weights = 1.0 / self.opt.num_D

for i in range(self.opt.num_D):

for j in range(len(pred_fake[i])-1):

loss_G_GAN_Feat += D_weights * feat_weights * \

self.criterionFeat(pred_fake[i][j], pred_real[i][j].detach()) * self.opt.lambda_feat

# VGG feature matching loss

loss_G_VGG = 0

if not self.opt.no_vgg_loss:

loss_G_VGG = self.criterionVGG(fake_image, real_image) * self.opt.lambda_feat

# Only return the fake_B image if necessary to save BW

return [ self.loss_filter( loss_G_GAN, loss_G_GAN_Feat, loss_G_VGG, loss_D_real, loss_D_fake ), None if not infer else fake_image ]

def inference(self, label, inst, image=None):

# Encode Inputs

image = Variable(image) if image is not None else None

input_label, inst_map, real_image, _ = self.encode_input(Variable(label), Variable(inst), image, infer=True)

# Fake Generation

if self.use_features:

if self.opt.use_encoded_image:

# encode the real image to get feature map

feat_map = self.netE.forward(real_image, inst_map)

else:

# sample clusters from precomputed features

feat_map = self.sample_features(inst_map)

input_concat = torch.cat((input_label, feat_map), dim=1)

else:

input_concat = input_label

if torch.__version__.startswith('0.4'):

with torch.no_grad():

fake_image = self.netG.forward(input_concat)

else:

fake_image = self.netG.forward(input_concat)

return fake_image

def sample_features(self, inst):

# read precomputed feature clusters

cluster_path = os.path.join(self.opt.checkpoints_dir, self.opt.name, self.opt.cluster_path)

features_clustered = np.load(cluster_path, encoding='latin1').item()

# randomly sample from the feature clusters

inst_np = inst.cpu().numpy().astype(int)

feat_map = self.Tensor(inst.size()[0], self.opt.feat_num, inst.size()[2], inst.size()[3])

for i in np.unique(inst_np):

label = i if i < 1000 else i//1000

if label in features_clustered:

feat = features_clustered[label]

cluster_idx = np.random.randint(0, feat.shape[0])

idx = (inst == int(i)).nonzero()

for k in range(self.opt.feat_num):

feat_map[idx[:,0], idx[:,1] + k, idx[:,2], idx[:,3]] = feat[cluster_idx, k]

if self.opt.data_type==16:

feat_map = feat_map.half()

return feat_map

def encode_features(self, image, inst):

image = Variable(image.cuda(), volatile=True)

feat_num = self.opt.feat_num

h, w = inst.size()[2], inst.size()[3]

block_num = 32

feat_map = self.netE.forward(image, inst.cuda())

inst_np = inst.cpu().numpy().astype(int)

feature = {}

for i in range(self.opt.label_nc):

feature[i] = np.zeros((0, feat_num+1))

for i in np.unique(inst_np):

label = i if i < 1000 else i//1000

idx = (inst == int(i)).nonzero()

num = idx.size()[0]

idx = idx[num//2,:]

val = np.zeros((1, feat_num+1))

for k in range(feat_num):

val[0, k] = feat_map[idx[0], idx[1] + k, idx[2], idx[3]].data[0]

val[0, feat_num] = float(num) / (h * w // block_num)

feature[label] = np.append(feature[label], val, axis=0)

return feature

def get_edges(self, t):

edge = torch.cuda.ByteTensor(t.size()).zero_()

edge[:,:,:,1:] = edge[:,:,:,1:] | (t[:,:,:,1:] != t[:,:,:,:-1])

edge[:,:,:,:-1] = edge[:,:,:,:-1] | (t[:,:,:,1:] != t[:,:,:,:-1])

edge[:,:,1:,:] = edge[:,:,1:,:] | (t[:,:,1:,:] != t[:,:,:-1,:])

edge[:,:,:-1,:] = edge[:,:,:-1,:] | (t[:,:,1:,:] != t[:,:,:-1,:])

if self.opt.data_type==16:

return edge.half()

else:

return edge.float()

def save(self, which_epoch):

self.save_network(self.netG, 'G', which_epoch, self.gpu_ids)

self.save_network(self.netD, 'D', which_epoch, self.gpu_ids)

if self.gen_features:

self.save_network(self.netE, 'E', which_epoch, self.gpu_ids)

def update_fixed_params(self):

# after fixing the global generator for a number of iterations, also start finetuning it

params = list(self.netG.parameters())

if self.gen_features:

params += list(self.netE.parameters())

self.optimizer_G = torch.optim.Adam(params, lr=self.opt.lr, betas=(self.opt.beta1, 0.999))

if self.opt.verbose:

print('------------ Now also finetuning global generator -----------')

def update_learning_rate(self):

lrd = self.opt.lr / self.opt.niter_decay

lr = self.old_lr - lrd

for param_group in self.optimizer_D.param_groups:

param_group['lr'] = lr

for param_group in self.optimizer_G.param_groups:

param_group['lr'] = lr

if self.opt.verbose:

print('update learning rate: %f -> %f' % (self.old_lr, lr))

self.old_lr = lr

class InferenceModel(Pix2PixHDModel):

def forward(self, inp):

label, inst = inp

return self.inference(label, inst)

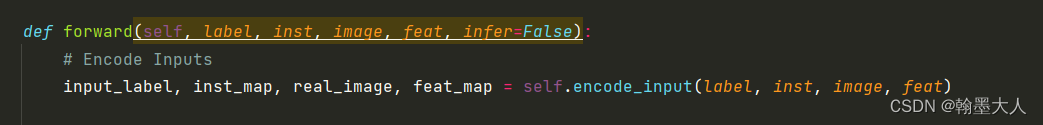

首先看forward函数:将标签,实例,RGB,feat输入到encoder中。

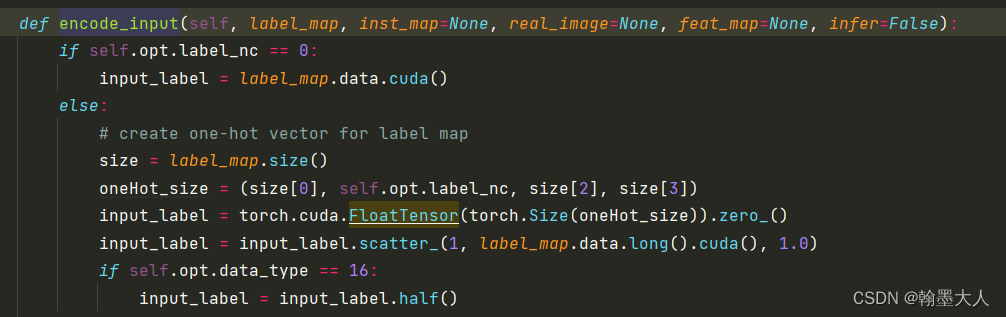

如果标签通道不为0,对标签进行one-hot编码。CItyscapes有35个通道,oneHot_size=(b,35,1024,512)。初始化一个oneHot_size大小全为0的tensor。然后通过scatter_进行编码。

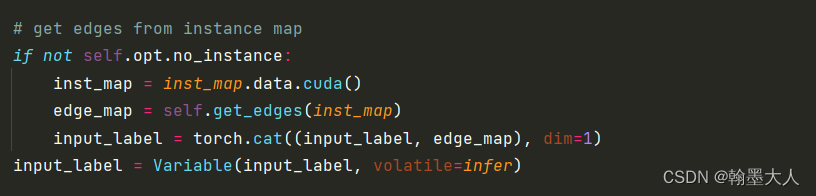

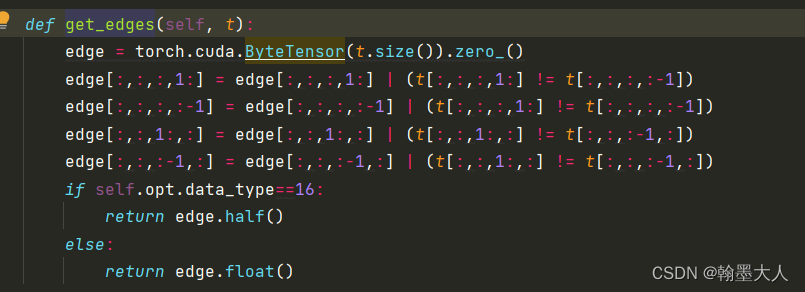

接着对实例图提边。输入的是示例图得到的是边界图。将边界图和输入label拼接在一起作为输入label。

默认为False。

将label输入到生成器中。

接着看netG。

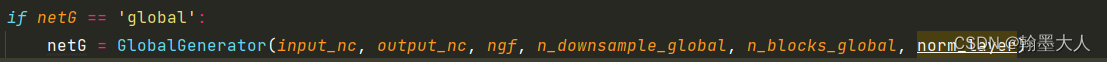

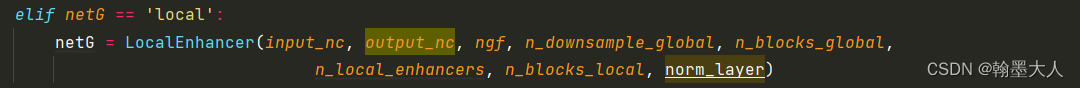

接着看define_G:很多参数,首先是输入通道数,接着是输出通道数=3,ngf=64,netG=gloabl。

def define_G(input_nc, output_nc, ngf, netG, n_downsample_global=3, n_blocks_global=9, n_local_enhancers=1, n_blocks_local=3, norm='instance', gpu_ids=[]):

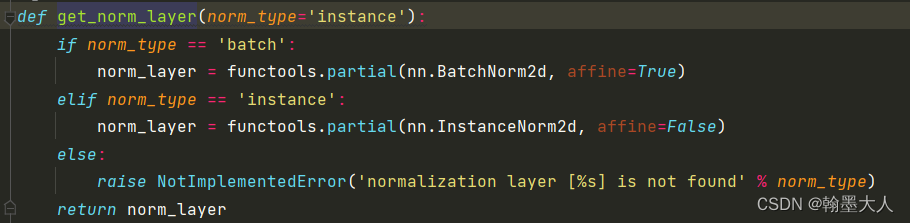

norm_layer = get_norm_layer(norm_type=norm)

if netG == 'global':

netG = GlobalGenerator(input_nc, output_nc, ngf, n_downsample_global, n_blocks_global, norm_layer)

elif netG == 'local':

netG = LocalEnhancer(input_nc, output_nc, ngf, n_downsample_global, n_blocks_global,

n_local_enhancers, n_blocks_local, norm_layer)

elif netG == 'encoder':

netG = Encoder(input_nc, output_nc, ngf, n_downsample_global, norm_layer)

else:

raise('generator not implemented!')

print(netG)

if len(gpu_ids) > 0:

assert(torch.cuda.is_available())

netG.cuda(gpu_ids[0])

netG.apply(weights_init)

return netG

看第一个参数:如果label通道不为0,input_nc=label_nc,否则input_nc=0.如果使用instance,netG的输入通道数再加1.如果使用feature encoder network的话,则输入通道再加3.

根据norm_type确定归一化类型:

接着输入到global生成器中:

class GlobalGenerator(nn.Module):

def __init__(self, input_nc, output_nc, ngf=64, n_downsampling=3, n_blocks=9, norm_layer=nn.BatchNorm2d,

padding_type='reflect'):

assert(n_blocks >= 0)

super(GlobalGenerator, self).__init__()

activation = nn.ReLU(True)

model = [nn.ReflectionPad2d(3), nn.Conv2d(input_nc, ngf, kernel_size=7, padding=0), norm_layer(ngf), activation]

### downsample

for i in range(n_downsampling):

mult = 2**i

model += [nn.Conv2d(ngf * mult, ngf * mult * 2, kernel_size=3, stride=2, padding=1),

norm_layer(ngf * mult * 2), activation]

### resnet blocks

mult = 2**n_downsampling

for i in range(n_blocks):

model += [ResnetBlock(ngf * mult, padding_type=padding_type, activation=activation, norm_layer=norm_layer)]

### upsample

for i in range(n_downsampling):

mult = 2**(n_downsampling - i)

model += [nn.ConvTranspose2d(ngf * mult, int(ngf * mult / 2), kernel_size=3, stride=2, padding=1, output_padding=1),

norm_layer(int(ngf * mult / 2)), activation]

model += [nn.ReflectionPad2d(3), nn.Conv2d(ngf, output_nc, kernel_size=7, padding=0), nn.Tanh()]

self.model = nn.Sequential(*model)

def forward(self, input):

return self.model(input)

首先对原图pad=3,接着输入经过一个7x7卷积,将输入通道变为64。图片大小不变,通道变为64。

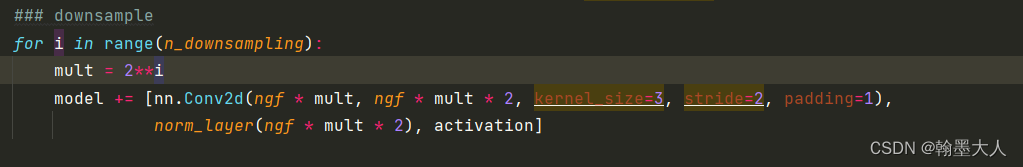

接着进行连续的三次下采样:

step1:i=0,mult=1,nn.conv2d(64,642,k=3,s=2,p=1),norm,act。(1,64,512,1024)—>(1,128,256,512)

step2:i=1,mul=2,nn.conv2d(642,644,k=3,s=2,p=1),norm,act。(1,128,256,512)—>(1,256,128,256)

step3:i=2,mul=4,nn.conv2d(644,64*8,k=3,s=2,p=1),norm,act。(1,256,128,256)—>(1,512,64,128)

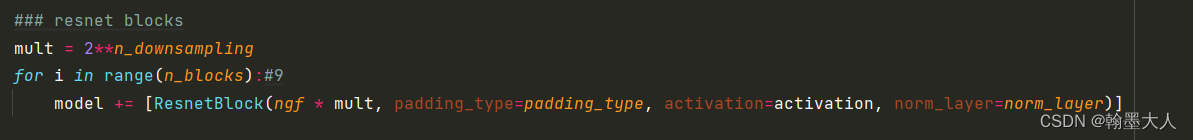

mul=8,有连续9个block。每一个block调用一次Resnetblock。

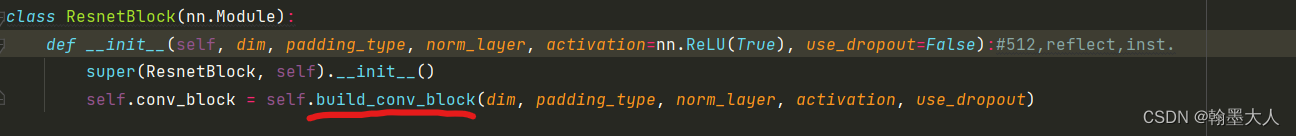

class ResnetBlock(nn.Module):

def __init__(self, dim, padding_type, norm_layer, activation=nn.ReLU(True), use_dropout=False):

super(ResnetBlock, self).__init__()

self.conv_block = self.build_conv_block(dim, padding_type, norm_layer, activation, use_dropout)

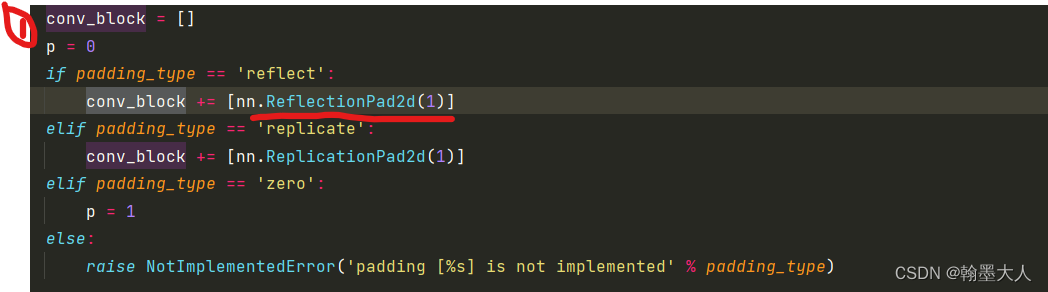

def build_conv_block(self, dim, padding_type, norm_layer, activation, use_dropout):

conv_block = []

p = 0

if padding_type == 'reflect':

conv_block += [nn.ReflectionPad2d(1)]

elif padding_type == 'replicate':

conv_block += [nn.ReplicationPad2d(1)]

elif padding_type == 'zero':

p = 1

else:

raise NotImplementedError('padding [%s] is not implemented' % padding_type)

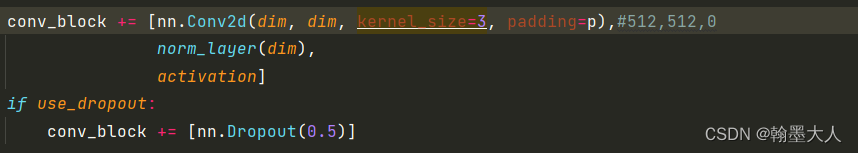

conv_block += [nn.Conv2d(dim, dim, kernel_size=3, padding=p),

norm_layer(dim),

activation]

if use_dropout:

conv_block += [nn.Dropout(0.5)]

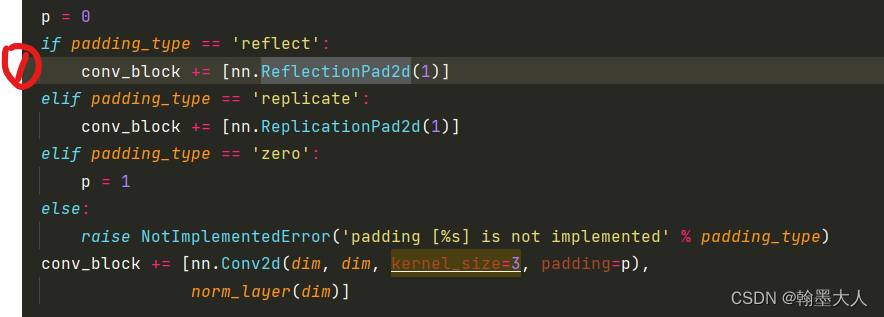

p = 0

if padding_type == 'reflect':

conv_block += [nn.ReflectionPad2d(1)]

elif padding_type == 'replicate':

conv_block += [nn.ReplicationPad2d(1)]

elif padding_type == 'zero':

p = 1

else:

raise NotImplementedError('padding [%s] is not implemented' % padding_type)

conv_block += [nn.Conv2d(dim, dim, kernel_size=3, padding=p),

norm_layer(dim)]

return nn.Sequential(*conv_block)

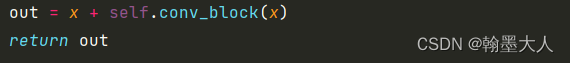

def forward(self, x):

out = x + self.conv_block(x)

return out

在内部调用:

首先在convblock里面添加padding。

接着在里面添加卷积,k=3,p=0,因为事前已经添加了pad,所以这里不指定pad,大小也不发生变换。如过使用dropout,添加dropout。

接着上述的操作再执行一遍:大小还是不变。

最后再来一个indentity相加。

小结:Convblock一个里面有两个卷积,不改变大小,不改变通道,有9个Convblock,那么就有18个卷积,不改变通道和大小。

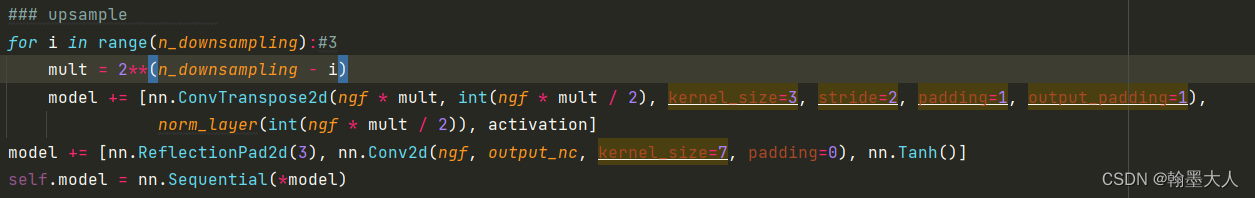

接着进行上采样:

step1:输入通道为512,输出256,大小上采样两倍。norm,act。

step2:输入通道为256,输出128,大小上采样两倍。norm,act。

step3:输入通道为128,输出64,大小上采样两倍。norm,act。

最后再加一个卷积,输出通道为3,大小不变。

小结:GlobalGenerator:首先下采样三次,再经过一个残差卷积由18个卷积组成,最后上采样到原图大小,输出通道为3.因为输入是经过one-hot编码后的标签,输出是image通道为3.

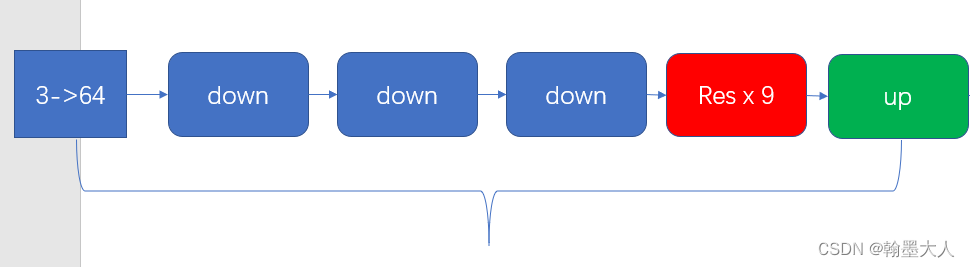

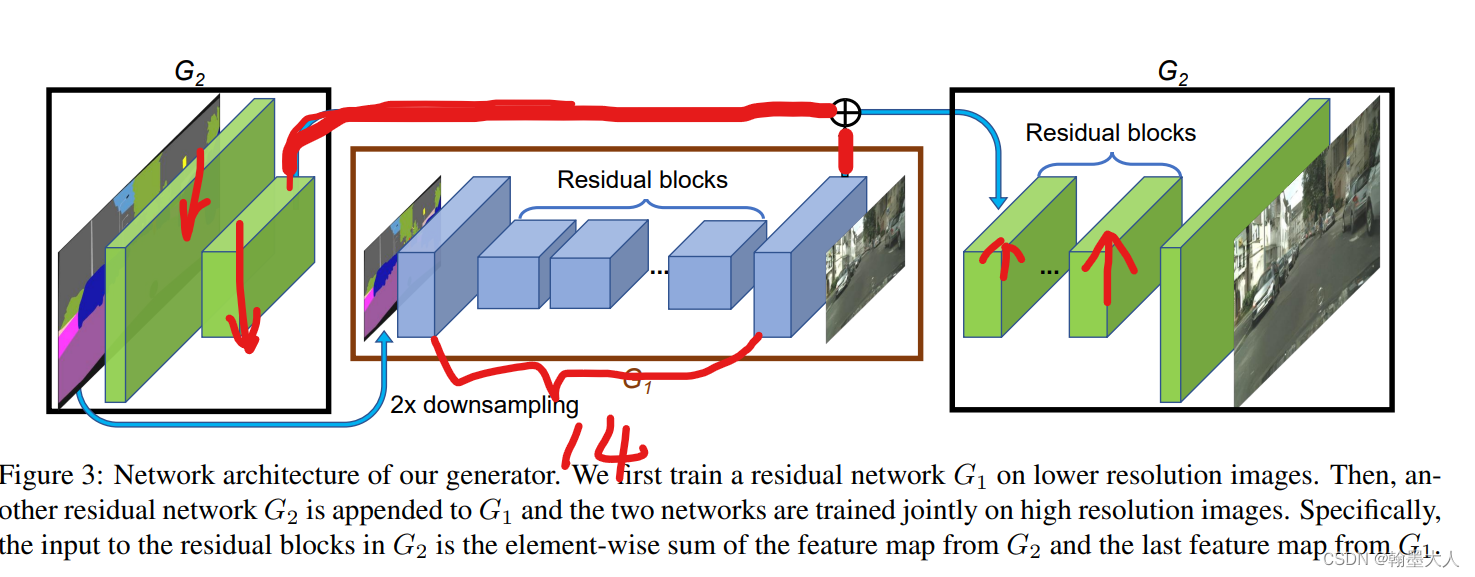

如果生成器是LocalEnhancer:

class LocalEnhancer(nn.Module):

def __init__(self, input_nc, output_nc, ngf=32, n_downsample_global=3, n_blocks_global=9,

n_local_enhancers=1, n_blocks_local=3, norm_layer=nn.BatchNorm2d, padding_type='reflect'):

super(LocalEnhancer, self).__init__()

self.n_local_enhancers = n_local_enhancers

###### global generator model #####

ngf_global = ngf * (2**n_local_enhancers)

model_global = GlobalGenerator(input_nc, output_nc, ngf_global, n_downsample_global, n_blocks_global, norm_layer).model

model_global = [model_global[i] for i in range(len(model_global)-3)] # get rid of final convolution layers

self.model = nn.Sequential(*model_global)

###### local enhancer layers #####

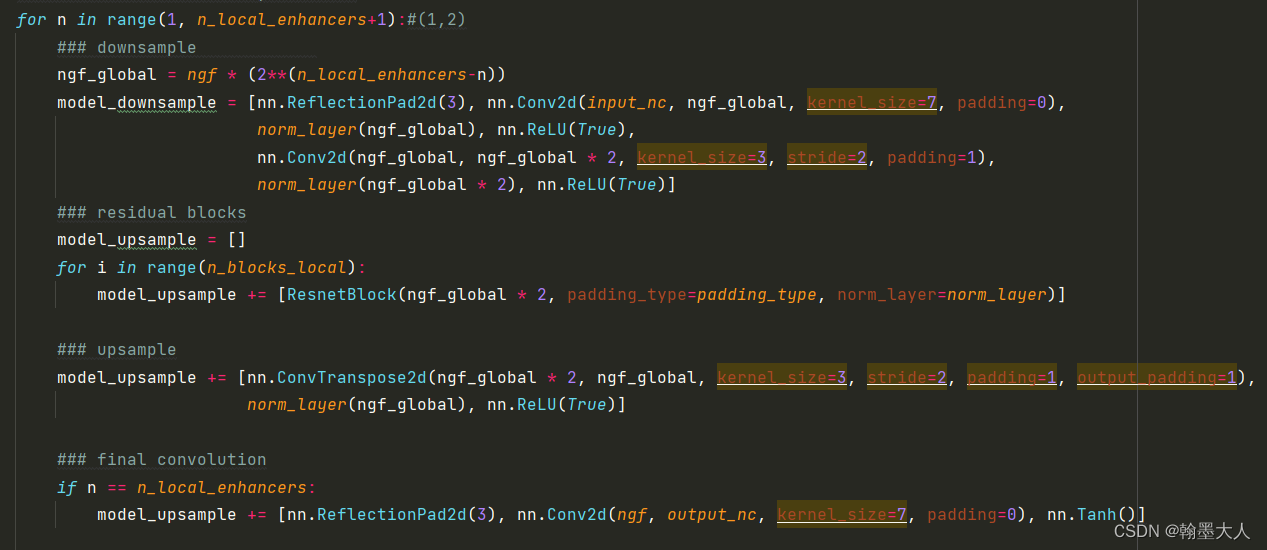

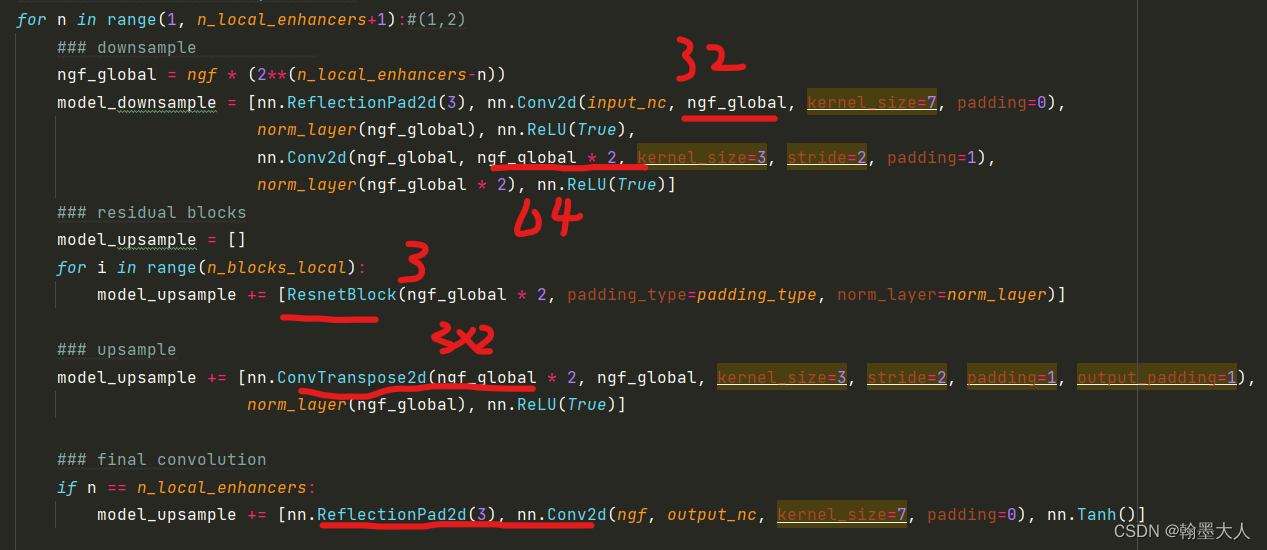

for n in range(1, n_local_enhancers+1):

### downsample

ngf_global = ngf * (2**(n_local_enhancers-n))

model_downsample = [nn.ReflectionPad2d(3), nn.Conv2d(input_nc, ngf_global, kernel_size=7, padding=0),

norm_layer(ngf_global), nn.ReLU(True),

nn.Conv2d(ngf_global, ngf_global * 2, kernel_size=3, stride=2, padding=1),

norm_layer(ngf_global * 2), nn.ReLU(True)]

### residual blocks

model_upsample = []

for i in range(n_blocks_local):

model_upsample += [ResnetBlock(ngf_global * 2, padding_type=padding_type, norm_layer=norm_layer)]

### upsample

model_upsample += [nn.ConvTranspose2d(ngf_global * 2, ngf_global, kernel_size=3, stride=2, padding=1, output_padding=1),

norm_layer(ngf_global), nn.ReLU(True)]

### final convolution

if n == n_local_enhancers:

model_upsample += [nn.ReflectionPad2d(3), nn.Conv2d(ngf, output_nc, kernel_size=7, padding=0), nn.Tanh()]

setattr(self, 'model'+str(n)+'_1', nn.Sequential(*model_downsample))

setattr(self, 'model'+str(n)+'_2', nn.Sequential(*model_upsample))

self.downsample = nn.AvgPool2d(3, stride=2, padding=[1, 1], count_include_pad=False)

def forward(self, input):

### create input pyramid

input_downsampled = [input]

for i in range(self.n_local_enhancers):#1

input_downsampled.append(self.downsample(input_downsampled[-1]))

### output at coarest level

output_prev = self.model(input_downsampled[-1])

### build up one layer at a time

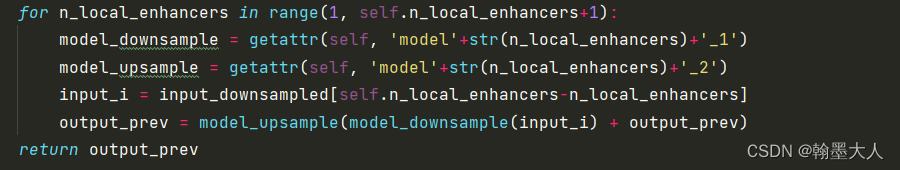

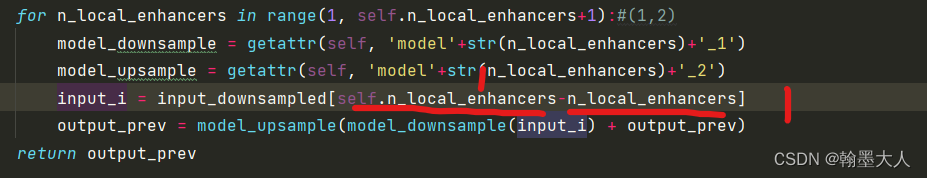

for n_local_enhancers in range(1, self.n_local_enhancers+1):

model_downsample = getattr(self, 'model'+str(n_local_enhancers)+'_1')

model_upsample = getattr(self, 'model'+str(n_local_enhancers)+'_2')

input_i = input_downsampled[self.n_local_enhancers-n_local_enhancers]

output_prev = model_upsample(model_downsample(input_i) + output_prev)

return output_prev

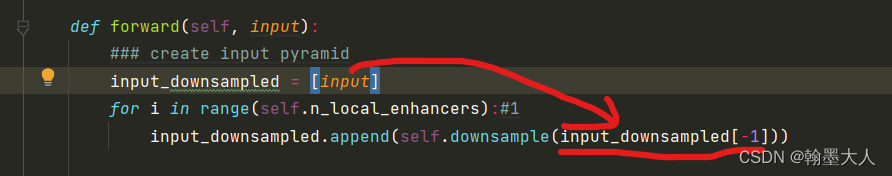

将输入首先进行平均池化:大小变为一半,通道不变。

这样input_downsampled列表中就有两个值了,分辨是输入和下采样一半的值。

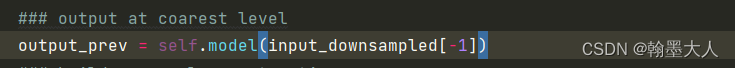

接着将下采样一半的值输入到model中:还是调用的GlobalGenerator。

model_global包含了17个卷积层:则len(model)=19,i从0到[(17-3)-1]。

则model_global包含前14层(0到13)。

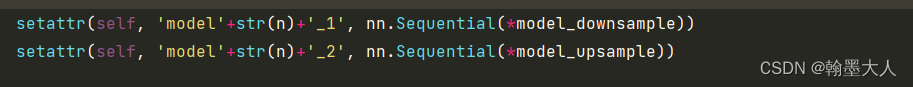

接着:假设local_enhancer实例化为A,则getattr获得A的model1_1属性对应的值。getattr函数

我们去上面找:setattr是A设置model1_1属性,对应的值为nn.Sequential(*model_downsample),然后通过getattr获得属性值即model_downsample = nn.Sequential(*model_downsample)。

接着看nn.Sequential(*model_downsample)组成:

这个for循环只循环一次:model_down由两个卷积组成,输出通道变为64,下采样4倍,model_up由三个ResNetBlock和一个转置卷积和一个输出通道为3的卷积组成。

最后给A赋予属性值:

最后给A赋予属性值:

回到forward中:input_i = input_downsampled列表中第0个值,即输入。将输入下采样两倍再和经过GlobalGenerator前14层的值住像素相加。在上采样。

对应于原文:

回到pix2pixHD_model:self.netG就是指定的网络,然后调用forward函数。最后生成假的图片。