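Table of Contents

- Preface
- Preparation
  - Prepare 4 virtual machines
  - Notes
  - Download the binary packages
- Initialization
  - CentOS7
    - Configure yum repositories
    - Set up passwordless SSH, change hostnames, disable the firewall and SELinux, turn off swap (to simplify later steps)
    - Download and batch-install packages
    - Configure time synchronization
    - Configure open file descriptor limits
    - Add IPVS and kernel modules
  - Ubuntu
    - Configure apt sources
    - Set up passwordless SSH, change hostnames, disable the firewall, turn off swap (to simplify later steps)
    - Download and batch-install packages
    - Configure time synchronization
    - Configure open file descriptor limits
    - Add IPVS and kernel modules
  - Compile nginx as a load balancer
- Install the container runtime
  - Install docker
  - Install cri-docker
- Install etcd
  - Prepare the certificates etcd needs
  - Distribute certificates, binaries and service files
- Install the k8s components
  - apiserver
    - Prepare the apiserver certificate
    - Prepare the metrics-server certificate
    - Prepare the service file
    - Distribute the apiserver files and start it
  - kubectl
    - Prepare the admin certificate
    - Create the kubeconfig
    - Create a clusterrolebinding that allows exec into containers
  - controller-manager
    - Prepare the controller-manager certificate
    - Create the kubeconfig
    - Prepare the service file
    - Distribute the controller-manager files and start it
  - scheduler
    - Prepare the scheduler certificate
    - Create the kubeconfig
    - Prepare the service file
    - Distribute the scheduler files and start it
  - Check that the services required by the control-plane components are healthy
  - kubelet
    - Prepare the kubelet certificate
    - Create the kubeconfig
    - Prepare the kubelet configuration file
    - Prepare the service file
    - Distribute the kubelet files and start it
  - kube-proxy
    - Prepare the kube-proxy certificate
    - Create the kubeconfig
    - Create the kube-proxy configuration file
    - Prepare the service file
    - Distribute the kube-proxy files and start it
    - Check that it is running
- Install the network plugin
  - CNI plugin
  - coredns
- Verify that the cluster works
- Afterword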

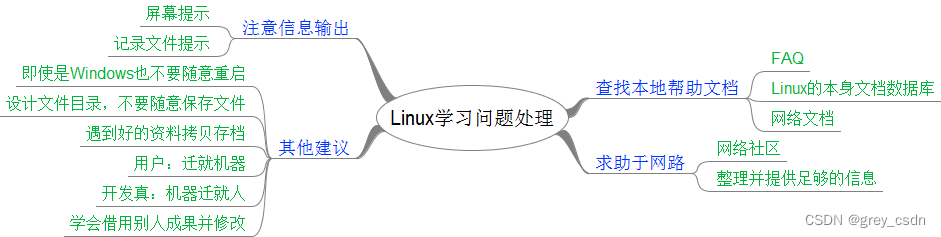

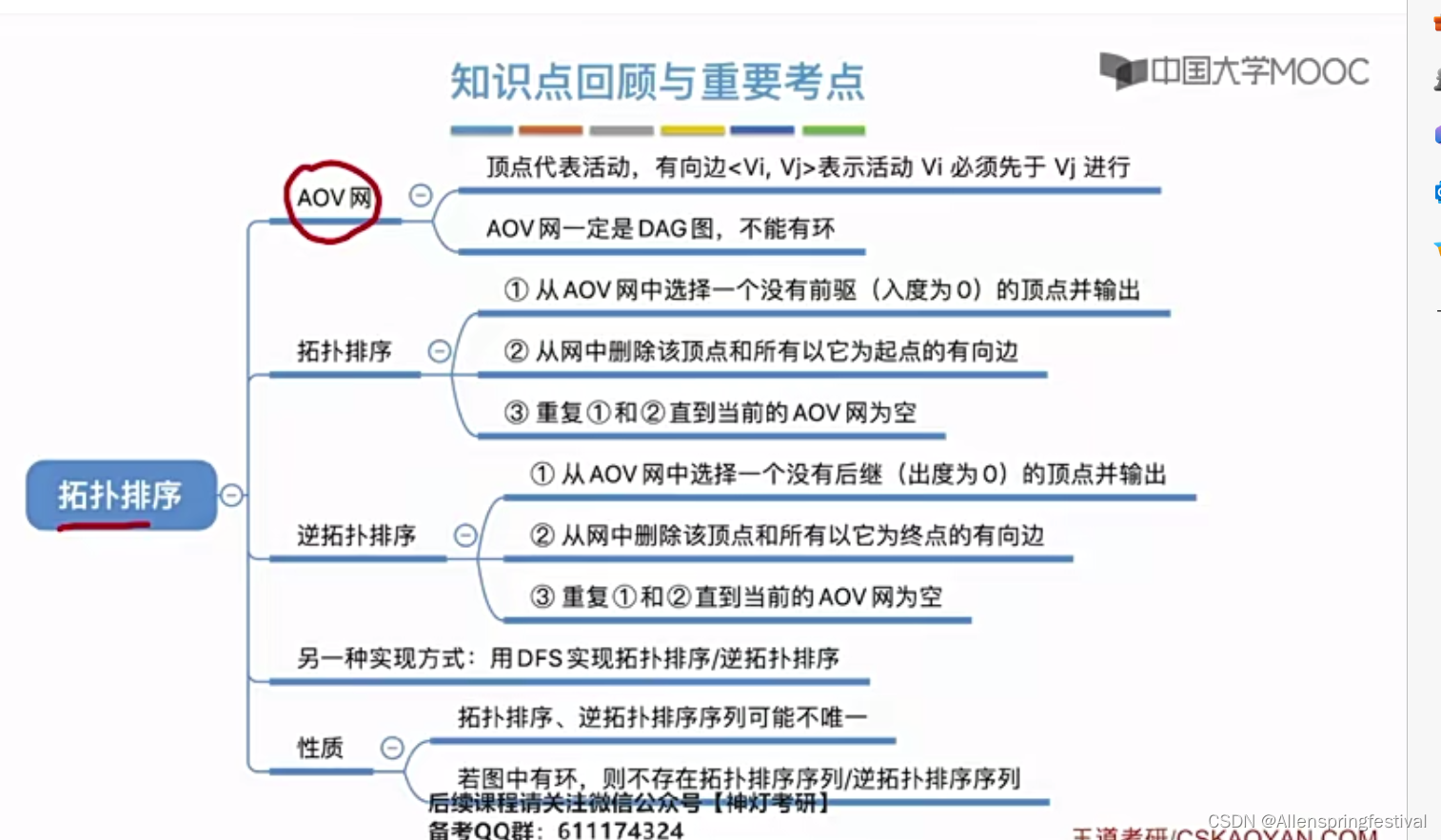

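Preface

- From v1.24.0 to v1.26.0, Docker can still be used, but cri-dockerd must be installed as a shim. This is because dockershim was removed from the kubelet in v1.24

- My work involves both Ubuntu and CentOS, so this post records the K8S installation on both systems (verified on CentOS 7.9 and Ubuntu 20.04)

- Certificates are created with the cfssl tool

- A highly available cluster of 3 masters and 1 worker is deployed from binaries

- etcd is deployed from binaries and co-located on the 3 master nodes

- Docker is used again this time. Many containerd commands still feel unfamiliar to me, and containerd cannot build a Dockerfile directly

- This environment is a private cloud where keepalived is not available by default. There is therefore no VIP in this deployment, and nginx handles load balancing instead (you can use keepalived+haproxy to provide a VIP if you prefer)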

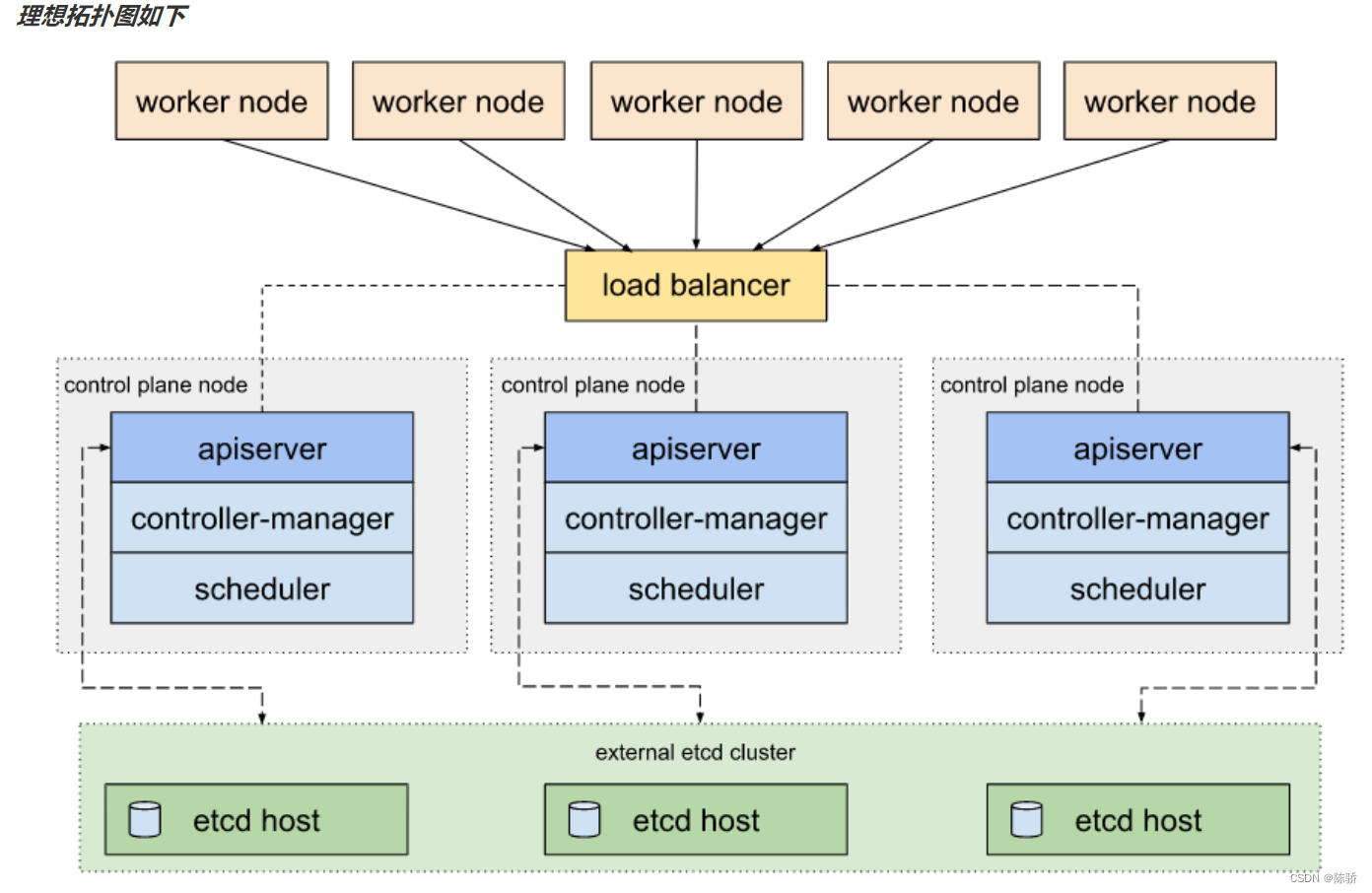

理想拓扑图如下

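Preparation

Prepare 4 virtual machines

It is recommended that all VMs use the same operating system and have correct IP addresses configured

# CentOS 7 NIC config file: /etc/sysconfig/network-scripts/ifcfg-eth0 (or ifcfg-ens33, etc.); after editing it with vim, restart the network service

# Ubuntu 20.04 NIC config file: /etc/netplan/50-cloud-init.yaml (usually some xxx.yaml file); apply the change with netplan apply

# CentOS 8 has no network service. CentOS 7/8 and Ubuntu 20.04/22.04 can all manage networking with the NetworkManager service, either through the nmtui text UI or directly with the nmcli command line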

| IP address | Role |
|---|---|
| 10.10.21.223 | master/worker |
| 10.10.21.224 | master/worker |
| 10.10.21.225 | master/worker |
| 10.10.21.226 | worker |

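Notes

Unless stated otherwise, all of the following steps are run on the first node

To use exactly the officially recommended version of each component, first download the matching kubeadm and list the image versions with the command below. Then download those versions. Here I simply used newer versions for many of them

# For example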

[root@node1 ~]# kubeadm config images list

W0523 17:58:43.225920 28717 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://storage.googleapis.com/kubernetes-release/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0523 17:58:43.225985 28717 version.go:105] falling back to the local client version: v1.26.5

registry.k8s.io/kube-apiserver:v1.26.5

registry.k8s.io/kube-controller-manager:v1.26.5

registry.k8s.io/kube-scheduler:v1.26.5

registry.k8s.io/kube-proxy:v1.26.5

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

registry.k8s.io/coredns/coredns:v1.9.3

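Download the binary packages

The kubernetes packages can be found in CHANGELOG-1.26.md (e.g. the 1.26.5 link)

The etcd packages can be downloaded directly from GitHub: open the project page and go to Releases

The docker packages can be downloaded from the official Docker site

The cri-docker packages can be downloaded from GitHub

The cfssl tools can be downloaded from GitHub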

mkdir /k8s/{service,ssl,pkg,conf} -p

# Create directories for later files: service holds systemd unit files, ssl holds certificates, pkg holds installation packages, conf holds configuration files

cd /k8s/pkg

wget https://dl.k8s.io/v1.26.5/kubernetes-server-linux-amd64.tar.gz

# k8s server package

wget https://github.com/etcd-io/etcd/releases/download/v3.5.9/etcd-v3.5.9-linux-amd64.tar.gz

# etcd package

wget https://download.docker.com/linux/static/stable/x86_64/docker-23.0.6.tgz

# docker package

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.2/cri-dockerd-0.3.2.amd64.tgz

# cri-docker package

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.3/cfssl_1.6.3_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.3/cfssljson_1.6.3_linux_amd64

# cfssl binaries

Initialization

Most steps only need to be run on master node 1

First record the planned settings in a file to avoid mistakes later

cat <<EOF > /k8s/conf/k8s_env.sh

# k8s node subnet, used to allow chronyd time sync

NODEIPS=10.10.21.0/24

# All k8s cluster nodes. Names can be customized but must match the names used later; for more than 4 nodes, just add them here

HOSTS=(node1 node2 node3 node4)

# k8s master nodes

MASTERS=(node1 node2 node3)

# k8s worker nodes

WORKS=(node1 node2 node3 node4)

# IP address of each node

node1=10.10.21.223

node2=10.10.21.224

node3=10.10.21.225

node4=10.10.21.226

# VIP address + port; can be a keepalived VIP or a VIP allocated by the cloud platform

VIP_server=127.0.0.1:8443

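# Root password of the nodes, used by the scripts to set up passwordless SSH; exported because sshpass -e reads it from the environment

export SSHPASS=666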

# kubectl auto-completion: after installation, add this line to the shell profile of every node that uses kubectl

#source <(kubectl completion bash)

# Service CIDR: not routable before deployment; reachable inside the cluster via IP:Port afterwards

SERVICE_CIDR="192.168.0.0/16"

# kubernetes service address: not routable before deployment, reachable inside the cluster afterwards; must lie inside the Service CIDR, usually its first address

CLUSTER_KUBERNETES_IP="192.168.0.1"

# clusterDNS address: not routable before deployment, reachable inside the cluster via IP:Port afterwards; must lie inside the Service CIDR, usually its 10th address

CLUSTER_KUBERNETES_SVC_IP="192.168.0.10"

# Pod CIDR (Cluster CIDR): not routable before deployment, routable afterwards (provided by the network plugin)

CLUSTER_CIDR="172.16.0.0/16"

# Service port range (NodePort Range)

NODE_PORT_RANGE="30000-40000"

# etcd client endpoint list (the 3 master nodes are reused by default)

ETCD_ENDPOINTS="https://\$node1:2379,https://\$node2:2379,https://\$node3:2379"

# etcd initial cluster member list with peer URLs, used for --initial-cluster (the 3 master nodes are reused by default)

ETCD_CLUSTERS="node1=https://\$node1:2380,node2=https://\$node2:2380,node3=https://\$node3:2380"

# k8s certificate directory

K8S_SSL_Path=/etc/kubernetes/pki

EOF

\cp /k8s/conf/k8s_env.sh /etc/profile.d/

source /etc/profile

# After this step, you no longer need to source the file again and again
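SERVICE_CIDR, CLUSTER_KUBERNETES_IP and CLUSTER_KUBERNETES_SVC_IP must agree with each other, so it can help to check them before continuing. The following is a minimal bash sketch and not part of the original procedure. The helpers ip_to_int, ip_in_cidr and nth_ip are hypothetical names, and only IPv4 is handled.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (return 0) if IPv4 address $1 lies inside CIDR $2, e.g. 192.168.0.0/16.
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  if (( prefix == 0 )); then
    mask=0                                      # /0 matches every address
  else
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  fi
  (( (ip & mask) == (net & mask) ))
}

# Print the address at offset $2 from the network address of CIDR $1
# (offset 1 is the first host address, offset 10 the tenth).
nth_ip() {
  local int
  int=$(( $(ip_to_int "${1%/*}") + $2 ))
  echo "$(( (int >> 24) & 255 )).$(( (int >> 16) & 255 )).$(( (int >> 8) & 255 )).$(( int & 255 ))"
}

# Hypothetical usage after "source /k8s/conf/k8s_env.sh":
#   ip_in_cidr "$CLUSTER_KUBERNETES_IP" "$SERVICE_CIDR" && echo ok
#   nth_ip "$SERVICE_CIDR" 10
```

With SERVICE_CIDR=192.168.0.0/16, nth_ip "$SERVICE_CIDR" 10 gives the clusterDNS address 192.168.0.10. The same ip_in_cidr check can confirm that the Pod CIDR's network address does not fall inside the Service CIDR.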

CentOS7

Configure yum repositories

- Configure the base yum repository (used later to install base packages)

mkdir /opt/yum_bak && mv /etc/yum.repos.d/* /opt/yum_bak/ # back up the existing repo files

curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

# configure the base repository

yum -y install epel-release

sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo

sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo

sed -i "s@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g" /etc/yum.repos.d/epel.repo

# configure the EPEL repository

- Add the ELRepo repository (used later to upgrade the kernel)

yum install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm -y

sed -i "s@mirrorlist@#mirrorlist@g" /etc/yum.repos.d/elrepo.repo

sed -i "s@elrepo.org/linux@mirrors.tuna.tsinghua.edu.cn/elrepo@g" /etc/yum.repos.d/elrepo.repo

Add the docker repository (used to install docker with yum; can be skipped when docker is installed from binaries)

yum install -y yum-utils device-mapper-persistent-data lvm2

curl -o /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

Add the k8s repository (used to install kubeadm, kubelet and kubectl with yum; can be skipped when k8s is installed from binaries)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Note: if yum cannot resolve the variable $basearch, replace $basearch in the repo file above with your system architecture (aarch64/armhfp/ppc64le/s390x/x86_64).

- Build the yum cache

yum clean all && yum makecache fast

Set up passwordless SSH, change hostnames, disable the firewall and SELinux, turn off swap (to simplify later steps)

- Edit the hosts file

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do echo "$(eval echo "$"$host) $host" >> /etc/hosts;done

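# $VIP is not defined in k8s_env.sh, and /etc/hosts entries use the "IP hostname" order, so no VIP line is added here. With the local nginx proxy (127.0.0.1:8443) none is needed. If you use a real VIP, add it as: echo "<VIP IP> <VIP hostname>" >> /etc/hosts

# Edit /k8s/conf/k8s_env.sh before running these commands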
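The hosts loop above appends entries every time it runs, so running it twice duplicates lines. Below is a minimal sketch of an idempotent variant. It assumes bash and the HOSTS and nodeN variables from k8s_env.sh. add_hosts_entries is a hypothetical helper. ${!host} is bash's indirect expansion and is equivalent here to $(eval echo "$"$host).

```shell
# Append "IP hostname" lines for the given node names to a hosts file,
# skipping names that already end a line, so the step can be re-run safely.
# The IP of each node is read from the variable of the same name (node1=...).
add_hosts_entries() {
  local hosts_file=$1 host ip
  shift
  for host in "$@"; do
    ip=${!host}                      # indirect expansion: value of $node1, $node2, ...
    if [[ -z $ip ]]; then
      echo "no IP defined for $host" >&2
      return 1
    fi
    # Only add the entry if the hostname is not already present at the end of a line.
    if ! grep -qE "[[:space:]]${host}\$" "$hosts_file"; then
      echo "$ip $host" >> "$hosts_file"
    fi
  done
}

# Hypothetical usage after sourcing k8s_env.sh:
#   add_hosts_entries /etc/hosts "${HOSTS[@]}"
```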

- Change the hostnames, disable the firewall and SELinux, and turn off swap

source /k8s/conf/k8s_env.sh

yum -y install sshpass

ssh-keygen -t rsa -b 2048 -P "" -f /root/.ssh/id_rsa -q

for host in ${HOSTS[@]};do

#sshpass -p 1 ssh-copy-id -o StrictHostKeyChecking=no $host

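# If the password is not defined in k8s_env.sh, uncomment the line above (replace 1 with the root password) and comment out the line below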

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no $host

ssh $host "hostnamectl set-hostname $host"

ssh $host "systemctl disable --now firewalld"

ssh $host "setenforce 0"

ssh $host "sed -ri '/^SELINUX=/cSELINUX=disabled' /etc/selinux/config"

ssh $host "sed -i 's@.*swap.*@#&@g' /etc/fstab"

ssh $host "swapoff -a"

scp /etc/hosts $host:/etc/hosts

done

- The commands above change each server's hostname. Log out and reconnect so that the new hostname takes effect in your session
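sshpass -e takes the password from the SSHPASS environment variable. A plain SSHPASS=666 in a sourced file only creates a shell variable, so it has no effect unless the variable is exported. The small bash sketch below shows what a child process sees in each case. show_child_view is a hypothetical helper.

```shell
# Print the value of SSHPASS as seen by a child process (such as sshpass).
# Only exported variables are passed to child processes.
show_child_view() {
  bash -c 'echo "${SSHPASS:-<unset>}"'
}

# Hypothetical usage:
#   SSHPASS=666;     show_child_view   # prints <unset>: plain shell variable
#   export SSHPASS;  show_child_view   # prints 666: now in the environment
#   sshpass -e ssh-copy-id -o StrictHostKeyChecking=no node2
```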

Download and batch-install packages

- Download the packages into /k8s/rpm_dir so that they can be installed together later

mkdir /k8s/rpm_dir

curl http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.2-1.el8.x86_64.rpm -o /k8s/rpm_dir/libseccomp.rpm

# The libseccomp shipped with el7 is too old, so download the el8 build instead; skip this step on CentOS 8

yumdownloader --resolve --destdir /k8s/rpm_dir wget psmisc vim net-tools nfs-utils telnet yum-utils device-mapper-persistent-data lvm2 git tar curl ipvsadm ipset sysstat conntrack chrony

# Common base packages (yumdownloader is provided by yum-utils; install yum-utils first if it is missing)

yumdownloader --resolve --destdir /k8s/rpm_dir kernel-ml --enablerepo=elrepo-kernel

# Mainline (kernel-ml) kernel packages

- Install the packages above on all nodes

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]}

do

scp /etc/yum.repos.d/*.repo $host:/etc/yum.repos.d/

scp -r /k8s/rpm_dir $host:/tmp/

ssh $host "yum -y remove libseccomp"

ssh $host "yum -y localinstall /tmp/rpm_dir/*"

ssh $host "rm -rf /tmp/rpm_dir/"

#ssh $host "echo 'export LC_ALL=en_US.UTF-8' >> ~/.bashrc"

# If you work with Chinese text, you can uncomment the line above (it sets a UTF-8 locale)

done

- Upgrade the kernel (the nodes will reboot)

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "rpm -qa|grep kernel"

#ssh $host " awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg"

echo $host

echo ""

ssh $host "grub2-set-default 0"

done

for host in ${HOSTS[@]};do

if [[ $host == $(hostname) ]];then

continue

fi

ssh $host reboot

done

init 6

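- After this step, wait about one to three minutes before reconnecting to the servers

# Checking the kernel version now shows that the upgrade is complete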

uname -r

6.3.3-1.el7.elrepo.x86_64

Configure time synchronization

Plan: node1 syncs with the Alibaba Cloud NTP server, and the other nodes sync with node1

source /k8s/conf/k8s_env.sh

cat > /etc/chrony.conf <<EOF

server ntp.aliyun.com iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

allow $NODEIPS

local stratum 10

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

EOF

cat > /k8s/conf/chrony.conf.client <<EOF

server $node1 iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

local stratum 10

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

EOF

Distribute the chrony configuration files

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime"

if [[ $host == $(hostname) ]];then

ssh $host "systemctl restart chronyd"

continue

fi

scp /k8s/conf/chrony.conf.client $host:/etc/chrony.conf

ssh $host " systemctl restart chronyd"

done

Check whether chrony is configured correctly

for host in ${HOSTS[@]};do

ssh $host "timedatectl"

ssh $host "chronyc sources -v"

sleep 1

done
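Reading chronyc sources -v by eye works for a few nodes. For a scripted check, look for the source chronyd has selected: its line has * in the second column, for example "^*" for a server. Below is a minimal sketch. chrony_synced is a hypothetical helper that reads chronyc sources output on stdin.

```shell
# Succeed if `chronyc sources` output on stdin contains a selected source:
# first column ^ (server), = (peer) or # (refclock), second column * (selected).
chrony_synced() {
  grep -qE '^[=#^]\*'
}

# Hypothetical usage:
#   for host in ${HOSTS[@]}; do
#     if ssh $host "chronyc sources" | chrony_synced; then
#       echo "$host synced"
#     else
#       echo "$host NOT synced"
#     fi
#   done
```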

Configure open file descriptor limits

cat <<EOF > /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

scp /etc/security/limits.conf $host:/etc/security/limits.conf

done
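A soft limit larger than the hard limit cannot take effect, because setrlimit requires soft ≤ hard. A misspelled type such as seft is also not a valid limit type. Below is a minimal awk-based sketch that catches both before the file is distributed. check_limits is a hypothetical helper, and it only looks at lines of the form "domain type item value".

```shell
# Report limits.conf entries with an unknown type, or whose soft value exceeds
# the hard value for the same domain and item. "unlimited"/"infinity" count as
# larger than any number (stored as -1). Returns non-zero if a problem is found.
check_limits() {
  awk '
    $1 ~ /^#/ || NF < 4 { next }
    {
      key = $1 " " $3
      v = ($4 == "unlimited" || $4 == "infinity") ? -1 : $4 + 0
      if ($2 == "soft" || $2 == "-") soft[key] = v
      if ($2 == "hard" || $2 == "-") hard[key] = v
      if ($2 != "soft" && $2 != "hard" && $2 != "-") {
        print "unknown type \"" $2 "\": " $0
        bad = 1
      }
    }
    END {
      for (k in soft)
        if ((k in hard) && hard[k] != -1 && (soft[k] == -1 || soft[k] > hard[k])) {
          print "soft > hard: " k
          bad = 1
        }
      exit bad
    }' "$1"
}

# Hypothetical usage:
#   check_limits /etc/security/limits.conf
```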

Add IPVS and kernel modules

- Add the IPVS modules

cat <<EOF > /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

# If loading ip_vs_fo fails, delete this line and retry

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

# On kernels older than 4.18, change nf_conntrack below to nf_conntrack_ipv4

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

overlay

br_netfilter

EOF
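Instead of editing the nf_conntrack line by hand, you can choose the module name from the kernel release. Below is a minimal sketch. It assumes bash and uses the 4.18 cut-off given in the comment above. conntrack_module is a hypothetical helper.

```shell
# Print the conntrack module name for a kernel release string such as
# 3.10.0-1160.el7.x86_64 or 6.3.3-1.el7.elrepo.x86_64, using a 4.18 cut-off.
conntrack_module() {
  local release=$1 major minor
  IFS=. read -r major minor _ <<< "$release"
  minor=${minor%%[!0-9]*}            # keep only the leading digits of the minor part
  if (( major < 4 || (major == 4 && minor < 18) )); then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}

# Hypothetical usage on a node:
#   conntrack_module "$(uname -r)"
```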

- Add kernel parameters (sysctl settings)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

vm.swappiness=0

EOF
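Two mistakes in a sysctl.d file are easy to miss: setting the same key twice (only the last value wins) and setting a key the running kernel does not have. Below is a minimal sketch with two hypothetical helpers. sysctl_dup_keys lists repeated keys. sysctl_missing_keys lists keys with no entry under /proc/sys; note that the net.bridge.* keys only appear once br_netfilter is loaded.

```shell
# Print keys assigned more than once in a sysctl.d file (comments/blank lines skipped).
sysctl_dup_keys() {
  awk -F= '
    /^[ \t]*([#;]|$)/ { next }
    { key = $1; gsub(/[ \t]/, "", key); count[key]++ }
    END { for (k in count) if (count[k] > 1) print k }' "$1" | sort
}

# Print keys that have no matching file under /proc/sys on this machine.
sysctl_missing_keys() {
  local key
  while IFS='=' read -r key _; do
    key=${key//[[:space:]]/}
    [[ -z $key || $key == \#* || $key == \;* ]] && continue
    [[ -e /proc/sys/${key//.//} ]] || echo "$key"   # net.ipv4.x -> /proc/sys/net/ipv4/x
  done < "$1"
}

# Hypothetical usage:
#   sysctl_dup_keys /etc/sysctl.d/k8s.conf
#   sysctl_missing_keys /etc/sysctl.d/k8s.conf
```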

- Distribute the configuration files

source /k8s/conf/k8s_env.sh

for host in ${WORKS[@]};do

scp /etc/modules-load.d/ipvs.conf $host:/etc/modules-load.d/ipvs.conf

scp /etc/sysctl.d/k8s.conf $host:/etc/sysctl.d/k8s.conf

ssh $host "systemctl restart systemd-modules-load.service"

ssh $host "sysctl --system"

done

Ubuntu

Configure apt sources

- Base apt sources

mv /etc/apt/{sources.list,sources.list.bak} # back up the existing apt sources

cat <<EOF > /etc/apt/sources.list

deb http://repo.huaweicloud.com/ubuntu focal main restricted

deb http://repo.huaweicloud.com/ubuntu focal-updates main restricted

deb http://repo.huaweicloud.com/ubuntu focal universe

deb http://repo.huaweicloud.com/ubuntu focal-updates universe

deb http://repo.huaweicloud.com/ubuntu focal multiverse

deb http://repo.huaweicloud.com/ubuntu focal-updates multiverse

deb http://repo.huaweicloud.com/ubuntu focal-backports main restricted universe multiverse

deb http://repo.huaweicloud.com/ubuntu focal-security main restricted

deb http://repo.huaweicloud.com/ubuntu focal-security universe

deb http://repo.huaweicloud.com/ubuntu focal-security multiverse

EOF

sudo apt-get update

Add the docker repository (used to install containerd; not needed for a binary installation)

curl -fsSL https://repo.huaweicloud.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://repo.huaweicloud.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

Add the k8s repository (used to install kubeadm, kubelet and kubectl; not needed for a binary installation)

cat <<EOF > /etc/apt/sources.list.d/kubernetes.list

deb https://repo.huaweicloud.com/kubernetes/apt/ kubernetes-xenial main

EOF

curl -s https://repo.huaweicloud.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

Set up passwordless SSH, change hostnames, disable the firewall, turn off swap (to simplify later steps)

- Edit the hosts file

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do echo "$(eval echo "$"$host) $host" >> /etc/hosts;done

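# $VIP is not defined in k8s_env.sh, and /etc/hosts entries use the "IP hostname" order, so no VIP line is added here. With the local nginx proxy (127.0.0.1:8443) none is needed. If you use a real VIP, add it as: echo "<VIP IP> <VIP hostname>" >> /etc/hosts

# Edit /k8s/conf/k8s_env.sh before running these commands

- Change the hostnames, disable the firewall, and turn off swap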

source /k8s/conf/k8s_env.sh

apt -y install sshpass

ssh-keygen -t rsa -b 2048 -P "" -f /root/.ssh/id_rsa -q

for host in ${HOSTS[@]};do

#sshpass -p 1 ssh-copy-id -o StrictHostKeyChecking=no $host

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no $host

ssh $host "hostnamectl set-hostname $host"

ssh $host "systemctl disable --now ufw"

ssh $host "sed -i 's@.*swap.*@#&@g' /etc/fstab"

ssh $host "swapoff -a"

scp /etc/hosts $host:/etc/hosts

done

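Download and batch-install packages

- I originally wanted to download the packages and install them together with dpkg, but some packages were always missing, so they are installed directly instead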

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "apt -y install net-tools ipvsadm ipset conntrack chrony "

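#ssh $host "apt -y install kubelet=1.26.0-00 kubeadm=1.26.0-00 kubectl=1.26.0-00"

#ssh $host "apt -y install containerd.io"

# The two commented lines above are only for a kubeadm/package-based install and need the docker and k8s apt repositories. In this binary install, the k8s binaries and docker (with its bundled containerd) are installed from the downloaded packages later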

done

Configure time synchronization

Plan: node1 syncs with the Alibaba Cloud NTP server, and the other nodes sync with node1

cat > /etc/chrony/chrony.conf <<EOF

server ntp.aliyun.com iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

allow $NODEIPS

local stratum 10

keyfile /etc/chrony/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

EOF

cat > /k8s/conf/chrony.conf.client <<EOF

server $node1 iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

local stratum 10

keyfile /etc/chrony/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

EOF

Distribute the chrony configuration files

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime"

if [[ $host == $(hostname) ]];then

ssh $host "systemctl restart chrony"

continue

fi

scp /k8s/conf/chrony.conf.client $host:/etc/chrony/chrony.conf

ssh $host " systemctl restart chrony"

done

sleep 3

for host in ${HOSTS[@]};do

ssh $host "systemctl enable chrony"

ssh $host "timedatectl"

ssh $host "chronyc sources -v"

done

Configure open file descriptor limits

cat <<EOF > /etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

scp /etc/security/limits.conf $host:/etc/security/limits.conf

done

Add IPVS and kernel modules

- Add the IPVS modules

cat <<EOF > /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

# If loading ip_vs_fo fails, delete this line and retry

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

# On kernels older than 4.18, change nf_conntrack below to nf_conntrack_ipv4

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

overlay

br_netfilter

EOF
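systemd-modules-load only treats whole lines whose first non-blank character is # or ; as comments. A module name followed by an inline comment is therefore taken literally, and a module listed twice is redundant. Below is a minimal sketch to lint such a file. lint_modules_file is a hypothetical helper.

```shell
# Report lines in a modules-load.d file that carry extra text after the module
# name (e.g. inline comments) and module names listed more than once.
# Returns non-zero if any problem is found.
lint_modules_file() {
  awk '
    /^[ \t]*([#;]|$)/ { next }
    {
      if (NF > 1) { print "line " NR ": inline text after module name: " $0; bad = 1 }
      if (seen[$1]++) { print "line " NR ": duplicate module: " $1; bad = 1 }
    }
    END { exit bad }' "$1"
}

# Hypothetical usage:
#   lint_modules_file /etc/modules-load.d/ipvs.conf
```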

- Add kernel parameters (sysctl settings)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

vm.swappiness=0

EOF

- Distribute the configuration files

source /k8s/conf/k8s_env.sh

for host in ${WORKS[@]};do

scp /etc/modules-load.d/ipvs.conf $host:/etc/modules-load.d/ipvs.conf

scp /etc/sysctl.d/k8s.conf $host:/etc/sysctl.d/k8s.conf

ssh $host "systemctl restart systemd-modules-load.service"

ssh $host "sysctl --system"

done

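Compile nginx as a load balancer

Skip this step if you use keepalived+haproxy for load balancing

If you don't want to compile it, you can also install nginx with yum/apt, but then the extra modules must be installed as well. The paths in the service file below must also be adjusted to the packaged binary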

CentOS

yum -y install nginx

yum -y install nginx-all-modules.noarch

Ubuntu

apt -y install nginx

- Install the software needed for compiling

CentOS

yum -y install gcc gcc-c++ # C/C++ compilers

yum -y install pcre pcre-devel # regular expression library

yum -y install zlib zlib-devel # zlib compression library

yum -y install openssl openssl-devel make

Ubuntu

apt-get -y install gcc

apt-get -y install libpcre3 libpcre3-dev

apt-get -y install zlib1g zlib1g-dev

apt-get -y install make

- Download the source package

cd /k8s/pkg/

wget http://nginx.org/download/nginx-1.20.1.tar.gz

tar -xf nginx-1.20.1.tar.gz

cd nginx-1.20.1/

- Compile and install nginx

mkdir /k8s/pkg/nginx_tmp

./configure --prefix=/k8s/pkg/nginx_tmp --with-stream --without-http --without-http_uwsgi_module && \

make && \

make install

- Write the nginx configuration file

source /k8s/conf/k8s_env.sh

cat > /k8s/conf/nginx.conf <<EOF

worker_processes auto;

events {

worker_connections 1024;

}

stream {

upstream backend {

hash \$remote_addr consistent;

server $node1:6443 max_fails=3 fail_timeout=30s;

server $node2:6443 max_fails=3 fail_timeout=30s;

server $node3:6443 max_fails=3 fail_timeout=30s;

}

server {

listen *:8443;

proxy_connect_timeout 1s;

proxy_pass backend;

}

}

EOF
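The upstream block above lists the three masters by hand. Below is a minimal sketch that generates it from the MASTERS array instead. It assumes bash and the nodeN variables from k8s_env.sh. gen_upstream is a hypothetical helper, and its output is meant to replace the hand-written upstream inside the stream block.

```shell
# Print an nginx stream "upstream" block with one server line per IP.
# Arguments: upstream name, apiserver port, then the master IPs.
gen_upstream() {
  local name=$1 port=$2 ip
  shift 2
  echo "upstream $name {"
  echo '    hash $remote_addr consistent;'      # single quotes keep $remote_addr literal
  for ip in "$@"; do
    echo "    server $ip:$port max_fails=3 fail_timeout=30s;"
  done
  echo "}"
}

# Hypothetical usage after sourcing k8s_env.sh:
#   ips=(); for m in "${MASTERS[@]}"; do ips+=("${!m}"); done
#   gen_upstream backend 6443 "${ips[@]}"
```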

- Write the nginx service file

cat > /k8s/service/kube-nginx.service <<EOF

[Unit]

Description=kube-apiserver nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/usr/local/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx -t

ExecStart=/usr/local/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx

ExecReload=/usr/local/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=5

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Distribute the files and start nginx

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "mkdir /etc/nginx/logs -p"

scp /k8s/pkg/nginx_tmp/sbin/nginx $host:/usr/local/bin/

scp /k8s/conf/nginx.conf $host:/etc/nginx/nginx.conf

scp /k8s/service/kube-nginx.service $host:/etc/systemd/system/

ssh $host "systemctl daemon-reload "

ssh $host "systemctl enable kube-nginx"

ssh $host "systemctl restart kube-nginx"

done

for host in ${HOSTS[@]};do

ssh $host "systemctl is-active kube-nginx"

done

# Check that nginx is ready

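Install the container runtime

You can install either docker or containerd. I'm really not used to containerd, so docker is installed again this time

Install docker

Extract the binary package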

cd /k8s/pkg/

tar -xf docker-23.0.6.tgz

Prepare the containerd.service file

cat <<EOF > /k8s/service/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=1048576

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

Prepare the docker.socket file

cat <<EOF > /k8s/service/docker.socket

[Unit]

Description=Docker Socket for the API

[Socket]

ListenStream=/var/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

Prepare the docker.service file

cat <<EOF > /k8s/service/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service time-set.target

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutStartSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

OOMScoreAdjust=-500

[Install]

WantedBy=multi-user.target

EOF

Prepare the daemon.json file

cat > /k8s/conf/docker.daemon.json <<EOF

{

"log-driver": "json-file",

"log-opts": {

"max-size": "20m",

"max-file": "3"

},

"registry-mirrors": ["https://pilvpemn.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

EOF

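Distribute the binaries and start docker (the loop below installs only docker.service; containerd.service is not installed, so dockerd starts and manages its own containerd process)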

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

ssh $host "mkdir /etc/docker "

ssh $host "groupadd docker"

scp /k8s/pkg/docker/* $host:/usr/bin/

scp /k8s/conf/docker.daemon.json $host:/etc/docker/daemon.json

scp /k8s/service/docker.service $host:/etc/systemd/system/docker.service

ssh $host "systemctl daemon-reload "

ssh $host "systemctl enable docker --now"

done

for host in ${HOSTS[@]};do

ssh $host "systemctl is-active docker"

done

# Check that docker is ready

Install cri-docker

Extract the binary package

cd /k8s/pkg/

tar -xf cri-dockerd-0.3.2.amd64.tgz

cd cri-dockerd/

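Prepare the cri-docker.service file; the official repository provides a reference unit file

Some parameters in the official template need to be changed:

- --cni-bin-dir defaults to /opt/cni/bin and can be changed if needed. To avoid forgetting it later, I keep the default path here

- --container-runtime-endpoint defaults to unix:///var/run/cri-dockerd.sock, so this parameter can simply be removed from the official template

- --cri-dockerd-root-directory defaults to /var/lib/cri-dockerd. Like docker's data directory, it can be moved to avoid running out of space on the default path

- --pod-infra-container-image defaults to registry.k8s.io/pause:3.6. Change it to the Alibaba Cloud mirror, because pulling k8s images fails without access to servers outside China

- Other parameters can be listed with cri-dockerd --help

Write the cri-docker.service file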

cat <<EOF > /k8s/service/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

Distribute the binaries and start cri-docker

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]};do

scp /k8s/pkg/cri-dockerd/* $host:/usr/bin/

scp /k8s/service/cri-docker.service $host:/etc/systemd/system/cri-docker.service

ssh $host "systemctl daemon-reload "

ssh $host "systemctl enable cri-docker --now"

done

for host in ${HOSTS[@]};do

ssh $host "systemctl is-active cri-docker"

done

# Check that cri-docker is ready

Install etcd

- Extract the binary package downloaded earlier

cd /k8s/pkg/

ls etcd*

etcd-v3.5.9-linux-amd64.tar.gz

# Check that the package exists

tar -xf etcd-v3.5.9-linux-amd64.tar.gz

- Prepare the etcd service file

cat <<EOF > /k8s/service/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \\

--name=##NODE_NAME## \\

--cert-file=/etc/etcd/ssl/etcd.pem \\

--key-file=/etc/etcd/ssl/etcd-key.pem \\

--peer-cert-file=/etc/etcd/ssl/etcd.pem \\

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \\

--trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--initial-advertise-peer-urls=https://##NODE_IP##:2380 \\

--listen-peer-urls=https://##NODE_IP##:2380 \\

--listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\

--advertise-client-urls=https://##NODE_IP##:2379 \\

--initial-cluster-token=etcd-cluster \\

--initial-cluster=##ETCD_CLUSTERS## \\

--initial-cluster-state=new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Prepare the certificates etcd needs

- Install the cfssl tools used to create the certificates

cd /k8s/pkg/

mv cfssl_1.6.3_linux_amd64 /usr/local/bin/cfssl

mv cfssljson_1.6.3_linux_amd64 /usr/local/bin/cfssljson

chmod +x /usr/local/bin/cfssl*

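cfssl version # verify the version

- Create the root CA signing configuration (the profile name kubernetes is referenced later with -profile; if etcd and k8s do not share a CA, naming the profile etcd is clearer)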

cd /k8s/ssl/

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

- Create the CA certificate signing request file

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

- Create the etcd certificate signing request file

cat <<EOF > etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"$node1",

"$node2",

"$node3"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "Etcd"

}

]

}

EOF
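The CSR files in this article differ only in CN, O, OU and the hosts list. Below is a minimal sketch of a generator, assuming bash. gen_csr is a hypothetical helper, and the C/ST/L values are the ones this article uses.

```shell
# Print a cfssl CSR JSON document.
# Arguments: CN, O, OU, then zero or more hosts (IPs or DNS names).
gen_csr() {
  local cn=$1 o=$2 ou=$3 first=1 h
  shift 3
  printf '{\n  "CN": "%s",\n  "hosts": [' "$cn"
  for h in "$@"; do
    (( first )) || printf ','          # comma before every host except the first
    printf '\n    "%s"' "$h"
    first=0
  done
  (( first )) || printf '\n  '         # close a non-empty list on its own line
  printf '],\n  "key": { "algo": "rsa", "size": 2048 },\n'
  printf '  "names": [{ "C": "CN", "ST": "HuBei", "L": "WuHan", "O": "%s", "OU": "%s" }]\n}\n' "$o" "$ou"
}

# Hypothetical usage:
#   gen_csr etcd k8s Etcd 127.0.0.1 "$node1" "$node2" "$node3" > /k8s/ssl/etcd-csr.json
#   gen_csr admin system:masters System > /k8s/ssl/admin-csr.json
```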

- Initialize the CA, then use the generated root certificate to issue the etcd certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json |cfssljson -bare etcd

# -profile must match a profile name defined in ca-config.json

Distribute the certificates, binaries and service files

source /k8s/conf/k8s_env.sh

cd /k8s/service

for host in ${MASTERS[@]};do

ssh $host "mkdir /etc/etcd/ssl/ -p"

ssh $host "mkdir /var/lib/etcd"

scp /k8s/ssl/etcd* $host:/etc/etcd/ssl/

scp /k8s/ssl/ca* $host:/etc/etcd/ssl/

done

for host in ${MASTERS[@]};do

IP=$(eval echo "$"$host)

sed -e "s@##NODE_NAME##@$host@g" -e "s@##NODE_IP##@$IP@g" -e "s@##ETCD_CLUSTERS##@$ETCD_CLUSTERS@g" etcd.service > etcd.service.$host

scp etcd.service.$host $host:/etc/systemd/system/etcd.service

scp /k8s/pkg/etcd*/etcd* $host:/usr/local/bin/

ssh $host "systemctl daemon-reload"

ssh $host "systemctl enable etcd "

ssh $host "systemctl start etcd --no-block"

done
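The loop above renders etcd.service with three sed substitutions per master. Below is a minimal sketch of the same step as a function. It also refuses to emit a unit file that still contains a ##...## placeholder. render_etcd_unit is a hypothetical helper.

```shell
# Render a per-node unit from a template with ##NODE_NAME##, ##NODE_IP## and
# ##ETCD_CLUSTERS## placeholders; fail if any ##UPPER_CASE## placeholder remains.
# Arguments: node name, node IP, initial-cluster string, template file.
render_etcd_unit() {
  local name=$1 ip=$2 clusters=$3 template=$4 out
  out=$(sed -e "s@##NODE_NAME##@${name}@g" \
            -e "s@##NODE_IP##@${ip}@g" \
            -e "s@##ETCD_CLUSTERS##@${clusters}@g" "$template") || return 1
  if grep -q '##[A-Z_]*##' <<< "$out"; then
    echo "unreplaced placeholder in rendered $template" >&2
    return 1
  fi
  printf '%s\n' "$out"
}

# Hypothetical usage inside the loop above:
#   render_etcd_unit "$host" "$IP" "$ETCD_CLUSTERS" etcd.service > "etcd.service.$host"
```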

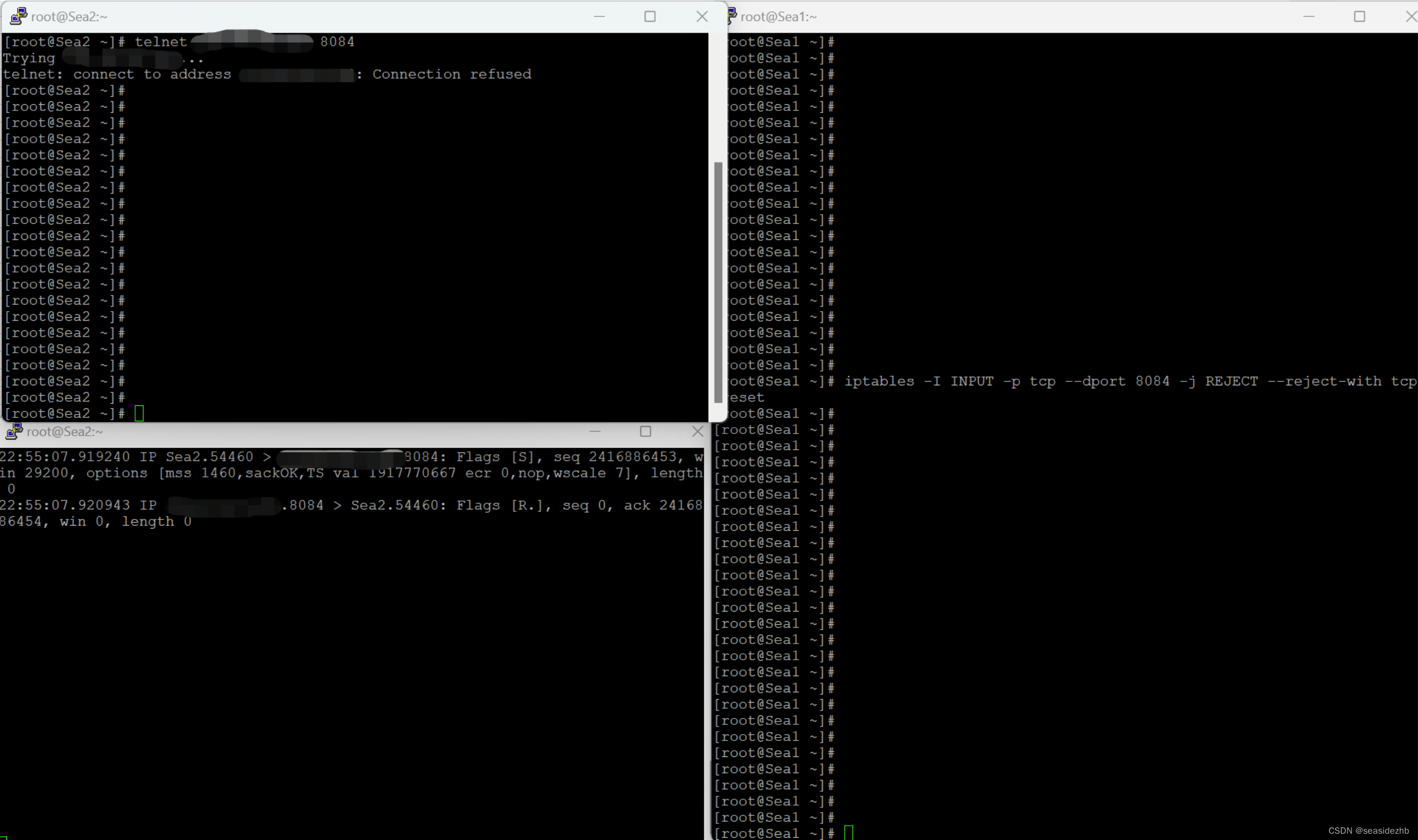

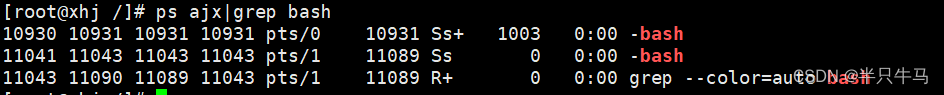

Check that etcd is healthy

ETCDCTL_API=3 etcdctl --endpoints=$ETCD_ENDPOINTS --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint status --write-out=table

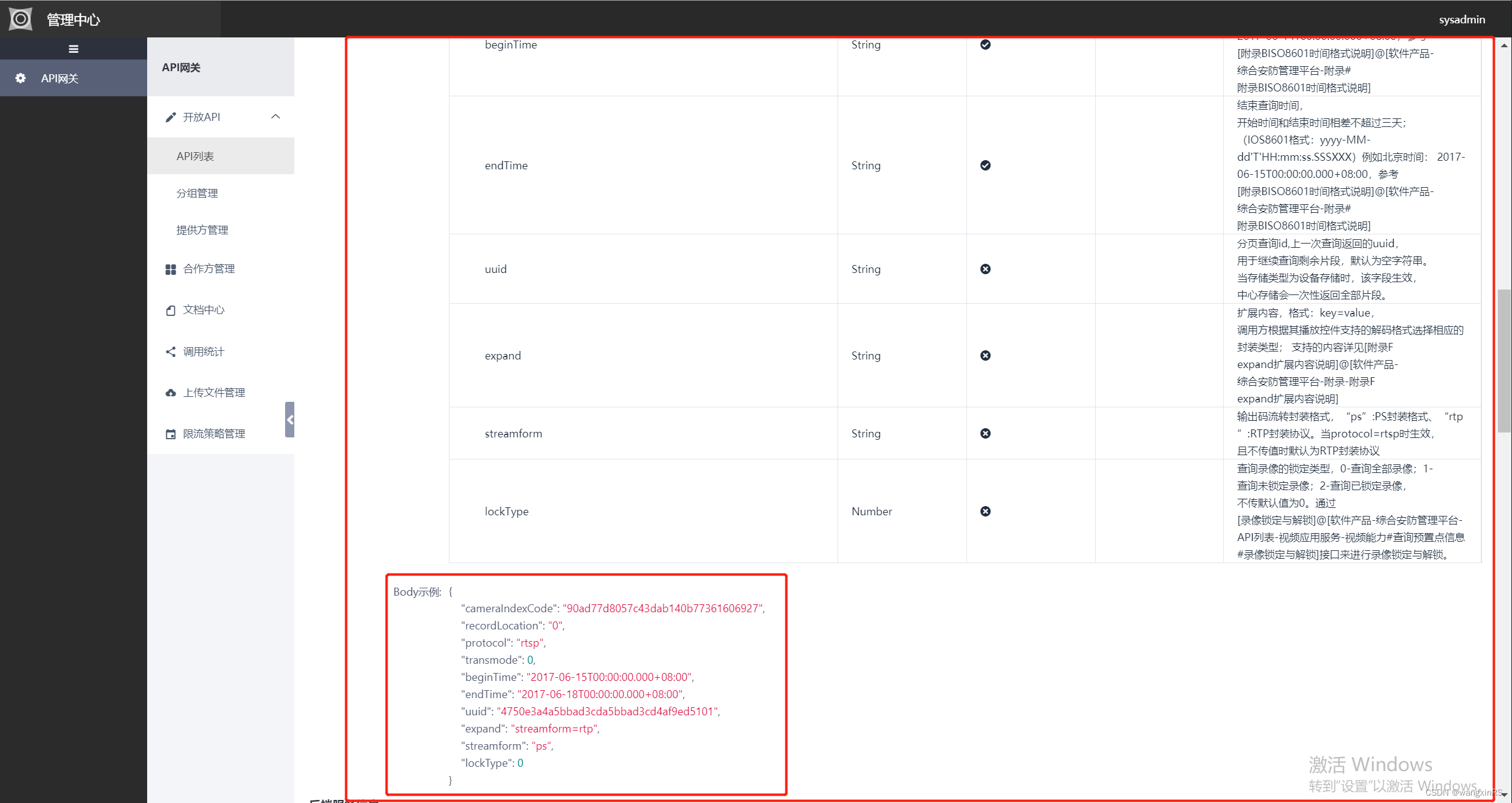


Install the k8s components

apiserver

cd /k8s/pkg/

tar -xf kubernetes-server-linux-amd64.tar.gz

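# The extracted binaries are in kubernetes/server/bin/ by default

Prepare the apiserver certificate

Prepare the certificate signing request file

As with etcd, include the IPs of all apiserver nodes

If there is a high-availability address such as an SLB IP, include it as well (here the load balancer is actually reached through 127.0.0.1)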

source /k8s/conf/k8s_env.sh

cat << EOF > /k8s/ssl/apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"$node1",

"$node2",

"$node3",

"$CLUSTER_KUBERNETES_IP",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Create the apiserver certificate

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json |cfssljson -bare apiserver

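Prepare the metrics-server certificate

If you need HPA later, metrics-server must be running. It can run without this certificate, but the official recommendation is to configure one. The apiserver uses it as the aggregation-layer proxy client certificate (--proxy-client-cert-file in the service file below)

Prepare the certificate signing request file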

cat << EOF > /k8s/ssl/aggregator-csr.json

{

"CN": "aggregator",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Create the metrics-server certificate

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes aggregator-csr.json |cfssljson -bare aggregator

Prepare the service file

Reference

The IP differs on each apiserver node, so the template uses a placeholder that is replaced with sed later

cat << EOF > /k8s/service/kube-apiserver.service.template

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--secure-port=6443 \\

--allow-privileged=true \\

--anonymous-auth=false \\

--api-audiences=api,istio-ca \\

--authorization-mode=Node,RBAC \\

--bind-address=##masterIP## \\

--client-ca-file=$K8S_SSL_Path/ca.pem \\

--endpoint-reconciler-type=lease \\

--etcd-cafile=$K8S_SSL_Path/ca.pem \\

--etcd-certfile=$K8S_SSL_Path/apiserver.pem \\

--etcd-keyfile=$K8S_SSL_Path/apiserver-key.pem \\

--etcd-servers=$ETCD_ENDPOINTS \\

--kubelet-certificate-authority=$K8S_SSL_Path/ca.pem \\

--kubelet-client-certificate=$K8S_SSL_Path/apiserver.pem \\

--kubelet-client-key=$K8S_SSL_Path/apiserver-key.pem \\

--service-account-issuer=https://kubernetes.default.svc \\

--service-account-signing-key-file=$K8S_SSL_Path/ca-key.pem \\

--service-account-key-file=$K8S_SSL_Path/ca.pem \\

--service-cluster-ip-range=$SERVICE_CIDR \\

--service-node-port-range=$NODE_PORT_RANGE \\

--tls-cert-file=$K8S_SSL_Path/apiserver.pem \\

--tls-private-key-file=$K8S_SSL_Path/apiserver-key.pem \\

--requestheader-client-ca-file=$K8S_SSL_Path/ca.pem \\

--requestheader-allowed-names= \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--proxy-client-cert-file=$K8S_SSL_Path/aggregator.pem \\

--proxy-client-key-file=$K8S_SSL_Path/aggregator-key.pem \\

--enable-aggregator-routing=true \\

--v=2

Restart=always

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
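This heredoc expands $K8S_SSL_Path, $ETCD_ENDPOINTS and the other variables at write time. If k8s_env.sh was not sourced, the result silently contains flags with empty values. Below is a minimal sketch that lists such flags in the generated template. find_empty_flags is a hypothetical helper. It only catches flags whose whole value is empty, and --requestheader-allowed-names is intentionally empty in this template, so that flag is expected in its output.

```shell
# List flags of the form "--name=" followed by a space, a backslash or the end of
# the line, i.e. flags whose value expanded to an empty string.
find_empty_flags() {
  grep -oE -- '--[a-z0-9-]+=([[:space:]]|\\|$)' "$1" | sed -E 's/=.*//' | sort -u
}

# Hypothetical usage:
#   find_empty_flags /k8s/service/kube-apiserver.service.template
```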

Distribute the apiserver files and start the service

source /k8s/conf/k8s_env.sh

cd /k8s/service

for host in ${MASTERS[@]};do

IP=$(eval echo "$"$host)

ssh $host "mkdir ${K8S_SSL_Path} -p"

scp /k8s/ssl/{ca*.pem,apiserver*.pem,aggregator*.pem} $host:${K8S_SSL_Path}/

scp /k8s/pkg/kubernetes/server/bin/kube{-apiserver,ctl} $host:/usr/local/bin/

sed "s@##masterIP##@$IP@g" kube-apiserver.service.template > kube-apiserver.service.${host}

# Note: the sed expression must be in double quotes so that $IP is substituted

scp kube-apiserver.service.${host} $host:/etc/systemd/system/kube-apiserver.service

ssh $host "systemctl daemon-reload"

ssh $host "systemctl enable kube-apiserver --now"

done

Verify the health of the apiserver nodes

# Check whether each apiserver is healthy

source /k8s/conf/k8s_env.sh

for host in ${MASTERS[@]};do

curl -k https://${host}:6443/healthz --cacert ${K8S_SSL_Path}/ca.pem --cert ${K8S_SSL_Path}/apiserver.pem --key ${K8S_SSL_Path}/apiserver-key.pem

echo -e "\t$host"

done

# Expected output

ok node1

ok node2

ok node3
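Right after systemctl enable --now, the apiserver may need a few seconds before /healthz answers, so a single curl can fail spuriously. Below is a minimal retry sketch. retry is a hypothetical helper that runs a command up to N times with a fixed delay between attempts.

```shell
# Run a command until it succeeds, at most $1 times, sleeping $2 seconds
# between attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  echo "command failed after $attempts attempts: $*" >&2
  return 1
}

# Hypothetical usage (curl -f makes HTTP errors count as failures):
#   retry 10 3 curl -fsk https://node1:6443/healthz --cacert ${K8S_SSL_Path}/ca.pem \
#     --cert ${K8S_SSL_Path}/apiserver.pem --key ${K8S_SSL_Path}/apiserver-key.pem
```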

kubectl

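Prepare the admin certificate

kubectl will be used later to operate the cluster, so it needs its own certificate

Prepare the certificate signing request file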

cat << EOF > /k8s/ssl/admin-csr.json

{

"CN": "admin",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

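# O must be system:masters: this group is bound to the cluster-admin role by default, so do not leave it out

Create the admin certificate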

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json |cfssljson -bare admin

Create the kubeconfig

Set the cluster parameters

cd /k8s/ssl/

kubectl config \

set-cluster kubernetes \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://$VIP_server \

--kubeconfig=kubectl.kubeconfig

Set the client authentication parameters

cd /k8s/ssl/

kubectl config \

set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=kubectl.kubeconfig

Set the context parameters

cd /k8s/ssl/

kubectl config \

set-context kubernetes \

--cluster=kubernetes \

--user=admin \

--kubeconfig=kubectl.kubeconfig

Set the default context

cd /k8s/ssl/

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig
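The four kubectl config calls above are repeated, with different names and files, for controller-manager and the other components. Below is a minimal sketch that wraps them in one function. It assumes the function runs in /k8s/ssl, where ca.pem lives. make_kubeconfig is a hypothetical helper that uses only the kubectl config subcommands and flags shown above.

```shell
# Build a kubeconfig with embedded certificates using the same four steps as above.
# Arguments: user, client cert, client key, server URL, output file,
# and optionally the context name (defaults to the user name).
make_kubeconfig() {
  local user=$1 cert=$2 key=$3 server=$4 out=$5 context=${6:-$1}
  kubectl config set-cluster kubernetes --certificate-authority=ca.pem \
    --embed-certs=true --server="$server" --kubeconfig="$out" &&
  kubectl config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" &&
  kubectl config set-context "$context" --cluster=kubernetes \
    --user="$user" --kubeconfig="$out" &&
  kubectl config use-context "$context" --kubeconfig="$out"
}

# Hypothetical usage in /k8s/ssl:
#   make_kubeconfig admin admin.pem admin-key.pem "https://$VIP_server" kubectl.kubeconfig kubernetes
#   make_kubeconfig system:kube-controller-manager kube-controller-manager.pem \
#     kube-controller-manager-key.pem "https://$VIP_server" kube-controller-manager.kubeconfig
```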

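Create the config file

By default kubectl reads the certificates it uses to access the apiserver from $HOME/.kube/config. If you don't create this file, you can instead pass the kubeconfig explicitly with kubectl --kubeconfig=kubectl.kubeconfig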

mkdir ~/.kube

cp /k8s/ssl/kubectl.kubeconfig ~/.kube/config

cp /k8s/ssl/kubectl.kubeconfig /etc/kubernetes/admin.conf

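# This copy is only for archiving and can be skipped

Create a clusterrolebinding that allows exec into containers

Without this clusterrolebinding, kubectl exec fails with an error similar to error: unable to upgrade connection: Forbidden (user=kubernetes, verb=create, resource=nodes, subresource=proxy). The user kubernetes is the CN of apiserver.pem, which the apiserver presents to the kubelet as its client certificate (--kubelet-client-certificate)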

kubectl create clusterrolebinding kubernetes --clusterrole=cluster-admin --user=kubernetes

Enable kubectl command completion (optional)

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

controller-manager

Prepare the controller-manager certificate

Prepare the controller-manager CSR file

source /k8s/conf/k8s_env.sh

cat << EOF > /k8s/ssl/kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"$node1",

"$node2",

"$node3"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

Generate the controller-manager certificate

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json |cfssljson -bare kube-controller-manager

Create the kubeconfig

Set the cluster parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
kubectl config \
  set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://$VIP_server \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the client authentication parameters

cd /k8s/ssl/
kubectl config \
  set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the context parameters

cd /k8s/ssl/
kubectl config \
  set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

Set the default context

cd /k8s/ssl/
kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Prepare the service file

The following unit file is a reference configuration:

cd /k8s/service
source /k8s/conf/k8s_env.sh
cat << EOF > kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \\
  --bind-address=0.0.0.0 \\
  --allocate-node-cidrs=true \\
  --cluster-cidr=$CLUSTER_CIDR \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=$K8S_SSL_Path/ca.pem \\
  --cluster-signing-key-file=$K8S_SSL_Path/ca-key.pem \\
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
  --leader-elect=true \\
  --node-cidr-mask-size=24 \\
  --root-ca-file=$K8S_SSL_Path/ca.pem \\
  --service-account-private-key-file=$K8S_SSL_Path/ca-key.pem \\
  --service-cluster-ip-range=$SERVICE_CIDR \\
  --use-service-account-credentials=true \\
  --v=2
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Distribute the controller-manager files and start the service

source /k8s/conf/k8s_env.sh

cd /k8s/service

for host in ${MASTERS[@]};do

scp /k8s/ssl/kube-controller-manager{,-key}.pem $host:${K8S_SSL_Path}/

scp /k8s/ssl/kube-controller-manager.kubeconfig $host:/etc/kubernetes/

scp /k8s/pkg/kubernetes/server/bin/kube-controller-manager $host:/usr/local/bin/

scp kube-controller-manager.service $host:/etc/systemd/system/kube-controller-manager.service

ssh $host "systemctl daemon-reload"

ssh $host "systemctl enable kube-controller-manager --now"

done

scheduler

Prepare the scheduler certificate

Prepare the scheduler CSR file

source /k8s/conf/k8s_env.sh

cat << EOF > /k8s/ssl/kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"$node1",

"$node2",

"$node3"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

Generate the kube-scheduler certificate

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json |cfssljson -bare kube-scheduler

Create the kubeconfig

Set the cluster parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
kubectl config \
  set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://$VIP_server \
  --kubeconfig=kube-scheduler.kubeconfig

Set the client authentication parameters

cd /k8s/ssl/
kubectl config \
  set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

Set the context parameters

cd /k8s/ssl/
kubectl config \
  set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

Set the default context

cd /k8s/ssl/
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Prepare the service file

The following unit file is a reference configuration:

cd /k8s/service/
cat << EOF > kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \\
  --authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --bind-address=0.0.0.0 \\
  --kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\
  --leader-elect=true \\
  --v=2
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Distribute the scheduler files and start the service

source /k8s/conf/k8s_env.sh

cd /k8s/service

for host in ${MASTERS[@]};do

scp /k8s/ssl/kube-scheduler{,-key}.pem $host:${K8S_SSL_Path}/

scp /k8s/ssl/kube-scheduler.kubeconfig $host:/etc/kubernetes/

scp /k8s/pkg/kubernetes/server/bin/kube-scheduler $host:/usr/local/bin/

scp kube-scheduler.service $host:/etc/systemd/system/kube-scheduler.service

ssh $host "systemctl daemon-reload"

ssh $host "systemctl enable kube-scheduler --now"

done

Check that the control-plane components are healthy

kubectl get componentstatuses    # can be abbreviated as: kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true","reason":""}

controller-manager Healthy ok

etcd-2 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

scheduler Healthy ok

kubelet

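Prepare the kubelet certificates

Each node gets its own kubelet certificate, so start from a template and generate one certificate per node by substituting that node's IP.

Prepare the kubelet CSR template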

cat << EOF > /k8s/ssl/kubelet-csr.json.template

{

"CN": "system:node:##nodeIP##",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"##nodeIP##"

],

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:nodes",

"OU": "System"

}

]

}

EOF

Generate the kubelet certificates

cd /k8s/ssl/

source /k8s/conf/k8s_env.sh

for host in ${HOSTS[@]}

do

IP=$(eval echo "$"$host)

sed "s/##nodeIP##/$IP/g" kubelet-csr.json.template > kubelet-csr.${host}.json

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubelet-csr.${host}.json |cfssljson -bare kubelet.${host}

done
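The loop above resolves each IP with eval echo "$"$host, i.e. it reads the variable whose name is stored in $host (node1 gives the value of $node1). A sketch of the same rendering step using bash's indirect expansion ${!host} instead of eval, assuming, as in k8s_env.sh, that every entry of HOSTS names a variable holding that node's IP. render_template is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace a ##placeholder## in a template with the IP
# stored in the variable named by $2 (e.g. host=node1 -> value of $node1).
# Usage: render_template <template> <host-var-name> <placeholder> <output>
render_template() {
  local template=$1 host=$2 placeholder=$3 out=$4
  local ip=${!host}   # indirect expansion, same result as: eval echo "\$$host"
  if [ -z "$ip" ]; then
    echo "variable '$host' is empty or unset" >&2
    return 1
  fi
  sed "s@${placeholder}@${ip}@g" "$template" > "$out"
}
```

Inside the loop above this would read render_template kubelet-csr.json.template "$host" '##nodeIP##' "kubelet-csr.${host}.json"; the same helper fits the ##hostIP## templates used later for kubelet.service and kube-proxy-config.yaml.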

Create the kubeconfig files

Set the cluster parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
for host in ${HOSTS[@]}
do
kubectl config \
  set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://$VIP_server \
  --kubeconfig=kubelet.kubeconfig.$host
done

Set the client authentication parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
for host in ${HOSTS[@]}
do
kubectl config \
  set-credentials system:node:$host \
  --client-certificate=kubelet.${host}.pem \
  --client-key=kubelet.${host}-key.pem \
  --embed-certs=true \
  --kubeconfig=kubelet.kubeconfig.$host
done

Set the context parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
for host in ${HOSTS[@]}
do
kubectl config \
  set-context system:node:$host \
  --cluster=kubernetes \
  --user=system:node:$host \
  --kubeconfig=kubelet.kubeconfig.$host
done

Set the default context

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
for host in ${HOSTS[@]}
do
kubectl config \
  use-context system:node:$host \
  --kubeconfig=kubelet.kubeconfig.$host
done

Prepare the kubelet configuration file

cd /k8s/conf
source /k8s/conf/k8s_env.sh
cat << EOF > kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: $K8S_SSL_Path/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
  - $CLUSTER_KUBERNETES_SVC_IP
clusterDomain: cluster.local
configMapAndSecretChangeDetectionStrategy: Watch
containerLogMaxFiles: 3
containerLogMaxSize: 10Mi
enforceNodeAllocatable:
  - pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 300Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 40s
hairpinMode: hairpin-veth
healthzBindAddress: 0.0.0.0
healthzPort: 10248
httpCheckFrequency: 40s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
kubeAPIBurst: 100
kubeAPIQPS: 50
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeLeaseDurationSeconds: 40
nodeStatusReportFrequency: 1m0s
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
port: 10250
# disable readOnlyPort
readOnlyPort: 0
resolvConf: /etc/resolv.conf
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
tlsCertFile: $K8S_SSL_Path/kubelet.pem
tlsPrivateKeyFile: $K8S_SSL_Path/kubelet-key.pem
EOF
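cgroupDriver: systemd only works if the container runtime uses the same cgroup driver. With the Docker + cri-dockerd setup used here, Docker itself must be on the systemd driver (for example "exec-opts": ["native.cgroupdriver=systemd"] in /etc/docker/daemon.json); a mismatch typically makes the kubelet or pod sandbox creation fail. A minimal pre-flight sketch, assuming the flat top-level cgroupDriver key generated above; the function names are hypothetical. On a real node the runtime's driver can be read with docker info --format '{{.CgroupDriver}}'.

```shell
#!/usr/bin/env bash
# Hypothetical check: print the top-level cgroupDriver of a kubelet config.
# Line-based, assumes the "cgroupDriver: <value>" layout generated above.
kubelet_cgroup_driver() {
  awk -F': *' '$1 == "cgroupDriver" { print $2; exit }' "$1"
}

# Usage: check_cgroup_driver <kubelet-config.yaml> <runtime-driver>
# e.g.   check_cgroup_driver /etc/kubernetes/kubelet-config.yaml \
#          "$(docker info --format '{{.CgroupDriver}}')"
check_cgroup_driver() {
  local kubelet_driver
  kubelet_driver=$(kubelet_cgroup_driver "$1")
  if [ "$kubelet_driver" != "$2" ]; then
    echo "mismatch: kubelet=$kubelet_driver runtime=$2" >&2
    return 1
  fi
  echo "ok: both use $2"
}
```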

Prepare the service file

The following unit template is a reference configuration; ##hostIP## is replaced with each node's IP when the files are distributed. Note that --container-runtime=remote is still accepted by v1.26 (used here) but the flag was removed in v1.27, so drop it on newer versions.

cd /k8s/service/
cat << EOF > kubelet.service.template
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \\
  --config=/etc/kubernetes/kubelet-config.yaml \\
  --container-runtime=remote \\
  --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock \\
  --hostname-override=##hostIP## \\
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \\
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 \\
  --root-dir=/var/lib/kubelet \\
  --v=2
Restart=always
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Distribute the kubelet files and start the service

source /k8s/conf/k8s_env.sh
cd /k8s/service/
for host in ${HOSTS[@]}
do
IP=$(eval echo "$"$host)
sed "s@##hostIP##@$IP@g" kubelet.service.template > kubelet.service.$host
ssh $host "mkdir -p $K8S_SSL_Path /var/lib/kubelet /etc/kubernetes"
scp /k8s/ssl/ca*.pem $host:$K8S_SSL_Path/
scp /k8s/ssl/kubelet.${host}.pem $host:$K8S_SSL_Path/kubelet.pem
scp /k8s/ssl/kubelet.${host}-key.pem $host:$K8S_SSL_Path/kubelet-key.pem
scp /k8s/ssl/kubelet.kubeconfig.$host $host:/etc/kubernetes/kubelet.kubeconfig
scp kubelet.service.$host $host:/etc/systemd/system/kubelet.service
scp /k8s/conf/kubelet-config.yaml $host:/etc/kubernetes/kubelet-config.yaml
scp /k8s/pkg/kubernetes/server/bin/kubelet $host:/usr/local/bin/
ssh $host "systemctl daemon-reload"
ssh $host "systemctl enable kubelet --now"
done

kube-proxy

Prepare the kube-proxy certificate

Prepare the kube-proxy CSR file

cat << EOF > /k8s/ssl/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:nodes",

"OU": "System"

}

]

}

EOF

Generate the kube-proxy certificate

cd /k8s/ssl/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json |cfssljson -bare kube-proxy

Create the kubeconfig

Set the cluster parameters

cd /k8s/ssl/
source /k8s/conf/k8s_env.sh
kubectl config \
  set-cluster kubernetes \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://$VIP_server \
  --kubeconfig=kube-proxy.kubeconfig

Set the client authentication parameters

cd /k8s/ssl/
kubectl config \
  set-credentials system:kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

Set the context parameters

cd /k8s/ssl/
kubectl config \
  set-context system:kube-proxy \
  --cluster=kubernetes \
  --user=system:kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

Set the default context

cd /k8s/ssl/
kubectl config use-context system:kube-proxy --kubeconfig=kube-proxy.kubeconfig

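Create the kube-proxy configuration file

The parameters must match those set earlier: clusterCIDR comes from the same $CLUSTER_CIDR passed to kube-controller-manager as --cluster-cidr, and hostnameOverride is filled with the node IP, matching the kubelet's --hostname-override.

cd /k8s/conf/
source /k8s/conf/k8s_env.sh
cat << EOF > kube-proxy-config.yaml.template
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
clusterCIDR: "$CLUSTER_CIDR"
conntrack:
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: "##hostIP##"
metricsBindAddress: 0.0.0.0:10249
mode: "ipvs"
EOF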
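mode: "ipvs" relies on the IPVS kernel modules loaded in the earlier "add ipvs modules and kernel modules" step. A small sketch that reports which of them are absent from /proc/modules before kube-proxy is started. The module list (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack) is an assumption based on the usual IPVS prerequisites, the function name is hypothetical, and modules built into the kernel never show up in /proc/modules, so treat a "missing" entry as a hint rather than proof.

```shell
#!/usr/bin/env bash
# Hypothetical check: print the IPVS-related modules not listed in a modules
# file (default /proc/modules). Returns 1 if anything is missing.
missing_ipvs_modules() {
  local modules_file=${1:-/proc/modules}
  local mod missing=0
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    # /proc/modules lines start with "<name> <size> ...", so match "name "
    if ! grep -q "^${mod} " "$modules_file"; then
      echo "$mod"
      missing=1
    fi
  done
  return "$missing"
}
```

On each node, ssh $host missing_ipvs_modules is not possible directly since the function is local; copying the function body into the remote command or running it on the node itself works.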

Prepare the service file

cd /k8s/service/
cat << EOF > kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
# kube-proxy uses the cluster CIDR (clusterCIDR in the config file, or --cluster-cidr) to tell in-cluster from external traffic
## with it set, requests to Service IPs that come from outside that range are SNATed;
## with --masquerade-all, every request to a Service IP is SNATed
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/etc/kubernetes/kube-proxy-config.yaml
Restart=always
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Distribute the kube-proxy files and start the service

source /k8s/conf/k8s_env.sh
cd /k8s/service/
for host in ${HOSTS[@]}
do
IP=$(eval echo "$"$host)
sed "s@##hostIP##@$IP@g" /k8s/conf/kube-proxy-config.yaml.template > /k8s/conf/kube-proxy-config.yaml.$host
# The kube-proxy certificate is embedded in kube-proxy.kubeconfig, so no separate .pem files need to be copied
ssh $host "mkdir -p /var/lib/kube-proxy /etc/kubernetes"
scp /k8s/ssl/kube-proxy.kubeconfig $host:/etc/kubernetes/
scp kube-proxy.service $host:/etc/systemd/system/
scp /k8s/conf/kube-proxy-config.yaml.$host $host:/etc/kubernetes/kube-proxy-config.yaml
scp /k8s/pkg/kubernetes/server/bin/kube-proxy $host:/usr/local/bin/
ssh $host "systemctl daemon-reload"
ssh $host "systemctl enable kube-proxy --now"
done

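Check whether everything is up

kubectl get node,svc,cs -owide

My output is below. The nodes report NotReady because no network (CNI) plugin is installed yet; they become Ready once calico is deployed in the next step.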

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node/10.10.21.223 NotReady <none> 31m v1.26.5 10.10.21.223 <none> CentOS Linux 7 (Core) 6.3.3-1.el7.elrepo.x86_64 docker://23.0.6

node/10.10.21.224 NotReady <none> 31m v1.26.5 10.10.21.224 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.6

node/10.10.21.225 NotReady <none> 31m v1.26.5 10.10.21.225 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://23.0.6

node/10.10.21.226 NotReady <none> 27m v1.26.5 10.10.21.226 <none> CentOS Linux 7 (Core) 6.3.3-1.el7.elrepo.x86_64 docker://23.0.6

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 4h <none>

NAME STATUS MESSAGE ERROR

componentstatus/scheduler Healthy ok

componentstatus/controller-manager Healthy ok

componentstatus/etcd-2 Healthy {"health":"true","reason":""}

componentstatus/etcd-1 Healthy {"health":"true","reason":""}

componentstatus/etcd-0 Healthy {"health":"true","reason":""}

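Initialize the cluster (kubeadm only)

This step comes from the kubeadm installation method and does not belong to the binary deployment described here: no kubeadm_init.yaml is prepared in this guide, and the control-plane components are already running as systemd services. Skip it.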

sed -i "s@##node1##@$node1@g" kubeadm_init.yaml

sed -i "s@##SERVICE_CIDR##@$SERVICE_CIDR@g" kubeadm_init.yaml

sed -i "s@##CLUSTER_CIDR##@$CLUSTER_CIDR@g" kubeadm_init.yaml

sed -i "s@##CLUSTER_KUBERNETES_SVC_IP##@$CLUSTER_KUBERNETES_SVC_IP@g" kubeadm_init.yaml

kubeadm init --config=kubeadm_init.yaml

Configure kubeconfig (this duplicates the earlier "Create the config file" step and can be skipped)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

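Install the network plugin

CNI plugin

Kubernetes has many common network plugins, such as flannel, cilium and calico; when running on a cloud provider, the provider may also offer its own network plugin.

Here we deploy calico.

Download the manifest from the official site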

wget -O /k8s/conf/calico.yaml --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

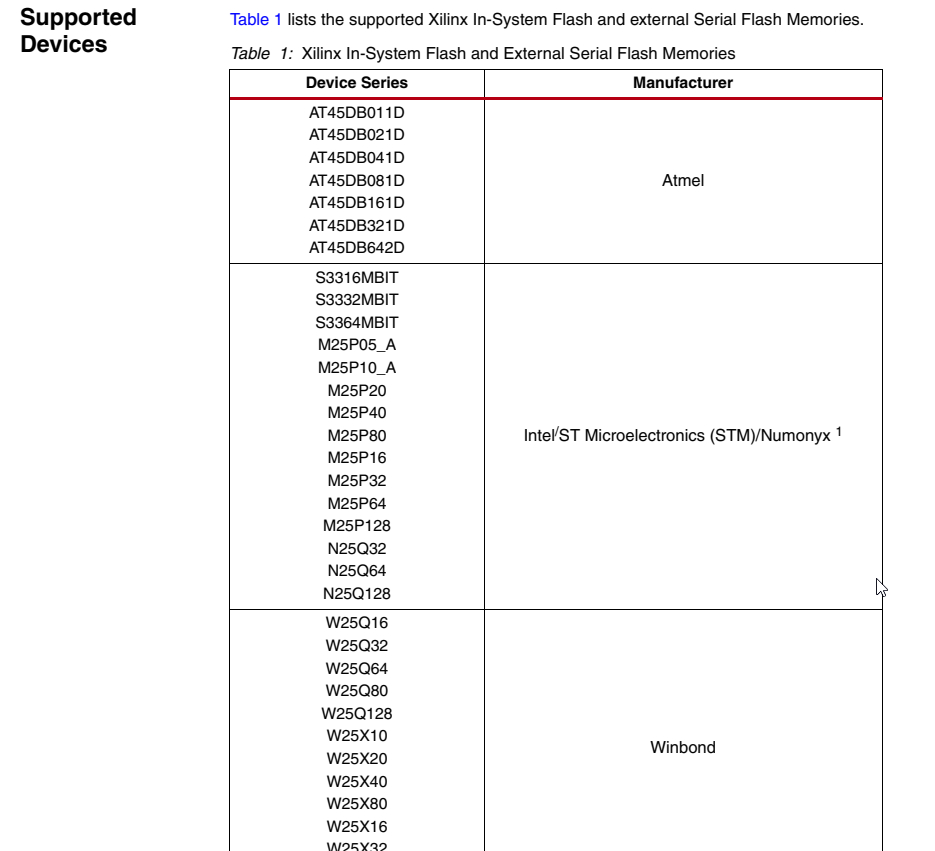

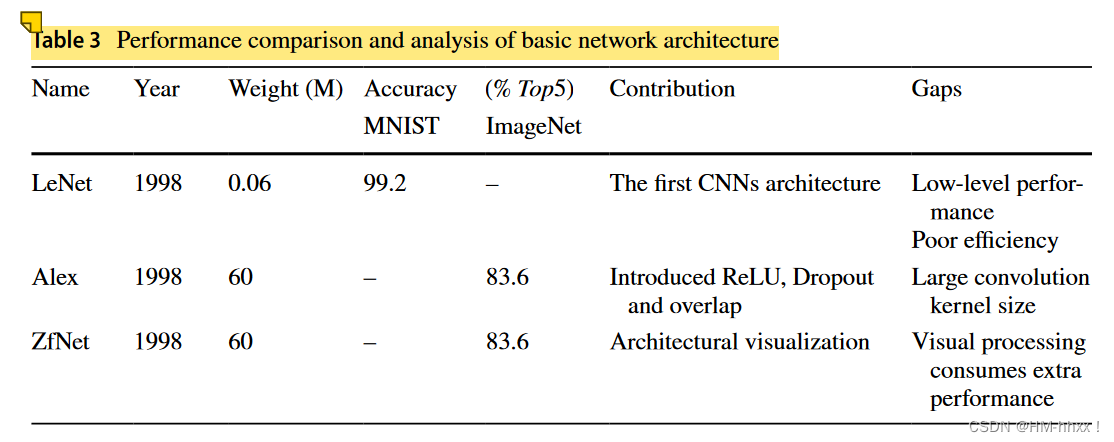

Modify the CIDR

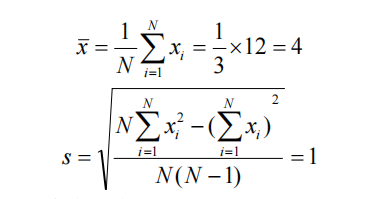

Find these two lines, remove the comment markers, and change value to your own pod CIDR

![image](https://pek3b.qingstor.com/chenjiao-0606//md/202305242140711.png)

# If you are unsure of the value or have forgotten it, check the planning file created earlier, or simply echo it

source /k8s/conf/k8s_env.sh

echo $CLUSTER_CIDR

# After the change, mine looks like this

- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/16"
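Getting this value right matters in this cluster in particular: the Service network here also sits in 192.168.0.0/16 (the kubernetes Service is 192.168.0.1 and kube-dns is 192.168.0.10), the same range as the commented-out value shipped in the manifest. Instead of editing the file by hand, the two lines can be patched with sed. A sketch assuming GNU sed and that the downloaded v3.25 manifest contains the lines exactly as # - name: CALICO_IPV4POOL_CIDR and #   value: "192.168.0.0/16"; set_calico_pool_cidr is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it
# to the given pod CIDR, keeping the original indentation.
# Assumes GNU sed and the two commented lines as shipped (value 192.168.0.0/16).
# Usage: set_calico_pool_cidr /k8s/conf/calico.yaml "$CLUSTER_CIDR"
set_calico_pool_cidr() {
  local manifest=$1 cidr=$2
  sed -i \
    -e 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@' \
    -e "s@#   value: \"192.168.0.0/16\"@  value: \"${cidr}\"@" \
    "$manifest"
  # Fail if either line was not rewritten (e.g. the manifest layout changed)
  grep -q "^ *- name: CALICO_IPV4POOL_CIDR" "$manifest" &&
    grep -qF "value: \"${cidr}\"" "$manifest"
}
```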

Create the calico pods

kubectl apply -f /k8s/conf/calico.yaml

# This command creates a number of CRD resources

If a pod stays in Init, something has gone wrong; check its logs:

kubectl -n kube-system logs calico-node-qrtpz

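# Every calico-node pod has a different suffix; mine is only an example

Error from server: Get "https://10.10.21.223:10250/containerLogs/kube-system/calico-node-qrtpz/calico-node": x509: certificate is valid for 127.0.0.1, not 10.10.21.223

# An x509 error points to a certificate problem, so check the certificate files. The components whose CSR files list IP addresses are apiserver, controller-manager, scheduler and kubelet, so check those CSR files first, e.g. kube-scheduler-csr.json. In this particular error the apiserver is calling the kubelet on port 10250, so the certificate being rejected is that node's kubelet serving certificate (kubelet.pem).

# After finding the problem, fix the CSR file, regenerate and redistribute the certificate, then restart the affected component; that usually resolves it.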
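To find which CSR file lacks the address named in the error, the CSR files can be searched for that IP as a quoted JSON string (quoting avoids 10.10.21.22 matching inside 10.10.21.223). A sketch assuming the CSR files sit in /k8s/ssl with the names used in this guide; csr_missing_ip is a hypothetical helper. Some files appear in the list by design: client-only CSRs such as admin-csr.json and kube-proxy-csr.json carry no node IPs, and each per-node kubelet CSR holds only its own node's IP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list CSR files in a directory that do not contain the
# given IP as a quoted string.
# Usage: csr_missing_ip /k8s/ssl 10.10.21.223
csr_missing_ip() {
  local dir=$1 ip=$2 f
  for f in "$dir"/*-csr*.json; do
    [ -e "$f" ] || continue   # the glob matched nothing
    grep -qF "\"${ip}\"" "$f" || echo "$f"
  done
}
```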

coredns

Prepare the YAML file

source /k8s/conf/k8s_env.sh
cat << EOF > /k8s/conf/coredns.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
  - apiGroups:
      - ""
    resources:
      - endpoints
      - services
      - pods
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - discovery.k8s.io
    resources:
      - endpointslices
    verbs:
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
  - kind: ServiceAccount
    name: coredns
    namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                topologyKey: kubernetes.io/hostname
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: coredns
          image: coredns/coredns:1.9.3
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              memory: 300Mi
            requests:
              cpu: 100m
              memory: 70Mi
          args: [ "-conf", "/etc/coredns/Corefile" ]
          volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
          ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 60
            timeoutSeconds: 5
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /ready
              port: 8181
              scheme: HTTP
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              add:
                - NET_BIND_SERVICE
              drop:
                - all
            readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
              - key: Corefile
                path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: $CLUSTER_KUBERNETES_SVC_IP
  ports:
    - name: dns
      port: 53
      protocol: UDP
    - name: dns-tcp
      port: 53
      protocol: TCP
    - name: metrics
      port: 9153
      protocol: TCP
EOF

Deploy coredns

kubectl apply -f /k8s/conf/coredns.yaml

# The coredns pods only become healthy once the CNI plugin pods are running; until then they stay Pending
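Pods can only resolve Service names if the kubelet's clusterDNS points at the kube-dns Service IP. Both come from $CLUSTER_KUBERNETES_SVC_IP in this guide, but if one of the files is regenerated with a different value they silently diverge. A rough consistency check, assuming the layouts generated above (clusterDNS as a one-item list directly under its key, clusterIP on a single line in coredns.yaml); check_dns_ip is a hypothetical name and the parsing is line-based, not a real YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical consistency check between kubelet-config.yaml and coredns.yaml.
# Usage: check_dns_ip /k8s/conf/kubelet-config.yaml /k8s/conf/coredns.yaml
check_dns_ip() {
  local kubelet_cfg=$1 coredns_yaml=$2 dns_ip svc_ip
  # First list item after the top-level "clusterDNS:" key, e.g. "  - 192.168.0.10"
  dns_ip=$(awk '/^clusterDNS:/ { getline; sub(/^[ -]+/, ""); print; exit }' "$kubelet_cfg")
  # Value of the "clusterIP:" field of the kube-dns Service
  svc_ip=$(awk '$1 == "clusterIP:" { print $2; exit }' "$coredns_yaml")
  if [ -z "$dns_ip" ] || [ "$dns_ip" != "$svc_ip" ]; then
    echo "mismatch: kubelet clusterDNS='$dns_ip' kube-dns clusterIP='$svc_ip'" >&2
    return 1
  fi
  echo "ok: $dns_ip"
}
```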

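Verify that the cluster works

kubectl run mybusybox --image=busybox:1.28 --rm -it --restart=Never    # create a busybox pod and open a shell in it

/ # nslookup kubernetes

/ # nslookup kube-dns.kube-system

# Check that both lookups print normal results

Expected output: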

/ # nslookup kubernetes

Server: 192.168.0.10

Address 1: 192.168.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 192.168.0.1 kubernetes.default.svc.cluster.local

/ # nslookup kube-dns.kube-system

Server: 192.168.0.10

Address 1: 192.168.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 192.168.0.10 kube-dns.kube-system.svc.cluster.local

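Postscript

A binary deployment differs from kubeadm in a few ways; notably, the master nodes of a binary deployment carry no taints, so pods can be scheduled onto them directly. If you need to control scheduling, label and taint the nodes: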

kubectl label nodes 10.10.21.223 kubernetes.io/os=linux

kubectl label nodes 10.10.21.223 node-role.kubernetes.io/control-plane=

kubectl label nodes 10.10.21.223 node-role.kubernetes.io/master=

kubectl taint node 10.10.21.223 node-role.kubernetes.io/master:NoSchedule

# The node argument is the node name, i.e. what kubectl get node -oname prints, without the node/ prefix

kubectl get node

NAME STATUS ROLES AGE VERSION

10.10.21.223 Ready control-plane,master 131m v1.26.5

10.10.21.224 Ready <none> 131m v1.26.5

10.10.21.225 Ready <none> 131m v1.26.5

10.10.21.226 Ready <none> 127m v1.26.5

kubectl describe node 10.10.21.223 |grep -i taints

Taints: node-role.kubernetes.io/master:NoSchedule
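The commands above only touch 10.10.21.223. Because every node is named after its IP (via --hostname-override), the same labels and taint can be applied to all three masters by resolving each MASTERS entry to its IP. A sketch assuming MASTERS holds variable names such as node1 whose values are the node IPs, as in k8s_env.sh; mark_masters is a hypothetical function name, the label and taint keys are the ones used above, and --overwrite makes reruns harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: label and taint every master node.
# Assumes MASTERS=(node1 node2 node3) and node1=10.10.21.223 etc., i.e. each
# entry names a variable holding that node's IP, which is also the node name.
mark_masters() {
  local host ip
  for host in "${MASTERS[@]}"; do
    ip=${!host}   # indirect expansion: node1 -> value of $node1
    kubectl label nodes "$ip" node-role.kubernetes.io/control-plane= --overwrite
    kubectl label nodes "$ip" node-role.kubernetes.io/master= --overwrite
    kubectl taint nodes "$ip" node-role.kubernetes.io/master:NoSchedule --overwrite
  done
}
```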