文章目录

- 逻辑回归和线性回归的区别?

- 正则化逻辑回归

- 逻辑回归中的梯度下降:

- 模型预测案例

- 解决二分类问题:

- 不同的 λ \lambda λ会产生不同的分类结果:

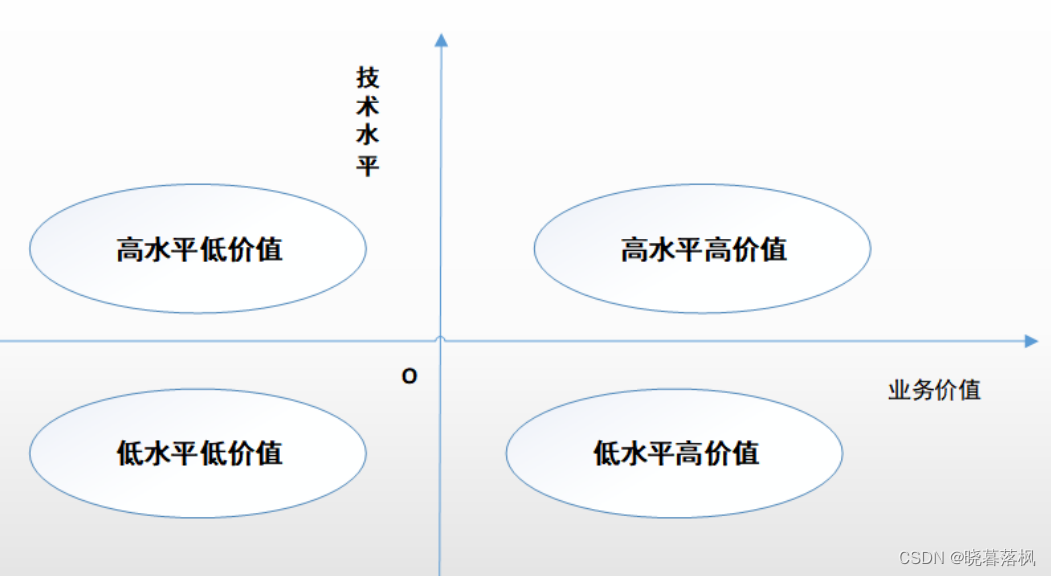

逻辑回归和线性回归的区别?

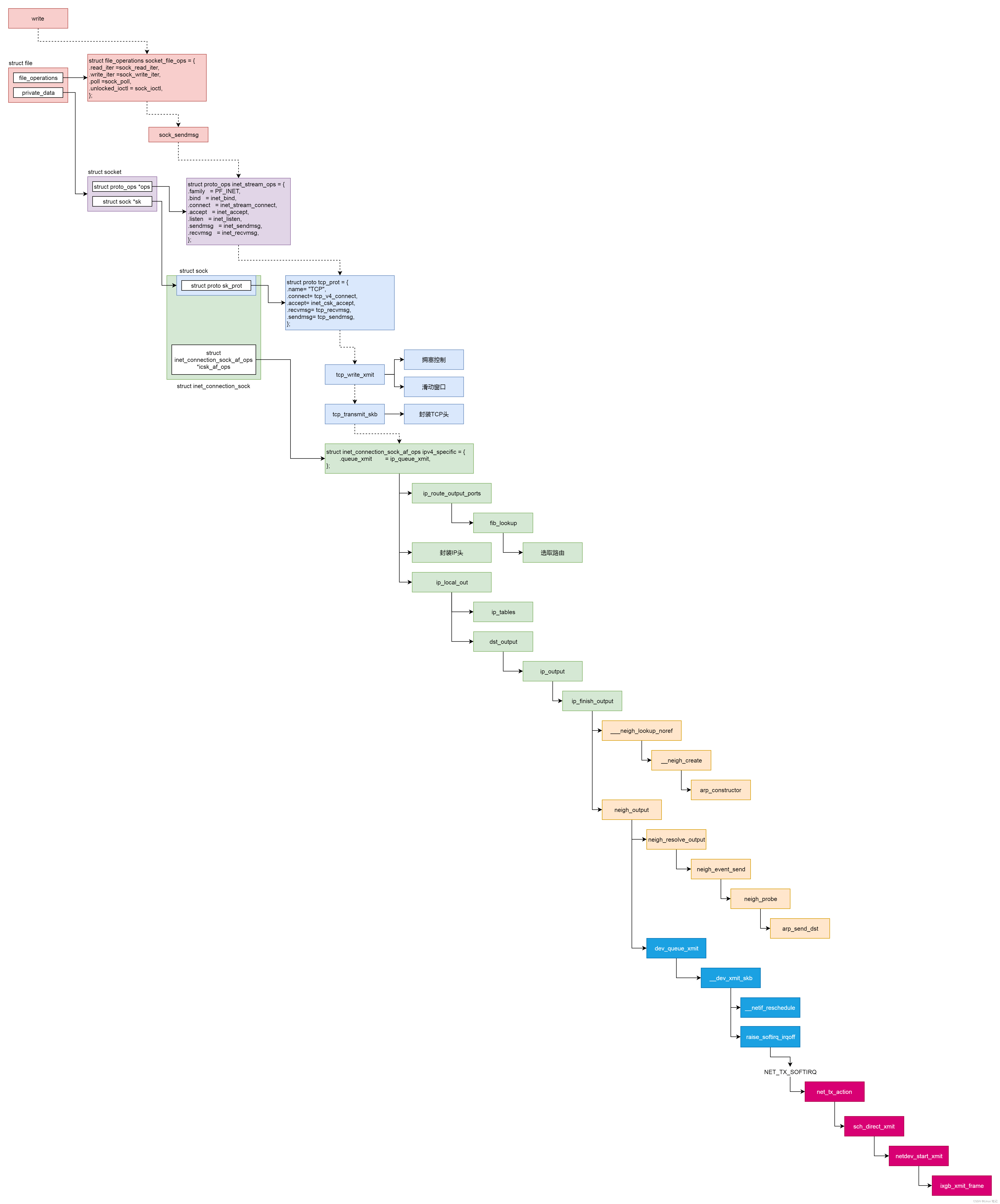

逻辑回归可以理解为线性回归的一个plus版,主要的区别在于逻辑回归使用的是sigmoid函数,而线性回归使用的是线性函数。逻辑回归中通过引入sigmoid函数将线性函数的输出映射到0到1之间的概率值,再加入阈值处理后,使其可以用于二分类问题的预测,如判断一粒肿瘤病理是良性还是恶性。

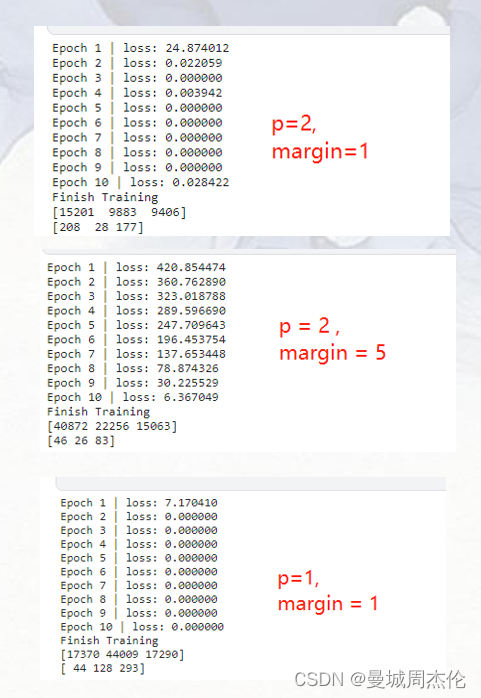

正则化逻辑回归

对于一些复杂的数据,用线性函数进行拟合容易造成欠拟合(underfit),而用过多的高次多项式拟合也可能会造成过拟合(overfit),因此除了学习率 α \alpha α外,通过引入另一个变量 λ \lambda λ,用于将与高次项式点乘的向量 w w w中引起过拟合的系数元素置零,可实现正则化的逻辑回归。

逻辑回归中的梯度下降:

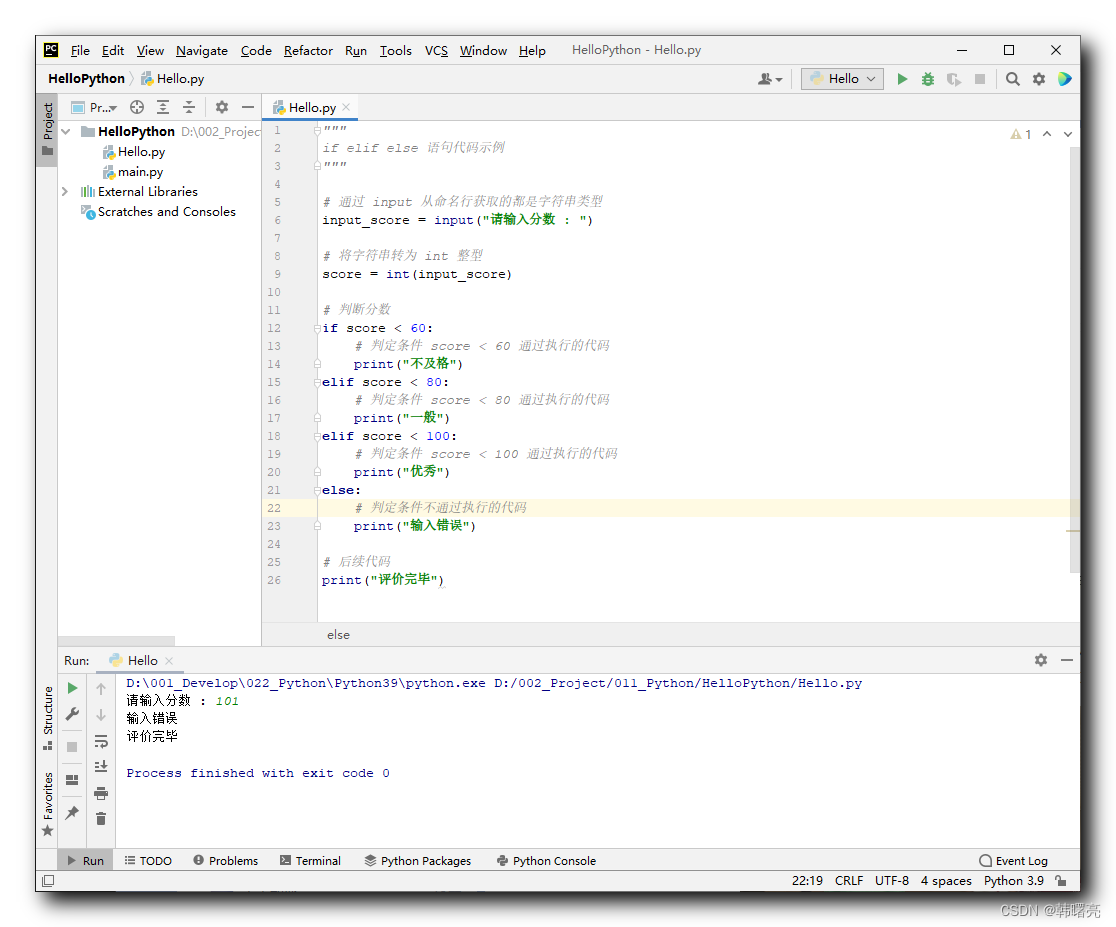

模型预测案例

import numpy as np

import matplotlib.pylab as plt

import scipy.optimize as op

# Load Data

data = np.loadtxt('ex2data1.txt', delimiter=',')

X = data[:, 0:2]

Y = data[:, 2]

# ==================== Part 1: Plotting ====================

print('Plotting data with + indicating (y = 1) examples and o indicating (y = 0) examples.')

# 绘制散点图像

def plotData(x, y):

pos = np.where(y == 1)

neg = np.where(y == 0)

p1 = plt.scatter(x[pos, 0], x[pos, 1], marker='+', s=30, color='b')

p2 = plt.scatter(x[neg, 0], x[neg, 1], marker='o', s=30, color='y')

plt.legend((p1, p2), ('Admitted', 'Not admitted'), loc='upper right', fontsize=8)

plt.xlabel('Exam 1 score')

plt.ylabel('Exam 2 score')

plt.show()

plotData(X, Y)

# _ = input('Press [Enter] to continue.')

# ============ Part 2: Compute Cost and Gradient ============

m, n = np.shape(X)

X = np.concatenate((np.ones((m, 1)), X), axis=1)

init_theta = np.zeros((n+1,))

# sigmoid函数

def sigmoid(z):

g = 1/(1+np.exp(-1*z))

return g

# 计算损失函数和梯度函数

def costFunction(theta, x, y):

m = np.size(y, 0)

h = sigmoid(x.dot(theta))

if np.sum(1-h < 1e-10) != 0:

return np.inf

j = -1/m*(y.dot(np.log(h))+(1-y).dot(np.log(1-h)))

return j

def gradFunction(theta, x, y):

m = np.size(y, 0)

grad = 1 / m * (x.T.dot(sigmoid(x.dot(theta)) - y))

return grad

cost = costFunction(init_theta, X, Y)

grad = gradFunction(init_theta, X, Y)

print('Cost at initial theta (zeros): ', cost)

print('Gradient at initial theta (zeros): ', grad)

# _ = input('Press [Enter] to continue.')

# ============= Part 3: Optimizing using fmin_bfgs =============

# 注:此处与原始的情况有些出入

result = op.minimize(costFunction, x0=init_theta, method='BFGS', jac=gradFunction, args=(X, Y))

theta = result.x

print('Cost at theta found by fmin_bfgs: ', result.fun)

print('theta: ', theta)

# 绘制图像

def plotDecisionBoundary(theta, x, y):

pos = np.where(y == 1)

neg = np.where(y == 0)

p1 = plt.scatter(x[pos, 1], x[pos, 2], marker='+', s=60, color='r')

p2 = plt.scatter(x[neg, 1], x[neg, 2], marker='o', s=60, color='y')

plot_x = np.array([np.min(x[:, 1])-2, np.max(x[:, 1]+2)])

plot_y = -1/theta[2]*(theta[1]*plot_x+theta[0])

plt.plot(plot_x, plot_y)

plt.legend((p1, p2), ('Admitted', 'Not admitted'), loc='upper right', fontsize=8)

plt.xlabel('Exam 1 score')

plt.ylabel('Exam 2 score')

plt.show()

plotDecisionBoundary(theta, X, Y)

# _ = input('Press [Enter] to continue.')

# ============== Part 4: Predict and Accuracies ==============

prob = sigmoid(np.array([1, 45, 85]).dot(theta))

print('For a student with scores 45 and 85, we predict an admission probability of: ', prob)

# 预测给定值

def predict(theta, x):

m = np.size(X, 0)

p = np.zeros((m,))

pos = np.where(x.dot(theta) >= 0)

neg = np.where(x.dot(theta) < 0)

p[pos] = 1

p[neg] = 0

return p

p = predict(theta, X)

print('Train Accuracy: ', np.sum(p == Y)/np.size(Y, 0)) #计算训练准确率(p==Y的个数/Y的总个数)

解决二分类问题:

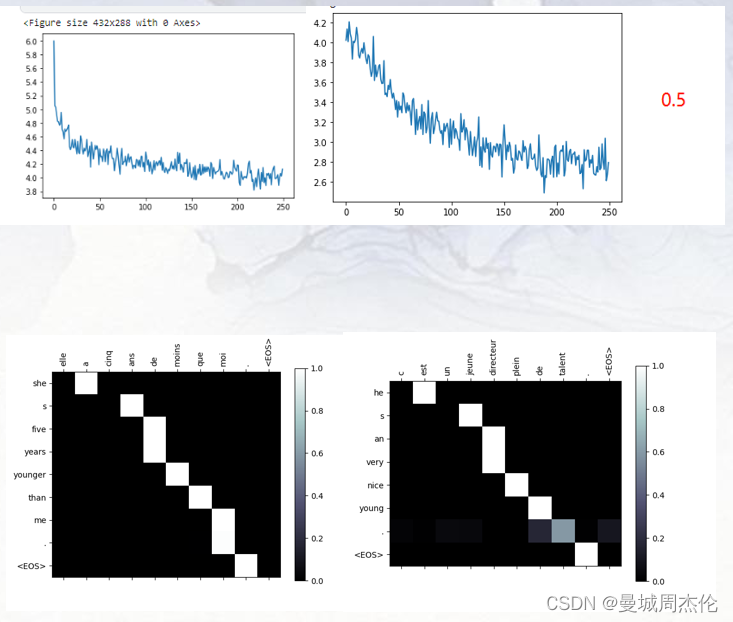

不同的 λ \lambda λ会产生不同的分类结果:

import numpy as np

import matplotlib.pylab as plt

import scipy.optimize as op

# 加载数据

data = np.loadtxt('ex2data2.txt', delimiter=',')

X = data[:, 0:2]

Y = data[:, 2]

def plotData(x, y):

pos = np.where(y == 1)

neg = np.where(y == 0)

p1 = plt.scatter(x[pos, 0], x[pos, 1], marker='+', s=50, color='b')

p2 = plt.scatter(x[neg, 0], x[neg, 1], marker='o', s=50, color='y')

plt.legend((p1, p2), ('Admitted', 'Not admitted'), loc='upper right', fontsize=8)

plt.xlabel('Exam 1 score')

plt.ylabel('Exam 2 score')

plt.show()

plotData(X, Y)

# =========== Part 1: Regularized Logistic Regression ============

# 向高维扩展

def mapFeature(x1, x2):

degree = 6

col = int(degree * (degree + 1) / 2 + degree + 1)

out = np.ones((np.size(x1, 0), col))

count = 1

for i in range(1, degree + 1):

for j in range(i + 1):

out[:, count] = np.power(x1, i - j) * np.power(x2, j)

count += 1

return out

X = mapFeature(X[:, 0], X[:, 1])

init_theta = np.zeros((np.size(X, 1),))

lamd = 10

# sigmoid函数

def sigmoid(z):

g = 1 / (1 + np.exp(-1 * z))

return g

# 损失函数

def costFuncReg(theta, x, y, lam):

m = np.size(y, 0)

h = sigmoid(x.dot(theta))

j = -1 / m * (y.dot(np.log(h)) + (1 - y).dot(np.log(1 - h))) + lam / (2 * m) * theta[1:].dot(theta[1:])

return j

# 梯度函数

def gradFuncReg(theta, x, y, lam):

m = np.size(y, 0)

h = sigmoid(x.dot(theta))

grad = np.zeros(np.size(theta, 0))

grad[0] = 1 / m * (x[:, 0].dot(h - y))

grad[1:] = 1 / m * (x[:, 1:].T.dot(h - y)) + lam * theta[1:] / m

return grad

cost = costFuncReg(init_theta, X, Y, lamd)

print('Cost at initial theta (zeros): ', cost)

# _ = input('Press [Enter] to continue.')

# ============= Part 2: Regularization and Accuracies =============

init_theta = np.zeros((np.size(X, 1),))

lamd = 10

result = op.minimize(costFuncReg, x0=init_theta, method='BFGS', jac=gradFuncReg, args=(X, Y, lamd))

theta = result.x

def plotDecisionBoundary(theta, x, y):

pos = np.where(y == 1)

neg = np.where(y == 0)

p1 = plt.scatter(x[pos, 1], x[pos, 2], marker='+', s=60, color='r')

p2 = plt.scatter(x[neg, 1], x[neg, 2], marker='o', s=60, color='y')

u = np.linspace(-1, 1.5, 50)

v = np.linspace(-1, 1.5, 50)

z = np.zeros((np.size(u, 0), np.size(v, 0)))

for i in range(np.size(u, 0)):

for j in range(np.size(v, 0)):

z[i, j] = mapFeature(np.array([u[i]]), np.array([v[j]])).dot(theta)

z = z.T

[um, vm] = np.meshgrid(u, v)

plt.contour(um, vm, z, levels=[0], lw=2)

plt.legend((p1, p2), ('Admitted', 'Not admitted'), loc='upper right', fontsize=8)

plt.xlabel('Microchip Test 1')

plt.ylabel('Microchip Test 2')

plt.title('lambda = 10')

plt.show()

plotDecisionBoundary(theta, X, Y)

# 预测给定值

def predict(theta, x):

m = np.size(X, 0)

p = np.zeros((m,))

pos = np.where(x.dot(theta) >= 0)

neg = np.where(x.dot(theta) < 0)

p[pos] = 1

p[neg] = 0

return p

p = predict(theta, X)

print('Train Accuracy: ', np.sum(p == Y) / np.size(Y, 0))

λ

=

2

:

\lambda=2:

λ=2:

λ

=

10

:

\lambda=10:

λ=10: