Contents

- I. Cluster Overview
- II. Deploying the RabbitMQ Cluster
- 2.1 Installing NFS
- 2.2 Creating the StorageClass
- 2.3 Deploying the RabbitMQ Cluster
- 2.4 Testing

I. Cluster Overview

- Host plan

| Node | IP |
|---|---|
| k8s-master1 | 192.168.2.245 |
| k8s-master2 | 192.168.2.246 |
| k8s-master3 | 192.168.2.247 |
| k8s-node1 | 192.168.2.248 |
| NFS, Rancher | 192.168.2.251 |

- Versions

| Component | Version |
|---|---|
| CentOS | 7.9 |
| Rancher (single node) | 2.5.12 |
| Kubernetes | 1.20.15 |
| RabbitMQ | 3.7-management |

II. Deploying the RabbitMQ Cluster

2.1 Installing NFS

NFS server IP: 192.168.2.251

NFS client IP: 192.168.2.245

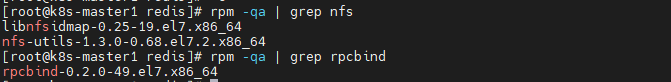

1. Install NFS on the NFS server

Host: NFS, Rancher | 192.168.2.251

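# 1. Install nfs-utils and rpcbind
yum install -y nfs-utils rpcbind

# Check that the packages are installed
rpm -qa | grep nfs
rpm -qa | grep rpcbind

# 2. Create the shared directory
mkdir -p /k8s/rmq-cluster

# 3. Configure NFS: add the export line below to /etc/exports
vim /etc/exports
/k8s/rmq-cluster 192.168.2.0/24(rw,no_root_squash,no_all_squash,sync)

# 4. Start the services
systemctl start nfs
systemctl start rpcbind

# Enable the services at boot
systemctl enable nfs
systemctl enable rpcbind

# 5. Apply the export configuration
exportfs -r

# 6. List the exports
showmount -e localhost

# Output like the following means the export is active
Export list for localhost:
/k8s/rmq-cluster 192.168.2.0/24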

2. Install NFS on the NFS clients

Hosts: every host except the NFS server

yum -y install nfs-utils
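Before moving on, it can help to confirm from a client node that the export is actually visible. The sketch below is only an illustration: `export_listed` is a hypothetical helper name, not part of the original setup. It reads `showmount -e` output on stdin and succeeds when the given path appears in the first column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given path is listed in
# `showmount -e` output read from stdin.
export_listed() {
    local path="$1"
    # Skip the "Export list for ..." header line, then compare the
    # first column (the exported path) exactly.
    awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit found ? 0 : 1 }'
}
```

On a client it could be used as `showmount -e 192.168.2.251 | export_listed /k8s/rmq-cluster && echo "export visible"`.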

2.2 Creating the StorageClass

1. Create the ServiceAccount

vim rabbitmq-rbac.yaml

# Paste the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: rmq-cluster
  namespace: public-service
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rmq-cluster
  namespace: public-service
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rmq-cluster
  namespace: public-service
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rmq-cluster
subjects:
  - kind: ServiceAccount
    name: rmq-cluster
    namespace: public-service

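# Create the resources (the public-service namespace must already exist; it is created in step 1 of section 2.3)
kubectl create -f rabbitmq-rbac.yaml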

2. Create the provisioner

(also referred to as a supplier, provisioning program, or storage allocator)

vim nfs-client-provisioner.yaml

# Paste the following content:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: public-service
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      # Note: the Role above grants only "get" on endpoints; the provisioner normally also
      # needs RBAC access to persistentvolumes, persistentvolumeclaims, storageclasses, and events.
      serviceAccountName: rmq-cluster # the ServiceAccount created above
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # must match the provisioner field of the StorageClass
              value: nfs-client-provisioner
            - name: NFS_SERVER # IP address of the NFS server
              value: 192.168.2.251
            - name: NFS_PATH # shared directory exported by the NFS server
              value: /k8s/rmq-cluster
      volumes:
        - name: nfs-client-root
          nfs: # NFS server IP address and shared directory
            server: 192.168.2.251
            path: /k8s/rmq-cluster

# Create the resources
kubectl create -f nfs-client-provisioner.yaml

3. Create the StorageClass

vim nfs-storageclass.yaml

# Paste the following content:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rabbitmq-storageclass
parameters:
  archiveOnDelete: 'false'
provisioner: nfs-client-provisioner # must match the provisioner's PROVISIONER_NAME
reclaimPolicy: Delete
volumeBindingMode: Immediate

# Create the resource:
kubectl create -f nfs-storageclass.yaml
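One way to check that dynamic provisioning works before deploying RabbitMQ is to request a small throwaway claim from the new StorageClass. The following is a minimal sketch, assuming the `public-service` namespace already exists; the claim name `test-claim` and the file name `test-claim.yaml` are hypothetical and chosen only for illustration.

```shell
#!/usr/bin/env bash
# Write a throwaway PVC manifest that requests storage from the
# rabbitmq-storageclass StorageClass defined above.
# "test-claim" and "test-claim.yaml" are illustrative names only.
cat > test-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  namespace: public-service
spec:
  storageClassName: rabbitmq-storageclass
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF
```

After `kubectl apply -f test-claim.yaml`, running `kubectl get pvc test-claim -n public-service` should show the claim as Bound if the provisioner is healthy; `kubectl delete -f test-claim.yaml` removes it again.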

2.3 Deploying the RabbitMQ Cluster

1. Create the namespace

kubectl create namespace public-service

2. Create the Service that exposes the RabbitMQ cluster and the headless Service

vim rabbitmq-service-lb.yaml

Paste the following content:

kind: Service
apiVersion: v1
metadata:
  labels:
    app: rmq-cluster
    type: LoadBalancer
  name: rmq-cluster-balancer
  namespace: public-service
spec:
  ports:
    - name: http
      port: 15672
      protocol: TCP
      targetPort: 15672
    - name: amqp
      port: 5672
      protocol: TCP
      targetPort: 5672
  selector:
    app: rmq-cluster
  type: NodePort

vim rabbitmq-service-cluster.yaml

Paste the following content:

kind: Service
apiVersion: v1
metadata:
  labels:
    app: rmq-cluster
  name: rmq-cluster
  namespace: public-service
spec:
  clusterIP: None
  ports:
    - name: amqp
      port: 5672
      targetPort: 5672
  selector:
    app: rmq-cluster

# Create the resources (each file needs its own -f flag)
kubectl create -f rabbitmq-service-lb.yaml -f rabbitmq-service-cluster.yaml

3. Create a Secret that stores the RabbitMQ username and password

vim rabbitmq-secret.yaml

Paste the following content:

kind: Secret
apiVersion: v1
metadata:
  name: rmq-cluster-secret
  namespace: public-service
stringData:
  cookie: ERLANG_COOKIE
  password: RABBITMQ_PASS
  url: amqp://RABBITMQ_USER:RABBITMQ_PASS@rmq-cluster-balancer
  username: RABBITMQ_USER
type: Opaque

# Create the resource:
kubectl apply -f rabbitmq-secret.yaml
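The values `ERLANG_COOKIE`, `RABBITMQ_USER`, and `RABBITMQ_PASS` in the manifest read as placeholders to be replaced with real values. As a rough sketch of one way to produce random values for the cookie and the password, the hypothetical helper `random_token` below prints a given number of alphanumeric characters from `/dev/urandom`; the lengths 32 and 20 are arbitrary choices, not requirements from the original.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print N random alphanumeric characters (default 32).
random_token() {
    local length="${1:-32}"
    # LC_ALL=C keeps tr byte-oriented so random bytes are handled safely.
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
    echo
}

# Illustrative values for the Secret's cookie and password fields.
ERLANG_COOKIE="$(random_token 32)"
RABBITMQ_PASS="$(random_token 20)"
```

Whatever values are chosen, `default_user` and `default_pass` in the ConfigMap should carry the same username and password as the Secret.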

4. Create the RabbitMQ configuration file as a ConfigMap

vim rabbitmq-configmap.yaml

Paste the following content:

kind: ConfigMap
apiVersion: v1
metadata:
  name: rmq-cluster-config
  namespace: public-service
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  enabled_plugins: |
    [rabbitmq_management,rabbitmq_peer_discovery_k8s].
  rabbitmq.conf: |
    loopback_users.guest = false
    default_user = RABBITMQ_USER
    default_pass = RABBITMQ_PASS
    ## Clustering
    cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s
    cluster_formation.k8s.host = kubernetes.default.svc.cluster.local
    cluster_formation.k8s.address_type = hostname
    #################################################
    # public-service is rabbitmq-cluster's namespace#
    #################################################
    cluster_formation.k8s.hostname_suffix = .rmq-cluster.public-service.svc.cluster.local
    cluster_formation.node_cleanup.interval = 10
    cluster_formation.node_cleanup.only_log_warning = true
    cluster_partition_handling = autoheal
    ## queue master locator
    queue_master_locator=min-masters

# Create the resource
kubectl apply -f rabbitmq-configmap.yaml
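Because the `RABBITMQ_USER` and `RABBITMQ_PASS` placeholders appear in both the Secret and the ConfigMap, it is easy to leave the two out of sync. A possible approach, sketched here under the assumption that the chosen values contain no characters special to `sed` (`/`, `&`, `\`), is to render both files through the same substitution. The helper name `render_placeholders` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the RABBITMQ_USER / RABBITMQ_PASS placeholders
# in a manifest read from stdin and write the result to stdout.
# Assumes the values contain none of "/", "&", or "\".
render_placeholders() {
    local user="$1" pass="$2"
    sed -e "s/RABBITMQ_USER/${user}/g" -e "s/RABBITMQ_PASS/${pass}/g"
}
```

For example, `render_placeholders admin s3cret < rabbitmq-configmap.yaml > rabbitmq-configmap.rendered.yaml` would produce a rendered copy (the credentials and output file name are illustrative), and the same call could be applied to `rabbitmq-secret.yaml`.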

5. Create the RabbitMQ cluster as a StatefulSet

vim rabbitmq-cluster-sts.yaml

Paste the following content:

kind: StatefulSet
apiVersion: apps/v1
metadata:
  labels:
    app: rmq-cluster
  name: rmq-cluster
  namespace: public-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rmq-cluster
  serviceName: rmq-cluster
  template:
    metadata:
      labels:
        app: rmq-cluster
    spec:
      containers:
        - args:
            - -c
            - cp -v /etc/rabbitmq/rabbitmq.conf ${RABBITMQ_CONFIG_FILE}; exec docker-entrypoint.sh rabbitmq-server
          command:
            - sh
          env:
            - name: RABBITMQ_DEFAULT_USER # the login username and password are both stored in the Secret
              valueFrom:
                secretKeyRef:
                  key: username
                  name: rmq-cluster-secret
            - name: RABBITMQ_DEFAULT_PASS
              valueFrom:
                secretKeyRef:
                  key: password
                  name: rmq-cluster-secret
            - name: RABBITMQ_ERLANG_COOKIE
              valueFrom:
                secretKeyRef:
                  key: cookie
                  name: rmq-cluster-secret
            - name: K8S_SERVICE_NAME
              value: rmq-cluster
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: RABBITMQ_USE_LONGNAME
              value: "true"
            - name: RABBITMQ_NODENAME
              value: rabbit@$(POD_NAME).rmq-cluster.$(POD_NAMESPACE).svc.cluster.local
            - name: RABBITMQ_CONFIG_FILE
              value: /var/lib/rabbitmq/rabbitmq.conf
          image: registry.cn-hongkong.aliyuncs.com/susie/rabbitmq:3.7-management
          imagePullPolicy: IfNotPresent
          livenessProbe:
            exec:
              command:
                - rabbitmqctl
                - status
            initialDelaySeconds: 30
            timeoutSeconds: 10
          name: rabbitmq
          ports:
            - containerPort: 15672
              name: http
              protocol: TCP
            - containerPort: 5672
              name: amqp
              protocol: TCP
          readinessProbe:
            exec:
              command:
                - rabbitmqctl
                - status
            initialDelaySeconds: 10
            timeoutSeconds: 10
          volumeMounts:
            - mountPath: /etc/rabbitmq
              name: config-volume
              readOnly: false
            # Left commented out as in the original; while it stays commented,
            # the PVCs are bound but not mounted at /var/lib/rabbitmq.
            # - mountPath: /var/lib/rabbitmq
            #   name: rabbitmq-storage
            #   readOnly: false
      serviceAccountName: rmq-cluster
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            items:
              - key: rabbitmq.conf
                path: rabbitmq.conf
              - key: enabled_plugins
                path: enabled_plugins
            name: rmq-cluster-config
          name: config-volume
  volumeClaimTemplates:
    - metadata:
        name: rabbitmq-storage
      spec:
        accessModes:
          - ReadWriteMany
        storageClassName: "rabbitmq-storageclass" # name of the StorageClass created earlier
        resources:
          requests:
            storage: 1Gi # requested size

# Create the resource:
kubectl apply -f rabbitmq-cluster-sts.yaml

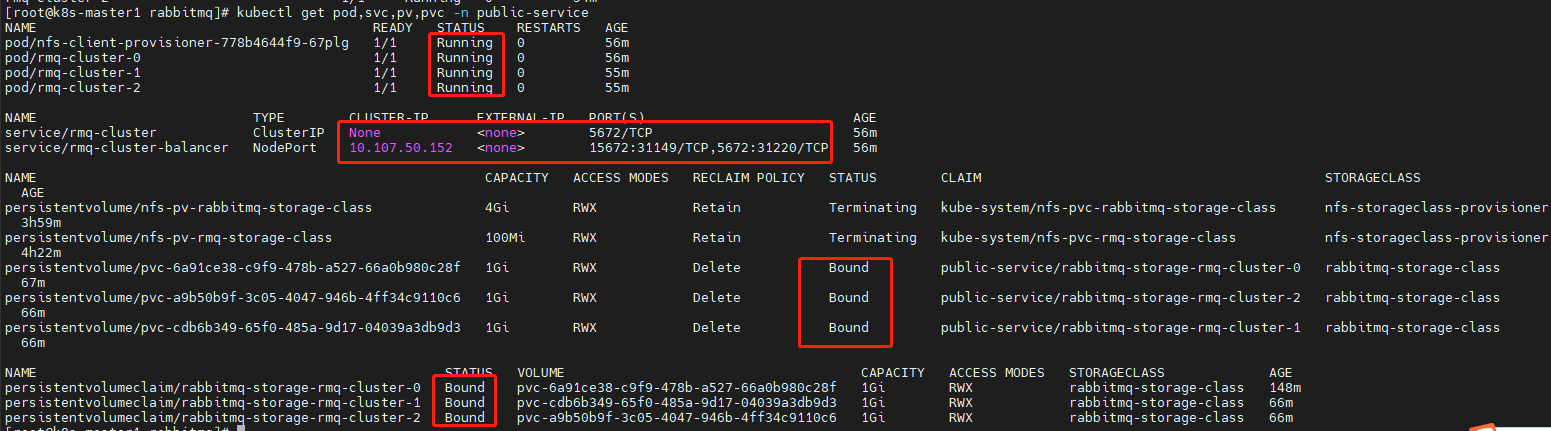

Check the created resources:

kubectl get pod,svc,pv,pvc -n public-service

All 3 pods are Running, and the PVCs have been bound automatically.
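The node names that should join the cluster follow from the manifests: `RABBITMQ_NODENAME` is built from the pod name, and StatefulSet pods are named `rmq-cluster-0`, `rmq-cluster-1`, and so on, with the suffix set by `cluster_formation.k8s.hostname_suffix`. The small sketch below prints the expected names for a given replica count; `expected_nodes` is a hypothetical helper, and the service name and namespace are hard-coded to the values used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the RabbitMQ node name expected for each
# StatefulSet replica, using the service name and namespace from this article.
expected_nodes() {
    local replicas="$1" i=0
    while [ "$i" -lt "$replicas" ]; do
        echo "rabbit@rmq-cluster-${i}.rmq-cluster.public-service.svc.cluster.local"
        i=$((i + 1))
    done
}

expected_nodes 3
```

These names can be compared against the output of `rabbitmqctl cluster_status` when testing the cluster.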

2.4 Testing

1. Enter a pod and check the RabbitMQ cluster status:

[root@k8s-master1 rabbitmq]# kubectl exec -it rmq-cluster-0 -n public-service bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@rmq-cluster-0:/# rabbitmqctl cluster_status

Cluster status of node rabbit@rmq-cluster-0.rmq-cluster.public-service.svc.cluster.local ...

[{nodes,

[{disc,

['rabbit@rmq-cluster-0.rmq-cluster.public-service.svc.cluster.local',

'rabbit@rmq-cluster-1.rmq-cluster.public-service.svc.cluster.local',

'rabbit@rmq-cluster-2.rmq-cluster.public-service.svc.cluster.local']}]},

{running_nodes,

['rabbit@rmq-cluster-2.rmq-cluster.public-service.svc.cluster.local',

'rabbit@rmq-cluster-1.rmq-cluster.public-service.svc.cluster.local',

'rabbit@rmq-cluster-0.rmq-cluster.public-service.svc.cluster.local']},

{cluster_name,

<<"rabbit@rmq-cluster-0.rmq-cluster.public-service.svc.cluster.local">>},

{partitions,[]},

{alarms,

[{'rabbit@rmq-cluster-2.rmq-cluster.public-service.svc.cluster.local',[]},

{'rabbit@rmq-cluster-1.rmq-cluster.public-service.svc.cluster.local',[]},

{'rabbit@rmq-cluster-0.rmq-cluster.public-service.svc.cluster.local',

[]}]}]
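For a scripted check, the `running_nodes` section of this output can be counted instead of read by eye. The sketch below assumes the Erlang-term layout shown above, as printed by RabbitMQ 3.7 (other versions may format the status differently); `count_running_nodes` is a hypothetical helper that reads the status text on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the nodes listed under running_nodes in
# `rabbitmqctl cluster_status` output (RabbitMQ 3.7 Erlang-term layout).
count_running_nodes() {
    awk '
        /[{]running_nodes,/ { in_block = 1 }   # start of the running_nodes list
        /[{]cluster_name,/  { in_block = 0 }   # the next section ends the list
        in_block { n += gsub(/rabbit@/, "&") } # count node names on this line
        END { print n + 0 }
    '
}
```

It could be fed with `kubectl exec rmq-cluster-0 -n public-service -- rabbitmqctl cluster_status | count_running_nodes`, which avoids the deprecated form of `kubectl exec` noted in the warning above.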

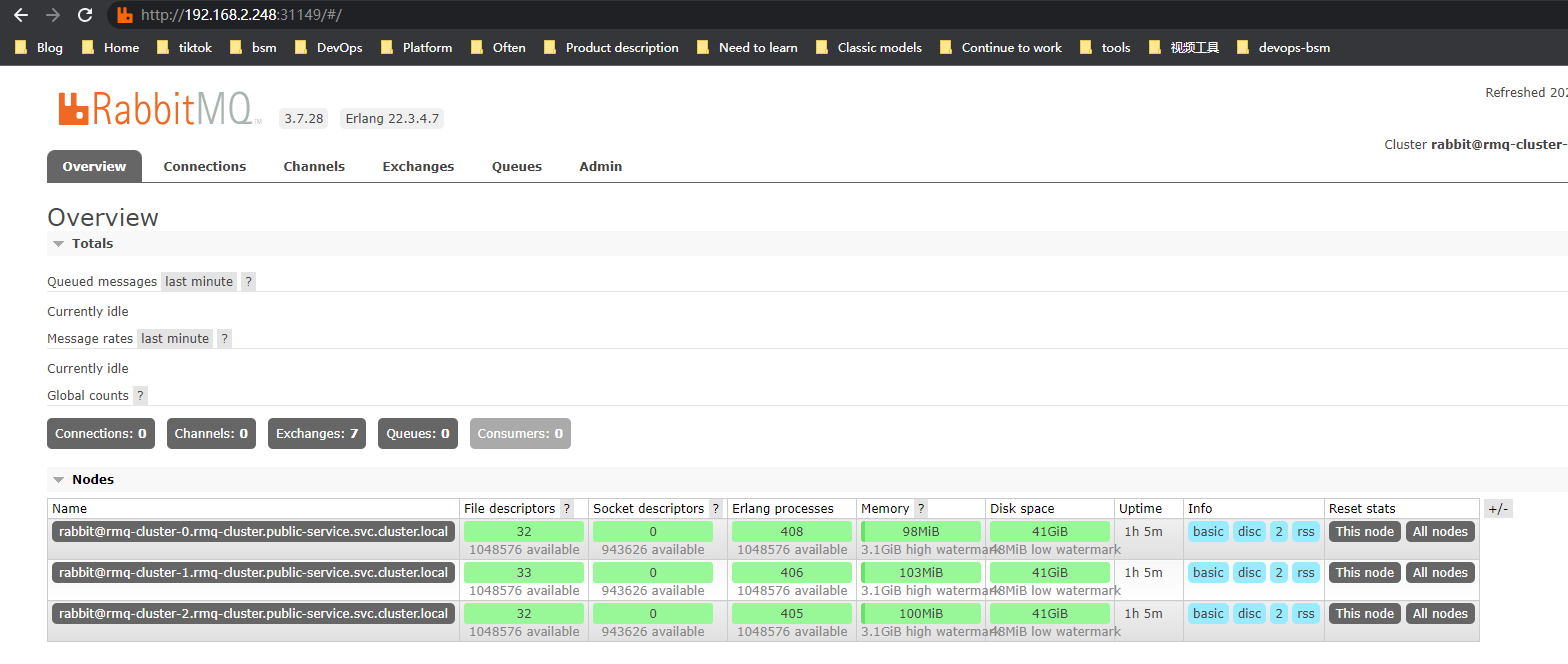

2. Check the cluster status in the RabbitMQ Management UI

Open http://<node IP>:<NodePort exposed by the Service> to log in to the management UI; here it is http://192.168.2.248:31149/
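The NodePort is assigned by Kubernetes, so the port will differ between clusters. As a small sketch, the hypothetical helper `management_url` below builds the URL from a node IP and a port; the values passed in are the ones from this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the management UI URL from a node IP and NodePort.
management_url() {
    local node_ip="$1" node_port="$2"
    echo "http://${node_ip}:${node_port}/"
}

management_url 192.168.2.248 31149
```

The port itself can be looked up with `kubectl get svc rmq-cluster-balancer -n public-service -o jsonpath='{.spec.ports[?(@.name=="http")].nodePort}'`.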

Deployment complete.
