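Table of contents

- 1. Data preparation
- 1.1 Converting VOC to COCO
- 2. Slicing images with sahi
- 2.1 Slicing analysis and visualization
- 2.2 Slicing with the full command
- 2.3 Checking the status of each dataset
- 2.4 Filtering out unsuitable boxes
- 3. Converting to VOC
- 4. Generating the training data
- 5. Model training
- 6. Model inference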

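Small-object detection with PicoDet.

This post uses ping-pong ball detection as the small-object example and covers the whole workflow, from data preparation to training.

1. Data preparation

There are two input resolutions this time, 416 and 256. The multi-scale slice sizes for each are:

416: [320, 352, 384, 416, 448, 480, 512], used with the config file configs/picodet/picodet_s_416_voc_npu_pingpang_20230322.yml

256: [160, 192, 224, 256, 288, 320, 352], used with the config file configs/picodet/picodet_s_416_voc_npu_pingpang_20230322.yml

Each list holds the training sizes used for its resolution.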

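The multi-scale setting in the config file looks like this (the 416 list is commented out while the 256 list is active):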

batch_transforms:

# - BatchRandomResize: {target_size: [320, 352, 384, 416, 448, 480, 512], random_size: True, random_interp: True, keep_ratio: False}

- BatchRandomResize: {target_size: [160, 192, 224, 256, 288, 320, 352], random_size: True, random_interp: True, keep_ratio: False}
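Both lists follow the same pattern: the base resolution plus or minus up to three steps of 32 pixels. The snippet below is a small sketch of that pattern, which may be handy when adding another input size. The helper name `multiscale_sizes` and its default arguments are illustrative assumptions, not part of PaddleDetection.

```python
def multiscale_sizes(base, step=32, n_steps=3):
    """Hypothetical helper: sizes from base - n_steps*step to base + n_steps*step."""
    return [base + step * k for k in range(-n_steps, n_steps + 1)]


print(multiscale_sizes(416))  # [320, 352, 384, 416, 448, 480, 512]
print(multiscale_sizes(256))  # [160, 192, 224, 256, 288, 320, 352]
```

The printed lists match the two `target_size` lists in the config above.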

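The data preparation pipeline is:

VOC -> COCO -> slicing with sahi -> back to VOC -> generate the training data

The official tooling mainly supports COCO, but we use VOC so that the annotations can be inspected with labelImg.

Original data location: dataset/pqdetection_voc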

pqdetection_voc
|----images
| |----a.jpg
| |----b.jpg
|----labels
| |----a.xml
| |----b.xml
|----label_list.txt # contains the class names

1.1 Converting VOC to COCO

I cover this in another blog post; here is a standalone piece of the code.

import os
import glob
import json
from tqdm import tqdm
import xml.etree.ElementTree as ET

def voc_xmls_to_cocojson(annpath, imgpath, labelpath, output_dir, output_file):
    """Convert VOC XML annotations to COCO JSON; a single JSON file is produced.

    Args:
        annpath (str): directory containing the XML annotation files
        imgpath (str): directory containing the images
        labelpath (str): path to the label list file
        output_dir (str): directory in which to save the JSON
        output_file (str): JSON file name
    """
    annotation_paths = glob.glob(os.path.join(annpath, '*.xml'))
    with open(labelpath, 'r') as f:
        labels_str = f.read().split()
    labels_ids = list(range(1, len(labels_str) + 1))
    label2id = dict(zip(labels_str, labels_ids))
    output_json_dict = {
        "images": [],
        "type": "instances",
        "annotations": [],
        "categories": []
    }
    bnd_id = 1  # bounding box start id
    im_id = 0
    print('Start converting !')
    # Iterate over the XML files rather than the images: images without objects
    # have no XML file. This needs one glob per XML to find the matching image,
    # which slows the conversion down somewhat.
    for xml_path in tqdm(annotation_paths):
        # Find the image that shares the XML file's base name (any extension)
        stem = os.path.splitext(os.path.basename(xml_path))[0]
        im_dir = glob.glob(os.path.join(imgpath, stem + ".*"))[0]
        img_name = os.path.basename(im_dir)
        # Read annotation xml
        ann_tree = ET.parse(xml_path)
        ann_root = ann_tree.getroot()
        size = ann_root.find('size')
        width = float(size.findtext('width'))
        height = float(size.findtext('height'))
        img_info = {
            'file_name': img_name,
            'height': height,
            'width': width,
            'id': im_id
        }
        output_json_dict['images'].append(img_info)
        for obj in ann_root.findall('object'):
            label = obj.findtext('name')
            assert label in label2id, "label is not in label2id."
            category_id = label2id[label]
            bndbox = obj.find('bndbox')
            xmin = float(bndbox.findtext('xmin'))
            ymin = float(bndbox.findtext('ymin'))
            xmax = float(bndbox.findtext('xmax'))
            ymax = float(bndbox.findtext('ymax'))
            assert xmax > xmin and ymax > ymin, "Box size error."
            o_width = xmax - xmin
            o_height = ymax - ymin
            ann = {
                'area': o_width * o_height,
                'iscrowd': 0,
                'bbox': [xmin, ymin, o_width, o_height],  # COCO format: x, y, width, height
                'category_id': category_id,
                'ignore': 0,
            }
            ann.update({'image_id': im_id, 'id': bnd_id})
            output_json_dict['annotations'].append(ann)
            bnd_id = bnd_id + 1
        im_id += 1
    for label, label_id in label2id.items():
        category_info = {'supercategory': 'none', 'id': label_id, 'name': label}
        output_json_dict['categories'].append(category_info)
    output_file = os.path.join(output_dir, output_file)
    os.makedirs(output_dir, exist_ok=True)
    with open(output_file, 'w') as f:
        output_json = json.dumps(output_json_dict)
        f.write(output_json)

voc_xmls_to_cocojson(annpath='dataset/pqdetection_voc/labels/',
                     imgpath="dataset/pqdetection_voc/images",
                     labelpath="dataset/pqdetection_voc/label_list.txt",
                     output_dir="dataset/pqdetection_coco/",
                     output_file='pddetection.json')

Start converting !

100%|██████████| 1477/1477 [00:00<00:00, 11965.53it/s]
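The key step in this conversion is turning a VOC corner box (xmin, ymin, xmax, ymax) into a COCO box [x, y, width, height]. Here is a minimal sketch of just that step on a hand-written XML fragment, using only the standard library. The sample values and the helper name `voc_object_to_coco_bbox` are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hand-written sample annotation (values are illustrative only)
SAMPLE_XML = """
<annotation>
  <size><width>1920</width><height>1080</height></size>
  <object>
    <name>ball</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>112</xmax><ymax>214</ymax></bndbox>
  </object>
</annotation>
"""


def voc_object_to_coco_bbox(obj):
    """Convert one VOC <object> element to a COCO [x, y, w, h] box."""
    box = obj.find("bndbox")
    xmin, ymin, xmax, ymax = (
        float(box.findtext(key)) for key in ("xmin", "ymin", "xmax", "ymax")
    )
    return [xmin, ymin, xmax - xmin, ymax - ymin]


root = ET.fromstring(SAMPLE_XML)
bboxes = [voc_object_to_coco_bbox(obj) for obj in root.findall("object")]
print(bboxes)  # [[100.0, 200.0, 12.0, 14.0]]
```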

Check the state of the converted data. sahi provides many COCO utility functions; see its GitHub repository for details.

from sahi.utils.coco import Coco

coco=Coco.from_coco_dict_or_path("dataset/pqdetection_coco/pddetection.json")

coco.stats

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 1477/1477 [00:00<00:00, 5365.99it/s]

{'num_images': 1477,

'num_annotations': 3924,

'num_categories': 1,

'num_negative_images': 0,

'num_images_per_category': {'ball': 1477},

'num_annotations_per_category': {'ball': 3924},

'min_num_annotations_in_image': 1,

'max_num_annotations_in_image': 25,

'avg_num_annotations_in_image': 2.6567366283006093,

'min_annotation_area': 36,

'max_annotation_area': 1158852,

'avg_annotation_area': 5425.130988786952,

'min_annotation_area_per_category': {'ball': 36},

'max_annotation_area_per_category': {'ball': 1158852}}
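A few of these numbers can be cross-checked without sahi by counting directly in the COCO dictionary. The sketch below only borrows the key names from the output above; it assumes the area is simply width times height of each box and is not a reimplementation of how sahi computes its statistics. The function name `basic_coco_stats` and the toy data are illustrative.

```python
from collections import Counter


def basic_coco_stats(coco_dict):
    """Count images, annotations and box areas in a COCO-style dict."""
    per_image = Counter(ann["image_id"] for ann in coco_dict["annotations"])
    counts = [per_image.get(img["id"], 0) for img in coco_dict["images"]]
    # Assumption: area is bbox width * height
    areas = [ann["bbox"][2] * ann["bbox"][3] for ann in coco_dict["annotations"]]
    return {
        "num_images": len(counts),
        "num_annotations": len(areas),
        "num_negative_images": sum(1 for c in counts if c == 0),
        "max_num_annotations_in_image": max(counts, default=0),
        "min_annotation_area": min(areas, default=0),
        "max_annotation_area": max(areas, default=0),
    }


# Toy dataset: three images, the last one without any annotation
toy = {
    "images": [{"id": 0}, {"id": 1}, {"id": 2}],
    "annotations": [
        {"image_id": 0, "bbox": [0, 0, 6, 6]},
        {"image_id": 0, "bbox": [10, 10, 10, 20]},
        {"image_id": 1, "bbox": [5, 5, 3, 3]},
    ],
}
stats = basic_coco_stats(toy)
print(stats)
```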

2. Slicing images with sahi

Slices are made for two scales, 416 and 256.

from sahi.slicing import slice_coco

from sahi.utils.file import load_json

from PIL import Image,ImageDraw

import matplotlib.pyplot as plt

2.1 Slicing analysis and visualization

Try it on one of the images:

coco_dict = load_json('dataset/pqdetection_coco/pddetection.json')
f, axarr = plt.subplots(1, 1, figsize=(12, 12))
# read img
img_ind = 100
img = Image.open(os.path.join('dataset/pqdetection_voc/images', coco_dict['images'][img_ind]["file_name"]))
# iterate over the annotations and draw the boxes that belong to this image
for ann_ind in range(len(coco_dict['annotations'])):
    if coco_dict["annotations"][ann_ind]['image_id'] == coco_dict['images'][img_ind]['id']:
        xywh = coco_dict["annotations"][ann_ind]["bbox"]
        xyxy = [xywh[0], xywh[1], xywh[0] + xywh[2], xywh[1] + xywh[3]]
        ImageDraw.Draw(img).rectangle(xyxy, width=2)
axarr.imshow(img)

The image above marks the ping-pong balls with white boxes. More slicing examples can be found at https://github.com/obss/sahi/blob/main/demo/slicing.ipynb
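The drawing loop scans every annotation to find the boxes of one image, which is fine for a single picture but wasteful when browsing many. A possible alternative, sketched here with a toy dictionary, is to group the boxes by `image_id` once and look them up afterwards. The helper name `index_boxes_by_image` is hypothetical.

```python
from collections import defaultdict


def index_boxes_by_image(coco_dict):
    """Map image_id -> list of [xmin, ymin, xmax, ymax] boxes."""
    boxes = defaultdict(list)
    for ann in coco_dict["annotations"]:
        x, y, w, h = ann["bbox"]  # COCO boxes are x, y, width, height
        boxes[ann["image_id"]].append([x, y, x + w, y + h])
    return boxes


# Toy data standing in for the loaded COCO json
toy = {
    "annotations": [
        {"image_id": 7, "bbox": [10, 20, 5, 5]},
        {"image_id": 3, "bbox": [0, 0, 2, 4]},
        {"image_id": 7, "bbox": [30, 40, 6, 8]},
    ]
}
boxes_by_image = index_boxes_by_image(toy)
print(boxes_by_image[7])  # [[10, 20, 15, 25], [30, 40, 36, 48]]
```

Each entry of `boxes_by_image[image_id]` can then be passed straight to `ImageDraw.Draw(img).rectangle(...)`.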

2.2 Slicing with the full command

Slice the images into 256x256 tiles with an overlap of 0.2:

coco_dict, coco_path = slice_coco(
    coco_annotation_file_path="dataset/pqdetection_coco/pddetection.json",
    image_dir='dataset/pqdetection_voc/images',
    output_coco_annotation_file_name='sliced_coco.json',
    ignore_negative_samples=False,
    output_dir='sliced_coco',
    slice_height=256,
    slice_width=256,
    overlap_height_ratio=0.2,
    overlap_width_ratio=0.2,
    min_area_ratio=0.1,
    verbose=True)

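If there are many images, it is best to run the code above from a script. min_area_ratio works as follows: if (area of the annotation that remains inside the slice) / (area of the original annotation) < min_area_ratio, the annotation is dropped from that slice.

ignore_negative_samples controls whether slices that contain no annotation are discarded. The default is False, meaning they are kept; if we only care about slices that contain boxes, it can be set to True.

The slicing above takes a while.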
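To make the min_area_ratio rule concrete, here is a small sketch that computes which fraction of a box survives inside a slice and applies the threshold. It follows the wording of sahi's docstring but is not sahi's own code; in particular, how sahi treats a ratio exactly equal to the threshold is an assumption here. The function names are hypothetical.

```python
def kept_area_ratio(bbox_xywh, slice_xyxy):
    """Fraction of the box area that lies inside the slice."""
    x, y, w, h = bbox_xywh
    sx1, sy1, sx2, sy2 = slice_xyxy
    # Intersection rectangle between the box and the slice
    ix1, iy1 = max(x, sx1), max(y, sy1)
    ix2, iy2 = min(x + w, sx2), min(y + h, sy2)
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    return inter / (w * h)


def keep_annotation(bbox_xywh, slice_xyxy, min_area_ratio=0.1):
    """Assumed rule: keep the box when enough of it remains in the slice."""
    return kept_area_ratio(bbox_xywh, slice_xyxy) >= min_area_ratio


tile = [0, 0, 256, 256]
print(kept_area_ratio([250, 100, 20, 20], tile))  # 0.3 -> kept
print(kept_area_ratio([255, 100, 20, 20], tile))  # 0.05 -> dropped
```

A 20x20 ball cut so that only a 1-pixel-wide strip remains is therefore removed, while one with 6 of its 20 columns inside the tile stays.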

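The output folder contains:

sliced_coco:
2023_0302_164336_005_510_0_0_256_256.jpg
2023_0302_164336_005_510_0_205_256_461.jpg, and so on

The trailing numbers are the left, top, right and bottom coordinates of the slice in the original image.

There is also a file named sliced_coco.json_coco.json. Evidently sahi appends "_coco.json" to the output_coco_annotation_file_name we passed ('sliced_coco.json'), so the name is better given without the .json extension.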
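Because the slice coordinates are embedded in the file name, a box in a slice can be mapped back to the original image. The sketch below assumes the naming pattern seen above (original name followed by xmin, ymin, xmax, ymax, separated by underscores); both helper names are hypothetical.

```python
import os


def parse_slice_name(filename):
    """Split '<original>_<xmin>_<ymin>_<xmax>_<ymax>.jpg' into its parts."""
    stem = os.path.splitext(filename)[0]
    original, xmin, ymin, xmax, ymax = stem.rsplit("_", 4)
    return original, (int(xmin), int(ymin), int(xmax), int(ymax))


def slice_box_to_original(bbox_xywh, filename):
    """Shift a COCO box from slice coordinates to original-image coordinates."""
    _, (xmin, ymin, _, _) = parse_slice_name(filename)
    x, y, w, h = bbox_xywh
    return [x + xmin, y + ymin, w, h]


name = "2023_0302_164336_005_510_0_205_256_461.jpg"
print(parse_slice_name(name))
print(slice_box_to_original([10, 20, 8, 8], name))  # [10, 225, 8, 8]
```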

Check the dataset status after slicing:

from sahi.utils.coco import Coco

coco=Coco.from_coco_dict_or_path("sliced_coco/sliced_coco.json_coco.json")

coco.stats

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 34451/34451 [00:01<00:00, 33605.44it/s]

{'num_images': 34451,

'num_annotations': 10429,

'num_categories': 1,

'num_negative_images': 27773,

'num_images_per_category': {'ball': 6678},

'num_annotations_per_category': {'ball': 10429},

'min_num_annotations_in_image': 0,

'max_num_annotations_in_image': 11,

'avg_num_annotations_in_image': 1.5616951182988918,

'min_annotation_area': 9,

'max_annotation_area': 65536,

'avg_annotation_area': 3454.7506951769105,

'min_annotation_area_per_category': {'ball': 9},

'max_annotation_area_per_category': {'ball': 65536}}

Now set ignore_negative_samples to True:

coco_dict, coco_path = slice_coco(
    coco_annotation_file_path="dataset/pqdetection_coco/pddetection.json",
    image_dir='dataset/pqdetection_voc/images',
    output_coco_annotation_file_name='sliced1',
    ignore_negative_samples=True,
    output_dir='sliced1_coco',
    slice_height=256,
    slice_width=256,
    overlap_height_ratio=0.2,
    overlap_width_ratio=0.2,
    min_area_ratio=0.1,
    verbose=True)

Then check the status:

from sahi.utils.coco import Coco

coco=Coco.from_coco_dict_or_path("sliced1_coco/sliced1_coco.json")

coco.stats

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 6678/6678 [00:00<00:00, 8963.77it/s]

{'num_images': 6678,

'num_annotations': 10429,

'num_categories': 1,

'num_negative_images': 0,

'num_images_per_category': {'ball': 6678},

'num_annotations_per_category': {'ball': 10429},

'min_num_annotations_in_image': 1,

'max_num_annotations_in_image': 11,

'avg_num_annotations_in_image': 1.5616951182988918,

'min_annotation_area': 9,

'max_annotation_area': 65536,

'avg_annotation_area': 3454.7506951769105,

'min_annotation_area_per_category': {'ball': 9},

'max_annotation_area_per_category': {'ball': 65536}}

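PaddleDetection ships its own command, "python tools/slice_image.py --image_dir --json_path --output_dir --slice_size --overlap_ratio", but it lacks ignore_negative_samples. For dense small objects this parameter can be left at False, but our small objects occupy only a small part of each original image and there is far too much background, so True is the better choice. The parameters of sahi's slice command are: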

from sahi.scripts.slice_coco import slice

help(slice)

Help on function slice in module sahi.scripts.slice_coco:

slice(image_dir: str, dataset_json_path: str, slice_size: int = 512, overlap_ratio: float = 0.2, ignore_negative_samples: bool = False, output_dir: str = 'runs/slice_coco', min_area_ratio: float = 0.1)

Args:

image_dir (str): directory for coco images

dataset_json_path (str): file path for the coco dataset json file

slice_size (int)

overlap_ratio (float): slice overlap ratio

ignore_negative_samples (bool): ignore images without annotation

output_dir (str): output export dir

min_area_ratio (float): If the cropped annotation area to original

annotation ratio is smaller than this value, the annotation

is filtered out. Default 0.1.

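The slicing command is shown below. The larger overlap_ratio is, the denser the slicing and the slower it runs. When the dataset is very small and the background around the boxes varies a lot, this parameter can be set higher. The command only prints a progress bar for monitoring.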

slice(
    image_dir="dataset/pqdetection_voc/images/",
    dataset_json_path="dataset/pqdetection_coco/pddetection.json",
    output_dir="dataset/pqdetection_sliced/pq_256_075",
    slice_size=256,
    overlap_ratio=0.75,
    ignore_negative_samples=True,
    min_area_ratio=0.1)
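To see why a larger overlap_ratio makes slicing denser and slower, it helps to count the tile positions along one axis. The sketch below is a simplified model of a sliding window whose last tile is shifted back to stay inside the image; it approximates, but is not copied from, sahi's slicing logic. The 1920x1080 image size is a hypothetical example, and `slice_starts` is an illustrative name.

```python
def slice_starts(length, size, overlap_ratio):
    """Start offsets of tiles of `size` pixels along an axis of `length` pixels."""
    step = size - int(size * overlap_ratio)
    starts = []
    pos = 0
    while True:
        if pos + size >= length:
            # Last tile: shift it back so it ends at the image border
            starts.append(max(length - size, 0))
            break
        starts.append(pos)
        pos += step
    return starts


# Hypothetical 1920x1080 image, 256-pixel tiles
for ratio in (0.2, 0.75):
    xs = slice_starts(1920, 256, ratio)
    ys = slice_starts(1080, 256, ratio)
    print(ratio, len(xs) * len(ys))
```

With overlap 0.2 the step is 205 pixels, which matches the second file name seen earlier (a slice starting at y=205). Under this model the hypothetical image yields 60 tiles at overlap 0.2 and 378 at overlap 0.75, roughly six times as many.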

The generated file structure is:

dataset/pqdetection_sliced/pq_256_075
|
|----pddetection_images_256_075/*.jpg
|
|----pddetection_256_075.json

The status of the dataset is:

from sahi.utils.coco import Coco

coco=Coco.from_coco_dict_or_path("dataset/pqdetection_sliced/pq_256_075/pddetection_256_075.json")

coco.stats

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 44695/44695 [00:05<00:00, 8403.17it/s]

{'num_images': 44695,

'num_annotations': 67009,

'num_categories': 1,

'num_negative_images': 0,

'num_images_per_category': {'ball': 44695},

'num_annotations_per_category': {'ball': 67009},

'min_num_annotations_in_image': 1,

'max_num_annotations_in_image': 12,

'avg_num_annotations_in_image': 1.4992504754446807,

'min_annotation_area': 6,

'max_annotation_area': 65536,

'avg_annotation_area': 3860.9125938306793,

'min_annotation_area_per_category': {'ball': 6},

'max_annotation_area_per_category': {'ball': 65536}}

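The other scales are sliced the same way. The process is slow, so it can be spread over multiple processes. Below is the code I ended up using.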

from multiprocessing import Process
import os
from sahi.scripts.slice_coco import slice

sizes = [160, 192, 224, 256, 288, 320, 352, 384, 416, 448, 480, 512]
ratios = [0.75] * len(sizes)
out_size_ratio = [[f"dataset/pqdetection_sliced/pq_{size}_{int(ratio*100)}", size, ratio] for size, ratio in zip(sizes, ratios)]

def slice_my(image_dir="dataset/pqdetection_voc/images/",
             dataset_json_path="dataset/pqdetection_coco/pddetection.json",
             output_dir="dataset/pqdetection_sliced/pq_256_075",
             slice_size=256,
             overlap_ratio=0.75,
             ignore_negative_samples=True,
             min_area_ratio=0.1):
    # Print the process id so that a process can be stopped by hand if needed
    print(f"output_dir:{output_dir} pid:{os.getpid()}")
    slice(
        image_dir=image_dir,
        dataset_json_path=dataset_json_path,
        output_dir=output_dir,
        slice_size=slice_size,
        overlap_ratio=overlap_ratio,
        ignore_negative_samples=ignore_negative_samples,
        min_area_ratio=min_area_ratio)

out_size_ratio

[['dataset/pqdetection_sliced/pq_160_75', 160, 0.75],

['dataset/pqdetection_sliced/pq_192_75', 192, 0.75],

['dataset/pqdetection_sliced/pq_224_75', 224, 0.75],

['dataset/pqdetection_sliced/pq_256_75', 256, 0.75],

['dataset/pqdetection_sliced/pq_288_75', 288, 0.75],

['dataset/pqdetection_sliced/pq_320_75', 320, 0.75],

['dataset/pqdetection_sliced/pq_352_75', 352, 0.75],

['dataset/pqdetection_sliced/pq_384_75', 384, 0.75],

['dataset/pqdetection_sliced/pq_416_75', 416, 0.75],

['dataset/pqdetection_sliced/pq_448_75', 448, 0.75],

['dataset/pqdetection_sliced/pq_480_75', 480, 0.75],

['dataset/pqdetection_sliced/pq_512_75', 512, 0.75]]

kwargs=dict(

image_dir="dataset/pqdetection_voc/images/",

dataset_json_path="dataset/pqdetection_coco/pddetection.json",

output_dir=out_size_ratio[0][0],

slice_size=out_size_ratio[0][1],

overlap_ratio=out_size_ratio[0][2],

ignore_negative_samples=True,

min_area_ratio=0.1 )

kwargs

{'image_dir': 'dataset/pqdetection_voc/images/',

'dataset_json_path': 'dataset/pqdetection_coco/pddetection.json',

'output_dir': 'dataset/pqdetection_sliced/pq_160_75',

'slice_size': 160,

'overlap_ratio': 0.75,

'ignore_negative_samples': True,

'min_area_ratio': 0.1}

processer = []
# Start one process per slice size
for i in range(len(out_size_ratio)):
    kwargs = dict(
        image_dir="dataset/pqdetection_voc/images/",
        dataset_json_path="dataset/pqdetection_coco/pddetection.json",
        output_dir=out_size_ratio[i][0],
        slice_size=out_size_ratio[i][1],
        overlap_ratio=out_size_ratio[i][2],
        ignore_negative_samples=True,
        min_area_ratio=0.1)
    p = Process(target=slice_my, kwargs=kwargs)
    processer.append(p)
    p.start()
for p in processer:  # wait for all processes to finish
    p.join()

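The output of the code above is rather messy, and running it in Jupyter causes problems, so it should be put in a Python script. The final file structure is: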

pqdetection_sliced

├── pq_160_75

│ ├── pddetection_160_075.json

│ └── pddetection_images_160_075

├── pq_192_75

│ ├── pddetection_192_075.json

│ └── pddetection_images_192_075

├── pq_224_75

│ ├── pddetection_224_075.json

│ └── pddetection_images_224_075

├── pq_256_75

│ ├── pddetection_256_075.json

│ └── pddetection_images_256_075

├── pq_288_75

│ ├── pddetection_288_075.json

│ └── pddetection_images_288_075

├── pq_320_75

│ ├── pddetection_320_075.json

│ └── pddetection_images_320_075

├── pq_352_75

│ ├── pddetection_352_075.json

│ └── pddetection_images_352_075

├── pq_384_75

│ ├── pddetection_384_075.json

│ └── pddetection_images_384_075

├── pq_416_75

│ ├── pddetection_416_075.json

│ └── pddetection_images_416_075

├── pq_448_75

│ ├── pddetection_448_075.json

│ └── pddetection_images_448_075

├── pq_480_75

│ ├── pddetection_480_075.json

│ └── pddetection_images_480_075

└── pq_512_75

├── pddetection_512_075.json

└── pddetection_images_512_075

25 directories, 12 files
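Starting twelve processes at once can overload a machine with fewer cores. One possible variation, sketched below, is a worker pool with a bounded number of processes. This is an alternative to the Process-based code above, not what was actually run for this post. `run_job` is a stand-in worker: in real use its body would call `slice_my(**job)`. The names `build_jobs`, `run_job` and `run_all` are hypothetical.

```python
from multiprocessing import Pool


def build_jobs(sizes, ratio=0.75, root="dataset/pqdetection_sliced"):
    """One keyword-argument dict per slice size, mirroring out_size_ratio."""
    return [
        dict(
            output_dir=f"{root}/pq_{size}_{int(ratio * 100)}",
            slice_size=size,
            overlap_ratio=ratio,
        )
        for size in sizes
    ]


def run_job(job):
    """Stand-in worker; replace the body with slice_my(**job) in real use."""
    return job["output_dir"]


def run_all(jobs, workers=4):
    """Run the jobs with at most `workers` processes alive at a time."""
    with Pool(processes=workers) as pool:
        return pool.map(run_job, jobs)


jobs = build_jobs([160, 192, 224, 256])
print(jobs[0])
```

In a script, `run_all(jobs, workers=4)` would be called under an `if __name__ == "__main__":` guard, which is required on platforms that spawn rather than fork worker processes.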

2.3 Checking the status of each dataset

import os
import glob
from pprint import pprint
from sahi.utils.coco import Coco

for i in out_size_ratio:
    jsonpath = glob.glob(os.path.join(i[0], '*.json'))[0]
    coco = Coco.from_coco_dict_or_path(jsonpath)
    pprint(f"{os.path.basename(i[0])}:")
    pprint(coco.stats)

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 60068/60068 [00:05<00:00, 10029.56it/s]

'pq_160_75:'

{'avg_annotation_area': 2752.9663472653942,

'avg_num_annotations_in_image': 1.296097755876673,

'max_annotation_area': 25600,

'max_annotation_area_per_category': {'ball': 25600},

'max_num_annotations_in_image': 9,

'min_annotation_area': 6,

'min_annotation_area_per_category': {'ball': 6},

'min_num_annotations_in_image': 1,

'num_annotations': 77854,

'num_annotations_per_category': {'ball': 77854},

'num_categories': 1,

'num_images': 60068,

'num_images_per_category': {'ball': 60068},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 52959/52959 [00:05<00:00, 9287.43it/s]

'pq_192_75:'

{'avg_annotation_area': 3227.159324899192,

'avg_num_annotations_in_image': 1.3626956702354651,

'max_annotation_area': 36864,

'max_annotation_area_per_category': {'ball': 36864},

'max_num_annotations_in_image': 10,

'min_annotation_area': 7,

'min_annotation_area_per_category': {'ball': 7},

'min_num_annotations_in_image': 1,

'num_annotations': 72167,

'num_annotations_per_category': {'ball': 72167},

'num_categories': 1,

'num_images': 52959,

'num_images_per_category': {'ball': 52959},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 47829/47829 [00:05<00:00, 8223.08it/s]

'pq_224_75:'

{'avg_annotation_area': 3784.9258265381764,

'avg_num_annotations_in_image': 1.41023228585168,

'max_annotation_area': 50176,

'max_annotation_area_per_category': {'ball': 50176},

'max_num_annotations_in_image': 10,

'min_annotation_area': 6,

'min_annotation_area_per_category': {'ball': 6},

'min_num_annotations_in_image': 1,

'num_annotations': 67450,

'num_annotations_per_category': {'ball': 67450},

'num_categories': 1,

'num_images': 47829,

'num_images_per_category': {'ball': 47829},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 44695/44695 [00:05<00:00, 8464.05it/s]

'pq_256_75:'

{'avg_annotation_area': 3860.9125938306793,

'avg_num_annotations_in_image': 1.4992504754446807,

'max_annotation_area': 65536,

'max_annotation_area_per_category': {'ball': 65536},

'max_num_annotations_in_image': 12,

'min_annotation_area': 6,

'min_annotation_area_per_category': {'ball': 6},

'min_num_annotations_in_image': 1,

'num_annotations': 67009,

'num_annotations_per_category': {'ball': 67009},

'num_categories': 1,

'num_images': 44695,

'num_images_per_category': {'ball': 44695},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 38428/38428 [00:04<00:00, 8022.50it/s]

'pq_288_75:'

{'avg_annotation_area': 4340.086797086578,

'avg_num_annotations_in_image': 1.5470230040595399,

'max_annotation_area': 82944,

'max_annotation_area_per_category': {'ball': 82944},

'max_num_annotations_in_image': 13,

'min_annotation_area': 7,

'min_annotation_area_per_category': {'ball': 7},

'min_num_annotations_in_image': 1,

'num_annotations': 59449,

'num_annotations_per_category': {'ball': 59449},

'num_categories': 1,

'num_images': 38428,

'num_images_per_category': {'ball': 38428},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 33576/33576 [00:04<00:00, 7668.71it/s]

'pq_320_75:'

{'avg_annotation_area': 4339.065280923504,

'avg_num_annotations_in_image': 1.6305694543721705,

'max_annotation_area': 102400,

'max_annotation_area_per_category': {'ball': 102400},

'max_num_annotations_in_image': 14,

'min_annotation_area': 6,

'min_annotation_area_per_category': {'ball': 6},

'min_num_annotations_in_image': 1,

'num_annotations': 54748,

'num_annotations_per_category': {'ball': 54748},

'num_categories': 1,

'num_images': 33576,

'num_images_per_category': {'ball': 33576},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 34133/34133 [00:04<00:00, 7323.31it/s]

'pq_352_75:'

{'avg_annotation_area': 4425.495999587941,

'avg_num_annotations_in_image': 1.7063838514047989,

'max_annotation_area': 123904,

'max_annotation_area_per_category': {'ball': 123904},

'max_num_annotations_in_image': 16,

'min_annotation_area': 8,

'min_annotation_area_per_category': {'ball': 8},

'min_num_annotations_in_image': 1,

'num_annotations': 58244,

'num_annotations_per_category': {'ball': 58244},

'num_categories': 1,

'num_images': 34133,

'num_images_per_category': {'ball': 34133},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 28585/28585 [00:04<00:00, 6942.04it/s]

'pq_384_75:'

{'avg_annotation_area': 4594.21496125687,

'avg_num_annotations_in_image': 1.801399335315725,

'max_annotation_area': 147456,

'max_annotation_area_per_category': {'ball': 147456},

'max_num_annotations_in_image': 17,

'min_annotation_area': 8,

'min_annotation_area_per_category': {'ball': 8},

'min_num_annotations_in_image': 1,

'num_annotations': 51493,

'num_annotations_per_category': {'ball': 51493},

'num_categories': 1,

'num_images': 28585,

'num_images_per_category': {'ball': 28585},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 24423/24423 [00:03<00:00, 7061.30it/s]

'pq_416_75:'

{'avg_annotation_area': 5258.0641016721165,

'avg_num_annotations_in_image': 1.7655079228595996,

'max_annotation_area': 173056,

'max_annotation_area_per_category': {'ball': 173056},

'max_num_annotations_in_image': 16,

'min_annotation_area': 9,

'min_annotation_area_per_category': {'ball': 9},

'min_num_annotations_in_image': 1,

'num_annotations': 43119,

'num_annotations_per_category': {'ball': 43119},

'num_categories': 1,

'num_images': 24423,

'num_images_per_category': {'ball': 24423},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 24265/24265 [00:03<00:00, 6604.52it/s]

'pq_448_75:'

{'avg_annotation_area': 4893.795871259963,

'avg_num_annotations_in_image': 1.8925200906655677,

'max_annotation_area': 200704,

'max_annotation_area_per_category': {'ball': 200704},

'max_num_annotations_in_image': 16,

'min_annotation_area': 13,

'min_annotation_area_per_category': {'ball': 13},

'min_num_annotations_in_image': 1,

'num_annotations': 45922,

'num_annotations_per_category': {'ball': 45922},

'num_categories': 1,

'num_images': 24265,

'num_images_per_category': {'ball': 24265},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 18265/18265 [00:02<00:00, 7345.95it/s]

'pq_480_75:'

{'avg_annotation_area': 5578.763276481661,

'avg_num_annotations_in_image': 1.9196277032575966,

'max_annotation_area': 230400,

'max_annotation_area_per_category': {'ball': 230400},

'max_num_annotations_in_image': 17,

'min_annotation_area': 8,

'min_annotation_area_per_category': {'ball': 8},

'min_num_annotations_in_image': 1,

'num_annotations': 35062,

'num_annotations_per_category': {'ball': 35062},

'num_categories': 1,

'num_images': 18265,

'num_images_per_category': {'ball': 18265},

'num_negative_images': 0}

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 17726/17726 [00:02<00:00, 6080.24it/s]

'pq_512_75:'

{'avg_annotation_area': 5312.11180071894,

'avg_num_annotations_in_image': 2.0088006318402347,

'max_annotation_area': 262144,

'max_annotation_area_per_category': {'ball': 262144},

'max_num_annotations_in_image': 17,

'min_annotation_area': 7,

'min_annotation_area_per_category': {'ball': 7},

'min_num_annotations_in_image': 1,

'num_annotations': 35608,

'num_annotations_per_category': {'ball': 35608},

'num_categories': 1,

'num_images': 17726,

'num_images_per_category': {'ball': 17726},

'num_negative_images': 0}

2.4 Filtering out unsuitable boxes

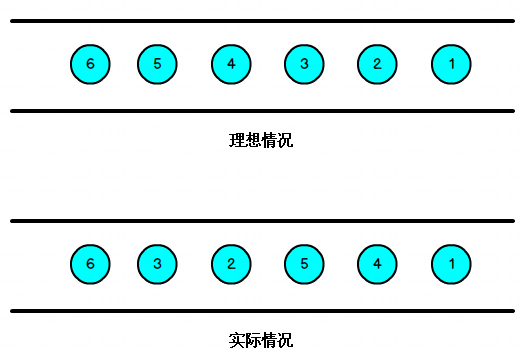

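Slicing inevitably produces tiles that contain only part of a target object; these need to be filtered out.

The main filters are: boxes that extend beyond the image width or height, and box area.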

import os
import glob
from sahi.utils.coco import Coco
from sahi.utils.file import save_json

for i in out_size_ratio:
    datapath = i[0]
    print(f"Start do {datapath}")
    # Note: on a re-run the *_filtered.json written below also matches this pattern
    jsonfile = glob.glob(os.path.join(datapath, '*.json'))[0]
    jsonname = os.path.splitext(os.path.basename(jsonfile))[0]
    savejson = os.path.join(datapath, jsonname + '_filtered.json')
    coco = Coco.from_coco_dict_or_path(jsonfile)
    print(f" init coco img_num:{len(coco.json['images'])} annottations num:{len(coco.json['annotations'])}")
    # These are annotated slices, so clip the boxes directly to the image width and height
    coco = coco.get_coco_with_clipped_bboxes()
    # Filter by area
    intervals_per_category = {"ball": {"min": 9, "max": 2500}}
    area_filtered_coco = coco.get_area_filtered_coco(intervals_per_category=intervals_per_category)
    print(f" area filtered coco img_num:{len(area_filtered_coco.json['images'])} annottations num:{len(area_filtered_coco.json['annotations'])}")
    save_json(area_filtered_coco.json, savejson)

Start do dataset/pqdetection_sliced/pq_160_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 60068/60068 [00:06<00:00, 9621.37it/s]

init coco img_num:60068 annottations num:77854

area filtered coco img_num:47588 annottations num:62160

Start do dataset/pqdetection_sliced/pq_192_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 52959/52959 [00:06<00:00, 8777.43it/s]

init coco img_num:52959 annottations num:72167

area filtered coco img_num:42357 annottations num:58173

Start do dataset/pqdetection_sliced/pq_224_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 47829/47829 [00:05<00:00, 8405.05it/s]

init coco img_num:47829 annottations num:67450

area filtered coco img_num:38547 annottations num:54666

Start do dataset/pqdetection_sliced/pq_256_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 44695/44695 [00:05<00:00, 7904.13it/s]

init coco img_num:44695 annottations num:67009

area filtered coco img_num:36976 annottations num:55907

Start do dataset/pqdetection_sliced/pq_288_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 38428/38428 [00:05<00:00, 7582.49it/s]

init coco img_num:38428 annottations num:59449

area filtered coco img_num:31925 annottations num:49597

Start do dataset/pqdetection_sliced/pq_320_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 33576/33576 [00:04<00:00, 7037.32it/s]

init coco img_num:33576 annottations num:54748

area filtered coco img_num:28577 annottations num:46788

Start do dataset/pqdetection_sliced/pq_352_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 34133/34133 [00:04<00:00, 6937.45it/s]

init coco img_num:34133 annottations num:58244

area filtered coco img_num:29367 annottations num:50455

Start do dataset/pqdetection_sliced/pq_384_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 28585/28585 [00:04<00:00, 6415.28it/s]

init coco img_num:28585 annottations num:51493

area filtered coco img_num:24956 annottations num:45075

Start do dataset/pqdetection_sliced/pq_416_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 24423/24423 [00:03<00:00, 6390.16it/s]

init coco img_num:24423 annottations num:43119

area filtered coco img_num:21233 annottations num:37535

Start do dataset/pqdetection_sliced/pq_448_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 24265/24265 [00:03<00:00, 7433.75it/s]

init coco img_num:24265 annottations num:45922

area filtered coco img_num:21508 annottations num:40713

Start do dataset/pqdetection_sliced/pq_480_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 18265/18265 [00:03<00:00, 5657.12it/s]

init coco img_num:18265 annottations num:35062

area filtered coco img_num:16187 annottations num:30991

Start do dataset/pqdetection_sliced/pq_512_75

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 17726/17726 [00:03<00:00, 5450.05it/s]

init coco img_num:17726 annottations num:35608

area filtered coco img_num:15916 annottations num:31855
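For readers who want to see what the two filters amount to, here is a plain-Python sketch of clipping boxes to the image and keeping only those whose area falls in the 9 to 2500 range used above. It is an illustration of the idea, not sahi's implementation; whether sahi treats the interval bounds as inclusive is an assumption. The function name `clip_and_filter` and the toy data are hypothetical.

```python
def clip_and_filter(annotations, image_sizes, min_area=9, max_area=2500):
    """Clip COCO boxes to their image and keep those within the area range."""
    kept = []
    for ann in annotations:
        width, height = image_sizes[ann["image_id"]]
        x, y, w, h = ann["bbox"]
        # Clip the box to the image rectangle
        x1, y1 = max(0, x), max(0, y)
        x2, y2 = min(width, x + w), min(height, y + h)
        new_w, new_h = x2 - x1, y2 - y1
        if new_w <= 0 or new_h <= 0:
            continue  # box lies entirely outside the image
        area = new_w * new_h
        if min_area <= area <= max_area:  # assumed inclusive bounds
            kept.append({**ann, "bbox": [x1, y1, new_w, new_h], "area": area})
    return kept


sizes = {0: (160, 160)}  # image_id -> (width, height)
anns = [
    {"image_id": 0, "bbox": [150, 10, 20, 20]},  # sticks out on the right
    {"image_id": 0, "bbox": [0, 0, 160, 160]},   # fills the tile: too large
    {"image_id": 0, "bbox": [10, 10, 2, 2]},     # too small
    {"image_id": 0, "bbox": [20, 20, 30, 30]},   # fine
]
result = clip_and_filter(anns, sizes)
print([a["bbox"] for a in result])  # [[150, 10, 10, 20], [20, 20, 30, 30]]
```

The upper bound is what removes boxes that fill a whole tile, which explains why max_annotation_area equalled the tile area (for example 65536 for 256x256) before filtering.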

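3. Converting to VOC

Converting to VOC makes it easy to inspect the annotations with labelImg and also makes it easy to combine datasets, at the cost of extra storage. COCO datasets can of course be combined directly as well, like this: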

from sahi.utils.coco import Coco

from sahi.utils.file import save_json

coco_1 = Coco.from_coco_dict_or_path("dataset/pqdetection_sliced/pq_160_75/pddetection_160_075.json","dataset/pqdetection_sliced/pq_160_75/pddetection_images_160_075")

coco_2 = Coco.from_coco_dict_or_path("dataset/pqdetection_sliced/pq_192_75/pddetection_192_075.json","dataset/pqdetection_sliced/pq_192_75/pddetection_images_192_075")

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 60068/60068 [00:06<00:00, 8639.41it/s]

indexing coco dataset annotations...

Loading coco annotations: 100%|██████████| 52959/52959 [00:06<00:00, 8750.60it/s]

coco_1.merge(coco_2)

save_json(coco_1.json,"merged_coco.json")

'desired_name2id' is not specified, combining all categories.

Categories are formed as:

[{'id': 0, 'name': 'ball', 'supercategory': 'ball'}]

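Note that the original JSON files store the bare image file names, whereas after merging the file names become absolute paths, presumably to guard against files with the same name. If we merged the JSON files first and converted to VOC afterwards, same-named files would still be awkward to handle, so we convert each dataset separately; that way identical file names cause no trouble. The final image file names have the form <original file name>_xmin_ymin_xmax_ymax.jpg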

from pycocotools.coco import COCO

import os, cv2, shutil

from lxml import etree, objectify

from tqdm import tqdm

from PIL import Image

import numpy as np

import time

import json

import argparse

def cover_copy(src,dst):

'''

src和dst都必须是文件,该函数是执行覆盖操作

'''

if os.path.exists(dst):

os.remove(dst)

shutil.copy(src,dst)

else:

shutil.copy(src,dst)

def coco2voc(imgpath='VOCdevkit/COCO_VOC',jsonpath=None,savepath='COCO'):

"""

savepath:用来存放转换后数据和标注文件

imgpath:用来指定原始COCO数据集的存放位置

"""

img_savepath= os.path.join(savepath,'images')

ann_savepath=os.path.join(savepath,'labels')

for p in [img_savepath,ann_savepath]:

if os.path.exists(p):

shutil.rmtree(p)

os.makedirs(p)

else:

os.makedirs(p)

start = time.time()

no_ann=[] #用来存放没有标注数据的图片的id,并将这些图片复制到results文件夹中

not_rgb=[] #是灰度图,同样将其保存

print('loading annotations into memory...')

tic = time.time()

with open(jsonpath, 'r') as f:

dataset_ann = json.load(f)

assert type(

dataset_ann

) == dict, 'annotation file format {} not supported'.format(

type(dataset))

print('Done (t={:0.2f}s)'.format(time.time() - tic))

coco = COCO(jsonpath)

classes = dict()

for cat in coco.dataset['categories']:

classes[cat['id']] = cat['name']

imgIds = coco.getImgIds()

# imgIds=imgIds[0:1000]#测试用,抽取10张图片,看下存储效果

for imgId in tqdm(imgIds):

img = coco.loadImgs(imgId)[0]

filename = img['file_name']

filepath=os.path.join(imgpath,filename)

annIds = coco.getAnnIds(imgIds=img['id'], iscrowd=None)

anns = coco.loadAnns(annIds)

if not len(anns):

# print(f"{dataset}:{imgId}该文件没有标注信息,将其复制到{dataset}_noann_result中,以使查看")

no_ann.append(imgId)

result_path = os.path.join(savepath,"noann_result")

dest_path = os.path.join(result_path,filename)

if not os.path.exists(result_path):

os.makedirs(result_path)

cover_copy(filepath,dest_path)

continue #如果没有标注信息,则把没有标注信息的图片移动到相关结果文件 noann_result中,来进行查看 ,然后返回做下一张图

#有标注信息,接着往下走,获取标注信息

objs = []

for ann in anns:

name = classes[ann['category_id']]

if 'bbox' in ann:

# print('bbox in ann',imgId)

bbox = ann['bbox']

xmin = (int)(bbox[0])

ymin = (int)(bbox[1])

xmax = (int)(bbox[2] + bbox[0])

ymax = (int)(bbox[3] + bbox[1])

obj = [name, 1.0, xmin, ymin, xmax, ymax]

#标错框在这里

if not(xmin-xmax==0 or ymin-ymax==0):

objs.append(obj)

else:

print(f"{imgId} bbox在标注文件中不存在")# 单张图有多个标注框,某个类别没有框

annopath = os.path.join(ann_savepath,filename[:-3] + "xml") #生成的xml文件保存路径

dst_path = os.path.join(img_savepath,filename)

im = Image.open(filepath)

image = np.array(im).astype(np.uint8)

if im.mode != "RGB":

# if img.shape[-1] != 3:

# print(f"{dataset}:{imgId}该文件非rgb图,其复制到{dataset}_notrgb_result中,以使查看")

# print(f"img.shape{image.shape} and img.mode{im.mode}")

not_rgb.append(imgId)

result_path = os.path.join(savepath,"notrgb_result")

dest_path = os.path.join(result_path,filename)

if not os.path.exists(result_path):

os.makedirs(result_path)

cover_copy(filepath,dest_path) #复制到notrgb_result来方便查看

im=im.convert('RGB')

image = np.array(im).astype(np.uint8)

im.save(dst_path,quality=95)#图片经过转换后,放到我们需要的位置片

im.close()

else:

cover_copy(filepath, dst_path)#把原始图像复制到目标文件夹

E = objectify.ElementMaker(annotate=False)

anno_tree = E.annotation(

E.folder('VOC'),

E.filename(filename),

E.source(

E.database('COCO'),

E.annotation('VOC'),

E.image('COCO')

),

E.size(

E.width(image.shape[1]),

E.height(image.shape[0]),

E.depth(image.shape[2])

),

E.segmented(0)

)

for obj in objs:

E2 = objectify.ElementMaker(annotate=False)

anno_tree2 = E2.object(

E.name(obj[0]),

E.pose(),

E.truncated("0"),

E.difficult(0),

E.bndbox(

E.xmin(obj[2]),

E.ymin(obj[3]),

E.xmax(obj[4]),

E.ymax(obj[5])

)

)

anno_tree.append(anno_tree2)

etree.ElementTree(anno_tree).write(annopath, pretty_print=True)

print(f"该数据集有{len(no_ann)}/{len(imgIds)}张图片没有instance标注信息,已经这些图片复制到{savepath}/noann_result中以使进行查看")

print(f"该数据集有{len(not_rgb)}/{len(imgIds)}张图片是非RGB图像,已经这些图片复制到{savepath}/notrgb_result中以使进行查看")

duriation = time.time()-start

print(f"数据集处理完成用时{round(duriation/60,2)}分")

for i in out_size_ratio:

coco_path = i[0]

name = os.path.basename(coco_path)

for p in os.listdir(coco_path):

path = os.path.join(coco_path,p)

if os.path.isfile(path) and path.endswith('filtered.json'):

jsonpath=path

elif os.path.isdir(path):

imgpath=path

coco2voc(imgpath,jsonpath,savepath='dataset/pqdetection_sliced2voc/'+name)

loading annotations into memory...

Done (t=0.20s)

loading annotations into memory...

Done (t=0.88s)

creating index...

index created!

100%|██████████| 47588/47588 [01:57<00:00, 404.14it/s]

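0/47588 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_160_75/noann_result for inspection

0/47588 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_160_75/notrgb_result for inspection

Dataset processed in 1.98 min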

loading annotations into memory...

Done (t=0.17s)

loading annotations into memory...

Done (t=0.73s)

creating index...

index created!

100%|██████████| 42357/42357 [02:11<00:00, 321.90it/s]

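0/42357 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_192_75/noann_result for inspection

0/42357 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_192_75/notrgb_result for inspection

Dataset processed in 2.21 min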

loading annotations into memory...

Done (t=0.16s)

loading annotations into memory...

Done (t=0.72s)

creating index...

index created!

100%|██████████| 38547/38547 [02:00<00:00, 320.49it/s]

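0/38547 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_224_75/noann_result for inspection

0/38547 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_224_75/notrgb_result for inspection

Dataset processed in 2.02 min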

loading annotations into memory...

Done (t=0.16s)

loading annotations into memory...

Done (t=0.75s)

creating index...

index created!

100%|██████████| 36976/36976 [02:43<00:00, 226.09it/s]

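0/36976 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_256_75/noann_result for inspection

0/36976 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_256_75/notrgb_result for inspection

Dataset processed in 2.74 min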

loading annotations into memory...

Done (t=0.14s)

loading annotations into memory...

Done (t=0.15s)

creating index...

index created!

100%|██████████| 31925/31925 [02:12<00:00, 240.72it/s]

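0/31925 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_288_75/noann_result for inspection

0/31925 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_288_75/notrgb_result for inspection

Dataset processed in 2.22 min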

loading annotations into memory...

Done (t=0.67s)

loading annotations into memory...

Done (t=0.14s)

creating index...

index created!

100%|██████████| 28577/28577 [02:28<00:00, 192.43it/s]

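0/28577 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_320_75/noann_result for inspection

0/28577 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_320_75/notrgb_result for inspection

Dataset processed in 2.49 min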

loading annotations into memory...

Done (t=0.71s)

loading annotations into memory...

Done (t=0.15s)

creating index...

index created!

100%|██████████| 29367/29367 [02:29<00:00, 196.12it/s]

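0/29367 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_352_75/noann_result for inspection

0/29367 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_352_75/notrgb_result for inspection

Dataset processed in 2.51 min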

loading annotations into memory...

Done (t=0.67s)

loading annotations into memory...

Done (t=0.13s)

creating index...

index created!

100%|██████████| 24956/24956 [02:29<00:00, 167.27it/s]

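0/24956 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_384_75/noann_result for inspection

0/24956 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_384_75/notrgb_result for inspection

Dataset processed in 2.5 min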

loading annotations into memory...

Done (t=0.10s)

loading annotations into memory...

Done (t=0.67s)

creating index...

index created!

100%|██████████| 21233/21233 [02:05<00:00, 168.79it/s]

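0/21233 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_416_75/noann_result for inspection

0/21233 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_416_75/notrgb_result for inspection

Dataset processed in 2.11 min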

loading annotations into memory...

Done (t=0.11s)

loading annotations into memory...

Done (t=0.12s)

creating index...

index created!

100%|██████████| 21508/21508 [02:34<00:00, 139.29it/s]

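0/21508 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_448_75/noann_result for inspection

0/21508 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_448_75/notrgb_result for inspection

Dataset processed in 2.59 min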

loading annotations into memory...

Done (t=0.08s)

loading annotations into memory...

Done (t=0.09s)

creating index...

index created!

100%|██████████| 16187/16187 [02:15<00:00, 119.87it/s]

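0/16187 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_480_75/noann_result for inspection

0/16187 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_480_75/notrgb_result for inspection

Dataset processed in 2.25 min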

loading annotations into memory...

Done (t=0.08s)

loading annotations into memory...

Done (t=0.09s)

creating index...

index created!

100%|██████████| 15916/15916 [02:17<00:00, 115.38it/s]

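0/15916 images in this dataset have no instance annotations; they have been copied to dataset/pqdetection_sliced2voc/pq_512_75/noann_result for inspection

0/15916 images in this dataset are not RGB; they have been copied to dataset/pqdetection_sliced2voc/pq_512_75/notrgb_result for inspection

Dataset processed in 2.31 min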

! tree -L 2 dataset/pqdetection_sliced2voc

dataset/pqdetection_sliced2voc
├── pq_160_75
│   ├── images
│   └── labels
├── pq_192_75
│   ├── images
│   └── labels
├── pq_224_75
│   ├── images
│   └── labels
├── pq_256_75
│   ├── images
│   └── labels
├── pq_288_75
│   ├── images
│   └── labels
├── pq_320_75
│   ├── images
│   └── labels
├── pq_352_75
│   ├── images
│   └── labels
├── pq_384_75
│   ├── images
│   └── labels
├── pq_416_75
│   ├── images
│   └── labels
├── pq_448_75
│   ├── images
│   └── labels
├── pq_480_75
│   ├── images
│   └── labels
└── pq_512_75
    ├── images
    └── labels

37 directories, 0 files
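The box handling inside coco2voc comes down to one conversion: COCO stores a box as [x, y, width, height], while VOC stores its corner coordinates. Below is a minimal sketch of that step, using the same integer truncation as the conversion code above. The helper name `coco_bbox_to_voc` is hypothetical and does not appear in the original script.

```python
def coco_bbox_to_voc(bbox):
    """Convert a COCO box [x, y, w, h] to VOC corners (xmin, ymin, xmax, ymax).

    Returns None for degenerate boxes whose width or height collapses to zero
    after integer truncation, mirroring the filter in the conversion loop.
    """
    x, y, w, h = bbox
    xmin, ymin = int(x), int(y)
    xmax, ymax = int(x + w), int(y + h)
    if xmax == xmin or ymax == ymin:
        return None
    return xmin, ymin, xmax, ymax


print(coco_bbox_to_voc([10.6, 20.2, 30.0, 40.0]))  # (10, 20, 40, 60)
print(coco_bbox_to_voc([5.2, 5.2, 0.5, 8.0]))      # None: zero width after truncation
```

Very small balls are the ones most likely to collapse this way, which is why the filter matters for this dataset.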

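The easiest way to visualize the data is to open it in labelimg. If the data lives on a remote server, either download it or view it over a VNC remote desktop; the blog post at https://blog.csdn.net/u011119817/article/details/119004535 is another reference.

To display an image in Jupyter, we use the following approach: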

import os
import cv2
import numpy as np
import matplotlib.pyplot as plt
import xml.etree.ElementTree as ET

def draw_single_image(imgpath, annpath, savepath):
    """Draw the boxes from a VOC annotation file onto its image.

    Args:
        imgpath (str): path to the image
        annpath (str): path to the VOC XML annotation file
        savepath (str | None): where to save the result; if None, the image is shown inline with matplotlib
    """
    # np.fromfile + cv2.imdecode also works for paths that contain non-ASCII characters
    img = cv2.imdecode(np.fromfile(imgpath, dtype=np.uint8), 1)  # BGR
    if img is None or not img.any():
        raise Exception("read img failed!")
    tree = ET.parse(annpath)
    root = tree.getroot()
    result = root.findall("object")
    for obj in result:
        name = obj.find("name").text
        x1 = int(obj.find("bndbox").find("xmin").text)
        y1 = int(obj.find("bndbox").find("ymin").text)
        x2 = int(obj.find("bndbox").find("xmax").text)
        y2 = int(obj.find("bndbox").find("ymax").text)
        cv2.rectangle(img, (x1, y1), (x2, y2), (0, 0, 255), 2)
    if savepath is None:
        imgrgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        plt.figure(figsize=(20, 10))
        plt.imshow(imgrgb)
    else:
        cv2.imencode('.jpg', img)[1].tofile(savepath)

draw_single_image(imgpath="dataset/pqdetection_sliced2voc/pq_160_75/images/1_280_120_440_280.jpg", annpath="dataset/pqdetection_sliced2voc/pq_160_75/labels/1_280_120_440_280.xml", savepath=None)

draw_single_image(imgpath="dataset/pqdetection_sliced2voc/pq_224_75/images/2023_0302_184834_009_3270_392_392_616_616.jpg", annpath="dataset/pqdetection_sliced2voc/pq_224_75/labels/2023_0302_184834_009_3270_392_392_616_616.xml", savepath=None)

If the spot-checked images look right, the processing pipeline is most likely correct, and we can safely move on.
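A visual spot check only covers a couple of files. As a complement, the annotations can also be scanned programmatically for boxes that are empty or fall outside the image. This is a minimal sketch and not part of the original workflow; the helper name `find_bad_boxes` is hypothetical, and it assumes the XML carries a size element like the one written by coco2voc.

```python
import xml.etree.ElementTree as ET


def find_bad_boxes(xml_text):
    """Return the boxes in a VOC annotation that are empty or leave the image."""
    root = ET.fromstring(xml_text)
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    bad = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        x1, y1, x2, y2 = (int(float(box.findtext(tag)))
                          for tag in ("xmin", "ymin", "xmax", "ymax"))
        if not (0 <= x1 < x2 <= width and 0 <= y1 < y2 <= height):
            bad.append((obj.findtext("name"), x1, y1, x2, y2))
    return bad


# In-memory example: the second box extends past the 160-pixel image width.
sample = """<annotation>
  <size><width>160</width><height>160</height><depth>3</depth></size>
  <object><name>ball</name><bndbox><xmin>10</xmin><ymin>12</ymin><xmax>22</xmax><ymax>25</ymax></bndbox></object>
  <object><name>ball</name><bndbox><xmin>150</xmin><ymin>40</ymin><xmax>170</xmax><ymax>60</ymax></bndbox></object>
</annotation>"""
print(find_bad_boxes(sample))  # [('ball', 150, 40, 170, 60)]
```

To run it over a dataset, read each file under a labels folder as bytes and pass the content to `find_bad_boxes`; an empty list for every file means no box was flagged.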

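4、Generating the training data

PaddleDetection training data consists mainly of three files: train.txt, val.txt, and label_list.txt.

We have two input scales, so there are two sets of train.txt and val.txt. Both use the same single class, so label_list.txt contains only ball.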

with open("dataset/pqdetection_sliced2voc/label_list.txt", "w") as f:
    f.writelines(["ball"])

# Slice sizes for the 416 input
size_416 = [320, 352, 384, 416, 448, 480, 512]
# Slice sizes for the 256 input
size_256 = [160, 192, 224, 256, 288, 320, 352]

import os
import random

# Build train_416.txt: one "image_path xml_path" pair per line, relative to the dataset root
img_xmls = []
for i in size_416:
    imgpath = f"dataset/pqdetection_sliced2voc/pq_{i}_75/images"
    labpath = f"dataset/pqdetection_sliced2voc/pq_{i}_75/labels"
    imgs = os.listdir(imgpath)
    for img in imgs:
        imgbasename = os.path.splitext(img)[0]
        imgname = os.path.join(f"pq_{i}_75/images", img)
        xmlname = os.path.join(f"pq_{i}_75/labels", imgbasename + '.xml')
        img_xml = f"{imgname} {xmlname}\n"
        img_xmls.append(img_xml)
for i in range(5):
    random.shuffle(img_xmls)
with open("dataset/pqdetection_sliced2voc/train_416.txt", "w") as f:
    f.writelines(img_xmls)

# Build train_256.txt in the same way
img_xmls = []
for i in size_256:
    imgpath = f"dataset/pqdetection_sliced2voc/pq_{i}_75/images"
    labpath = f"dataset/pqdetection_sliced2voc/pq_{i}_75/labels"
    imgs = os.listdir(imgpath)
    for img in imgs:
        imgbasename = os.path.splitext(img)[0]
        imgname = os.path.join(f"pq_{i}_75/images", img)
        xmlname = os.path.join(f"pq_{i}_75/labels", imgbasename + '.xml')
        img_xml = f"{imgname} {xmlname}\n"
        img_xmls.append(img_xml)
for i in range(5):
    random.shuffle(img_xmls)
with open("dataset/pqdetection_sliced2voc/train_256.txt", "w") as f:
    f.writelines(img_xmls)

# Look at the first few lines of the training list

!head dataset/pqdetection_sliced2voc/train_256.txt

# Total number of training samples

!cat dataset/pqdetection_sliced2voc/train_256.txt | wc -l

pq_192_75/images/2023_0302_175706_008_240_384_432_576_624.jpg pq_192_75/labels/2023_0302_175706_008_240_384_432_576_624.xml

pq_288_75/images/2023_0302_164151_003_780_144_216_432_504.jpg pq_288_75/labels/2023_0302_164151_003_780_144_216_432_504.xml

pq_224_75/images/pingpang_720_00384_560_224_784_448.jpg pq_224_75/labels/pingpang_720_00384_560_224_784_448.xml

pq_160_75/images/2000_0107_022408_002_4830_600_520_760_680.jpg pq_160_75/labels/2000_0107_022408_002_4830_600_520_760_680.xml

pq_256_75/images/2023_0302_164336_005_150_448_464_704_720.jpg pq_256_75/labels/2023_0302_164336_005_150_448_464_704_720.xml

pq_320_75/images/pingpang_720_08579_560_80_880_400.jpg pq_320_75/labels/pingpang_720_08579_560_80_880_400.xml

pq_256_75/images/2023_0302_164336_005_1830_192_256_448_512.jpg pq_256_75/labels/2023_0302_164336_005_1830_192_256_448_512.xml

pq_320_75/images/2023_0302_175706_008_1860_480_160_800_480.jpg pq_320_75/labels/2023_0302_175706_008_1860_480_160_800_480.xml

pq_224_75/images/2023_0302_164336_005_870_392_336_616_560.jpg pq_224_75/labels/2023_0302_164336_005_870_392_336_616_560.xml

pq_224_75/images/10_112_256_336_480.jpg pq_224_75/labels/10_112_256_336_480.xml

255337
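This total can be cross-checked against the conversion logs in section 3: the seven slice sizes that feed train_256.txt should add up to the same number. The per-size counts below are copied from the progress bars above. The 416 total is derived the same way and is not printed anywhere in the original output.

```python
# Slice counts per size, copied from the coco2voc progress bars above.
slices = {
    160: 47588, 192: 42357, 224: 38547, 256: 36976,
    288: 31925, 320: 28577, 352: 29367, 384: 24956,
    416: 21233, 448: 21508, 480: 16187, 512: 15916,
}
size_416 = [320, 352, 384, 416, 448, 480, 512]
size_256 = [160, 192, 224, 256, 288, 320, 352]

total_256 = sum(slices[s] for s in size_256)
total_416 = sum(slices[s] for s in size_416)
print(total_256)  # 255337, the same as `wc -l` on train_256.txt
print(total_416)  # 157744
```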

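Because training uses online data augmentation, the training set is reused as the test set. This is not rigorous: the test images are effectively a subset of what the model sees during augmented training, so the resulting metrics only show whether the model is converging, not how well it generalizes.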

!cp dataset/pqdetection_sliced2voc/train_256.txt dataset/pqdetection_sliced2voc/test_256.txt

!cp dataset/pqdetection_sliced2voc/train_416.txt dataset/pqdetection_sliced2voc/test_416.txt
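If a cleaner evaluation is wanted later, one option is to hold out whole source frames instead of copying the training list. The sketch below is not part of the original workflow. It assumes slice files are named `<source>_<x1>_<y1>_<x2>_<y2>.jpg`, as the listing above suggests; the helper names and the 10% ratio are hypothetical.

```python
import os
import random
from collections import defaultdict


def source_frame(list_line):
    """Strip the four trailing slice coordinates from the image file name."""
    img_rel = list_line.split()[0]
    stem = os.path.splitext(os.path.basename(img_rel))[0]
    return stem.rsplit("_", 4)[0]


def split_by_frame(lines, val_ratio=0.1, seed=0):
    """Split list lines so that all slices of one frame land on the same side."""
    groups = defaultdict(list)
    for line in lines:
        groups[source_frame(line)].append(line)
    frames = sorted(groups)
    random.Random(seed).shuffle(frames)
    n_val = max(1, int(len(frames) * val_ratio))
    val_frames = set(frames[:n_val])
    train = [l for f in frames if f not in val_frames for l in groups[f]]
    val = [l for f in frames if f in val_frames for l in groups[f]]
    return train, val


line = "pq_224_75/images/10_112_256_336_480.jpg pq_224_75/labels/10_112_256_336_480.xml"
print(source_frame(line))  # 10
```

Grouping by frame keeps overlapping slices of the same picture, at any slice size, from appearing in both lists, which a plain random split over lines would not guarantee.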

! tree -L 2 dataset/pqdetection_sliced2voc

dataset/pqdetection_sliced2voc
├── pq_160_75
│   ├── images
│   └── labels
├── pq_192_75
│   ├── images
│   └── labels
├── pq_224_75
│   ├── images
│   └── labels
├── pq_256_75
│   ├── images
│   └── labels
├── pq_288_75
│   ├── images
│   └── labels
├── pq_320_75
│   ├── images
│   └── labels
├── pq_352_75
│   ├── images
│   └── labels
├── pq_384_75
│   ├── images
│   └── labels
├── pq_416_75
│   ├── images
│   └── labels
├── pq_448_75
│   ├── images
│   └── labels
├── pq_480_75
│   ├── images
│   └── labels
├── pq_512_75
│   ├── images
│   └── labels
├── test_256.txt
├── test_416.txt
├── train_256.txt
└── train_416.txt

37 directories, 4 files

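5、Model training

Taking 256 as the example, the pretrained weights are the ones from our earlier run. For more on the configuration options, see https://blog.csdn.net/u011119817/article/details/126665278

The configuration file looks roughly like this: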

_BASE_: [
  '../datasets/voc_pingpang_20230405.yml',
  '../runtime.yml',
  '_base_/picodet_v2.yml',
  '_base_/optimizer_300e.yml',
]

#pretrain_weights: pretrained/PPLCNet_x0_75_pretrained.pdparams
pretrain_weights: output/picodet_s_416_voc_npu_256_20230404/model_final.pdparams
weights: output/picodet_s_416_voc_npu_256_20230404/model_final.pdparams
find_unused_parameters: True
keep_best_weight: True
use_ema: True
epoch: 80
snapshot_epoch: 5

PicoDet:
  backbone: LCNet
  neck: CSPPAN
  head: PicoHeadV2

LCNet:
  scale: 0.75
  feature_maps: [3, 4, 5]
  act: relu6

CSPPAN:
  out_channels: 96
  use_depthwise: True
  num_csp_blocks: 1
  num_features: 4
  act: relu6

PicoHeadV2:
  conv_feat:
    name: PicoFeat
    feat_in: 96
    feat_out: 96
    num_convs: 4
    num_fpn_stride: 4
    norm_type: bn
    share_cls_reg: True
    use_se: True
    act: relu6
  feat_in_chan: 96
  act: relu6

LearningRate:
  base_lr: 0.0008
  schedulers:
  - !CosineDecay
    max_epochs: 100
    min_lr_ratio: 0.001
    last_plateau_epochs: 10
  - !ExpWarmup
    epochs: 5

worker_num: 6
eval_height: &eval_height 256
eval_width: &eval_width 256
eval_size: &eval_size [*eval_height, *eval_width]

TrainReader:
  sample_transforms:
  - Decode: {}
  - Mosaic:
      prob: 0.6
      input_dim: [416, 416]
      degrees: [-10, 10]
      scale: [0.1, 2.0]
      shear: [-2, 2]
      translate: [-0.1, 0.1]
      enable_mixup: True
  - AugmentHSV: {is_bgr: False, hgain: 5, sgain: 30, vgain: 30}
  - RandomFlip: {prob: 0.5}
  batch_transforms:
  # - BatchRandomResize: {target_size: [320, 352, 384, 416, 448, 480, 512], random_size: True, random_interp: True, keep_ratio: False}
  - BatchRandomResize: {target_size: [160, 192, 224, 256, 288, 320, 352], random_size: True, random_interp: True, keep_ratio: False}
  - NormalizeImage: {mean: [0, 0, 0], std: [1, 1, 1], is_scale: True}
  - Permute: {}
  - PadGT: {}
  batch_size: 80
  shuffle: true
  drop_last: true
  mosaic_epoch: 70

EvalReader:
  sample_transforms:
  - Decode: {}
  - Resize: {interp: 2, target_size: *eval_size, keep_ratio: False}
  - NormalizeImage: {mean: [0, 0, 0], std: [1, 1, 1], is_scale: True}
  - Permute: {}
  batch_transforms:
  - PadBatch: {pad_to_stride: 32}
  batch_size: 16
  shuffle: false

TestReader:
  inputs_def:
    image_shape: [1, 3, *eval_height, *eval_width]
  sample_transforms:
  - Decode: {}
  - Resize: {interp: 2, target_size: *eval_size, keep_ratio: False}
  - NormalizeImage: {mean: [0, 0, 0], std: [1, 1, 1], is_scale: True}
  - Permute: {}
  batch_size: 1
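One detail in this config is easy to miss: `epoch` is 80 while the `CosineDecay` schedule is stretched over `max_epochs: 100`. The sketch below is a simplified cosine curve, not PaddleDetection's implementation (it ignores warmup and works per epoch rather than per iteration), but it gives a rough idea of where the learning rate sits when training stops. The function name `cosine_lr` is hypothetical.

```python
import math


def cosine_lr(epoch, base_lr=0.0008, max_epochs=100,
              min_lr_ratio=0.001, last_plateau_epochs=10):
    """Simplified cosine decay that holds the floor for the last plateau epochs."""
    min_lr = base_lr * min_lr_ratio
    decay_epochs = max_epochs - last_plateau_epochs
    if epoch >= decay_epochs:
        return min_lr
    cosine = 0.5 * (1 + math.cos(math.pi * epoch / decay_epochs))
    return min_lr + (base_lr - min_lr) * cosine


# Print the rate at the start, midway, at the configured stop (80), and on the plateau.
for e in (0, 40, 80, 90):
    print(e, f"{cosine_lr(e):.2e}")
```

Under this simplified curve the rate at epoch 80 is still above the floor that the schedule would only reach at epoch 90, so the two settings are worth keeping in mind together when changing either one.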

The contents of voc_pingpang_20230405.yml are:

metric: VOC
map_type: 11point
num_classes: 1

TrainDataset:
  !VOCDataSet
    dataset_dir: dataset/pqdetection_sliced2voc
    anno_path: train_256.txt
    label_list: label_list.txt
    data_fields: ['image', 'gt_bbox', 'gt_class', 'difficult']

EvalDataset:
  !VOCDataSet
    dataset_dir: dataset/pqdetection_sliced2voc
    anno_path: test_256.txt
    label_list: label_list.txt
    data_fields: ['image', 'gt_bbox', 'gt_class', 'difficult']

TestDataset:
  !ImageFolder
    anno_path: dataset/pqdetection/label_list.txt
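Before launching a long run it can be worth confirming that every entry in the list file resolves to a real file. This is a minimal sketch, assuming each line holds an image path and an XML path relative to `dataset_dir`, which is how the lists in section 4 were written. `check_list_file` is a hypothetical helper, not a PaddleDetection API.

```python
import os


def check_list_file(dataset_dir, anno_path):
    """Return (line_number, problem) pairs for malformed or missing entries."""
    problems = []
    with open(os.path.join(dataset_dir, anno_path), encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            parts = line.split()
            if not parts:
                continue  # ignore blank lines
            if len(parts) != 2:
                problems.append((lineno, line.strip()))
                continue
            for rel in parts:
                if not os.path.isfile(os.path.join(dataset_dir, rel)):
                    problems.append((lineno, rel))
    return problems
```

Calling `check_list_file("dataset/pqdetection_sliced2voc", "train_256.txt")` should return an empty list if the dataset is intact.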

The training command is:

python -m paddle.distributed.launch --gpus 2,3 tools/train.py -c configs/picodet/picodet_s_416_voc_npu_256_20230405.yml --eval
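For a rough sense of scale, the number of training iterations can be estimated from the values above. This assumes that `batch_size` in the reader is applied per GPU, which is how I understand PaddleDetection's readers, and that samples are split evenly across the two cards, so treat the result as an estimate rather than the exact figure in the training log.

```python
import math

num_samples = 255337   # lines in train_256.txt
num_gpus = 2           # --gpus 2,3
batch_size = 80        # TrainReader.batch_size, assumed to be per GPU
epochs = 80            # epoch in the config

samples_per_gpu = math.ceil(num_samples / num_gpus)
iters_per_epoch = samples_per_gpu // batch_size   # drop_last: true discards the remainder
print(iters_per_epoch, iters_per_epoch * epochs)  # 1595 127600
```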

6、Model inference

Inference on small objects likewise uses a sliding-window approach. For the relevant commands, see section 8 of https://blog.csdn.net/u011119817/article/details/125665278.
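The idea behind sliding-window inference can be sketched in a few lines: cover the full image with overlapping windows, run the detector on each window, then shift each detection back into full-image coordinates. The code below is an illustrative sketch and not the sahi or PaddleDetection implementation; `slice_windows` and `to_full_image` are hypothetical names, and the 1280x720 frame size and 0.75 overlap in the example are assumptions.

```python
def slice_windows(img_w, img_h, size, overlap_ratio):
    """Return (x1, y1, x2, y2) windows that cover the image with the given overlap."""
    step = max(1, int(size * (1 - overlap_ratio)))
    last_x = max(img_w - size, 0)
    last_y = max(img_h - size, 0)
    windows = []
    y = 0
    while True:
        y0 = min(y, last_y)          # shift the last row back inside the image
        x = 0
        while True:
            x0 = min(x, last_x)      # shift the last column back inside the image
            windows.append((x0, y0, min(x0 + size, img_w), min(y0 + size, img_h)))
            if x0 >= last_x:
                break
            x += step
        if y0 >= last_y:
            break
        y += step
    return windows


def to_full_image(box, window):
    """Shift a box predicted inside a window back to full-image coordinates."""
    x1, y1, x2, y2 = box
    off_x, off_y = window[0], window[1]
    return x1 + off_x, y1 + off_y, x2 + off_x, y2 + off_y


windows = slice_windows(1280, 720, 256, 0.75)
print(len(windows), windows[-1])  # 153 (1024, 464, 1280, 720)
print(to_full_image((10, 20, 30, 40), (448, 464, 704, 720)))  # (458, 484, 478, 504)
```

Because neighbouring windows overlap, the same ball is usually detected more than once, so the shifted boxes still need to be merged, for example with non-maximum suppression, before they are reported.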