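For a project requirement, I had to port a neural network originally written in PyTorch to Huawei's MindSpore.

This post records the pitfalls I ran into along the way.

The official MindSpore tutorial, for reference: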

https://mindspore.cn/tutorials/zh-CN/r2.0/advanced/compute_graph.html

Here is the network to be ported, first in its TensorFlow (Keras) form and then in its PyTorch form.

import numpy as np
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, Embedding, Conv1D, multiply, GlobalMaxPool1D, Input, Activation

def Malconv(max_len=200000, win_size=500, vocab_size=256):
    inp = Input((max_len,))
    emb = Embedding(vocab_size, 8)(inp)
    # Two parallel convolutions over the embedded bytes; conv2 acts as the gate.
    conv1 = Conv1D(kernel_size=win_size, filters=128, strides=win_size, padding='same')(emb)
    conv2 = Conv1D(kernel_size=win_size, filters=128, strides=win_size, padding='same')(emb)
    a = Activation('sigmoid', name='sigmoid')(conv2)
    mul = multiply([conv1, a])
    a = Activation('relu', name='relu')(mul)
    p = GlobalMaxPool1D()(a)
    d = Dense(64)(p)
    out = Dense(1, activation='sigmoid')(d)
    return Model(inp, out)
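The core of this network is the gated convolution followed by global max pooling. As a rough, framework-free sketch of that arithmetic (the function names and the channel-major list layout are my own assumptions for illustration, not part of Keras):

```python
import math


def sigmoid(value: float) -> float:
    """Logistic function, used as the gate activation."""
    return 1.0 / (1.0 + math.exp(-value))


def gated_global_max(conv1_out, conv2_out):
    """Gate conv1 with sigmoid(conv2), apply ReLU, then take the max over positions.

    Both inputs are lists of channels; each channel is a list of per-position values.
    """
    pooled = []
    for features, gates in zip(conv1_out, conv2_out):
        gated = [max(0.0, f * sigmoid(g)) for f, g in zip(features, gates)]
        pooled.append(max(gated))
    return pooled


# One channel, two positions: sigmoid(0) = 0.5, so the gated values are 0.5 and 1.0.
print(gated_global_max([[1.0, 2.0]], [[0.0, 0.0]]))  # [1.0]
```

Each channel collapses to a single number, which is why the dense layers that follow only see one value per filter regardless of the file length.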

The PyTorch version looks like this:

from typing import Optional

import torch
import torch.nn as nn
from torch import Tensor


class MalConv(nn.Module):
    """The MalConv model.

    References:
        - Edward Raff et al. 2018. Malware Detection by Eating a Whole EXE.
          https://arxiv.org/abs/1710.09435
    """

    def __init__(
        self,
        num_classes: int = 2,
        *,
        num_embeddings: int = 257,
        embedding_dim: int = 8,
        channels: int = 128,
        kernel_size: int = 512,
        stride: int = 512,
        padding_idx: Optional[int] = 256,
    ) -> None:
        super().__init__()
        self.num_classes = num_classes
        # By default, num_embeddings (257) = byte (0-255) + padding (256).
        self.embedding = nn.Embedding(num_embeddings, embedding_dim, padding_idx=padding_idx)
        self.conv1 = nn.Conv1d(embedding_dim, channels, kernel_size=kernel_size, stride=stride, bias=True)
        self.conv2 = nn.Conv1d(embedding_dim, channels, kernel_size=kernel_size, stride=stride, bias=True)
        self.max_pool = nn.AdaptiveMaxPool1d(1)
        self.fc = nn.Sequential(
            nn.Flatten(),
            nn.Linear(channels, channels),
            nn.ReLU(inplace=True),
            nn.Linear(channels, num_classes),
        )

    def _embed(self, x: Tensor) -> Tensor:
        # Perform embedding.
        x = self.embedding(x)
        # Treat embedding dimension as channel.
        x = x.permute(0, 2, 1)
        return x

    def _forward_embedded(self, x: Tensor) -> Tensor:
        # Perform gated convolution.
        x = self.conv1(x) * torch.sigmoid(self.conv2(x))
        # Perform global max pooling.
        x = self.max_pool(x)
        x = self.fc(x)
        return x

    def forward(self, x: Tensor) -> Tensor:
        x = self._embed(x)
        x = self._forward_embedded(x)
        return x
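The two versions above differ in padding: the Keras one passes padding='same', while the PyTorch one relies on the default of no padding. MindSpore's nn.Conv1d defaults to pad_mode='same', so a literal port ends up on the 'same' side unless pad_mode='valid' is passed. A small sketch of the output-length arithmetic, assuming dilation 1 and an input at least as long as the kernel (the function names are mine):

```python
def conv1d_out_len_valid(length: int, kernel_size: int, stride: int) -> int:
    """Output length with no padding (PyTorch's default padding=0)."""
    return (length - kernel_size) // stride + 1


def conv1d_out_len_same(length: int, stride: int) -> int:
    """Output length with 'same' padding: ceil(length / stride)."""
    return -(-length // stride)


print(conv1d_out_len_same(200000, 500))        # 400
print(conv1d_out_len_valid(200000, 512, 512))  # 390
print(conv1d_out_len_same(200000, 512))        # 391
```

Because global max pooling follows, one extra position rarely matters in practice, but it is worth knowing about when comparing outputs between frameworks.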

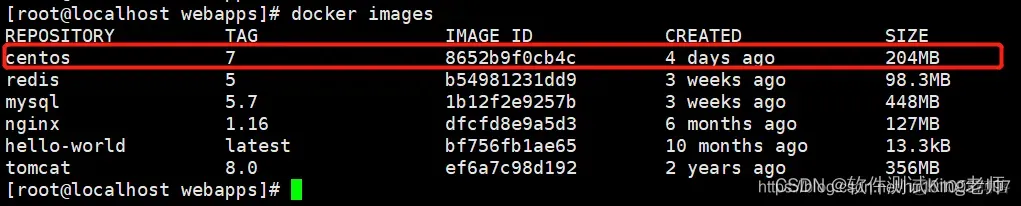

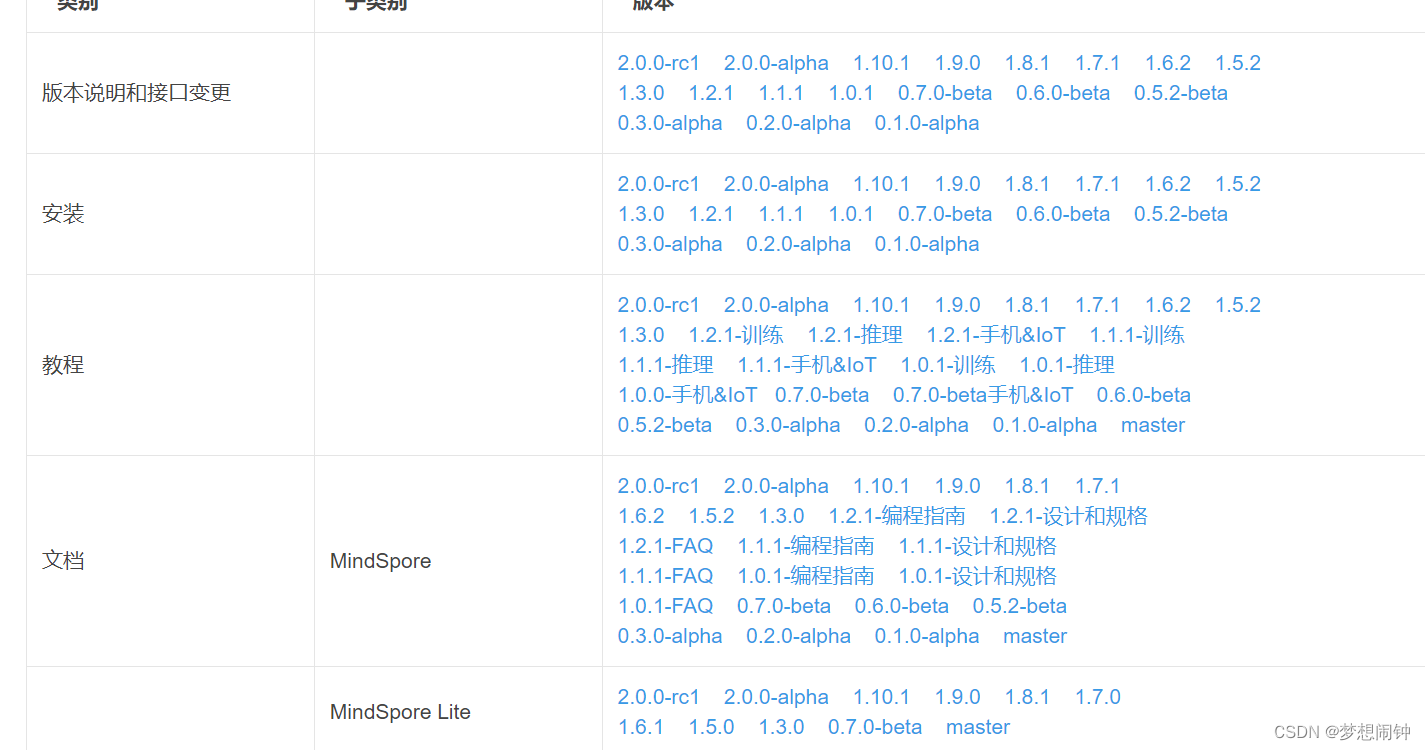

First, how to install MindSpore:

https://mindspore.cn/install

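I installed the CPU version first. By the way, the install page lists three versions, but in my experience only 2.0.0 was really usable: in 1.10.1 I could not even find a counterpart to PyTorch's nn.Conv1d convolution, and Nightly did not even have documentation explaining what it is.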

pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/2.0.0rc1/MindSpore/unified/x86_64/mindspore-2.0.0rc1-cp37-cp37m-linux_x86_64.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.tuna.tsinghua.edu.cn/simple

After installing, read the introduction to see what MindSpore is. Most importantly, read the list of typical differences from PyTorch first:

https://www.mindspore.cn/docs/zh-CN/r2.0/migration_guide/typical_api_comparision.html

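I have to call MindSpore out here; this part made me laugh out of sheer frustration. When you start using MindSpore it helpfully tells you that import mindspore.nn as nn can stand in for import torch.nn as nn. Sounds convenient, right? It would be if every function and method name matched exactly, but often they do not. For example: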

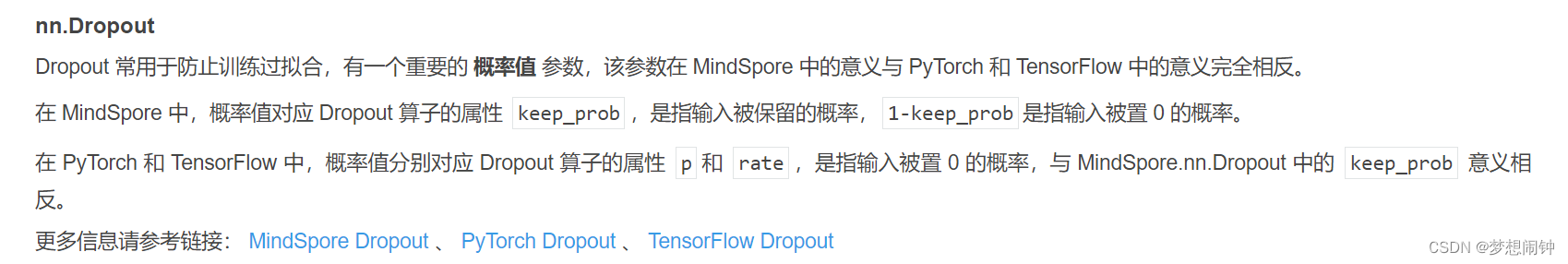

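There are three kinds of mismatch: the name changes in MindSpore but the behavior does not; the name and behavior stay the same but the parameters change; and the name and parameters look the same but the behavior changes. The last kind is the most annoying. For example:

This Dropout works the opposite way from the one you are used to. Bet you did not expect that! MindSpore's nn.Dropout has traditionally taken keep_prob, the probability of keeping a unit, whereas PyTorch's p is the probability of dropping one. If you skip the documentation you fall straight into the hole.

MindSpore's attitude seems to be: "I changed a few methods, because that is the only way you will know you are using MindSpore!"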
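To make the Dropout difference concrete, here is a minimal sketch in plain Python. The helper names are hypothetical and this is not either framework's implementation; it only shows how a drop probability maps to a keep probability and what "inverted dropout" does with it:

```python
import random


def keep_prob_from_p(p: float) -> float:
    """Convert a PyTorch-style drop probability into a keep probability."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    return 1.0 - p


def dropout(values, keep_prob: float, rng: random.Random):
    """Inverted dropout: keep each value with probability keep_prob and rescale it."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


keep_prob = keep_prob_from_p(0.2)  # a PyTorch p=0.2 means 80% of units are kept
kept = dropout([1.0] * 1000, keep_prob, random.Random(0))
print(sum(1 for v in kept if v != 0.0) / len(kept))  # close to 0.8
```

Passing 0.2 where a keep probability is expected would silently drop 80% of the units instead of 20%, which is exactly the trap described above.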

The first step is building the network. The original network is not complicated, so start with the official MindSpore documentation:

https://www.mindspore.cn/tutorials/zh-CN/r1.10/beginner/model.html

Here is the sample network it gives:

class Network(nn.Cell):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.dense_relu_sequential = nn.SequentialCell(
            nn.Dense(28*28, 512),
            nn.ReLU(),
            nn.Dense(512, 512),
            nn.ReLU(),
            nn.Dense(512, 10)
        )

    def construct(self, x):
        x = self.flatten(x)
        logits = self.dense_relu_sequential(x)
        return logits

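It looks a lot like a PyTorch network, roughly with forward replaced by construct.

One caveat: the MindSpore introduction says that the computation graph is built automatically and that gradients are computed automatically, so you do not call backward or the optimizer's step method yourself. My working impression is that, for the network definition, you simply replace forward with construct, but I have not fully understood this part yet. If that changes I will revise this post.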
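As a toy illustration of the forward/construct correspondence only, here is a framework-free sketch. ToyCell is a made-up stand-in, not MindSpore's real nn.Cell, and it says nothing about how graphs or gradients are built:

```python
class ToyCell:
    """Toy stand-in for a framework base class (hypothetical, for illustration)."""

    def __call__(self, *args):
        # Calling the object dispatches to construct(), much like calling a
        # torch.nn.Module dispatches to forward().
        return self.construct(*args)

    def construct(self, *args):
        raise NotImplementedError


class Doubler(ToyCell):
    def construct(self, x):
        return 2 * x


print(Doubler()(21))  # 42
```

The point is simply that user code defines construct and then calls the object itself, never construct directly.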

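Here is how I modified the network.

First, the base class nn.Module becomes nn.Cell, MindSpore's own base class. Then come the substitutions: forward becomes construct, Sequential becomes SequentialCell, Linear becomes Dense, and the bias parameter of Conv1d becomes has_bias.

For API substitutions it is best to check the official mapping document: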

https://www.mindspore.cn/docs/zh-CN/r2.0/note/api_mapping/pytorch_api_mapping.html

from typing import Optional

import mindspore
import mindspore.nn as nn
from mindspore import Tensor


class MalConv(nn.Cell):
    """The MalConv model.

    References:
        - Edward Raff et al. 2018. Malware Detection by Eating a Whole EXE.
          https://arxiv.org/abs/1710.09435
    """

    def __init__(
        self,
        num_classes: int = 2,
        *,
        num_embeddings: int = 257,
        embedding_dim: int = 8,
        channels: int = 128,
        kernel_size: int = 512,
        stride: int = 512,
        padding_idx: Optional[int] = 256,
    ) -> None:
        super().__init__()
        self.num_classes = num_classes
        # By default, num_embeddings (257) = byte (0-255) + padding (256).
        self.embedding = nn.Embedding(num_embeddings, embedding_dim, padding_idx=padding_idx)
        # PyTorch's bias=True becomes has_bias=True. Note that MindSpore's Conv1d
        # defaults to pad_mode='same', whereas PyTorch's default is no padding.
        self.conv1 = nn.Conv1d(embedding_dim, channels, kernel_size=kernel_size, stride=stride, has_bias=True)
        self.conv2 = nn.Conv1d(embedding_dim, channels, kernel_size=kernel_size, stride=stride, has_bias=True)
        self.maxpool = nn.AdaptiveMaxPool1d(1)
        self.fc = nn.SequentialCell(
            nn.Flatten(),
            nn.Dense(channels, channels),
            nn.ReLU(),
            nn.Dense(channels, num_classes),
            # Not in the PyTorch version. nn.CrossEntropyLoss expects raw logits,
            # so this extra Sigmoid is worth revisiting if training stalls.
            nn.Sigmoid()
        )

    def _embed(self, x: Tensor) -> Tensor:
        # Perform embedding.
        x = self.embedding(x)
        # Treat embedding dimension as channel.
        x = x.permute(0, 2, 1)
        return x

    def _forward_embedded(self, x: Tensor) -> Tensor:
        # Perform gated convolution.
        x = self.conv1(x) * mindspore.ops.sigmoid(self.conv2(x))
        # Perform global max pooling.
        x = self.maxpool(x)
        x = self.fc(x)
        return x

    def construct(self, x: Tensor) -> Tensor:
        x = self._embed(x)
        x = self._forward_embedded(x)
        return x


print(MalConv())

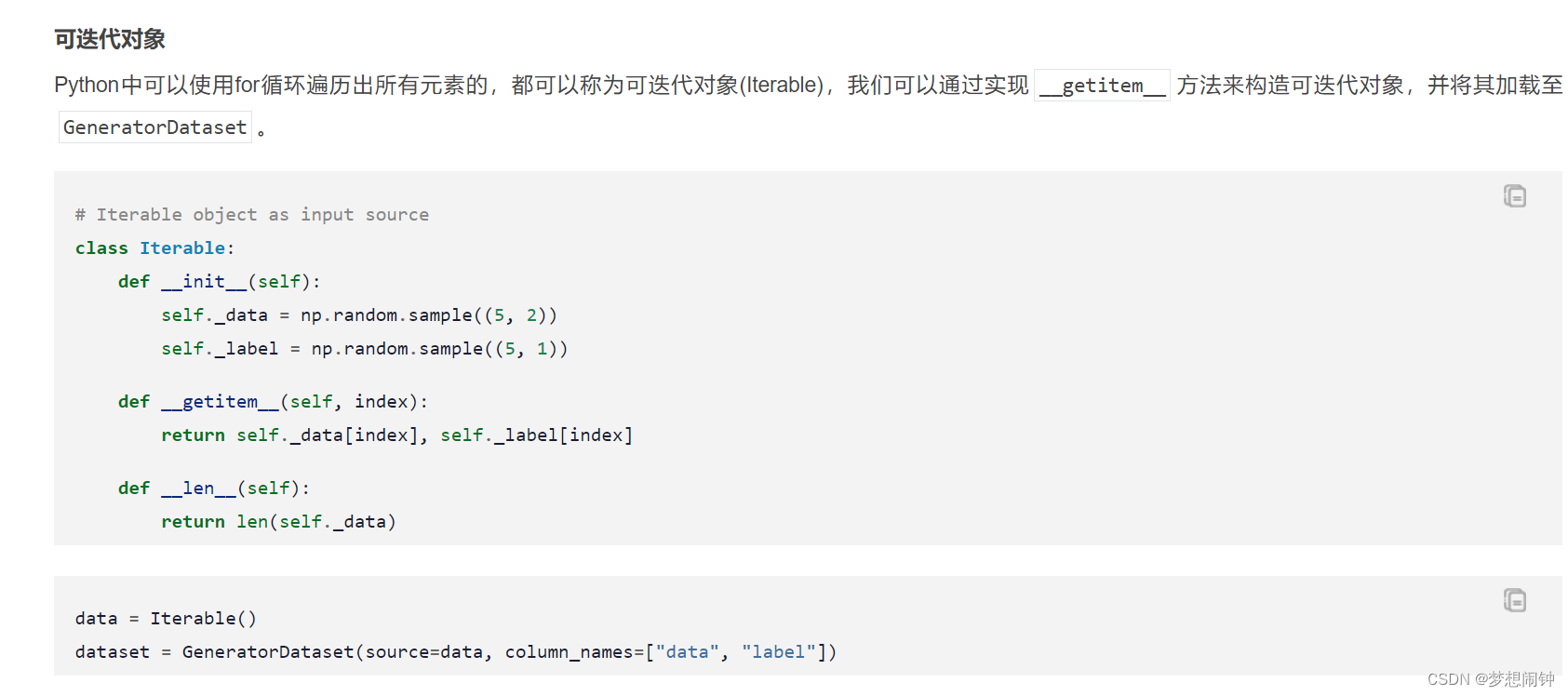
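The renames used in this port can be collected in a small lookup table. The sketch below is a hypothetical helper of my own, not a MindSpore tool, and it only lists the substitutions that actually appear in the two MalConv listings; the official mapping document remains the authority:

```python
# Renames taken from the PyTorch and MindSpore MalConv listings in this post.
CLASS_RENAMES = {"Module": "Cell", "Linear": "Dense", "Sequential": "SequentialCell"}
METHOD_RENAMES = {"forward": "construct"}
KWARG_RENAMES = {"Conv1d": {"bias": "has_bias"}}
KWARG_DROPS = {"ReLU": {"inplace"}}  # the ported ReLU() is created without it


def port_layer(name, kwargs):
    """Translate one PyTorch layer name and its keyword arguments."""
    renames = KWARG_RENAMES.get(name, {})
    dropped = KWARG_DROPS.get(name, set())
    new_kwargs = {renames.get(k, k): v for k, v in kwargs.items() if k not in dropped}
    return CLASS_RENAMES.get(name, name), new_kwargs


print(port_layer("Conv1d", {"bias": True, "stride": 512}))
print(port_layer("Linear", {}))
print(METHOD_RENAMES["forward"])
```

A table like this only covers name changes. Behavioral differences, such as the Dropout semantics, still have to be checked by hand.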

Next comes the dataset. I reused my original dataset code; the approach is similar to building a PyTorch dataset, which I covered in an earlier blog post:

https://blog.csdn.net/qq_43199509/article/details/127534962

Official documentation:

https://www.mindspore.cn/tutorials/zh-CN/r2.0.0-alpha/beginner/dataset.html

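My adapted code follows.

Note that MindSpore is less forgiving about data types than PyTorch. If an error complains about a mismatched data type, convert explicitly with something like np.int32(...) or ndarray.astype(dtype=...). Finally, wrap the dataset object in GeneratorDataset. batch sets how many samples are grouped together and fed to the network at each step; with drop_remainder=True, a final group smaller than the batch size is dropped, and with drop_remainder=False it is kept as a smaller last batch.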

dataset = ds.GeneratorDataset(MalConvDataSet(), column_names=["data", "label"])

dataset = dataset.batch(1, drop_remainder=True)
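The effect of drop_remainder is easiest to see on a tiny list. This is a plain-Python sketch of the batching semantics with a function name of my own, not MindSpore's implementation:

```python
def batch(items, batch_size, drop_remainder=False):
    """Group items into lists of batch_size; optionally drop a short final group."""
    items = list(items)
    batches = [items[i:i + batch_size] for i in range(0, len(items), batch_size)]
    if drop_remainder and batches and len(batches[-1]) < batch_size:
        batches.pop()
    return batches


print(batch(range(5), 2, drop_remainder=False))  # [[0, 1], [2, 3], [4]]
print(batch(range(5), 2, drop_remainder=True))   # [[0, 1], [2, 3]]
```

With a batch size of 1, as in the snippet above, there is never a short final group, so the flag makes no difference there.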

# Prepare the data
from OSutils import getDataPath, loadJsonData
from ByteSequencesFeature import byte_sequences_feature
import numpy as np


def data_loader_multilabel(file_path: str, label_dict=None):
    """Load one sample for the multi-label case."""
    if label_dict is None:
        label_dict = {}
    file_md5 = file_path.split('/')[-1]
    return byte_sequences_feature(file_path), label_dict.get(file_md5)


def data_loader(file_path: str, label_dict=None):
    """Load one sample for the single-label case (1 if listed in label_dict, else 0)."""
    if label_dict is None:
        label_dict = {}
    file_md5 = file_path.split('/')[-1]
    if file_md5 in label_dict:
        return byte_sequences_feature(file_path), 1
    else:
        return byte_sequences_feature(file_path), 0


def pred_data_loader(file_path: str, *args):
    """Load one file for prediction; the file name is returned in place of a label."""
    file_md5 = file_path.split('/')[-1]
    return byte_sequences_feature(file_path), file_md5


class MalConvDataSet(object):
    def __init__(self, black_samples_dir="black_samples/", white_samples_dir='white_samples/',
                 label_dict_path='label_dict.json', label_type="single", valid=False, valid_size=0.2, seed=207):
        self.file_list = getDataPath(black_samples_dir)
        self.loader = data_loader_multilabel
        if label_type == "single":
            self.loader = data_loader
            self.file_list += getDataPath(white_samples_dir)
        if label_type == "predict":
            self.label_dict = {}
            self.loader = pred_data_loader
        else:
            self.label_dict = loadJsonData(label_dict_path)
        np.random.seed(seed)
        np.random.shuffle(self.file_list)
        # If a validation set is requested, split it off the shuffled list.
        # The random seed is fixed, so the split is the same on every run.
        valid_cut = int((1 - valid_size) * len(self.file_list))
        if valid:
            self.file_list = self.file_list[valid_cut:]
        else:
            self.file_list = self.file_list[:valid_cut]

    def __getitem__(self, index):
        file_path = self.file_list[index]
        feature, label = self.loader(file_path, self.label_dict)
        # Convert explicitly; MindSpore is strict about data types.
        return np.array(feature), np.int32(label)

    def __len__(self):
        return len(self.file_list)


if __name__ == "__main__":
    import mindspore.dataset as ds

    # corresponding to torch.utils.data.DataLoader(my_dataset)
    dataset = ds.GeneratorDataset(MalConvDataSet(), column_names=["data", "label"])
    dataset = dataset.batch(1, drop_remainder=True)
    for row in dataset:
        for column in row:
            print(column)
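The seeded shuffle-and-split inside MalConvDataSet.__init__ can be sketched on its own. This version swaps NumPy's global seed for the standard library's random.Random so it runs without dependencies; split_files and the sample names are hypothetical:

```python
import random


def split_files(file_list, valid_size=0.2, seed=207, valid=False):
    """Shuffle deterministically, then return the training or validation part."""
    files = list(file_list)
    random.Random(seed).shuffle(files)
    cut = int((1 - valid_size) * len(files))
    return files[cut:] if valid else files[:cut]


names = [f"sample_{i}" for i in range(10)]
train = split_files(names, valid=False)
held_out = split_files(names, valid=True)
print(len(train), len(held_out))        # 8 2
print(set(train).isdisjoint(held_out))  # True
```

Because both calls shuffle with the same seed, the training and validation objects can be built independently and still never overlap.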

Next, the runtime environment needs its own configuration code. I used the template from the official documentation:

https://www.mindspore.cn/docs/zh-CN/r2.0.0-alpha/migration_guide/model_development/training_and_evaluation_procession.html?highlight=init_env

import mindspore as ms
from mindspore.communication.management import init, get_rank, get_group_size


class DefaultConfig(object):
    """Default runtime settings."""
    seed = 1
    device_target = "CPU"
    context_mode = "graph"  # should be in ['graph', 'pynative']
    device_num = 1
    device_id = 0


def init_env(cfg=None):
    """Initialize the runtime environment."""
    if cfg is None:
        cfg = DefaultConfig()
    ms.set_seed(cfg.seed)
    # If device_target is "None", let the framework pick it automatically;
    # otherwise use the configured value.
    if cfg.device_target != "None":
        if cfg.device_target not in ["Ascend", "GPU", "CPU"]:
            raise ValueError(f"Invalid device_target: {cfg.device_target}, "
                             f"should be in ['None', 'Ascend', 'GPU', 'CPU']")
        ms.set_context(device_target=cfg.device_target)

    # Configure the execution mode; graph mode and PyNative mode are supported.
    if cfg.context_mode not in ["graph", "pynative"]:
        raise ValueError(f"Invalid context_mode: {cfg.context_mode}, "
                         f"should be in ['graph', 'pynative']")
    context_mode = ms.GRAPH_MODE if cfg.context_mode == "graph" else ms.PYNATIVE_MODE
    ms.set_context(mode=context_mode)

    cfg.device_target = ms.get_context("device_target")
    # When running on CPU, do not configure a multi-device environment.
    if cfg.device_target == "CPU":
        cfg.device_id = 0
        cfg.device_num = 1
        cfg.rank_id = 0

    # Select the device to run on.
    if hasattr(cfg, "device_id") and isinstance(cfg.device_id, int):
        ms.set_context(device_id=cfg.device_id)

    if cfg.device_num > 1:
        # init() sets up multi-device communication for both Ascend and GPU;
        # get_group_size() and get_rank() can only be called after init().
        init()
        print("run distribute!", flush=True)
        group_size = get_group_size()
        if cfg.device_num != group_size:
            raise ValueError(f"the setting device_num: {cfg.device_num} not equal to the real group_size: {group_size}")
        cfg.rank_id = get_rank()
        ms.set_auto_parallel_context(parallel_mode=ms.ParallelMode.DATA_PARALLEL, gradients_mean=True)
        if hasattr(cfg, "all_reduce_fusion_config"):
            ms.set_auto_parallel_context(all_reduce_fusion_config=cfg.all_reduce_fusion_config)
    else:
        cfg.device_num = 1
        cfg.rank_id = 0
        print("run standalone!", flush=True)


if __name__ == "__main__":
    init_env()

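The last step is to initialize the environment, load the dataset, and start training. With a small training set the network did not converge for me; I am not sure whether the data was too scarce or whether I left something out during the migration. My code looks like this: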

from mindspore.train import Model, LossMonitor, TimeMonitor, CheckpointConfig, ModelCheckpoint
from mindspore import nn
import mindspore.dataset as ds
from MalConv import MalConv as Net
from MalConvDataSet import MalConvDataSet
from SetEnvironment import init_env
import mindspore


def train_net():
    # Initialize the runtime environment
    init_env()
    # Binary classification task
    task_type = "single"
    num_classes = 2
    # Multi-label classification task
    # task_type="multilabel"
    # num_classes=103
    total_step = 1
    max_step = 300
    display_step = 1
    test_step = 1000
    learning_rate = 0.0001
    log_file_path = 'train_log_' + task_type + '.txt'
    use_gpu = False
    model_path = 'Malconv_' + task_type + '.model'
    black_samples_dir = "black_samples/"
    white_samples_dir = 'white_samples/'
    label_dict_path = 'label_dict.json'
    valid_size = 0.2

    # Build the dataset object
    dataset_ori = ds.GeneratorDataset(
        MalConvDataSet(black_samples_dir=black_samples_dir, white_samples_dir=white_samples_dir,
                       label_dict_path=label_dict_path, label_type=task_type, valid=False,
                       valid_size=valid_size, seed=207), shuffle=True, column_names=["data", "label"])
    dataset = dataset_ori.batch(2, drop_remainder=False)

    # Network model, task dependent
    net = Net()
    # Loss function, task dependent
    loss = nn.CrossEntropyLoss()
    # Optimizer, task dependent
    optimizer = nn.Adam(net.trainable_params(), learning_rate)
    # Wrap everything in a Model. The optimizer has to be passed in here;
    # without it the loss is computed but no weights are ever updated.
    model = Model(net, loss_fn=loss, optimizer=optimizer, metrics={'top_1_accuracy', 'top_5_accuracy'})

    # Checkpoint saving
    config_ck = CheckpointConfig(save_checkpoint_steps=dataset.get_dataset_size(),
                                 keep_checkpoint_max=5)
    ckpt_cb = ModelCheckpoint(prefix="resnet", directory="./checkpoint", config=config_ck)

    # Train the model for 1 epoch
    model.train(1, dataset, callbacks=[LossMonitor(), TimeMonitor(), ckpt_cb])

    for each in dataset:
        print("data:", each[0], each[1])
        each_predict = model.predict(each[0])
        print("predict:", each_predict)


if __name__ == '__main__':
    train_net()
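One thing worth checking when a MindSpore Model does not converge is whether an optimizer was actually handed to it through the optimizer argument, since a loss that is computed but never followed by an update step cannot improve. The framework-free sketch below illustrates that difference on a one-parameter model; it is a toy under my own assumptions, not a diagnosis of the run above:

```python
def train(steps: int, learning_rate: float, apply_update: bool) -> float:
    """Fit y = w * x to the single point (x=1, y=2) and return the final loss."""
    w = 0.0
    x, y = 1.0, 2.0
    for _ in range(steps):
        prediction = w * x
        grad = 2.0 * (prediction - y) * x  # derivative of (w*x - y)^2 w.r.t. w
        if apply_update:
            w -= learning_rate * grad      # the optimizer step
    return (w * x - y) ** 2


print(train(100, 0.1, apply_update=False))        # 4.0, the loss never moves
print(train(100, 0.1, apply_update=True) < 1e-6)  # True
```

If the loss printed by LossMonitor stays flat from the very first step, a missing update is a more likely cause than a small dataset.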

Anyway, it runs. If I find anything new or spot a mistake I will come back and update this post.