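The previous article, "Installing and Running Suricata with DPDK Packet Capture" (《基于DPDK收包的suricata的安装和运行》), covered how to install Suricata with DPDK-based packet RX/TX. This article looks at the thread model Suricata uses for DPDK packet RX/TX and at the related configuration.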

1. RX/TX thread model:

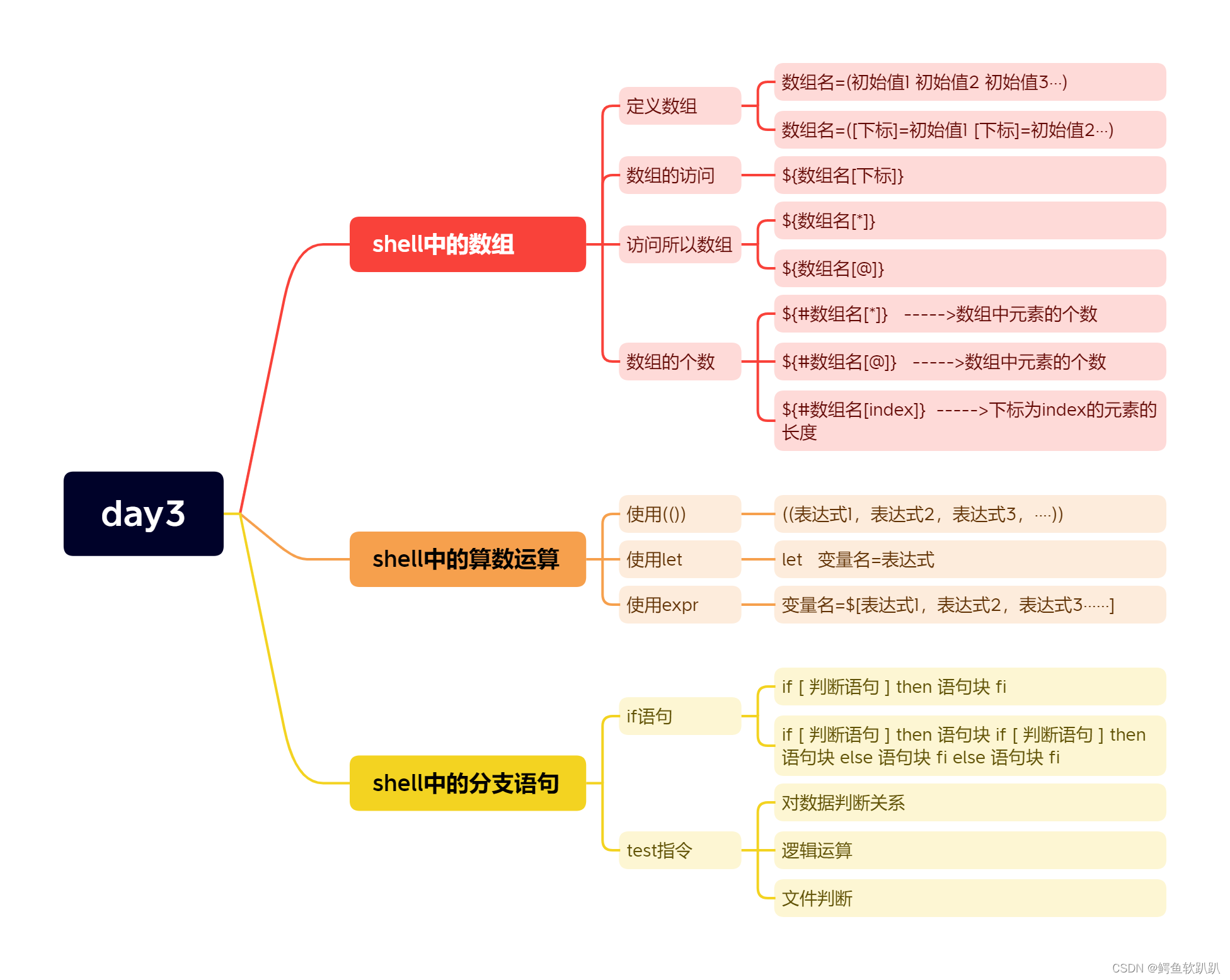

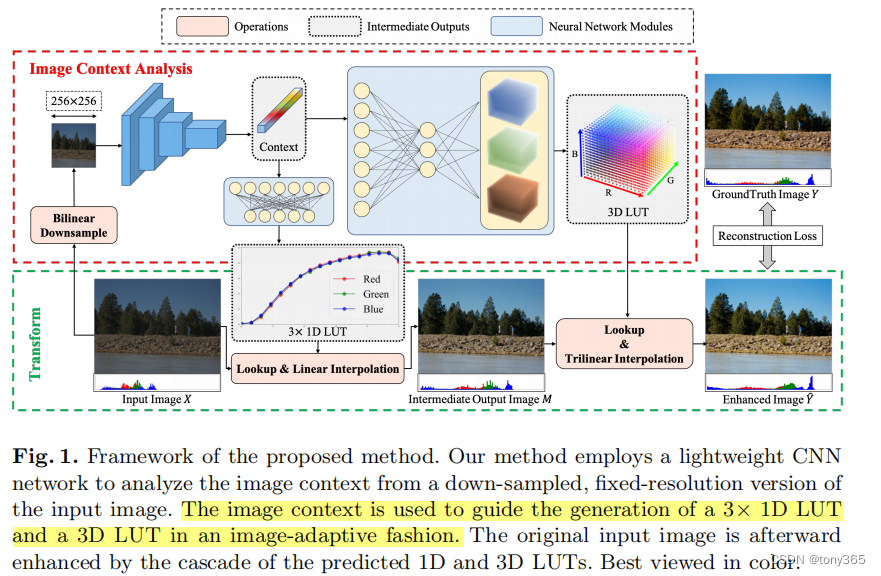

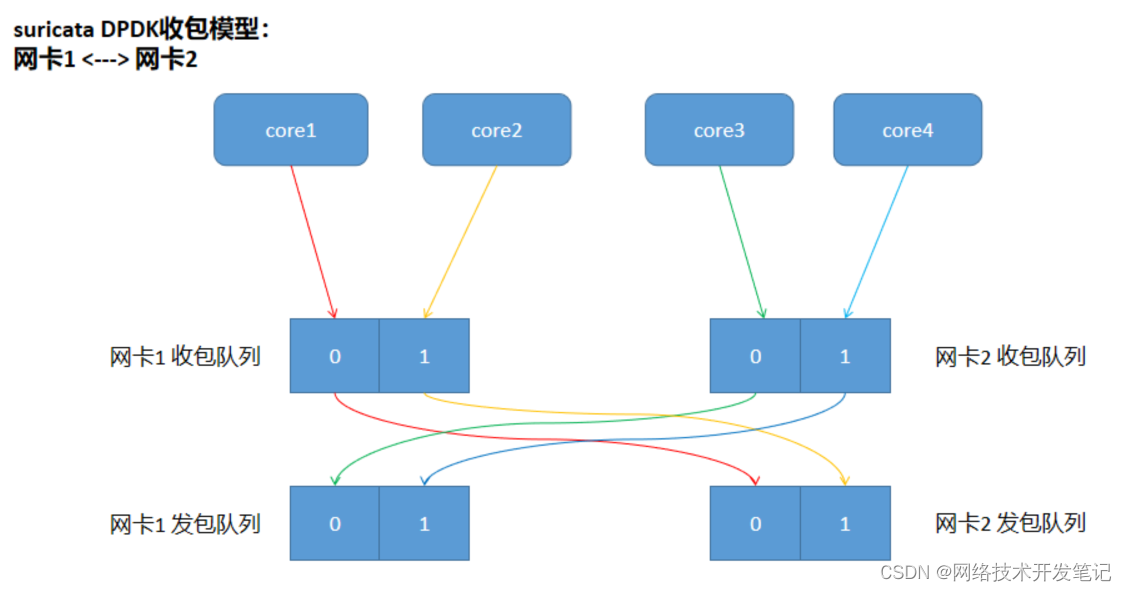

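Reading the code shows that Suricata's DPDK RX/TX thread model works as follows:

Suricata assigns receive threads per NIC. In IPS mode, the two NICs of a pair must be configured with the same number of receive threads. In the figure above, NIC 1 and NIC 2 form a pair and each has two receive threads: NIC 1's threads are pinned to CPU core1 and core2, and NIC 2's threads to core3 and core4. The receive thread on core1 reads packets from queue 0 of NIC 1 and transmits them on queue 0 of NIC 2; the arrows in the figure show the RX/TX relationships of the other receive threads.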
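The mapping described above can be written down as a small table. The sketch below only illustrates this article's two-NIC example: `Worker` and `build_workers` are names made up for the illustration, not Suricata identifiers, and the core numbering simply follows the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Worker:
    core: int       # CPU core the receive thread is pinned to
    rx_port: str    # NIC the thread polls
    rx_queue: int   # RX queue on that NIC
    tx_port: str    # paired NIC the thread forwards to
    tx_queue: int   # TX queue on the paired NIC (same index as rx_queue)


def build_workers(pairs, threads, first_core=1):
    """Build the receive-thread table for paired ports (hypothetical helper)."""
    workers = []
    core = first_core
    for port_a, port_b in pairs:
        # Each port of a pair gets `threads` receive threads;
        # RX queue i of one port forwards to TX queue i of its peer.
        for rx, tx in ((port_a, port_b), (port_b, port_a)):
            for queue in range(threads):
                workers.append(Worker(core, rx, queue, tx, queue))
                core += 1
    return workers


if __name__ == "__main__":
    for w in build_workers([("nic1", "nic2")], threads=2):
        print(f"core{w.core}: rx {w.rx_port}/q{w.rx_queue} -> tx {w.tx_port}/q{w.tx_queue}")
```

Every (TX port, TX queue) combination appears exactly once in the table, which is why no two threads ever share a TX queue in this model.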

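A few observations on this model:

1) Each CPU core handles only a single queue of a single NIC, which helps performance but consumes more CPU cores.

2) With RSS configured on the NICs, packets of the same 5-tuple session are handled on the same-numbered queue of each NIC. Because each NIC's queue is served by its own receive thread, the two directions of a session are processed by different threads, so the session state is accessed concurrently and needs mutual exclusion.

3) Each TX queue is used by exactly one receive thread (one CPU core), so transmission needs no locking. However, this scheme only suits transparent forwarding between paired ports; it is a poor fit for L2/L3 forwarding.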
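Point 2) can be illustrated with a toy model. The sketch assumes a symmetric RSS hash, meaning both directions of a session hash to the same value, and uses a simple CRC as a stand-in for the hash a real NIC computes. `rss_queue`, `cores_for_session` and the addresses are invented for the example; the core layout is the one from the figure.

```python
import zlib


def rss_queue(src_ip, src_port, dst_ip, dst_port, proto, n_queues):
    """Toy symmetric RSS: sort the two endpoints so both directions hash alike."""
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{a}|{b}|{proto}".encode()
    return zlib.crc32(key) % n_queues


# Core layout from the article's figure: (NIC, RX queue) -> CPU core
CORE_OF = {("nic1", 0): 1, ("nic1", 1): 2, ("nic2", 0): 3, ("nic2", 1): 4}


def cores_for_session(client, server, proto="tcp", n_queues=2):
    """Return the cores that see the forward and reverse direction of one session."""
    # Client -> server packets arrive on nic1, the replies arrive on nic2.
    q_fwd = rss_queue(*client, *server, proto, n_queues)
    q_rev = rss_queue(*server, *client, proto, n_queues)
    return CORE_OF[("nic1", q_fwd)], CORE_OF[("nic2", q_rev)]


if __name__ == "__main__":
    fwd, rev = cores_for_session(("10.0.0.1", 40000), ("10.0.0.2", 443))
    print(f"forward direction on core{fwd}, reverse direction on core{rev}")
```

Both directions land on the same queue index but on different NICs, and therefore on two different receive threads, which is where the need for locking on shared session state comes from.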

2. DPDK configuration:

1) DPDK capture configuration:

    dpdk:
      eal-params:
        proc-type: primary

      # DPDK capture support
      # RX queues (and TX queues in IPS mode) are assigned to cores in 1:1 ratio
      interfaces:
        - interface: 0000:0b:00.0 # PCIe address of the NIC port
          # Threading: possible values are either "auto" or number of threads
          # - auto takes all cores
          # in IPS mode it is required to specify the number of cores and the numbers on both interfaces must match
          threads: 2
          promisc: true # promiscuous mode - capture all packets
          multicast: true # enables also detection on multicast packets
          checksum-checks: true # if Suricata should validate checksums
          checksum-checks-offload: false # if possible offload checksum validation to the NIC (saves Suricata resources)
          mtu: 1500 # Set MTU of the device in bytes
          # rss-hash-functions: 0x0 # advanced configuration option, use only if you use untested NIC card and experience RSS warnings,
          # For `rss-hash-functions` use hexadecimal 0x01ab format to specify RSS hash function flags - DumpRssFlags can help (you can see output if you use -vvv option during Suri startup)
          # setting auto to rss_hf sets the default RSS hash functions (based on IP addresses)

          # To approximately calculate required amount of space (in bytes) for interface's mempool: mempool-size * mtu
          # Make sure you have enough allocated hugepages.
          # The optimum size for the packet memory pool (in terms of memory usage) is power of two minus one: n = (2^q - 1)
          mempool-size: 65535 # The number of elements in the mbuf pool

          # Mempool cache size must be lower or equal to:
          # - RTE_MEMPOOL_CACHE_MAX_SIZE (by default 512) and
          # - "mempool-size / 1.5"
          # It is advised to choose cache_size to have "mempool-size modulo cache_size == 0".
          # If this is not the case, some elements will always stay in the pool and will never be used.
          # The cache can be disabled if the cache_size argument is set to 0, can be useful to avoid losing objects in cache
          # If the value is empty or set to "auto", Suricata will attempt to set cache size of the mempool to a value
          # that matches the previously mentioned recommendations
          mempool-cache-size: 257
          rx-descriptors: 1024
          tx-descriptors: 1024
          #
          # IPS mode for Suricata works in 3 modes - none, tap, ips
          # - none: IDS mode only - disables IPS functionality (does not further forward packets)
          # - tap: forwards all packets and generates alerts (omits DROP action) This is not DPDK TAP
          # - ips: the same as tap mode but it also drops packets that are flagged by rules to be dropped
          copy-mode: ips
          copy-iface: 0000:13:00.0 # or PCIe address of the second interface

        - interface: 0000:13:00.0
          threads: 2
          promisc: true
          multicast: true
          checksum-checks: true
          checksum-checks-offload: false
          mtu: 1500
          #rss-hash-functions: auto
          mempool-size: 65535
          mempool-cache-size: 257
          rx-descriptors: 1024
          tx-descriptors: 1024
          copy-mode: ips
          copy-iface: 0000:0b:00.0
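The mempool sizing rules stated in the configuration comments are easy to check mechanically. The following is a rough sketch, not Suricata code: `check_mempool` is a hypothetical helper, and the limit of 512 is the default `RTE_MEMPOOL_CACHE_MAX_SIZE` quoted in those comments.

```python
RTE_MEMPOOL_CACHE_MAX_SIZE = 512  # default value quoted in the config comments


def check_mempool(mempool_size, cache_size, mtu):
    """Return (problems, approx_bytes) for one interface's mempool settings."""
    problems = []
    if cache_size > RTE_MEMPOOL_CACHE_MAX_SIZE:
        problems.append("cache size exceeds RTE_MEMPOOL_CACHE_MAX_SIZE")
    if cache_size > mempool_size / 1.5:
        problems.append("cache size exceeds mempool-size / 1.5")
    # A cache size of 0 disables the cache, so the modulo rule does not apply.
    if cache_size and mempool_size % cache_size != 0:
        problems.append("mempool-size is not a multiple of the cache size")
    # Recommended pool size is 2^q - 1, i.e. size + 1 is a power of two.
    if (mempool_size + 1) & mempool_size != 0:
        problems.append("mempool-size is not of the form 2^q - 1")
    # Approximation from the comments: mempool-size * mtu bytes.
    return problems, mempool_size * mtu


if __name__ == "__main__":
    problems, approx_bytes = check_mempool(65535, 257, 1500)
    print(problems or "no problems found", f"~{approx_bytes / 2**20:.0f} MiB")
```

For the values used in this article (65535, 257, 1500) the list of problems is empty, since 65535 = 255 × 257, and the estimate is 98,302,500 bytes per interface, which has to fit into the allocated hugepages.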

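The key settings are:

- interface

The PCIe address of the capture port.

- threads

The number of receive threads for the port. Suricata configures the same number of queues on the port. The value must match the number of receive threads of the port named by copy-iface.

- copy-mode

The packet forwarding mode. There are currently three: none (no forwarding), tap (a purely transparent bridge that never drops packets), and ips (drops packets as directed by the configured rules).

- copy-iface

The PCIe address of the port that packets are forwarded to.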
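The pairing constraints on threads, copy-mode and copy-iface can be checked before starting Suricata. This is a minimal sketch, assuming the interface entries have already been loaded into plain dictionaries (for example by a YAML parser); `check_pairs` is a hypothetical helper and not part of Suricata.

```python
def check_pairs(interfaces):
    """Return a list of pairing problems for dpdk interface entries."""
    by_addr = {entry["interface"]: entry for entry in interfaces}
    problems = []
    for entry in interfaces:
        if entry.get("copy-mode", "none") == "none":
            continue  # IDS only: nothing is forwarded, so no peer is needed
        name = entry["interface"]
        peer = by_addr.get(entry.get("copy-iface"))
        if peer is None:
            problems.append(f"{name}: copy-iface is not a configured interface")
        elif peer.get("threads") != entry.get("threads"):
            problems.append(f"{name}: threads differs from {peer['interface']}")
    return problems


if __name__ == "__main__":
    example = [
        {"interface": "0000:0b:00.0", "threads": 2,
         "copy-mode": "ips", "copy-iface": "0000:13:00.0"},
        {"interface": "0000:13:00.0", "threads": 2,
         "copy-mode": "ips", "copy-iface": "0000:0b:00.0"},
    ]
    print(check_pairs(example) or "pairing looks consistent")
```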

2) CPU affinity configuration:

    threading:
      set-cpu-affinity: yes
      # Tune cpu affinity of threads. Each family of threads can be bound
      # to specific CPUs.
      #
      # These 2 apply to the all runmodes:
      # management-cpu-set is used for flow timeout handling, counters
      # worker-cpu-set is used for 'worker' threads
      #
      # Additionally, for autofp these apply:
      # receive-cpu-set is used for capture threads
      # verdict-cpu-set is used for IPS verdict threads
      #
      cpu-affinity:
        - management-cpu-set:
            cpu: [ 0 ] # include only these CPUs in affinity settings
        - receive-cpu-set:
            cpu: [ 0 ] # include only these CPUs in affinity settings
        - worker-cpu-set:
            cpu: [ 1,2,3,4 ]
            mode: "exclusive"
            # Use explicitly 3 threads and don't compute number by using
            # detect-thread-ratio variable:
            # threads: 3
            prio:
              low: [ 0 ]
              medium: [ "1-2" ]
              high: [ 3 ]
              default: "medium"

The key settings are:

- set-cpu-affinity

The switch that enables pinning threads to CPUs.

- worker-cpu-set

The CPUs that the receive threads are pinned to.
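A quick sanity check is that worker-cpu-set lists at least as many CPUs as there are receive threads, so that each receive thread can have its own core as in the model described earlier. The sketch below is illustrative only: `expand_cpu_set` and `enough_worker_cpus` are hypothetical helpers that handle integers and "a-b" range strings as they appear in the sample configuration; other values such as "all" are not handled.

```python
def expand_cpu_set(entries):
    """Expand a list such as [1, 2, "3-4"] into a sorted list of CPU numbers."""
    cpus = set()
    for entry in entries:
        if isinstance(entry, int):
            cpus.add(entry)
        else:
            # "a-b" is an inclusive range; a bare "a" is a single CPU.
            low, _, high = str(entry).partition("-")
            cpus.update(range(int(low), int(high or low) + 1))
    return sorted(cpus)


def enough_worker_cpus(worker_cpu_set, threads_per_interface):
    """True if there is at least one worker CPU per receive thread."""
    return len(expand_cpu_set(worker_cpu_set)) >= sum(threads_per_interface)


if __name__ == "__main__":
    # Two interfaces with two threads each, pinned to CPUs 1-4 as in this article.
    print(enough_worker_cpus([1, 2, 3, 4], [2, 2]))
```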

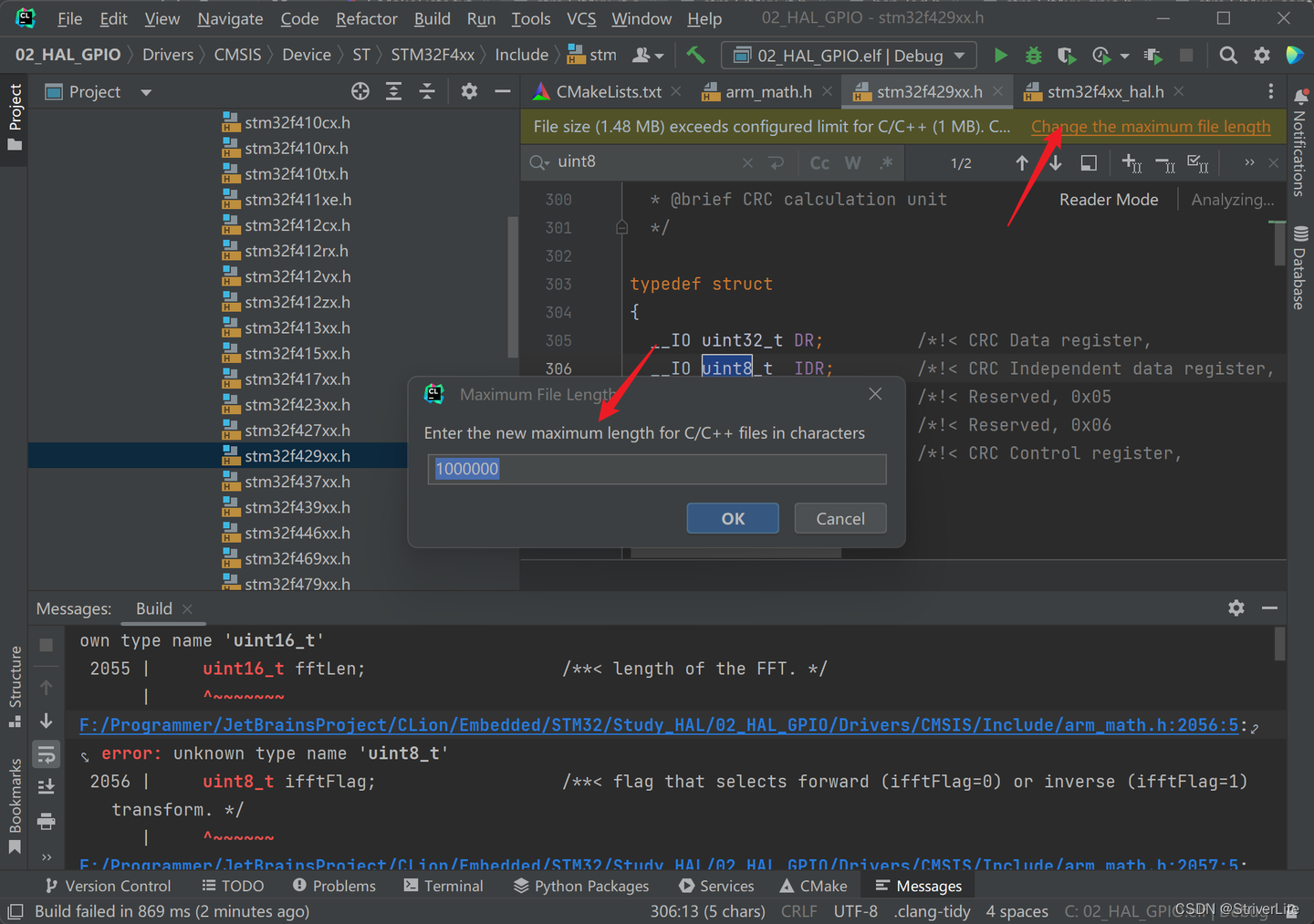

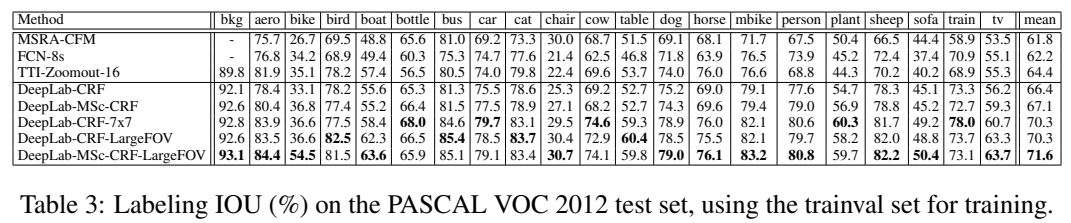

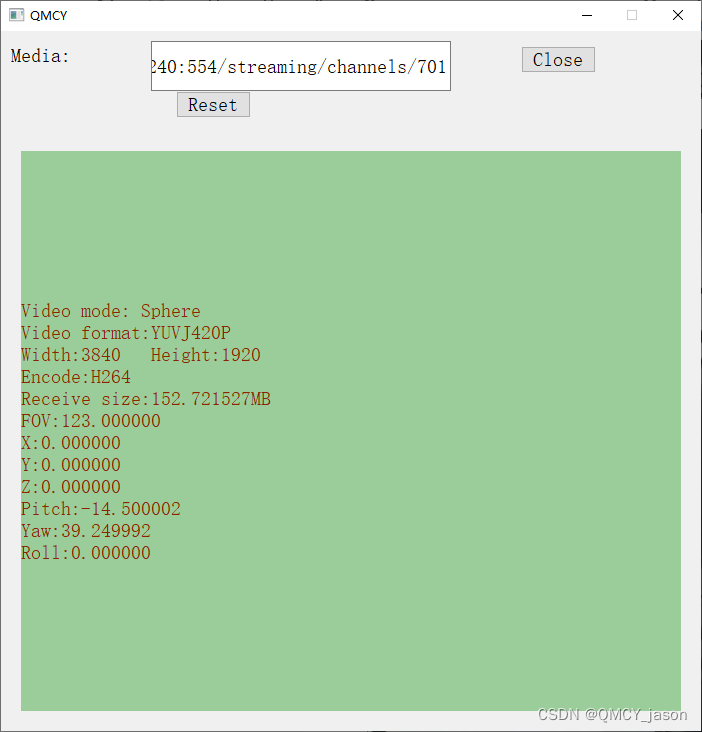

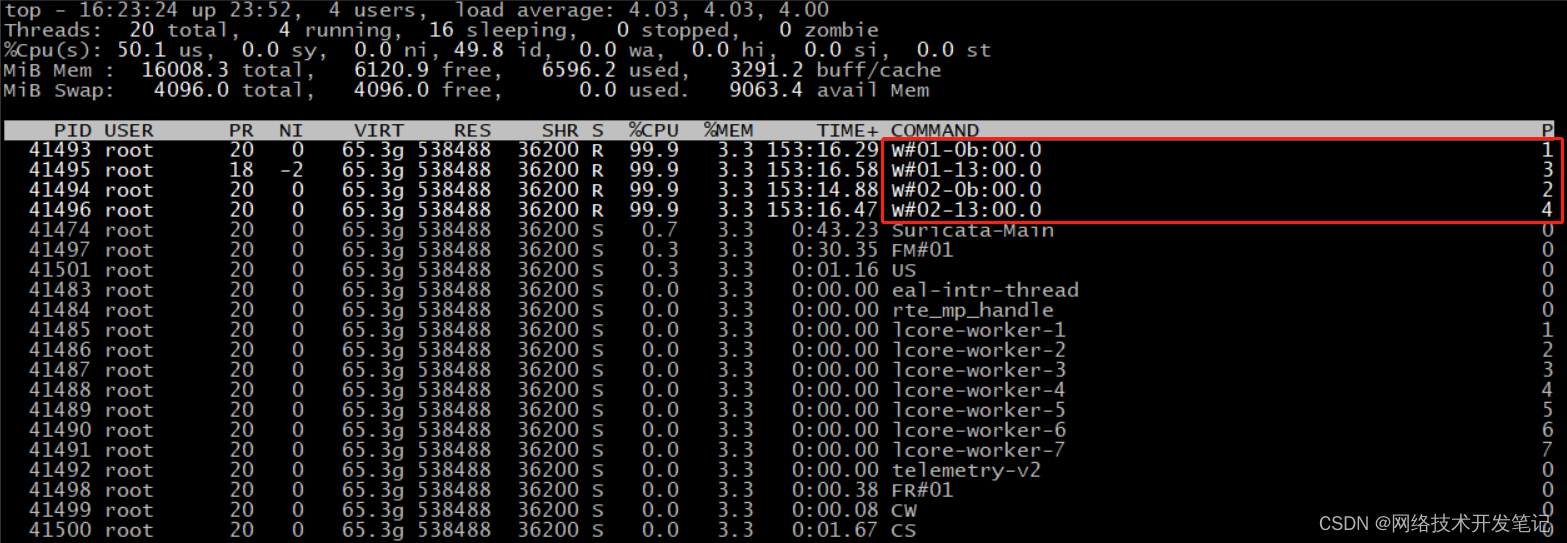

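Start the suricata process, run top -H -p $(pidof suricata), and enable the Last Used Cpu column. As the screenshots above show, Suricata started four receive threads, named in the format w#<queue number>-<abbreviated PCIe address>, and they are pinned to CPUs 1, 2, 3 and 4 respectively.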
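The same information can be read without top. On Linux, field 39 of `/proc/<pid>/task/<tid>/stat` is the CPU a thread last ran on. The sketch below is a small assumption-laden helper, not part of Suricata: `parse_stat_line` and `thread_cpus` are names chosen for this example.

```python
import os


def parse_stat_line(line):
    """Return (thread_name, last_used_cpu) from a /proc/<pid>/task/<tid>/stat line."""
    # The name is wrapped in parentheses and may itself contain spaces or ')',
    # so locate the first '(' and the last ')'.
    start = line.index("(")
    end = line.rindex(")")
    name = line[start + 1:end]
    rest = line[end + 1:].split()
    # rest[0] is field 3 (state), so field 39 (processor) is rest[36].
    return name, int(rest[36])


def thread_cpus(pid):
    """List (thread_name, last_used_cpu) for every thread of a process (Linux only)."""
    result = []
    task_dir = f"/proc/{pid}/task"
    for tid in sorted(os.listdir(task_dir), key=int):
        with open(f"{task_dir}/{tid}/stat") as stat_file:
            result.append(parse_stat_line(stat_file.read()))
    return result
```

Calling `thread_cpus(pid)` with the PID reported by `pidof suricata` returns one entry per thread; with affinity enabled, the receive threads should keep reporting the CPUs listed in worker-cpu-set.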

That wraps up Suricata's DPDK packet capture model and its configuration. The next article will look at the source code behind Suricata's DPDK packet capture.