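Android WebRTC + SRS/ZLM Video Calls (4): Pushing a Stream from Android to SRS/ZLMediaKit with WebRTC

Anxious notes from someone pushing thirty

Picking up from the previous chapter, this post records how Android uses WebRTC to push a stream to SRS or ZLMediaKit. If you want low-latency, good-quality live streaming from an Android device, WebRTC, SRS, and ZLMediaKit are the three tools to look at.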

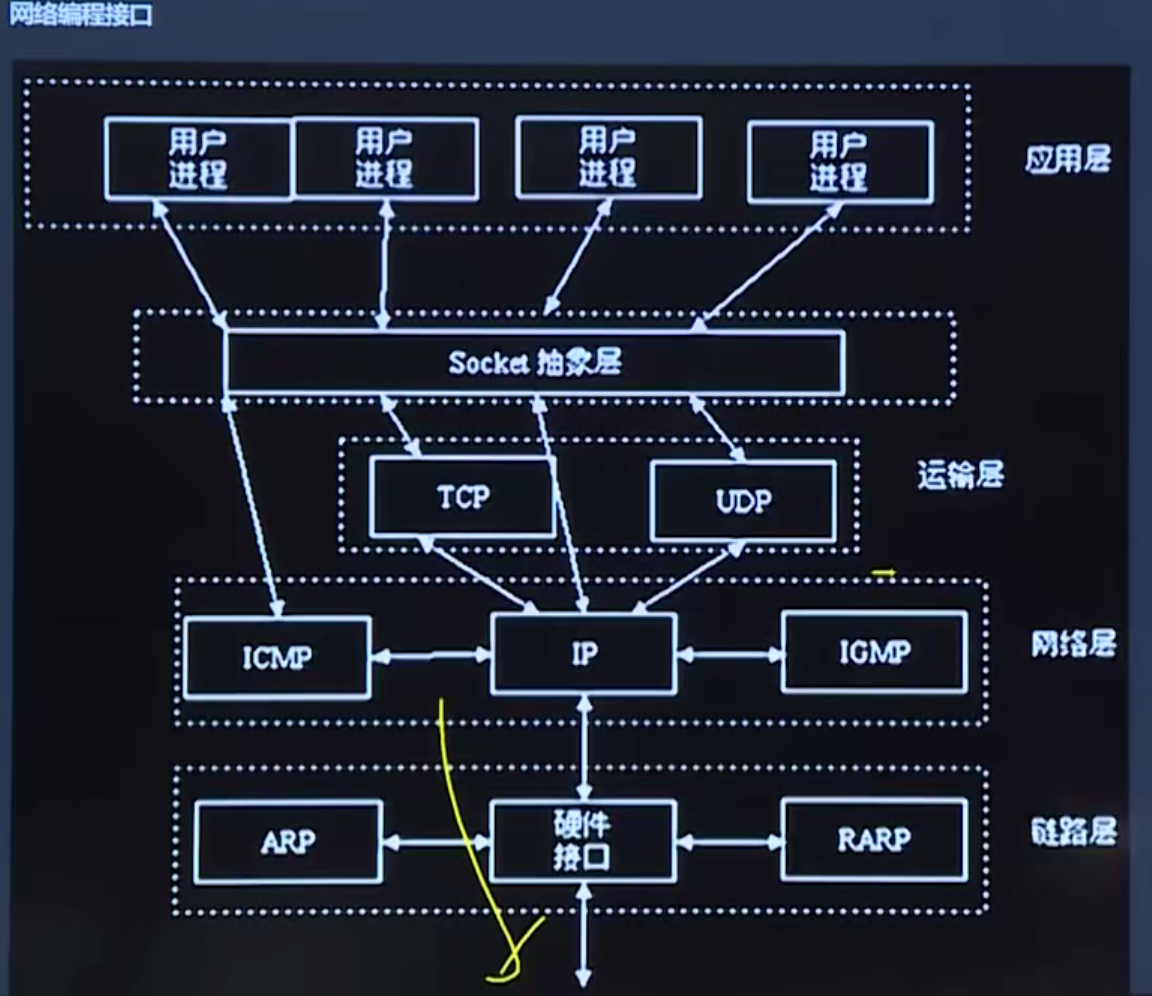

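WebRTC is an open-source technology for real-time communication built on standard web technologies. It was designed for peer-to-peer (P2P) use, and it also works well for publishing and playing live streams. SRS (Simple Realtime Server, originally named Simple RTMP Server) and ZLMediaKit are both capable open-source media servers with built-in WebRTC support.

Combining the three lets you push audio and video from an Android device quickly, with good quality and low latency. WebRTC media is also encrypted in transit (DTLS-SRTP), and in direct P2P calls it reduces the load on server bandwidth. Keep in mind that when publishing to SRS or ZLMediaKit, as in this chapter, the media still passes through the server.

There is much more to real-time streaming than WebRTC, SRS, and ZLMediaKit. If you are interested, follow my column; I write from a beginner's point of view so that we can compare notes and learn from each other.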

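The code outline ChatGPT suggested

Hands-on

As the AI's answer shows, it still struggles with more complex logic for now, but it is far more convenient than searching Google and Baidu yourself. Since the answer can't be copied and pasted as-is, we'll follow the AI's outline and write the wrapper code ourselves.

The cn.zhoulk:ZLMediaKit-Android:x.y.z dependency the AI suggested can't be found, the GitHub repository it pointed to returns 404, and even the webrtc-android-codelab project URL and the official WebRTC documentation URL it gave don't open. So we drop those suggestions and rely on our own experience.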

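Android code

1. Create the project

Here we create a Compose Activity, which is also a chance to see how smooth writing UI with Compose in Kotlin feels.

2. Add dependencies

First add the following dependencies to your build.gradle file: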

dependencies {
    //...
    // WebRTC
    implementation 'org.webrtc:google-webrtc:1.0.32006'
    // Networking: https://github.com/liangjingkanji/Net
    implementation "org.jetbrains.kotlinx:kotlinx-coroutines-core:1.6.0" // coroutines (pick your own version)
    implementation 'org.jetbrains.kotlinx:kotlinx-coroutines-android:1.6.0'
    implementation 'com.squareup.okhttp3:okhttp:4.10.0' // requires OkHttp 4 or later
    implementation 'com.github.liangjingkanji:Net:3.5.3'
    implementation 'com.google.code.gson:gson:2.9.1'
    implementation 'com.github.getActivity:GsonFactory:5.2'
    implementation "org.jetbrains.kotlin:kotlin-stdlib:1.7.0"
    implementation 'com.github.liangjingkanji:Serialize:1.2.3'
    // Permission request library
    implementation 'com.github.getActivity:XXPermissions:18.0'
}

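Because WebRTC publishing, playback, and the SDP exchange all depend on the network, we add the networking dependencies and a permission-request library. Pay attention to settings.gradle as well: add the Aliyun Maven mirrors so the dependencies can actually be downloaded. If they still won't load, pull my demo (WebRTC_Compose_Demo); I will upload the WebRTC AAR along with it later.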

pluginManagement {
    repositories {
        google()
        mavenCentral()
        gradlePluginPortal()
    }
}
dependencyResolutionManagement {
    repositoriesMode.set(RepositoriesMode.FAIL_ON_PROJECT_REPOS)
    repositories {
        google()
        mavenCentral()
        maven { url 'https://jitpack.io' }
        maven { url 'https://maven.aliyun.com/repository/public/' }
        maven { url 'https://maven.aliyun.com/repository/google/' }
        maven { url 'https://maven.aliyun.com/repository/jcenter' }
    }
}
rootProject.name = "WebRTC-Compose-Demo"
include ':app'

Remember to declare the required permissions:

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools">

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.CAMERA" />

<uses-feature android:name="android.hardware.camera" />

<uses-feature android:name="android.hardware.camera.level.full" android:required="false" />

<uses-feature android:name="android.hardware.camera.autofocus" android:required="false" />

<application

android:allowBackup="true"

android:dataExtractionRules="@xml/data_extraction_rules"

android:fullBackupContent="@xml/backup_rules"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:networkSecurityConfig="@xml/network_security_config"

android:requestLegacyExternalStorage="true"

android:supportsRtl="true"

android:theme="@style/Theme.WebRTCComposeDemo"

tools:targetApi="31">

<meta-data

android:name="ScopedStorage"

android:value="true" />

<activity

android:name=".MainActivity"

android:exported="true"

android:label="@string/app_name"

android:theme="@style/Theme.WebRTCComposeDemo">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

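3. Build the WebRTC utility class

Wrap the WebRTC push/pull logic in a utility class so it is easy to reuse later. The code has few comments; for background, see the expert's write-up (Introduction to WebRTC). The class also relies on a few helpers that are not listed in this article (CameraUtil, ProxyVideoSink, SdpBean, FLogUtil).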
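The utility class parses the server's reply into an SdpBean, which the article does not list. Here is a minimal sketch of what such a type might look like, assuming the server replies with JSON that carries a numeric code and the answer SDP. Only code and sdp are referenced by the article's code; the type and id fields, the isUsableAnswer helper, and the rule that code 0 means success are assumptions for illustration.

```kotlin
// Hypothetical shape of the signaling response. Only `code` and `sdp` are used
// by the article's code; `type` and `id` are assumed extra fields.
data class SdpBean(
    val code: Int = 0,
    val sdp: String? = null,
    val type: String? = null,
    val id: String? = null
)

/**
 * Hypothetical helper: true when the reply looks like an accepted offer,
 * assuming code 0 means success and the answer SDP must be non-empty.
 */
fun SdpBean.isUsableAnswer(): Boolean = code == 0 && !sdp.isNullOrEmpty()
```

All fields have defaults, so a reply that omits some of them can still be represented by this class.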

/**

* Created by 玉念聿辉.

* Use: WebRTC push/pull streaming utility class

* Date: 2023/5/9

* Time: 11:23

*/

class WebRTCUtil(context: Context) : PeerConnection.Observer,

SdpObserver {

private val context: Context

private var eglBase: EglBase? = null

private var playUrl: String? = null

private var peerConnection: PeerConnection? = null

private var surfaceViewRenderer: SurfaceViewRenderer? = null

private var peerConnectionFactory: PeerConnectionFactory? = null

private var audioSource: AudioSource? = null

private var videoSource: VideoSource? = null

private var localAudioTrack: AudioTrack? = null

private var localVideoTrack: VideoTrack? = null

private var captureAndroid: VideoCapturer? = null

private var surfaceTextureHelper: SurfaceTextureHelper? = null

private var isShowCamera = false

private var isPublish = false // true = push (publish), false = pull (play)

private var reConnCount = 0

fun create(

eglBase: EglBase?,

surfaceViewRenderer: SurfaceViewRenderer?,

playUrl: String?,

callBack: WebRtcCallBack?

) {

create(eglBase, surfaceViewRenderer, false, playUrl, callBack)

}

fun create(

eglBase: EglBase?,

surfaceViewRenderer: SurfaceViewRenderer?,

isPublish: Boolean,

playUrl: String?,

callBack: WebRtcCallBack?

) {

this.eglBase = eglBase

this.surfaceViewRenderer = surfaceViewRenderer

this.callBack = callBack

this.playUrl = playUrl

this.isPublish = isPublish

init()

}

fun create(

eglBase: EglBase?,

surfaceViewRenderer: SurfaceViewRenderer?,

isPublish: Boolean,

isShowCamera: Boolean,

playUrl: String?,

callBack: WebRtcCallBack?

) {

this.eglBase = eglBase

this.surfaceViewRenderer = surfaceViewRenderer

this.callBack = callBack

this.playUrl = playUrl

this.isPublish = isPublish

this.isShowCamera = isShowCamera

init()

}

private fun init() {

peerConnectionFactory = getPeerConnectionFactory(context)

// Create the WebRTC PeerConnection by calling createPeerConnection() on the PeerConnectionFactory

Logging.enableLogToDebugOutput(Logging.Severity.LS_NONE)

peerConnection = peerConnectionFactory!!.createPeerConnection(config, this)

// Pull (play): receive-only audio and video

if (!isPublish) {

peerConnection!!.addTransceiver(

MediaStreamTrack.MediaType.MEDIA_TYPE_AUDIO,

RtpTransceiver.RtpTransceiverInit(RtpTransceiver.RtpTransceiverDirection.RECV_ONLY)

)

peerConnection!!.addTransceiver(

MediaStreamTrack.MediaType.MEDIA_TYPE_VIDEO,

RtpTransceiver.RtpTransceiverInit(RtpTransceiver.RtpTransceiverDirection.RECV_ONLY)

)

}

// Push (publish): send-only audio and video

else {

peerConnection!!.addTransceiver(

MediaStreamTrack.MediaType.MEDIA_TYPE_AUDIO,

RtpTransceiver.RtpTransceiverInit(RtpTransceiver.RtpTransceiverDirection.SEND_ONLY)

)

peerConnection!!.addTransceiver(

MediaStreamTrack.MediaType.MEDIA_TYPE_VIDEO,

RtpTransceiver.RtpTransceiverInit(RtpTransceiver.RtpTransceiverDirection.SEND_ONLY)

)

// Enable WebRTC-based echo cancellation and noise suppression

WebRtcAudioUtils.setWebRtcBasedAcousticEchoCanceler(true)

WebRtcAudioUtils.setWebRtcBasedNoiseSuppressor(true)

// Add the audio track

audioSource = peerConnectionFactory!!.createAudioSource(createAudioConstraints())

localAudioTrack = peerConnectionFactory!!.createAudioTrack(AUDIO_TRACK_ID, audioSource)

localAudioTrack!!.setEnabled(true)

peerConnection!!.addTrack(localAudioTrack)

// Add the video track

if (isShowCamera) {

captureAndroid = CameraUtil.createVideoCapture(context)

surfaceTextureHelper =

SurfaceTextureHelper.create("CameraThread", eglBase!!.eglBaseContext)

videoSource = peerConnectionFactory!!.createVideoSource(false)

captureAndroid!!.initialize(

surfaceTextureHelper,

context,

videoSource!!.capturerObserver

)

captureAndroid!!.startCapture(VIDEO_RESOLUTION_WIDTH, VIDEO_RESOLUTION_HEIGHT, FPS)

localVideoTrack = peerConnectionFactory!!.createVideoTrack(VIDEO_TRACK_ID, videoSource)

localVideoTrack!!.setEnabled(true)

if (surfaceViewRenderer != null) {

val videoSink = ProxyVideoSink()

videoSink.setTarget(surfaceViewRenderer)

localVideoTrack!!.addSink(videoSink)

}

peerConnection!!.addTrack(localVideoTrack)

}

}

peerConnection!!.createOffer(this, MediaConstraints())

}

fun destroy() {
    callBack = null
    peerConnection?.dispose()
    peerConnection = null
    audioSource?.dispose()
    audioSource = null
    // Stop the camera before releasing the capturer
    captureAndroid?.let { capturer ->
        try {
            capturer.stopCapture()
        } catch (e: InterruptedException) {
            Thread.currentThread().interrupt()
        }
        capturer.dispose()
    }
    captureAndroid = null
    videoSource?.dispose()
    videoSource = null
    surfaceTextureHelper?.dispose()
    surfaceTextureHelper = null
    surfaceViewRenderer?.clearImage()
    peerConnectionFactory?.dispose()
    peerConnectionFactory = null
}

/**

* Configure the audio constraints

* @return

*/

private fun createAudioConstraints(): MediaConstraints {

val audioConstraints = MediaConstraints()

audioConstraints.mandatory.add(

MediaConstraints.KeyValuePair(AUDIO_ECHO_CANCELLATION_CONSTRAINT, "true")

)

audioConstraints.mandatory.add(

MediaConstraints.KeyValuePair(AUDIO_AUTO_GAIN_CONTROL_CONSTRAINT, "false")

)

audioConstraints.mandatory.add(

MediaConstraints.KeyValuePair(AUDIO_HIGH_PASS_FILTER_CONSTRAINT, "false")

)

audioConstraints.mandatory.add(

MediaConstraints.KeyValuePair(AUDIO_NOISE_SUPPRESSION_CONSTRAINT, "true")

)

return audioConstraints

}

/**
 * Exchange SDP: POST the local offer to the server and apply the answer it returns.
 */
private fun openWebRtc(sdp: String?) {
    // Earlier version based on the Net library, kept for reference:
    // scopeNet {
    //     val mediaType: MediaType = "application/json".toMediaTypeOrNull()!!
    //     var mBody: RequestBody? = RequestBody.create(mediaType, sdp!!)
    //     var result = Post<SdpBean>(playUrl!!) {
    //         body = mBody
    //         addHeader("Content-Type", "application/json")
    //     }.await()
    //     reConnCount = 0
    //     setRemoteSdp(result.sdp)
    //     FLogUtil.e(TAG, "SDP exchange: ${Gson().toJson(result)}")
    // }.catch {
    //     FLogUtil.e(TAG, "SDP exchange failed: $it")
    //     reConnCount++
    //     if (reConnCount < 50) {
    //         Timer().schedule(300) { // task to run
    //             openWebRtc(sdp)
    //         }
    //     }
    // }
    reConnCount++

    // Retry every 300 ms and give up after 50 attempts (the same limit as the Net-based version above)
    fun retryOrFail() {
        if (reConnCount < 50) {
            Timer().schedule(300) { openWebRtc(sdp) }
        } else {
            callBack?.onFail()
        }
    }

    val client: OkHttpClient = OkHttpClient.Builder()
        .connectTimeout(60, TimeUnit.SECONDS)
        // Accepts any host name. Only suitable for a test server with a
        // self-signed certificate; remove it for production use.
        .hostnameVerifier { _, _ -> true }.build()
    val mediaType: MediaType = "application/json".toMediaTypeOrNull()!!
    val body = RequestBody.create(mediaType, sdp!!)
    val request: Request = Request.Builder()
        .url(playUrl!!)
        .method("POST", body)
        .addHeader("Content-Type", "application/json")
        .build()
    val call = client.newCall(request)
    call.enqueue(object : Callback {
        override fun onFailure(call: Call, e: IOException) {
            FLogUtil.e(TAG, "SDP exchange failed, reConnCount:$reConnCount error: ${e.message}")
            retryOrFail()
        }

        @Throws(IOException::class)
        override fun onResponse(call: Call, response: Response) {
            val result = response.body!!.string()
            FLogUtil.e(TAG, "SDP exchange: $result")
            // A malformed reply is treated like a failed attempt
            val sdpBean = runCatching { Gson().fromJson(result, SdpBean::class.java) }.getOrNull()
            if (sdpBean != null && sdpBean.code != 400 && !TextUtils.isEmpty(sdpBean.sdp)) {
                reConnCount = 0
                setRemoteSdp(sdpBean.sdp)
                callBack?.onSuccess()
            } else {
                retryOrFail()
            }
        }
    })
}

fun setRemoteSdp(sdp: String?) {

if (peerConnection != null) {

val remoteSdp = SessionDescription(SessionDescription.Type.ANSWER, sdp)

peerConnection!!.setRemoteDescription(this, remoteSdp)

}

}

interface WebRtcCallBack {

fun onSuccess()

fun onFail()

}

private var callBack: WebRtcCallBack? = null

init {

this.context = context.applicationContext

}

/**
 * Build the PeerConnectionFactory.
 */
private fun getPeerConnectionFactory(context: Context): PeerConnectionFactory {
    // 1. Initialize WebRTC once. A second initialize() call without field
    //    trials would reset the H264 high-profile trial set here.
    val initializationOptions: PeerConnectionFactory.InitializationOptions =
        PeerConnectionFactory.InitializationOptions.builder(context)
            .setEnableInternalTracer(true)
            .setFieldTrials("WebRTC-H264HighProfile/Enabled/")
            .createInitializationOptions()
    PeerConnectionFactory.initialize(initializationOptions)
    // 2. Codec factories: use the defaults
    val encoderFactory: VideoEncoderFactory = DefaultVideoEncoderFactory(
        eglBase!!.eglBaseContext,
        false, // enableIntelVp8Encoder
        true   // enableH264HighProfile
    )
    val decoderFactory: VideoDecoderFactory =
        DefaultVideoDecoderFactory(eglBase!!.eglBaseContext)
    // 3. Build the factory
    return PeerConnectionFactory.builder()
        .setOptions(PeerConnectionFactory.Options())
        .setAudioDeviceModule(JavaAudioDeviceModule.builder(context).createAudioDeviceModule())
        .setVideoEncoderFactory(encoderFactory)
        .setVideoDecoderFactory(decoderFactory)
        .createPeerConnectionFactory()
}

// Use Unified Plan: Plan B cannot be configured for receive-only audio/video

private val config: PeerConnection.RTCConfiguration

private get() {

val rtcConfig: PeerConnection.RTCConfiguration =

PeerConnection.RTCConfiguration(ArrayList())

// Disable CPU overuse detection so the resolution is not adapted automatically

rtcConfig.enableCpuOveruseDetection = false

// Use Unified Plan: Plan B cannot be configured for receive-only audio/video

rtcConfig.sdpSemantics = PeerConnection.SdpSemantics.UNIFIED_PLAN

return rtcConfig

}

override fun onCreateSuccess(sdp: SessionDescription) {

if (sdp.type === SessionDescription.Type.OFFER) {

// Set the offer as the local description, then send its SDP to the server

peerConnection!!.setLocalDescription(this, sdp)

if (!TextUtils.isEmpty(sdp.description)) {

reConnCount = 0

openWebRtc(sdp.description)

}

}

}

override fun onSetSuccess() {}

override fun onCreateFailure(error: String?) {}

override fun onSetFailure(error: String?) {}

override fun onSignalingChange(newState: PeerConnection.SignalingState?) {}

override fun onIceConnectionChange(newState: PeerConnection.IceConnectionState?) {}

override fun onIceConnectionReceivingChange(receiving: Boolean) {}

override fun onIceGatheringChange(newState: PeerConnection.IceGatheringState?) {}

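override fun onIceCandidate(candidate: IceCandidate?) {
    // Local candidates are not remote candidates, so they are not added back
    // to this connection. With SRS/ZLMediaKit the server's candidates arrive
    // in the answer SDP.
}

override fun onIceCandidatesRemoved(candidates: Array<IceCandidate?>?) {
    // Nothing to do: no remote candidates are managed by hand here.
}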

override fun onAddStream(stream: MediaStream?) {}

override fun onRemoveStream(stream: MediaStream?) {}

override fun onDataChannel(dataChannel: DataChannel?) {}

override fun onRenegotiationNeeded() {}

override fun onAddTrack(receiver: RtpReceiver, mediaStreams: Array<MediaStream?>?) {

val track: MediaStreamTrack = receiver.track()!!

if (track is VideoTrack) {

val remoteVideoTrack: VideoTrack = track

remoteVideoTrack.setEnabled(true)

if (surfaceViewRenderer != null && isShowCamera) {

val videoSink = ProxyVideoSink()

videoSink.setTarget(surfaceViewRenderer)

remoteVideoTrack.addSink(videoSink)

}

}

}

companion object {

private const val TAG = "WebRTCUtil"

const val VIDEO_TRACK_ID = "ARDAMSv0"

const val AUDIO_TRACK_ID = "ARDAMSa0"

private const val VIDEO_RESOLUTION_WIDTH = 1280

private const val VIDEO_RESOLUTION_HEIGHT = 720

private const val FPS = 30

private const val AUDIO_ECHO_CANCELLATION_CONSTRAINT = "googEchoCancellation"

private const val AUDIO_AUTO_GAIN_CONTROL_CONSTRAINT = "googAutoGainControl"

private const val AUDIO_HIGH_PASS_FILTER_CONSTRAINT = "googHighpassFilter"

private const val AUDIO_NOISE_SUPPRESSION_CONSTRAINT = "googNoiseSuppression"

}

}
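The class above posts the raw offer SDP as the request body, which matches the ZLMediaKit-style URL used later in this article. SRS's HTTP API is commonly called with a JSON body instead. Below is a hedged sketch of building such a body, assuming the field names api, streamurl, and sdp and the example URLs in the usage note; the function names are hypothetical, and all of this should be checked against the SRS version you run.

```kotlin
/** Escapes a string so it can be embedded in a JSON string literal. */
fun jsonEscape(s: String): String = buildString {
    for (c in s) when (c) {
        '"' -> append("\\\"")
        '\\' -> append("\\\\")
        '\n' -> append("\\n")
        '\r' -> append("\\r")
        '\t' -> append("\\t")
        else -> if (c < ' ') append("\\u%04x".format(c.code)) else append(c)
    }
}

/**
 * Hypothetical helper: builds a JSON publish request body.
 * The field names (api, streamurl, sdp) are assumptions about the SRS API.
 */
fun buildSrsPublishBody(apiUrl: String, streamUrl: String, offerSdp: String): String =
    """{"api":"${jsonEscape(apiUrl)}","streamurl":"${jsonEscape(streamUrl)}","sdp":"${jsonEscape(offerSdp)}"}"""
```

For example, buildSrsPublishBody("http://192.168.1.172:1985/rtc/v1/publish/", "webrtc://192.168.1.172/live/test", offer) would produce the string to POST in place of the raw SDP. The escaping step matters because an SDP contains CR/LF line breaks, which are not allowed unescaped inside a JSON string.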

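4. Draw the push preview page in MainActivity

The page is simple: an input box plus a button to start pushing, with a live camera preview below. Compose has no replacement for SurfaceViewRenderer yet, so we still define it in XML and load it with AndroidView. The implementation is as follows: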

<?xml version="1.0" encoding="utf-8"?>

<org.webrtc.SurfaceViewRenderer xmlns:android="http://schemas.android.com/apk/res/android"

android:id="@+id/surface_view"

android:layout_width="wrap_content"

android:layout_height="wrap_content">

</org.webrtc.SurfaceViewRenderer>

/**

* Created by 玉念聿辉.

* Use: WebRTC Demo

* Date: 2023/5/9

* Time: 11:23

*/

class MainActivity : ComponentActivity() {

private val permissionArray = arrayOf(

Manifest.permission.RECORD_AUDIO,

Manifest.permission.CAMERA,

Manifest.permission.WRITE_EXTERNAL_STORAGE,

Manifest.permission.READ_EXTERNAL_STORAGE

)

private var mEglBase: EglBase = EglBase.create()

private var webRtcUtil1: WebRTCUtil? = null

private var pushUrl =

mutableStateOf("https://192.168.1.172/index/api/webrtc?app=live&stream=test&type=push")

private var surfaceViewRenderer1: SurfaceViewRenderer? = null

/**

* Start pushing the stream

*/

private fun doPush() {

if (TextUtils.isEmpty(pushUrl.value)) {

Toast.makeText(this@MainActivity, "The push URL is empty!", Toast.LENGTH_SHORT).show()

return

}

if (webRtcUtil1 != null) {

webRtcUtil1!!.destroy()

}

webRtcUtil1 = WebRTCUtil(this@MainActivity)

webRtcUtil1!!.create(

mEglBase,

surfaceViewRenderer1,

isPublish = true,

isShowCamera = true,

playUrl = pushUrl.value,

callBack = object : WebRTCUtil.WebRtcCallBack {

override fun onSuccess() {}

override fun onFail() {}

})

}

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

FLogUtil.init(this, true) // initialize the logging tool

app = this // initialize the Net library

getPermissions() // check permissions

webRtcUtil1 = WebRTCUtil(this@MainActivity)

setContent {

WebRTCComposeDemoTheme {

Column(modifier = Modifier.fillMaxWidth()) {

// Push URL input box and the start-push button

Row(

modifier = Modifier.fillMaxWidth(),

verticalAlignment = Alignment.CenterVertically

) {

Box(

modifier = Modifier.weight(1f)

) {

TextField(

value = pushUrl.value,

onValueChange = {

pushUrl.value = it

},

textStyle = TextStyle(

color = Color(0xFF000000),

fontSize = 14.sp

), colors = TextFieldDefaults.textFieldColors(

backgroundColor = Color(0x00FFFFFF),

disabledIndicatorColor = Color.Transparent,

errorIndicatorColor = Color.Transparent,

focusedIndicatorColor = Color.Transparent,

unfocusedIndicatorColor = Color.Transparent

),

keyboardOptions = KeyboardOptions(keyboardType = KeyboardType.Text),

placeholder = {

Text(

text = "Enter the push URL",

style = TextStyle(

fontSize = 14.sp,

color = Color(0xffc0c4cc)

)

)

}

)

}

Box(

modifier = Modifier

.clickable {

doPush() // start pushing

}

.width(80.dp)

.background(

Color.White,

RoundedCornerShape(5.dp)

)

.border(

1.dp,

Color(0xFF000000),

shape = RoundedCornerShape(5.dp)

)

.padding(5.dp),

contentAlignment = Alignment.Center

) {

Text(text = "Push")

}

}

// Push preview area

Box(

modifier = Modifier

.fillMaxWidth()

.height(300.dp)

) {

surfaceViewRenderer1 = mSurfaceViewRenderer(mEglBase, webRtcUtil1!!)

AndroidView({ surfaceViewRenderer1!! }) { videoView ->
    // Resize the SurfaceViewRenderer to match the video's aspect ratio
    val screenSize = "480-640"
    val screenSizeS = screenSize.split("-")
    val screenSizeD = screenSizeS[0].toInt() / (screenSizeS[1].toInt() * 1.0)
    videoView.viewTreeObserver.addOnPreDrawListener {
        val width: Int = videoView.measuredWidth
        val height: Int = (screenSizeD * width).toInt()
        // Apply the computed height only when it changes, to avoid a relayout on every frame
        val layoutParams = videoView.layoutParams
        if (layoutParams.height != height) {
            layoutParams.height = height
            videoView.layoutParams = layoutParams
        }
        true
    }
}

}

}

}

}

}

/**

* Request permissions

*/

private fun getPermissions() {

XXPermissions.with(this@MainActivity)

.permission(permissionArray)

.request(object : OnPermissionCallback {

override fun onGranted(@NonNull permissions: List<String>, allGranted: Boolean) {

if (!allGranted) {

Toast.makeText(this@MainActivity, "Please grant the required permissions so the app works properly!", Toast.LENGTH_LONG)

.show()

return

}

}

override fun onDenied(@NonNull permissions: List<String>, doNotAskAgain: Boolean) {

if (doNotAskAgain) {

Toast.makeText(this@MainActivity, "Permission permanently denied; please grant it manually", Toast.LENGTH_LONG)

.show()

// If permanently denied, jump to the app's permission settings page

XXPermissions.startPermissionActivity(this@MainActivity, permissions)

} else {

Toast.makeText(this@MainActivity, "Failed to obtain permissions", Toast.LENGTH_LONG).show()

}

}

})

}

}

/**

* Compose has no replacement for SurfaceViewRenderer yet, so we still use the XML-defined view here

*/

@Composable

fun mSurfaceViewRenderer(mEglBase: EglBase, webRtcUtil1: WebRTCUtil): SurfaceViewRenderer {

val context = LocalContext.current

val surfaceViewRenderer = remember {

SurfaceViewRenderer(context).apply {

id = R.id.surface_view

}

}

// Makes the SurfaceViewRenderer follow the lifecycle of this composable

val lifecycleObserver = rememberMapLifecycleObserver(surfaceViewRenderer, mEglBase, webRtcUtil1)

val lifecycle = LocalLifecycleOwner.current.lifecycle

DisposableEffect(lifecycle) {

lifecycle.addObserver(lifecycleObserver)

onDispose {

lifecycle.removeObserver(lifecycleObserver)

}

}

return surfaceViewRenderer

}

@Composable

fun rememberMapLifecycleObserver(

surfaceViewRenderer: SurfaceViewRenderer,

mEglBase: EglBase, webRtcUtil1: WebRTCUtil

): LifecycleEventObserver =

remember(surfaceViewRenderer) {

LifecycleEventObserver { _, event ->

when (event) {

Lifecycle.Event.ON_CREATE -> {

surfaceViewRenderer.init(mEglBase.eglBaseContext, null)

surfaceViewRenderer.setScalingType(RendererCommon.ScalingType.SCALE_ASPECT_FILL)

surfaceViewRenderer.setEnableHardwareScaler(true)

surfaceViewRenderer.setZOrderMediaOverlay(true)

}

Lifecycle.Event.ON_START -> {

}

Lifecycle.Event.ON_RESUME -> {

}

Lifecycle.Event.ON_PAUSE -> {

}

Lifecycle.Event.ON_STOP -> {

}

Lifecycle.Event.ON_DESTROY -> {

webRtcUtil1.destroy()

}

else -> throw IllegalStateException()

}

}

}
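The preview-sizing code in MainActivity hard-codes the size string "480-640" and indexes into the split result without checks. The same arithmetic can be pulled into a small pure function that is easier to test. This is a sketch only: heightForWidth is a hypothetical name, and it mirrors the listing's calculation (first number divided by the second, multiplied by the view width).

```kotlin
/**
 * Hypothetical helper: parses a size string such as "480-640" and returns the
 * view height for the given view width, using first / second as the ratio.
 * Returns null when the string is malformed or a number is not positive.
 */
fun heightForWidth(size: String, viewWidth: Int): Int? {
    val parts = size.split("-")
    if (parts.size != 2) return null
    val first = parts[0].toIntOrNull() ?: return null
    val second = parts[1].toIntOrNull() ?: return null
    if (first <= 0 || second <= 0) return null
    val ratio = first / second.toDouble()
    return (ratio * viewWidth).toInt()
}
```

With a function like this, the pre-draw listener only has to compare the returned height with the current layout height and update it when they differ.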

That completes a simple WebRTC push demo. Run the code and check the result.
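The default push URL in MainActivity follows the pattern /index/api/webrtc?app=...&stream=...&type=push. Rather than typing it by hand, it can be assembled from its parts. Here is a minimal sketch: buildZlmWebRtcUrl is a hypothetical helper, and using type=play for pulling is an assumption that is not shown in this chapter.

```kotlin
import java.net.URLEncoder

/**
 * Hypothetical helper: builds a signaling URL in the same shape as the
 * article's default push URL. Query values are URL-encoded.
 */
fun buildZlmWebRtcUrl(baseUrl: String, app: String, stream: String, type: String): String {
    fun enc(value: String): String = URLEncoder.encode(value, "UTF-8")
    return "${baseUrl.trimEnd('/')}/index/api/webrtc" +
        "?app=${enc(app)}&stream=${enc(stream)}&type=${enc(type)}"
}
```

Calling buildZlmWebRtcUrl("https://192.168.1.172", "live", "test", "push") reproduces the default value used for pushUrl in MainActivity.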

That is the end of chapter four. The next one will cover how Android pulls streams from SRS and ZLMediaKit. Sorry for taking up your spare time.

THE END

Thanks for reading

Editor: 玉念聿辉