1: Introduction to vue-simple-uploader

vue-simple-uploader is a Vue upload plugin built on top of simple-uploader.js. Its features include, but are not limited to, the following:

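- Uploading single files, multiple files, and folders, including by drag and drop

- Pausing and resuming uploads

- Error handling

- "Instant upload": the client asks the server whether the file already exists and skips the transfer if it does

- Chunked uploads

- Progress reporting, estimated time remaining, automatic retry on error, and re-upload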

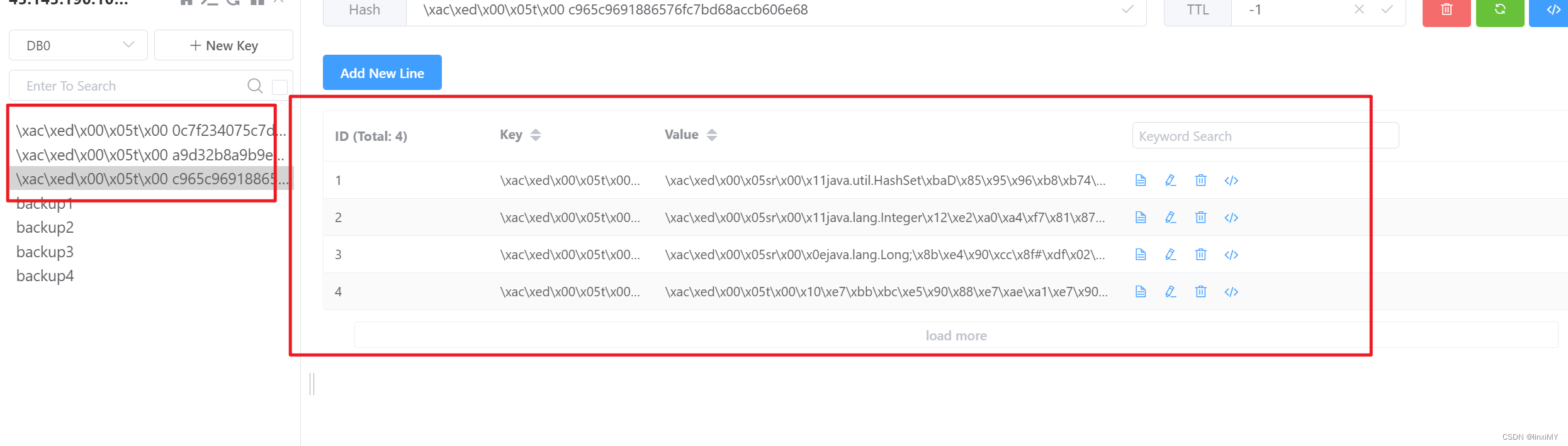

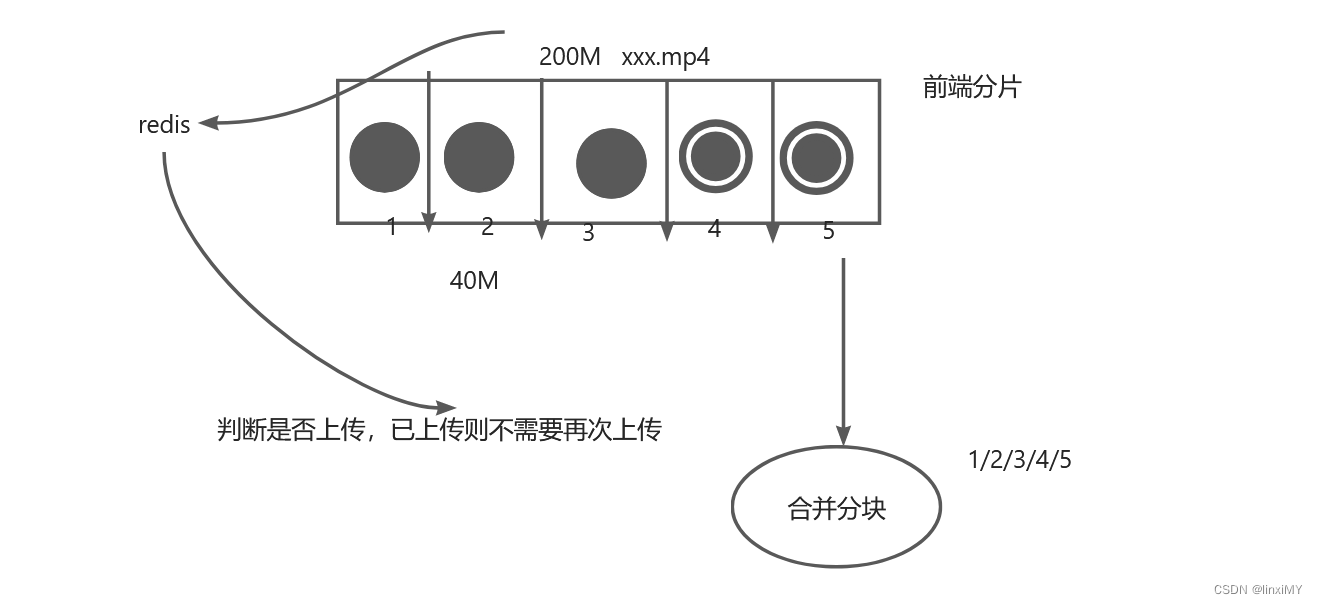

2: Diagrams to aid understanding:

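Instant upload: compute the file's MD5 hash to get a digest string, then look that digest up in Redis to see whether the file already exists. (MD5 here is a hash used as a fingerprint, not encryption.)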
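The instant-upload check described above can be sketched in plain Java. This is only an illustration under stated assumptions: the class `InstantUploadSketch`, the `knownDigests` set (a hypothetical in-memory stand-in for the Redis lookup), and the `pieceSize` parameter are not part of the article's code. It also shows that feeding the data to MD5 piece by piece yields the same digest as hashing it in one go, which is what makes chunk-wise hashing of large files possible.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashSet;
import java.util.Set;

/** Sketch of the instant-upload check: hash the content, then look the digest up. */
class InstantUploadSketch {

    /** Hypothetical stand-in for the Redis key space; the article's code queries Redis instead. */
    static final Set<String> knownDigests = new HashSet<>();

    /** Feeds the data to MD5 in pieces of pieceSize bytes (must be positive) and returns the hex digest. */
    static String md5Hex(byte[] data, int pieceSize) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            for (int start = 0; start < data.length; start += pieceSize) {
                int len = Math.min(pieceSize, data.length - start);
                md5.update(data, start, len);
            }
            StringBuilder hex = new StringBuilder();
            for (byte b : md5.digest()) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 is one of the algorithms every Java platform must provide.
            throw new IllegalStateException(e);
        }
    }

    /** True when a file with this digest was stored before, so the upload can be skipped. */
    static boolean canSkipUpload(String digest) {
        return knownDigests.contains(digest);
    }

    public static void main(String[] args) {
        byte[] content = "hello".getBytes(StandardCharsets.UTF_8);
        String digest = md5Hex(content, 2);
        System.out.println(digest + " skip=" + canSkipUpload(digest));
        knownDigests.add(digest); // pretend the file has now been uploaded
        System.out.println(digest + " skip=" + canSkipUpload(digest));
    }
}
```

The first lookup misses and the second one hits, which mirrors the difference between a normal upload and an instant one.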

3: Server-side Java code

3.1 UploaderController

package com.xialj.demoend.controller;

import com.xialj.demoend.common.RestApiResponse;

import com.xialj.demoend.common.Result;

import com.xialj.demoend.dto.FileChunkDTO;

import com.xialj.demoend.dto.FileChunkResultDTO;

import com.xialj.demoend.service.IUploadService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.*;

import javax.annotation.Resource;

/**

* @ProjectName UploaderController

* @author Administrator

* @version 1.0.0

* @Description Chunked attachment upload

* @createTime 2022/4/13 0013 15:58

*/

@RestController

@RequestMapping("upload")

public class UploaderController {

@Resource

private IUploadService uploadService;

/**

* Check whether a chunk already exists

*

* @return

*/

@GetMapping("chunk")

public Result checkChunkExist(FileChunkDTO chunkDTO) {

FileChunkResultDTO fileChunkCheckDTO;

try {

fileChunkCheckDTO = uploadService.checkChunkExist(chunkDTO);

return Result.ok(fileChunkCheckDTO);

} catch (Exception e) {

return Result.fail(e.getMessage());

}

}

/**

* Upload a file chunk

*

* @param chunkDTO

* @return

*/

@PostMapping("chunk")

public Result uploadChunk(FileChunkDTO chunkDTO) {

try {

uploadService.uploadChunk(chunkDTO);

return Result.ok(chunkDTO.getIdentifier());

} catch (Exception e) {

return Result.fail(e.getMessage());

}

}

/**

* Request that the file's chunks be merged

*

* @param chunkDTO

* @return

*/

@PostMapping("merge")

public Result mergeChunks(@RequestBody FileChunkDTO chunkDTO) {

try {

boolean success = uploadService.mergeChunk(chunkDTO.getIdentifier(), chunkDTO.getFilename(), chunkDTO.getTotalChunks());

return Result.ok(success);

} catch (Exception e) {

return Result.fail(e.getMessage());

}

}

}

3.2 IUploadService interface

package com.xialj.demoend.service;

import com.xialj.demoend.dto.FileChunkDTO;

import com.xialj.demoend.dto.FileChunkResultDTO;

import java.io.IOException;

/**

* @ProjectName IUploadService

* @author Administrator

* @version 1.0.0

* @Description Chunked attachment upload

* @createTime 2022/4/13 0013 15:59

*/

public interface IUploadService {

/**

* Check whether the file already exists. If it does, the upload is skipped; if not, return the set of chunks that have already been uploaded.

* @param chunkDTO

* @return

*/

FileChunkResultDTO checkChunkExist(FileChunkDTO chunkDTO);

/**

* Upload a file chunk

* @param chunkDTO

*/

void uploadChunk(FileChunkDTO chunkDTO) throws IOException;

/**

* Merge the file's chunks

* @param identifier

* @param fileName

* @param totalChunks

* @return

* @throws IOException

*/

boolean mergeChunk(String identifier,String fileName,Integer totalChunks)throws IOException;

}
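To make the contract of `checkChunkExist` concrete, here is a minimal sketch of how a caller could turn its result into the list of chunks that still need to be sent. The class `ResumePlanSketch` and the method `chunksToSend` are hypothetical names used only for illustration; the sketch assumes chunk numbers start at 1, as the frontend later in this article implies by comparing against `chunk.offset + 1`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Sketch: derive the chunks still to be uploaded from a chunk-check result. */
class ResumePlanSketch {

    /**
     * @param skipUpload  true when the server already holds the complete file
     * @param uploaded    chunk numbers the server already has (may be null)
     * @param totalChunks total number of chunks, numbered 1..totalChunks
     * @return the chunk numbers that still have to be sent, in ascending order
     */
    static List<Integer> chunksToSend(boolean skipUpload, Set<Integer> uploaded, int totalChunks) {
        if (skipUpload) {
            return Collections.emptyList(); // instant upload: nothing to send
        }
        List<Integer> pending = new ArrayList<>();
        for (int n = 1; n <= totalChunks; n++) {
            if (uploaded == null || !uploaded.contains(n)) {
                pending.add(n);
            }
        }
        return pending;
    }

    public static void main(String[] args) {
        Set<Integer> uploaded = new HashSet<>(Arrays.asList(1, 3));
        System.out.println(chunksToSend(false, uploaded, 4));
        System.out.println(chunksToSend(true, uploaded, 4));
    }
}
```

A resumed upload therefore only transfers the gaps, and a skipped upload transfers nothing.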

3.3 UploadServiceImpl

package com.xialj.demoend.service.impl;

import com.xialj.demoend.dto.FileChunkDTO;

import com.xialj.demoend.dto.FileChunkResultDTO;

import com.xialj.demoend.service.IUploadService;

import org.apache.tomcat.util.http.fileupload.IOUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Service;

import java.io.*;

import java.util.*;

/**

* @ProjectName UploadServiceImpl

* @author Administrator

* @version 1.0.0

* @Description Chunked attachment upload

* @createTime 2022/4/13 0013 15:59

*/

@Service

@SuppressWarnings("all")

public class UploadServiceImpl implements IUploadService {

private Logger logger = LoggerFactory.getLogger(UploadServiceImpl.class);

@Autowired

private RedisTemplate<String, Object> redisTemplate;

@Value("${uploadFolder}")

private String uploadFolder;

/**

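* Check whether the file already exists on the server.

*

* The check has three parts:

* - whether the merged file exists on disk;

* - whether Redis holds a record of uploaded chunks for this identifier;

* - whether the number of recorded chunks equals the total number of chunks.

*

* If the file exists and every chunk has been uploaded, the upload is complete and can be skipped (instant upload).

* If the file is missing or some chunks have not been uploaded yet, skipUpload is false and the set of already-uploaded chunks is returned.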

* @param chunkDTO

* @return

*/

@Override

public FileChunkResultDTO checkChunkExist(FileChunkDTO chunkDTO) {

// 1. Check whether the file has been uploaded before

// 1.1) Check whether it exists on disk

String fileFolderPath = getFileFolderPath(chunkDTO.getIdentifier());

logger.info("fileFolderPath-->{}", fileFolderPath);

String filePath = getFilePath(chunkDTO.getIdentifier(), chunkDTO.getFilename());

File file = new File(filePath);

boolean exists = file.exists();

// 1.2) Check whether Redis has a record and all chunks have been uploaded

Set<Integer> uploaded = (Set<Integer>) redisTemplate.opsForHash().get(chunkDTO.getIdentifier(), "uploaded");

if (uploaded != null && uploaded.size() == chunkDTO.getTotalChunks() && exists) {

return new FileChunkResultDTO(true);

}

File fileFolder = new File(fileFolderPath);

if (!fileFolder.exists()) {

boolean mkdirs = fileFolder.mkdirs();

logger.info("准备工作,创建文件夹,fileFolderPath:{},mkdirs:{}", fileFolderPath, mkdirs);

}

// Resumable upload: return the chunks uploaded so far

return new FileChunkResultDTO(false, uploaded);

}

/**

* Upload one chunk of an attachment.

*

* - Create the chunk directory if it does not exist.

* - Copy the chunk into that directory.

* - Record the chunk in Redis.

* @param chunkDTO

*/

@Override

public void uploadChunk(FileChunkDTO chunkDTO) {

// Directory that holds the chunks

String chunkFileFolderPath = getChunkFileFolderPath(chunkDTO.getIdentifier());

logger.info("分块的目录 -> {}", chunkFileFolderPath);

File chunkFileFolder = new File(chunkFileFolderPath);

if (!chunkFileFolder.exists()) {

boolean mkdirs = chunkFileFolder.mkdirs();

logger.info("创建分片文件夹:{}", mkdirs);

}

// Write the chunk to disk

try (

InputStream inputStream = chunkDTO.getFile().getInputStream();

FileOutputStream outputStream = new FileOutputStream(new File(chunkFileFolderPath + chunkDTO.getChunkNumber()))

) {

IOUtils.copy(inputStream, outputStream);

logger.info("文件标识:{},chunkNumber:{}", chunkDTO.getIdentifier(), chunkDTO.getChunkNumber());

// Record the chunk in Redis

long size = saveToRedis(chunkDTO);

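} catch (Exception e) {

// Log and rethrow so the controller reports the failure instead of returning OK

logger.error("Failed to store chunk {} of {}", chunkDTO.getChunkNumber(), chunkDTO.getIdentifier(), e);

throw new IllegalStateException("Failed to store chunk " + chunkDTO.getChunkNumber(), e);

}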

}

@Override

public boolean mergeChunk(String identifier, String fileName, Integer totalChunks) throws IOException {

return mergeChunks(identifier, fileName, totalChunks);

}

/**

* Merge the chunks

*

* @param identifier

* @param filename

*/

private boolean mergeChunks(String identifier, String filename, Integer totalChunks) {

String chunkFileFolderPath = getChunkFileFolderPath(identifier);

String filePath = getFilePath(identifier, filename);

// Check that every chunk exists

if (checkChunks(chunkFileFolderPath, totalChunks)) {

File chunkFileFolder = new File(chunkFileFolderPath);

File mergeFile = new File(filePath);

File[] chunks = chunkFileFolder.listFiles();

// Sort the chunks numerically by file name (1, 2, 3, ...)

Arrays.sort(chunks, Comparator.comparingInt((File f) -> Integer.parseInt(f.getName())));

// try-with-resources closes both files even if a read or write fails

try (RandomAccessFile randomAccessFileWriter = new RandomAccessFile(mergeFile, "rw")) {

// Discard stale content left over from an earlier merge attempt

randomAccessFileWriter.setLength(0);

byte[] bytes = new byte[1024];

for (File chunk : chunks) {

try (RandomAccessFile randomAccessFileReader = new RandomAccessFile(chunk, "r")) {

int len;

while ((len = randomAccessFileReader.read(bytes)) != -1) {

randomAccessFileWriter.write(bytes, 0, len);

}

}

}

} catch (Exception e) {

logger.error("Failed to merge chunks into {}", filePath, e);

return false;

}

return true;

}

return false;

}

/**

* Check that every chunk exists

* @param chunkFileFolderPath

* @param totalChunks

* @return

*/

private boolean checkChunks(String chunkFileFolderPath, Integer totalChunks) {

try {

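// The frontend in this article sends the zero-based offset of a chunk (chunk.offset) as totalChunks, hence the + 1

for (int i = 1; i <= totalChunks + 1; i++) {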

File file = new File(chunkFileFolderPath + File.separator + i);

if (file.exists()) {

continue;

} else {

return false;

}

}

} catch (Exception e) {

return false;

}

return true;

}

/**

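* Record the chunk in Redis.

* If no record exists yet for this file, create the base record and save it; otherwise add the chunk to the existing record.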

* @param chunkDTO

*/

private synchronized long saveToRedis(FileChunkDTO chunkDTO) {

Set<Integer> uploaded = (Set<Integer>) redisTemplate.opsForHash().get(chunkDTO.getIdentifier(), "uploaded");

if (uploaded == null) {

uploaded = new HashSet<>(Arrays.asList(chunkDTO.getChunkNumber()));

HashMap<String, Object> objectObjectHashMap = new HashMap<>();

objectObjectHashMap.put("uploaded", uploaded);

objectObjectHashMap.put("totalChunks", chunkDTO.getTotalChunks());

objectObjectHashMap.put("totalSize", chunkDTO.getTotalSize());

// objectObjectHashMap.put("path", getFileRelativelyPath(chunkDTO.getIdentifier(), chunkDTO.getFilename()));

objectObjectHashMap.put("path", chunkDTO.getFilename());

redisTemplate.opsForHash().putAll(chunkDTO.getIdentifier(), objectObjectHashMap);

} else {

uploaded.add(chunkDTO.getChunkNumber());

redisTemplate.opsForHash().put(chunkDTO.getIdentifier(), "uploaded", uploaded);

}

return uploaded.size();

}

/**

* Get the absolute path of the file

*

* @param identifier

* @param filename

* @return

*/

private String getFilePath(String identifier, String filename) {

// The merged file is stored directly under uploadFolder with its original name.

// (The unused extension lookup was removed; it threw for file names without a ".".)

return uploadFolder + filename;

}

/**

* Get the relative path of the file

*

* @param identifier

* @param filename

* @return

*/

private String getFileRelativelyPath(String identifier, String filename) {

String ext = filename.substring(filename.lastIndexOf("."));

return "/" + identifier.substring(0, 1) + "/" +

identifier.substring(1, 2) + "/" +

identifier + "/" + identifier

+ ext;

}

/**

* Get the directory that holds the file's chunks

*

* @param identifier

* @return

*/

private String getChunkFileFolderPath(String identifier) {

return getFileFolderPath(identifier) + "chunks" + File.separator;

}

/**

* Get the directory that holds the file

*

* @param identifier

* @return

*/

private String getFileFolderPath(String identifier) {

return uploadFolder + identifier.substring(0, 1) + File.separator +

identifier.substring(1, 2) + File.separator +

identifier + File.separator;

// return uploadFolder;

}

}
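The merge step relies on concatenating the chunk files in numeric order, because a plain string sort would put "10" before "2". The following is a minimal sketch of the same idea using `java.nio.file` instead of `RandomAccessFile`; it is a swapped-in alternative, not the article's implementation, and the class `ChunkMergeSketch` and its `merge` method are hypothetical names. It assumes the chunk directory contains only files whose names are chunk numbers and that the target lies outside that directory.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Sketch: concatenate numerically named chunk files into one target file. */
class ChunkMergeSketch {

    static void merge(Path chunkDir, Path target) {
        try {
            List<Path> chunks;
            // Files.list must be closed, so collect the sorted paths inside try-with-resources
            try (Stream<Path> files = Files.list(chunkDir)) {
                chunks = files
                        .sorted(Comparator.comparingInt(
                                (Path p) -> Integer.parseInt(p.getFileName().toString())))
                        .collect(Collectors.toList());
            }
            // By default newOutputStream creates the file or truncates an existing one
            try (OutputStream out = Files.newOutputStream(target)) {
                for (Path chunk : chunks) {
                    Files.copy(chunk, out); // appends the chunk's bytes to the stream
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("chunks");
        Files.write(dir.resolve("10"), "c".getBytes());
        Files.write(dir.resolve("2"), "b".getBytes());
        Files.write(dir.resolve("1"), "a".getBytes());
        Path target = Files.createTempFile("merged", ".bin");
        merge(dir, target);
        System.out.println(new String(Files.readAllBytes(target)));
    }
}
```

Truncating the target on open keeps a retried merge from leaving bytes of a previous, longer attempt at the end of the file.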

3.4 FileChunkDTO

package com.xialj.demoend.dto;

import org.springframework.web.multipart.MultipartFile;

/**

* @ProjectName FileChunkDTO

* @author Administrator

* @version 1.0.0

* @Description Chunked attachment upload

* @createTime 2022/4/13 0013 15:59

*/

public class FileChunkDTO {

/**

* MD5 of the file

*/

private String identifier;

/**

* The chunk's file content

*/

private MultipartFile file;

/**

* Number of the current chunk

*/

private Integer chunkNumber;

/**

* Configured chunk size

*/

private Long chunkSize;

/**

* Size of the current chunk

*/

private Long currentChunkSize;

/**

* Total file size

*/

private Long totalSize;

/**

* Total number of chunks

*/

private Integer totalChunks;

/**

* File name

*/

private String filename;

public String getIdentifier() {

return identifier;

}

public void setIdentifier(String identifier) {

this.identifier = identifier;

}

public MultipartFile getFile() {

return file;

}

public void setFile(MultipartFile file) {

this.file = file;

}

public Integer getChunkNumber() {

return chunkNumber;

}

public void setChunkNumber(Integer chunkNumber) {

this.chunkNumber = chunkNumber;

}

public Long getChunkSize() {

return chunkSize;

}

public void setChunkSize(Long chunkSize) {

this.chunkSize = chunkSize;

}

public Long getCurrentChunkSize() {

return currentChunkSize;

}

public void setCurrentChunkSize(Long currentChunkSize) {

this.currentChunkSize = currentChunkSize;

}

public Long getTotalSize() {

return totalSize;

}

public void setTotalSize(Long totalSize) {

this.totalSize = totalSize;

}

public Integer getTotalChunks() {

return totalChunks;

}

public void setTotalChunks(Integer totalChunks) {

this.totalChunks = totalChunks;

}

public String getFilename() {

return filename;

}

public void setFilename(String filename) {

this.filename = filename;

}

@Override

public String toString() {

return "FileChunkDTO{" +

"identifier='" + identifier + '\'' +

", file=" + file +

", chunkNumber=" + chunkNumber +

", chunkSize=" + chunkSize +

", currentChunkSize=" + currentChunkSize +

", totalSize=" + totalSize +

", totalChunks=" + totalChunks +

", filename='" + filename + '\'' +

'}';

}

}

3.5 FileChunkResultDTO

package com.xialj.demoend.dto;

import java.util.Set;

/**

* @ProjectName FileChunkResultDTO

* @author Administrator

* @version 1.0.0

* @Description Chunked attachment upload

* @createTime 2022/4/13 0013 15:59

*/

public class FileChunkResultDTO {

/**

* Whether the upload can be skipped

*/

private Boolean skipUpload;

/**

* Set of chunks already uploaded

*/

private Set<Integer> uploaded;

public Boolean getSkipUpload() {

return skipUpload;

}

public void setSkipUpload(Boolean skipUpload) {

this.skipUpload = skipUpload;

}

public Set<Integer> getUploaded() {

return uploaded;

}

public void setUploaded(Set<Integer> uploaded) {

this.uploaded = uploaded;

}

public FileChunkResultDTO(Boolean skipUpload, Set<Integer> uploaded) {

this.skipUpload = skipUpload;

this.uploaded = uploaded;

}

public FileChunkResultDTO(Boolean skipUpload) {

this.skipUpload = skipUpload;

}

}

3.6 Result

package com.xialj.demoend.common;

import io.swagger.annotations.ApiModel;

import io.swagger.annotations.ApiModelProperty;

import lombok.Data;

/**

* @Author

* @Date Created in 2023/2/23 17:25

* @DESCRIPTION: Global unified response wrapper

* @Version V1.0

*/

@Data

@ApiModel(value = "全局统一返回结果")

@SuppressWarnings("all")

public class Result<T> {

@ApiModelProperty(value = "返回码")

private Integer code;

@ApiModelProperty(value = "返回消息")

private String message;

@ApiModelProperty(value = "返回数据")

private T data;

private Long total;

public Result(){}

protected static <T> Result<T> build(T data) {

Result<T> result = new Result<T>();

if (data != null)

result.setData(data);

return result;

}

public static <T> Result<T> build(T body, ResultCodeEnum resultCodeEnum) {

Result<T> result = build(body);

result.setCode(resultCodeEnum.getCode());

result.setMessage(resultCodeEnum.getMessage());

return result;

}

public static <T> Result<T> build(Integer code, String message) {

Result<T> result = build(null);

result.setCode(code);

result.setMessage(message);

return result;

}

public static<T> Result<T> ok(){

return Result.ok(null);

}

/**

* Operation succeeded

* @param data

* @param <T>

* @return

*/

public static<T> Result<T> ok(T data){

return build(data, ResultCodeEnum.SUCCESS);

}

public static<T> Result<T> fail(){

return Result.fail(null);

}

/**

* Operation failed

* @param data

* @param <T>

* @return

*/

public static<T> Result<T> fail(T data){

return build(data, ResultCodeEnum.FAIL);

}

public Result<T> message(String msg){

this.setMessage(msg);

return this;

}

public Result<T> code(Integer code){

this.setCode(code);

return this;

}

public boolean isOk() {

if(this.getCode().intValue() == ResultCodeEnum.SUCCESS.getCode().intValue()) {

return true;

}

return false;

}

}

3.7 ResultCodeEnum

package com.xialj.demoend.common;

import lombok.Getter;

/**

* @Author

* @Date Created in 2023/2/23 17:25

* @DESCRIPTION: Status codes and messages for the unified response

* @Version V1.0

*/

@Getter

@SuppressWarnings("all")

public enum ResultCodeEnum {

SUCCESS(200,"成功"),

FAIL(201, "失败"),

PARAM_ERROR( 202, "参数不正确"),

SERVICE_ERROR(203, "服务异常"),

DATA_ERROR(204, "数据异常"),

DATA_UPDATE_ERROR(205, "数据版本异常"),

LOGIN_AUTH(208, "未登陆"),

PERMISSION(209, "没有权限"),

CODE_ERROR(210, "验证码错误"),

// LOGIN_MOBLE_ERROR(211, "账号不正确"),

LOGIN_DISABLED_ERROR(212, "该用户已被禁用"),

REGISTER_MOBLE_ERROR(213, "手机号码格式不正确"),

REGISTER_MOBLE_ERROR_NULL(214, "手机号码为空"),

LOGIN_AURH(214, "需要登录"),

LOGIN_ACL(215, "没有权限"),

URL_ENCODE_ERROR( 216, "URL编码失败"),

ILLEGAL_CALLBACK_REQUEST_ERROR( 217, "非法回调请求"),

FETCH_ACCESSTOKEN_FAILD( 218, "获取accessToken失败"),

FETCH_USERINFO_ERROR( 219, "获取用户信息失败"),

//LOGIN_ERROR( 23005, "登录失败"),

PAY_RUN(220, "支付中"),

CANCEL_ORDER_FAIL(225, "取消订单失败"),

CANCEL_ORDER_NO(225, "不能取消预约"),

HOSCODE_EXIST(230, "医院编号已经存在"),

NUMBER_NO(240, "可预约号不足"),

TIME_NO(250, "当前时间不可以预约"),

SIGN_ERROR(300, "签名错误"),

HOSPITAL_OPEN(310, "医院未开通,暂时不能访问"),

HOSPITAL_LOCK(320, "医院被锁定,暂时不能访问"),

HOSPITAL_LOCKKEY(330,"医院对应key不一致")

;

private Integer code;

private String message;

private ResultCodeEnum(Integer code, String message) {

this.code = code;

this.message = message;

}

}

4: Building the Vue 2 frontend

4.1 Install the vue-simple-uploader and spark-md5 dependencies

npm install --save vue-simple-uploader

npm install --save spark-md5

4.2 Import the uploader in main.js

import uploader from 'vue-simple-uploader'

Vue.use(uploader)

4.3 Create the uploader component

<template>

<div>

<uploader

:autoStart="false"

:options="options"

:file-status-text="statusText"

class="uploader-example"

@file-complete="fileComplete"

@complete="complete"

@file-success="fileSuccess"

@files-added="filesAdded"

>

<uploader-unsupport></uploader-unsupport>

<uploader-drop>

<p>将文件拖放到此处以上传</p>

<uploader-btn>选择文件</uploader-btn>

<uploader-btn :attrs="attrs">选择图片</uploader-btn>

<uploader-btn :directory="true">选择文件夹</uploader-btn>

</uploader-drop>

<!-- <uploader-list></uploader-list> -->

<uploader-files> </uploader-files>

</uploader>

<br />

<el-button @click="allStart()" :disabled="disabled">全部开始</el-button>

<el-button @click="allStop()" style="margin-left: 4px">全部暂停</el-button>

<el-button @click="allRemove()" style="margin-left: 4px">全部移除</el-button>

</div>

</template>

<script>

import axios from "axios";

import SparkMD5 from "spark-md5";

import {upload} from "@/api/user";

// import storage from "store";

// import { ACCESS_TOKEN } from '@/store/mutation-types'

export default {

name: "Home",

data() {

return {

skip: false,

options: {

target: "//localhost:9999/upload/chunk",

// Enable server-side chunk checking

testChunks: true,

parseTimeRemaining: function (timeRemaining, parsedTimeRemaining) {

return parsedTimeRemaining

.replace(/\syears?/, "年")

.replace(/\sdays?/, "天")

.replace(/\shours?/, "小时")

.replace(/\sminutes?/, "分钟")

.replace(/\sseconds?/, "秒");

},

// Decide from the server's response whether a chunk has already been uploaded

checkChunkUploadedByResponse: (chunk, message) => {

const result = JSON.parse(message);

if (result.data.skipUpload) {

this.skip = true;

return true;

}

return (result.data.uploaded || []).indexOf(chunk.offset + 1) >= 0;

},

// headers: {

// // Authentication header; adjust it to your actual requirements

// "Access-Token": storage.get(ACCESS_TOKEN),

// },

},

attrs: {

accept: "image/*",

},

statusText: {

success: "上传成功",

error: "上传出错了",

uploading: "上传中...",

paused: "暂停中...",

waiting: "等待中...",

cmd5: "计算文件MD5中...",

},

fileList: [],

disabled: true,

};

},

watch: {

fileList(o, n) {

this.disabled = false;

},

},

methods: {

// fileSuccess(rootFile, file, response, chunk) {

// // console.log(rootFile);

// // console.log(file);

// // console.log(message);

// // console.log(chunk);

// const result = JSON.parse(response);

// console.log(result.success, this.skip);

//

// if (result.success && !this.skip) {

// axios

// .post(

// "http://127.0.0.1:9999/upload/merge",

// {

// identifier: file.uniqueIdentifier,

// filename: file.name,

// totalChunks: chunk.offset,

// },

// // {

// // headers: { "Access-Token": storage.get(ACCESS_TOKEN) }

// // }

// )

// .then((res) => {

// if (res.data.success) {

// console.log("上传成功");

// } else {

// console.log(res);

// }

// })

// .catch(function (error) {

// console.log(error);

// });

// } else {

// console.log("上传成功,不需要合并");

// }

// if (this.skip) {

// this.skip = false;

// }

// },

fileSuccess(rootFile, file, response, chunk) {

// console.log(rootFile);

// console.log(file);

// console.log(message);

// console.log(chunk);

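const result = JSON.parse(response);

// The server's Result has no "success" field; code 200 (ResultCodeEnum.SUCCESS) signals success

const succeeded = result.code === 200;

console.log(succeeded, this.skip);

const user = {

identifier: file.uniqueIdentifier,

filename: file.name,

totalChunks: chunk.offset,

}

// Ask the server to merge the chunks unless the file was skipped (instant upload)

if (succeeded && !this.skip) {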

upload(user).then((res) => {

if (res.code == 200) {

console.log("上传成功");

} else {

console.log(res);

}

})

.catch(function (error) {

console.log(error);

});

} else {

console.log("上传成功,不需要合并");

}

if (this.skip) {

this.skip = false;

}

},

fileComplete(rootFile) {

// A root file (or folder) finished uploading successfully.

// console.log("fileComplete", rootFile);

// console.log("一个根文件(文件夹)成功上传完成。");

},

complete() {

// All uploads are done.

// console.log("complete");

},

filesAdded(file, fileList, event) {

// console.log(file);

file.forEach((e) => {

this.fileList.push(e);

this.computeMD5(e);

});

},

computeMD5(file) {

let fileReader = new FileReader();

let time = new Date().getTime();

let blobSlice =

File.prototype.slice ||

File.prototype.mozSlice ||

File.prototype.webkitSlice;

let currentChunk = 0;

const chunkSize = 1024 * 1024;

let chunks = Math.ceil(file.size / chunkSize);

let spark = new SparkMD5.ArrayBuffer();

// Mark the file as "computing MD5" and hold the upload until the hash is ready

file.cmd5 = true;

file.pause();

loadNext();

fileReader.onload = (e) => {

spark.append(e.target.result);

currentChunk++;

// Read the next slice only while unread slices remain

if (currentChunk < chunks) {

loadNext();

// Report MD5 progress as it is computed

console.log(

`第${currentChunk}分片解析完成, 开始第${

currentChunk + 1

} / ${chunks}分片解析`

);

} else {

let md5 = spark.end();

console.log(

`MD5计算完毕:${file.name} \nMD5:${md5} \n分片:${chunks} 大小:${

file.size

} 用时:${new Date().getTime() - time} ms`

);

spark.destroy(); // release the buffer

file.uniqueIdentifier = md5; // use the file's MD5 as its unique identifier

file.cmd5 = false; // clear the "computing MD5" state

file.resume(); // start uploading

}

};

fileReader.onerror = function () {

console.error(`文件${file.name}读取出错,请检查该文件`);

file.cancel();

};

function loadNext() {

let start = currentChunk * chunkSize;

let end =

start + chunkSize >= file.size ? file.size : start + chunkSize;

fileReader.readAsArrayBuffer(blobSlice.call(file.file, start, end));

}

},

allStart() {

console.log(this.fileList);

this.fileList.map((e) => {

if (e.paused) {

e.resume();

}

});

},

allStop() {

console.log(this.fileList);

this.fileList.map((e) => {

if (!e.paused) {

e.pause();

}

});

},

allRemove() {

this.fileList.map((e) => {

e.cancel();

});

this.fileList = [];

},

},

};

</script>

<style>

.uploader-example {

width: 100%;

padding: 15px;

margin: 0px auto 0;

font-size: 12px;

box-shadow: 0 0 10px rgba(0, 0, 0, 0.4);

}

.uploader-example .uploader-btn {

margin-right: 4px;

}

.uploader-example .uploader-list {

max-height: 440px;

overflow: auto;

overflow-x: hidden;

overflow-y: auto;

}

</style>

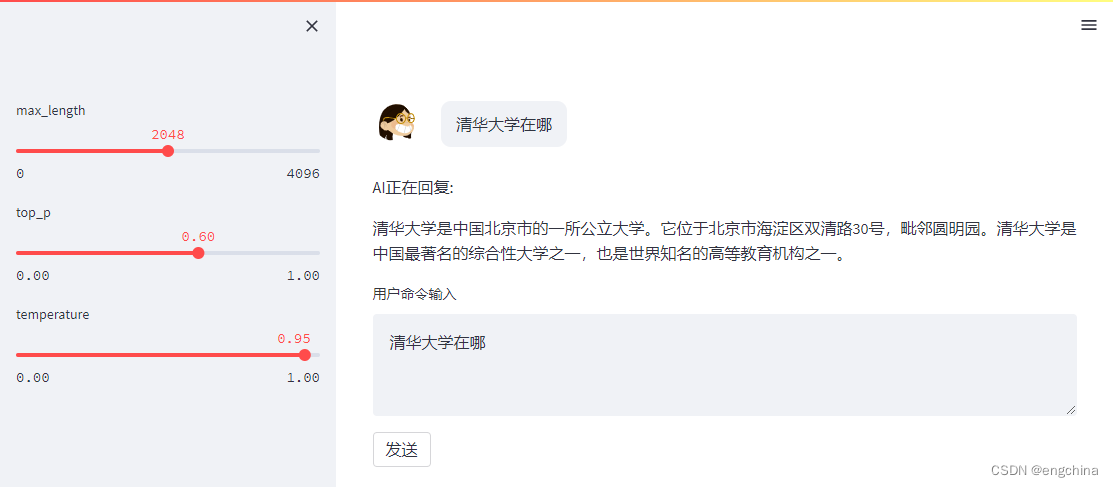

Result:

File entries in Redis:
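For readers without a Redis instance at hand, the shape of the record that `saveToRedis` writes can be modeled in memory. The sketch below is only an approximation under stated assumptions: `UploadRecordSketch`, `store`, and `recordChunk` are hypothetical names, and a nested `Map` stands in for the Redis hash stored under the file's MD5. The field names `uploaded`, `totalChunks`, `totalSize`, and `path` are the ones used by the service code in section 3.3.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Sketch: in-memory model of the per-file upload record kept in Redis. */
class UploadRecordSketch {

    /** Hypothetical stand-in for Redis: key = file MD5, value = the hash's fields. */
    static final Map<String, Map<String, Object>> store = new HashMap<>();

    /** Records one uploaded chunk and returns how many distinct chunks are known so far. */
    @SuppressWarnings("unchecked")
    static synchronized int recordChunk(String identifier, int chunkNumber,
                                        int totalChunks, long totalSize, String filename) {
        // Create the base record on the first chunk of a file
        Map<String, Object> hash = store.computeIfAbsent(identifier, id -> {
            Map<String, Object> fields = new HashMap<>();
            fields.put("uploaded", new TreeSet<Integer>());
            fields.put("totalChunks", totalChunks);
            fields.put("totalSize", totalSize);
            fields.put("path", filename);
            return fields;
        });
        Set<Integer> uploaded = (Set<Integer>) hash.get("uploaded");
        uploaded.add(chunkNumber); // a repeated chunk number does not grow the set
        return uploaded.size();
    }

    public static void main(String[] args) {
        recordChunk("5d41402abc4b2a76b9719d911017c592", 1, 3, 2500000L, "demo.zip");
        recordChunk("5d41402abc4b2a76b9719d911017c592", 2, 3, 2500000L, "demo.zip");
        System.out.println(store);
    }
}
```

Because the uploaded chunk numbers form a set, retrying a chunk is harmless, and comparing the set's size with the total chunk count is enough to decide whether the upload is complete.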