Software versions:

Android Studio Electric Eel 2022.1.1 Patch 2

https://sourceforge.net/projects/opencvlibrary/files/4.5.0/opencv-4.5.0-android-sdk.zip/download

Create the project and import the OpenCV SDK:

Import the OpenCV SDK:

File->New->Import Module

Add the project dependency: File->Project Structure, where sdk is the imported OpenCV SDK module.

Import the trained face and eye detection models (MainActivity reads them as raw resources through R.raw):

haarcascade_eye_tree_eyeglasses.xml

lbpcascade_frontalface_improved.xml
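MainActivity opens these two files with `openRawResource` and copies each one to a private file before handing the path to `CascadeClassifier`, because the classifier is constructed from a file path. The copy step can be sketched in plain Java as follows. This is only a sketch: `CascadeCopy` and `copyToFile` are hypothetical names, not part of the Android or OpenCV APIs, and it assumes `java.nio.file` is available (on Android that means API 26 or later). It uses try-with-resources so both streams are closed even if a read or write fails.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical helper; not part of the OpenCV or Android APIs. */
class CascadeCopy {
    /** Copies every byte from in to target and returns the number of bytes written. */
    static long copyToFile(InputStream in, Path target) throws IOException {
        long total = 0;
        byte[] buffer = new byte[4096];
        // try-with-resources closes both streams, even when read or write throws
        try (InputStream source = in; OutputStream out = Files.newOutputStream(target)) {
            int bytesRead;
            while ((bytesRead = source.read(buffer)) != -1) {
                out.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in bytes; in the app the stream would come from openRawResource(...)
        byte[] fakeModel = "<cascade/>".getBytes(StandardCharsets.UTF_8);
        Path target = Files.createTempFile("cascade", ".xml");
        long written = copyToFile(new ByteArrayInputStream(fakeModel), target);
        System.out.println(written + " bytes copied to " + target);
        Files.delete(target);
    }
}
```

In the activity, the returned path would then be passed to `new CascadeClassifier(path)`, as the listing does with `cascadeFile.getAbsolutePath()`.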

activity_main.xml

<?xml version="1.0" encoding="utf-8"?>

<org.opencv.android.JavaCameraView

android:id="@+id/camera_view"

android:layout_height="match_parent"

android:layout_width="match_parent"

android:layout_weight="1"/>

<Button

android:layout_width="37dp"

android:layout_height="37dp"

android:layout_margin="10dp"

android:layout_gravity="center_vertical"

android:background="@mipmap/ic_launcher"

android:id="@+id/switchCamera"/>

AndroidManifest.xml

<?xml version="1.0" encoding="utf-8"?>

<application

android:allowBackup="true"

android:dataExtractionRules="@xml/data_extraction_rules"

android:fullBackupContent="@xml/backup_rules"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:supportsRtl="true"

android:theme="@style/Theme.Facedetectwithcamera"

tools:targetApi="31">

<activity

android:name=".MainActivity"

android:exported="true">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

MainActivity

package com.michael.facedetectwithcamera;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

import androidx.core.content.ContextCompat;

import android.Manifest;

import android.app.Activity;

import android.content.Context;

import android.content.pm.ActivityInfo;

import android.content.pm.PackageManager;

import android.graphics.Bitmap;

import android.os.Bundle;

import android.provider.ContactsContract;

import android.util.Log;

import android.view.View;

import android.view.WindowManager;

import android.widget.Button;

import android.widget.Toast;

import org.opencv.android.BaseLoaderCallback;

import org.opencv.android.CameraActivity;

import org.opencv.android.CameraBridgeViewBase;

import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;

import org.opencv.android.JavaCameraView;

import org.opencv.android.LoaderCallbackInterface;

import org.opencv.android.OpenCVLoader;

import org.opencv.android.Utils;

import org.opencv.core.Core;

import org.opencv.core.CvType;

import org.opencv.core.Mat;

import org.opencv.core.MatOfRect;

import org.opencv.core.Point;

import org.opencv.core.Rect;

import org.opencv.core.Scalar;

import org.opencv.core.Size;

import org.opencv.imgproc.Imgproc;

import org.opencv.objdetect.CascadeClassifier;

import java.io.File;

import java.io.FileOutputStream;

import java.io.InputStream;

import java.util.ArrayList;

import java.util.List;

public class MainActivity extends CameraActivity implements View.OnClickListener, CvCameraViewListener2 {

//public class MainActivity extends CameraActivity implements View.OnClickListener {

private static final String TAG = "…OpencvCam…";

private JavaCameraView cameraView;

private Button switchButton;

private boolean isFrontCamera = true;

private Mat mRgba,leftEye_template,rightEye_template;

private CascadeClassifier classifier, classifierEye;

private static final Scalar EYE_RECT_COLOR = new Scalar(0,0,255);

private int mAbsoluteFaceSize = 0;

// private CameraBridgeViewBase.CvCameraViewListener2 cvCameraViewListener2 = new CameraBridgeViewBase.CvCameraViewListener2() {

// @Override

// public void onCameraViewStarted(int width, int height) {

// Log.i(TAG, "onCameraViewStarted width=" + width + ", height=" + height);

// }

//

// @Override

// public void onCameraViewStopped() {

// Log.i(TAG, "onCameraViewStopped");

// }

//

// @Override

// public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

// System.out.println("…onCameraFrame…");

// return inputFrame.rgba();

// }

// };

// private BaseLoaderCallback baseLoaderCallback = new BaseLoaderCallback(this) {

// @Override

// public void onManagerConnected(int status) {

// Log.i(TAG, "onManagerConnected status=" + status + ", javaCameraView=" + cameraView);

// switch (status) {

// case LoaderCallbackInterface.SUCCESS: {

// if (cameraView != null) {

// cameraView.setCvCameraViewListener(MainActivity.this);

// // Disable the FPS meter

// cameraView.disableFpsMeter();

// cameraView.enableView();

// }

// }

// break;

// default:

// super.onManagerConnected(status);

// break;

// }

// }

// };

// Override the parent class's getCameraViewList method to pass the JavaCameraView (cameraView) to the parent Activity; once the permission is granted, its setCameraPermissionGranted method is called automatically.

@Override

protected List<? extends CameraBridgeViewBase> getCameraViewList() {

Log.i(TAG, "getCameraViewList");

List<CameraBridgeViewBase> list = new ArrayList<>();

list.add(cameraView);

return list;

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

initWindowSettings();

setContentView(R.layout.activity_main);

initLoadOpenCV();

cameraView = (JavaCameraView) findViewById(R.id.camera_view);

cameraView.setCvCameraViewListener(this);

System.out.println(".........cameraView.setCvCameraViewListener..........");

switchButton = (Button) findViewById(R.id.switchCamera);

switchButton.setOnClickListener(this);

// if (ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA)!= PackageManager.PERMISSION_DENIED) {

// ActivityCompat.requestPermissions(this, new String[]{Manifest.permission.CAMERA},1);

// } else {

// cameraView.setCameraPermissionGranted();

// }

boolean havePermission = getPermissionCamera(this);

System.out.println("…getPermissionCamera…"+havePermission);

initClassifier();

initEyeDetector();

leftEye_template = new Mat();

rightEye_template = new Mat();

cameraView.setCameraIndex(CameraBridgeViewBase.CAMERA_ID_BACK);

isFrontCamera=false;

cameraView.enableView();

}

/**

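* Checks the camera and external storage permissions and requests them if any is missing.

* @param activity the activity used to check and request the permissions

* @return true if all permissions are already granted, false if a request was issued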

*/

public static boolean getPermissionCamera(Activity activity) {

int cameraPermissionCheck = ContextCompat.checkSelfPermission(activity, Manifest.permission.CAMERA);

int readPermissionCheck = ContextCompat.checkSelfPermission(activity, Manifest.permission.READ_EXTERNAL_STORAGE);

int writePermissionCheck = ContextCompat.checkSelfPermission(activity, Manifest.permission.WRITE_EXTERNAL_STORAGE);

if (cameraPermissionCheck != PackageManager.PERMISSION_GRANTED

|| readPermissionCheck != PackageManager.PERMISSION_GRANTED

|| writePermissionCheck != PackageManager.PERMISSION_GRANTED) {

String[] permissions = new String[]{Manifest.permission.CAMERA,Manifest.permission.READ_EXTERNAL_STORAGE,Manifest.permission.WRITE_EXTERNAL_STORAGE};

ActivityCompat.requestPermissions(

activity,

permissions,

0);

return false;

} else {

return true;

}

}

private void initWindowSettings() {

getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,WindowManager.LayoutParams.FLAG_FULLSCREEN);

getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE);

}

private void initLoadOpenCV() {

boolean success = OpenCVLoader.initDebug();

if(success) {

Toast.makeText(this, "OpenCV loaded", Toast.LENGTH_SHORT).show();

} else {

Toast.makeText(this, "Can't load opencv", Toast.LENGTH_SHORT).show();

}

}

@Override

public void onCameraViewStarted(int width, int height) {

System.out.println(".........onCameraViewStarted..........");

Toast.makeText(this, "onCameraViewStarted ", Toast.LENGTH_SHORT).show();

mRgba = new Mat();

}

@Override

public void onCameraViewStopped() {

mRgba.release();

}

@Override

public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

// System.out.println("…onCameraFrame…");

mRgba = inputFrame.rgba();

if(isFrontCamera) {

//Core.rotate(mRgba,mRgba,Core.ROTATE_180);

Core.flip(mRgba,mRgba,1); // Front camera fix: flipCode 1 mirrors the frame horizontally (use 0 for a vertical flip)

}

return myfacedetect1(mRgba);

}

// Initialize the face cascade classifier

private void initClassifier() {

try {

InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface_improved);

File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);

File cascadeFile = new File(cascadeDir, "lbpcascade_frontalface_improved.xml");

FileOutputStream os = new FileOutputStream(cascadeFile);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

os.write(buffer, 0, bytesRead);

}

is.close();

os.close();

classifier = new CascadeClassifier(cascadeFile.getAbsolutePath());

cascadeFile.delete();

cascadeDir.delete();

} catch (Exception e) {

e.printStackTrace();

}

}

private Mat myfacedetect(Mat srcmat) {

Imgproc.cvtColor(srcmat,srcmat,Imgproc.COLOR_RGBA2GRAY); // frames from inputFrame.rgba() are RGBA, not BGRA

MatOfRect faces = new MatOfRect();

classifier.detectMultiScale(srcmat,faces,1.1,1,0,new Size(30,30), new Size());

List<Rect> faceList = faces.toList();

// srcmat.copyTo(dstmat3);

if(faceList.size()>0) {

// Toast.makeText(MainActivity.this, "Michael test", Toast.LENGTH_SHORT).show();

for(Rect rect : faceList) {

Imgproc.rectangle(srcmat,rect.tl(),rect.br(),new Scalar(255,0,255),4,8,0);

}

}

return srcmat;

}

private Mat myfacedetect1(Mat mRgba) {

float mRelativefaceSize = 0.2f;

if (mAbsoluteFaceSize == 0) {

int height = mRgba.rows();

if (Math.round(height * mRelativefaceSize) > 0) {

mAbsoluteFaceSize = Math.round(height*mRelativefaceSize);

}

}

MatOfRect faces = new MatOfRect();

if(classifier != null) {

classifier.detectMultiScale(mRgba,faces,1.1,2,2,new Size(mAbsoluteFaceSize,mAbsoluteFaceSize), new Size());

}

Rect[] facesArray = faces.toArray();

Scalar faceRectColor = new Scalar(0,255,0,255);

for (int i = 0; i<facesArray.length;i++) {

Imgproc.rectangle(mRgba,facesArray[i].tl(),facesArray[i].br(),faceRectColor,2);

selectEyesArea(facesArray[i],mRgba);

}

return mRgba;

}

// Select the eye regions

private void selectEyesArea(Rect faceROI, Mat frame) {

int offy = (int) (faceROI.height * 0.15f);

int offx = (int) (faceROI.width * 0.12f);

int sh = (int) (faceROI.height * 0.35f);

int sw = (int) (faceROI.width * 0.3f);

int gap = (int) (faceROI.width * 0.25f);

Point lp_eye = new Point(faceROI.tl().x+offx,faceROI.tl().y+offy);

Point lp_end = new Point(lp_eye.x+sw,lp_eye.y+sh);

Imgproc.rectangle(frame,lp_eye,lp_end,EYE_RECT_COLOR,2);

int right_offx = (int) (faceROI.width * 0.08f);

Point rp_eye = new Point(faceROI.x+faceROI.width/2+right_offx,faceROI.tl().y+offy);

Point rp_end = new Point(rp_eye.x+sw,rp_eye.y+sh);

Imgproc.rectangle(frame,rp_eye,rp_end,EYE_RECT_COLOR,2);

// Detect the eyes with the cascade classifier

MatOfRect eyes = new MatOfRect();

Rect left_eye_roi = new Rect();

left_eye_roi.x = (int) lp_eye.x;

left_eye_roi.y = (int) lp_eye.y;

left_eye_roi.width = (int) (lp_end.x - lp_eye.x);

left_eye_roi.height = (int) (lp_end.y - lp_eye.y);

Rect right_eye_roi = new Rect();

right_eye_roi.x = (int) rp_eye.x;

right_eye_roi.y = (int) rp_eye.y;

right_eye_roi.width = (int) (rp_end.x - rp_eye.x);

right_eye_roi.height = (int) (rp_end.y - rp_eye.y);

// Cascade classifier

Mat leftEye = frame.submat(left_eye_roi);

Mat rightEye = frame.submat(right_eye_roi);

classifierEye.detectMultiScale(leftEye,eyes,1.15,2,0,new Size(30,30),new Size());

Rect[] eyesArray = eyes.toArray();

for(int i=0; i<eyesArray.length;i++){

Log.i(TAG, "Found left Eyes... ");

leftEye.submat(eyesArray[i]).copyTo(leftEye_template);

}

if(eyesArray.length==0){

Rect left_roi = matchEyeTemplate(leftEye,true);

if(left_roi != null) {

Imgproc.rectangle(leftEye,left_roi.tl(),left_roi.br(),EYE_RECT_COLOR,2);

}

}

eyes.release();

eyes = new MatOfRect();

classifierEye.detectMultiScale(rightEye,eyes,1.15,2,0,new Size(30,30),new Size());

eyesArray = eyes.toArray();

for(int i=0; i<eyesArray.length;i++){

Log.i(TAG, "Found right Eyes... ");

rightEye.submat(eyesArray[i]).copyTo(rightEye_template);

}

if(eyesArray.length==0){

Rect right_roi = matchEyeTemplate(rightEye,false); // false selects rightEye_template

if(right_roi != null) {

Imgproc.rectangle(rightEye,right_roi.tl(),right_roi.br(),EYE_RECT_COLOR,2);

}

}

}

private Rect matchEyeTemplate(Mat src, boolean left){

Mat tpl = left ? leftEye_template : rightEye_template;

if(tpl.cols() ==0 || tpl.rows() == 0) {

return null;

}

int height = src.rows() - tpl.rows()+1;

int width = src.cols() - tpl.cols()+1;

if(height<1 || width <1){

return null;

}

Mat result = new Mat(height,width, CvType.CV_32FC1);

// Template matching

int method = Imgproc.TM_CCOEFF_NORMED;

Imgproc.matchTemplate(src, tpl, result,method);

Core.MinMaxLocResult minMaxLocResult = Core.minMaxLoc(result);

Point maxloc = minMaxLocResult.maxLoc;

//ROI

Rect rect = new Rect();

rect.x = (int) (maxloc.x);

rect.y = (int) (maxloc.y);

rect.width = tpl.cols();

rect.height = tpl.rows();

result.release();

return rect;

}

private void initEyeDetector() {

try {

InputStream is = getResources().openRawResource(R.raw.haarcascade_eye_tree_eyeglasses);

File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);

File cascadeFile = new File(cascadeDir, "haarcascade_eye_tree_eyeglasses.xml");

FileOutputStream os = new FileOutputStream(cascadeFile);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

os.write(buffer, 0, bytesRead);

}

is.close();

os.close();

classifierEye = new CascadeClassifier(cascadeFile.getAbsolutePath());

cascadeFile.delete();

cascadeDir.delete();

} catch (Exception e) {

e.printStackTrace();

}

}

@Override

public void onClick(View view) {

switch (view.getId()){

case R.id.switchCamera:

if(isFrontCamera) {

cameraView.setCameraIndex(CameraBridgeViewBase.CAMERA_ID_BACK);

isFrontCamera=false;

System.out.println("isFrontCamera=false");

} else {

cameraView.setCameraIndex(CameraBridgeViewBase.CAMERA_ID_FRONT);

isFrontCamera=true;

System.out.println("isFrontCamera=true");

}

}

if(cameraView != null) {

cameraView.disableView();

}

cameraView.enableView();

System.out.println("..........onClick enableView............");

// Toast.makeText(this, "…enableView… ", Toast.LENGTH_SHORT).show();

}

@Override

protected void onDestroy() {

super.onDestroy();

if(cameraView != null) {

cameraView.disableView();

}

}

}
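The `myfacedetect1` method above derives the minimum face size passed to `detectMultiScale` from the frame height: 20% of the height, computed once and then cached in `mAbsoluteFaceSize`. That arithmetic can be checked in isolation with a small plain-Java sketch. `FaceSize` and `absoluteFaceSize` are hypothetical names introduced here for illustration; only the 0.2 factor and the "compute once, keep if positive" rule come from the listing.

```java
/** Hypothetical helper mirroring the min-face-size rule in myfacedetect1. */
class FaceSize {
    /**
     * Returns the cached size when it is already set (non-zero); otherwise
     * rounds frameHeight * relativeFaceSize and keeps it only if positive.
     */
    static int absoluteFaceSize(int frameHeight, float relativeFaceSize, int current) {
        if (current != 0) {
            return current; // already computed on an earlier frame
        }
        int candidate = Math.round(frameHeight * relativeFaceSize);
        return candidate > 0 ? candidate : 0;
    }

    public static void main(String[] args) {
        // A 720-pixel-high frame with the listing's 0.2 factor
        System.out.println(absoluteFaceSize(720, 0.2f, 0));
    }
}
```

A larger relative size makes detection cheaper but misses faces that are far from the camera, so the 0.2 factor is a trade-off rather than a fixed requirement.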

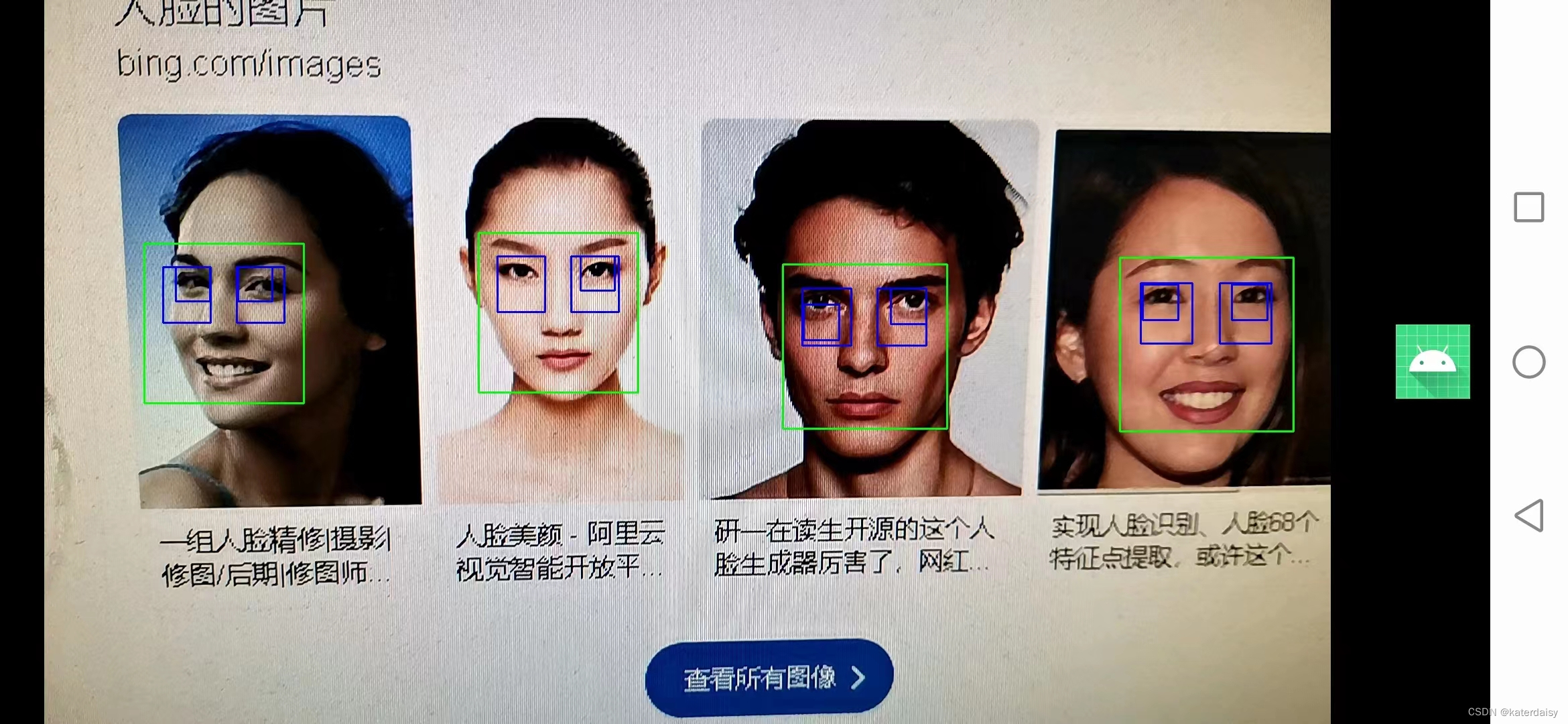
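The `selectEyesArea` method places the two eye search boxes at fixed fractions of the detected face rectangle instead of scanning the whole face. The same geometry can be sketched without OpenCV as shown here. `EyeRegions` and `Box` are hypothetical stand-ins (`Box` plays the role of `org.opencv.core.Rect`); the fractions 0.12, 0.15, 0.3, 0.35 and 0.08 are taken from the listing.

```java
/** Hypothetical sketch of the eye-box geometry used by selectEyesArea. */
class EyeRegions {
    /** Minimal x/y/width/height box standing in for org.opencv.core.Rect. */
    static final class Box {
        final int x, y, width, height;
        Box(int x, int y, int width, int height) {
            this.x = x; this.y = y; this.width = width; this.height = height;
        }
        @Override public String toString() {
            return "Box(" + x + ", " + y + ", " + width + ", " + height + ")";
        }
    }

    /** Left box: offset 12% / 15% from the face's top-left corner, 30% x 35% in size. */
    static Box leftEye(Box face) {
        int offx = (int) (face.width * 0.12f);
        int offy = (int) (face.height * 0.15f);
        return new Box(face.x + offx, face.y + offy,
                (int) (face.width * 0.3f), (int) (face.height * 0.35f));
    }

    /** Right box: same size and vertical offset, starting 8% right of the face centre. */
    static Box rightEye(Box face) {
        int rightOffx = (int) (face.width * 0.08f);
        int offy = (int) (face.height * 0.15f);
        return new Box(face.x + face.width / 2 + rightOffx, face.y + offy,
                (int) (face.width * 0.3f), (int) (face.height * 0.35f));
    }

    public static void main(String[] args) {
        Box face = new Box(50, 40, 215, 215);
        System.out.println(leftEye(face));
        System.out.println(rightEye(face));
    }
}
```

Restricting the eye classifier to these boxes keeps each `detectMultiScale` call small, at the cost of assuming a roughly upright, frontal face.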

Result:

![Result](https://img-blog.csdnimg.cn/9dd04af1fa0541278b024a30f43a7912.png)
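When the eye classifier finds nothing, `matchEyeTemplate` falls back to `Imgproc.matchTemplate` with `TM_CCOEFF_NORMED` and takes the location of the maximum score. To show what that score measures, here is a plain-Java sketch of the same idea on single-channel arrays: both the template and each image window are mean-centred, and their correlation is normalised to the range [-1, 1]. `TemplateMatch`, `Match` and `bestMatch` are hypothetical names, and this brute-force loop is an illustration only, not how OpenCV implements the call.

```java
/** Plain-Java sketch of TM_CCOEFF_NORMED on grayscale arrays; all names are hypothetical. */
class TemplateMatch {
    /** Top-left corner and score of the best match. */
    static final class Match {
        final int x, y;
        final double score;
        Match(int x, int y, double score) { this.x = x; this.y = y; this.score = score; }
    }

    /** Mean of the h-by-w window of m whose top-left corner is (top, left). */
    static double mean(double[][] m, int top, int left, int h, int w) {
        double sum = 0;
        for (int r = 0; r < h; r++)
            for (int c = 0; c < w; c++) sum += m[top + r][left + c];
        return sum / (h * w);
    }

    /** Returns the best match, or null when the template does not fit inside the image. */
    static Match bestMatch(double[][] img, double[][] tpl) {
        int th = tpl.length, tw = tpl[0].length;
        if (img.length < th || img[0].length < tw) return null;
        int rows = img.length - th + 1;    // result height, as in matchEyeTemplate
        int cols = img[0].length - tw + 1; // result width

        double tMean = mean(tpl, 0, 0, th, tw);
        double tNorm = 0;
        for (int r = 0; r < th; r++)
            for (int c = 0; c < tw; c++) {
                double d = tpl[r][c] - tMean;
                tNorm += d * d;
            }

        Match best = null;
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                double wMean = mean(img, y, x, th, tw);
                double num = 0, wNorm = 0;
                for (int r = 0; r < th; r++) {
                    for (int c = 0; c < tw; c++) {
                        double dw = img[y + r][x + c] - wMean;
                        num += dw * (tpl[r][c] - tMean);
                        wNorm += dw * dw;
                    }
                }
                double denom = Math.sqrt(tNorm * wNorm);
                // A flat window or flat template has no variance; treat it as uncorrelated
                double score = denom == 0 ? 0 : num / denom;
                if (best == null || score > best.score) best = new Match(x, y, score);
            }
        }
        return best;
    }
}
```

The listing accepts the maximum location unconditionally. One possible refinement, stated here as an assumption rather than something the original code does, is to ignore matches whose score falls below a chosen threshold so that a stale template does not draw a box on an unrelated region.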