I. Preparation

1.1 Install ZooKeeper

ZooKeeper download, installation and cluster setup

1.2 Install Hadoop

Hadoop cluster setup

1.3 Download HBase

https://mirrors.tuna.tsinghua.edu.cn/apache/hbase/

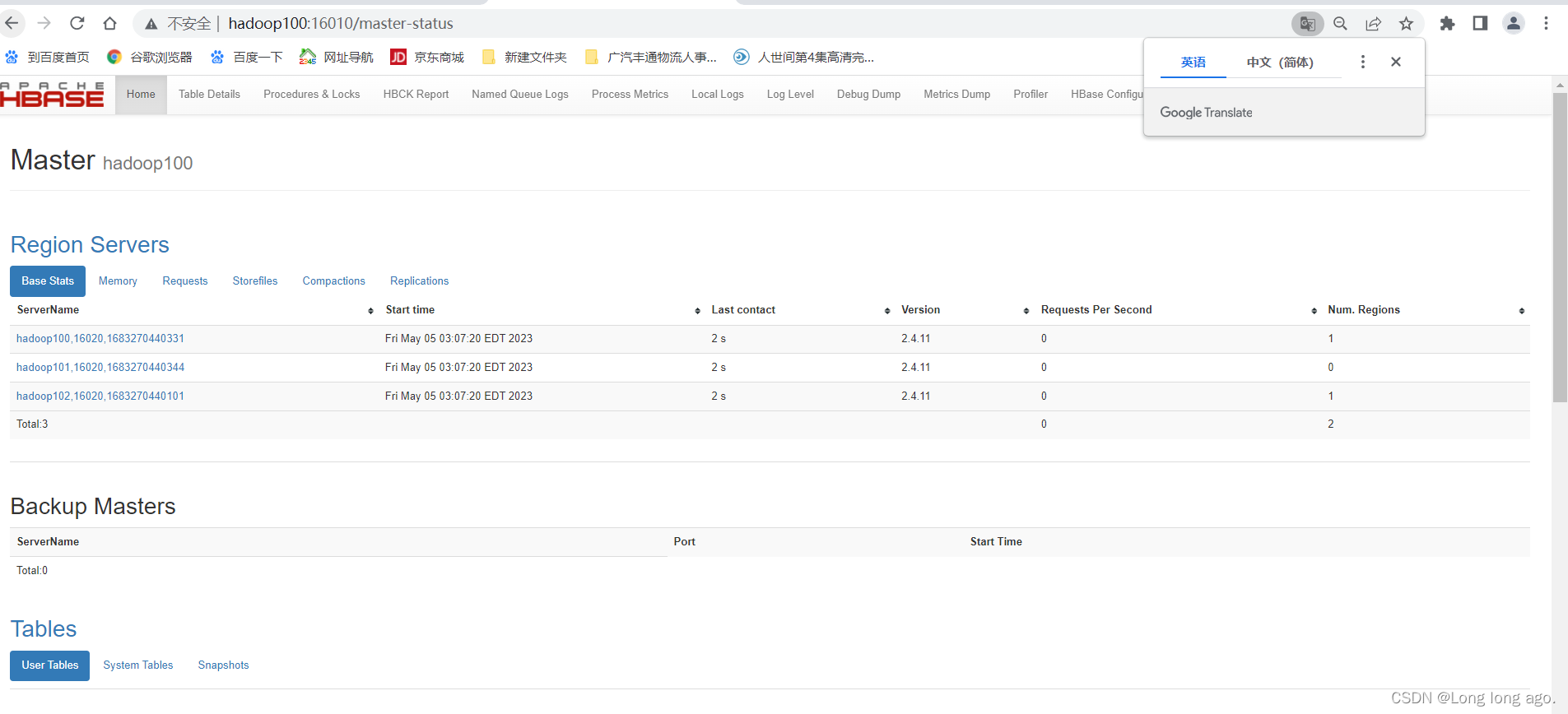

II. Standard Deployment

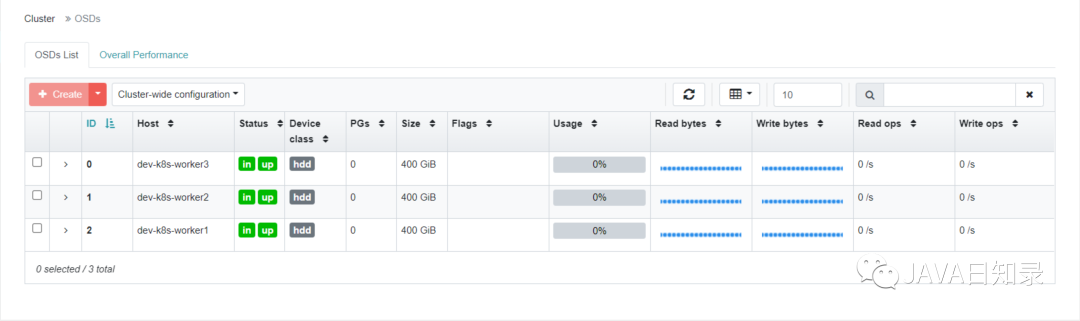

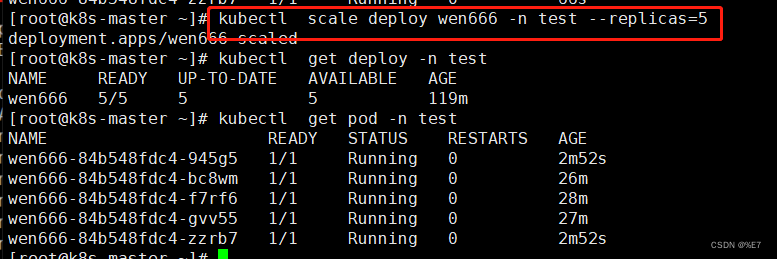

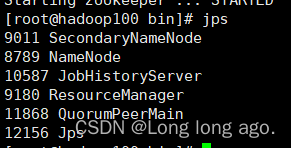

2.1 Check that ZooKeeper and Hadoop are running normally
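A minimal sketch of this check, assuming `jps` is available on every node and that ZooKeeper and HDFS show up under their usual `jps` class names (`QuorumPeerMain`, `NameNode`, `DataNode`). The helper name `require_procs` is hypothetical; it only inspects text, so it can be fed the output of `ssh <host> jps` for each node.

```shell
#!/bin/sh
# require_procs (hypothetical helper): read `jps` output on stdin and check that
# every process name given as an argument appears as the main class of some line.
# Prints the missing names and returns 1 if anything is absent.
require_procs() {
    jps_out=$(cat)
    missing=""
    for name in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the second column exactly
        if ! printf '%s\n' "$jps_out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    return 0
}

# Example (assumes passwordless ssh and jps on the remote PATH):
# for host in hadoop100 hadoop101 hadoop102; do
#     ssh "$host" jps | require_procs QuorumPeerMain DataNode || echo "check $host"
# done
```

Which daemons to expect depends on your own Hadoop layout; the NameNode, for example, only runs on one host.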

2.2 Extract

Run this inside /usr/local/src/hbase, the parent directory of the HBASE_HOME set in 2.3:

tar -zxvf hbase-2.4.11-bin.tar.gz

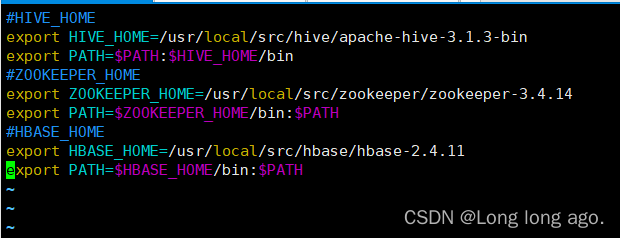

2.3 Configure environment variables

vim /etc/profile.d/my_env.sh

#HBASE_HOME

export HBASE_HOME=/usr/local/src/hbase/hbase-2.4.11

export PATH=$HBASE_HOME/bin:$PATH

Reload the environment variables:

source /etc/profile.d/my_env.sh
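If you script this step, it helps to make it safe to re-run so the block is not appended twice. A minimal sketch; the function name `add_hbase_env` is hypothetical and the paths are the ones used above.

```shell
#!/bin/sh
# add_hbase_env (hypothetical helper): append the HBASE_HOME block from this
# section to an env file, but only if HBASE_HOME is not already exported there.
# $1 = env file (this article uses /etc/profile.d/my_env.sh)
# $2 = HBase install directory
add_hbase_env() {
    env_file=$1
    hbase_home=$2
    if grep -q '^export HBASE_HOME=' "$env_file" 2>/dev/null; then
        echo "HBASE_HOME already set in $env_file"
        return 0
    fi
    # Single quotes keep $HBASE_HOME and $PATH literal, so they are expanded
    # when the file is sourced, not when this function runs.
    {
        echo '#HBASE_HOME'
        echo "export HBASE_HOME=$hbase_home"
        echo 'export PATH=$HBASE_HOME/bin:$PATH'
    } >> "$env_file"
}

# Usage (as root):
# add_hbase_env /etc/profile.d/my_env.sh /usr/local/src/hbase/hbase-2.4.11
# . /etc/profile.d/my_env.sh && echo "$HBASE_HOME"
```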

2.4 Modify the configuration

2.4.1 Configure hbase-env.sh so that HBase does not use its bundled ZooKeeper

vim $HBASE_HOME/conf/hbase-env.sh

export HBASE_MANAGES_ZK=false
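The stock hbase-env.sh usually already contains a commented-out `# export HBASE_MANAGES_ZK=true` line, so editing by script means replacing that line rather than blindly appending. A minimal sketch; `set_env_var` is a hypothetical helper, not part of HBase.

```shell
#!/bin/sh
# set_env_var (hypothetical helper): make FILE contain exactly one active
# "export NAME=VALUE" line. The first existing line for NAME (commented out or
# not) is replaced in place, later duplicates are dropped, and if there is none
# the line is appended at the end.
set_env_var() {
    file=$1 name=$2 value=$3
    tmp=$(mktemp) || return 1
    awk -v n="$name" -v v="$value" '
        BEGIN { pat = "^[ \t]*#?[ \t]*export[ \t]+" n "=" }
        $0 ~ pat {
            if (!replaced) { print "export " n "=" v; replaced = 1 }
            next
        }
        { print }
        END { if (!replaced) print "export " n "=" v }
    ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # copy back with cat so the original file keeps its permissions
    cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage:
# set_env_var "$HBASE_HOME/conf/hbase-env.sh" HBASE_MANAGES_ZK false
```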

2.4.2 Configure hbase-site.xml

vim $HBASE_HOME/conf/hbase-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

/*

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

-->

<configuration>

<!--

The following properties are set for running HBase as a single process on a

developer workstation. With this configuration, HBase is running in

"stand-alone" mode and without a distributed file system. In this mode, and

without further configuration, HBase and ZooKeeper data are stored on the

local filesystem, in a path under the value configured for `hbase.tmp.dir`.

This value is overridden from its default value of `/tmp` because many

systems clean `/tmp` on a regular basis. Instead, it points to a path within

this HBase installation directory.

Running against the `LocalFileSystem`, as opposed to a distributed

filesystem, runs the risk of data integrity issues and data loss. Normally

HBase will refuse to run in such an environment. Setting

`hbase.unsafe.stream.capability.enforce` to `false` overrides this behavior,

permitting operation. This configuration is for the developer workstation

only and __should not be used in production!__

See also https://hbase.apache.org/book.html#standalone_dist

-->

<property>

<name>hbase.zookeeper.quorum</name>

<!-- ZooKeeper nodes -->

<value>hadoop100,hadoop101,hadoop102</value>

<description>Comma-separated list of servers in the ZooKeeper ensemble.

</description>

</property>

<!-- <property>-->

<!-- <name>hbase.zookeeper.property.dataDir</name>-->

<!-- <value>/export/zookeeper</value>-->

<!-- <description> Remember to update the ZK configuration file as well -->

<!-- ZK data must not be stored in a temporary directory-->

<!-- </description>-->

<!-- </property>-->

<property>

<name>hbase.rootdir</name>

<!-- Adjust the HDFS address to your own cluster; it should match fs.defaultFS in Hadoop's core-site.xml -->

<value>hdfs://hadoop100:9000/hbase</value>

<description>The directory shared by RegionServers.

</description>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>

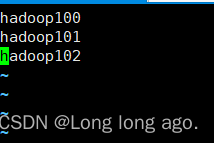
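Because the `hbase.rootdir` address has to point at your own NameNode, a quick way to catch a wrong host or port (9000 vs 8020, for example) is to compare it with Hadoop's `fs.defaultFS`, which `hdfs getconf -confKey fs.defaultFS` prints. A minimal sketch; `check_rootdir` is a hypothetical helper and only compares the URI prefix.

```shell
#!/bin/sh
# check_rootdir (hypothetical helper): succeed if the hbase.rootdir URI lives
# under Hadoop's fs.defaultFS (same scheme, host and port).
# $1 = hbase.rootdir value, $2 = fs.defaultFS value
check_rootdir() {
    rootdir=$1
    defaultfs=${2%/}          # drop a trailing slash, if any
    case $rootdir in
        "$defaultfs"/*) return 0 ;;
        *) echo "hbase.rootdir $rootdir is not under fs.defaultFS $defaultfs"
           return 1 ;;
    esac
}

# Usage on a node with the Hadoop client configured:
# check_rootdir hdfs://hadoop100:9000/hbase "$(hdfs getconf -confKey fs.defaultFS)"
```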

2.4.3 Configure regionservers

vim $HBASE_HOME/conf/regionservers

hadoop100

hadoop101

hadoop102
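The regionservers file is just one hostname per line, so it can also be written without an editor. A minimal sketch; `write_hosts` is a hypothetical helper, and the hostnames are the ones used in this article.

```shell
#!/bin/sh
# write_hosts (hypothetical helper): overwrite FILE with one hostname per line,
# the format used by conf/regionservers. Refuses to write an empty list.
write_hosts() {
    file=$1; shift
    if [ "$#" -eq 0 ]; then
        echo "usage: write_hosts FILE HOST..." >&2
        return 1
    fi
    printf '%s\n' "$@" > "$file"
}

# Usage:
# write_hosts "$HBASE_HOME/conf/regionservers" hadoop100 hadoop101 hadoop102
```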

2.4.4 Use Hadoop's jar packages to resolve the log4j compatibility issue between HBase and Hadoop

mv $HBASE_HOME/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar $HBASE_HOME/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar.bak
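Since section 2.6 below ends up undoing this rename on one node, it can be handy to have the change and its reversal as a pair. A minimal sketch; the function names are hypothetical, and the `slf4j-reload4j-*.jar` pattern is an assumption generalized from the 2.4.11 file name above.

```shell
#!/bin/sh
# Hypothetical helpers: rename HBase's bundled SLF4J binding out of the way
# (disable) or put it back (restore). $1 = directory; defaults to HBase's
# client-facing-thirdparty lib directory.
jar_dir() { echo "${1:-$HBASE_HOME/lib/client-facing-thirdparty}"; }

disable_slf4j_binding() {
    dir=$(jar_dir "$1")
    for jar in "$dir"/slf4j-reload4j-*.jar; do
        [ -e "$jar" ] || continue   # glob matched nothing: already disabled
        mv "$jar" "$jar.bak" && echo "disabled $jar"
    done
}

restore_slf4j_binding() {
    dir=$(jar_dir "$1")
    for bak in "$dir"/slf4j-reload4j-*.jar.bak; do
        [ -e "$bak" ] || continue   # nothing to restore
        mv "$bak" "${bak%.bak}" && echo "restored ${bak%.bak}"
    done
}

# Usage:
# disable_slf4j_binding     # same effect as the mv command above
# restore_slf4j_binding     # undo it on a node that loses its logger binding
```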

2.4.5 Distribute to the other nodes

scp -r /usr/local/src/hbase root@hadoop102:/usr/local/src

scp -r /usr/local/src/hbase root@hadoop101:/usr/local/src

Environment variables:

scp -r /etc/profile.d/my_env.sh root@hadoop101:/etc/profile.d

scp -r /etc/profile.d/my_env.sh root@hadoop102:/etc/profile.d

Remember to source the environment variables on every node:

source /etc/profile.d/my_env.sh
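The four scp commands above can be wrapped in a loop over the target hosts. A minimal sketch, assuming passwordless root ssh as the commands above already do; `distribute` and the `RUN` dry-run switch are hypothetical, not an existing tool.

```shell
#!/bin/sh
# distribute (hypothetical helper): copy the HBase directory and the env file
# to each host given as an argument. Set RUN=echo to print the commands
# instead of running them (dry run).
distribute() {
    for host in "$@"; do
        ${RUN:-} scp -r /usr/local/src/hbase "root@$host:/usr/local/src" || return 1
        # -r is not needed for a single file
        ${RUN:-} scp /etc/profile.d/my_env.sh "root@$host:/etc/profile.d" || return 1
    done
}

# Usage:
# RUN=echo distribute hadoop101 hadoop102   # preview the commands
# distribute hadoop101 hadoop102            # copy for real
```

You still need to source my_env.sh (or log in again) on each target node afterwards.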

2.5 Start

2.5.1 Start a single daemon

$HBASE_HOME/bin/hbase-daemon.sh start master

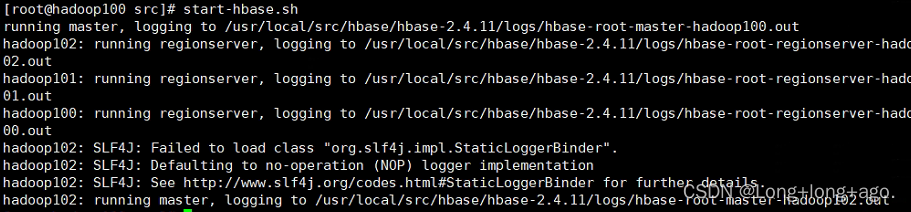

2.5.2 Start the whole cluster

$HBASE_HOME/bin/start-hbase.sh

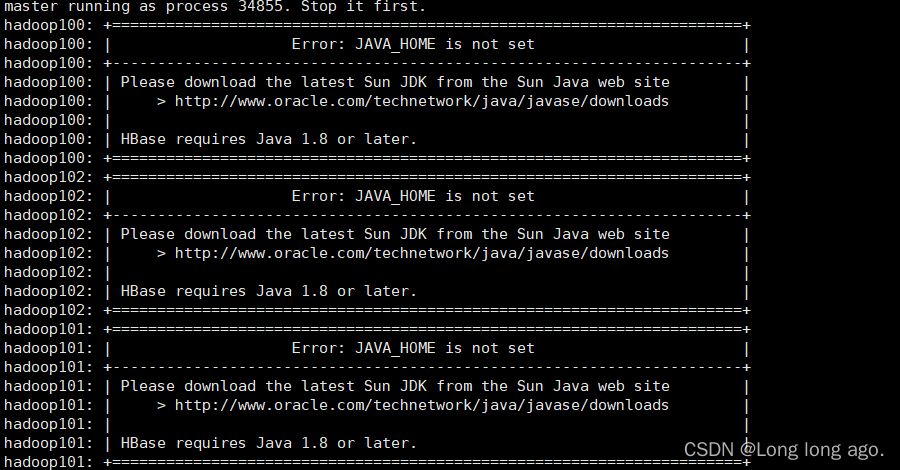
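Besides the web UI, the HBase shell `status` command can confirm that all RegionServers joined, and `hbase shell -n` runs it non-interactively. A minimal sketch; `check_status` is hypothetical, and it assumes the summary line looks like `1 active master, 0 backup masters, 3 servers, 0 dead, 1.0000 average load`, which may differ between HBase versions.

```shell
#!/bin/sh
# check_status (hypothetical helper): parse the summary line of the HBase shell
# `status` command (read from stdin) and verify the number of live
# RegionServers and that none are dead.
# $1 = expected number of live RegionServers
check_status() {
    expected=$1
    line=$(grep 'active master' | head -n 1)
    servers=$(printf '%s\n' "$line" | sed -n 's/.* \([0-9][0-9]*\) servers,.*/\1/p')
    dead=$(printf '%s\n' "$line" | sed -n 's/.* \([0-9][0-9]*\) dead,.*/\1/p')
    if [ -z "$servers" ] || [ -z "$dead" ]; then
        echo "could not parse: $line"
        return 2
    fi
    echo "live=$servers dead=$dead"
    [ "$servers" -eq "$expected" ] && [ "$dead" -eq 0 ]
}

# Usage (three RegionServers in this article's cluster):
# echo "status" | hbase shell -n 2>/dev/null | check_status 3
```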

2.6 Errors

Error: JAVA_HOME is not set

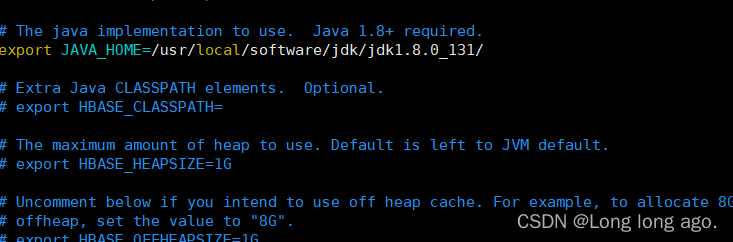

2.6.1 Cause: JAVA_HOME is not configured. Set it in hbase-env.sh:

vim $HBASE_HOME/conf/hbase-env.sh

export JAVA_HOME=/usr/local/software/jdk/jdk1.8.0_131/

Remember to distribute this file to the other nodes as well. Note that JAVA_HOME may be a different path on other nodes.

Then restart.
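Because the same hbase-env.sh is copied everywhere, it is worth checking that the JAVA_HOME it sets actually exists on every node. A minimal sketch; `check_java_home` is hypothetical, and the ssh loop assumes passwordless ssh to the hosts used in this article.

```shell
#!/bin/sh
# check_java_home (hypothetical helper): succeed if DIR looks like a usable
# JAVA_HOME, i.e. it contains an executable bin/java. A trailing slash, as in
# the path above, is accepted.
check_java_home() {
    dir=${1%/}
    if [ -x "$dir/bin/java" ]; then
        echo "ok: $dir"
    else
        echo "no executable bin/java under $dir"
        return 1
    fi
}

# Usage: read the value from hbase-env.sh and test the same path on each node.
# jh=$(sed -n 's/^export JAVA_HOME=//p' "$HBASE_HOME/conf/hbase-env.sh")
# check_java_home "$jh"
# for host in hadoop101 hadoop102; do
#     ssh "$host" "test -x '${jh%/}/bin/java'" || echo "$host: JAVA_HOME $jh is wrong"
# done
```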

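If startup keeps reporting

Failed to load class "org.slf4j.impl.StaticLoggerBinder"

Defaulting to no-operation (NOP) logger implementation

see section 2.4.4 (Use Hadoop's jar packages to resolve the log4j compatibility issue between HBase and Hadoop).

If the message still appears, find out which node reports it, delete HBase on that node, and distribute HBase to it again.

If it still persists: in my case the problem was on node hadoop102. On hadoop102 I restored the jar that was renamed in 2.4.4, restarted, and the message was gone.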
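The SLF4J message means SLF4J found no binding on the classpath, so one way to find the bad node is to list which binding jars each node actually has. A minimal sketch; `list_slf4j_bindings` is hypothetical, and the jar name patterns (`slf4j-reload4j-*.jar`, `slf4j-log4j12-*.jar`) are assumptions to adjust for your Hadoop and HBase versions. Presumably, a node where HBase cannot find Hadoop's jars (for example because HADOOP_HOME is not set there) ends up with no binding once HBase's own jar is renamed, which would match what happened on hadoop102.

```shell
#!/bin/sh
# list_slf4j_bindings (hypothetical helper): print SLF4J binding jars found
# under the given directories. Missing directories are skipped. An empty
# result on a node suggests SLF4J will fall back to the NOP logger there.
list_slf4j_bindings() {
    for dir in "$@"; do
        [ -d "$dir" ] || continue
        find "$dir" -name 'slf4j-reload4j-*.jar' -o -name 'slf4j-log4j12-*.jar'
    done
}

# Usage on one node (assumes HBASE_HOME and HADOOP_HOME are set):
# list_slf4j_bindings "$HBASE_HOME/lib" "$HADOOP_HOME/share/hadoop"
```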

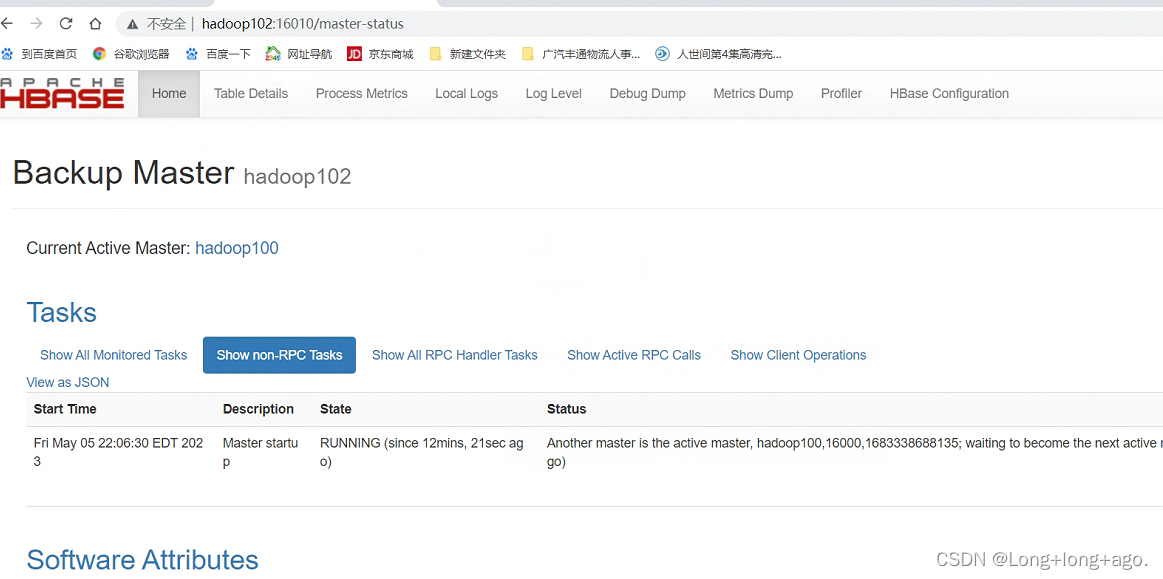

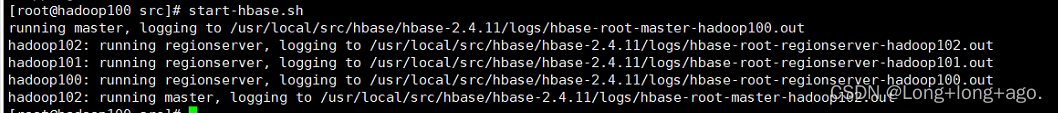

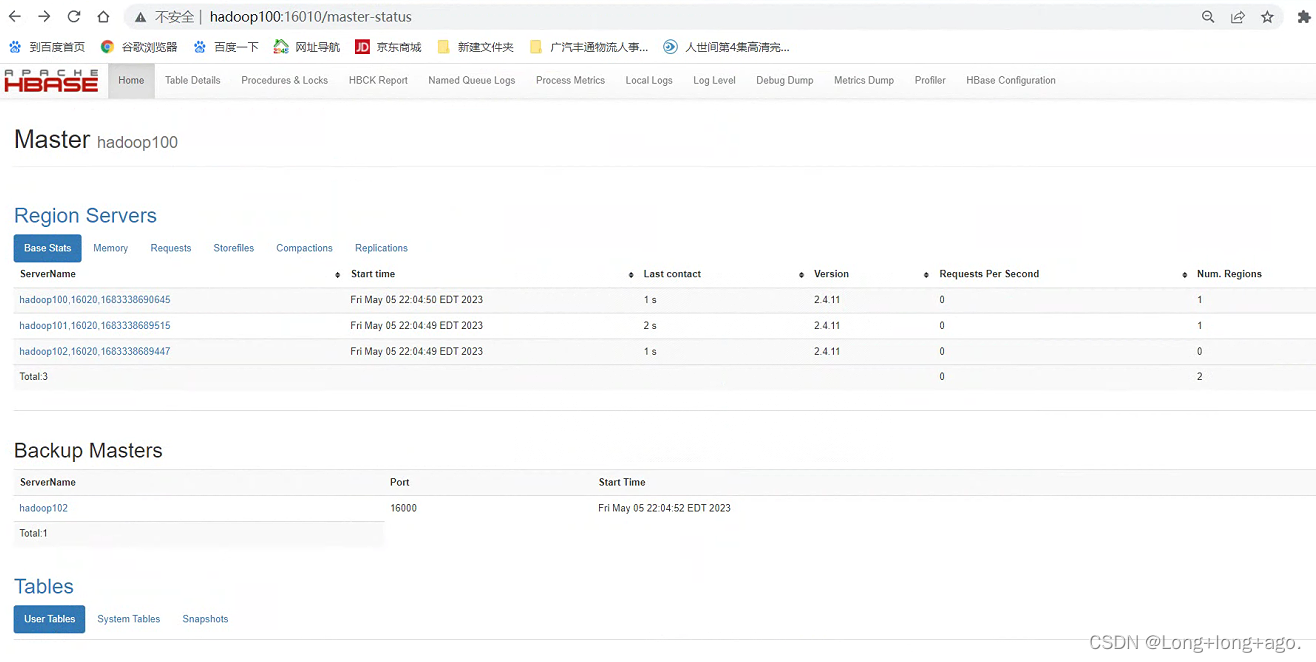

III. High Availability

vim $HBASE_HOME/conf/backup-masters

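Add the IP address of each node that should act as a backup master, one per line.

Remember to distribute the file to the other nodes.

Restart HBase.

A backup master does not show any RegionServer information at first. Only after the active master goes down does it take over and pull the RegionServer information.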
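Before restarting, a quick sanity check of the backup-masters file can catch an empty file or the active master's own host being listed (which would put a second HMaster on the same machine and provide no failover). A minimal sketch; `check_backup_masters` is hypothetical, and hadoop101 as the backup is only an example choice.

```shell
#!/bin/sh
# check_backup_masters (hypothetical helper): sanity-check a backup-masters file.
# $1 = file, $2 = host running the active master (where start-hbase.sh is run).
# Fails if no hosts are listed or if the active master's own host is listed.
check_backup_masters() {
    file=$1 active=$2
    hosts=$(grep -v '^[[:space:]]*$' "$file" 2>/dev/null)
    if [ -z "$hosts" ]; then
        echo "no backup masters listed in $file"
        return 1
    fi
    if printf '%s\n' "$hosts" | grep -qxF "$active"; then
        echo "$active runs the active master and should not be a backup"
        return 1
    fi
    echo "backup masters: $(printf '%s\n' "$hosts" | tr '\n' ' ')"
}

# Usage:
# echo hadoop101 > "$HBASE_HOME/conf/backup-masters"
# check_backup_masters "$HBASE_HOME/conf/backup-masters" hadoop100
```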