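1. Introduction to CDC

1.1 What is CDC?

CDC is short for Change Data Capture. The core idea is to monitor and capture changes in a database (inserts, updates and deletes of data or tables, among others), record these changes completely in the order in which they occur, and write them to message middleware so that other services can subscribe to and consume them.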
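Here is a minimal in-memory sketch of the idea. It assumes a single table keyed by `id`. Changes are appended to a log in the order they happen, and a subscriber rebuilds the table by replaying that log. `ChangeLog`, `Change` and `replay` are hypothetical names used only for illustration. A real pipeline would publish the changes to message middleware such as Kafka rather than keep them in a `List`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of a CDC change log: an append-only, ordered list of row changes.
final class ChangeLog {

    enum Kind { INSERT, UPDATE, DELETE }

    // One captured change to a row identified by its primary key.
    static final class Change {
        final Kind kind;
        final int id;
        final String value; // new row value; null for DELETE

        Change(Kind kind, int id, String value) {
            this.kind = kind;
            this.id = id;
            this.value = value;
        }
    }

    private final List<Change> log = new ArrayList<>();

    // Capture side: record every change in the order it happened.
    void append(Change change) {
        log.add(change);
    }

    // Read-only view of the log, as a subscriber would see it.
    List<Change> read() {
        return Collections.unmodifiableList(log);
    }

    // Consume side: replaying the whole log in order reproduces the source table.
    static Map<Integer, String> replay(List<Change> changes) {
        Map<Integer, String> table = new LinkedHashMap<>();
        for (Change c : changes) {
            switch (c.kind) {
                case INSERT:
                case UPDATE:
                    table.put(c.id, c.value);
                    break;
                case DELETE:
                    table.remove(c.id);
                    break;
            }
        }
        return table;
    }
}
```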

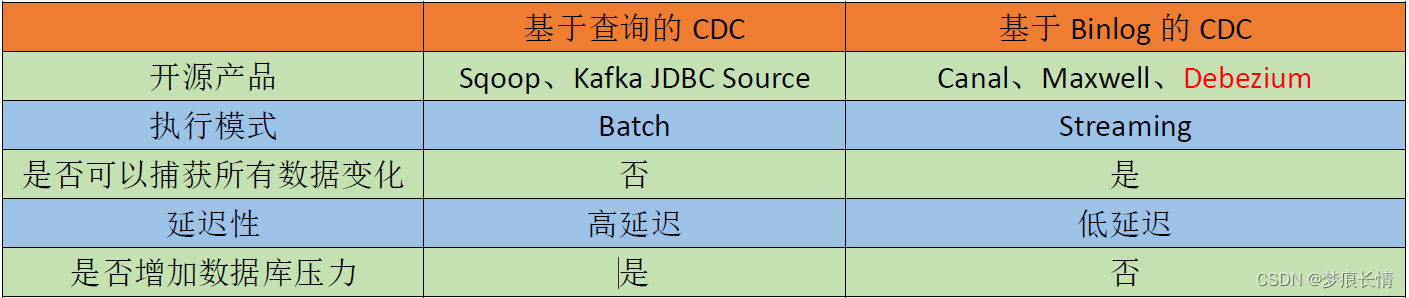

1.2 Types of CDC

CDC falls into two main categories, query-based and binlog-based. The differences between them are:

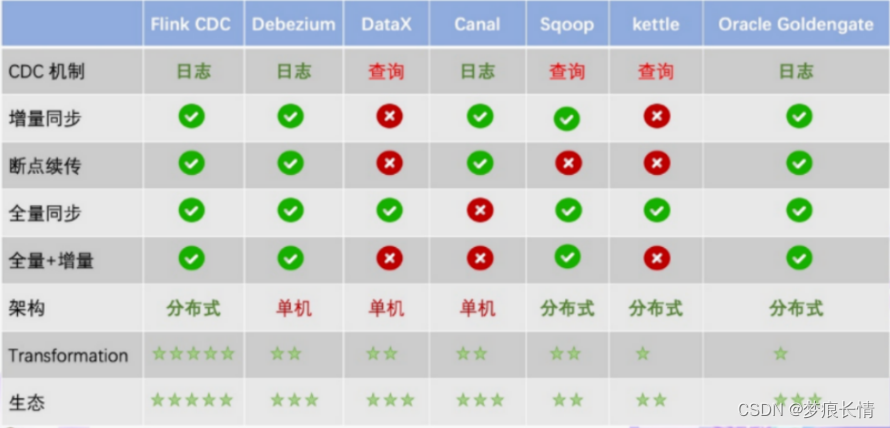
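The practical gap between the two approaches can be shown with a small simulation. It assumes that query-based CDC polls a hypothetical `update_time` column (roughly `SELECT * FROM t WHERE update_time > ?`). Binlog-based CDC, by contrast, reads every change from the log. Between two polls, several updates to the same row collapse into its latest value, and a deleted row simply no longer matches the query. The log keeps each change in order. All class and method names here are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation contrasting query-based polling with log-based capture.
final class QueryVsLogCdc {

    // A row with a "last modified" timestamp, which query-based CDC depends on.
    static final class Row {
        final String value;
        final long updateTime;

        Row(String value, long updateTime) {
            this.value = value;
            this.updateTime = updateTime;
        }
    }

    final Map<Integer, Row> table = new LinkedHashMap<>();
    final List<String> binlog = new ArrayList<>(); // every change, in order
    private long clock = 0;

    void upsert(int id, String value) {
        table.put(id, new Row(value, ++clock));
        binlog.add("upsert " + id + "=" + value);
    }

    void delete(int id) {
        table.remove(id);
        clock++;
        binlog.add("delete " + id);
    }

    long now() {
        return clock;
    }

    // Query-based CDC: only sees the current state of rows that still exist
    // and were modified after the last poll.
    List<String> poll(long lastSeen) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Integer, Row> e : table.entrySet()) {
            if (e.getValue().updateTime > lastSeen) {
                out.add("upsert " + e.getKey() + "=" + e.getValue().value);
            }
        }
        return out;
    }
}
```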

1.3 Differences between Canal, Maxwell, Debezium and FlinkCDC

1.4 What is FlinkCDC?

1.5 FlinkCDC with the DataStream API

1.5.1 Add the dependencies

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>Flink-CDC</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.47</version>
        </dependency>
        <dependency>
            <groupId>com.ververica</groupId>
            <artifactId>flink-connector-mysql-cdc</artifactId>
            <version>2.0.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-planner-blink_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.28</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <version>3.0.0</version>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

1.5.2 Create the FlinkCDC test class

```java
package com.lzl;

import com.ververica.cdc.connectors.mysql.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.DebeziumSourceFunction;
import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**
 * @author lzl
 * @create 2023-05-04 11:21
 * @name FlinkCDC
 */
public class FlinkCDC {

    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Build the SourceFunction with FlinkCDC
        DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()
                .hostname("ch01")
                .port(3306)
                .username("root")
                .password("xxb@5196")
                .databaseList("cdc_test")
                .tableList("cdc_test.user_info") // table names must be prefixed with the database name
                .deserializer(new StringDebeziumDeserializationSchema()) // built-in deserializer; we will write our own later
                .startupOptions(StartupOptions.initial())
                .build();

        // 3. Read data from MySQL with the CDC source
        DataStreamSource<String> dataStreamSource = env.addSource(sourceFunction);

        // 4. Print the data
        dataStreamSource.print();

        // 5. Start the job
        env.execute("FlinkCDC");
    }
}
```

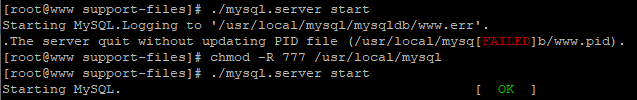

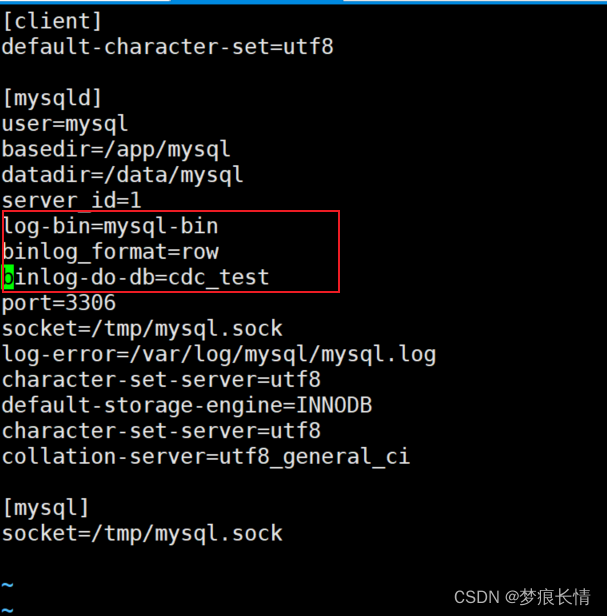
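`StartupOptions.initial()` makes the connector take a snapshot of the monitored tables on first startup and then continue reading the binlog. `StartupOptions.latest()` skips the snapshot and only reads changes made after the job starts. This is why a row that already exists when the job starts is emitted with `op=r` (read) in the output of 1.5.5. The plain-Java sketch below only simulates the resulting event order. `StartupSimulation`, `Mode` and `events` are hypothetical names, not part of the connector API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation of the event order produced by two CDC startup modes.
final class StartupSimulation {

    enum Mode { INITIAL, LATEST }

    // existingRows: rows already in the table when the job starts.
    // newChanges:   changes written to the binlog after the job starts, as "op:row".
    static List<String> events(Mode mode, List<String> existingRows, List<String> newChanges) {
        List<String> out = new ArrayList<>();
        if (mode == Mode.INITIAL) {
            // Snapshot phase: every existing row is emitted as a read ("r") event.
            for (String row : existingRows) {
                out.add("r:" + row);
            }
        }
        // Streaming phase: both modes continue with the binlog changes.
        out.addAll(newChanges);
        return out;
    }
}
```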

1.5.3 Enable the binlog and create the database

```shell
[root@ch01 ~]# vim /etc/my.cnf
```

Add the following:
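Here is a minimal fragment that matches the output shown below: server ID 1 in `show binlog events`, binlog base name `/data/mysql/mysql-bin`, and `Binlog_Do_DB` = `cdc_test`. The exact lines are an assumption reconstructed from that output. `binlog_format=ROW` is included because Debezium-based connectors such as FlinkCDC read row-based binlog events. MySQL 5.7 also requires `server-id` to be set whenever binary logging is enabled.

```ini
[mysqld]
# Assumed settings, reconstructed from the show variables / show master status output
server-id=1
log-bin=/data/mysql/mysql-bin
# Row-based events are what Debezium-based CDC connectors consume
binlog_format=ROW
# Only write binlog events for the cdc_test database
binlog-do-db=cdc_test
```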

Then restart the MySQL server:

```shell
[root@ch01 ~]# systemctl restart mysqld
```

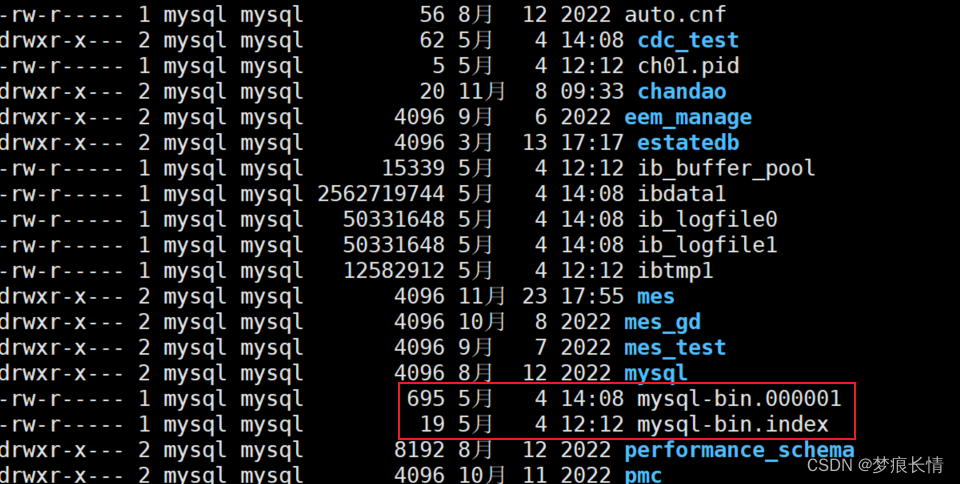

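A `mysql-bin.index` file should then appear in the MySQL data directory, which is usually /var/lib/mysql by default.

On my machine the binlog base name is /data/mysql/mysql-bin, so the files are under /data/mysql.

Alternatively, open the MySQL client and run the following commands: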

```
mysql> show variables like 'log_%';
+----------------------------------------+-----------------------------+
| Variable_name                          | Value                       |
+----------------------------------------+-----------------------------+
| log_bin                                | ON                          |
| log_bin_basename                       | /data/mysql/mysql-bin       |
| log_bin_index                          | /data/mysql/mysql-bin.index |
| log_bin_trust_function_creators        | OFF                         |
| log_bin_use_v1_row_events              | OFF                         |
| log_builtin_as_identified_by_password  | OFF                         |
| log_error                              | /var/log/mysql/mysql.log    |
| log_error_verbosity                    | 3                           |
| log_output                             | FILE                        |
| log_queries_not_using_indexes          | OFF                         |
| log_slave_updates                      | OFF                         |
| log_slow_admin_statements              | OFF                         |
| log_slow_slave_statements              | OFF                         |
| log_statements_unsafe_for_binlog       | ON                          |
| log_syslog                             | OFF                         |
| log_syslog_facility                    | daemon                      |
| log_syslog_include_pid                 | ON                          |
| log_syslog_tag                         |                             |
| log_throttle_queries_not_using_indexes | 0                           |
| log_timestamps                         | UTC                         |
| log_warnings                           | 2                           |
+----------------------------------------+-----------------------------+
21 rows in set (0.00 sec)

mysql> show binary logs;
+------------------+-----------+
| Log_name         | File_size |
+------------------+-----------+
| mysql-bin.000001 |       154 |
+------------------+-----------+
1 row in set (0.00 sec)

mysql> show master status;
+------------------+----------+--------------+------------------+-------------------+
| File             | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |
+------------------+----------+--------------+------------------+-------------------+
| mysql-bin.000001 |      154 | cdc_test     |                  |                   |
+------------------+----------+--------------+------------------+-------------------+
1 row in set (0.00 sec)

mysql> show binlog events;
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
| Log_name         | Pos | Event_type     | Server_id | End_log_pos | Info                                  |
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
| mysql-bin.000001 |   4 | Format_desc    |         1 |         123 | Server ver: 5.7.11-log, Binlog ver: 4 |
| mysql-bin.000001 | 123 | Previous_gtids |         1 |         154 |                                       |
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
2 rows in set (0.00 sec)
```

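```sql
show binary logs;                          # list the binlog files
show master status;                        # show the binlog file currently being written
show binlog events;                        # show the events of the first binlog file only
show binlog events in 'mysql-bin.000002';  # show the events of a specific binlog file
```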

Create the MySQL database `cdc_test`:

```
mysql> create database cdc_test;
Query OK, 1 row affected (0.00 sec)
```

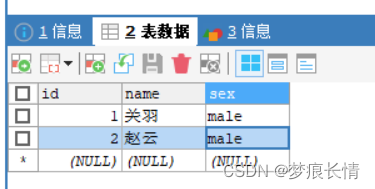

1.5.4 Create the user table user_info

```
mysql> use cdc_test;
Database changed
mysql> CREATE TABLE user_info (
    `id` int(2) primary key comment 'id',
    `name` varchar(255) comment 'name',
    `sex` varchar(255) comment 'gender'
) ENGINE=InnoDB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC COMMENT='user information table';
Query OK, 0 rows affected (0.01 sec)
```

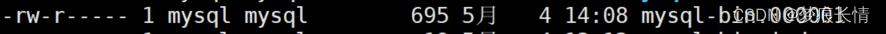

没插入数据之前,mysql-bin.000001的位置是在695.

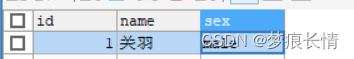

在表中插入一行数据之后看它有何变化?

说明MySQL日志有监控到了。同时位置在983那里。

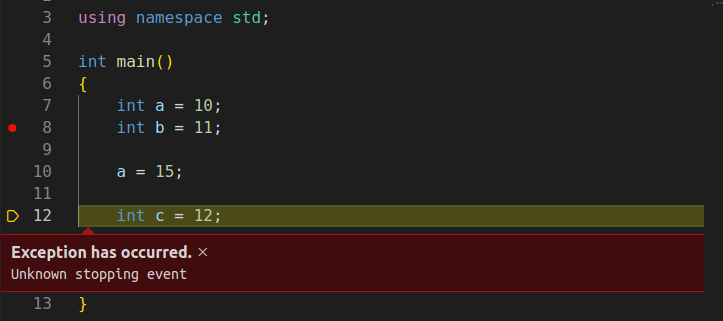

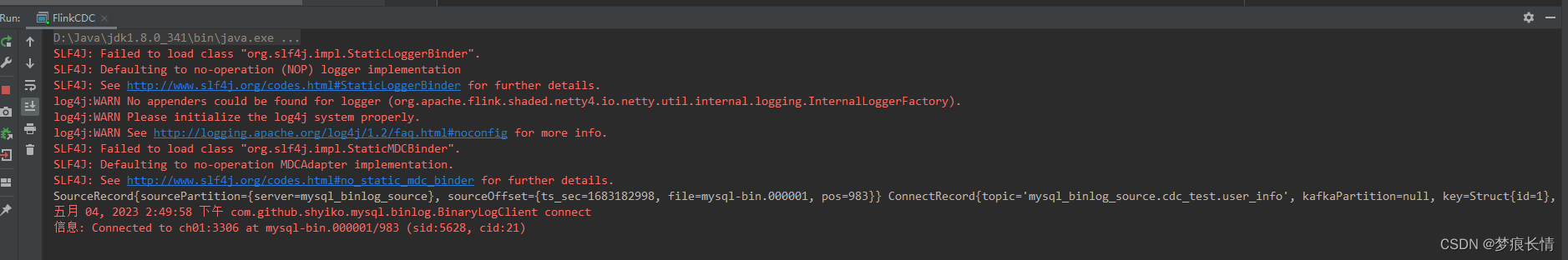

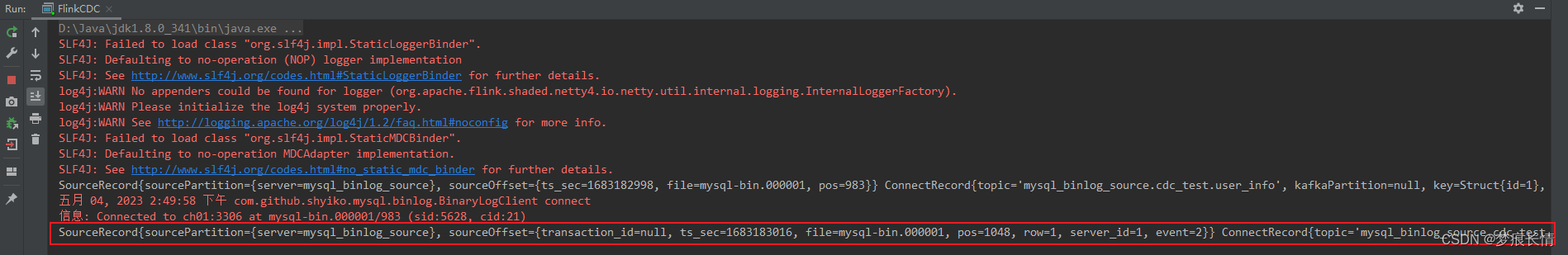

1.5.5 Start the FlinkCDC program

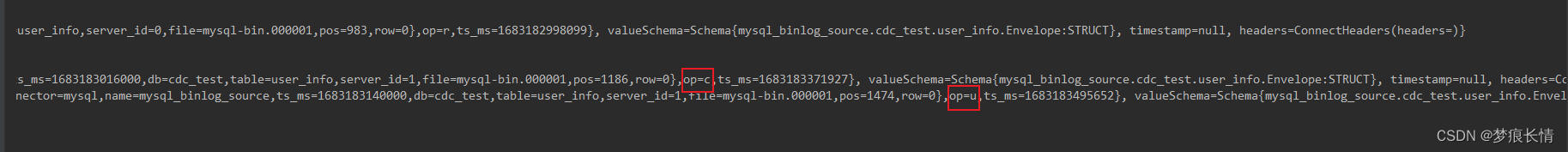

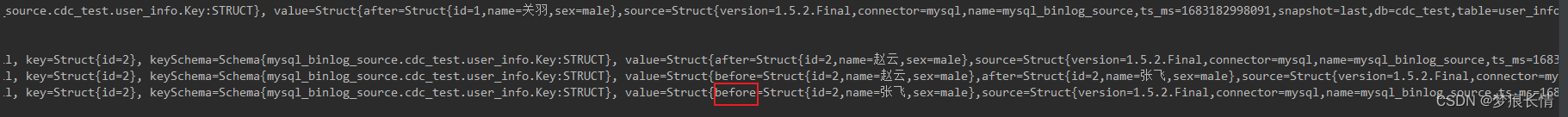

```
SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={ts_sec=1683182998, file=mysql-bin.000001, pos=983}}
ConnectRecord{topic='mysql_binlog_source.cdc_test.user_info', kafkaPartition=null, key=Struct{id=1}, keySchema=Schema{mysql_binlog_source.cdc_test.user_info.Key:STRUCT},
value=Struct{after=Struct{id=1,name=关羽,sex=male},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1683182998091,snapshot=last,db=cdc_test,table=user_info,server_id=0,file=mysql-bin.000001,pos=983,row=0},op=r,ts_ms=1683182998099},
valueSchema=Schema{mysql_binlog_source.cdc_test.user_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}
```

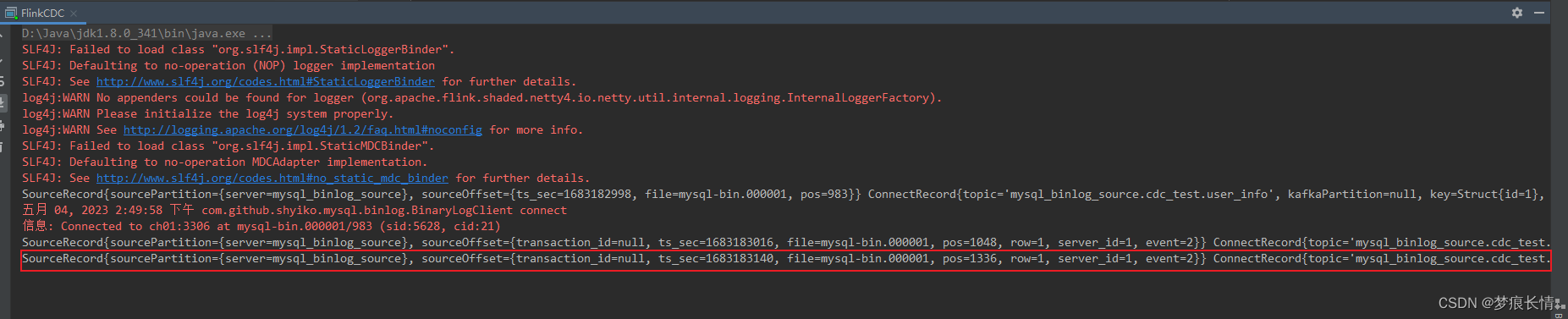

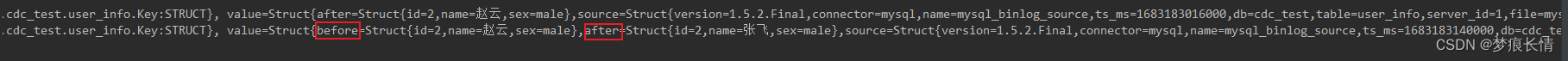
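Look at the `topic` field of the record. Debezium names it `<server name>.<database>.<table>`, here `mysql_binlog_source.cdc_test.user_info`. A custom deserializer usually recovers the database and table names by splitting this string. The sketch below assumes none of the three parts contains a dot. `TopicParts` is a hypothetical helper.

```java
// Hypothetical helper: split a Debezium-style topic "<server>.<db>.<table>".
final class TopicParts {
    final String server;
    final String database;
    final String table;

    private TopicParts(String server, String database, String table) {
        this.server = server;
        this.database = database;
        this.table = table;
    }

    static TopicParts parse(String topic) {
        // String.split takes a regular expression, so the dot must be escaped.
        String[] parts = topic.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected <server>.<db>.<table>, got: " + topic);
        }
        return new TopicParts(parts[0], parts[1], parts[2]);
    }
}
```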

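Now let's insert another row:

Next, update a row: change 赵云 (Zhao Yun) to 张飞 (Zhang Fei).

The op field changes to u, i.e. op=u.

The record now carries both a before and an after field, holding the row data before and after the update.

Now delete a row:

This time only before is present, and op=d.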
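A custom deserializer typically flattens each record into a compact JSON string. (The comment in the 1.5.2 test class mentions replacing `StringDebeziumDeserializationSchema` with one.) The sketch below covers only that formatting step, in plain Java. It assumes the `before`/`after` structs have already been converted to maps of strings. The output field names (`database`, `table`, `type`, `before`, `after`) and the op-to-type mapping are our own choices, not a format defined by the connector. In a real job this logic would sit inside an implementation of the connector's `DebeziumDeserializationSchema` interface.

```java
import java.util.Map;

// Hypothetical formatting step of a custom CDC deserializer: turn one change
// (op code, database, table, before/after images) into a flat JSON string.
final class ChangeJson {

    // Map Debezium op codes to readable type names (the names are our own choice).
    static String typeOf(String op) {
        switch (op) {
            case "c": return "insert";
            case "r": return "read";   // row emitted during the initial snapshot
            case "u": return "update";
            case "d": return "delete";
            default: throw new IllegalArgumentException("unknown op: " + op);
        }
    }

    static String format(String op, String db, String table,
                         Map<String, String> before, Map<String, String> after) {
        StringBuilder sb = new StringBuilder("{");
        sb.append("\"database\":").append(quote(db)).append(',');
        sb.append("\"table\":").append(quote(table)).append(',');
        sb.append("\"type\":").append(quote(typeOf(op))).append(',');
        sb.append("\"before\":").append(object(before)).append(',');
        sb.append("\"after\":").append(object(after));
        return sb.append('}').toString();
    }

    // A missing image (e.g. "after" for a delete) is rendered as an empty object.
    private static String object(Map<String, String> row) {
        StringBuilder sb = new StringBuilder("{");
        if (row != null) {
            boolean first = true;
            for (Map.Entry<String, String> e : row.entrySet()) {
                if (!first) {
                    sb.append(',');
                }
                first = false;
                sb.append(quote(e.getKey())).append(':').append(quote(e.getValue()));
            }
        }
        return sb.append('}').toString();
    }

    // Minimal JSON string escaping: backslashes and double quotes only.
    private static String quote(String s) {
        if (s == null) {
            return "null";
        }
        return '"' + s.replace("\\", "\\\\").replace("\"", "\\\"") + '"';
    }
}
```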

1.6 Enabling checkpointing (CK)
