VPP supports two QoS implementations: one built on the policer, and a hierarchical QoS (HQoS) built on the DPDK QoS framework.


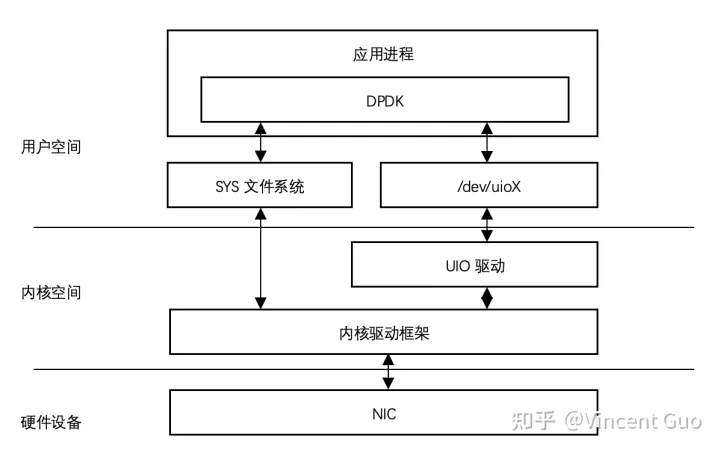

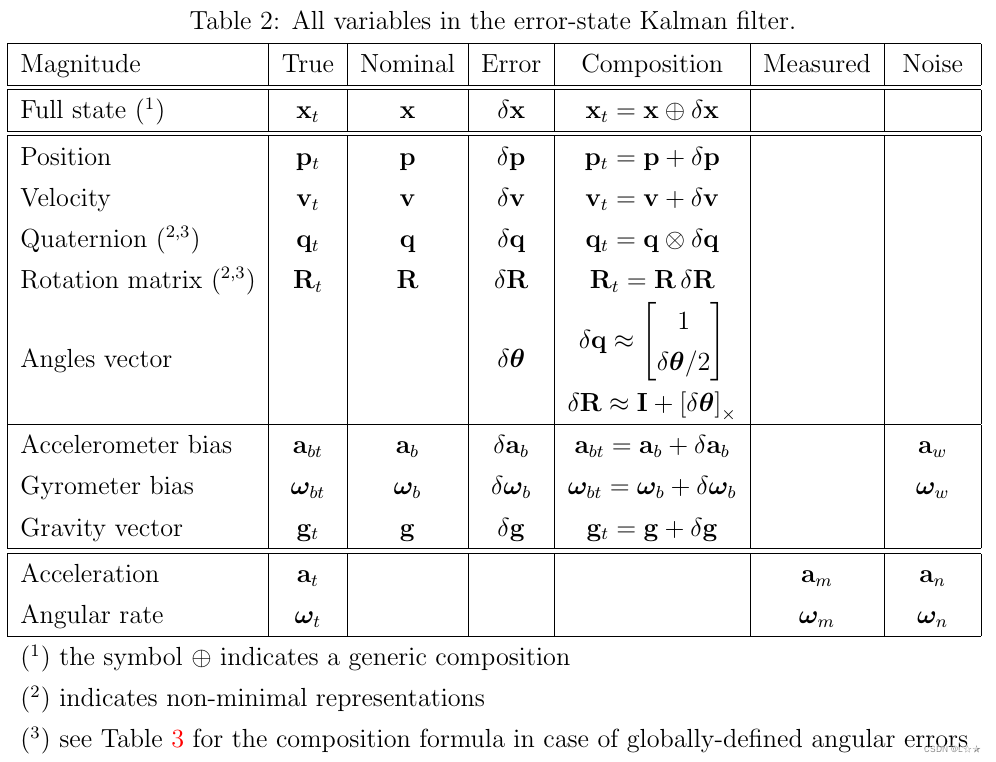

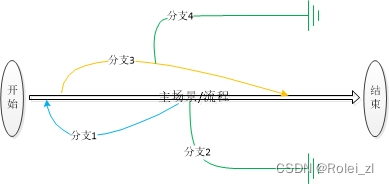

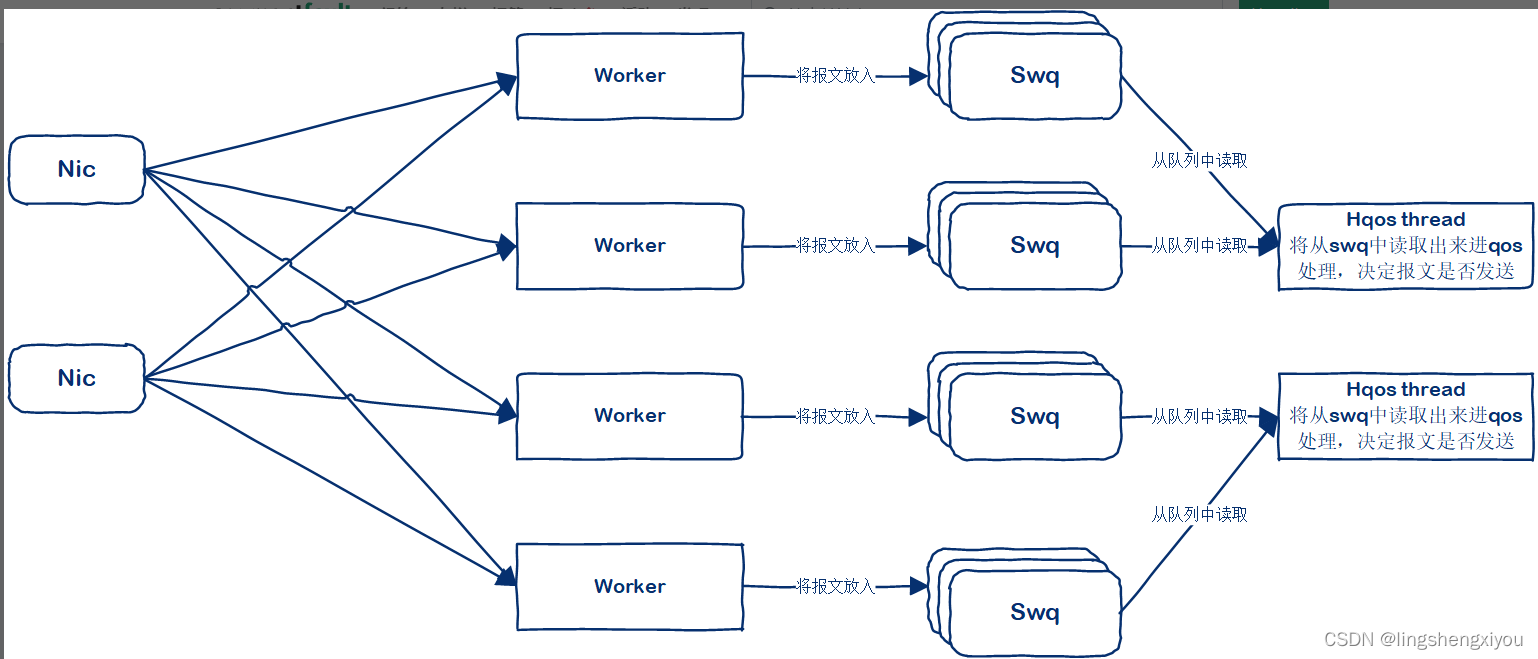

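Basic flow

As the figure above shows, a worker thread reads packets from the NIC and processes them. When it calls the DPDK device transmit function and HQoS is enabled on that device, it sets the HQoS-related fields on the packet and enqueues it to a software queue (SWQ); SWQs and worker threads are in a 1:1 relationship. That ends the worker thread's part. The HQoS threads (how many is set by configuration) poll the SWQs, read the packets, and run QoS processing on them using the DPDK QoS framework.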
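The worker-to-HQoS handoff can be pictured with a small single-producer, single-consumer ring. This is only a sketch under stated assumptions: VPP uses DPDK's rte_ring for the SWQ, whereas `toy_ring`, `toy_enqueue`, and `toy_dequeue_burst` below are hypothetical names made up for illustration, and the code has no memory barriers, so it is not safe across real threads.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_RING_SIZE 8u /* must be a power of two */

/* Hypothetical stand-in for one worker-to-HQoS software queue (SWQ). */
typedef struct {
    void *slots[TOY_RING_SIZE];
    uint32_t head; /* next slot the worker writes */
    uint32_t tail; /* next slot the HQoS thread reads */
} toy_ring;

/* Worker side: returns 1 on success, 0 if the ring is full. */
static int toy_enqueue(toy_ring *r, void *pkt)
{
    if (r->head - r->tail == TOY_RING_SIZE)
        return 0;
    r->slots[r->head & (TOY_RING_SIZE - 1u)] = pkt;
    r->head++;
    return 1;
}

/* HQoS side: pulls up to max packets, returns how many were read. */
static uint32_t toy_dequeue_burst(toy_ring *r, void **out, uint32_t max)
{
    uint32_t n = 0;
    assert(out != NULL);
    while (n < max && r->tail != r->head) {
        out[n++] = r->slots[r->tail & (TOY_RING_SIZE - 1u)];
        r->tail++;
    }
    return n;
}
```

The worker only ever touches `head` and the HQoS thread only ever touches `tail`, which is what lets one ring per worker avoid locking in the real design.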

HQoS configuration walkthrough

dpdk configuration

dpdk {

socket-mem 16384,16384

dev 0000:02:00.0 {

num-rx-queues 2

hqos # enable HQoS on this NIC

}

dev 0000:06:00.0 {

num-rx-queues 2

hqos # enable HQoS on this NIC

}

num-mbufs 1000000

}

cpu configuration

cpu {

main-core 0

corelist-workers 1, 2, 3, 4

corelist-hqos-threads 5, 6 # start two HQoS threads, on CPUs 5 and 6

}

These two steps are enough to turn HQoS on; the default HQoS parameters are then used.

DPDK QoS parameter configuration

- port configuration

port {

rate 1250000000 /* Assuming 10GbE port */

frame_overhead 24 /* Overhead fields per Ethernet frame:

* 7B (Preamble) +

* 1B (Start of Frame Delimiter (SFD)) +

* 4B (Frame Check Sequence (FCS)) +

* 12B (Inter Frame Gap (IFG))

*/

mtu 1522 /* Assuming Ethernet/IPv4 pkt (FCS not included) */

n_subports_per_port 1 /* Number of subports per output interface */

n_pipes_per_subport 4096 /* Number of pipes (users/subscribers) */

queue_sizes 64 64 64 64 /* Packet queue size for each traffic class.

* All queues within the same pipe traffic class

* have the same size. Queues from different

* pipes serving the same traffic class have

* the same size. */

}
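The two numeric values in the port block follow from simple arithmetic, sketched here. The enum and function names are illustrative, not taken from VPP or DPDK.

```c
#include <assert.h>
#include <stdint.h>

/* Per-frame overhead fields listed in the port block, in bytes. */
enum {
    PREAMBLE_BYTES = 7,
    SFD_BYTES = 1,
    FCS_BYTES = 4,
    IFG_BYTES = 12
};

/* Sum of the four overhead fields: the value given as frame_overhead. */
static uint32_t frame_overhead_bytes(void)
{
    return PREAMBLE_BYTES + SFD_BYTES + FCS_BYTES + IFG_BYTES;
}

/* Convert a link speed in bits per second to the bytes-per-second
 * unit used by the "rate" parameter. */
static uint64_t link_rate_bytes_per_sec(uint64_t bits_per_sec)
{
    return bits_per_sec / 8u;
}
```

For a 10GbE port, `link_rate_bytes_per_sec(10000000000ULL)` gives the 1250000000 used above, and `frame_overhead_bytes()` gives 24.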

- subport configuration

subport 0 {

tb_rate 1250000000 /* Subport level token bucket rate (bytes per second) */

tb_size 1000000 /* Subport level token bucket size (bytes) */

tc0_rate 1250000000 /* Subport level token bucket rate for traffic class 0 (bytes per second) */

tc1_rate 1250000000 /* Subport level token bucket rate for traffic class 1 (bytes per second) */

tc2_rate 1250000000 /* Subport level token bucket rate for traffic class 2 (bytes per second) */

tc3_rate 1250000000 /* Subport level token bucket rate for traffic class 3 (bytes per second) */

tc_period 10 /* Time interval for refilling the token bucket associated with traffic class (Milliseconds) */

pipe 0 4095 profile 0 /* Pipes 0 through 4095 (users/subscribers) use pipe profile 0 */

}

- pipe configuration

pipe_profile 0 {

tb_rate 305175 /* Pipe level token bucket rate (bytes per second) */

tb_size 1000000 /* Pipe level token bucket size (bytes) */

tc0_rate 305175 /* Pipe level token bucket rate for traffic class 0 (bytes per second) */

tc1_rate 305175 /* Pipe level token bucket rate for traffic class 1 (bytes per second) */

tc2_rate 305175 /* Pipe level token bucket rate for traffic class 2 (bytes per second) */

tc3_rate 305175 /* Pipe level token bucket rate for traffic class 3 (bytes per second) */

tc_period 40 /* Time interval for refilling the token bucket associated with traffic class at pipe level (Milliseconds) */

tc3_oversubscription_weight 1 /* Weight traffic class 3 oversubscription */

tc0_wrr_weights 1 1 1 1 /* Pipe queues WRR weights for traffic class 0 */

tc1_wrr_weights 1 1 1 1 /* Pipe queues WRR weights for traffic class 1 */

tc2_wrr_weights 1 1 1 1 /* Pipe queues WRR weights for traffic class 2 */

tc3_wrr_weights 1 1 1 1 /* Pipe queues WRR weights for traffic class 3 */

}
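The pipe rate of 305175 bytes per second looks like the port rate split evenly across the 4096 pipes. The sketch below checks that arithmetic and also shows how many bytes a traffic class could send per tc_period at a given rate. The function names are hypothetical, and DPDK's internal credit bookkeeping may differ in detail from this plain calculation.

```c
#include <assert.h>
#include <stdint.h>

/* Split a parent rate evenly among n_children (integer division). */
static uint64_t equal_share_rate(uint64_t parent_rate, uint32_t n_children)
{
    assert(n_children > 0);
    return parent_rate / n_children;
}

/* Bytes allowed in one refill period at the given rate. */
static uint64_t bytes_per_period(uint64_t rate_bytes_per_sec, uint32_t period_ms)
{
    return rate_bytes_per_sec * period_ms / 1000u;
}
```

With the values above, `equal_share_rate(1250000000, 4096)` is 305175, and a pipe traffic class at that rate with tc_period 40 gets `bytes_per_period(305175, 40)` = 12207 bytes per period.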

- RED congestion control

red {

tc0_wred_min 48 40 32 /* Minimum threshold for traffic class 0 queue (min_th) in number of packets */

tc0_wred_max 64 64 64 /* Maximum threshold for traffic class 0 queue (max_th) in number of packets */

tc0_wred_inv_prob 10 10 10 /* Inverse of packet marking probability for traffic class 0 queue (maxp = 1 / maxp_inv) */

tc0_wred_weight 9 9 9 /* Traffic Class 0 queue weight */

tc1_wred_min 48 40 32 /* Minimum threshold for traffic class 1 queue (min_th) in number of packets */

tc1_wred_max 64 64 64 /* Maximum threshold for traffic class 1 queue (max_th) in number of packets */

tc1_wred_inv_prob 10 10 10 /* Inverse of packet marking probability for traffic class 1 queue (maxp = 1 / maxp_inv) */

tc1_wred_weight 9 9 9 /* Traffic Class 1 queue weight */

tc2_wred_min 48 40 32 /* Minimum threshold for traffic class 2 queue (min_th) in number of packets */

tc2_wred_max 64 64 64 /* Maximum threshold for traffic class 2 queue (max_th) in number of packets */

tc2_wred_inv_prob 10 10 10 /* Inverse of packet marking probability for traffic class 2 queue (maxp = 1 / maxp_inv) */

tc2_wred_weight 9 9 9 /* Traffic Class 2 queue weight */

tc3_wred_min 48 40 32 /* Minimum threshold for traffic class 3 queue (min_th) in number of packets */

tc3_wred_max 64 64 64 /* Maximum threshold for traffic class 3 queue (max_th) in number of packets */

tc3_wred_inv_prob 10 10 10 /* Inverse of packet marking probability for traffic class 3 queue (maxp = 1 / maxp_inv) */

tc3_wred_weight 9 9 9 /* Traffic Class 3 queue weight */

}
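To make the four RED parameters concrete, here is a floating-point sketch of the textbook RED calculation. It is not DPDK's implementation, which as far as I know works in fixed point and treats idle queues specially; `toy_red_params` and both functions are hypothetical. It assumes the weight value is the negated base-2 logarithm of the averaging weight (so 9 means 1/512), and that the three values on each line apply to three packet colors, with the first column used in the example.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical container for one column of the red block. */
typedef struct {
    double min_th;    /* tcN_wred_min, in packets */
    double max_th;    /* tcN_wred_max, in packets */
    double inv_prob;  /* tcN_wred_inv_prob: maxp = 1 / inv_prob */
    unsigned wq_log2; /* tcN_wred_weight: averaging weight = 2^-wq_log2 */
} toy_red_params;

/* Exponentially weighted moving average of the queue length. */
static double toy_red_avg(const toy_red_params *p, double avg, double qlen)
{
    double w = 1.0 / (double)(1u << p->wq_log2);
    return avg + w * (qlen - avg);
}

/* Drop probability for a given average queue length. */
static double toy_red_drop_prob(const toy_red_params *p, double avg)
{
    assert(p->max_th > p->min_th);
    if (avg < p->min_th)
        return 0.0; /* below min_th: never drop */
    if (avg >= p->max_th)
        return 1.0; /* at or above max_th: always drop */
    /* linear ramp from 0 up to maxp between the two thresholds */
    return (avg - p->min_th) / (p->max_th - p->min_th) / p->inv_prob;
}
```

With min 48, max 64, and inv_prob 10, an average queue length of 56 sits halfway up the ramp, so the drop probability is half of maxp, about 0.05.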

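Some of the port, subport, pipe, tc, and queue parameters can also be set from the command line or through the API.

Configuring QoS from the CLI

- Configure subport parameters with the following command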

set dpdk interface hqos subport <if-name> subport <n> [rate <n>] [bktsize <n>] [tc0 <n>] [tc1 <n>] [tc2 <n>] [tc3 <n>] [period <n>]

- Configure pipe parameters

set dpdk interface hqos pipe <if-name> subport <n> pipe <n> profile <n>

- Assign an interface to an HQoS thread

set dpdk interface hqos placement <if-name> thread <n>

- Select the packet field used for classification; id is the hqos_field number

set dpdk interface hqos pktfield <if-name> id <n> offset <n> mask <n>

- Set the tc/tcq mapping table, which maps a DSCP value to a specific tc and tcq

set dpdk interface hqos tctbl <if-name> entry <n> tc <n> queue <n>

- Show the HQoS configuration

vpp# show dpdk interface hqos TenGigabitEthernet2/0/0

Thread:

Input SWQ size = 4096 packets

Enqueue burst size = 256 packets

Dequeue burst size = 220 packets

Packet field 0: slab position = 0, slab bitmask = 0x0000000000000000

Packet field 1: slab position = 40, slab bitmask = 0x0000000fff000000

Packet field 2: slab position = 8, slab bitmask = 0x00000000000000fc

Packet field 2 translation table:

[ 0 .. 15]: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

[16 .. 31]: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

[32 .. 47]: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

[48 .. 63]: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

Port:

Rate = 1250000000 bytes/second

MTU = 1514 bytes

Frame overhead = 24 bytes

Number of subports = 1

Number of pipes per subport = 4096

Packet queue size: TC0 = 64, TC1 = 64, TC2 = 64, TC3 = 64 packets

Number of pipe profiles = 1

Pipe profile 0:

Rate = 305175 bytes/second

Token bucket size = 1000000 bytes

Traffic class rate: TC0 = 305175, TC1 = 305175, TC2 = 305175, TC3 = 305175 bytes/second

TC period = 40 milliseconds

TC0 WRR weights: Q0 = 1, Q1 = 1, Q2 = 1, Q3 = 1

TC1 WRR weights: Q0 = 1, Q1 = 1, Q2 = 1, Q3 = 1

TC2 WRR weights: Q0 = 1, Q1 = 1, Q2 = 1, Q3 = 1

TC3 WRR weights: Q0 = 1, Q1 = 1, Q2 = 1, Q3 = 1

- Show which HQoS thread each device belongs to

vpp# show dpdk interface hqos placement

Thread 5 (vpp_hqos-threads_0 at lcore 5):

TenGigabitEthernet2/0/0 queue 0

Thread 6 (vpp_hqos-threads_1 at lcore 6):

TenGigabitEthernet4/0/1 queue 0
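The tc/tcq mapping table set by the tctbl command has 64 entries, one per DSCP value, and the configuration dump shows each entry holding a value from 0 to 15. A plausible reading, treated here as an assumption rather than confirmed from the VPP source, is that each entry packs tc * 4 + queue. The sketch below builds the default table seen in the dump and unpacks an entry; all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_DSCP_ENTRIES 64u
#define TOY_QUEUES_PER_TC 4u /* assumption: 4 queues per traffic class */

/* Pack a (tc, queue) pair into one table entry (assumed encoding). */
static uint32_t toy_tctbl_pack(uint32_t tc, uint32_t queue)
{
    assert(tc < 4u && queue < TOY_QUEUES_PER_TC);
    return tc * TOY_QUEUES_PER_TC + queue;
}

/* Unpack one table entry back into tc and queue. */
static void toy_tctbl_unpack(uint32_t entry, uint32_t *tc, uint32_t *queue)
{
    *tc = entry / TOY_QUEUES_PER_TC;
    *queue = entry % TOY_QUEUES_PER_TC;
}

/* Fill the table with the repeating 0..15 pattern shown in the dump. */
static void toy_tctbl_default(uint32_t table[TOY_DSCP_ENTRIES])
{
    for (uint32_t dscp = 0; dscp < TOY_DSCP_ENTRIES; dscp++)
        table[dscp] = dscp % 16u;
}
```

Under this reading, DSCP 30 maps to entry value 14 in the default table, which unpacks to tc 3, queue 2.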

DPDK QoS framework

For this part, see:

http://doc.dpdk.org/guides/pr...

HQoS source code analysis

Data structures

dpdk_device_t

HQoS is configured per DPDK device, so the DPDK device control block carries HQoS-related members.

typedef struct

{

......

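/* HQoS related: worker threads and HQoS threads are mapped to each other, because the two kinds of thread differ in number */

dpdk_device_hqos_per_worker_thread_t *hqos_wt; // per-worker-thread data; a worker writes packets to hqos_wt.swq, which points into hqos_ht.swq

dpdk_device_hqos_per_hqos_thread_t *hqos_ht; // per-HQoS-thread data; the HQoS thread reads packets from hqos_ht.swq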

......

} dpdk_device_t;

dpdk_device_hqos_per_worker_thread_t

typedef struct

{

/* Required for vec_validate_aligned */

CLIB_CACHE_LINE_ALIGN_MARK (cacheline0);

struct rte_ring *swq; // the ring this worker thread writes this device's packets to

// These fields classify the packet, i.e. select the DPDK QoS subport, pipe, tc, and queue

u64 hqos_field0_slabmask;

u32 hqos_field0_slabpos;

u32 hqos_field0_slabshr;

u64 hqos_field1_slabmask;

u32 hqos_field1_slabpos;

u32 hqos_field1_slabshr;

u64 hqos_field2_slabmask;

u32 hqos_field2_slabpos;

u32 hqos_field2_slabshr;

u32 hqos_tc_table[64];

} dpdk_device_hqos_per_worker_thread_t;

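The structure above holds the per-worker-thread private data for HQoS. Each DPDK device keeps an array of these, one element per worker thread. Its members are mainly used to classify packets. As the fields show, classification is limited to three mask-and-shift fields plus a 64-entry table, which is too simple for fine-grained classification needs; combining it with a classifier would be a natural improvement.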
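The slabpos, slabmask, and slabshr triplets suggest a simple extraction: load 8 bytes at the slab position, mask them, and shift the result down. The sketch below implements that reading with hypothetical helper names. It assumes the slab is interpreted in network (big-endian) byte order and that the shift equals the number of trailing zero bits in the mask; neither detail is stated in the text above.

```c
#include <assert.h>
#include <stdint.h>

/* Load 8 bytes starting at pkt[pos] as a big-endian 64-bit value. */
static uint64_t toy_load_be64(const uint8_t *pkt, uint32_t pos)
{
    uint64_t v = 0;
    for (uint32_t i = 0; i < 8; i++)
        v = (v << 8) | pkt[pos + i];
    return v;
}

/* Shift count: number of trailing zero bits in the mask (0 for an empty mask). */
static uint32_t toy_mask_shift(uint64_t mask)
{
    uint32_t shr = 0;
    if (mask == 0)
        return 0;
    while ((mask & 1u) == 0) {
        mask >>= 1;
        shr++;
    }
    return shr;
}

/* Extract one classification field from the packet bytes. */
static uint64_t toy_extract_field(const uint8_t *pkt, uint32_t pos, uint64_t mask)
{
    assert(pkt != 0);
    return (toy_load_be64(pkt, pos) & mask) >> toy_mask_shift(mask);
}
```

With slab position 8 and mask 0xfc, the field is the top six bits of byte 15 of the frame, which for an untagged Ethernet/IPv4 packet is the DSCP part of the ToS byte; that would explain why this field indexes the 64-entry hqos_tc_table.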

dpdk_device_hqos_per_hqos_thread_t

typedef struct

{

/* Required for vec_validate_aligned */

CLIB_CACHE_LINE_ALIGN_MARK (cacheline0);

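struct rte_ring **swq; // array of relay rings between the worker threads and the HQoS thread; its size equals the number of worker threads

struct rte_mbuf **pkts_enq; // enqueue buffer: packets pulled from the swq are staged here, then pushed into the QoS scheduler

struct rte_mbuf **pkts_deq; // dequeue buffer: packets pulled from the QoS scheduler, then sent out of the NIC

struct rte_sched_port *hqos; // the device's DPDK QoS scheduler port and its configuration

u32 hqos_burst_enq; // maximum number of packets per enqueue burst

u32 hqos_burst_deq; // maximum number of packets per dequeue burst

u32 pkts_enq_len; // number of packets currently staged in pkts_enq

u32 swq_pos; // position of the software queue the HQoS iterator is currently servicing

u32 flush_count; // number of polls that left fewer packets than a full burst; once it reaches a threshold the partial batch is written immediately instead of waiting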

} dpdk_device_hqos_per_hqos_thread_t;

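The structure above holds the per-HQoS-thread private data. A DPDK device can belong to only one HQoS thread. The structure mainly maintains the bridging queues to the worker threads and the HQoS parameters of the port.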
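The roles of hqos_burst_enq, pkts_enq_len, and flush_count can be illustrated with a small batching sketch. It is a simplification with hypothetical names (`toy_batcher`, `toy_poll`), not the VPP code: the real thread walks several SWQs and hands the batch to the DPDK scheduler, while this version only tracks the counters.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical counters mirroring the fields described above. */
typedef struct {
    uint32_t burst_enq;       /* flush as soon as this many packets are staged */
    uint32_t flush_threshold; /* flush a partial batch after this many short polls */
    uint32_t pkts_enq_len;    /* packets currently staged */
    uint32_t flush_count;     /* consecutive polls that left a partial batch */
} toy_batcher;

/* One poll iteration in which n_new packets arrived.
 * Returns the number of packets flushed to the scheduler (0 if none). */
static uint32_t toy_poll(toy_batcher *b, uint32_t n_new)
{
    uint32_t flushed = 0;

    assert(b->burst_enq > 0);
    b->pkts_enq_len += n_new;

    if (b->pkts_enq_len >= b->burst_enq) {
        /* full burst: write it out right away */
        flushed = b->pkts_enq_len;
        b->pkts_enq_len = 0;
        b->flush_count = 0;
    } else if (b->pkts_enq_len > 0) {
        /* partial batch: wait, but only for a bounded number of polls */
        b->flush_count++;
        if (b->flush_count == b->flush_threshold) {
            flushed = b->pkts_enq_len;
            b->pkts_enq_len = 0;
            b->flush_count = 0;
        }
    }
    return flushed;
}
```

The bounded wait trades a little batching efficiency for latency: a few stray packets are never held back indefinitely just because the burst size was not reached.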

dpdk_main_t

typedef struct

{

......

// Per-HQoS-CPU device index: maps an HQoS thread index to the vector of DPDK devices it manages, linking HQoS threads to devices in a one-to-many relationship

dpdk_device_and_queue_t **devices_by_hqos_cpu;

......

} dpdk_main_t;

HQoS thread type registration

/* *INDENT-OFF* register the hqos thread type */

VLIB_REGISTER_THREAD (hqos_thread_reg, static) =

{

.name = "hqos-threads",

.short_name = "hqos-threads",

.function = dpdk_hqos_thread_fn, // thread entry function

};

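Recall the corelist-hqos-threads 5, 6 setting shown earlier. When VPP parses it, it searches the list of registered thread types for hqos-threads; once found, it starts the configured number of threads of that type, each with dpdk_hqos_thread_fn as its main function.

Function implementation

dpdk_hqos_thread_fn

// HQoS thread entry function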

void

dpdk_hqos_thread_fn (void *arg)

{

vlib_worker_thread_t *w = (vlib_worker_thread_t *) arg;

vlib_worker_thread_init (w); // initialize the HQoS thread

dpdk_hqos_thread (w);

}

void

dpdk_hqos_thread (vlib_worker_thread_t * w)

{

vlib_main_t *vm;

vlib_thread_main_t *tm = vlib_get_thread_main ();

dpdk_main_t *dm = &dpdk_main;

vm = vlib_get_main ();

ASSERT (vm->thread_index == vlib_get_thread_index ());

clib_time_init (&vm->clib_time);

clib_mem_set_heap (w->thread_mheap);

/* Wait until the dpdk init sequence is complete */

while (tm->worker_thread_release == 0)

vlib_worker_thread_barrier_check ();

// Look up this thread's devices by CPU (thread) index; bail out if none are assigned

if (vec_len (dm->devices_by_hqos_cpu[vm->thread_index]) == 0)

return

clib_error

("current I/O TX thread does not have any devices assigned to it");

if (DPDK_HQOS_DBG_BYPASS)

dpdk_hqos_thread_internal_hqos_dbg_bypass (vm); // debug function, used only for debugging

else

dpdk_hqos_thread_internal (vm); // core processing function

}

dpdk_hqos_thread_internal

static_always_inline void

dpdk_hqos_thread_internal (vlib_main_t * vm)

{

dpdk_main_t *dm = &dpdk_main;

u32 thread_index = vm->thread_index;

u32 dev_pos;

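dev_pos = 0; // index of the first device

while (1) // loop over the devices, one per iteration

{

vlib_worker_thread_barrier_check (); // check whether the main thread has requested a sync

// Get this CPU's device vector by CPU id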

u32 n_devs = vec_len (dm->devices_by_hqos_cpu[thread_index]);

if (PREDICT_FALSE (n_devs == 0))

{

dev_pos = 0;

continue;

}

// One full pass over the devices is done; start a new pass.

if (dev_pos >= n_devs)

dev_pos = 0;

// Get the context of the device to visit in this iteration

dpdk_device_and_queue_t *dq =

vec_elt_at_index (dm->devices_by_hqos_cpu[thread_index], dev_pos);

// Get the DPDK device control block

dpdk_device_t *xd = vec_elt_at_index (dm->devices, dq->device);

Original article: https://segmentfault.com/a/1190000019644679