原理分析

通常用常规图像算法做检测类的算法需要将图像特征增强,其中就需要滤波,把噪点去掉,如果直接用滤波,像高斯滤波,中值滤波,均值滤波等等,不仅会把噪点过滤掉,也会把图像的一些细节,包括边缘给模糊掉,从而造成图像信息丢失。有时候为了保留边缘及一些细节信息,就会用到保边滤波算法。之前用过的保边滤波包括双边滤波,表面模糊等算法。这两个算法都比较慢,对于检测类的算法来说,性价比不高。

传统滤波算法以滤波器的核中心与要处理的像素对齐进行计算,不考虑要处理的像素是否是在边缘区域,导致滤波窗口在跨越边缘时,引入对来自于边缘两侧像素值的加权计算。一旦经过这样的计算,将会导致边缘信息在沿边缘法线的方向出现扩散,导致边缘信息减弱或者丢失。比如对于均值滤波来说:

中心像素处在边缘处经过均值滤波后,像素值会被拉高

为了避免传统滤波算法对边缘的弱化,现在考虑将待处理的像素不一定非要与滤波器核心对齐。

下面拿均值滤波来说明。对于平坦区域,均值滤波不管滤波器用什么形状的滤波核,其计算结果都跟原像素相差不大。对于处在边缘的像素,若想尽可能的保留边缘,我们需要尽可能的保留跟当前要计算的像素相似的区块中的像素进行计算,而跟当前要计算的像素相差很大的区块中的像素要尽可能抛掉。这个思路跟表面模糊算法很像。这里稍微介绍下表面模糊算法:

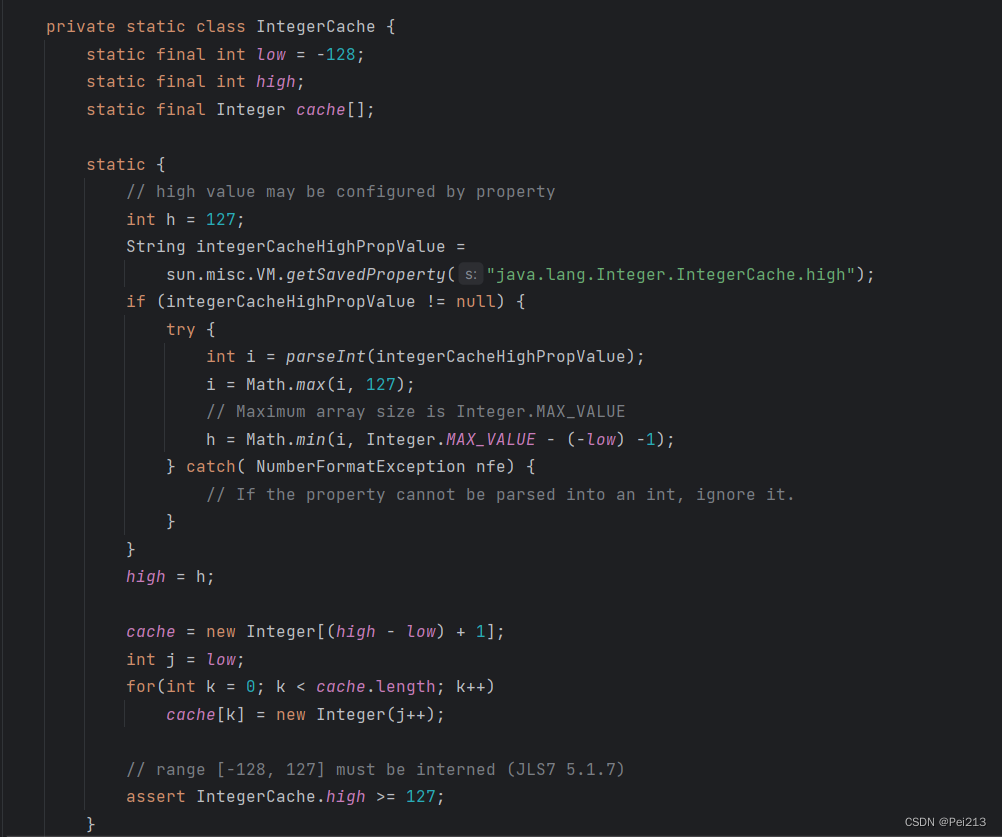

从上面公式可以看出,表面模糊算法的主要思想还是计算当前像素X的邻域范围内不同像素的加权求和,如果跟中心像素差的越多,则权值越小,跟中心像素相差越小,则权值越大,以此来保留边缘信息。上面公式中如果Y值取得非常大,那么表面模糊算法跟均值滤波算法就是一样的。

如下图是典型的理想边缘模型,分别是阶跃边缘、斜坡边缘和屋顶型边缘。我们做均值滤波,计算下图中红点所在像素的新像素值时,肯定希望尽可能用到跟红点在同一个面上的区块像素,而抛掉跟红点不在同一个平面的区块像素,这样就可以尽可能的保留红点所在的边缘,而不是弱化红点所在的边缘。

为了达到上述目的,我们可以预设一些滤波器,这些滤波器内部就有边缘,并且边缘类型就是上图中阶跃边缘类型,它被一切为2块。这样在对图像的边缘块的像素进行计算时,就可以让这样滤波器模型跟实际要计算的像素块所处的边缘模型进行匹配,如果能够匹配上,那么红点处计算的像素值将不会有太大变化,如果匹配是相反的,那么红点处计算的新值将和原来的值差很多。

滤波器中边缘模型有很多种,为了便于计算,只取其中8种。其它形状的滤波器由于有锯齿形状,不好遍历计算(尤其是在滤波核半径如果比较大的时候,那时如果有锯齿形状就只能一行行遍历,不能for循环)因此没有被采用。

上图8类滤波器中每个滤波器被明显分成两个块,即是有边缘的,并且形状不一,最重要的是其中的值分布是呈正方形或者长方形,非常便于遍历计算。我们只需要用上述面8种滤波器分别跟待计算的像素做匹配,然后计算对应的新的像素值,当该新的像素值跟原像素值相差的最小时,说明对应的滤波器的边缘形状,跟待计算的像素所在区块的边缘形状最接近,这时采取的均值滤波对于边缘处的像素弱化最小。

上面是我根据自己的理解对侧窗滤波算法的介绍。原论文里面有公式推导,也有分析滤波器侧窗的形状的公式,比较严谨,但是感觉不太直观。我按照自己的思考提供一种理解方式吧,如果有疑问,或者认为我的理解有误的,欢迎指正哈,谢谢!

#include <fstream>

#include <opencv2/opencv.hpp>

#include <string>

using namespace std;

using namespace cv;

#include <math.h>

#include <cstdio>

#include <cstdlib>

enum class SIDE_DIRECTIONS : int {

L = 0,

R = 1,

U = 2,

D = 3,

NW = 4,

NE = 5,

SW = 6,

SE = 7,

SIDE_DIRECTIONS_COUNT = 8

};

const int side_directions_count = 8;

enum class XY_START_END : int {

WIDTH_START = 0,

WIDTH_END = 1,

HEIGHT_START = 2,

HEIGHT_END = 3,

XY_START_END_COUNT = 4

};

//表面模糊处理

void SurfaceBlur(float *data, int height, int width, int r, int threshold,

float *result) {

for (int i = 0; i < height; ++i) {

for (int j = 0; j < width; ++j) {

float sumx = 0.f;

float sumy = 0.f;

for (int m = i - r; m < i + r; ++m) {

for (int n = j - r; n < j + r; ++n) {

sumx = sumx + (1 - fabs(data[i * width + j] - data[m * width + n]) /

(2.5 * threshold)) *

data[m * width + n];

sumy = sumy + (1 - fabs(data[i * width + j] - data[m * width + n]) /

(2.5 * threshold));

}

}

result[i * width + j] = sumx / sumy;

}

}

}

std::vector<std::vector<int> > m_side_window_params;

std::vector<std::vector<float> > m_side_window_filter_results;

void init(int height, int width, int radius) {

int panel_size = height * width;

m_side_window_params.resize(side_directions_count);

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::L)] = {

-radius, 0, -radius, radius};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::R)] = {

0, radius, -radius, radius};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::U)] = {-radius, radius,

-radius, 0};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::D)] = {-radius, radius,

0, radius};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::NW)] = {-radius, 0,

-radius, 0};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::NE)] = {0, radius,

-radius, 0};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::SW)] = {-radius, 0, 0,

radius};

m_side_window_params[static_cast<int>(SIDE_DIRECTIONS::SE)] = {0, radius, 0,

radius};

m_side_window_filter_results.resize(side_directions_count);

for (int i = 0; i < side_directions_count; ++i) {

m_side_window_filter_results[i].resize(panel_size);

}

}

void sideWindowFilterImpl(const float *input, const int radius,

const int height, const int width,

const SIDE_DIRECTIONS direction, float *output) {

const int w_start =

m_side_window_params[static_cast<int>(direction)]

[static_cast<int>(XY_START_END::WIDTH_START)];

const int w_end =

m_side_window_params[static_cast<int>(direction)]

[static_cast<int>(XY_START_END::WIDTH_END)];

const int h_start =

m_side_window_params[static_cast<int>(direction)]

[static_cast<int>(XY_START_END::HEIGHT_START)];

const int h_end =

m_side_window_params[static_cast<int>(direction)]

[static_cast<int>(XY_START_END::HEIGHT_END)];

const int w_first_loop_end = std::max(0, -w_start);

const int w_second_loop_start = w_first_loop_end;

const int w_second_loop_end = width - 1 - w_end;

const int w_third_loop_start = width - w_end;

int nn = 0;

int remain_start = (nn << 2) + w_second_loop_start;

int out_idx = 0;

int threshold = 10000;

for (int h = 0; h < height; ++h) {

const int h_lower_bound = std::max(0, h + h_start);

const int h_upper_bound = std::min(height - 1, h + h_end);

const int h_interval = h_upper_bound - h_lower_bound + 1;

//第一个for循环是处理图像边缘

for (int w = 0; w < w_first_loop_end; ++w) {

const int w_left_bound = std::max(0, w + w_start);

const int w_right_bound = std::min(width - 1, w + w_end);

const int arr_len = h_interval * (w_right_bound - w_left_bound + 1);

int idx = 0;

float sumx = 0.f;

float sumy = 0.f;

float center_pixel = input[h * width + w];

for (int i = h_lower_bound; i <= h_upper_bound; ++i) {

const int h_idx = i * width;

for (int j = w_left_bound; j <= w_right_bound; ++j) {

sumx = sumx + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold)) *

input[h_idx + j];

sumy = sumy + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold));

}

}

output[out_idx++] = sumx / sumy;

}

//这里是处理图像中间的任意像素

for (int w = remain_start; w <= w_second_loop_end; ++w) {

const int w_left_bound = std::max(0, w + w_start);

const int w_right_bound = std::min(width - 1, w + w_end);

int idx = 0;

float sumx = 0.f;

float sumy = 0.f;

float center_pixel = input[h * width + w];

for (int i = h_lower_bound; i <= h_upper_bound; ++i) {

const int h_idx = i * width;

for (int j = w_left_bound; j <= w_right_bound; ++j) {

sumx = sumx + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold)) *

input[h_idx + j];

sumy = sumy + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold));

}

}

output[out_idx++] = sumx / sumy;//这里是基于表面模糊的侧窗滤波算法,

//如果你想改成基于均值滤波的侧窗滤波算法,那么就统计下上面那两个for循环的

//像素个数,及累加的像素值的和,然后在这里求下比值赋值给output[out_idx++]就行了

}

for (int w = w_third_loop_start; w < width; ++w) {

const int w_left_bound = std::max(0, w + w_start);

const int w_right_bound = std::min(width - 1, w + w_end);

const int arr_len = h_interval * (w_right_bound - w_left_bound + 1);

int idx = 0;

float sumx = 0.f;

float sumy = 0.f;

float center_pixel = input[h * width + w];

for (int i = h_lower_bound; i <= h_upper_bound; ++i) {

const int h_idx = i * width;

for (int j = w_left_bound; j <= w_right_bound; ++j) {

sumx = sumx + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold)) *

input[h_idx + j];

sumy = sumy + (1 - fabs(center_pixel - input[h_idx + j]) /

(2.5 * threshold));

}

}

output[out_idx++] = sumx / sumy;

}

}

}

void SideWindowFilter(const float *input, const int radius, const int height,

const int width, float *output) {

int panel_size = height * width;

for (int i = 0; i < side_directions_count; ++i) {

sideWindowFilterImpl(input, radius, height, width,

static_cast<SIDE_DIRECTIONS>(i),

m_side_window_filter_results[i].data());

}

for (int i = 0; i < panel_size; ++i) {

float tmp = m_side_window_filter_results[0][i] - input[i];

float min = tmp * tmp;

int min_idx = 0;

for (int j = 1; j < side_directions_count; ++j) {

tmp = m_side_window_filter_results[j][i] - input[i];

tmp = tmp * tmp;

if (min > tmp) {

min_idx = j;

min = tmp;

}

}

output[i] = m_side_window_filter_results[min_idx][i];

}

}

int main() {

cv::Mat sourceimg = imread("src_img/降噪test.jpg", 1);

cv::Mat resultimg = imread("src_img/降噪test.jpg", 1);

cv::imwrite("0_sourceimg.jpg", sourceimg);

int img_h = sourceimg.rows;

int img_w = sourceimg.cols;

cv::Mat temp_img;

temp_img.create(img_h, img_w, CV_32FC3);

sourceimg.convertTo(temp_img, CV_32FC3);

float *input_r = new float[img_h * img_w];

float *input_g = new float[img_h * img_w];

float *input_b = new float[img_h * img_w];

int radius = 10;

int iteration = 10;

for (int ite = 10; ite <= 10; ++ite) {

iteration = 5;

for (int rad = 3; rad <= 3; rad +=1) {

radius = 2;

for (int i = 0; i < img_h; ++i) {

for (int j = 0; j < img_w; ++j) {

input_r[i * img_w + j] = sourceimg.data[i * img_w * 3 + j * 3 + 0];

input_g[i * img_w + j] = sourceimg.data[i * img_w * 3 + j * 3 + 1];

input_b[i * img_w + j] = sourceimg.data[i * img_w * 3 + j * 3 + 2];

}

}

// SurfaceBlur(input,img_h,img_w, 1, 250, result);

init(img_h, img_w, radius);

for (int m = 0; m < iteration; ++m) {

float *temp_result_r = new float[img_h * img_w];

float *temp_result_g = new float[img_h * img_w];

float *temp_result_b = new float[img_h * img_w];

SideWindowFilter(input_r, radius, img_h, img_w, temp_result_r);

SideWindowFilter(input_g, radius, img_h, img_w, temp_result_g);

SideWindowFilter(input_b, radius, img_h, img_w, temp_result_b);

for (int i = 0; i < img_h; ++i) {

for (int j = 0; j < img_w; ++j) {

input_r[i * img_w + j] = temp_result_r[i * img_w + j];

input_g[i * img_w + j] = temp_result_g[i * img_w + j];

input_b[i * img_w + j] = temp_result_b[i * img_w + j];

}

}

delete (temp_result_r);

delete (temp_result_g);

delete (temp_result_b);

}

for (int i = 0; i < img_h; ++i) {

for (int j = 0; j < img_w; ++j) {

resultimg.data[i * img_w * 3 + j * 3 + 0] = input_r[i * img_w + j];

resultimg.data[i * img_w * 3 + j * 3 + 1] = input_g[i * img_w + j];

resultimg.data[i * img_w * 3 + j * 3 + 2] = input_b[i * img_w + j];

}

}

char str[100];

sprintf(str, "iteration_%d_radius_%d_threshold_50.jpg", iteration,

radius);

cv::imwrite(str, resultimg);

}

}

// cv::imwrite("SurfaceBlur.jpg", resultimg);

delete (input_r);

delete (input_g);

delete (input_b);

waitKey(-1);

}