13.1.2 Implementing PCA (Principal Component Analysis) in Code

1. Reducing two-dimensional data in Python

import numpy as np

X = np.array([[1, 1], [2, 2], [3, 3]])

X

array([[1, 1],

[2, 2],

[3, 3]])

# The same data can also be built with pandas; the result is equivalent

import pandas as pd

X = pd.DataFrame([[1, 1], [2, 2], [3, 3]])

X

| | 0 | 1 |
|---|---|---|
| 0 | 1 | 1 |
| 1 | 2 | 2 |
| 2 | 3 | 3 |

# Reduce the data from two dimensions to one

from sklearn.decomposition import PCA

pca = PCA(n_components=1)

pca.fit(X) # fit the PCA model

X_transformed = pca.transform(X) # project the data and store the result in X_transformed

X_transformed # inspect the reduced data

array([[-1.41421356],

[ 0. ],

[ 1.41421356]])

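# Check the shape after reduction

X_transformed.shape

(3, 1)

# Inspect the coefficients of the principal component

pca.components_

array([[0.70710678, 0.70710678]])

# Print the component as a linear combination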

a = pca.components_[0][0]

b = pca.components_[0][1]

print(str(a) + ' * X + ' + str(b) + ' * Y')

0.7071067811865476 * X + 0.7071067811865475 * Y

This indeed matches the linear combination obtained in Section 12.1.1.
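To make the coefficients above less of a black box, here is a minimal sketch that reproduces them with NumPy alone: center the data, take the eigenvectors of the covariance matrix, and project. The helper name `pca_by_hand` and the sign convention (largest-magnitude entry made positive) are assumptions for illustration; the sign of a principal component is arbitrary, and scikit-learn applies its own convention internally.

```python
import numpy as np

def pca_by_hand(X, n_components):
    """Illustrative PCA via eigen-decomposition of the covariance matrix."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)            # PCA always works on centered data
    cov = np.cov(X_centered, rowvar=False)     # feature-by-feature covariance
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigenvalues come back in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order].T           # one row per principal component
    # The sign of an eigenvector is arbitrary; fix it so results are repeatable
    for row in components:
        if row[np.argmax(np.abs(row))] < 0:
            row *= -1
    projected = X_centered @ components.T
    return components, projected

components, projected = pca_by_hand([[1, 1], [2, 2], [3, 3]], n_components=1)
print(components)   # direction of the line y = x, scaled to unit length
print(projected)    # coordinates of the three points along that direction
```

With this sign convention the component comes out as roughly (0.7071, 0.7071) and the projections as roughly -1.4142, 0 and 1.4142, the same numbers as in the scikit-learn output above.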

2. Reducing three-dimensional data in Python

import pandas as pd

X = pd.DataFrame([[45, 0.8, 9120], [40, 0.12, 2600], [38, 0.09, 3042], [30, 0.04, 3300], [39, 0.21, 3500]], columns=['age (years)', 'debt ratio', 'monthly income (yuan)'])

X

| | age (years) | debt ratio | monthly income (yuan) |
|---|---|---|---|
| 0 | 45 | 0.80 | 9120 |
| 1 | 40 | 0.12 | 2600 |
| 2 | 38 | 0.09 | 3042 |
| 3 | 30 | 0.04 | 3300 |
| 4 | 39 | 0.21 | 3500 |

# The three features differ greatly in magnitude, so standardize them first (zero mean, unit variance)

from sklearn.preprocessing import StandardScaler

X_new = StandardScaler().fit_transform(X)

X_new # inspect the standardized data

array([[ 1.36321743, 1.96044639, 1.98450514],

[ 0.33047695, -0.47222431, -0.70685302],

[-0.08261924, -0.57954802, -0.52440206],

[-1.73500401, -0.75842087, -0.41790353],

[ 0.12392886, -0.15025319, -0.33534653]])
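The standardized values above can be checked by hand. The sketch below recomputes them with NumPy as (value - column mean) / column standard deviation, using the population standard deviation (`ddof=0`), which is what `StandardScaler` uses. The variable names are chosen for illustration.

```python
import numpy as np

# Same five rows as the DataFrame above: age, debt ratio, monthly income
X = np.array([
    [45, 0.80, 9120],
    [40, 0.12, 2600],
    [38, 0.09, 3042],
    [30, 0.04, 3300],
    [39, 0.21, 3500],
], dtype=float)

mean = X.mean(axis=0)
std = X.std(axis=0)              # ddof=0, i.e. the population standard deviation
X_standardized = (X - mean) / std

print(np.round(X_standardized, 4))
```

After this step every column has mean 0 and standard deviation 1, so no single feature dominates the principal components merely because of its units.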

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

pca.fit(X_new) # fit the PCA model

X_transformed = pca.transform(X_new) # project the data and store the result in X_transformed

X_transformed # inspect the reduced data

array([[ 3.08724247, 0.32991205],

[-0.52888635, -0.74272137],

[-0.70651782, -0.33057258],

[-1.62877292, 1.05218639],

[-0.22306538, -0.30880449]])

# Inspect the coefficients of the principal components

pca.components_

array([[ 0.52952108, 0.61328179, 0.58608264],

[-0.82760701, 0.22182579, 0.51561609]])

# Print each component as a linear combination of the (standardized) features
dim = ['age (years)', 'debt ratio', 'monthly income (yuan)']

for i in pca.components_:
    formula = []
    for j in range(len(i)):
        formula.append(str(i[j]) + ' * ' + dim[j])
    print(" + ".join(formula))

0.5295210839165538 * age (years) + 0.6132817922410683 * debt ratio + 0.5860826434841948 * monthly income (yuan)

-0.8276070105929828 * age (years) + 0.2218257919336094 * debt ratio + 0.5156160917294705 * monthly income (yuan)

# To avoid showing the actual feature names, use generic labels instead

dim = ['X', 'Y', 'Z']

for i in pca.components_:
    formula = []
    for j in range(len(i)):
        formula.append(str(i[j]) + ' * ' + dim[j])
    print(" + ".join(formula))

0.5295210839165538 * X + 0.6132817922410683 * Y + 0.5860826434841948 * Z

-0.8276070105929828 * X + 0.2218257919336094 * Y + 0.5156160917294705 * Z
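One point the printed formulas leave implicit is that X, Y and Z stand for the standardized feature values, not the raw ones, because the model was fitted on the standardized data. The sketch below plugs the standardized matrix and the coefficients (both copied from the outputs above, rounded to eight decimals) into those formulas with a plain matrix product. Because every standardized column already has mean 0, the centering that PCA performs internally changes nothing here.

```python
import numpy as np

# Standardized data, copied from the StandardScaler output above
X_new = np.array([
    [ 1.36321743,  1.96044639,  1.98450514],
    [ 0.33047695, -0.47222431, -0.70685302],
    [-0.08261924, -0.57954802, -0.52440206],
    [-1.73500401, -0.75842087, -0.41790353],
    [ 0.12392886, -0.15025319, -0.33534653],
])

# Component coefficients, copied from pca.components_ above
components = np.array([
    [ 0.52952108, 0.61328179, 0.58608264],
    [-0.82760701, 0.22182579, 0.51561609],
])

# Each output column is one linear combination applied to every row
projected = X_new @ components.T
print(np.round(projected, 4))
```

Up to rounding, the result reproduces the `X_transformed` array shown earlier, which confirms that each new coordinate is just a weighted sum of the standardized features.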

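13.2 Case Study: A Face Recognition Model

13.2.1 Case Background

13.2.2 Reading and Processing the Face Data and Extracting Variables

1. Reading the face photo data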

import os

names = os.listdir('olivettifaces')

names[0:5] # show the first five file names

['10_0.jpg', '10_1.jpg', '10_2.jpg', '10_3.jpg', '10_4.jpg']

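# With the file names in hand, the images can be opened in Python as follows

from PIL import Image

img0 = Image.open(os.path.join('olivettifaces', names[0])) # os.path.join keeps the path portable across operating systems

img0.show()

img0 # in Jupyter Notebook, evaluating the variable displays the image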

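2. Processing the face data: extracting the feature variables

# Convert the image to grayscale, then to numeric values

import numpy as np

img0 = img0.convert('L') # 'L' is 8-bit grayscale

img0 = img0.resize((32, 32))

arr = np.array(img0)

arr # inspect the numeric result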

array([[186, 76, 73, ..., 100, 103, 106],

[196, 85, 68, ..., 85, 106, 103],

[193, 69, 79, ..., 82, 99, 100],

...,

[196, 87, 193, ..., 103, 66, 52],

[219, 179, 202, ..., 150, 127, 109],

[244, 228, 230, ..., 198, 202, 206]], dtype=uint8)

# If the NumPy array is hard to read, convert it to a pandas DataFrame for inspection

import pandas as pd

pd.DataFrame(arr)

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 | 31 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 186 | 76 | 73 | 87 | 89 | 88 | 75 | 81 | 100 | 102 | ... | 71 | 75 | 75 | 73 | 76 | 85 | 95 | 100 | 103 | 106 |
| 1 | 196 | 85 | 68 | 78 | 104 | 97 | 100 | 94 | 83 | 87 | ... | 52 | 59 | 70 | 85 | 62 | 82 | 89 | 85 | 106 | 103 |
| 2 | 193 | 69 | 79 | 92 | 105 | 102 | 112 | 117 | 106 | 94 | ... | 41 | 45 | 50 | 76 | 59 | 74 | 83 | 82 | 99 | 100 |
| 3 | 186 | 67 | 71 | 75 | 85 | 99 | 114 | 115 | 109 | 109 | ... | 42 | 43 | 40 | 52 | 41 | 61 | 69 | 76 | 76 | 108 |
| 4 | 179 | 46 | 41 | 50 | 53 | 69 | 80 | 91 | 108 | 104 | ... | 43 | 37 | 30 | 31 | 35 | 43 | 59 | 61 | 56 | 101 |
| 5 | 173 | 33 | 43 | 49 | 48 | 53 | 64 | 69 | 72 | 75 | ... | 38 | 36 | 33 | 32 | 39 | 45 | 68 | 60 | 45 | 83 |
| 6 | 173 | 30 | 37 | 41 | 42 | 57 | 81 | 88 | 77 | 64 | ... | 31 | 32 | 35 | 32 | 35 | 49 | 65 | 64 | 53 | 87 |
| 7 | 171 | 24 | 32 | 36 | 42 | 55 | 77 | 101 | 107 | 102 | ... | 54 | 64 | 63 | 51 | 53 | 60 | 56 | 46 | 49 | 89 |
| 8 | 170 | 21 | 31 | 29 | 28 | 35 | 47 | 62 | 76 | 83 | ... | 105 | 101 | 89 | 63 | 45 | 42 | 41 | 37 | 61 | 101 |
| 9 | 172 | 21 | 22 | 27 | 28 | 30 | 33 | 43 | 46 | 44 | ... | 129 | 118 | 103 | 74 | 39 | 27 | 36 | 34 | 68 | 101 |
| 10 | 171 | 23 | 30 | 21 | 30 | 36 | 44 | 51 | 46 | 41 | ... | 136 | 126 | 120 | 102 | 70 | 40 | 30 | 35 | 78 | 101 |
| 11 | 175 | 21 | 33 | 31 | 42 | 54 | 71 | 73 | 64 | 59 | ... | 142 | 133 | 122 | 114 | 111 | 77 | 31 | 36 | 95 | 104 |
| 12 | 192 | 42 | 27 | 37 | 63 | 87 | 99 | 111 | 116 | 116 | ... | 105 | 108 | 108 | 107 | 109 | 104 | 58 | 59 | 100 | 97 |
| 13 | 196 | 82 | 41 | 58 | 102 | 112 | 110 | 110 | 107 | 108 | ... | 70 | 71 | 86 | 102 | 107 | 116 | 84 | 77 | 95 | 99 |
| 14 | 192 | 88 | 78 | 95 | 96 | 87 | 81 | 72 | 51 | 40 | ... | 62 | 73 | 73 | 101 | 116 | 116 | 99 | 80 | 88 | 105 |
| 15 | 190 | 88 | 102 | 114 | 99 | 76 | 55 | 55 | 50 | 37 | ... | 100 | 112 | 120 | 120 | 125 | 125 | 105 | 97 | 101 | 98 |
| 16 | 189 | 106 | 111 | 113 | 137 | 124 | 113 | 109 | 103 | 96 | ... | 134 | 137 | 142 | 146 | 134 | 120 | 96 | 115 | 122 | 93 |
| 17 | 188 | 106 | 132 | 119 | 142 | 160 | 158 | 153 | 148 | 145 | ... | 163 | 158 | 156 | 142 | 124 | 110 | 100 | 114 | 108 | 86 |
| 18 | 193 | 83 | 140 | 130 | 122 | 141 | 153 | 160 | 168 | 177 | ... | 163 | 158 | 148 | 129 | 112 | 103 | 99 | 99 | 78 | 77 |
| 19 | 190 | 81 | 117 | 127 | 107 | 120 | 134 | 146 | 163 | 166 | ... | 157 | 145 | 132 | 117 | 105 | 103 | 90 | 64 | 67 | 72 |
| 20 | 193 | 83 | 84 | 106 | 104 | 113 | 122 | 134 | 138 | 143 | ... | 142 | 129 | 116 | 109 | 105 | 102 | 86 | 55 | 60 | 63 |
| 21 | 194 | 78 | 87 | 91 | 92 | 108 | 113 | 122 | 127 | 140 | ... | 140 | 128 | 118 | 113 | 109 | 101 | 68 | 56 | 56 | 56 |
| 22 | 191 | 80 | 89 | 88 | 90 | 104 | 114 | 120 | 131 | 141 | ... | 130 | 129 | 119 | 108 | 103 | 101 | 50 | 53 | 55 | 53 |
| 23 | 189 | 77 | 89 | 91 | 86 | 93 | 111 | 122 | 133 | 129 | ... | 102 | 113 | 111 | 107 | 101 | 85 | 53 | 51 | 54 | 55 |
| 24 | 190 | 86 | 88 | 87 | 87 | 87 | 104 | 115 | 127 | 115 | ... | 105 | 108 | 104 | 102 | 97 | 55 | 53 | 50 | 53 | 59 |
| 25 | 187 | 74 | 89 | 81 | 93 | 130 | 103 | 96 | 110 | 108 | ... | 111 | 105 | 103 | 102 | 83 | 50 | 49 | 56 | 51 | 49 |
| 26 | 190 | 79 | 81 | 107 | 166 | 206 | 119 | 88 | 94 | 105 | ... | 102 | 104 | 99 | 100 | 111 | 111 | 57 | 48 | 52 | 53 |
| 27 | 192 | 78 | 83 | 173 | 211 | 158 | 114 | 100 | 87 | 94 | ... | 101 | 98 | 98 | 96 | 116 | 123 | 119 | 52 | 49 | 55 |
| 28 | 188 | 70 | 136 | 177 | 198 | 108 | 101 | 119 | 86 | 81 | ... | 98 | 98 | 93 | 82 | 80 | 123 | 145 | 73 | 43 | 51 |
| 29 | 196 | 87 | 193 | 187 | 179 | 113 | 123 | 123 | 110 | 81 | ... | 95 | 90 | 96 | 77 | 53 | 160 | 124 | 103 | 66 | 52 |
| 30 | 219 | 179 | 202 | 196 | 198 | 146 | 122 | 118 | 119 | 94 | ... | 92 | 90 | 87 | 57 | 89 | 126 | 140 | 150 | 127 | 109 |
| 31 | 244 | 228 | 230 | 231 | 233 | 213 | 188 | 195 | 193 | 189 | ... | 179 | 184 | 177 | 161 | 202 | 182 | 207 | 198 | 202 | 206 |

32 rows × 32 columns

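# The 32*32 two-dimensional array above is not yet convenient for modeling, so reshape(1, -1) turns it into a single row of shape 1*1024 (reshape(-1, 1) would give a single column instead)

arr = arr.reshape(1, -1)

print(arr) # this one row now represents the face image; it has 32*32 = 1024 columns

[[186 76 73 ... 198 202 206]]

There are 400 photos to process in total, and stacking 400 two-dimensional arrays would produce a three-dimensional array. We therefore use flatten() to reduce the 1*1024 two-dimensional array to a one-dimensional array, then tolist() to turn it into a list that can be handled together with the pixel values of the other images.

print(arr.flatten().tolist()) # the list below is the converted face image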

[186, 76, 73, 87, 89, 88, 75, 81, 100, 102, 105, 92, 74, 65, 65, 53, 43, 55, 53, 42, 58, 77, 71, 75, 75, 73, 76, 85, 95, 100, 103, 106, 196, 85, 68, 78, 104, 97, 100, 94, 83, 87, 88, 89, 86, 70, 65, 61, 55, 52, 38, 32, 52, 66, 52, 59, 70, 85, 62, 82, 89, 85, 106, 103, 193, 69, 79, 92, 105, 102, 112, 117, 106, 94, 91, 112, 101, 87, 75, 61, 58, 54, 49, 48, 44, 41, 41, 45, 50, 76, 59, 74, 83, 82, 99, 100, 186, 67, 71, 75, 85, 99, 114, 115, 109, 109, 98, 101, 86, 68, 74, 65, 58, 53, 51, 52, 42, 40, 42, 43, 40, 52, 41, 61, 69, 76, 76, 108, 179, 46, 41, 50, 53, 69, 80, 91, 108, 104, 98, 93, 91, 88, 73, 60, 56, 55, 51, 49, 53, 55, 43, 37, 30, 31, 35, 43, 59, 61, 56, 101, 173, 33, 43, 49, 48, 53, 64, 69, 72, 75, 82, 84, 84, 82, 72, 75, 69, 71, 67, 56, 58, 55, 38, 36, 33, 32, 39, 45, 68, 60, 45, 83, 173, 30, 37, 41, 42, 57, 81, 88, 77, 64, 63, 64, 65, 64, 48, 48, 68, 62, 47, 50, 45, 31, 31, 32, 35, 32, 35, 49, 65, 64, 53, 87, 171, 24, 32, 36, 42, 55, 77, 101, 107, 102, 98, 83, 71, 64, 44, 48, 54, 64, 76, 65, 49, 48, 54, 64, 63, 51, 53, 60, 56, 46, 49, 89, 170, 21, 31, 29, 28, 35, 47, 62, 76, 83, 87, 78, 53, 58, 65, 83, 90, 97, 108, 101, 97, 105, 105, 101, 89, 63, 45, 42, 41, 37, 61, 101, 172, 21, 22, 27, 28, 30, 33, 43, 46, 44, 43, 46, 50, 63, 76, 87, 95, 104, 114, 120, 122, 125, 129, 118, 103, 74, 39, 27, 36, 34, 68, 101, 171, 23, 30, 21, 30, 36, 44, 51, 46, 41, 45, 52, 61, 70, 84, 101, 112, 119, 126, 131, 134, 138, 136, 126, 120, 102, 70, 40, 30, 35, 78, 101, 175, 21, 33, 31, 42, 54, 71, 73, 64, 59, 65, 78, 95, 106, 110, 116, 122, 130, 140, 143, 141, 142, 142, 133, 122, 114, 111, 77, 31, 36, 95, 104, 192, 42, 27, 37, 63, 87, 99, 111, 116, 116, 117, 117, 121, 122, 120, 119, 120, 121, 120, 113, 107, 106, 105, 108, 108, 107, 109, 104, 58, 59, 100, 97, 196, 82, 41, 58, 102, 112, 110, 110, 107, 108, 107, 107, 115, 126, 126, 118, 98, 87, 69, 57, 55, 55, 70, 71, 86, 102, 107, 116, 84, 77, 95, 99, 192, 88, 78, 95, 96, 87, 81, 72, 51, 40, 52, 53, 68, 89, 116, 111, 83, 61, 44, 55, 
61, 42, 62, 73, 73, 101, 116, 116, 99, 80, 88, 105, 190, 88, 102, 114, 99, 76, 55, 55, 50, 37, 60, 53, 49, 84, 161, 155, 109, 94, 83, 76, 84, 88, 100, 112, 120, 120, 125, 125, 105, 97, 101, 98, 189, 106, 111, 113, 137, 124, 113, 109, 103, 96, 84, 84, 99, 123, 172, 176, 139, 130, 127, 120, 116, 115, 134, 137, 142, 146, 134, 120, 96, 115, 122, 93, 188, 106, 132, 119, 142, 160, 158, 153, 148, 145, 156, 151, 139, 139, 160, 177, 151, 138, 141, 153, 162, 164, 163, 158, 156, 142, 124, 110, 100, 114, 108, 86, 193, 83, 140, 130, 122, 141, 153, 160, 168, 177, 171, 154, 135, 137, 161, 176, 158, 146, 143, 142, 159, 164, 163, 158, 148, 129, 112, 103, 99, 99, 78, 77, 190, 81, 117, 127, 107, 120, 134, 146, 163, 166, 155, 134, 138, 137, 153, 164, 141, 132, 132, 127, 148, 156, 157, 145, 132, 117, 105, 103, 90, 64, 67, 72, 193, 83, 84, 106, 104, 113, 122, 134, 138, 143, 141, 138, 93, 69, 84, 86, 71, 62, 75, 106, 142, 146, 142, 129, 116, 109, 105, 102, 86, 55, 60, 63, 194, 78, 87, 91, 92, 108, 113, 122, 127, 140, 148, 154, 121, 80, 72, 66, 80, 96, 106, 112, 137, 147, 140, 128, 118, 113, 109, 101, 68, 56, 56, 56, 191, 80, 89, 88, 90, 104, 114, 120, 131, 141, 145, 150, 153, 142, 144, 124, 105, 111, 119, 121, 128, 128, 130, 129, 119, 108, 103, 101, 50, 53, 55, 53, 189, 77, 89, 91, 86, 93, 111, 122, 133, 129, 125, 126, 118, 117, 115, 111, 100, 85, 87, 86, 76, 88, 102, 113, 111, 107, 101, 85, 53, 51, 54, 55, 190, 86, 88, 87, 87, 87, 104, 115, 127, 115, 101, 88, 83, 84, 91, 96, 108, 114, 101, 98, 106, 103, 105, 108, 104, 102, 97, 55, 53, 50, 53, 59, 187, 74, 89, 81, 93, 130, 103, 96, 110, 108, 108, 129, 126, 112, 119, 108, 96, 98, 105, 110, 117, 118, 111, 105, 103, 102, 83, 50, 49, 56, 51, 49, 190, 79, 81, 107, 166, 206, 119, 88, 94, 105, 112, 116, 113, 106, 96, 92, 95, 102, 103, 107, 113, 108, 102, 104, 99, 100, 111, 111, 57, 48, 52, 53, 192, 78, 83, 173, 211, 158, 114, 100, 87, 94, 108, 114, 115, 119, 129, 146, 151, 144, 140, 133, 121, 112, 101, 98, 98, 96, 116, 123, 119, 52, 49, 55, 
188, 70, 136, 177, 198, 108, 101, 119, 86, 81, 94, 105, 115, 123, 127, 128, 126, 122, 119, 109, 97, 97, 98, 98, 93, 82, 80, 123, 145, 73, 43, 51, 196, 87, 193, 187, 179, 113, 123, 123, 110, 81, 73, 81, 88, 96, 97, 95, 91, 91, 89, 86, 91, 99, 95, 90, 96, 77, 53, 160, 124, 103, 66, 52, 219, 179, 202, 196, 198, 146, 122, 118, 119, 94, 76, 73, 72, 74, 77, 73, 74, 79, 77, 83, 92, 94, 92, 90, 87, 57, 89, 126, 140, 150, 127, 109, 244, 228, 230, 231, 233, 213, 188, 195, 193, 189, 181, 173, 171, 171, 171, 168, 168, 171, 173, 178, 182, 179, 179, 184, 177, 161, 202, 182, 207, 198, 202, 206]
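The difference between `reshape(1, -1)`, `reshape(-1, 1)`, `flatten()` and `tolist()` is easier to see on a tiny array than on 1024 pixel values. The sketch below uses a made-up 2*3 array as a stand-in for the image.

```python
import numpy as np

image = np.arange(6).reshape(2, 3)   # toy stand-in for the 32*32 pixel array

row = image.reshape(1, -1)           # one row, still two-dimensional
column = image.reshape(-1, 1)        # one column, still two-dimensional
flat = row.flatten()                 # truly one-dimensional copy
as_list = flat.tolist()              # plain Python list of ints

print(row.shape, column.shape, flat.shape)
print(as_list)

# flatten() works on any shape, so the reshape step is optional
same_list = image.flatten().tolist()
print(same_list == as_list)
```

Since `flatten()` accepts an array of any shape, calling it directly on the 32*32 array would give the same list; the intermediate `reshape(1, -1)` mainly helps to show the row layout.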

# Build the feature variables for all images

X = [] # feature variables

for i in names:
    img = Image.open(os.path.join('olivettifaces', i))
    img = img.convert('L')
    img = img.resize((32, 32))
    arr = np.array(img)
    X.append(arr.reshape(1, -1).flatten().tolist())

import pandas as pd

X = pd.DataFrame(X)

X # inspect the 400 converted images

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 1014 | 1015 | 1016 | 1017 | 1018 | 1019 | 1020 | 1021 | 1022 | 1023 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 186 | 76 | 73 | 87 | 89 | 88 | 75 | 81 | 100 | 102 | ... | 179 | 184 | 177 | 161 | 202 | 182 | 207 | 198 | 202 | 206 |
| 1 | 196 | 90 | 97 | 98 | 98 | 87 | 101 | 89 | 65 | 73 | ... | 181 | 167 | 190 | 188 | 203 | 209 | 205 | 198 | 190 | 190 |
| 2 | 193 | 89 | 97 | 99 | 75 | 74 | 83 | 64 | 77 | 86 | ... | 178 | 178 | 156 | 185 | 195 | 201 | 206 | 201 | 189 | 190 |
| 3 | 192 | 84 | 93 | 89 | 97 | 89 | 66 | 60 | 60 | 57 | ... | 173 | 151 | 199 | 189 | 203 | 200 | 196 | 186 | 182 | 184 |
| 4 | 194 | 72 | 49 | 45 | 56 | 37 | 44 | 62 | 71 | 71 | ... | 192 | 194 | 192 | 176 | 174 | 224 | 200 | 218 | 176 | 168 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 395 | 114 | 115 | 115 | 119 | 115 | 120 | 117 | 118 | 113 | 112 | ... | 190 | 193 | 169 | 141 | 142 | 144 | 143 | 141 | 143 | 215 |
| 396 | 115 | 118 | 117 | 117 | 116 | 118 | 117 | 119 | 117 | 116 | ... | 187 | 189 | 183 | 216 | 189 | 193 | 148 | 144 | 142 | 212 |
| 397 | 113 | 116 | 113 | 117 | 114 | 121 | 121 | 120 | 121 | 114 | ... | 184 | 188 | 185 | 221 | 203 | 192 | 144 | 143 | 137 | 212 |
| 398 | 110 | 109 | 109 | 110 | 110 | 112 | 112 | 113 | 113 | 111 | ... | 172 | 171 | 209 | 212 | 175 | 136 | 142 | 141 | 137 | 213 |
| 399 | 105 | 107 | 111 | 112 | 113 | 113 | 113 | 116 | 116 | 107 | ... | 181 | 184 | 220 | 188 | 140 | 139 | 142 | 141 | 138 | 213 |

400 rows × 1024 columns

print(X.shape) # check the shape of the table

(400, 1024)

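3. Processing the face data: extracting the target variable

# Get the target variable y, demonstrated on the first image

print(int(names[0].split('_')[0]))

10

# Get the target variable y for all images

y = [] # target variable

for i in names:
    y.append(int(i.split('_')[0])) # the person number is the part of the file name before '_'

print(y) # inspect the target variable, i.e. the person number of each image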

[10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 11, 11, 11, 11, 11, 11, 11, 11, 11, 11, 12, 12, 12, 12, 12, 12, 12, 12, 12, 12, 13, 13, 13, 13, 13, 13, 13, 13, 13, 13, 14, 14, 14, 14, 14, 14, 14, 14, 14, 14, 15, 15, 15, 15, 15, 15, 15, 15, 15, 15, 16, 16, 16, 16, 16, 16, 16, 16, 16, 16, 17, 17, 17, 17, 17, 17, 17, 17, 17, 17, 18, 18, 18, 18, 18, 18, 18, 18, 18, 18, 19, 19, 19, 19, 19, 19, 19, 19, 19, 19, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 20, 20, 20, 20, 20, 20, 20, 20, 20, 20, 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, 22, 22, 22, 22, 22, 22, 22, 22, 22, 22, 23, 23, 23, 23, 23, 23, 23, 23, 23, 23, 24, 24, 24, 24, 24, 24, 24, 24, 24, 24, 25, 25, 25, 25, 25, 25, 25, 25, 25, 25, 26, 26, 26, 26, 26, 26, 26, 26, 26, 26, 27, 27, 27, 27, 27, 27, 27, 27, 27, 27, 28, 28, 28, 28, 28, 28, 28, 28, 28, 28, 29, 29, 29, 29, 29, 29, 29, 29, 29, 29, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 30, 30, 30, 30, 30, 30, 30, 30, 30, 30, 31, 31, 31, 31, 31, 31, 31, 31, 31, 31, 32, 32, 32, 32, 32, 32, 32, 32, 32, 32, 33, 33, 33, 33, 33, 33, 33, 33, 33, 33, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 35, 35, 35, 35, 35, 35, 35, 35, 35, 35, 36, 36, 36, 36, 36, 36, 36, 36, 36, 36, 37, 37, 37, 37, 37, 37, 37, 37, 37, 37, 38, 38, 38, 38, 38, 38, 38, 38, 38, 38, 39, 39, 39, 39, 39, 39, 39, 39, 39, 39, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 40, 40, 40, 40, 40, 40, 40, 40, 40, 40, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 7, 7, 7, 7, 7, 7, 7, 7, 7, 7, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9]
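The label list above starts with person 10 rather than person 1 because the file names in this listing are ordered as strings, so '10_0.jpg' comes before '1_0.jpg'. This does not affect the model, since X and y are built from the same `names` list and stay aligned. If a numeric order is preferred, a sort key along the following lines could be used; the helper `parse_name` and the sample file names are hypothetical.

```python
def parse_name(name):
    """Split a file name such as '10_3.jpg' into (person number, shot number)."""
    stem = name.rsplit('.', 1)[0]        # drop the extension
    person, shot = stem.split('_')
    return int(person), int(shot)

# Hypothetical sample in the same naming scheme as the dataset
sample = ['10_0.jpg', '1_1.jpg', '2_0.jpg', '1_0.jpg']

labels = [parse_name(n)[0] for n in sample]   # person number for each file
ordered = sorted(sample, key=parse_name)      # numeric order instead of string order

print(labels)
print(ordered)
```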

13.2.3 Data Splitting and Dimensionality Reduction

1. Splitting into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)
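With 40 people and only 10 photos each, a purely random 80/20 split can leave some people with more test photos than others. `train_test_split` accepts a `stratify=y` argument to keep the class proportions equal in both parts. The pure-Python sketch below illustrates the idea only; the function `stratified_split` is a hypothetical helper, not scikit-learn's implementation, and it will not reproduce the exact split used in this section.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction, seed=0):
    """Return (train indices, test indices) with the same share of every label in each."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_label.values():
        rng.shuffle(indices)                             # shuffle within each person
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)

# 40 people with 10 photos each, mirroring the structure of the dataset
labels = [person for person in range(1, 41) for _ in range(10)]
train_idx, test_idx = stratified_split(labels, test_fraction=0.2, seed=1)

print(len(train_idx), len(test_idx))
```

Here every person contributes exactly 2 of their 10 photos to the test set, giving 320 training and 80 test samples.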

2. PCA dimensionality reduction

# Fit the dimensionality-reduction model

from sklearn.decomposition import PCA

pca = PCA(n_components=100)

pca.fit(X_train)


PCA(n_components=100)

# Reduce both the training set and the test set

X_train_pca = pca.transform(X_train)

X_test_pca = pca.transform(X_test)

# Check the shapes to confirm that the dimensionality was reduced:

print(X_train_pca.shape)

print(X_test_pca.shape)

(320, 100)

(80, 100)
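Note that the model is fitted on the training set only and then applied unchanged to the test set, so nothing about the test images leaks into the projection. The NumPy sketch below mimics that pattern on synthetic data of the same shape. The random arrays are stand-ins for the face data, and computing the components through an SVD is one standard way to do PCA rather than a copy of scikit-learn's internals.

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.normal(size=(320, 1024))   # synthetic stand-in for the training images
X_test = rng.normal(size=(80, 1024))     # synthetic stand-in for the test images

# "fit": learn the mean and the principal directions from the training set only
train_mean = X_train.mean(axis=0)
_, _, Vt = np.linalg.svd(X_train - train_mean, full_matrices=False)
components = Vt[:100]                    # top 100 directions, one per row

# "transform": reuse the training mean and directions for both sets
X_train_pca = (X_train - train_mean) @ components.T
X_test_pca = (X_test - train_mean) @ components.T

print(X_train_pca.shape, X_test_pca.shape)
```

The test set is centered with the training mean, not its own, which is also what `pca.transform(X_test)` does after `pca.fit(X_train)`.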

# To inspect the reduced X_train_pca and X_test_pca, print them directly or convert them to DataFrames, as follows:

# pd.DataFrame(X_train_pca).head()

# pd.DataFrame(X_test_pca).head()

# In Jupyter/IPython, running PCA followed by "?" shows the official documentation

# PCA?

13.2.4 Building and Using the Model

1. Building the model

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier() # create the KNN model

knn.fit(X_train_pca, y_train) # train the model on the reduced training set


KNeighborsClassifier()
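`KNeighborsClassifier()` with default settings looks at the 5 nearest training samples by Euclidean distance and predicts the most common label among them. The sketch below shows that idea on a made-up two-dimensional dataset. The function `knn_predict` is a hypothetical helper written for illustration, and its tie-breaking may differ from scikit-learn's.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x, k=5):
    """Predict the label of x by majority vote among its k nearest training points."""
    distances = np.linalg.norm(np.asarray(X_train) - np.asarray(x), axis=1)
    nearest = np.argsort(distances)[:k]              # indices of the k closest points
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy data: two well-separated groups standing in for two people
X_toy = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_toy = [1, 1, 1, 2, 2, 2]

pred_a = knn_predict(X_toy, y_toy, [0.2, 0.1], k=3)
pred_b = knn_predict(X_toy, y_toy, [5.5, 5.2], k=3)
print(pred_a, pred_b)
```

In the face example each "point" is a 100-dimensional PCA vector instead of a pair of coordinates, but the voting logic is the same.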

2. Model prediction

y_pred = knn.predict(X_test_pca) # predict on the reduced test set

print(y_pred) # print the predictions for the test set

[ 9 21 3 40 26 4 28 37 12 36 26 7 27 21 3 24 7 2 17 24 21 32 8 2

11 19 6 29 6 29 18 10 25 35 10 18 15 5 9 22 34 29 2 16 8 18 8 38

39 35 16 30 30 11 37 36 35 20 33 6 1 16 31 32 5 30 1 39 35 39 2 19

5 8 11 4 14 27 22 24]

# With code similar to earlier chapters, compare the predicted values with the actual values:

import pandas as pd

a = pd.DataFrame() # create an empty DataFrame

a['predicted'] = list(y_pred)

a['actual'] = list(y_test)

a.head() # show the first five rows

| | predicted | actual |
|---|---|---|
| 0 | 9 | 9 |
| 1 | 21 | 21 |
| 2 | 3 | 3 |
| 3 | 40 | 40 |
| 4 | 26 | 26 |

# Check the prediction accuracy - method 1

from sklearn.metrics import accuracy_score

score = accuracy_score(y_test, y_pred) # argument order is (y_true, y_pred)

print(score)

0.9125

# Check the prediction accuracy - method 2

score = knn.score(X_test_pca, y_test)

print(score)

0.9125
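Both methods report the same quantity: the fraction of test images whose predicted person number equals the actual one. A score of 0.9125 on 80 test images corresponds to 73 correct predictions. The sketch below computes accuracy by hand; the function name and the five-element sample lists are made up for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where the prediction equals the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError('y_true and y_pred must have the same length')
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Made-up example: four of five predictions are right
sample_true = [9, 21, 3, 40, 26]
sample_pred = [9, 21, 3, 40, 7]
print(accuracy(sample_true, sample_pred))

# 73 correct out of 80 gives the score reported above
print(73 / 80)
```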

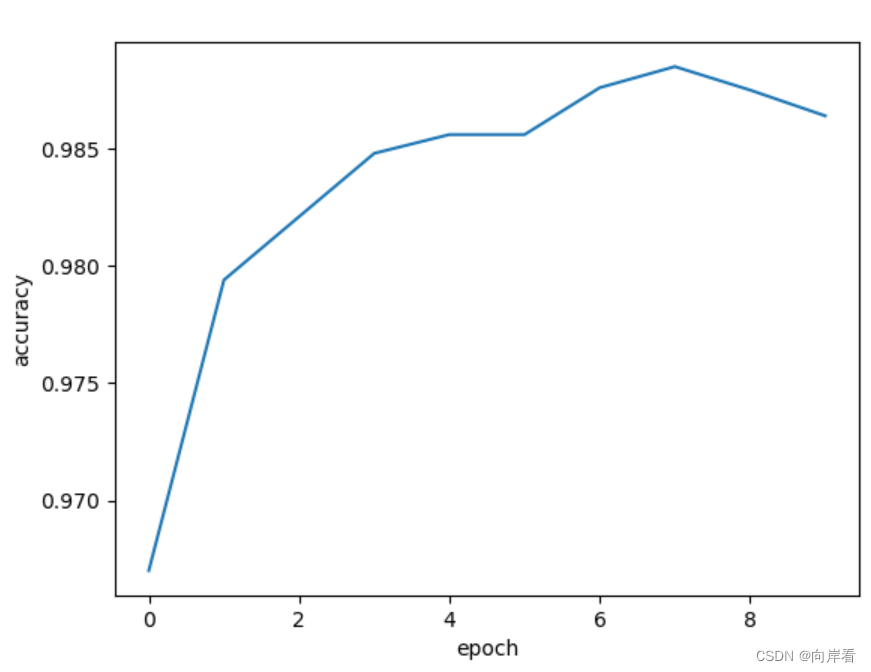

3. Model comparison (with and without dimensionality reduction)

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier() # create the KNN model

knn.fit(X_train, y_train) # train directly, without dimensionality reduction

y_pred = knn.predict(X_test) # predict directly, without dimensionality reduction

from sklearn.metrics import accuracy_score

score = accuracy_score(y_test, y_pred)

print(score)

0.9125

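The accuracy score obtained here is 0.9125, the same as the score with PCA above. In this run, then, reducing the 1024 features to 100 did not lower prediction accuracy, while leaving the model far fewer features to process. The dataset here is not large; as the amount of data grows, dimensionality reduction with PCA plays a larger role.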

13.3 Supplementary Topic: Calling an External Face Recognition API

13.3.1 Installing the baidu-aip library

pip install baidu-aip

13.3.2 Calling the API for face detection and scoring

from aip import AipFace

import base64

# The next three lines hold your own APP_ID, API_KEY and SECRET_KEY (the values below are placeholders)

APP_ID = 'your_app_id'

API_KEY = 'your_api_key'

SECRET_KEY = 'your_secret_key'

# Pass the credentials to the client

aipFace = AipFace(APP_ID, API_KEY, SECRET_KEY)
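Account credentials are easy to leak when they sit in a notebook. One common alternative is to read them from environment variables, as sketched below. The variable names `BAIDU_APP_ID`, `BAIDU_API_KEY` and `BAIDU_SECRET_KEY` and the helper `load_credentials` are assumptions made for this example, not something the baidu-aip library defines.

```python
import os

# Hypothetical environment variable names chosen for this example
CREDENTIAL_VARS = ('BAIDU_APP_ID', 'BAIDU_API_KEY', 'BAIDU_SECRET_KEY')

def load_credentials(env=None):
    """Return (app_id, api_key, secret_key) read from the environment."""
    env = os.environ if env is None else env
    missing = [name for name in CREDENTIAL_VARS if not env.get(name)]
    if missing:
        raise KeyError('missing environment variables: ' + ', '.join(missing))
    return tuple(env[name] for name in CREDENTIAL_VARS)

# Demonstration with an explicit mapping instead of the real environment
demo = load_credentials({
    'BAIDU_APP_ID': 'your_app_id',
    'BAIDU_API_KEY': 'your_api_key',
    'BAIDU_SECRET_KEY': 'your_secret_key',
})
print(demo)

# In the notebook this could then be used as:
# aipFace = AipFace(*load_credentials())
```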

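# The next line is the path of the face image to analyze; nothing else needs to change

filePath = r'张起凡.jpg'

# Define a function that reads the file and returns its content Base64-encoded

def get_file_content(filePath):
    with open(filePath, 'rb') as fp:
        content = base64.b64encode(fp.read())
        return content.decode('utf-8')

imageType = "BASE64"

# Choose the fields to return; here age, gender and beauty (attractiveness score)

options = {}

options["face_field"] = "age,gender,beauty"

# Call the detect() method of aipFace to run face detection, then print the result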

result = aipFace.detect(get_file_content(filePath), imageType, options)

print(result)

# Print the individual fields; this is just list indexing and dictionary key lookup

age = result['result']['face_list'][0]['age']

print('Predicted age: ' + str(age))

gender = result['result']['face_list'][0]['gender']['type']

print('Predicted gender: ' + gender)

beauty = result['result']['face_list'][0]['beauty']

print('Beauty score: ' + str(beauty))

{'error_code': 0, 'error_msg': 'SUCCESS', 'log_id': 943952634, 'timestamp': 1682385343, 'cached': 0, 'result': {'face_num': 1, 'face_list': [{'face_token': '8898d344d778dd12bcbc4efe488da9aa', 'location': {'left': 313.02, 'top': 379.26, 'width': 328, 'height': 309, 'rotation': 14}, 'face_probability': 1, 'angle': {'yaw': -23.27, 'pitch': 7.04, 'roll': 13.28}, 'age': 22, 'gender': {'type': 'male', 'probability': 0.99}, 'beauty': 53.09}]}}

Predicted age: 22

Predicted gender: male

Beauty score: 53.09
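Indexing straight into `result['result']['face_list'][0]` raises an exception whenever the call fails or finds no face. The sketch below wraps the lookup in a small helper that checks the response first. The helper name `summarize_face` is hypothetical, the sample dictionary is a trimmed copy of the output above, and treating any non-zero `error_code` as a failure is an assumption based on the `'error_code': 0, 'error_msg': 'SUCCESS'` pair in that output.

```python
def summarize_face(result):
    """Extract age, gender and beauty from a detect() response, failing with a clear message."""
    if result.get('error_code') != 0:
        raise RuntimeError('face detection failed: ' + str(result.get('error_msg')))
    face_list = (result.get('result') or {}).get('face_list') or []
    if not face_list:
        raise RuntimeError('no face found in the response')
    face = face_list[0]                       # first detected face
    return {
        'age': face['age'],
        'gender': face['gender']['type'],
        'beauty': face['beauty'],
    }

# Trimmed copy of the response printed above
sample = {
    'error_code': 0,
    'error_msg': 'SUCCESS',
    'result': {
        'face_num': 1,
        'face_list': [
            {'age': 22, 'gender': {'type': 'male', 'probability': 0.99}, 'beauty': 53.09},
        ],
    },
}

summary = summarize_face(sample)
print(summary)
```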
