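`onnx.helper` provides the functions for building and assembling an ONNX model piece by piece. The objects involved are:

| Object | Description |
| --- | --- |
| `ValueInfoProto` | Tensor name, element data type, and tensor shape |
| `NodeProto` (operator node) | Node name (optional), operator type, and the lists of input and output tensor names (strings) |
| `GraphProto` | Computation graph made up of tensors and operator nodes |
| `ModelProto` | Top-level object that wraps a `GraphProto` |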

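| Function | Description |
| --- | --- |
| `onnx.helper.make_tensor_value_info` | Creates a `ValueInfoProto` |
| `onnx.helper.make_tensor` | Creates a `TensorProto` from the given arguments; unlike a `ValueInfoProto`, it holds concrete values |
| `onnx.helper.make_node` | Creates a `NodeProto`; its inputs and outputs are the tensor names defined earlier |
| `onnx.helper.make_graph` | Creates a `GraphProto`; its inputs and outputs are the `ValueInfoProto` objects defined earlier |
| `make_model(graph, **kwargs)` | Wraps a `GraphProto` into a `ModelProto` |
| `make_sequence` | Creates a sequence from the given values |
| `make_operatorsetid`, `make_opsetid` | Create an `OperatorSetIdProto` (domain, version) |
| `make_model_gen_version` | Extension of `make_model` that infers the model's `IR_VERSION` on a best-effort basis if it is not specified |
| `set_model_props` | Sets the model's `metadata_props` |
| `make_map` | Creates a map from the given key-value arguments |
| `make_attribute` | Creates an `AttributeProto` from a key and a Python value |
| `get_attribute_value` | Returns the Python value stored in an `AttributeProto` |
| `make_empty_tensor_value_info` | Creates a `ValueInfoProto` that carries only a name |
| `make_sparse_tensor` | Creates a `SparseTensorProto` from values, indices, and dims |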

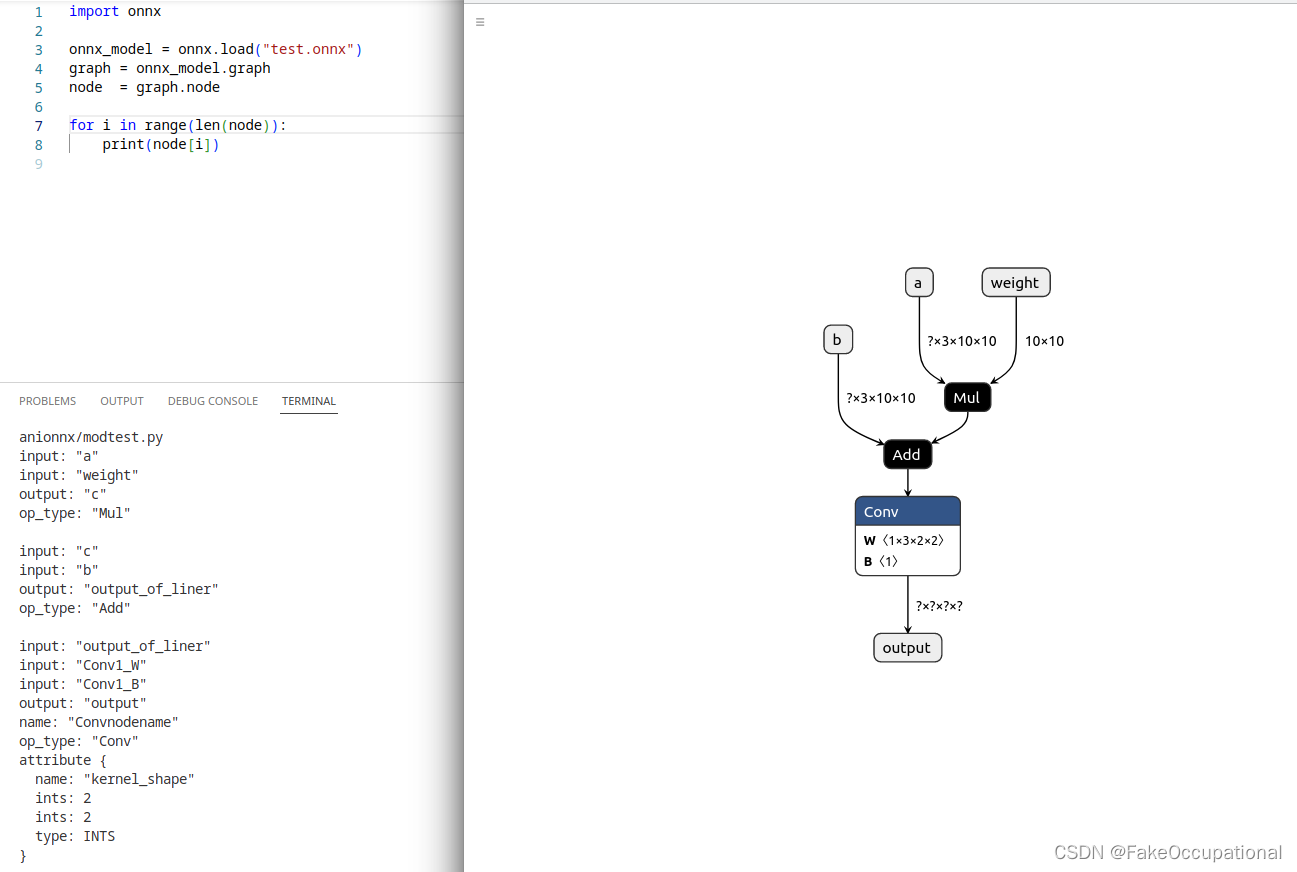

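`onnx.utils.extract_model` cuts a subgraph out of an existing model. The input and output names it takes are tensor names, not node names:

```python
import onnx
import onnx.utils

# Keep the part of the graph between tensor '22' (new input) and tensor '28' (new output).
onnx.utils.extract_model('whole_model.onnx', 'partial_model.onnx', ['22'], ['28'])

# Originally only tensor '31' was an output; now tensor '27' is exposed as an output as well.
onnx.utils.extract_model('whole_model.onnx', 'submodel_1.onnx', ['22'], ['27', '31'])
```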

Building a small Mul, Add, Conv model from scratch with `onnx.helper`:

```python
import numpy as np
import onnx
from onnx import TensorProto, helper


def create_initializer_tensor(
    name: str,
    tensor_array: np.ndarray,
    data_type: int = onnx.TensorProto.FLOAT,
) -> onnx.TensorProto:
    # Wrap a NumPy array as a TensorProto so it can be stored as a graph initializer.
    initializer_tensor = onnx.helper.make_tensor(
        name=name,
        data_type=data_type,
        dims=tensor_array.shape,
        vals=tensor_array.flatten().tolist(),
    )
    return initializer_tensor
```

```python
# Graph inputs and output (None marks a dynamic dimension)
a = helper.make_tensor_value_info('a', TensorProto.FLOAT, [None, 3, 10, 10])
x = helper.make_tensor_value_info('weight', TensorProto.FLOAT, [10, 10])
b = helper.make_tensor_value_info('b', TensorProto.FLOAT, [None, 3, 10, 10])
output = helper.make_tensor_value_info('output', TensorProto.FLOAT, [None, None, None, None])

# Mul: c = a * weight
mul = helper.make_node('Mul', ['a', 'weight'], ['c'])

# Add: output_of_liner = c + b
add = helper.make_node('Add', ['c', 'b'], ['output_of_liner'])
```
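The `Mul` node multiplies a `[None, 3, 10, 10]` input by a `[10, 10]` weight. This works because ONNX `Mul` and `Add` use multidirectional (NumPy-style) broadcasting, so the shapes can be checked with plain NumPy. A sketch with a batch size of 1, chosen arbitrarily:

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.random((1, 3, 10, 10), dtype=np.float32)
weight = rng.random((10, 10), dtype=np.float32)
b = rng.random((1, 3, 10, 10), dtype=np.float32)

# weight (10, 10) is right-aligned against a (1, 3, 10, 10), i.e. treated as
# (1, 1, 10, 10) and reused for every batch item and channel.
c = a * weight
output_of_liner = c + b

print(c.shape, output_of_liner.shape)
# Every channel is multiplied by the same 10x10 weight matrix.
print(all(np.array_equal(c[0, ch], a[0, ch] * weight) for ch in range(3)))
```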

```python
# Conv weight and bias, stored as initializers (constant tensors inside the graph).
# W has shape (out_channels, in_channels, kH, kW) = (1, 3, 2, 2).
conv1_W_initializer_tensor_name = "Conv1_W"
conv1_W_initializer_tensor = create_initializer_tensor(
    name=conv1_W_initializer_tensor_name,
    tensor_array=np.ones(shape=(1, 3, 2, 2)).astype(np.float32),
    data_type=onnx.TensorProto.FLOAT)

# B has shape (out_channels,) = (1,).
conv1_B_initializer_tensor_name = "Conv1_B"
conv1_B_initializer_tensor = create_initializer_tensor(
    name=conv1_B_initializer_tensor_name,
    tensor_array=np.ones(shape=(1,)).astype(np.float32),
    data_type=onnx.TensorProto.FLOAT)
```

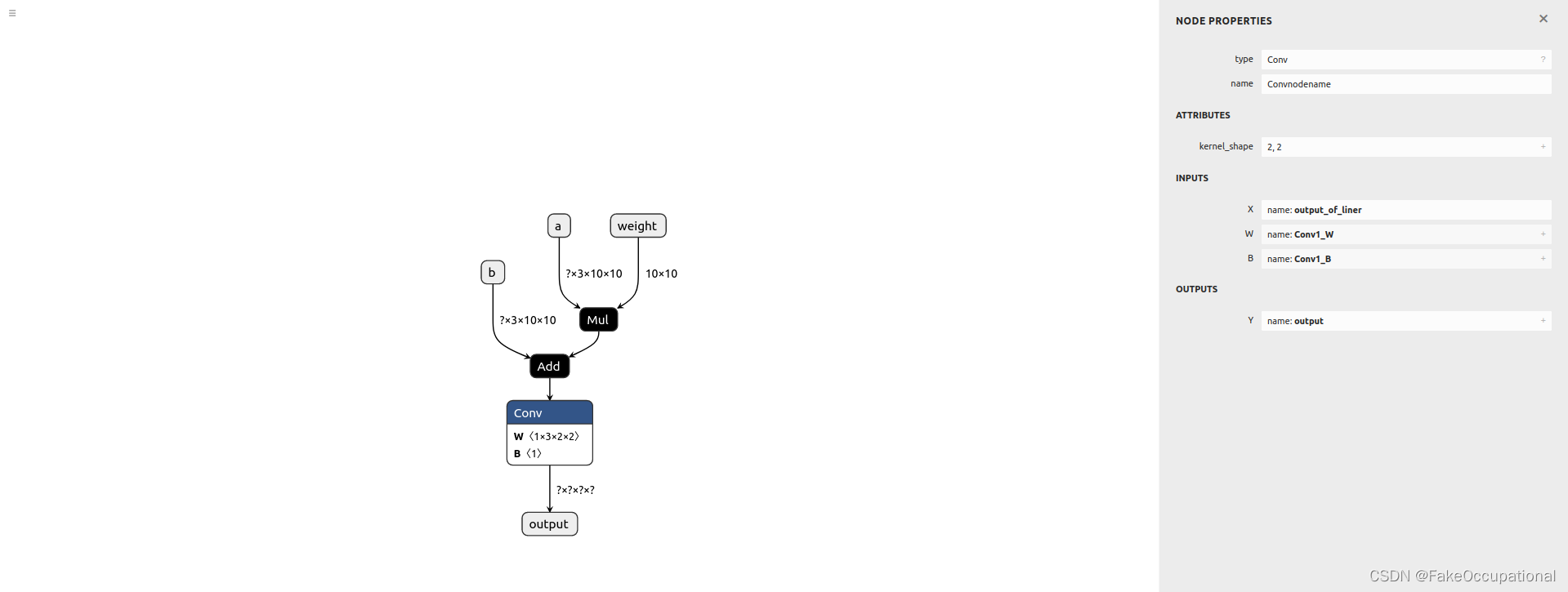

```python
conv_node = onnx.helper.make_node(
    name="Convnodename",  # Name is optional.
    op_type="Conv",
    # Inputs must follow the order given in the operator spec (X, W, B):
    # https://github.com/onnx/onnx/blob/rel-1.9.0/docs/Operators.md#inputs-2---3
    inputs=['output_of_liner', conv1_W_initializer_tensor_name, conv1_B_initializer_tensor_name],
    outputs=["output"],
    kernel_shape=(2, 2),
    # pads=(1, 1, 1, 1),
)
```
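The Conv output size follows the standard convolution output-size formula. A sketch as a small hypothetical helper, `conv_output_size`, for one spatial axis; it shows why the 10x10 input with `kernel_shape=(2, 2)` yields a 9x9 output, and what the commented-out `pads=(1, 1, 1, 1)` would change. ONNX orders 2-D `pads` as `[x1_begin, x2_begin, x1_end, x2_end]`.

```python
def conv_output_size(in_size: int, kernel: int, stride: int = 1,
                     pad_begin: int = 0, pad_end: int = 0, dilation: int = 1) -> int:
    # Size of the kernel footprint once dilation is applied
    effective_kernel = dilation * (kernel - 1) + 1
    # Number of kernel positions that fit into the padded axis
    return (in_size + pad_begin + pad_end - effective_kernel) // stride + 1


print(conv_output_size(10, 2))                           # 9, as in the model above
print(conv_output_size(10, 2, pad_begin=1, pad_end=1))   # 11 with pads=(1, 1, 1, 1)
print(conv_output_size(10, 2, stride=2))                 # 5
```

Since `Conv1_W` has one output channel, the model output is therefore `(N, 1, 9, 9)`.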

```python
# Graph and model
graph = helper.make_graph(
    [mul, add, conv_node],
    'test',
    [a, x, b],
    [output],
    initializer=[conv1_W_initializer_tensor, conv1_B_initializer_tensor],
)
model = helper.make_model(graph)

# Validate, print, and save the model
onnx.checker.check_model(model)
print(model)
onnx.save(model, 'test.onnx')
```

Evaluate the saved model with onnxruntime:

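```python
import numpy as np
import onnxruntime

# Passing providers explicitly avoids an error on onnxruntime builds with several providers.
sess = onnxruntime.InferenceSession('test.onnx', providers=['CPUExecutionProvider'])

a = np.random.rand(1, 3, 10, 10).astype(np.float32)
b = np.random.rand(1, 3, 10, 10).astype(np.float32)
x = np.random.rand(10, 10).astype(np.float32)

# Feed the graph inputs by name and request only the 'output' tensor.
output = sess.run(['output'], {'a': a, 'b': b, 'weight': x})[0]
print(output)  # shape (1, 1, 9, 9)
```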
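The onnxruntime result can be cross-checked against a plain NumPy implementation of the same graph. The sketch below uses a hypothetical `reference_forward` helper and assumes the settings used above (stride 1, no padding, no dilation, one output channel). ONNX `Conv` computes a cross-correlation (the kernel is not flipped), which is what the sliding-window sum does.

```python
import numpy as np


def reference_forward(a, b, weight, conv_w, conv_b):
    """NumPy version of Mul -> Add -> Conv (stride 1, no padding, no dilation)."""
    x = a * weight + b                       # Mul and Add with NumPy broadcasting
    n, _, h, w = x.shape
    m, _, kh, kw = conv_w.shape
    oh, ow = h - kh + 1, w - kw + 1          # "valid" convolution output size
    out = np.empty((n, m, oh, ow), dtype=x.dtype)
    for i in range(oh):
        for j in range(ow):
            patch = x[:, :, i:i + kh, j:j + kw]  # (N, C, kh, kw) window
            # Sum over channels and the window for every output channel, then add bias.
            out[:, :, i, j] = np.einsum("nchw,mchw->nm", patch, conv_w) + conv_b
    return out


conv_w = np.ones((1, 3, 2, 2), dtype=np.float32)  # same values as Conv1_W
conv_b = np.ones((1,), dtype=np.float32)          # same values as Conv1_B

ones_in = np.ones((1, 3, 10, 10), dtype=np.float32)
ref = reference_forward(ones_in, ones_in, np.ones((10, 10), dtype=np.float32), conv_w, conv_b)
print(ref.shape)        # (1, 1, 9, 9)
print(ref[0, 0, 0, 0])  # 25.0: (1 * 1 + 1) summed over 12 window elements, plus bias 1
```

With the variables from the onnxruntime snippet, `np.testing.assert_allclose(output, reference_forward(a, b, x, conv_w, conv_b), rtol=1e-5)` is the intended comparison; a small tolerance allows for a different float32 summation order.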

For more involved graph editing, see NVIDIA's ONNX GraphSurgeon: https://github.com/NVIDIA/TensorRT/tree/master/tools/onnx-graphsurgeon