Preparation

Open ports

Ports to open on the master:

TCP: 6443, 2379, 2380, 10250, 10259, 10257, 30000-30010 (applications)

Ports to open on each node:

TCP: 10250, 30000-30010 (applications)

Add the ports on the master

firewall-cmd --permanent --add-port=6443/tcp

firewall-cmd --permanent --add-port=2379/tcp

firewall-cmd --permanent --add-port=2380/tcp

firewall-cmd --permanent --add-port=10250/tcp

firewall-cmd --permanent --add-port=10259/tcp

firewall-cmd --permanent --add-port=10257/tcp

firewall-cmd --permanent --add-port=30000/tcp

firewall-cmd --permanent --add-port=30001/tcp

firewall-cmd --permanent --add-port=30002/tcp

firewall-cmd --permanent --add-port=30003/tcp

firewall-cmd --permanent --add-port=30004/tcp

firewall-cmd --permanent --add-port=30005/tcp

firewall-cmd --permanent --add-port=30006/tcp

firewall-cmd --permanent --add-port=30007/tcp

firewall-cmd --permanent --add-port=30008/tcp

firewall-cmd --permanent --add-port=30009/tcp

firewall-cmd --permanent --add-port=30010/tcp

firewall-cmd --reload

Add the ports on each node

firewall-cmd --permanent --add-port=10250/tcp

firewall-cmd --permanent --add-port=30000/tcp

firewall-cmd --permanent --add-port=30001/tcp

firewall-cmd --permanent --add-port=30002/tcp

firewall-cmd --permanent --add-port=30003/tcp

firewall-cmd --permanent --add-port=30004/tcp

firewall-cmd --permanent --add-port=30005/tcp

firewall-cmd --permanent --add-port=30006/tcp

firewall-cmd --permanent --add-port=30007/tcp

firewall-cmd --permanent --add-port=30008/tcp

firewall-cmd --permanent --add-port=30009/tcp

firewall-cmd --permanent --add-port=30010/tcp

firewall-cmd --reload
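
The per-port commands above can be condensed, because firewall-cmd accepts a port range in --add-port. The following is a minimal sketch, not part of the original procedure: the helper names firewall_rules and apply_rules and the APPLY switch are hypothetical. By default it only prints the commands so they can be reviewed first.

```shell
# Ports taken from the lists above; the application NodePorts are given as one range.
MASTER_PORTS="6443 2379 2380 10250 10259 10257"
NODE_PORTS="10250"
APP_RANGE="30000-30010"

# Print the firewall-cmd calls for one role ("master" or "node").
firewall_rules() {
  role="$1"
  case "$role" in
    master) ports="$MASTER_PORTS" ;;
    node)   ports="$NODE_PORTS" ;;
    *) echo "usage: firewall_rules master|node" >&2; return 1 ;;
  esac
  for p in $ports $APP_RANGE; do
    echo "firewall-cmd --permanent --add-port=${p}/tcp"
  done
  # --permanent rules are only loaded into the running firewall on reload.
  echo "firewall-cmd --reload"
}

# Print the commands, or run them when APPLY=1 (hypothetical switch; needs root).
apply_rules() {
  firewall_rules "$1" | while read -r cmd; do
    if [ "${APPLY:-0}" = "1" ]; then
      $cmd
    else
      echo "[dry-run] $cmd"
    fi
  done
}

apply_rules node
```

Running the sketch with APPLY=1 and the role master on the master host would execute the same rules as the long list, with the NodePort range added in a single call.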

Hostname resolution

vim /etc/hosts

Add the following entries:

172.16.251.151 LTWWAPP01

172.16.251.152 LTWWAPP02

172.16.251.153 LTWWAPP03

172.16.251.154 LTWWAPP04

172.16.251.155 LTWWAPP05

172.16.251.156 LTWWAPP06
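
Since the same entries have to be added on every host, a small idempotent helper can replace the manual edit. This is only a sketch: add_host is a hypothetical function, and the file path is a parameter so it can be tried on a copy of /etc/hosts first.

```shell
# Append "ip name" to a hosts file unless the name is already present.
add_host() {
  ip="$1"; name="$2"; file="${3:-/etc/hosts}"
  if grep -Eq "(^|[[:space:]])${name}([[:space:]]|\$)" "$file"; then
    echo "skip: $name already in $file"
  else
    echo "$ip $name" >> "$file"
  fi
}

# Usage (as root), with the addresses from the list above:
#   i=151
#   for n in LTWWAPP01 LTWWAPP02 LTWWAPP03 LTWWAPP04 LTWWAPP05 LTWWAPP06; do
#     add_host "172.16.251.$i" "$n"; i=$((i + 1))
#   done
```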

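Time synchronization

Kubernetes requires the clocks of all cluster nodes to be closely synchronized.

Install: yum -y install chrony

Start time synchronization: systemctl start chronyd

Enable at boot: systemctl enable chronyd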

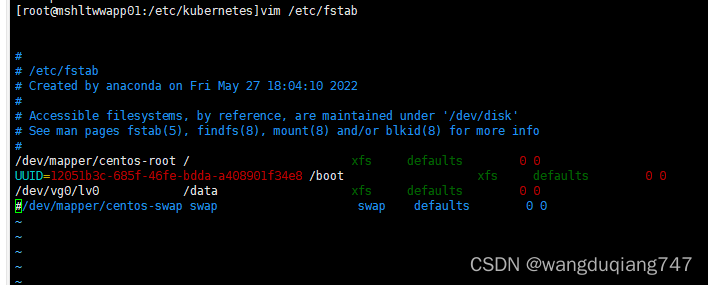

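Disable the swap partition

vim /etc/fstab and comment out the swap line. The change takes effect after a reboot; run swapoff -a to turn swap off immediately.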
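
The fstab edit can also be scripted. The sketch below assumes a hypothetical helper disable_swap_in_fstab; it takes the file path as an argument so it can be tested on a copy, and it keeps a .bak backup of the file it edits.

```shell
# Comment out every active entry that mentions a swap filesystem in an fstab-style file.
disable_swap_in_fstab() {
  fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix the others that contain " swap " with "# ".
  # -i.bak keeps the original as <file>.bak.
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/# \1/' "$fstab"
}

# Usage (as root):
#   disable_swap_in_fstab /etc/fstab && swapoff -a
```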

Adjust kernel parameters

# Load the br_netfilter module

$ modprobe br_netfilter

# Verify that the module is loaded

$ lsmod | grep br_netfilter

# Set the kernel parameters

$ cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

$ sysctl --system
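
To confirm that the values were actually applied, the settings can be read back from /proc/sys. This is a sketch under the assumption that the keys map directly to paths (dots become slashes); sysctl_path and check_sysctl are hypothetical helper names.

```shell
# Map a sysctl key to its /proc/sys path,
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
  echo "/proc/sys/$(echo "$1" | tr . /)"
}

# Compare the live value of one key with the expected value.
check_sysctl() {
  key="$1"; want="$2"
  path="$(sysctl_path "$key")"
  if [ ! -r "$path" ]; then
    echo "MISSING $key (is br_netfilter loaded?)"
    return 1
  fi
  got="$(cat "$path")"
  if [ "$got" = "$want" ]; then
    echo "OK      $key = $got"
  else
    echo "DIFF    $key = $got (want $want)"
    return 1
  fi
}

# Usage:
#   check_sysctl net.bridge.bridge-nf-call-iptables 1
#   check_sysctl net.bridge.bridge-nf-call-ip6tables 1
#   check_sysctl net.ipv4.ip_forward 1
```

A MISSING result for the net.bridge keys usually means the br_netfilter module is not loaded, since those entries only exist while it is.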

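Install and run containerd

Download and extract the binary package

$ wget https://github.com/containerd/containerd/releases/download/v1.6.8/cri-containerd-1.6.8-linux-amd64.tar.gz

# If the download fails on the server, skip the wget step: download the file elsewhere and upload it to the server

$ tar zxvf cri-containerd-1.6.8-linux-amd64.tar.gz

Directories after extraction:

etc: containerd service-management configuration files and the CNI network configuration files;

opt: containerd configuration files for GCE environments and the CNI plugins;

usr: the containerd runtime binaries, including runc

Copy the executables into a directory on $PATH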

$ cp usr/local/bin/* /usr/local/bin/

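Create the initial configuration file

$ mkdir -p /etc/containerd/

$ containerd config default > /etc/containerd/config.toml # generate the default configuration file

Edit the initial configuration file

Replace the image registry

Because k8s.gcr.io is hard to reach from mainland China, point sandbox_image at Aliyun's google_containers mirror.

$ sed -i "s#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

# Equivalent to:

$ vim /etc/containerd/config.toml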

[plugins."io.containerd.grpc.v1.cri"]

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"

Configure a registry mirror (in the lower half of the file; optional, skipping it does not seem to cause problems)

$ vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://cekcu3pt.mirror.aliyuncs.com"]

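Configure the cgroup driver (optional)

By default containerd and Kubernetes use the legacy cgroupfs driver to manage cgroups, but on systemd-based hosts the systemd driver is recommended, to comply with the cgroup "single-writer" rule.

$ sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

# Equivalent to: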

$ vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true
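
Because both sed edits fail silently when the pattern does not match, it can help to verify the result. A minimal sketch, assuming a hypothetical helper check_containerd_config that only greps the file and does not parse TOML:

```shell
# Check that the two edits above are present in a containerd config file.
check_containerd_config() {
  cfg="${1:-/etc/containerd/config.toml}"
  rc=0
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg" \
    || { echo "SystemdCgroup is not set to true"; rc=1; }
  grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=.*aliyuncs\.com/google_containers/pause' "$cfg" \
    || { echo "sandbox_image does not point at the Aliyun mirror"; rc=1; }
  return $rc
}

# Usage:
#   check_containerd_config /etc/containerd/config.toml && echo "config looks good"
```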

Create the service unit file

Move the service unit file to /usr/lib/systemd/system/

$ grep -v ^# etc/systemd/system/containerd.service

$ mv etc/systemd/system/containerd.service /usr/lib/systemd/system/containerd.service

Start the containerd service

$ systemctl daemon-reload

$ systemctl enable --now containerd.service

$ systemctl status containerd.service

$ containerd --version # show the version

# The version number should be printed

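Install runc

runc is the tool that actually runs the containers

Download and install

$ wget https://github.com/opencontainers/runc/releases/download/v1.1.3/runc.amd64

# If the download fails, download the file locally and upload it to the server

$ chmod +x runc.amd64

$ mv runc.amd64 /usr/bin/runc

# Answer yes when asked whether to overwrite

$ runc -version

# Normal version output means runc is working

Configure the crictl client

crictl replaces the docker commands, for example crictl ps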

$ mv etc/crictl.yaml /etc/

$ cat /etc/crictl.yaml

image-endpoint: unix:///var/run/containerd/containerd.sock

Install kubeadm, kubectl, and kubelet

Add the yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Add the NetEase (163) repository

Otherwise some packages cannot be downloaded

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.163.com/.help/CentOS7-Base-163.repo

Install

yum install -y readline-devel readline

yum install -y kubelet-1.26.2 kubeadm-1.26.2 kubectl-1.26.2

systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

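kubeadm: the tool used to initialize the Kubernetes cluster

kubelet: installed on every node in the cluster; it starts the Pods

kubectl: deploys and manages applications, inspects resources, and creates, deletes, and updates components

Set up Tab completion

This enables Tab completion for the commands, which helps newcomers who have not memorized them. Completion only works on hosts where this step has been performed.

# kubectl command completion:

$ kubectl completion bash > /etc/bash_completion.d/kubectl

# kubeadm command completion:

$ kubeadm completion bash > /etc/bash_completion.d/kubeadm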

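Initialize the master node (master only; the preceding steps are also required here)

Check the installation environment

# Check whether the host meets the cluster requirements, and fix the reported problems one by one

$ kubeadm init --dry-run

Create the configuration file

Generate the default configuration file

$ kubeadm config print init-defaults > kubeadm-init.yaml

Edit the default configuration file

The changed fields are marked

$ vim kubeadm-init.yaml

# Change it to the following: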

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.16.251.151  # changed

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///run/containerd/containerd.sock  # changed

  imagePullPolicy: IfNotPresent

  name: ltwwapp01  # changed

  taints: null

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers  # changed

kind: ClusterConfiguration

kubernetesVersion: 1.26.0

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

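Initialize

$ modprobe br_netfilter # may not be necessary

$ kubeadm init --config kubeadm-init.yaml

Environment configuration

Follow the hints printed after a successful init to set up basic access to the cluster.

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# export KUBECONFIG=/etc/kubernetes/admin.conf   (do not use this line: it is temporary and is lost after a reboot)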

# >https://blog.csdn.net/qq_26711103/article/details/126518479

Join the cluster (node only)

After a successful init, the output includes the join command, for example:

kubeadm join 172.16.251.151:6443 --token oybtw9.i25hjwufosdn7cef --discovery-token-ca-cert-hash sha256:5daecb79990c7acf1bbd0aae780dfe5cf57d3a5edba06fa10cd419369e8b0c2f
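
The token in a join command expires (the configuration above sets ttl: 24h0m0s), so a node added later needs a fresh one. The sketch below is an illustration, not part of the original steps: is_bootstrap_token is a hypothetical helper that checks the token format before a join is attempted, and the comments show the kubeadm command that prints a new join command on the master.

```shell
# A kubeadm bootstrap token has the form "<id>.<secret>":
# 6 and 16 characters, lowercase letters and digits only.
is_bootstrap_token() {
  echo "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Usage:
#   is_bootstrap_token "oybtw9.i25hjwufosdn7cef" && echo "token format looks valid"
#
# On the master, print a fresh join command once the old token has expired:
#   kubeadm token create --print-join-command
```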

Install Flannel (master only)

Without it, the nodes stay in the NotReady state

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Likewise, if the download fails, download the file locally and upload it, or:

cat > kube-flannel.yml <<EOF

file contents

EOF

# Then

kubectl apply -f kube-flannel.yml

After a short wait the nodes become Ready
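
Rather than re-running kubectl get nodes by hand, the wait can be scripted. A minimal sketch, assuming a hypothetical filter not_ready_nodes that reads the output of kubectl get nodes --no-headers on stdin; note that it also lists nodes whose status is compound, such as Ready,SchedulingDisabled.

```shell
# Print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NF && $2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
#
# Alternatively, block until every node reports Ready (or the timeout expires):
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```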

验证集群

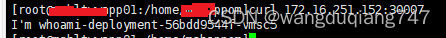

使用whoami来验证集群,他可以返回请求是被哪个容器处理的,而且

一来可以通过浏览器访问

二来可以curl 命令行里访问

方式一 (不好)

# 文件whoami.yaml 这个镜像是用的8000端口对外提供服务

apiVersion: apps/v1

kind: Deployment

metadata:

  name: whoami-deployment

  labels:

    app: whoami

spec:

  replicas: 3

  selector:

    matchLabels:

      app: whoami

  template:

    metadata:

      labels:

        app: whoami

    spec:

      containers:

      - name: whoami

        image: jwilder/whoami

        ports:

        - containerPort: 8000

Deploy whoami

kubectl apply -f whoami.yaml

# whoamiservice.yaml

apiVersion: v1

kind: Service

metadata:

  name: whoami-service

spec:

  type: NodePort

  selector:

    app: whoami

  ports:

    # By default, for convenience, `targetPort` is set to the same value as the `port` field.

    - port: 8000

      targetPort: 8000

      # Optional field

      # By default, for convenience, the Kubernetes control plane allocates a port from a range (default: 30000-32767)

      nodePort: 30007

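Create the whoami service

kubectl apply -f whoamiservice.yaml

The result is what the curl and browser screenshot at the start of this section shows: the service answers on port 30007. At this point it is merely reachable. You have to address a specific node's IP, yet nodes in a cluster can be added and removed at any time, so this method is not good.

https://kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/

More precisely, it is not bad; it is simply the state before load balancing. Putting a load balancer (or request router) in front of it is enough.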
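
To see the three replicas sharing the traffic, repeated requests can be tallied by response. This is a sketch under the assumption that each response is a single line naming the serving container; tally_backends is a hypothetical helper, and the node IP in the usage comment is the master address used earlier.

```shell
# Count identical non-empty lines read from stdin, most frequent first.
tally_backends() {
  awk 'NF { count[$0]++ } END { for (line in count) print count[line], line }' | sort -rn
}

# Usage, assuming the Service above is reachable on node 172.16.251.151:
#   for i in $(seq 1 20); do curl -s http://172.16.251.151:30007; echo; done | tally_backends
```

With three replicas, the output would normally show up to three distinct lines, one per Pod that answered.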

Method 2 (not needed):

NodePort does let external clients reach the Pods, but it requires a node IP and occupies a port on every physical host

https://www.likecs.com/show-205306520.html

https://kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/

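Install ingress-nginx (not needed)

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.7.0/deploy/static/provider/cloud/deploy.yaml

https://kubernetes.github.io/ingress-nginx/deploy/

Why the two steps above are not needed: the production environment already has domain names and DNS resolution configured, so there is no need to set up another layer ourselves. Exposing the NodePort is enough.

Deploy and use the dashboard

Full text of dashboard.yaml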

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

  name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30001  # changed

  selector:

    k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-certs

  namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-csrf

  namespace: kubernetes-dashboard

type: Opaque

data:

  csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-key-holder

  namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard-settings

  namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

rules:

  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.

  - apiGroups: [""]

    resources: ["secrets"]

    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

    verbs: ["get", "update", "delete"]

  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

  - apiGroups: [""]

    resources: ["configmaps"]

    resourceNames: ["kubernetes-dashboard-settings"]

    verbs: ["get", "update"]

  # Allow Dashboard to get metrics.

  - apiGroups: [""]

    resources: ["services"]

    resourceNames: ["heapster", "dashboard-metrics-scraper"]

    verbs: ["proxy"]

  - apiGroups: [""]

    resources: ["services/proxy"]

    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

    verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

rules:

  # Allow Metrics Scraper to get metrics from the Metrics server

  - apiGroups: ["metrics.k8s.io"]

    resources: ["pods", "nodes"]

    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: Role

  name: kubernetes-dashboard

subjects:

  - kind: ServiceAccount

    name: kubernetes-dashboard

    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: kubernetes-dashboard

subjects:

  - kind: ServiceAccount

    name: kubernetes-dashboard

    namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  replicas: 1

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      k8s-app: kubernetes-dashboard

  template:

    metadata:

      labels:

        k8s-app: kubernetes-dashboard

    spec:

      nodeName: ltwwapp01  # changed

      securityContext:

        seccompProfile:

          type: RuntimeDefault

      containers:

        - name: kubernetes-dashboard

          image: kubernetesui/dashboard:v2.7.0

          imagePullPolicy: Always

          ports:

            - containerPort: 8443

              protocol: TCP

          args:

            - --auto-generate-certificates

            - --namespace=kubernetes-dashboard

            # Uncomment the following line to manually specify Kubernetes API server Host

            # If not specified, Dashboard will attempt to auto discover the API server and connect

            # to it. Uncomment only if the default does not work.

            # - --apiserver-host=http://my-address:port

          volumeMounts:

            - name: kubernetes-dashboard-certs

              mountPath: /certs

              # Create on-disk volume to store exec logs

            - mountPath: /tmp

              name: tmp-volume

          livenessProbe:

            httpGet:

              scheme: HTTPS

              path: /

              port: 8443

            initialDelaySeconds: 30

            timeoutSeconds: 30

          securityContext:

            allowPrivilegeEscalation: false

            readOnlyRootFilesystem: true

            runAsUser: 1001

            runAsGroup: 2001

      volumes:

        - name: kubernetes-dashboard-certs

          secret:

            secretName: kubernetes-dashboard-certs

        - name: tmp-volume

          emptyDir: {}

      serviceAccountName: kubernetes-dashboard

      nodeSelector:

        "kubernetes.io/os": linux

      # Comment the following tolerations if Dashboard must not be deployed on master

      tolerations:

        - key: node-role.kubernetes.io/master

          effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: dashboard-metrics-scraper

  name: dashboard-metrics-scraper

  namespace: kubernetes-dashboard

spec:

  ports:

    - port: 8000

      targetPort: 8000

  selector:

    k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

  labels:

    k8s-app: dashboard-metrics-scraper

  name: dashboard-metrics-scraper

  namespace: kubernetes-dashboard

spec:

  replicas: 1

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      k8s-app: dashboard-metrics-scraper

  template:

    metadata:

      labels:

        k8s-app: dashboard-metrics-scraper

    spec:

      nodeName: ltwwapp01  # changed

      securityContext:

        seccompProfile:

          type: RuntimeDefault

      containers:

        - name: dashboard-metrics-scraper

          image: kubernetesui/metrics-scraper:v1.0.8

          ports:

            - containerPort: 8000

              protocol: TCP

          livenessProbe:

            httpGet:

              scheme: HTTP

              path: /

              port: 8000

            initialDelaySeconds: 30

            timeoutSeconds: 30

          volumeMounts:

            - mountPath: /tmp

              name: tmp-volume

          securityContext:

            allowPrivilegeEscalation: false

            readOnlyRootFilesystem: true

            runAsUser: 1001

            runAsGroup: 2001

      serviceAccountName: kubernetes-dashboard

      nodeSelector:

        "kubernetes.io/os": linux

      # Comment the following tolerations if Dashboard must not be deployed on master

      tolerations:

        - key: node-role.kubernetes.io/master

          effect: NoSchedule

      volumes:

        - name: tmp-volume

          emptyDir: {}

然后执行

kubectl apply -f dashboard.yaml

访问

https://your_ip:30001 (注意不要直接访问your_ip:30001)

提示输入token

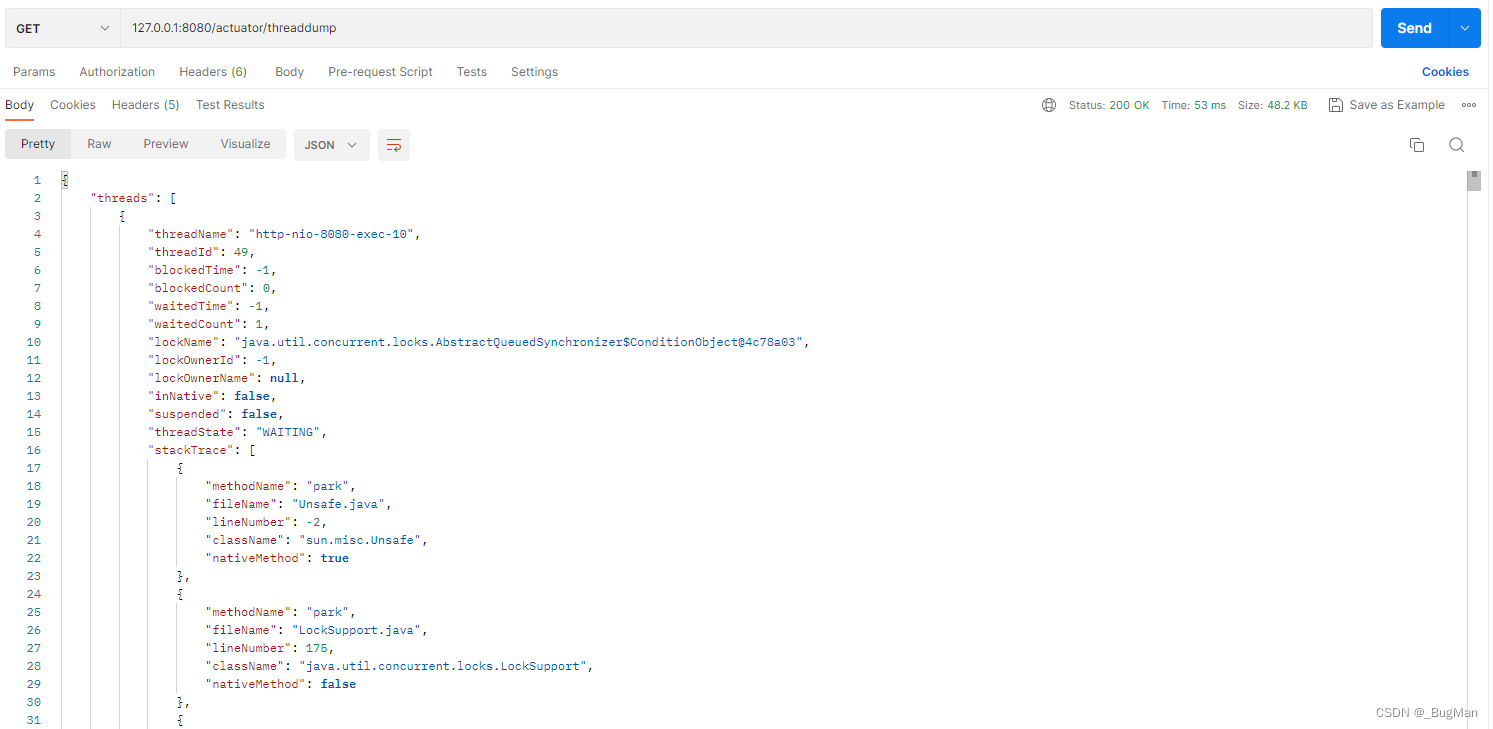

生成token

kubectl -n kubernetes-dashboard create token kubernetes-dashboard

kubectl create clusterrolebinding kubernetes-dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard

>https://blog.csdn.net/qq_41619571/article/details/127217339

Common commands

Show the details of a service:

kubectl describe service whoami-service

Delete a service

kubectl delete service whoami-service

Work in a specific namespace

kubectl get pods -n kube-system

kubectl get all -n ingress-nginx

kubectl delete namespace jenkins

References

https://www.cnblogs.com/ergwang/p/17205117.html

https://blog.csdn.net/qq_35644307/article/details/126120772

https://blog.csdn.net/weixin_46476452/article/details/127670046

https://blog.csdn.net/github_35735591/article/details/125533342