Contents

I. Preface

II. Paper Review

1. Inception Network Architecture

2. Advantages of the Inception Architecture

3. Improvements in InceptionV3

III. Model Construction

1. Inception-A

2. Inception-B

3. Inception-C

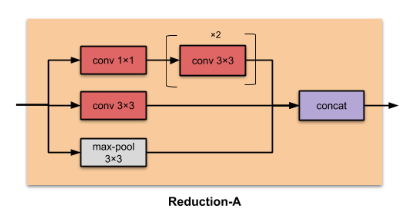

4. Reduction-A

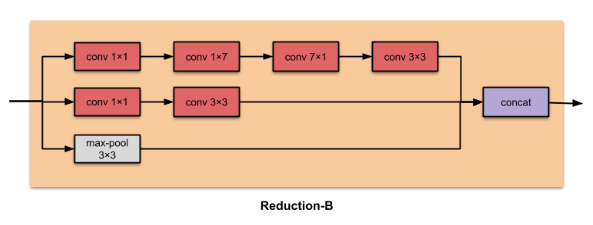

5. Reduction-B

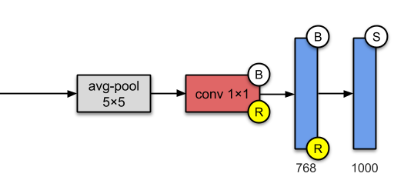

6. Auxiliary Branch

7. InceptionV3 Implementation

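I. Preface

- 🍨 This post is a learning log from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊

● Difficulty: Solid fundamentals ⭐⭐

● Language: Python3, PyTorch

● Time: April 8 to April 14

🍺 Requirements:

1. Understand how InceptionV3 improves on InceptionV1

2. Use Inception to complete a weather-recognition task

II. Paper Review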

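Paper: Rethinking the Inception Architecture for Computer Vision

"Rethinking the Inception Architecture for Computer Vision" is a paper published in 2016 by a research team at Google. It revisits the design of the Inception architecture for computer vision tasks and introduces the changes that became InceptionV3. This section summarizes its main points.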

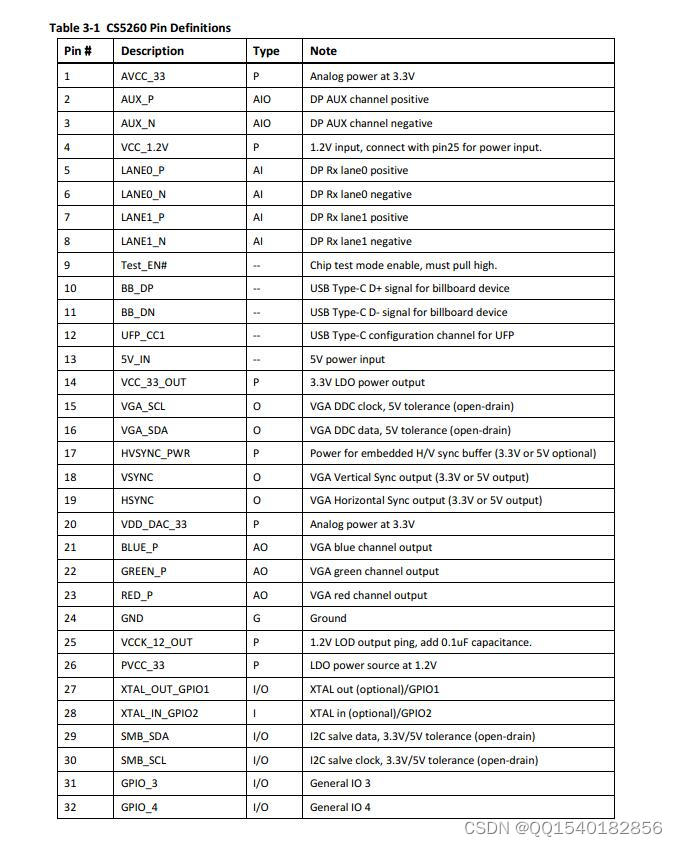

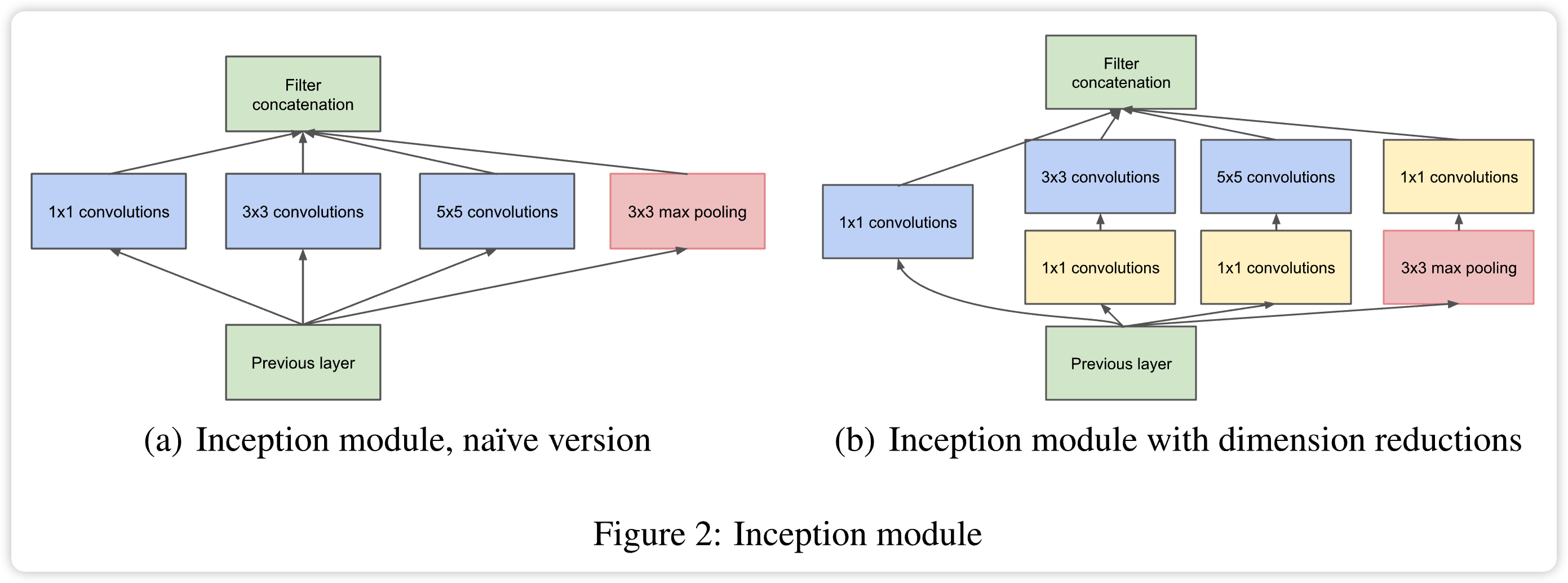

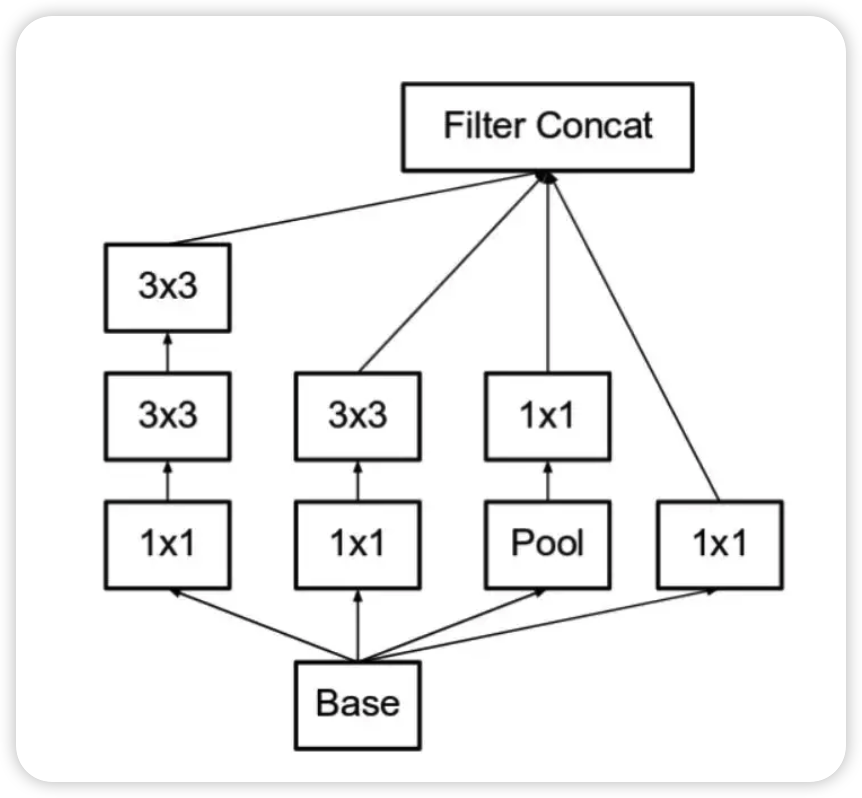

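1. Inception Network Architecture

Inception is a network structure that uses convolution kernels of different sizes to capture spatial information at several scales at once. Its defining feature is a multi-branch module: several convolutions are applied to the same input in parallel and their outputs are combined.

The Inception-v3 network is built mainly from the following kinds of layers:

- Ordinary convolutional layers.

- Pooling layers. Inception-v3 uses max pooling in the stem and in the reduction modules, and average pooling inside the Inception modules and before the classifier.

- Inception modules. These are the core and most distinctive part of Inception-v3. Each module uses several kernel sizes to capture features at different scales.

- Bottleneck layers, which in Inception-v3 are 1x1 convolutions. Their main job is dimensionality reduction: they cut the number of channels before the more expensive convolutions, which lightens the computational load.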
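To make the effect of a 1x1 bottleneck concrete, the following is a minimal sketch that counts learnable parameters for a 5x5 convolution applied directly versus a 1x1 reduction followed by the same 5x5 convolution. The channel sizes (192, 16, 32) and the helper name `conv_params` are illustrative assumptions, not values from the paper.

```python
def conv_params(in_ch, out_ch, kh, kw, bias=True):
    """Learnable parameters of a 2D convolution: weights plus optional biases."""
    return in_ch * out_ch * kh * kw + (out_ch if bias else 0)


# Hypothetical channel sizes, chosen only for illustration.
in_ch, mid_ch, out_ch = 192, 16, 32

# 5x5 convolution applied directly to the 192-channel input.
direct = conv_params(in_ch, out_ch, 5, 5)

# 1x1 bottleneck down to 16 channels, then the 5x5 convolution.
bottleneck = conv_params(in_ch, mid_ch, 1, 1) + conv_params(mid_ch, out_ch, 5, 5)

print(direct, bottleneck)  # 153632 15920
```

With these assumed sizes the bottleneck version needs roughly a tenth of the parameters, because the expensive 5x5 kernel only sees 16 input channels.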


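2. Advantages of the Inception Architecture

- Greater expressive power: for the same computational budget, an Inception network can reach better classification accuracy.

- Parallel computation: the branches of a module are independent of one another, so in principle they can be computed in parallel, for example spread across several GPUs.

- Flexible allocation of compute: the cost of each branch can be tuned through its channel counts, so compute can be balanced across branches for the best performance.

- Dimensionality reduction: bottleneck layers cut the amount of computation and improve efficiency.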

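3. Improvements in InceptionV3

InceptionV3 refines and optimizes the Inception design that began with V1. Compared with InceptionV1, the main changes are:

- A deeper network: InceptionV3 stacks more Inception modules and adopts newer techniques such as Batch Normalization and an updated optimizer setup, which improves accuracy.

- Smaller kernels: each 5x5 convolution is replaced by two stacked 3x3 convolutions, which cover the same 5x5 receptive field with fewer parameters.

- Factorized convolutions: a large n x n kernel is split into a 1 x n kernel followed by an n x 1 kernel (for example 1x7 then 7x1), which reduces both parameters and computation.

- A note on atrous convolution: atrous (dilated) convolution is sometimes listed as an InceptionV3 change, but the paper does not use it, and the code in this post sets dilation=1 everywhere, which is an ordinary convolution.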
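The savings from smaller and factorized kernels can be checked with a few lines of arithmetic. The sketch below counts kernel weights per input/output channel pair and the receptive field of a stack of stride-1 convolutions; the helper names `kernel_weights` and `receptive_field` are made up for this illustration.

```python
def kernel_weights(shapes):
    """Total kernel weights per (input channel, output channel) pair for a stack of kernels."""
    return sum(kh * kw for kh, kw in shapes)


def receptive_field(shapes):
    """Receptive field (height, width) of stacked stride-1, dilation-1 convolutions."""
    height = 1 + sum(kh - 1 for kh, _ in shapes)
    width = 1 + sum(kw - 1 for _, kw in shapes)
    return height, width


five = kernel_weights([(5, 5)])                # one 5x5 kernel
two_threes = kernel_weights([(3, 3), (3, 3)])  # two stacked 3x3 kernels
seven = kernel_weights([(7, 7)])               # one 7x7 kernel
asym = kernel_weights([(1, 7), (7, 1)])        # 1x7 followed by 7x1

print(five, two_threes, receptive_field([(3, 3), (3, 3)]))  # 25 18 (5, 5)
print(seven, asym, receptive_field([(1, 7), (7, 1)]))       # 49 14 (7, 7)
```

Two 3x3 kernels use 18 weights instead of 25 (28% fewer) while still covering a 5x5 window, and the 1x7 plus 7x1 pair uses 14 weights instead of 49 while covering 7x7. The count ignores biases and assumes the same channel width before and after each kernel.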

III. Model Construction

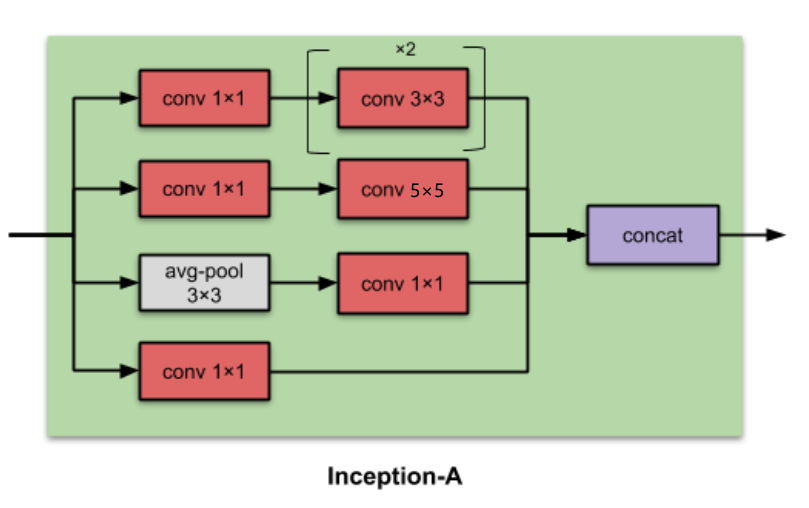

1. Inception-A

PyTorch implementation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class InceptionA(nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        # Branch 1: a single 1x1 convolution
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        # Branch 2: 1x1 reduction followed by a 5x5 convolution
        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2)
        # Branch 3: 1x1 reduction followed by two 3x3 convolutions
        self.branch3x3dbl_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3dbl_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3dbl_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)
        # Branch 4: average pooling (in forward) followed by a 1x1 convolution
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        # Concatenate the four branches along the channel dimension
        outputs = [branch1x1, branch5x5, branch3x3dbl, branch_pool]
        return torch.cat(outputs, dim=1)
```

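The InceptionA module has four branches, each with its own kernel sizes and parameters. branch5x5_1 and branch3x3dbl_1 are 1x1 convolutions that reduce the input to 16 channels before the larger 5x5 and 3x3 kernels are applied, which cuts parameters and computation. branch_pool applies 3x3 average pooling (stride 1, padding 1, so the spatial size is unchanged) followed by a 1x1 convolution. Finally, the four branch outputs are concatenated along the channel dimension to form the InceptionA output.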
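Because the branches are concatenated on the channel axis, the output width of the module is just the sum of the branch widths. The short sketch below does that bookkeeping with the channel counts read off the InceptionA definition in this post; the helper `concat_channels` is a name invented for the example.

```python
# Output channels of each branch, read off the InceptionA definition in this post.
branch_channels = {
    "branch1x1": 16,
    "branch5x5": 24,
    "branch3x3dbl": 24,
    "branch_pool": 24,
}


def concat_channels(branches):
    """Channels after concatenation along dim=1: the branch channel counts add up."""
    return sum(branches.values())


out_channels = concat_channels(branch_channels)
print(out_channels)  # 88
```

The result does not depend on `in_channels`, so any layer placed after this particular InceptionA has to accept 88 input channels.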

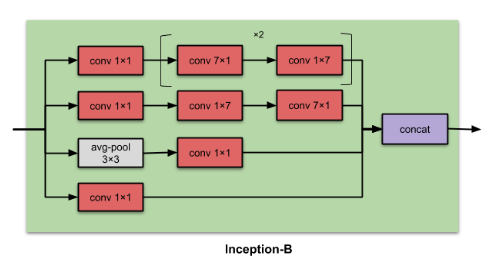

2. Inception-B

PyTorch implementation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class InceptionB(nn.Module):
    def __init__(self, in_channels):
        super(InceptionB, self).__init__()
        # Branch 1: a single 1x1 convolution
        self.branch1x1 = nn.Conv2d(in_channels, 192, kernel_size=1)
        # Branch 2: 1x1 reduction, then a 7x7 convolution factorized into 1x7 and 7x1
        self.branch7x7_1 = nn.Conv2d(in_channels, 192, kernel_size=1)
        self.branch7x7_2 = nn.Conv2d(192, 192, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7_3 = nn.Conv2d(192, 192, kernel_size=(7, 1), padding=(3, 0))
        # Branch 3: 1x1 reduction, then two factorized 7x7 convolutions
        self.branch7x7dbl_1 = nn.Conv2d(in_channels, 192, kernel_size=1)
        self.branch7x7dbl_2 = nn.Conv2d(192, 192, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_3 = nn.Conv2d(192, 192, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7dbl_4 = nn.Conv2d(192, 192, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_5 = nn.Conv2d(192, 192, kernel_size=(1, 7), padding=(0, 3))
        # Branch 4: average pooling (in forward) followed by a 1x1 convolution
        self.branch_pool = nn.Conv2d(in_channels, 192, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch7x7 = self.branch7x7_1(x)
        branch7x7 = self.branch7x7_2(branch7x7)
        branch7x7 = self.branch7x7_3(branch7x7)

        branch7x7dbl = self.branch7x7dbl_1(x)
        branch7x7dbl = self.branch7x7dbl_2(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_3(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_4(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_5(branch7x7dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        # Concatenate the four branches along the channel dimension
        outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]
        return torch.cat(outputs, dim=1)
```

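The InceptionB module has four branches. branch7x7_2 and branch7x7_3 factorize a 7x7 convolution into a 1x7 convolution followed by a 7x1 convolution, and branch7x7dbl_2 through branch7x7dbl_5 stack two such factorized pairs, which increases expressive power at a lower cost than full 7x7 kernels. The paddings (0, 3) and (3, 0) keep the spatial size unchanged. branch_pool again applies average pooling followed by a 1x1 convolution. Finally, the four branch outputs are concatenated along the channel dimension to form the InceptionB output.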
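The reason the asymmetric kernels preserve the spatial size can be verified with the standard convolution output-size formula applied per axis. This is a minimal sketch; the 17x17 input and the helper `conv_out_2d` are assumptions made for the illustration.

```python
def conv_out_2d(size, kernel, padding, stride=(1, 1)):
    """Output (height, width) of a 2D convolution with dilation 1."""
    return tuple(
        (s + 2 * p - k) // st + 1
        for s, k, p, st in zip(size, kernel, padding, stride)
    )


size = (17, 17)  # hypothetical feature-map size

after_1x7 = conv_out_2d(size, kernel=(1, 7), padding=(0, 3))
after_7x1 = conv_out_2d(after_1x7, kernel=(7, 1), padding=(3, 0))
print(after_1x7, after_7x1)  # (17, 17) (17, 17)

# Without padding, the 1x7 kernel would shrink the width by 6.
unpadded = conv_out_2d(size, kernel=(1, 7), padding=(0, 0))
print(unpadded)  # (17, 11)
```

Padding only the axis along which the kernel extends (3 on each side for a length-7 kernel) is what lets all four branches end up with the same height and width, so they can be concatenated.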

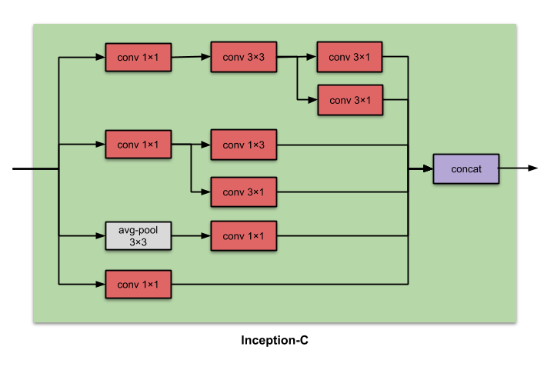

3. Inception-C

PyTorch implementation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class InceptionC(nn.Module):
    def __init__(self, in_channels):
        super(InceptionC, self).__init__()
        # Branch 1: two 1x1 convolutions followed by a 3x3 convolution
        self.branch3x3_1 = nn.Conv2d(in_channels, 64, kernel_size=1)
        self.branch3x3_2a = nn.Conv2d(64, 64, kernel_size=1, padding=0, dilation=1)
        self.branch3x3_2b = nn.Conv2d(64, 96, kernel_size=3, padding=1, dilation=1)
        # Branch 2: 1x1 reduction followed by two 3x3 convolutions
        self.branch3x3dbl_1 = nn.Conv2d(in_channels, 64, kernel_size=1)
        self.branch3x3dbl_2 = nn.Conv2d(64, 96, kernel_size=3, padding=1, dilation=1)
        self.branch3x3dbl_3 = nn.Conv2d(96, 96, kernel_size=3, padding=1, dilation=1)
        # Branch 3: average pooling (in forward) followed by a 1x1 convolution
        self.branch_pool = nn.Conv2d(in_channels, 96, kernel_size=1)

    def forward(self, x):
        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2a(branch3x3)
        branch3x3 = self.branch3x3_2b(branch3x3)

        branch3x3dbl = self.branch3x3dbl_1(x)
        branch3x3dbl = self.branch3x3dbl_2(branch3x3dbl)
        branch3x3dbl = self.branch3x3dbl_3(branch3x3dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        # Concatenate the three branches along the channel dimension
        outputs = [branch3x3, branch3x3dbl, branch_pool]
        return torch.cat(outputs, dim=1)
```

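The InceptionC module has three branches. branch3x3_2b and branch3x3dbl_2 use 3x3 kernels with padding=1 and dilation=1. dilation=1 is an ordinary (non-dilated) convolution, so the receptive field grows only through stacking layers, while padding=1 keeps the spatial size unchanged. Both convolutional branches apply several convolutions in sequence, which increases expressive power. branch_pool again applies average pooling followed by a 1x1 convolution. Finally, the three branch outputs are concatenated along the channel dimension to form the InceptionC output.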
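What the `dilation` argument does to a kernel's footprint follows from a one-line formula: a kernel of size k with dilation d spans d * (k - 1) + 1 input positions. The sketch below tabulates this; the helper names are invented for the example.

```python
def effective_kernel(kernel, dilation=1):
    """Number of input positions spanned by a dilated kernel along one axis."""
    return dilation * (kernel - 1) + 1


def same_padding(kernel, dilation=1):
    """Padding that keeps the size unchanged for stride 1 and an odd kernel."""
    return dilation * (kernel - 1) // 2


for d in (1, 2, 4):
    print(d, effective_kernel(3, d), same_padding(3, d))
# 1 3 1
# 2 5 2
# 4 9 4
```

With dilation=1 a 3x3 kernel spans exactly 3 positions and padding=1 preserves the size, which is the setting used in the InceptionC code; larger dilations would widen the footprint without adding weights.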

4. Reduction-A

PyTorch implementation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ReductionA(nn.Module):
    def __init__(self, in_channels):
        super(ReductionA, self).__init__()
        # Branch 1: a 3x3 convolution with stride 2 (downsamples)
        self.branch3x3_1 = nn.Conv2d(in_channels, 384, kernel_size=3, stride=2)
        # Branch 2: 1x1 reduction, 3x3 convolution, then 3x3 convolution with stride 2
        self.branch3x3_2a = nn.Conv2d(in_channels, 192, kernel_size=1)
        self.branch3x3_2b = nn.Conv2d(192, 224, kernel_size=3, padding=1)
        self.branch3x3_2c = nn.Conv2d(224, 256, kernel_size=3, stride=2)
        # Branch 3: 3x3 max pooling with stride 2 (keeps in_channels channels)
        self.branch_pool = nn.MaxPool2d(kernel_size=3, stride=2)

    def forward(self, x):
        branch3x3 = self.branch3x3_1(x)

        branch3x3dbl = self.branch3x3_2a(x)
        branch3x3dbl = self.branch3x3_2b(branch3x3dbl)
        branch3x3dbl = self.branch3x3_2c(branch3x3dbl)

        branch_pool = self.branch_pool(x)

        # Concatenate the three branches along the channel dimension
        outputs = [branch3x3, branch3x3dbl, branch_pool]
        return torch.cat(outputs, dim=1)
```

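The ReductionA module has three branches. branch3x3_1 is a 3x3 convolution with stride=2, which shrinks the spatial resolution while extracting features. branch3x3_2a, branch3x3_2b and branch3x3_2c stack a 1x1 convolution and two 3x3 convolutions, the last of which also uses stride=2 to downsample and reduce computation in later layers. branch_pool uses 3x3 max pooling with stride 2 to downsample. Finally, the three branch outputs are concatenated along the channel dimension to form the ReductionA output.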
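As a quick check that the three branches line up, the sketch below traces the spatial size and channel count through ReductionA. The 35x35 input with 288 channels is an assumed example, and `out_size` is a helper written for this illustration.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size along one axis for a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


in_size, in_channels = 35, 288  # hypothetical input: N x 288 x 35 x 35

# Branch 1: 3x3 convolution, stride 2, no padding.
b1 = out_size(in_size, 3, stride=2)
# Branch 2: 1x1, then 3x3 with padding 1, then 3x3 with stride 2.
b2 = out_size(out_size(out_size(in_size, 1), 3, padding=1), 3, stride=2)
# Branch 3: 3x3 max pooling, stride 2.
b3 = out_size(in_size, 3, stride=2)

out_channels = 384 + 256 + in_channels  # max pooling keeps the input channels
print(b1, b2, b3, out_channels)  # 17 17 17 928
```

All three branches produce 17x17 maps, so concatenation is valid, and the pooling branch is the reason the output channel count depends on `in_channels`.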

5. Reduction-B

6. Auxiliary Branch

```python
import torch.nn as nn
import torch.nn.functional as F


class InceptionAux(nn.Module):
    def __init__(self, in_channels, num_classes):
        super(InceptionAux, self).__init__()
        self.conv = nn.Conv2d(in_channels, 128, kernel_size=1)
        self.relu = nn.ReLU(inplace=True)
        # A 17x17 input pools to 5x5, so the flattened size is 128 * 5 * 5 = 3200.
        self.fc1 = nn.Linear(128 * 5 * 5, 1024)
        self.dropout = nn.Dropout(p=0.7, inplace=False)
        self.fc2 = nn.Linear(1024, num_classes)

    def forward(self, x):
        # Input: N x in_channels x 17 x 17
        x = F.avg_pool2d(x, kernel_size=5, stride=3)
        # N x in_channels x 5 x 5
        x = self.conv(x)
        # N x 128 x 5 x 5
        x = self.relu(x)
        # Flatten to N x 3200
        x = x.view(x.size(0), -1)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc2(x)
        # N x num_classes
        return x
```
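The number of input features of the first fully connected layer in the auxiliary head has to match the flattened size of the pooled feature map. A small sketch of that calculation follows, assuming the 5x5 average pooling with stride 3 and the 128-channel 1x1 convolution from the code above; the helper names are made up for the example.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size along one axis for a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def aux_flat_features(input_size, channels=128):
    """Flattened feature count after 5x5/stride-3 average pooling and a 1x1 convolution."""
    pooled = out_size(input_size, kernel=5, stride=3)
    return channels * pooled * pooled


flat_17 = aux_flat_features(17)  # 17x17 input pools to 5x5
flat_14 = aux_flat_features(14)  # 14x14 input pools to 4x4
print(flat_17, flat_14)  # 3200 2048
```

For the 17x17 input stated in the code comments the flattened size is 3200; for comparison, a 14x14 input would give 2048. Whatever input size is used, `fc1` needs `in_features` equal to the corresponding value.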

7. InceptionV3 Implementation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# ----------------------- Build the GoogLeNet network -----------------------
# The class is named GoogLeNet, but the layer layout below is InceptionV3.
# The constructor calls use torchvision-style block signatures
# (pool_features=..., channels_7x7=...), and BasicConv2d, InceptionD and
# InceptionE are not defined in this post, so matching block classes must be
# available (or passed via inception_blocks) before the class can be instantiated.
class GoogLeNet(nn.Module):
    def __init__(self, num_classes=1000, aux_logits=True, transform_input=False,
                 inception_blocks=None):
        super(GoogLeNet, self).__init__()
        if inception_blocks is None:
            inception_blocks = [
                BasicConv2d, InceptionA, InceptionB, InceptionC,
                InceptionD, InceptionE, InceptionAux
            ]
        assert len(inception_blocks) == 7
        conv_block = inception_blocks[0]
        inception_a = inception_blocks[1]
        inception_b = inception_blocks[2]
        inception_c = inception_blocks[3]
        inception_d = inception_blocks[4]
        inception_e = inception_blocks[5]
        inception_aux = inception_blocks[6]

        self.aux_logits = aux_logits
        self.transform_input = transform_input
        self.Conv2d_1a_3x3 = conv_block(3, 32, kernel_size=3, stride=2)
        self.Conv2d_2a_3x3 = conv_block(32, 32, kernel_size=3)
        self.Conv2d_2b_3x3 = conv_block(32, 64, kernel_size=3, padding=1)
        self.Conv2d_3b_1x1 = conv_block(64, 80, kernel_size=1)
        self.Conv2d_4a_3x3 = conv_block(80, 192, kernel_size=3)
        self.Mixed_5b = inception_a(192, pool_features=32)
        self.Mixed_5c = inception_a(256, pool_features=64)
        self.Mixed_5d = inception_a(288, pool_features=64)
        self.Mixed_6a = inception_b(288)
        self.Mixed_6b = inception_c(768, channels_7x7=128)
        self.Mixed_6c = inception_c(768, channels_7x7=160)
        self.Mixed_6d = inception_c(768, channels_7x7=160)
        self.Mixed_6e = inception_c(768, channels_7x7=192)
        if aux_logits:
            self.AuxLogits = inception_aux(768, num_classes)
        self.Mixed_7a = inception_d(768)
        self.Mixed_7b = inception_e(1280)
        self.Mixed_7c = inception_e(2048)
        self.fc = nn.Linear(2048, num_classes)

    # The input is (299, 299, 3). It first passes through the stem:
    # 5 convolutions and 2 max-pooling layers.
    def _forward(self, x):
        # N x 3 x 299 x 299
        x = self.Conv2d_1a_3x3(x)
        # N x 32 x 149 x 149
        x = self.Conv2d_2a_3x3(x)
        # N x 32 x 147 x 147
        x = self.Conv2d_2b_3x3(x)
        # N x 64 x 147 x 147
        x = F.max_pool2d(x, kernel_size=3, stride=2)
        # N x 64 x 73 x 73
        x = self.Conv2d_3b_1x1(x)
        # N x 80 x 73 x 73
        x = self.Conv2d_4a_3x3(x)
        # N x 192 x 71 x 71
        x = F.max_pool2d(x, kernel_size=3, stride=2)

        # Next come 3 InceptionA blocks, 1 InceptionB, 4 InceptionC,
        # 1 InceptionD and 2 InceptionE. The auxiliary classifier AuxLogits
        # takes the output of the last InceptionC as its input.
        # 35 x 35 x 192
        x = self.Mixed_5b(x)  # InceptionA(192, pool_features=32)
        # 35 x 35 x 256
        x = self.Mixed_5c(x)  # InceptionA(256, pool_features=64)
        # 35 x 35 x 288
        x = self.Mixed_5d(x)  # InceptionA(288, pool_features=64)
        # 35 x 35 x 288
        x = self.Mixed_6a(x)  # InceptionB(288)
        # 17 x 17 x 768
        x = self.Mixed_6b(x)  # InceptionC(768, channels_7x7=128)
        # 17 x 17 x 768
        x = self.Mixed_6c(x)  # InceptionC(768, channels_7x7=160)
        # 17 x 17 x 768
        x = self.Mixed_6d(x)  # InceptionC(768, channels_7x7=160)
        # 17 x 17 x 768
        x = self.Mixed_6e(x)  # InceptionC(768, channels_7x7=192)
        # 17 x 17 x 768
        # aux stays None in eval mode or when aux_logits is disabled
        aux = None
        if self.training and self.aux_logits:
            aux = self.AuxLogits(x)  # InceptionAux(768, num_classes)
        # 17 x 17 x 768
        x = self.Mixed_7a(x)  # InceptionD(768)
        # 8 x 8 x 1280
        x = self.Mixed_7b(x)  # InceptionE(1280)
        # 8 x 8 x 2048
        x = self.Mixed_7c(x)  # InceptionE(2048)

        # Classification head:
        # average pooling + dropout + flatten + fully connected layer
        x = F.adaptive_avg_pool2d(x, (1, 1))
        # N x 2048 x 1 x 1
        x = F.dropout(x, training=self.training)
        # N x 2048 x 1 x 1
        # Flatten the feature maps into one 1D vector per sample before the fully connected layer
        x = torch.flatten(x, 1)
        # N x 2048
        x = self.fc(x)
        # N x 1000 (num_classes)
        return x, aux

    def forward(self, x):
        x, aux = self._forward(x)
        return x, aux
```
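The spatial sizes quoted in the comments of the stem can be reproduced with the usual output-size formula. The sketch below walks a 299x299 input through the stem layers and the two reduction stages, assuming that `BasicConv2d` (not defined in this post) forwards `kernel_size`, `stride` and `padding` unchanged to a convolution and that the reduction blocks downsample with 3x3, stride-2, unpadded operations.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size along one axis for a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer name, kernel, stride, padding), copied from the stem of the model above.
stem = [
    ("Conv2d_1a_3x3", 3, 2, 0),
    ("Conv2d_2a_3x3", 3, 1, 0),
    ("Conv2d_2b_3x3", 3, 1, 1),
    ("max_pool2d", 3, 2, 0),
    ("Conv2d_3b_1x1", 1, 1, 0),
    ("Conv2d_4a_3x3", 3, 1, 0),
    ("max_pool2d", 3, 2, 0),
]

size = 299
trace = [size]
for name, kernel, stride, padding in stem:
    size = out_size(size, kernel, stride, padding)
    trace.append(size)
    print(f"{name:>14}: {size} x {size}")

# Assumed downsampling in Mixed_6a and Mixed_7a: 3x3, stride 2, no padding.
after_6a = out_size(trace[-1], 3, stride=2)
after_7a = out_size(after_6a, 3, stride=2)
print(trace, after_6a, after_7a)
# [299, 149, 147, 147, 73, 73, 71, 35] 17 8
```

The trace matches the 35x35, 17x17 and 8x8 stages noted in the model comments, which is a convenient sanity check when changing the input resolution.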