在上一篇文章【点云处理】点云法向量估计及其加速(4)中我们尝试对pcl自带的KDTree的k近邻搜索过程使用OpenMP加速,效果比较明显,有将近1倍的提速。在这篇文章中我们暂时放弃pcl自带的KDTree,转而使用另一大杀器nanflann库提供的KDTree。nanoflann是一个c++11标准库,用于构建具有不同拓扑(R2,R3(点云),SO(2)和SO(3)(2D和3D旋转组))的KD树。nanoflann 算法对fastann进行了改进,效率以及内存使用等方面都进行了优化,而且代码十分轻量级且开源。nanoflann不需要编译或安装,你只需要在你的代码中加入#include <nanoflann.hpp>即可方便快捷地使用它。好了,下面使用nanoflann对我们的代码进行改写。

#include <time.h>

#include <cmath>

#include <functional>

#include <ros/ros.h>

#include <sensor_msgs/PointCloud2.h>

#include <pcl/console/time.h>

#include <pcl/gpu/features/features.hpp>

#include <omp.h>

#include <pcl/features/normal_3d_omp.h>

#include <pcl_conversions/pcl_conversions.h>

#include <spdlog/spdlog.h>

#include "KDTreeTableAdaptor.h"

int main(int argc, char** argv) {

ros::init(argc, argv, "n_lidar_gpu_normal");

ros::NodeHandle node;

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZ>);

const boost::function<void (const boost::shared_ptr<sensor_msgs::PointCloud2 const>&)> callback =[&](sensor_msgs::PointCloud2::ConstPtr msg_pc_ptr) {

pcl::fromROSMsg(*msg_pc_ptr, *cloud);

size_t cloud_size = cloud->size();

int dim=3,k=10;

float* points = new float[cloud_size*dim];

for (int i=0; i<cloud_size; i++) {

float* p = points + i*dim;

p[0] = cloud->points[i].x;

p[1] = cloud->points[i].y;

p[2] = cloud->points[i].z;

}

auto t1 = std::chrono::steady_clock::now();

KDTreeTableAdaptor<float,float> kdtree(cloud_size, dim, points, 64);

std::vector<std::vector<int>> neighbors_all(cloud_size,std::vector<int>(k));

std::vector<int> sizes(cloud_size,k);

for (int i=0; i<cloud_size; i++) {

std::vector<size_t> out_ids(k);

std::vector<float> out_dists_sqr(k);

nanoflann::KNNResultSet<float> result_set(k);

result_set.init(&out_ids[0], &out_dists_sqr[0]);

kdtree.index->findNeighbors(result_set, &points[i*dim], nanoflann::SearchParams(k));

for (int j=0; j<k; j++) {

neighbors_all[i][j] = out_ids[j];

}

}

auto t2 = std::chrono::steady_clock::now();

delete []points;

std::vector<int> flatten_neighbors_all(k * cloud_size);

pcl::gpu::PtrStep<int> ps(&flatten_neighbors_all[0], k * pcl::gpu::PtrStep<int>::elem_size);

for (size_t i=0; i<cloud_size; ++i) {

std::copy(neighbors_all[i].begin(), neighbors_all[i].end(), ps.ptr(i));

}

pcl::gpu::NeighborIndices gpu_neighbor_indices;

pcl::gpu::NormalEstimation::PointCloud gpu_cloud;

gpu_cloud.upload(cloud->points);

gpu_neighbor_indices.upload(flatten_neighbors_all, sizes, k);

pcl::gpu::NormalEstimation::Normals gpu_normals;

pcl::gpu::NormalEstimation::computeNormals(gpu_cloud, gpu_neighbor_indices, gpu_normals);

pcl::gpu::NormalEstimation::flipNormalTowardsViewpoint(gpu_cloud, 0.f, 0.f, 0.f, gpu_normals);

auto t3 = std::chrono::steady_clock::now();

auto compute_normal_time = std::chrono::duration<double,std::milli>(t3 - t2);

std::vector<pcl::PointXYZ> normals;

gpu_normals.download(normals);

auto t4 = std::chrono::steady_clock::now();

auto knn_time = std::chrono::duration<double,std::milli>(t2-t1);

auto total_time = std::chrono::duration<double,std::milli>(t4-t1);

spdlog::info("cloud size:{:d}, knn_time:{:.3f} ms,compute_normal_time:{:.3f} ms, total_time:{:.3f} ms", cloud->size(), knn_time.count(), compute_normal_time.count(), total_time.count());

};

ros::Subscriber pc_sub = node.subscribe<sensor_msgs::PointCloud2>("/BackLidar/lslidar_point_cloud", 1, callback);

ros::spin();

return 0;

}

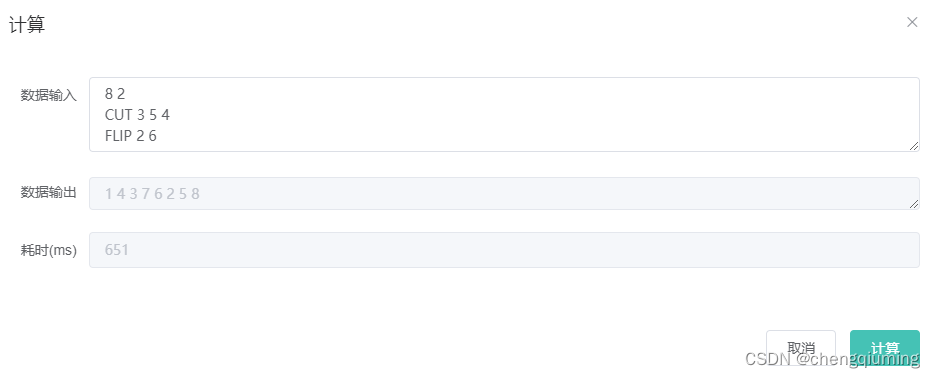

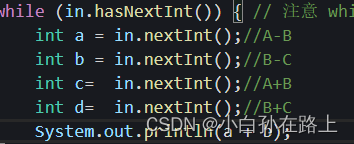

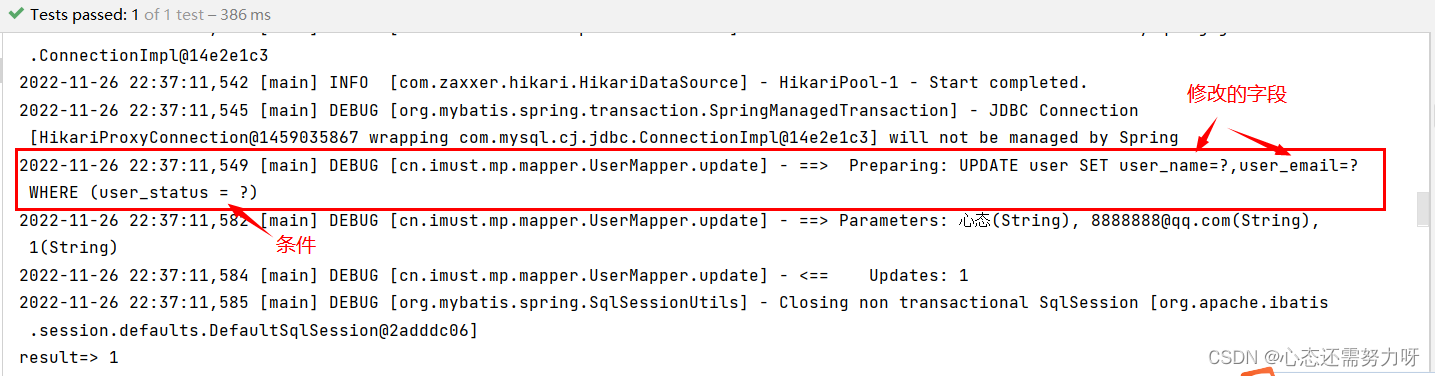

对于for循环遍历查找k近邻索引部分我们先不加"# pragma omp parallel for",编译运行。

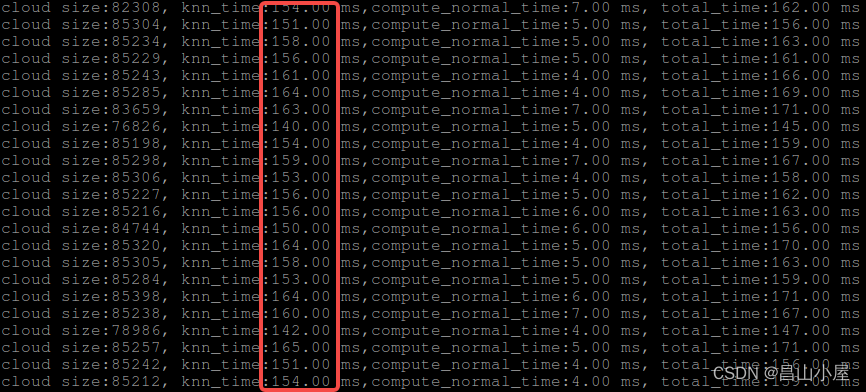

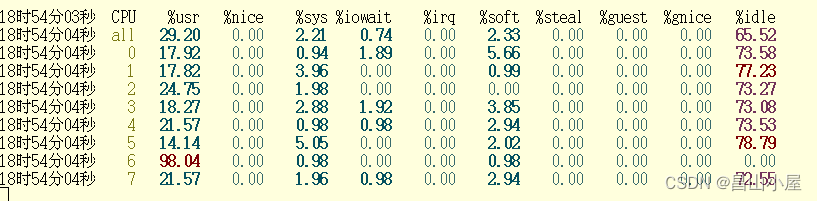

哇,加速效果明显,8w点云knn时间从400ms降到150ms左右。比pcl自带KDTree使用上OpenMP并行加速还要快。

要是能利用OpenMP做并行加速,岂不是要起飞??!!加上OpenMP加速试一试。

# pragma omp parallel for

for (int i=0; i<cloud_size; i++) {

std::vector<size_t> out_ids(k);

std::vector<float> out_dists_sqr(k);

nanoflann::KNNResultSet<float> result_set(k);

result_set.init(&out_ids[0], &out_dists_sqr[0]);

kdtree.index->findNeighbors(result_set, &points[i*dim], nanoflann::SearchParams(k));

for (int j=0; j<k; j++) {

neighbors_all[i][j] = out_ids[j];

}

}

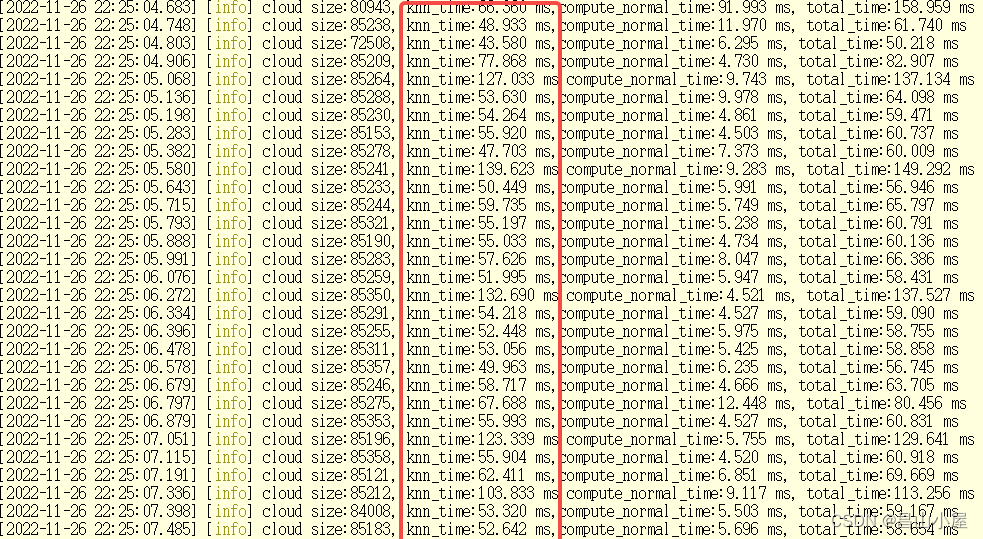

编译运行,测试结果如下:

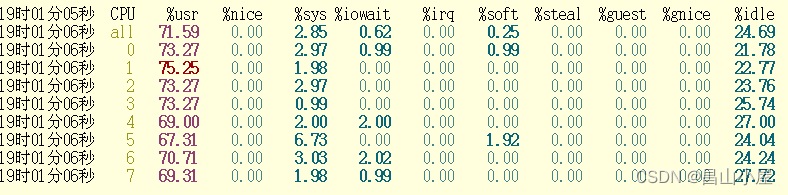

哇,虽然有波动,但常能在50ms左右徘徊,相比曾几何时的400ms提速了8倍。 多核算力分配也很均衡,完美!

所以,knn加速哪家强,nanoflann+OpenMP当称王!!!

【参考文献】

在C++中使用openmp进行多线程编程_线上幽灵的博客-CSDN博客_c++ omp

PCL GPU实现计算法线和曲率 - 知乎