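Contents

- 2.3 Install Logstash
  - (1) Check the system JDK version
  - (2) Download Logstash
  - (3) Install Logstash
  - (4) Configure Logstash
  - (5) Start and test
    - Method 1
    - Method 2 (the main way of running it)
  - (6) Summary
- 2.4 Install Kibana
  - (1) Check the system JDK version
  - (2) Download Kibana
  - (3) Install Kibana
  - (4) Configure Kibana
  - (5) Start Kibana
  - (6) Open Kibana
- References
- Related posts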

2.3 Install Logstash

Official download page: https://www.elastic.co/cn/downloads/logstash

(1) Check the system JDK version

Same as in section 2.1.
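Logstash 8.2.3 ships with its own JDK (the startup log in step (5) reports `Using bundled JDK: /usr/share/logstash/jdk` and OpenJDK 11.0.15), so the system JDK matters mainly if you point Logstash at a different one, for example through `LS_JAVA_HOME`. If you want to script the check from 2.1 anyway, here is a minimal sketch. `parse_java_major` and `installed_java_major` are hypothetical helpers, not Elastic tooling. The sketch relies only on the fact that `java -version` prints a line such as `openjdk version "11.0.15"` to stderr.

```python
import re
import subprocess

# Matches e.g. 'version "11.0.15"', 'version "17"' or the legacy 'version "1.8.0_332"'.
_VERSION_RE = re.compile(r'version "(\d+)(?:\.(\d+))?')


def parse_java_major(version_output: str) -> int:
    """Return the major Java version found in `java -version` output."""
    match = _VERSION_RE.search(version_output)
    if match is None:
        raise ValueError("no Java version string found")
    major = int(match.group(1))
    # Java 8 and earlier use the "1.x" scheme, so "1.8.0_332" means Java 8.
    if major == 1 and match.group(2) is not None:
        major = int(match.group(2))
    return major


def installed_java_major(java_bin: str = "java") -> int:
    """Run `java -version` and parse its output, which is printed on stderr."""
    result = subprocess.run(
        [java_bin, "-version"], capture_output=True, text=True, check=True
    )
    return parse_java_major(result.stderr)
```

For example, `installed_java_major("/usr/share/logstash/jdk/bin/java")` checks the JDK bundled with the RPM, and `installed_java_major()` checks whatever `java` is first on `PATH`.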

(2) Download Logstash

```shell
wget https://artifacts.elastic.co/downloads/logstash/logstash-8.2.3-x86_64.rpm
```
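Elastic publishes a SHA-512 checksum file next to each artifact, at the same URL with `.sha512` appended (here `logstash-8.2.3-x86_64.rpm.sha512`). The sketch below checks the downloaded RPM against that file. It assumes the checksum file holds `<hex digest>  <file name>` on a single line, and `verify_sha512` is a hypothetical helper name. The `NOKEY` warning that `rpm -ivh` prints in step (3) is a separate issue. It only means Elastic's signing key has not been imported into the RPM database, and `rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch` takes care of that.

```python
import hashlib
from pathlib import Path


def sha512_of(path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so a large RPM is never read into memory at once."""
    digest = hashlib.sha512()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_sha512(artifact, checksum_file) -> bool:
    """Compare a file's SHA-512 with the first field of a '.sha512' checksum file."""
    expected = Path(checksum_file).read_text().split()[0].lower()
    return sha512_of(artifact) == expected
```

After downloading both files with `wget`, `verify_sha512("logstash-8.2.3-x86_64.rpm", "logstash-8.2.3-x86_64.rpm.sha512")` should return `True`. The same check applies unchanged to the Kibana RPM in section 2.4.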

(3) Install Logstash

```shell
rpm -ivh logstash-8.2.3-x86_64.rpm
warning: logstash-8.2.3-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Updating / installing...
1:logstash-1:8.2.3-1 ################################# [100%]
```

(4) Configure Logstash

For a lab setup, the Logstash settings file generally needs no changes, and the defaults are fine.

The Logstash settings file is /etc/logstash/logstash.yml:

```yaml
# Settings file in YAML
#
# Settings can be specified either in hierarchical form, e.g.:
#
#   pipeline:
#     batch:
#       size: 125
#       delay: 5
#
# Or as flat keys:
#
#   pipeline.batch.size: 125
#   pipeline.batch.delay: 5
#
# ------------ Node identity ------------
#
# Use a descriptive name for the node:
#
# node.name: test
#
# If omitted the node name will default to the machine's host name
#
# ------------ Data path ------------------
#
# Which directory should be used by logstash and its plugins
# for any persistent needs. Defaults to LOGSTASH_HOME/data
#
path.data: /var/lib/logstash # directory where Logstash stores its data
#
# ------------ Pipeline Settings --------------
#
# The ID of the pipeline.
#
# pipeline.id: main
#
# Set the number of workers that will, in parallel, execute the filters+outputs
# stage of the pipeline.
#
# This defaults to the number of the host's CPU cores.
#
# pipeline.workers: 2
#
# How many events to retrieve from inputs before sending to filters+workers
#
# pipeline.batch.size: 125
#
# How long to wait in milliseconds while polling for the next event
# before dispatching an undersized batch to filters+outputs
#
# pipeline.batch.delay: 50
#
# Force Logstash to exit during shutdown even if there are still inflight
# events in memory. By default, logstash will refuse to quit until all
# received events have been pushed to the outputs.
#
# WARNING: Enabling this can lead to data loss during shutdown
#
# pipeline.unsafe_shutdown: false
#
# Set the pipeline event ordering. Options are "auto" (the default), "true" or "false".
# "auto" automatically enables ordering if the 'pipeline.workers' setting
# is also set to '1', and disables otherwise.
# "true" enforces ordering on the pipeline and prevent logstash from starting
# if there are multiple workers.
# "false" disables any extra processing necessary for preserving ordering.
#
# pipeline.ordered: auto
#
# Sets the pipeline's default value for `ecs_compatibility`, a setting that is
# available to plugins that implement an ECS Compatibility mode for use with
# the Elastic Common Schema.
# Possible values are:
# - disabled
# - v1
# - v8 (default)
# Pipelines defined before Logstash 8 operated without ECS in mind. To ensure a
# migrated pipeline continues to operate as it did before your upgrade, opt-OUT
# of ECS for the individual pipeline in its `pipelines.yml` definition. Setting
# it here will set the default for _all_ pipelines, including new ones.
#
# pipeline.ecs_compatibility: v8
#
# ------------ Pipeline Configuration Settings --------------
#
# Where to fetch the pipeline configuration for the main pipeline
#
path.config: /etc/logstash/conf.d # directory holding the pipeline configuration files
#
# Pipeline configuration string for the main pipeline
#
# config.string:
#
# At startup, test if the configuration is valid and exit (dry run)
#
# config.test_and_exit: false
#
# Periodically check if the configuration has changed and reload the pipeline
# This can also be triggered manually through the SIGHUP signal
#
# config.reload.automatic: false
#
# How often to check if the pipeline configuration has changed (in seconds)
# Note that the unit value (s) is required. Values without a qualifier (e.g. 60)
# are treated as nanoseconds.
# Setting the interval this way is not recommended and might change in later versions.
#
# config.reload.interval: 3s
#
# Show fully compiled configuration as debug log message
# NOTE: --log.level must be 'debug'
#
# config.debug: false
#
# When enabled, process escaped characters such as \n and \" in strings in the
# pipeline configuration files.
#
# config.support_escapes: false
#
# ------------ API Settings -------------
# Define settings related to the HTTP API here.
#
# The HTTP API is enabled by default. It can be disabled, but features that rely
# on it will not work as intended.
#
# api.enabled: true
#
# By default, the HTTP API is not secured and is therefore bound to only the
# host's loopback interface, ensuring that it is not accessible to the rest of
# the network.
# When secured with SSL and Basic Auth, the API is bound to _all_ interfaces
# unless configured otherwise.
#
# api.http.host: 127.0.0.1
#
# The HTTP API web server will listen on an available port from the given range.
# Values can be specified as a single port (e.g., `9600`), or an inclusive range
# of ports (e.g., `9600-9700`).
#
# api.http.port: 9600-9700
#
# The HTTP API includes a customizable "environment" value in its response,
# which can be configured here.
#
# api.environment: "production"
#
# The HTTP API can be secured with SSL (TLS). To do so, you will need to provide
# the path to a password-protected keystore in p12 or jks format, along with credentials.
#
# api.ssl.enabled: false
# api.ssl.keystore.path: /path/to/keystore.jks
# api.ssl.keystore.password: "y0uRp4$$w0rD"
#
# The HTTP API can be configured to require authentication. Acceptable values are
#  - `none`: no auth is required (default)
#  - `basic`: clients must authenticate with HTTP Basic auth, as configured
#    with `api.auth.basic.*` options below
# api.auth.type: none
#
# When configured with `api.auth.type` `basic`, you must provide the credentials
# that requests will be validated against. Usage of Environment or Keystore
# variable replacements is encouraged (such as the value `"${HTTP_PASS}"`, which
# resolves to the value stored in the keystore's `HTTP_PASS` variable if present
# or the same variable from the environment)
#
# api.auth.basic.username: "logstash-user"
# api.auth.basic.password: "s3cUreP4$$w0rD"
#
# ------------ Module Settings ---------------
# Define modules here. Modules definitions must be defined as an array.
# The simple way to see this is to prepend each `name` with a `-`, and keep
# all associated variables under the `name` they are associated with, and
# above the next, like this:
#
# modules:
#   - name: MODULE_NAME
#     var.PLUGINTYPE1.PLUGINNAME1.KEY1: VALUE
#     var.PLUGINTYPE1.PLUGINNAME1.KEY2: VALUE
#     var.PLUGINTYPE2.PLUGINNAME1.KEY1: VALUE
#     var.PLUGINTYPE3.PLUGINNAME3.KEY1: VALUE
#
# Module variable names must be in the format of
#
# var.PLUGIN_TYPE.PLUGIN_NAME.KEY
#
# modules:
#
# ------------ Cloud Settings ---------------
# Define Elastic Cloud settings here.
# Format of cloud.id is a base64 value e.g. dXMtZWFzdC0xLmF3cy5mb3VuZC5pbyRub3RhcmVhbCRpZGVudGlmaWVy
# and it may have an label prefix e.g. staging:dXMtZ...
# This will overwrite 'var.elasticsearch.hosts' and 'var.kibana.host'
# cloud.id: <identifier>
#
# Format of cloud.auth is: <user>:<pass>
# This is optional
# If supplied this will overwrite 'var.elasticsearch.username' and 'var.elasticsearch.password'
# If supplied this will overwrite 'var.kibana.username' and 'var.kibana.password'
# cloud.auth: elastic:<password>
#
# ------------ Queuing Settings --------------
#
# Internal queuing model, "memory" for legacy in-memory based queuing and
# "persisted" for disk-based acked queueing. Defaults is memory
#
# queue.type: memory
#
# If using queue.type: persisted, the directory path where the data files will be stored.
# Default is path.data/queue
#
# path.queue:
#
# If using queue.type: persisted, the page data files size. The queue data consists of
# append-only data files separated into pages. Default is 64mb
#
# queue.page_capacity: 64mb
#
# If using queue.type: persisted, the maximum number of unread events in the queue.
# Default is 0 (unlimited)
#
# queue.max_events: 0
#
# If using queue.type: persisted, the total capacity of the queue in number of bytes.
# If you would like more unacked events to be buffered in Logstash, you can increase the
# capacity using this setting. Please make sure your disk drive has capacity greater than
# the size specified here. If both max_bytes and max_events are specified, Logstash will pick
# whichever criteria is reached first
# Default is 1024mb or 1gb
#
# queue.max_bytes: 1024mb
#
# If using queue.type: persisted, the maximum number of acked events before forcing a checkpoint
# Default is 1024, 0 for unlimited
#
# queue.checkpoint.acks: 1024
#
# If using queue.type: persisted, the maximum number of written events before forcing a checkpoint
# Default is 1024, 0 for unlimited
#
# queue.checkpoint.writes: 1024
#
# If using queue.type: persisted, the interval in milliseconds when a checkpoint is forced on the head page
# Default is 1000, 0 for no periodic checkpoint.
#
# queue.checkpoint.interval: 1000
#
# ------------ Dead-Letter Queue Settings --------------
# Flag to turn on dead-letter queue.
#
# dead_letter_queue.enable: false
# If using dead_letter_queue.enable: true, the maximum size of each dead letter queue. Entries
# will be dropped if they would increase the size of the dead letter queue beyond this setting.
# Default is 1024mb
# dead_letter_queue.max_bytes: 1024mb
# If using dead_letter_queue.enable: true, the interval in milliseconds where if no further events eligible for the DLQ
# have been created, a dead letter queue file will be written. A low value here will mean that more, smaller, queue files
# may be written, while a larger value will introduce more latency between items being "written" to the dead letter queue, and
# being available to be read by the dead_letter_queue input when items are are written infrequently.
# Default is 5000.
#
# dead_letter_queue.flush_interval: 5000
# If using dead_letter_queue.enable: true, the directory path where the data files will be stored.
# Default is path.data/dead_letter_queue
#
# path.dead_letter_queue:
#
# ------------ Debugging Settings --------------
#
# Options for log.level:
#   * fatal
#   * error
#   * warn
#   * info (default)
#   * debug
#   * trace
#
# log.level: info
path.logs: /var/log/logstash # directory for Logstash log files
#
# ------------ Other Settings --------------
#
# Where to find custom plugins
# path.plugins: []
#
# Flag to output log lines of each pipeline in its separate log file. Each log filename contains the pipeline.name
# Default is false
# pipeline.separate_logs: false
#
# ------------ X-Pack Settings (not applicable for OSS build)--------------
#
# X-Pack Monitoring
# https://www.elastic.co/guide/en/logstash/current/monitoring-logstash.html
#xpack.monitoring.enabled: false
#xpack.monitoring.elasticsearch.username: logstash_system
#xpack.monitoring.elasticsearch.password: password
#xpack.monitoring.elasticsearch.proxy: ["http://proxy:port"]
#xpack.monitoring.elasticsearch.hosts: ["https://es1:9200", "https://es2:9200"]
# an alternative to hosts + username/password settings is to use cloud_id/cloud_auth
#xpack.monitoring.elasticsearch.cloud_id: monitoring_cluster_id:xxxxxxxxxx
#xpack.monitoring.elasticsearch.cloud_auth: logstash_system:password
# another authentication alternative is to use an Elasticsearch API key
#xpack.monitoring.elasticsearch.api_key: "id:api_key"
#xpack.monitoring.elasticsearch.ssl.certificate_authority: [ "/path/to/ca.crt" ]
#xpack.monitoring.elasticsearch.ssl.truststore.path: path/to/file
#xpack.monitoring.elasticsearch.ssl.truststore.password: password
#xpack.monitoring.elasticsearch.ssl.keystore.path: /path/to/file
#xpack.monitoring.elasticsearch.ssl.keystore.password: password
#xpack.monitoring.elasticsearch.ssl.verification_mode: certificate
#xpack.monitoring.elasticsearch.sniffing: false
#xpack.monitoring.collection.interval: 10s
#xpack.monitoring.collection.pipeline.details.enabled: true
#
# X-Pack Management
# https://www.elastic.co/guide/en/logstash/current/logstash-centralized-pipeline-management.html
#xpack.management.enabled: false
#xpack.management.pipeline.id: ["main", "apache_logs"]
#xpack.management.elasticsearch.username: logstash_admin_user
#xpack.management.elasticsearch.password: password
#xpack.management.elasticsearch.proxy: ["http://proxy:port"]
#xpack.management.elasticsearch.hosts: ["https://es1:9200", "https://es2:9200"]
# an alternative to hosts + username/password settings is to use cloud_id/cloud_auth
#xpack.management.elasticsearch.cloud_id: management_cluster_id:xxxxxxxxxx
#xpack.management.elasticsearch.cloud_auth: logstash_admin_user:password
# another authentication alternative is to use an Elasticsearch API key
#xpack.management.elasticsearch.api_key: "id:api_key"
#xpack.management.elasticsearch.ssl.certificate_authority: [ "/path/to/ca.crt" ]
#xpack.management.elasticsearch.ssl.truststore.path: /path/to/file
#xpack.management.elasticsearch.ssl.truststore.password: password
#xpack.management.elasticsearch.ssl.keystore.path: /path/to/file
#xpack.management.elasticsearch.ssl.keystore.password: password
#xpack.management.elasticsearch.ssl.verification_mode: certificate
#xpack.management.elasticsearch.sniffing: false
#xpack.management.logstash.poll_interval: 5s
# X-Pack GeoIP plugin
# https://www.elastic.co/guide/en/logstash/current/plugins-filters-geoip.html#plugins-filters-geoip-manage_update
#xpack.geoip.download.endpoint: "https://geoip.elastic.co/v1/database"
```

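After editing, list the settings that are actually in effect, with comment lines and blank lines filtered out:

```shell
# cat /etc/logstash/logstash.yml | grep -Ev "#|^$"
path.data: /var/lib/logstash
path.config: /etc/logstash/conf.d
path.logs: /var/log/logstash
```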
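Note that `grep -Ev "#|^$"` drops every line that contains a `#` anywhere. A setting followed by an inline comment, such as the annotated `path.data` line in the settings listing, would therefore vanish from its output. The sketch below is a slightly more careful filter. `effective_settings` is a hypothetical helper, not an Elastic tool. It is a simplification: it strips everything from the first whitespace-preceded `#`, so a ` #` inside a quoted value would be cut off too. It keeps indentation, so nested YAML such as Kibana's `logging:` block stays readable.

```python
import re
from typing import List

# A YAML comment starts at a '#' that is preceded by whitespace (or starts the line).
_TRAILING_COMMENT = re.compile(r"\s+#.*$")


def effective_settings(yaml_text: str) -> List[str]:
    """Return the non-blank, non-comment lines of a YAML settings file.

    Trailing comments are removed and leading indentation is preserved.
    """
    kept = []
    for line in yaml_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # blank line or full-line comment
        kept.append(_TRAILING_COMMENT.sub("", line).rstrip())
    return kept
```

Usage: `print("\n".join(effective_settings(pathlib.Path("/etc/logstash/logstash.yml").read_text())))`.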

(5) Start and test

方法1

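Logstash needs a pipeline configuration to start. To make testing easy, the first start is done by hand from the command line, with standard input as the input and standard output as the output.

For the RPM installation, the Logstash executables are in /usr/share/logstash/bin/.

```shell
/usr/share/logstash/bin/logstash -e 'input {stdin {}} output {stdout {}}'
```

`-e` passes the pipeline configuration itself as a string on the command line. Because `--path.settings` is not given, Logstash does not read /etc/logstash/logstash.yml and falls back to its built-in defaults, as the WARNING line in the output below points out.

A successful start produces output like the following: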

```text
Using bundled JDK: /usr/share/logstash/jdk
OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.
WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults
Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console
[INFO ] 2022-06-27 02:11:06.540 [main] runner - Starting Logstash {"logstash.version"=>"8.2.3", "jruby.version"=>"jruby 9.2.20.1 (2.5.8) 2021-11-30 2a2962fbd1 OpenJDK 64-Bit Server VM 11.0.15+10 on 11.0.15+10 +indy +jit [linux-x86_64]"}
[INFO ] 2022-06-27 02:11:06.564 [main] runner - JVM bootstrap flags: [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djruby.compile.invokedynamic=true, -Djruby.jit.threshold=0, -XX:+HeapDumpOnOutOfMemoryError, -Djava.security.egd=file:/dev/urandom, -Dlog4j2.isThreadContextMapInheritable=true, -Djruby.regexp.interruptible=true, -Djdk.io.File.enableADS=true, --add-opens=java.base/java.security=ALL-UNNAMED, --add-opens=java.base/java.io=ALL-UNNAMED, --add-opens=java.base/java.nio.channels=ALL-UNNAMED, --add-opens=java.base/sun.nio.ch=ALL-UNNAMED, --add-opens=java.management/sun.management=ALL-UNNAMED]
[INFO ] 2022-06-27 02:11:06.640 [main] settings - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}
[INFO ] 2022-06-27 02:11:06.663 [main] settings - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}
[WARN ] 2022-06-27 02:11:07.292 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified
[INFO ] 2022-06-27 02:11:07.396 [LogStash::Runner] agent - No persistent UUID file found. Generating new UUID {:uuid=>"801a5a88-a723-4953-b2b1-b8f800c19059", :path=>"/usr/share/logstash/data/uuid"}
[INFO ] 2022-06-27 02:11:09.722 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600, :ssl_enabled=>false}
[INFO ] 2022-06-27 02:11:10.529 [Converge PipelineAction::Create<main>] Reflections - Reflections took 188 ms to scan 1 urls, producing 120 keys and 395 values
[INFO ] 2022-06-27 02:11:11.460 [Converge PipelineAction::Create<main>] javapipeline - Pipeline `main` is configured with `pipeline.ecs_compatibility: v8` setting. All plugins in this pipeline will default to `ecs_compatibility => v8` unless explicitly configured otherwise.
[INFO ] 2022-06-27 02:11:11.687 [[main]-pipeline-manager] javapipeline - Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>250, "pipeline.sources"=>["config string"], :thread=>"#<Thread:0x4d4bcb5b run>"}
[INFO ] 2022-06-27 02:11:12.819 [[main]-pipeline-manager] javapipeline - Pipeline Java execution initialization time {"seconds"=>1.13}
[INFO ] 2022-06-27 02:11:12.948 [[main]-pipeline-manager] javapipeline - Pipeline started {"pipeline.id"=>"main"}
The stdin plugin is now waiting for input:
[INFO ] 2022-06-27 02:11:13.065 [Agent thread] agent - Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
```

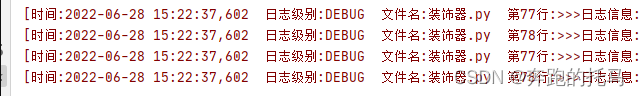

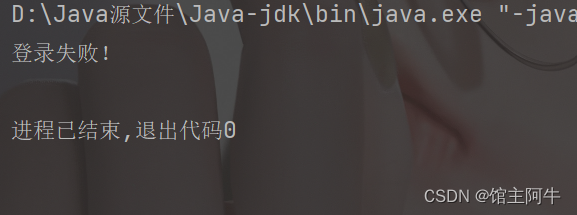

After you type hello and press Enter, Logstash prints the resulting event, as shown in the screenshots above.
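The startup log also contains `Successfully started Logstash API endpoint {:port=>9600}`. According to the `api.http.host` comment in logstash.yml, this API is bound only to 127.0.0.1 by default, so a quick health check has to run on the Logstash host itself. Below is a minimal sketch. It assumes the root endpoint `GET /` returns a JSON object with at least `version` and `http_address` fields, as the Logstash monitoring API documentation describes. The helper names are hypothetical.

```python
import json
import urllib.request


def parse_node_info(body: str) -> dict:
    """Pick the fields of interest out of the JSON returned by GET / on the Logstash API."""
    info = json.loads(body)
    return {"version": info["version"], "http_address": info.get("http_address")}


def fetch_node_info(base_url: str = "http://127.0.0.1:9600") -> dict:
    """Query the Logstash API; raises urllib.error.URLError if nothing is listening."""
    with urllib.request.urlopen(base_url + "/", timeout=5) as resp:
        return parse_node_info(resp.read().decode("utf-8"))
```

While the instance from Method 1 (or the service from Method 2) is running, `fetch_node_info()` would be expected to report version `8.2.3` for this installation.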

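Method 2 (the main way of running it)

Use a pipeline configuration file: create a .conf file in the Logstash pipeline configuration directory, /etc/logstash/conf.d.

Pipeline configuration files must have the .conf suffix, because the default /etc/logstash/pipelines.yml installed by the RPM loads /etc/logstash/conf.d/*.conf.

```shell
# pwd
/etc/logstash/conf.d
# vim test.conf
```

test.conf has the following content. (With stdin and stdout, nothing is shown when Logstash runs as a service. A systemd service has no terminal attached, so there is nowhere to type input or watch output.)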

```
input {
  stdin {}
}

output {
  stdout {}
}
```

Start Logstash:

```shell
systemctl start logstash.service
systemctl status logstash.service
```

This method cannot be tested interactively, but it is the one used in production.
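Because the service gives no interactive feedback, it is worth checking a pipeline file's syntax before (re)starting it. Logstash has a dry-run flag for this, `--config.test_and_exit` (short form `-t`), which parses the configuration, reports whether it is valid and exits. The sketch below only assembles that command and runs it from Python. Several details are assumptions for illustration: the function names, the use of `--path.settings /etc/logstash`, and the optional scratch `--path.data`, which is meant to avoid clashing with the data directory of an already running service.

```python
import subprocess
from typing import List, Optional

LOGSTASH_BIN = "/usr/share/logstash/bin/logstash"  # location used by the RPM install


def config_test_command(pipeline_file: str,
                        settings_dir: str = "/etc/logstash",
                        data_dir: Optional[str] = None) -> List[str]:
    """Build the argv for a dry-run syntax check of one pipeline file."""
    cmd = [
        LOGSTASH_BIN,
        "--path.settings", settings_dir,  # read /etc/logstash/logstash.yml
        "-f", pipeline_file,              # pipeline config to check
        "--config.test_and_exit",         # parse, report, then exit
    ]
    if data_dir is not None:
        cmd += ["--path.data", data_dir]  # keep clear of the service's data directory
    return cmd


def config_is_valid(pipeline_file: str, data_dir: Optional[str] = None) -> bool:
    """Run the check; Logstash is expected to exit with status 0 for a valid config."""
    result = subprocess.run(config_test_command(pipeline_file, data_dir=data_dir),
                            capture_output=True, text=True)
    return result.returncode == 0
```

For example, `config_is_valid("/etc/logstash/conf.d/test.conf", data_dir="/tmp/ls-check")` can be run before `systemctl start logstash.service`. Once the service is up, its log lines can be followed with `journalctl -u logstash.service -f`.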

(6) Summary

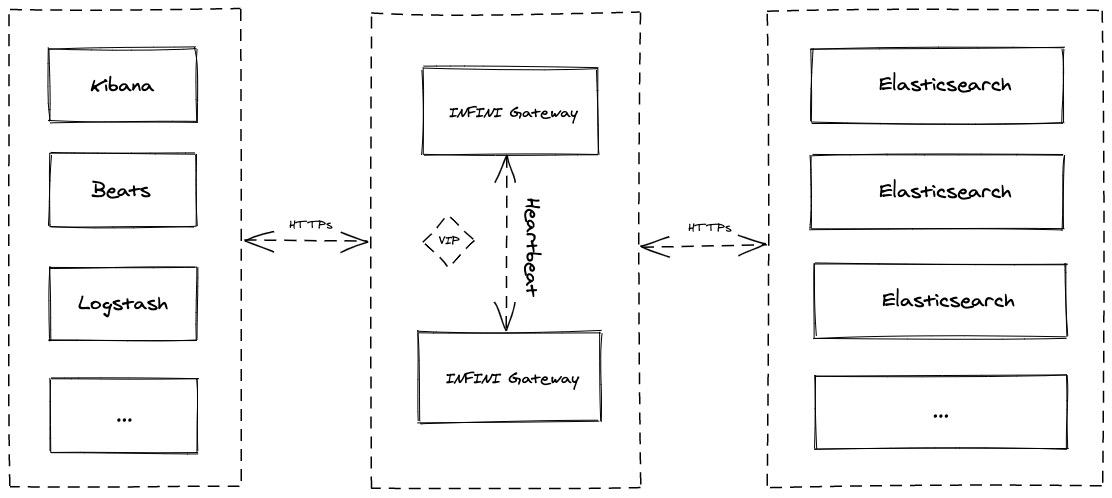

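When Logstash is used as a data collector, it has to be deployed on every node. If it only serves as middleware that filters data, a moderate number of instances in the ELK cluster is enough, and it does not have to be installed on every node.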

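2.4 Install Kibana

Official download page: https://www.elastic.co/cn/downloads/kibana

(1) Check the system JDK version

Same as in section 2.1. Kibana itself runs on its bundled Node.js runtime (the listening process in step (5) is `node`), so it does not use the JDK.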

(2) Download Kibana

```shell
wget https://artifacts.elastic.co/downloads/kibana/kibana-8.2.3-x86_64.rpm
```

(3) Install Kibana

```shell
# rpm -ivh kibana-8.2.3-x86_64.rpm
warning: kibana-8.2.3-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Updating / installing...
1:kibana-8.2.3-1 ################################# [100%]
Creating kibana group... OK
Creating kibana user... OK
Created Kibana keystore in /etc/kibana/kibana.keystore
```

(4) Configure Kibana

The Kibana configuration file is /etc/kibana/kibana.yml:

```shell
[root@vms32 opt]# cat /etc/kibana/kibana.yml
```

```yaml
# For more configuration options see the configuration guide for Kibana in
# https://www.elastic.co/guide/index.html

# =================== System: Kibana Server ===================
# Kibana is served by a back end server. This setting specifies the port to use.
server.port: 5601 # port of the Kibana service (default: 5601)

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.
# The default is 'localhost', which usually means remote machines will not be able to connect.
# To allow connections from remote users, set this parameter to a non-loopback address.
server.host: "192.168.100.32" # IP address used to reach Kibana (default: localhost)

# Enables you to specify a path to mount Kibana at if you are running behind a proxy.
# Use the `server.rewriteBasePath` setting to tell Kibana if it should remove the basePath
# from requests it receives, and to prevent a deprecation warning at startup.
# This setting cannot end in a slash.
#server.basePath: ""

# Specifies whether Kibana should rewrite requests that are prefixed with
# `server.basePath` or require that they are rewritten by your reverse proxy.
# Defaults to `false`.
#server.rewriteBasePath: false

# Specifies the public URL at which Kibana is available for end users. If
# `server.basePath` is configured this URL should end with the same basePath.
#server.publicBaseUrl: ""

# The maximum payload size in bytes for incoming server requests.
#server.maxPayload: 1048576

# The Kibana server's name. This is used for display purposes.
#server.name: "your-hostname"

# =================== System: Kibana Server (Optional) ===================
# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.
# These settings enable SSL for outgoing requests from the Kibana server to the browser.
#server.ssl.enabled: false
#server.ssl.certificate: /path/to/your/server.crt
#server.ssl.key: /path/to/your/server.key

# =================== System: Elasticsearch ===================
# The URLs of the Elasticsearch instances to use for all your queries.
elasticsearch.hosts: ["http://192.168.100.31:9200","http://192.168.100.32:9200"] # addresses of the Elasticsearch nodes

# If your Elasticsearch is protected with basic authentication, these settings provide
# the username and password that the Kibana server uses to perform maintenance on the Kibana
# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which
# is proxied through the Kibana server.
#elasticsearch.username: "kibana_system"
#elasticsearch.password: "pass"

# Kibana can also authenticate to Elasticsearch via "service account tokens".
# Service account tokens are Bearer style tokens that replace the traditional username/password based configuration.
# Use this token instead of a username/password.
# elasticsearch.serviceAccountToken: "my_token"

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of
# the elasticsearch.requestTimeout setting.
#elasticsearch.pingTimeout: 1500

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value
# must be a positive integer.
#elasticsearch.requestTimeout: 30000

# The maximum number of sockets that can be used for communications with elasticsearch.
# Defaults to `Infinity`.
#elasticsearch.maxSockets: 1024

# Specifies whether Kibana should use compression for communications with elasticsearch
# Defaults to `false`.
#elasticsearch.compression: false

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side
# headers, set this value to [] (an empty list).
#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten
# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.
#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.
#elasticsearch.shardTimeout: 30000

# =================== System: Elasticsearch (Optional) ===================
# These files are used to verify the identity of Kibana to Elasticsearch and are required when
# xpack.security.http.ssl.client_authentication in Elasticsearch is set to required.
#elasticsearch.ssl.certificate: /path/to/your/client.crt
#elasticsearch.ssl.key: /path/to/your/client.key

# Enables you to specify a path to the PEM file for the certificate
# authority for your Elasticsearch instance.
#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.
#elasticsearch.ssl.verificationMode: full

# =================== System: Logging ===================
# Set the value of this setting to off to suppress all logging output, or to debug to log everything. Defaults to 'info'
#logging.root.level: debug

# Enables you to specify a file where Kibana stores log output.
logging:
  appenders:
    file:
      type: file
      fileName: /var/log/kibana/kibana.log # Kibana log file
      layout:
        type: json
  root:
    appenders:
      - default
      - file
#  layout:
#    type: json

# Logs queries sent to Elasticsearch.
#logging.loggers:
#  - name: elasticsearch.query
#    level: debug

# Logs http responses.
#logging.loggers:
#  - name: http.server.response
#    level: debug

# Logs system usage information.
#logging.loggers:
#  - name: metrics.ops
#    level: debug

# =================== System: Other ===================
# The path where Kibana stores persistent data not saved in Elasticsearch. Defaults to data
#path.data: data

# Specifies the path where Kibana creates the process ID file.
pid.file: /run/kibana/kibana.pid

# Set the interval in milliseconds to sample system and process performance
# metrics. Minimum is 100ms. Defaults to 5000ms.
#ops.interval: 5000

# Specifies locale to be used for all localizable strings, dates and number formats.
# Supported languages are the following: English (default) "en", Chinese "zh-CN", Japanese "ja-JP", French "fr-FR".
i18n.locale: "zh-CN" # switch the Kibana UI language to Simplified Chinese

# =================== Frequently used (Optional)===================

# =================== Saved Objects: Migrations ===================
# Saved object migrations run at startup. If you run into migration-related issues, you might need to adjust these settings.

# The number of documents migrated at a time.
# If Kibana can't start up or upgrade due to an Elasticsearch `circuit_breaking_exception`,
# use a smaller batchSize value to reduce the memory pressure. Defaults to 1000 objects per batch.
#migrations.batchSize: 1000

# The maximum payload size for indexing batches of upgraded saved objects.
# To avoid migrations failing due to a 413 Request Entity Too Large response from Elasticsearch.
# This value should be lower than or equal to your Elasticsearch cluster’s `http.max_content_length`
# configuration option. Default: 100mb
#migrations.maxBatchSizeBytes: 100mb

# The number of times to retry temporary migration failures. Increase the setting
# if migrations fail frequently with a message such as `Unable to complete the [...] step after
# 15 attempts, terminating`. Defaults to 15
#migrations.retryAttempts: 15

# =================== Search Autocomplete ===================
# Time in milliseconds to wait for autocomplete suggestions from Elasticsearch.
# This value must be a whole number greater than zero. Defaults to 1000ms
#data.autocomplete.valueSuggestions.timeout: 1000

# Maximum number of documents loaded by each shard to generate autocomplete suggestions.
# This value must be a whole number greater than zero. Defaults to 100_000
#data.autocomplete.valueSuggestions.terminateAfter: 100000
```

After editing, list the settings that are actually in effect, with comment lines and blank lines filtered out:

```shell
# cat /etc/kibana/kibana.yml | grep -Ev "#|^$"
server.port: 5601
server.host: "192.168.100.32"
elasticsearch.hosts: ["http://192.168.100.31:9200","http://192.168.100.32:9200"]
logging:
  appenders:
    file:
      type: file
      fileName: /var/log/kibana/kibana.log
      layout:
        type: json
  root:
    appenders:
      - default
      - file
pid.file: /run/kibana/kibana.pid
i18n.locale: "zh-CN"
```
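The comments above `server.host` explain why it was changed: with the default `localhost`, browsers on other machines cannot connect. Below is a tiny pre-flight check for that condition, using only the standard `ipaddress` module. `allows_remote_access` is a hypothetical helper. It treats any host name other than `localhost` as reachable, and that is an assumption, since a name could still resolve to a loopback address.

```python
import ipaddress


def allows_remote_access(server_host: str) -> bool:
    """Return False when a server.host value would bind Kibana to loopback only."""
    host = server_host.strip().strip("\"'")  # accept the quoted form used in kibana.yml
    if host.lower() == "localhost":
        return False
    try:
        return not ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal: assume the host name maps to a routable interface.
        return True
```

With this lab's value, `allows_remote_access('"192.168.100.32"')` returns `True`, while the default `localhost` returns `False`.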

(5) Start Kibana

```shell
systemctl start kibana.service
```

Check that the port is listening:

```shell
# lsof -i:5601
COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME
node 124636 kibana 20u IPv4 14842025 0t0 TCP vms32.rhce.cc:esmagent (LISTEN)
```

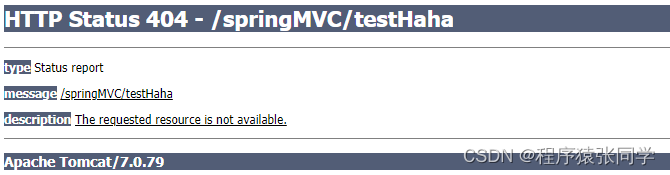
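`lsof` shows the port as `esmagent` because /etc/services maps port 5601 to that service name. Adding `-P` (`lsof -P -i:5601`) prints the number instead. A listening process does not guarantee that other machines can reach it, because a firewall may still block the port. To check from another host, a plain TCP connect is enough. Here is a minimal sketch; `port_is_open` is a hypothetical helper.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

Run from the machine whose browser will open Kibana, `port_is_open("192.168.100.32", 5601)` should return `True` once the service is up.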

(6) Open Kibana

Enter http://192.168.100.32:5601/ in a browser to open the Kibana UI.
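Before switching to the browser, you can ask Kibana's status API whether the server has finished starting. This sketch rests on three assumptions. First, the endpoint is `GET /api/status`. Second, Kibana 8.x reports its overall state under `status.overall.level`, with the value `available` when healthy. Third, Elasticsearch security is off in this lab; with security on, the request would need credentials. The helper names are hypothetical.

```python
import json
import urllib.request


def overall_level(status: dict) -> str:
    """Extract status.overall.level from a Kibana 8.x /api/status response."""
    return status["status"]["overall"]["level"]


def kibana_is_available(base_url: str = "http://192.168.100.32:5601") -> bool:
    """Return True when Kibana reports its overall status as 'available'."""
    with urllib.request.urlopen(base_url + "/api/status", timeout=10) as resp:
        return overall_level(json.load(resp)) == "available"
```

Kibana can take a minute or so to come up after `systemctl start`, so polling `kibana_is_available()` until it returns `True` avoids opening the UI while it still shows a "not ready" page.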

References

- Logstash documentation: Logstash Reference

- Kibana documentation: Kibana Guide

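Related posts

For reasons of length, the other parts of building the ELKB cluster are covered in:

Lab environment and building the Elasticsearch cluster

Installing Filebeat, plus problems and solutions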