Editor: OAK China

First published at: oakchina.cn


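This content may be updated from time to time. The version on the official website is always the latest, so please check the original publication link.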

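▌Preface

Hello everyone, this is OAK China, and I'm your assistant.

Recently, several friends in our community were unsure how to convert YOLOv7 to a blob, so I wrote this blog post. (Please praise me for being so considerate! Our principle: every reasonable request gets an answer!)

1. For other YOLO conversion and usage tutorials, please refer to:

2. For YOLO detection models, we recommend the online converter (link). If online conversion fails, follow this tutorial to convert the model locally.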

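▌Converting .pt to .onnx

Use the script below (place it in the YOLOv7 root directory) to convert the PyTorch model to an ONNX model. If openvino_dev is installed, the script also converts it further to an OpenVINO model:

Example usage: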

python export_onnx.py -w <path_to_model>.pt -imgsz 320
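The `-imgsz` option accepts one or two values, and the script rounds each side up to a multiple of the model's largest stride (through YOLOv7's `check_img_size`). A minimal stand-alone sketch of that logic, assuming the rounding is a plain ceiling to the next multiple; the helper names `make_divisible` and `normalize_img_size` here are illustrative, not imported from YOLOv7:

```python
import math
from typing import List


def make_divisible(x: int, divisor: int) -> int:
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def normalize_img_size(img_size: List[int], max_stride: int) -> List[int]:
    # A single value such as "-imgsz 320" means a square input: [320] -> [320, 320]
    if len(img_size) == 1:
        img_size = img_size * 2
    # Each side must be a multiple of the largest stride (32 for YOLOv7)
    return [make_divisible(x, max_stride) for x in img_size]


print(normalize_img_size([320], 32))       # [320, 320]
print(normalize_img_size([416, 300], 32))  # [416, 320]
```

So `-imgsz 320` exports a 320x320 model, while a side that is not a multiple of 32 is silently enlarged.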

export_onnx.py:

# coding=utf-8
import argparse
import json
import logging
import subprocess
import sys
import time
import warnings
from pathlib import Path

warnings.filterwarnings("ignore")
sys.path.append("./")  # to run '$ python *.py' files in subdirectories

import torch
import torch.nn as nn

from models.common import Conv
from models.experimental import attempt_load
from utils.activations import Hardswish, SiLU
from utils.general import check_img_size, set_logging
from utils.torch_utils import select_device

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "-w", "--weights", type=str, default="./yolov7.pt", help="weights path"
    )
    parser.add_argument(
        "-imgsz",
        "--img-size",
        nargs="+",
        type=int,
        default=[640, 640],
        help="image size",
    )  # height, width
    parser.add_argument("-op", "--opset", type=int, default=12, help="opset version")
    opt = parser.parse_args()
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand
    set_logging()
    logging.info(opt)
    t = time.time()

    # Load PyTorch model
    device = select_device("cpu")
    model = attempt_load(opt.weights, map_location=device)  # load FP32 model
    labels = model.names

    # Checks
    gs = int(max(model.stride))  # grid size (max stride)
    opt.img_size = [
        check_img_size(x, gs) for x in opt.img_size
    ]  # verify img_size are gs-multiples

    # Input
    img = torch.zeros(1, 3, *opt.img_size).to(device)  # image size(1,3,320,320) iDetection

    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
    model.model[-1].concat = True
    m = model.module.model[-1] if hasattr(model, "module") else model.model[-1]
    num_branches = len(m.anchor_grid)
    y = model(img)  # dry run

    # ONNX export
    # defined outside the try block so the later steps can use it even if the export fails
    export_file = Path(opt.weights).with_suffix(".onnx")  # filename
    try:
        import onnx

        logging.info("\nStarting ONNX export with onnx %s..." % onnx.__version__)
        model.eval()
        output_names = ["classes", "boxes"] if y is None else ["output"]
        torch.onnx.export(
            model,
            img,
            export_file,
            verbose=False,
            opset_version=opt.opset,
            input_names=["images"],
            output_names=output_names,
        )

        # Checks
        onnx_model = onnx.load(export_file)  # load onnx model
        onnx.checker.check_model(onnx_model)  # check onnx model

        try:
            import onnxsim

            logging.info("\nStarting to simplify ONNX...")
            onnx_model, check = onnxsim.simplify(onnx_model)
            assert check, "assert check failed"
        except Exception as e:
            logging.warning(f"Simplifier failure: {e}")

        # add named sigmoids for pruning in OpenVINO
        conv_indices = []
        for i, n in enumerate(onnx_model.graph.node):
            if "Conv" in n.name:
                conv_indices.append(i)
        inputs = conv_indices[-num_branches:]
        for i, inp in enumerate(inputs):
            sigmoid = onnx.helper.make_node(
                "Sigmoid",
                inputs=[onnx_model.graph.node[inp].output[0]],
                outputs=[f"output{i+1}_yolov7"],
            )
            onnx_model.graph.node.append(sigmoid)

        onnx.checker.check_model(onnx_model)  # check onnx model
        onnx.save(onnx_model, export_file)
        logging.info("ONNX export success, saved as %s" % export_file)
    except Exception as e:
        logging.warning("ONNX export failure: %s" % e)

    # generate anchors and sides
    anchors, sides = [], []
    m = model.module.model[-1] if hasattr(model, "module") else model.model[-1]
    for i in range(num_branches):
        sides.append(int(opt.img_size[0] // m.stride[i]))
        for j in range(m.anchor_grid[i].size()[1]):
            anchors.extend(m.anchor_grid[i][0, j, 0, 0].numpy())
    anchors = [float(x) for x in anchors]

    # generate masks
    masks = dict()
    # for i, num in enumerate(sides[::-1]):
    for i, num in enumerate(sides):
        masks[f"side{num}"] = list(range(i * 3, i * 3 + 3))
    logging.info("\nanchors: %s" % anchors)
    logging.info("anchor_masks: %s" % masks)

    export_json = export_file.with_suffix(".json")
    export_json.write_text(
        json.dumps(
            {
                "anchors": anchors,
                "anchor_masks": masks,
                "coordinates": 4,
                "labels": labels,
                "num_classes": model.nc,
            },
            indent=4,
        )
    )
    logging.info("Anchors data export success, saved as %s" % export_json)

    # OpenVINO export
    logging.info("\nStarting to export OpenVINO...")
    export_dir = Path(str(export_file).replace(".onnx", "_openvino"))
    OpenVINO_cmd = (
        "mo --input_model %s --output_dir %s --data_type FP16 --scale 255 --reverse_input_channels --output 'output1_yolov7,output2_yolov7,output3_yolov7' "
        % (export_file, export_dir)
    )
    try:
        subprocess.check_output(OpenVINO_cmd, shell=True)
        logging.info("OpenVINO export success, saved as %s" % export_dir)
    except Exception as e:
        logging.info("OpenVINO export failure: %s" % e)
        logging.info("\nBy the way, you can try to export OpenVINO use:")
        logging.info("\n%s" % OpenVINO_cmd)

    # OAK Blob export
    logging.info("\nThen you can try to export blob use:")
    # use only the file name, since mo writes <stem>.xml directly into export_dir
    export_xml = export_dir / export_file.with_suffix(".xml").name
    export_blob = export_dir / export_file.with_suffix(".blob").name
    blob_cmd = (
        "compile_tool -m %s -ip U8 -d MYRIAD -VPU_NUMBER_OF_SHAVES 6 -VPU_NUMBER_OF_CMX_SLICES 6 -o %s"
        % (export_xml, export_blob)
    )
    logging.info("\n%s" % blob_cmd)

    # Finish
    logging.info("\nExport complete (%.2fs)." % (time.time() - t))
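The "add named sigmoids" step assumes that the last `num_branches` graph nodes whose name contains `Conv` are the detection-head convolutions, in P3/P4/P5 order, and attaches a Sigmoid named `output{i}_yolov7` to each. A pure-Python sketch of that selection, using a made-up list of node names in place of `onnx_model.graph.node` (both the names and the helper `pick_head_convs` are hypothetical):

```python
from typing import List, Tuple


def pick_head_convs(node_names: List[str], num_branches: int) -> List[Tuple[int, str]]:
    """Return (node index, new Sigmoid output name) for the last `num_branches` Conv nodes."""
    conv_indices = [i for i, name in enumerate(node_names) if "Conv" in name]
    heads = conv_indices[-num_branches:]
    return [(idx, f"output{i + 1}_yolov7") for i, idx in enumerate(heads)]


# Hypothetical node names standing in for onnx_model.graph.node[i].name
names = ["Conv_0", "Mul_1", "Conv_2", "Concat_3", "Conv_4", "Conv_5", "Conv_6", "Reshape_7"]
for idx, out in pick_head_convs(names, 3):
    print(idx, names[idx], "->", out)
```

Because the choice depends purely on node order, it is worth confirming in Netron that `output1_yolov7` really hangs off the stride-8 head after simplification.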

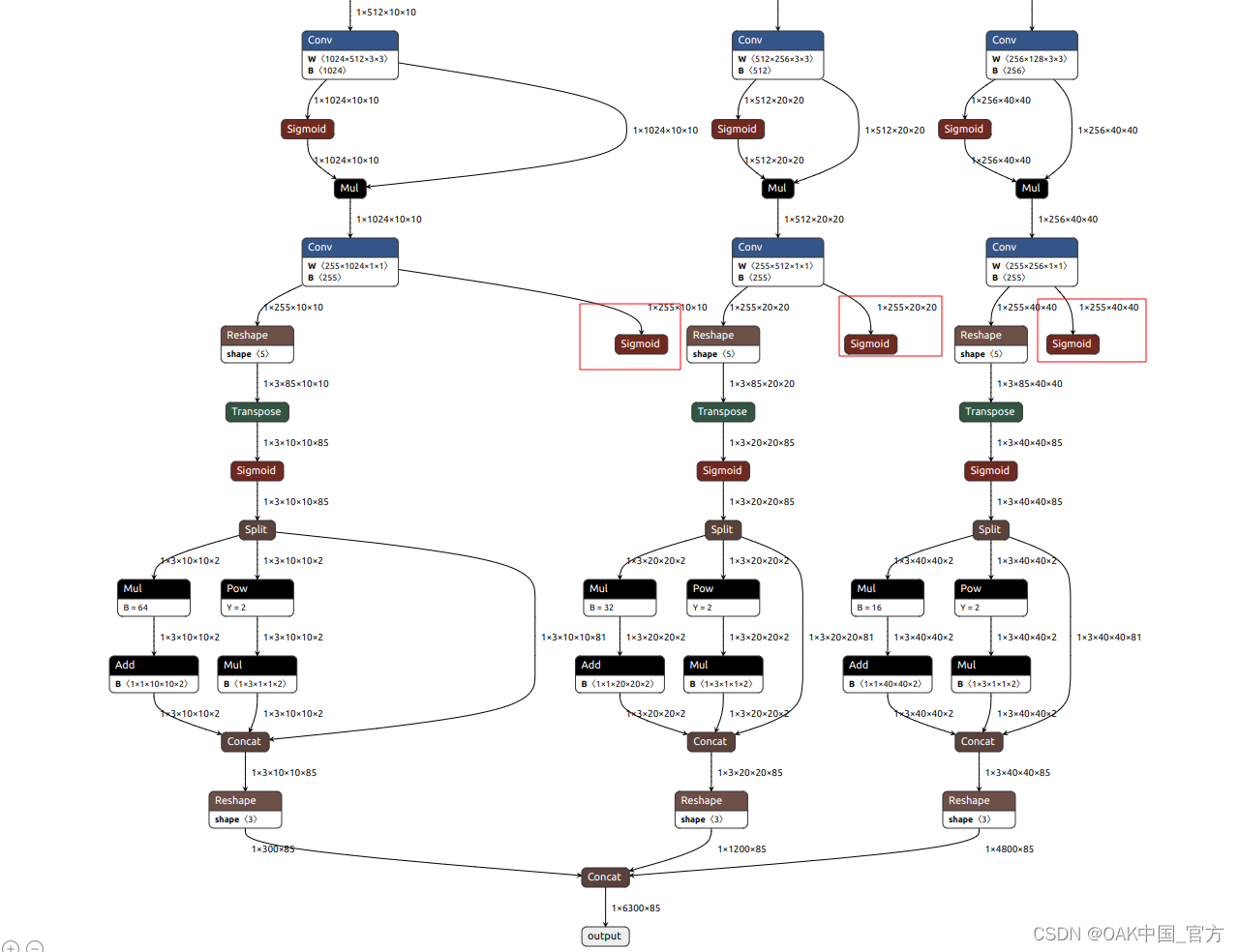

You can use Netron to inspect the model structure.

▌Conversion

Local conversion with OpenVINO

onnx -> openvino

mo is a script that ships with openvino_dev 2022.1.

Install it with:

pip install openvino-dev==2022.1.0

mo --input_model yolov7.onnx --scale 255 --reverse_input_channels

openvino -> blob

<path>/compile_tool -m yolov7.xml \

-ip U8 -d MYRIAD \

-VPU_NUMBER_OF_SHAVES 6 \

-VPU_NUMBER_OF_CMX_SLICES 6
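If you prefer to drive both steps from Python, passing argument lists to `subprocess.run` avoids the shell-quoting issues of the `shell=True` string used in export_onnx.py. A sketch that mirrors the flags used by that script; the helper `build_commands` and the output directory name are assumptions, and `compile_tool` may not be on your PATH (hence `<path>/compile_tool` above):

```python
import shlex
import shutil
import subprocess
from pathlib import Path


def build_commands(onnx_path: str, out_dir: str, shaves: int = 6):
    onnx_file = Path(onnx_path)
    out = Path(out_dir)
    # onnx -> OpenVINO IR, keeping only the three named Sigmoid outputs
    mo_cmd = [
        "mo", "--input_model", str(onnx_file), "--output_dir", str(out),
        "--data_type", "FP16", "--scale", "255", "--reverse_input_channels",
        "--output", "output1_yolov7,output2_yolov7,output3_yolov7",
    ]
    # mo writes <stem>.xml into out_dir, so only the file name is joined
    xml = out / onnx_file.with_suffix(".xml").name
    blob = out / onnx_file.with_suffix(".blob").name
    # OpenVINO IR -> MYRIAD blob
    compile_cmd = [
        "compile_tool", "-m", str(xml), "-ip", "U8", "-d", "MYRIAD",
        "-VPU_NUMBER_OF_SHAVES", str(shaves), "-VPU_NUMBER_OF_CMX_SLICES", str(shaves),
        "-o", str(blob),
    ]
    return mo_cmd, compile_cmd


mo_cmd, compile_cmd = build_commands("yolov7.onnx", "yolov7_openvino")
print(shlex.join(mo_cmd))
print(shlex.join(compile_cmd))

# Only run when the model exists and both tools are actually available
if Path("yolov7.onnx").exists() and shutil.which("mo") and shutil.which("compile_tool"):
    subprocess.run(mo_cmd, check=True)
    subprocess.run(compile_cmd, check=True)
```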

在线转换

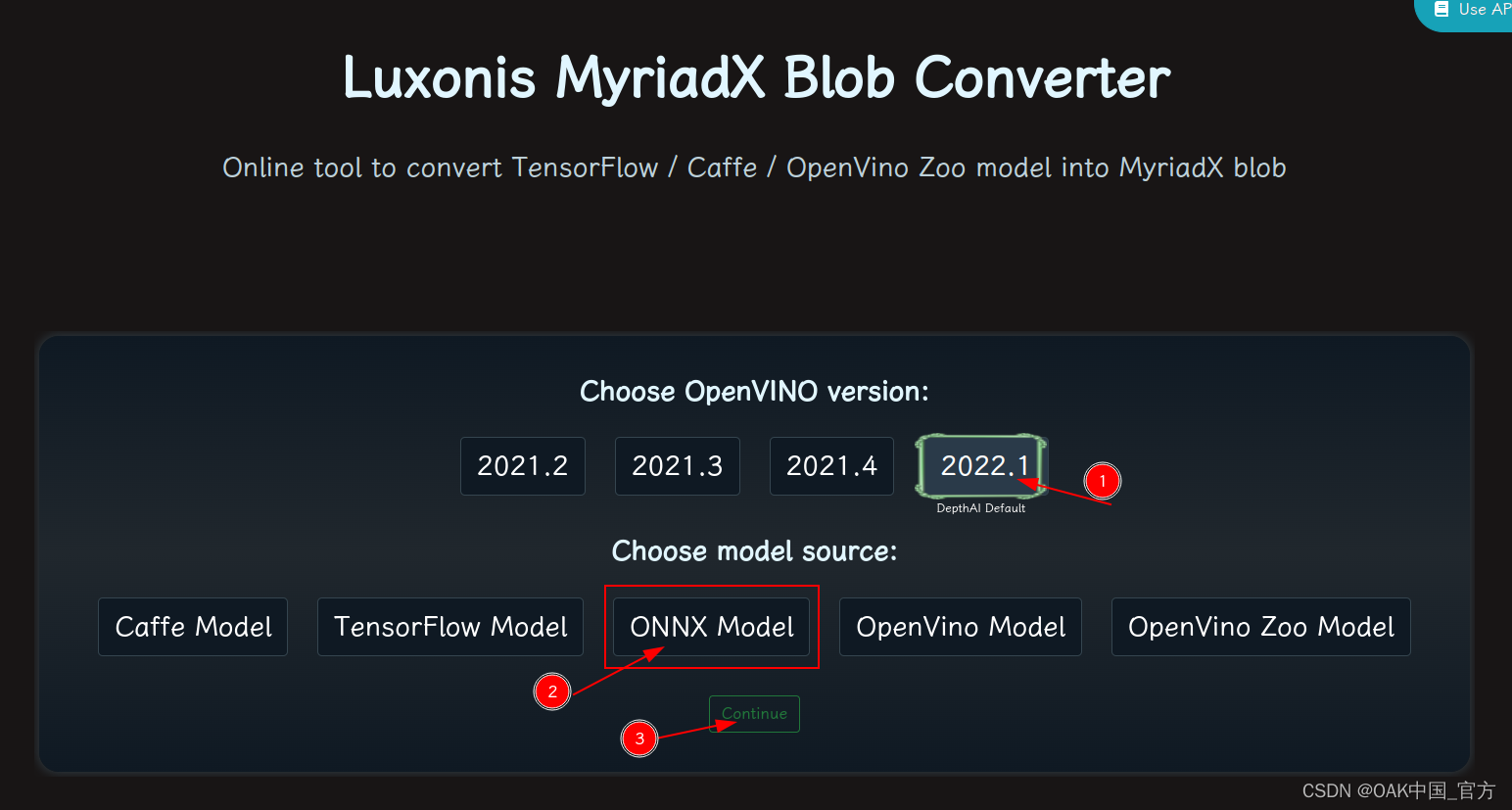

blobconvert 网页 http://blobconverter.luxonis.com/

- 进入网页,按下图指示操作:

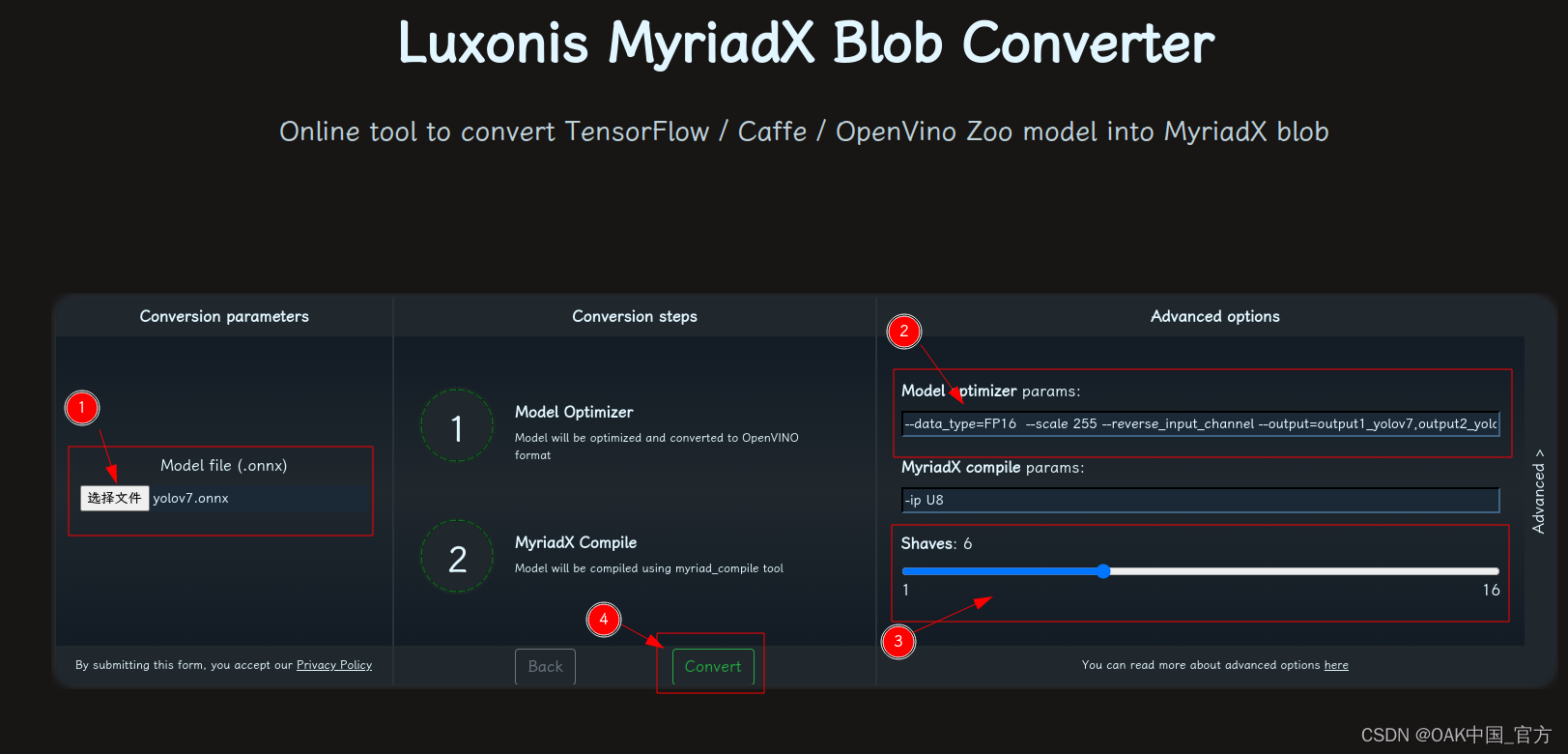

- Adjust the parameters and convert the model:

1. Select the onnx model
2. Set optimizer_params to --data_type=FP16 --scale 255 --reverse_input_channels
3. Set shaves to 6
4. Convert

Using the blobconverter Python package:

import blobconverter

blob_path = blobconverter.from_onnx(
    "yolov7.onnx",
    optimizer_params=[
        "--scale=255",
        "--reverse_input_channels",
    ],
    shaves=6,
)

blobconverter CLI

blobconverter --onnx yolov7.onnx -sh 6 -o . --optimizer-params "--scale=255 --reverse_input_channels"

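▌DepthAI example

Correct decoding requires configuring the following network-specific parameters.

When export_onnx.py converts the model, it writes these values to a JSON file; set the parameters below according to the data in that file:

- setNumClasses: the number of YOLO detection classes
- setIouThreshold: the IoU threshold
- setConfidenceThreshold: the confidence threshold; objects below it are filtered out
- setAnchors: the YOLO anchors
- setAnchorMasks: the anchor masks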
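To make the two thresholds concrete: detections below the confidence threshold are dropped, then among the survivors any box whose IoU with a higher-scoring kept box exceeds the IoU threshold is suppressed. DepthAI does this on the device; the sketch below is a simplified, class-agnostic greedy NMS for illustration only, not DepthAI's implementation (the helpers `iou` and `filter_detections` are hypothetical):

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax), normalized


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Tuple[Box, float]], conf_thr: float, iou_thr: float):
    # 1) drop low-confidence boxes (the role of setConfidenceThreshold)
    kept = sorted((d for d in dets if d[1] >= conf_thr), key=lambda d: d[1], reverse=True)
    # 2) greedy NMS: skip boxes overlapping an already kept box (the role of setIouThreshold)
    result = []
    for box, score in kept:
        if all(iou(box, k[0]) <= iou_thr for k in result):
            result.append((box, score))
    return result


dets = [
    ((0.10, 0.10, 0.50, 0.50), 0.9),
    ((0.12, 0.10, 0.50, 0.52), 0.8),  # near-duplicate of the first box
    ((0.60, 0.60, 0.90, 0.90), 0.7),
    ((0.00, 0.00, 0.20, 0.20), 0.3),  # below the confidence threshold
]
print(filter_detections(dets, conf_thr=0.5, iou_thr=0.5))
```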

Anchors:

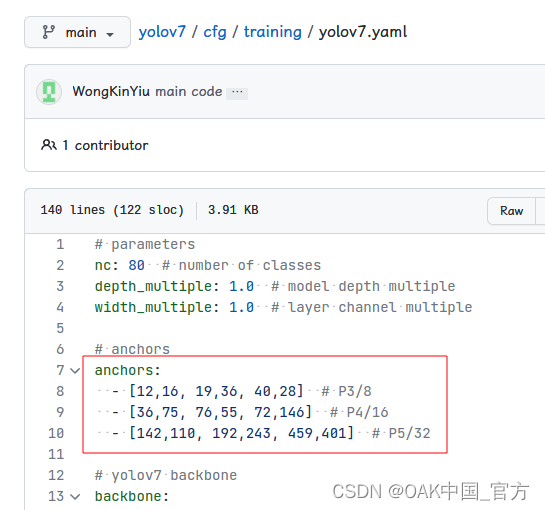

These are the anchors in the cfg used to train the model. For example:

[12,16, 19,36, 40,28, 36,75, 76,55, 72,146, 142,110, 192,243, 459,401] is taken from yolov7.yaml.
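The flat list holds nine (width, height) pairs in pixels, three per output branch, with the smallest anchors going to the stride-8 branch. A small sketch that regroups them, assuming three anchors per branch as in yolov7.yaml (the helper `group_anchors` is hypothetical):

```python
ANCHORS = [12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401]
STRIDES = [8, 16, 32]  # P3, P4, P5 output branches of YOLOv7


def group_anchors(flat, num_branches, per_branch=3):
    """Split a flat [w0, h0, w1, h1, ...] list into per-branch lists of (w, h) pairs."""
    pairs = [(flat[i], flat[i + 1]) for i in range(0, len(flat), 2)]
    if len(pairs) != num_branches * per_branch:
        raise ValueError("anchor count does not match the number of branches")
    return [pairs[b * per_branch:(b + 1) * per_branch] for b in range(num_branches)]


for stride, branch in zip(STRIDES, group_anchors(ANCHORS, len(STRIDES))):
    print(f"stride {stride}: {branch}")
```

Anchor index k in this flat pairing is what the anchor masks below refer to: indices 0 to 2 belong to the first branch, 3 to 5 to the second, and 6 to 8 to the third.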

AnchorMasks:

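If you use a different input width, you must also reset

sideX, sideY, sideZ, where X = width/8, Y = width/16, Z = width/32. If you are using a tiny model with only two output branches, set only sideX and sideY, where X = width/16 and Y = width/32.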
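Each side key is simply the feature-map size of one output branch, i.e. the input width divided by that branch's stride. A small sketch that computes the masks for any width, assuming three anchors per branch (the helper `anchor_masks` is hypothetical). Note that in Python 3, `"side%s" % (W/8)` yields `"side40.0"`, so integer division `//` is used to get `"side40"`:

```python
def anchor_masks(width: int, strides=(8, 16, 32), per_branch: int = 3) -> dict:
    """Map each branch's 'side<N>' key to the indices of its anchors."""
    return {
        f"side{width // s}": list(range(i * per_branch, (i + 1) * per_branch))
        for i, s in enumerate(strides)
    }


print(anchor_masks(320))                     # 3 branches: side40, side20, side10
print(anchor_masks(416, strides=(16, 32)))   # 2-branch tiny model: side26, side13
```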

import cv2
import depthai as dai
import numpy as np

model = dai.OpenVINO.Blob("yolov7.blob")
dim = model.networkInputs.get("images").dims
W, H = dim[:2]
labelMap = [
    # "class_1","class_2","..."
    "class_%s" % i for i in range(80)
]

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
camRgb = pipeline.create(dai.node.ColorCamera)
detectionNetwork = pipeline.create(dai.node.YoloDetectionNetwork)
xoutRgb = pipeline.create(dai.node.XLinkOut)
nnOut = pipeline.create(dai.node.XLinkOut)
xoutRgb.setStreamName("rgb")
nnOut.setStreamName("nn")

# Properties
camRgb.setPreviewSize(W, H)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
camRgb.setInterleaved(False)
camRgb.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)
camRgb.setFps(40)

# Network specific settings
detectionNetwork.setBlob(model)
detectionNetwork.setConfidenceThreshold(0.5)
detectionNetwork.setNumClasses(80)
detectionNetwork.setCoordinateSize(4)
detectionNetwork.setAnchors(
    [
        12,16, 19,36, 40,28,
        36,75, 76,55, 72,146,
        142,110, 192,243, 459,401
    ]
)
detectionNetwork.setAnchorMasks(
    {
        # integer division, so the keys are e.g. "side40" rather than "side40.0"
        "side%s" % (W // 8): [0, 1, 2],
        "side%s" % (W // 16): [3, 4, 5],
        "side%s" % (W // 32): [6, 7, 8],
    }
)
detectionNetwork.setIouThreshold(0.5)

# Linking
camRgb.preview.link(detectionNetwork.input)
camRgb.preview.link(xoutRgb.input)
detectionNetwork.out.link(nnOut.input)

# Connect to device and start pipeline
with dai.Device(pipeline) as device:
    # Output queues will be used to get the rgb frames and nn data from the outputs defined above
    qRgb = device.getOutputQueue(name="rgb", maxSize=4, blocking=False)
    qDet = device.getOutputQueue(name="nn", maxSize=4, blocking=False)
    frame = None
    detections = []
    color2 = (255, 255, 255)

    # nn data, being the bounding box locations, are in <0..1> range - they need to be normalized with frame width/height
    def frameNorm(frame, bbox):
        normVals = np.full(len(bbox), frame.shape[0])
        normVals[::2] = frame.shape[1]
        return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)

    def displayFrame(name, frame):
        color = (255, 0, 0)
        for detection in detections:
            bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
            cv2.putText(frame, labelMap[detection.label], (bbox[0] + 10, bbox[1] + 20), cv2.FONT_HERSHEY_TRIPLEX, 0.5, 255)
            cv2.putText(frame, f"{int(detection.confidence * 100)}%", (bbox[0] + 10, bbox[1] + 40), cv2.FONT_HERSHEY_TRIPLEX, 0.5, 255)
            cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)
        # Show the frame
        cv2.imshow(name, frame)

    while True:
        inRgb = qRgb.tryGet()
        inDet = qDet.tryGet()
        if inRgb is not None:
            frame = inRgb.getCvFrame()
        if inDet is not None:
            detections = inDet.detections
        if frame is not None:
            displayFrame("rgb", frame)
        if cv2.waitKey(1) == ord('q'):
            break
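Instead of hard-coding the anchors, masks and class count as in the example above, they can be read from the JSON file that export_onnx.py writes next to the ONNX model (keys `anchors`, `anchor_masks`, `coordinates`, `labels`, `num_classes`). A minimal sketch; the helper `load_yolo_config` is hypothetical, and the DepthAI calls are left as comments because they need `depthai` and a connected device:

```python
import json
from pathlib import Path


def load_yolo_config(json_path):
    """Read the decoding parameters written by export_onnx.py."""
    cfg = json.loads(Path(json_path).read_text())
    return {
        "num_classes": cfg["num_classes"],
        "coordinates": cfg["coordinates"],
        "anchors": [float(a) for a in cfg["anchors"]],
        "anchor_masks": cfg["anchor_masks"],
        "labels": cfg["labels"],
    }


# Applying it to the pipeline (requires depthai):
# cfg = load_yolo_config("yolov7.json")
# detectionNetwork.setNumClasses(cfg["num_classes"])
# detectionNetwork.setCoordinateSize(cfg["coordinates"])
# detectionNetwork.setAnchors(cfg["anchors"])
# detectionNetwork.setAnchorMasks(cfg["anchor_masks"])
# labelMap = cfg["labels"]
```

Since the JSON masks are computed from the same input size used at export, this also keeps the side keys in sync with the blob.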

▌References

https://www.oakchina.cn/2023/02/23/yolov7-blob/

https://docs.oakchina.cn/en/latest/

https://www.oakchina.cn/selection-guide/

OAK China

| Official distributor and technical service provider of OpenCV AI Kit in China

| Tracking the latest AI technology and product news
