— k8s basic —

安装版本信息查询

命令行自动补全功能设置

01. Namespaces and Pods

02. Assigning Pods to Nodes

03. Basic Deployments

04. Advanced Deployment

05. Jobs, Init Containers and Cron Jobs

06. Manage DaemonSets

07. Manage Services

08. Manage Ingress

09. Persistent Volumes

10. ConfigMaps

11. Secrets

12. Debug Problems

CKA environment based practise

—contents—

Playground

Vim Setup

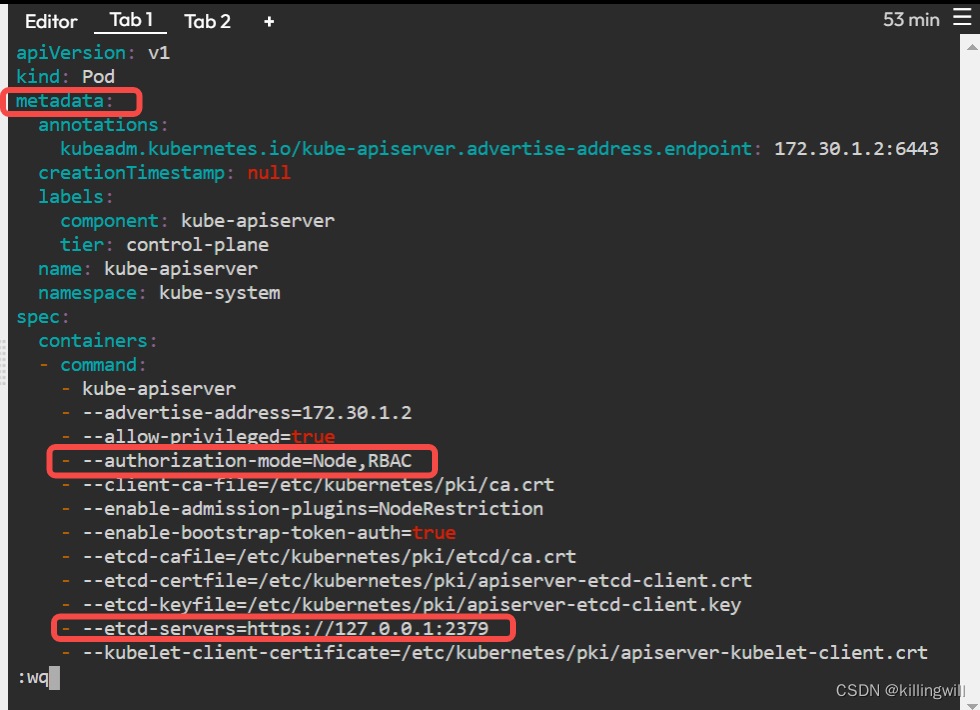

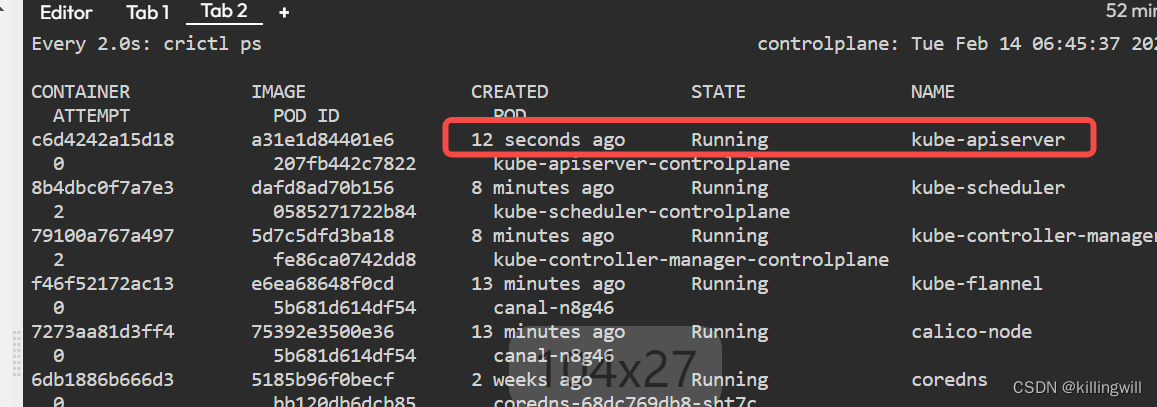

Apiserver Crash

Apiserver Misconfigured

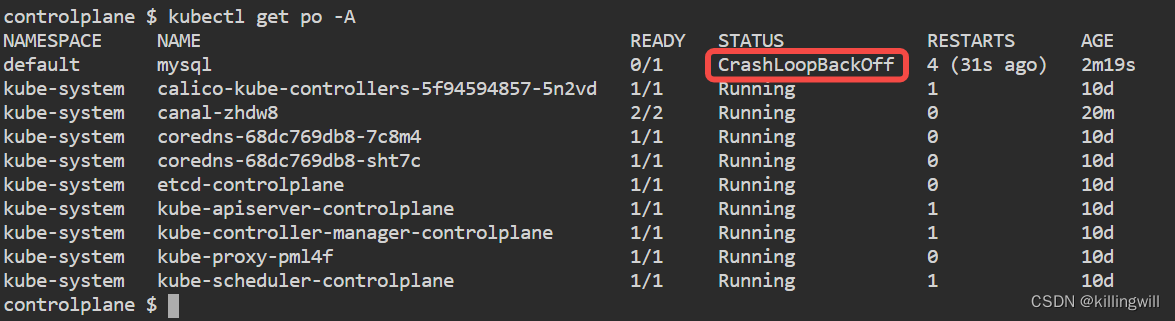

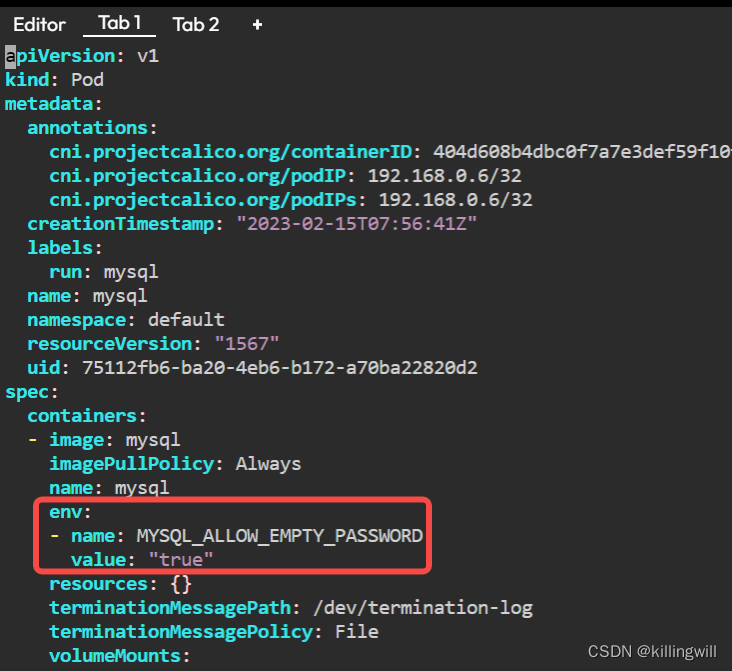

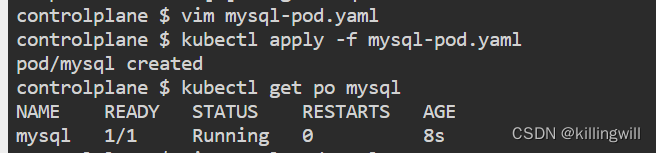

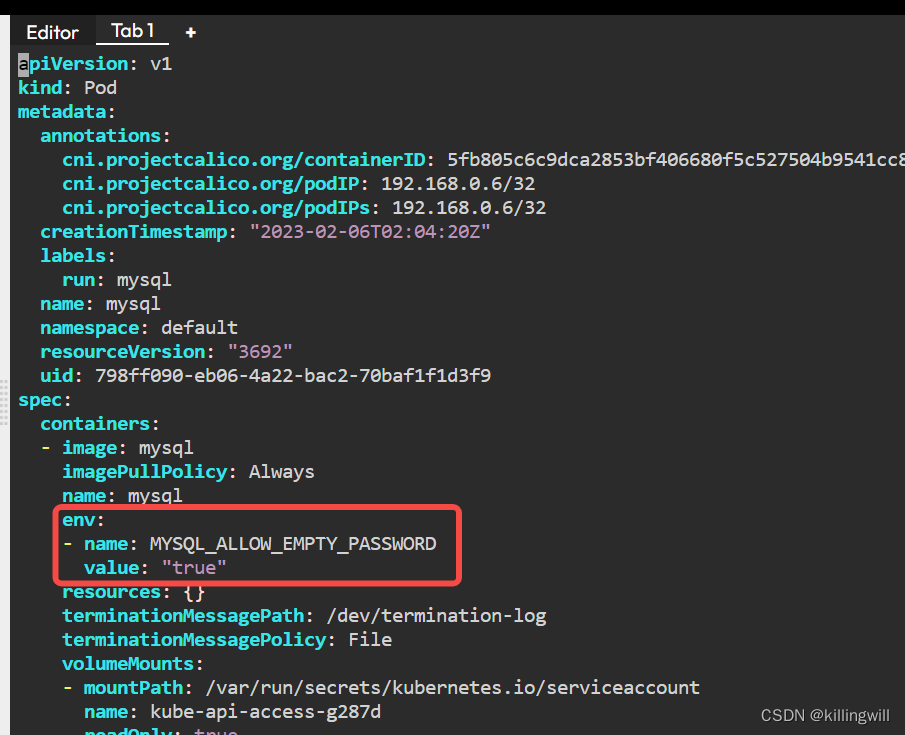

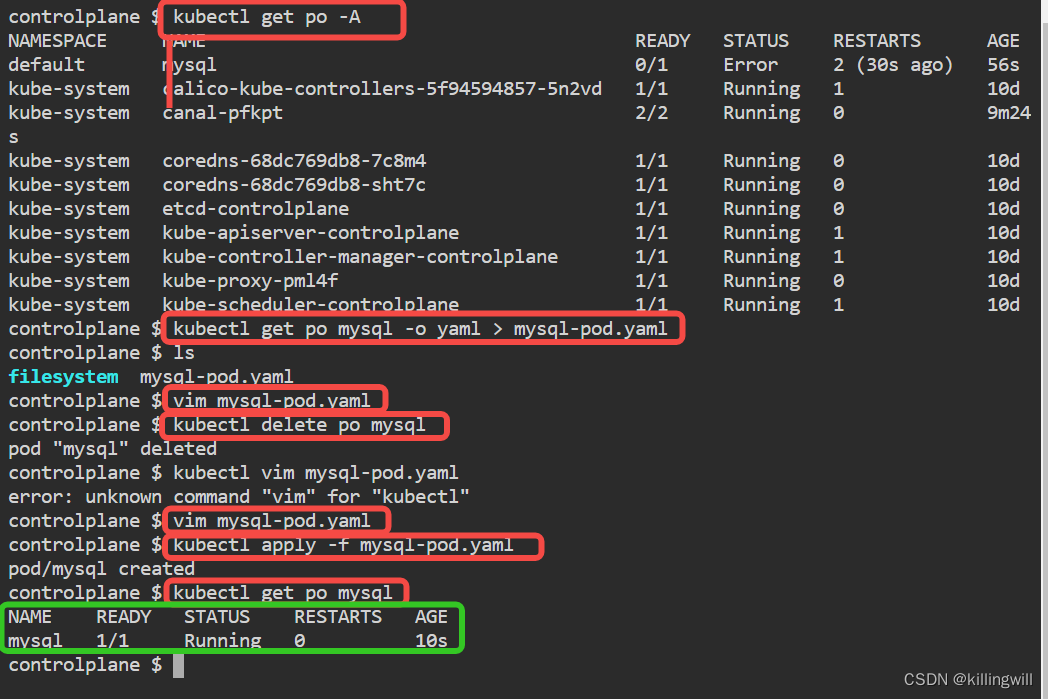

Application Misconfigured

Application Multi Container Issue

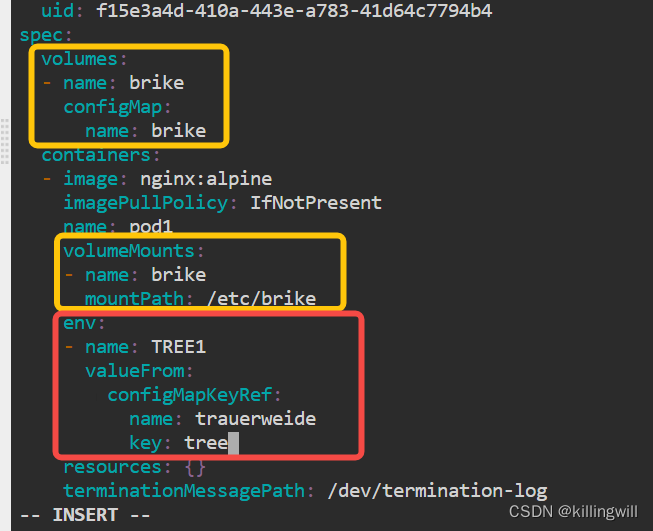

ConfigMap Access in Pods

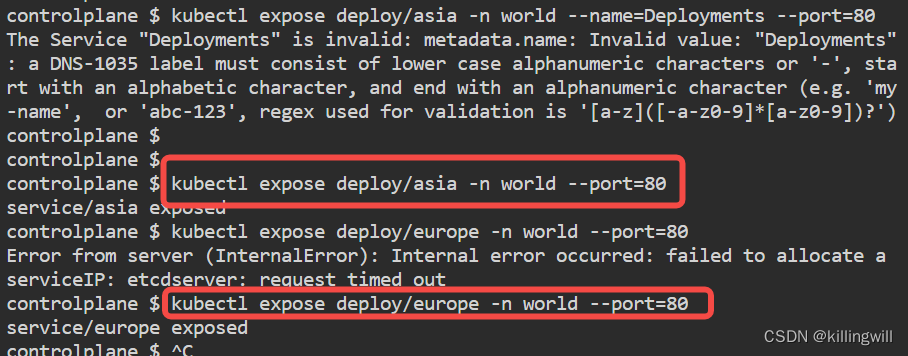

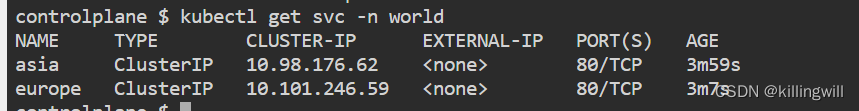

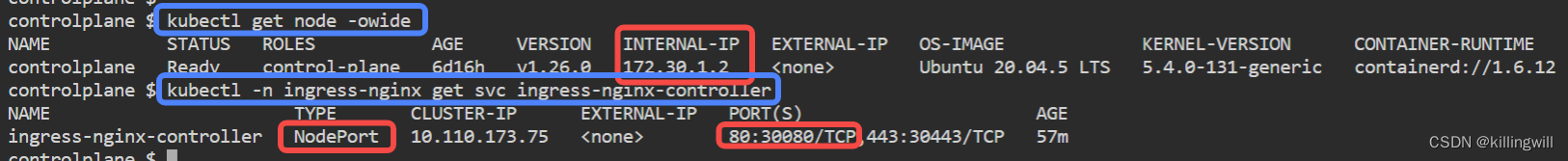

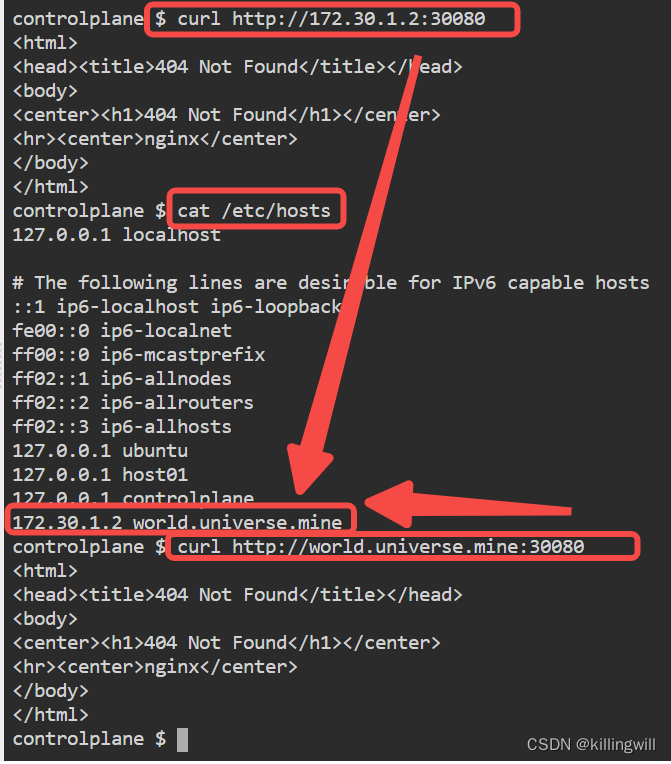

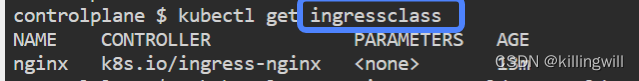

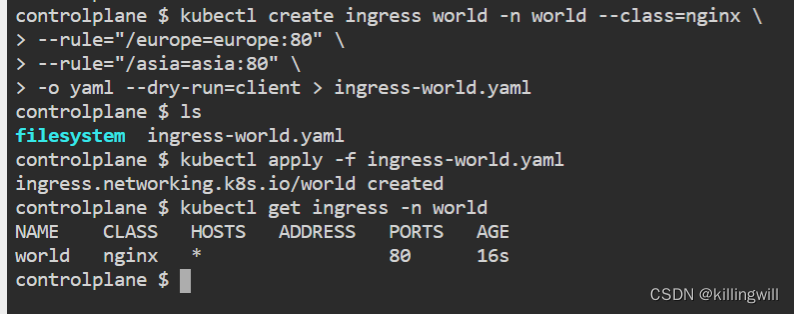

Ingress Create

NetworkPolicy Namespace Selector

RBAC ServiceAccount Permissions

RBAC User Permissions

Scheduling Priority

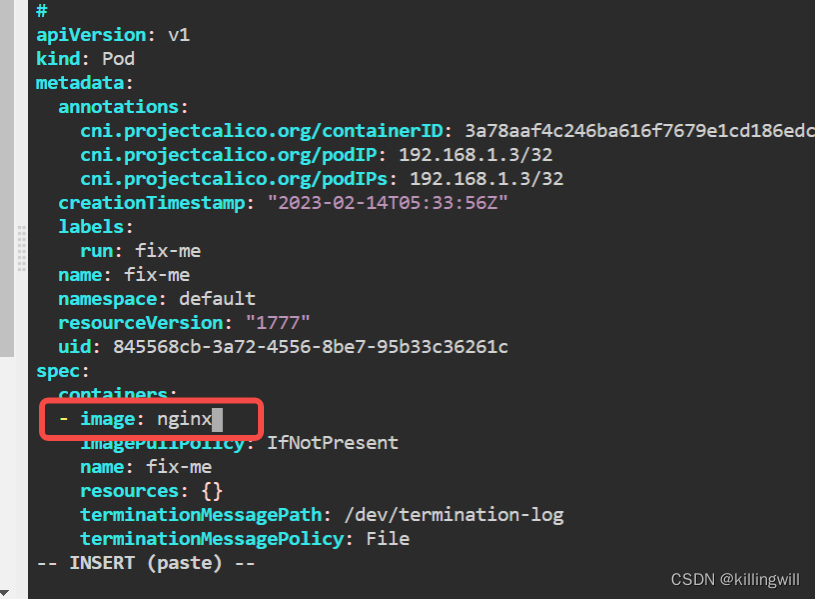

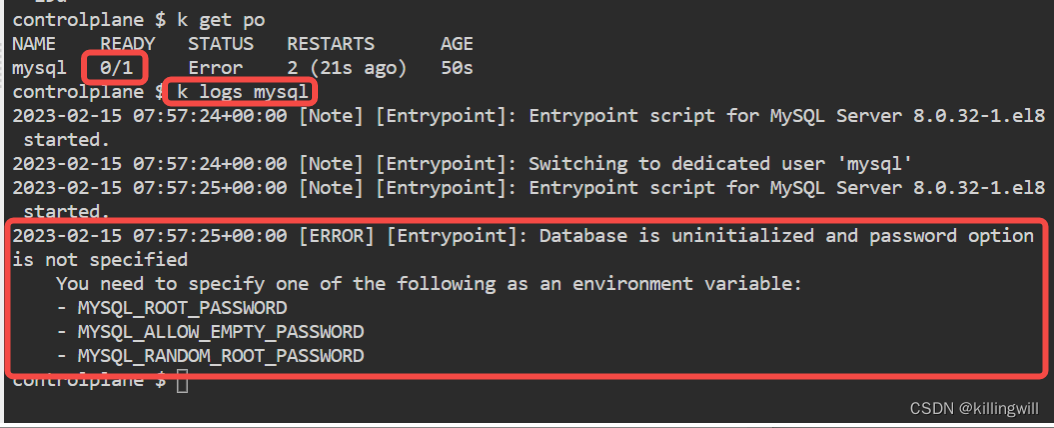

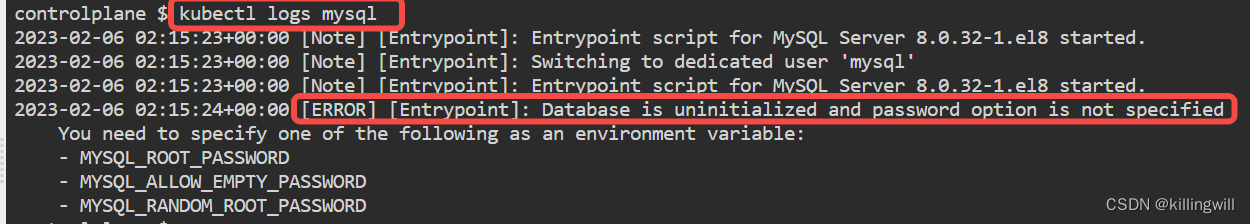

k8s trouble shooting CTF: K8s trouble shooting simple pod errors

Crash Bang Wallop

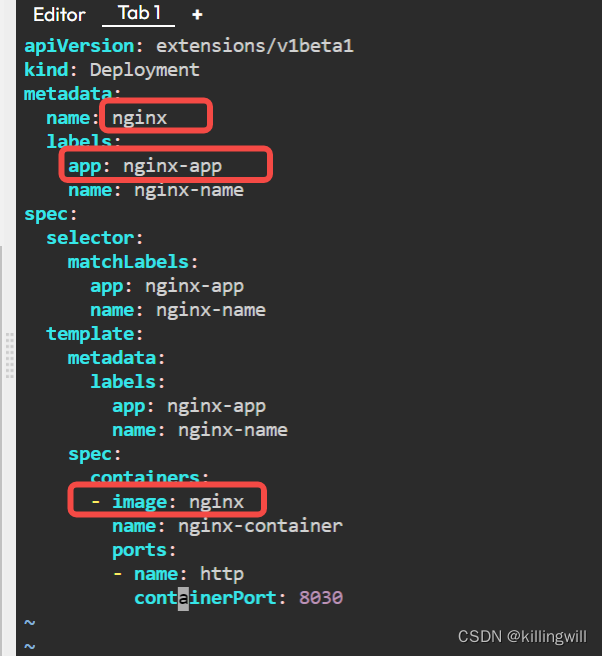

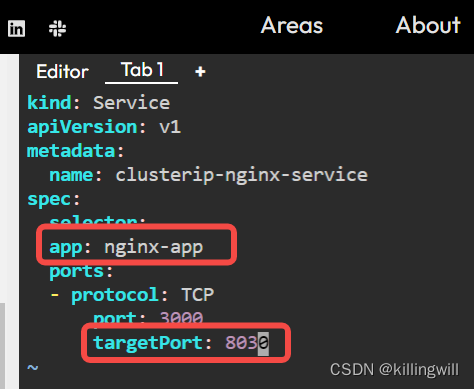

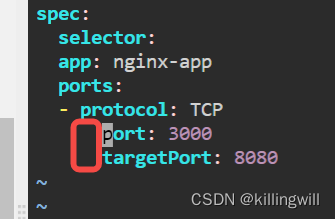

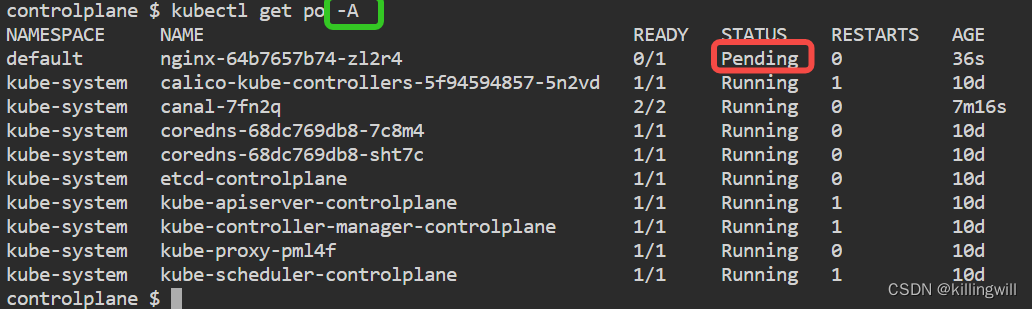

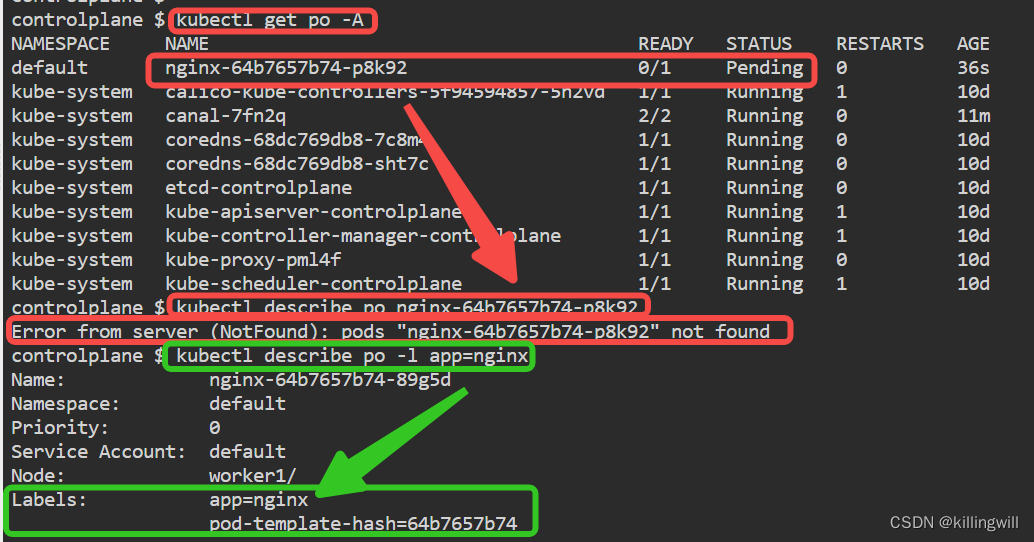

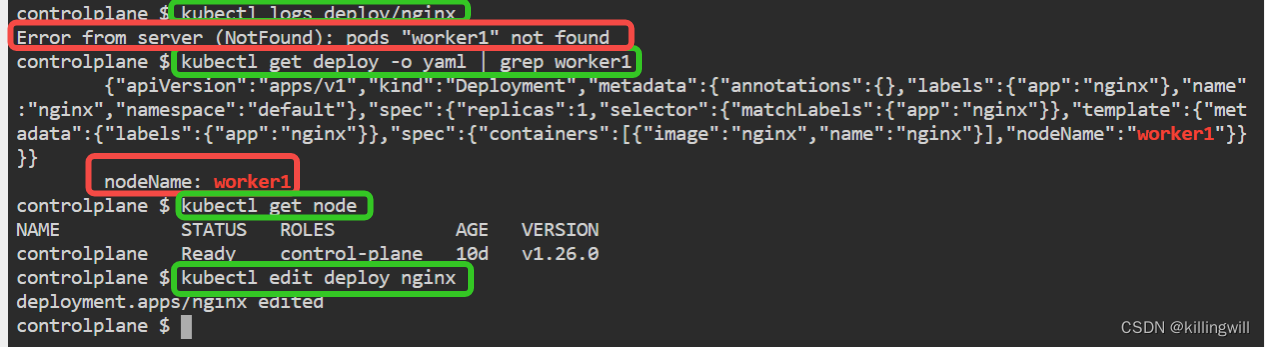

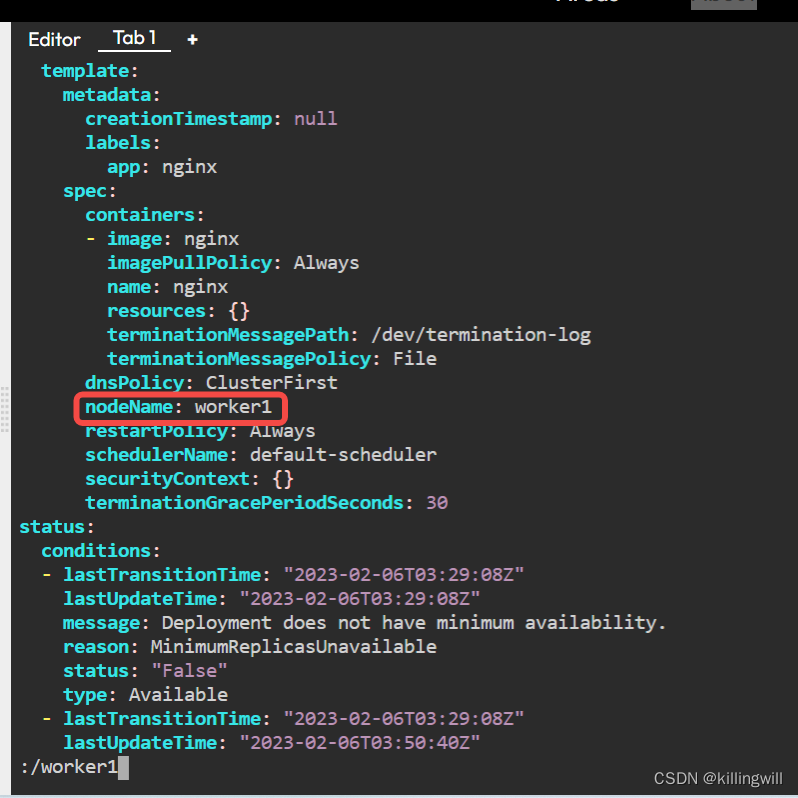

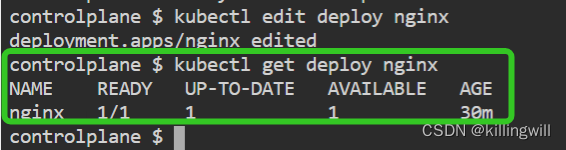

Fixing Deployments

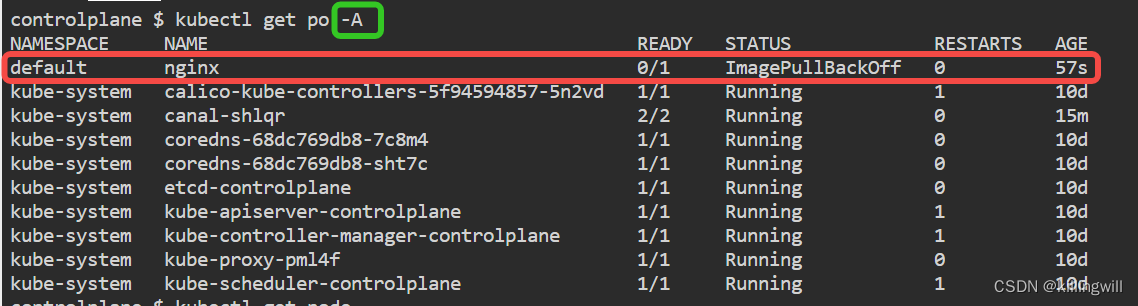

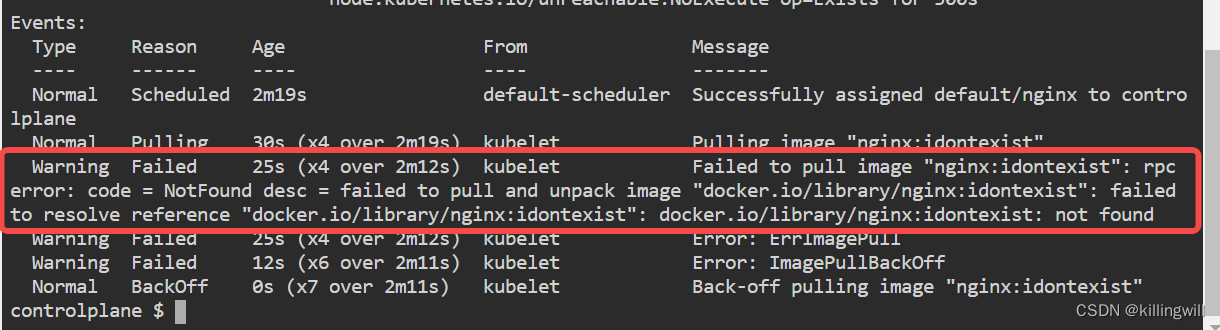

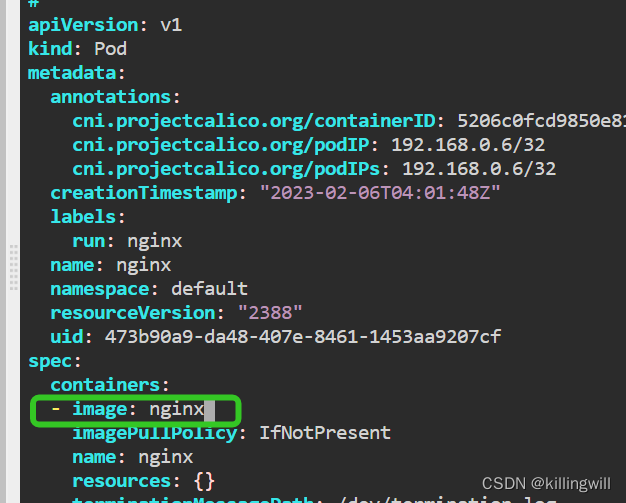

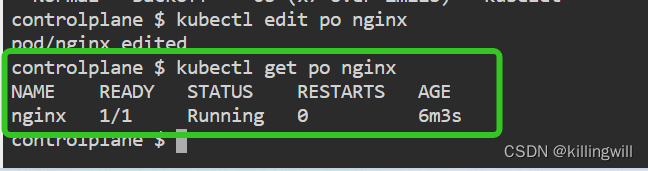

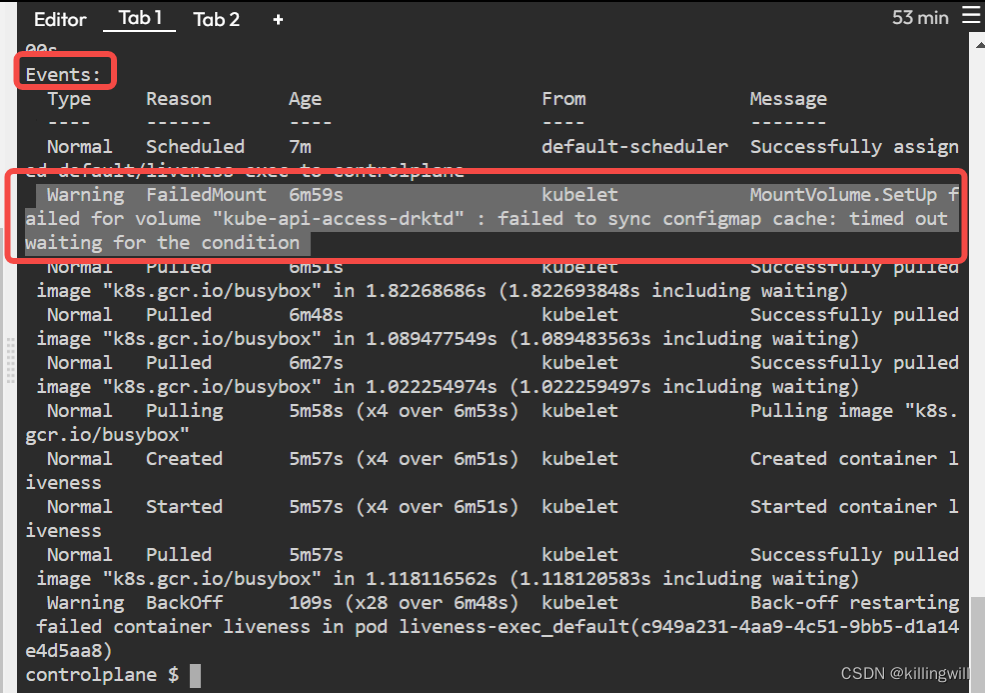

Image issues

Under Construction

Cloud Foundry:

try cloud foundry on k8s

this tutorial provides a brief introduction to deploying applications to Kubernetes via the Cloud Foundry platform.

⭕️online practise:

https://killercoda.com/

NOTE: 如下CKA考试界面在用谷歌浏览器打开的界面上不显示,在edge浏览器中才显示

https://killercoda.com/killer-shell-cka

https://killercoda.com/playgrounds

https://killercoda.com/spcloud/course/kubernetes/

😮 😮 😮

⭕️ 安装版本信息查询

kubectl version [-h|--help]- 简单集群版本信息获取

kubectl version [--short]

NOTE:kubectl versioninformation is deprecated and will be replaced with the output fromkubectl version --short

Flag --short has been deprecated, and will be removed in the future. The --short output will become the default.

- 详细版本信息获取

kubectl version --output=yaml|json

Use --output=yaml|json to get the full version.

⭕️ 集群配置信息获取及安装问题排查

kubectl cluster-info [-h|--help]- 简单集群配置信息获取

kubectl cluster-info

Display addresses of the control plane and services with label kubernetes.io/cluster-service=true.

- 进一步调试诊断集群问题

kubectl cluster-info dump > cluster-info.json

To further debug and diagnose cluster

problems, use ‘kubectl cluster-info dump’.

⭕️ 命令行自动补全功能设置

kubectl提供命令行自动补全功能支持,可选择如下任意一种:

-

bash

1.test if you have bash-completion already installed:type _init_completion

2.if not,then install bash-completion:

Debian os(debian|ubuntu|kali):apt-get install bash-completion

RHLE os(redhat|centos):yum install bash-completion

3.manually source this file in your ~/.bashrc file:source /usr/share/bash-completion/bash_completion

NOTE:export, source命令说明

4.reload your shell and verify that bash-completion is correctly installed:type _init_completion

5.enable kubectl autocompletion bash:

ensure that the kubectl completion script gets sourced in all your shell sessions.

There are two ways in which you can do this:

User:echo 'source <(kubectl completion bash)' >>~/.bashrc #if you have an alias for kubectl, extend shell completion with that alias: echo 'alias k=kubectl' >>~/.bashrc echo 'complete -o default -F __start_kubectl k' >>~/.bashrc #two approaches are equivalent, enable bash autocompletion in current session exec bash NOTE:bash-completion sources all completion scripts in /etc/bas_completion.dSystem:

kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null #if you have an alias for kubectl, extend shell completion with that alias: echo 'alias k=kubectl' >>~/.bashrc echo 'complete -o default -F __start_kubectl k' >>~/.bashrc #two approaches are equivalent, enable bash autocompletion in current session exec bash NOTE:bash-completion sources all completion scripts in /etc/bas_completion.d -

fish

autocomplete for fish requires kubectl 1.23 or later.

1.generate completion script for fish:kubectl completion fish

2.souring the completion script in your shell enable kubectl autocompletion:

to do so in all your shell sessions, add the following line to your ~/.config/fish/config.fish file:

kubectl completion fish | source -

zsh

1.generate completion script for zsh:

kubectl completion zsh

2.souring the completion script in your shell enable kubectl autocompletion:

to do so in all your shell sessions, add the following line to your ~/.zshrc file:

source <(kubectl completion zsh)

01. Namespaces and Pods

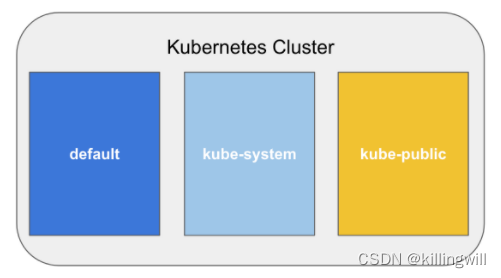

ns:资源隔离

kubectl get ns

k8s predefined namespaces:

-

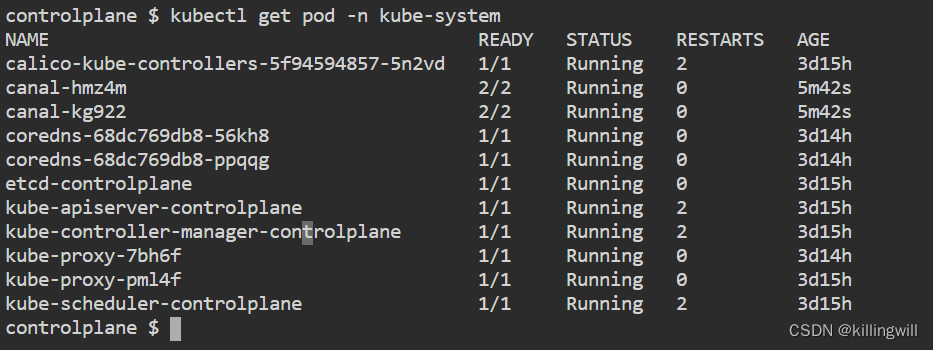

kube-system:has objects created by k8s system.

kubectl get po -n kube-system

-

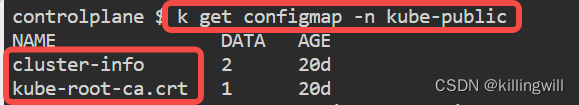

kube-public:has a ConfigMap which contains the bootstrapping and certificate configuration for k8s cluster. visible throught the whloe cluster.

kubectl get po -n kube-public

nothing you will see, but we can see a cluster-info ConfigMap:

kubectl -n kube-public get configmap

kubectl get configmap cluster-info -n kube-public -o yaml

in addition, this ns can be treated as a location used to run an object which should be visible and readable throught the whole cluster.

- default:all objects created without specifying a ns will automatically be created in the default ns, and default ns can not be deleted.

kubectl get po

kubectl get po -n default

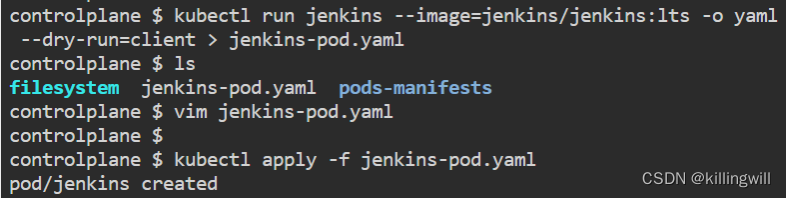

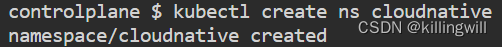

practise:

kubectl create ns cloudnative

#使用kubectl run <podName> --image -oyaml --dry-run > outputfilename.yaml 快速创建一个pod的yaml文件

kubectl run jenkins --image=jenkins/jenkins:lts -o yaml --dry-run=client > jenkins-pod.yaml

ls

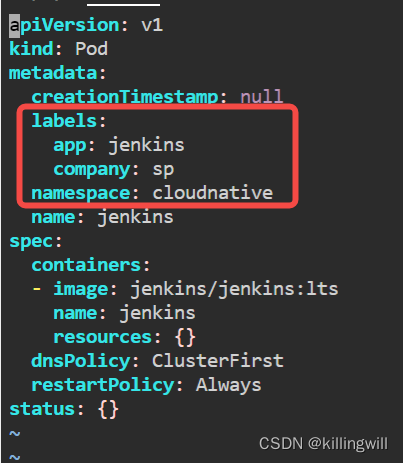

vim jenkins-pod.yaml

kubectl apply -f jenkins-pod.yaml

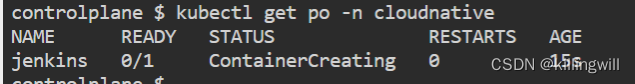

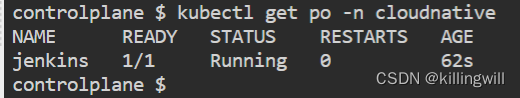

kubectl get po jenkins -n cloudnative

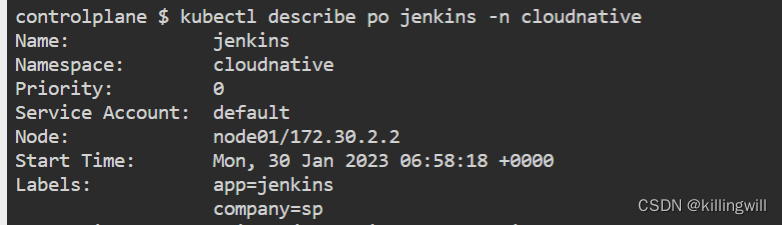

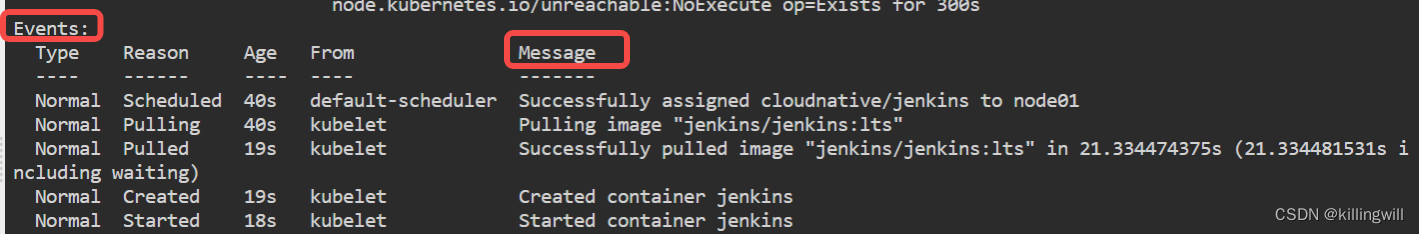

kubectl describe po jenkins -n cloudnative

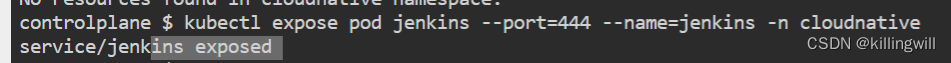

#使用kubectl expose pod <podname> -n <podnamespace> --port=<serviceport> --name=<servicename>

#快速将一个pod暴露为服务

kubectl expose po jenkins -n cloudnative --port=444 --name=jenkins

kubectl get svc -n cloudnative

A. Create namespace: cloudnative

B. Create a pod with the following constraints:

Pod name: jenkins

- Deploy pod in a cloudnative namespace

- Add additional labels:

app: jenkins

company: sp- Use this container image: jenkins/jenkins:lts

C. [Optional] Expose the pod using a service and check if Jenkins is running successfully

02. Assigning Pods to Nodes

# 查询所有ns下的po,使用-owide选项可看到该pod位于那个node上

kubectl get po -A -owide

k8s scheduler automatically assigns pods to nodes.

if you want to decide this, there are two ways:

- 节点选择器:

nodeSeletor

将pod调度到具有特定标签的节点上。

若满足pod的nodeSelector的k-v条件在任意node均不存在,则该pod调度失败。 - 亲和性|反亲和性:

Affinity|Anti-Affinity

上述两点都是通过标签labels及标签选择器selector实现。

https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/

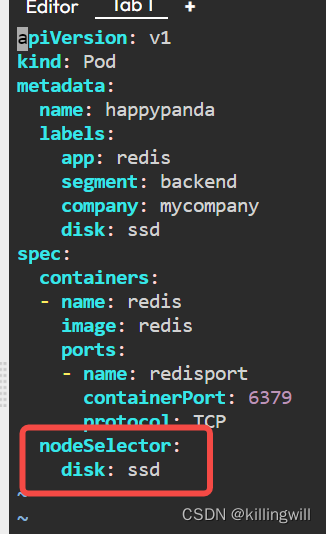

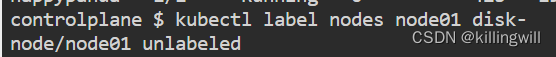

task:schedule happypanda pod to the node01 node by using nodeSelector(disk=ssd)

- discover node labels

kubectl get nodes --show-labels- add a new node label

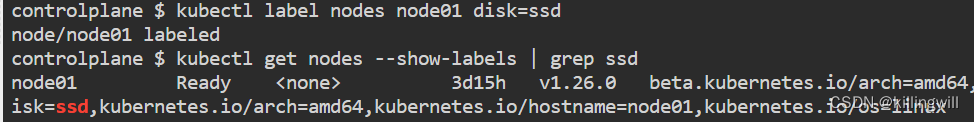

kubectl label nodes node01 disk=ssd

kubectl get nodes node01 --show-labels | grep ssd

- assign the happypanda pod to node01, matching disk:ssd label

cat /manifests/pod-nodeselector.yaml

kubectl apply -f /manifests/pod-nodeselector.yaml

- verify pod has been successfully scheduled on the node01 node:

kubectl get po -o wide

- delete pod

kubectl delete -f /manifests/pod-nodeselector.yaml

or

kubectl delete pod happypanda- delete label

kubectl label node node01 disk-

亲和性:

- NodeAffinity:schedule pods on specific nodes.

- PodAffinity: run multiple pods on specific nodes.

Node Affinity:

spec.affinity.nodeAffinity.preferredDuringSchedulingIgnoredDuringExecution: Soft NodeAffinity and Anti-Affinity.

if the node label exists: the pod will run there.

if not: the pod will be rescheduled elsewhere within the cluster.

# node-soft-affinity.yaml

# 使用preferred(soft) + In operator(affinity)

apiVersion: v1

kind: Pod

metadata:

name: happypanda

labels:

app: redis

segment: backend

company: mycompany

disk: ssd

spec:

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 1

preference:

matchExpressions:

- key: fruit

operator: In

values:

- apple

containers:

- name: redis

image: redis

ports:

- name: redisport

containerPort: 6379

protocol: TCP

# node-soft-anti-affinity.yaml

# 使用preferred(soft) + NotIn operator(anti-affinity)

apiVersion: v1

kind: Pod

metadata:

name: happypanda

labels:

app: redis

segment: backend

company: mycompany

disk: ssd

spec:

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 1

preference:

matchExpressions:

- key: fruit

perator: NotIn

values:

- apple

containers:

- name: redis

image: redis

ports:

- name: redisport

containerPort: 6379

protocol: TCP

spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution: Hard NodeAffinity and Anti-Affinity.

if the node label does not exist, the pod won`t be scheduled at all.

# node-hard-affinity.yaml

# 使用required(hard) + In operator(affinity)

apiVersion: v1

kind: Pod

metadata:

name: happypanda

labels:

app: redis

segment: backend

company: mycompany

disk: ssd

spec:

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 1

preference:

matchExpressions:

- key: fruit

operator: In

values:

- apple

containers:

- name: redis

image: redis

ports:

- name: redisport

containerPort: 6379

protocol: TCP

#node-hard-anti-affinity.yaml

#使用required(hard) + NotIn operator(anti-affinity)

apiVersion: v1

kind: Pod

metadata:

name: happypanda

labels:

app: redis

segment: backend

company: mycompany

disk: ssd

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: fruit

operator: NotIn

values:

- apple

containers:

- name: redis

image: redis

ports:

- name: redisport

containerPort: 6379

protocol: TCP

Pod Affinity:

spec.affinity.podAffinity.preferredDuringSchedulingIgnoredDuringExecution: Soft Pod Affinity.

if the preferred option is available: the pod will run there.

if not: the pod scheduled any where.spec.affinity.podAffinity.requiredDuringSchedulingIgnoredDuringExecution: Hard Pod Affinity.

if the required option is not available: the pod cannot run.

# pod-hard-affinity.yaml

# if none of the nodes are labelled with fruit=apple, then the pod won`t be scheduled.

apiVersion: v1

kind: Pod

metadata:

name: happypanda

labels:

app: redis

segment: backend

company: mycompany

disk: ssd

spec:

affinity:

podAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: fruit

operator: In

values:

- apple

topologyKey: kubernetes.io/hostname

containers:

- name: redis

image: redis

ports:

- name: redisport

containerPort: 6379

protocol: TCP

Pod Anti-Affinity:

spec.affinity.podAntiAffinity.perferredDuringSchedulingIgnoredDuringExecution: Soft Pod Anti-Affinity.spec.affinity.podAntiAffinity.requiredDuringSchedulingIgnoredDuringExecution: Hard Pod Anti-Affinity.

pod anti-affinity works the opposite way of pod affinity.

If one if the nodes has a pod running with label fruit=apple, the pod will be scheduled on different node.

03. Basic Deployments

Tip --dry-run with -o=yaml is an excellent way to generate configurations!

# 使用yaml的输出形式可看到输出为一个items列表

kubectl get po -o yaml

kubectl describe po $(kubectl get po --no-headers=true|awk '{print $1}')

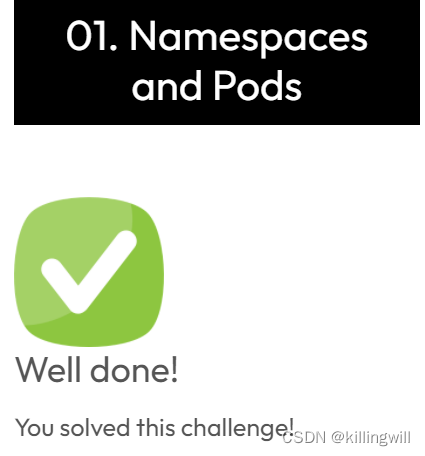

# Job Logs:

# 1.in order to see the job`s log we need get the name of the job.

# jsonpath的路径参考-o为yaml时的输出形式

kubectl get pods -o 'jsonpath={.items[0].metadata.name}'; echo

# 2.the execute the following command to get the logs:

kubectl logs `kubectl get po -o 'jsonpath={.items[0].metadata.name'`

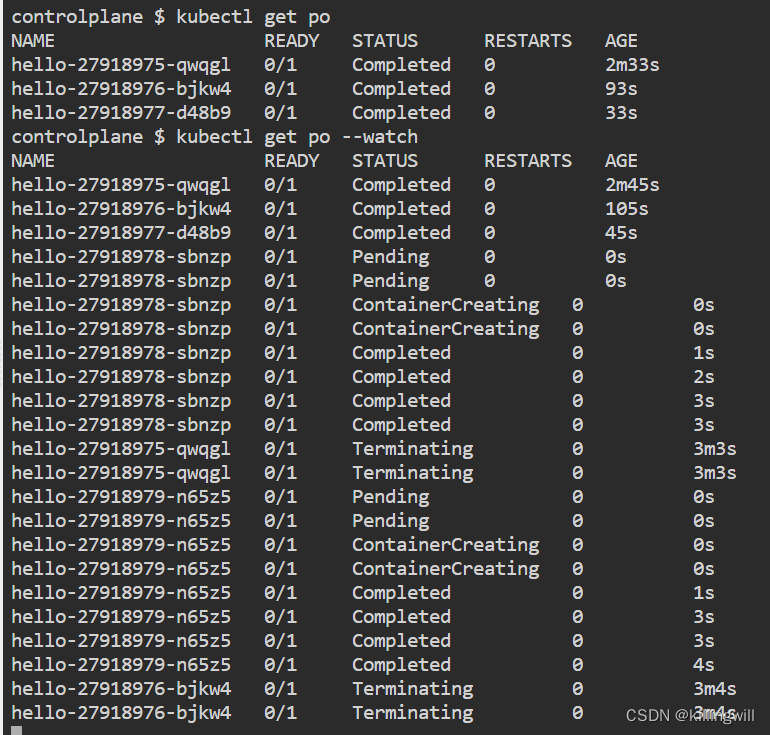

kubectl get cronjob --watch

kubectl get po --watch

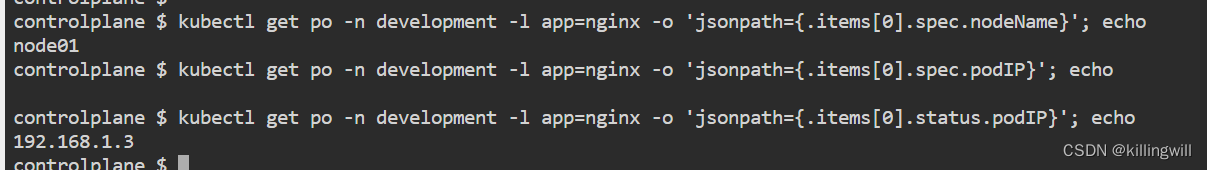

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].spec.nodeName}'; echo

kubectl get po -n deployment -l app=nginx -o 'jsonpath={.items[0].status.podIP}'; echo

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].spec.nodeName}'; echo

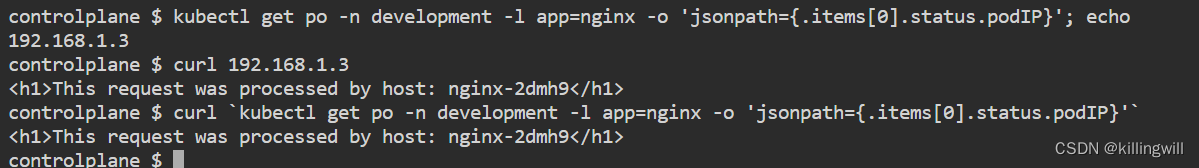

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].status.podIP}'; echo

curl <podIP>

curl `kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].status.podIP}'`

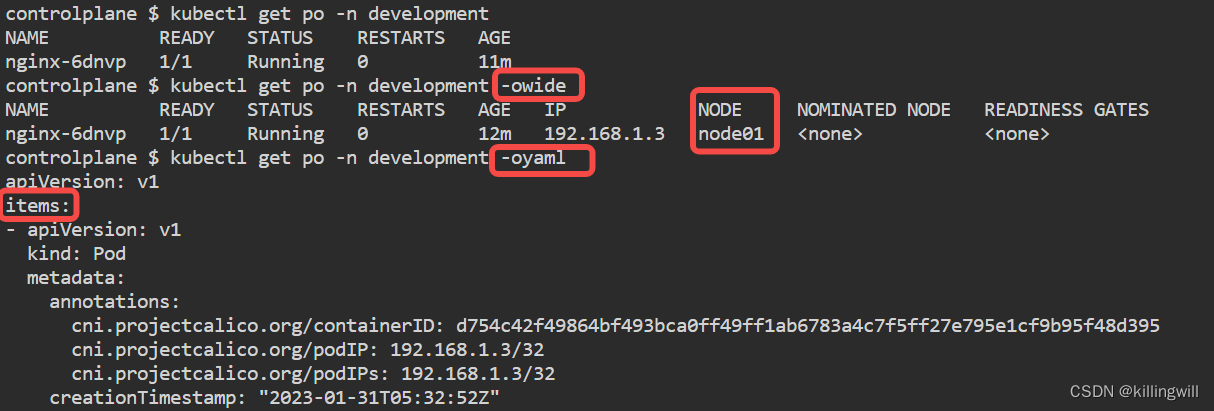

kubectl get po -n <nsname>

kubectl get po -n <nsname> -owide

kubectl get po -n <nsname> -oyaml

kubectl get po -n <nsname> --no-headers=true | awk '{print $1}'; echo

kubectl get po -n <nsname> -l <k=v>

kubectl get po --show-labels

04. Advanced Deployment

The goal of this Lab is to explain Kubernetes deployments.

This labs will explain the concept of ReplicaSets, Deployments, and have us create multiple Deployments using different strategies.

ReplicaSets

Before we work with Deployments, we’ll go over the basics of ReplicaSets. A ReplicaSet will ensure that the desired number of replicas of each Pod are up and running. Any time a Pod goes down, the ReplicaSet will deploy a new one to maintain high availability.

Now inspect the file ./resources/tutum-rs.yaml . It should look familiar to the Pod resource. We do have a few additions. These additions are what configure our ReplicaSet.

apiVersion: apps/v1

kind: ReplicaSet

metadata:

name: tutum-rs

labels:

app: tutum

spec:

replicas: 3

selector:

matchLabels:

app: tutum

template:

metadata:

labels:

app: tutum

spec:

containers:

- name: tutum

image: tutum/hello-world

ports:

- containerPort: 80

The biggest additions are replicas: 3 and selector . The first component configures the total number of replicas of the Pod should be active at all times. The selector matches a set of constraints to identify Pods to represent. In this case, the ReplicaSet will track Pods with the label app=tutum .

We can deploy this ReplicaSet the same way we did Pods:

kubectl create -f ./resources/tutum-rs.yaml

Now watch Kubernets create 3 tutum Pods based on the specification in the tutum-rs.yaml file.

kubectl get po --watch

Wait for the pods to be created. You can press CTRL-C to stop watching.

Inspect the ReplicaSet .

NOTE: rs is shorthand for ReplicaSet

kubectl describe rs tutum-rs

Now modify the ReplicaSet to instantiate 5 pods by changing the replicas: 3 value.

kubectl edit rs tutum-rs

With edit , you can live edit the configuration of the resource in Kubernetes. However, it will not edit the underlying Manifest file representing the object.

Scaling

In the last step you scaled up the tutum-rs ReplicaSet to 5 pods by editing the spec file. Those changes were automatically applied.

Manual Scaling

To manually scale a ReplicaSet up or down, use the scale command. Scale the tutum pods down to 2 with the command:

kubectl scale rs tutum-rs --replicas=2

You can verify that 3 of the 5 tutum instances have been terminated:

kubectl get pods

or watch them until they finish

kubectl get po --watch

Of course, the ideal way to do this is to update our Manifest to reflect these changes.

AutoScaling

Kubernetes provides native autoscaling of your Pods. However, kube-scheduler might not be able to schedule additional Pods if your cluster is under high load. In addition, if you have a limited set of compute resources, autoscaling Pods can have severe consequences, unless your worker nodes can automatically scale as well (e.g. AWS autoscaling groups).

apiVersion: autoscaling/v1

kind: HorizontalPodAutoscaler

metadata:

name: frontend-scaler

spec:

scaleTargetRef:

kind: ReplicaSet

name: tutum-rs

minReplicas: 3

maxReplicas: 10

targetCPUUtilizationPercentage: 50

To see all the autoscale options:

kubectl autoscale --help

It is also possible to automatically generate a config file, which we’ve seen before. The command to output a YAML config looks like this:

kubectl autoscale rs tutum-rs --max=10 --min=3 --cpu-percent=50 --dry-run=client -o=yaml

Note --dry-run=client , this means that Kubernetes will not apply the desired state changes to our cluster. However, we provided it with -o=yaml , which means output the configuration as YAML. This lets us easily generate a Manifest.

Tip --dry-run with -o=yaml is an excellent way to generate configurations!

We’ve provided this content in ./resources/hpa-tutum.yaml .

Now actually apply the configuration: kubectl create -f ./resources/hpa-tutum.yaml

At this point, we have a ReplicaSet managing the Tutum Pods, with Horizontal Pod Autoscaling configured. Let’s clean up our environment:

kubectl delete -f ./resources/hpa-tutum.yaml

kubectl delete -f ./resources/tutum-rs.yaml

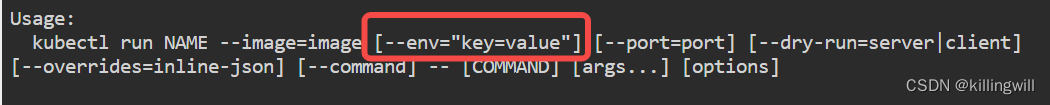

Deployments on the CLI

Kubernetes Deployments can be created on the command line with kubectl run . It enables you to configure both the Pods and ReplicaSets.

kubectl run NAME --image=image

--port=port]

[--env="key=value"]

[--replicas=replicas]

[--dry-run=bool]

[--overrides=inline-json]

[--command]

-- [COMMAND] [args...]

To create a simple Kubernetes deployment from the command-line:

kubectl create deployment tutum --image=tutum/hello-world --port 80

Congrats, you have just created your first Deployment. The run command created a Deplyment which automatically performed a few things for you:

- it searched for a suitable node to run the pod

- it scheduled the pod to run on that Node

- it configured the cluster to restart / reschedule the pod when needed

Basically, it created all of the objects we defined, which include Pods and ReplicaSets. It scheduled the Pods on a node capable of accepting workloads.

Let’s think back, what is the difference between this command, and how we create Pods on the CLI?

--restart=Never

To verify that the command created a Deployment:

kubectl get deployments

To see the Pods created by the Deployment:

kubectl get pods

To see the ReplicaSet created by the Deployment:

kubectl get replicasets

We can also get more information about our Deployment:

kubectl describe deployment tutum

The magic of Deployments

If a pod that was created by a Deployment should ever crash, the Deployment will automatically restart it. To see this in action, kill the Pod directly:

kubectl delete pod $(kubectl get pods --no-headers=true | awk '{print $1;}')

The pod should be deleted successfully. Now wait a moment or two and check the pod again:

kubectl get pods

Notice the the pod is running again. This is because the Deployment will restart a pod when it fails. What actually restarts those Pods?

Let’s quickly clean up and delete our Deployment: kubectl delete deployment tutum

Deployment Manifests

The most effective and repeatable way to manage our Deployments is with Manifest files. Here is one that defines our simple Tutum application (./resources/tutum-simple.yaml ):

apiVersion: apps/v1

kind: Deployment

metadata:

name: tutum-deployment

spec:

template:

spec:

containers:

- name: tutum

image: tutum/hello-world

ports:

- containerPort: 80

If we look at this Deployment, it looks very similar to our PodSpec and RS Manifests. We can add any configuration that we’ve already covered in the Pod section to this manifest. We should also configure the ReplicaSet to match our replication requirements.

Let’s create our Deployment: kubectl create -f ./resources/tutum-simple.yaml

All Kubernetes deployment YAML files must contain the following specifications:

apiVersion - apps/v1

The apiVersion specifies the version of the API to use. The API objects are defined in groups. The deployment object belongs to the apps API group. Group objects can be declared alpha , beta , or stable :

alpha- may contain bugs and no guarantee that it will work in the future. Example:(object)/v1alpha1beta- still somewhat unstable, but will most likely go into the Kubernetes main APIs. Example:(object)/v1beta1stable- Only stable versions are recommended to be used in production systems. Example:apps/v1

NOTE: You can check the latest Kubernetes API version here: https://kubernetes.io/docs/reference/

kind - Deployment

A kind value declares the type of Kubernetes object to be described in the Yaml file. Kubernetes supports the followng ‘kind’ objects:

componentstatusesconfigmapsdaemonsetsDeploymenteventsendpointshorizontalpodautoscalersingressjobslimitrangesNamespacenodespodspersistentvolumespersistentvolumeclaimsresourcequotasreplicasetsreplicationcontrollersserviceaccountsservices

metadata

The metadata declares additional data to uniquely identify a Kubernetes object. The key metadata that can be added to an object:

labels- size constrained key-value pairs used internally by k8s for selecting objects based on identifying informationname- the name of the object (in this case the name of the deployment)namespace- the name of the namespace to create the object (deployment)annotations- large unstructured key-value pairs used to provide non-identifying information for objects. k8s cannot query on annotations.spec- the desired state and characteristics of the object. spec has three important subfields:replicas: the numbers of pods to run in the deploymentselector: the pod labels that must match to manage the deploymenttemplate: defines each pod (containers, ports, etc. )

Interacting with Deployments

Now that we have created our Tutum Deployment, let’s see what we’ve got:

kubectl get deployments

kubectl get rs

kubectl get pods

Managing all of these objects by creating a Deployment is powerful because it lets us abstract away the Pods and ReplicaSets. We still need to configure them, but that gets easier with time.

Let’s see what happened when we created our Deployment:

kubectl describe deployment tutum-deployment

We can see that, in the events for the Deployment, a ReplicaSet was created. Let’s see what the ReplicaSet with kubectl describe rs . Your ReplicaSet has a unique name, so you’ll need to tab-complete.

When we look at the ReplicaSet events, we can see that it is creating Pods.

When we look at our Pods with kubectl describe pod , we’ll see that the host pulled the image, and started the container.

Deployments can be updated on the command line with set . Here’s an example:

kubectl set image deployment/tutum-deployment tutum=nginx:alpine --record

Remember, Kubernetes lets you do whatever you want, even if it doesn’t make sense. In this case, we updated the image tutum to be nginx , which is allowed, although strange.

Let’s see what happened now that we upated the image to something strange:

kubectl describe deployment tutum-deployment

We can see the history, which includes scaling up and down ReplicaSets for Pods from our command. We can also view revision history:

kubectl rollout history deployment tutum-deployment

We didn’t specify any reason for our updates, so CHANGE-CLAUSE is empty. We can also update other configuration options, such as environment variables:

kubectl set env deployment/tutum-deployment username=admin

How do we view those updated environment variables?

Let’s get the name of the Pod

We need to exec env inside the Pod

You can also update resources , selector , serviceaccount and subject .

For now, let’s simply delete our Deployment:

kubectl delete -f ./resources/tutum-simple.yaml

Configuring Tutum

Now that we’ve covered a simple Deployment, let’s walk through and create a fully featured one. We will deploy Tutum with additional configuration options.

First, let’s all start off with the same file: touch ./resources/tutum-full.yaml

Now, let’s populate this file with the base content for a Deployment:

apiVersion:

kind:

metadata:

spec:

What values should we put in for each of these?

apiVersion: apps/v1kind: Deploymentmetadata? We need to apply anameandlabels, let’s sayapp=tutumspecis a complex component, where we need to configure our RS and Pod

We should have something similar to shis:

apiVersion: apps/v1

kind: Deployment

metadata:

name: tutum-deployment

labels:

app: tutum

spec:

Next, let’s add the scaffolding required to configure the RS and Pods:

apiVersion: apps/v1

kind: Deployment

metadata:

name: tutum-deployment

labels:

app: tutum

spec:

# RS config goes here

template:

metadata:

spec:

containers:

Now that we’ve got the scaffolding, let’s configure the RS

We need to set the number of replicas

We need to configure a selector to matchLabels

Let’s stick with 3 replicas. Remember, we need the RS To match labels on the Pod.

apiVersion: apps/v1

kind: Deployment

metadata:

name: tutum-deployment

labels:

app: tutum

spec:

replicas: 3

selector:

matchLabels:

app: tutum

template:

metadata:

spec:

containers:

Now we need to give the Pods the appropriate labels so the RS will match with it. In addition, let’s configure the containers.

- We need to apply the label app=tutum to the Pod

- We need a single container, let’s call it tutum

- We need to specify the image, which is tutum/hello-world

- The Pod needs to expose port 80

This configuration leads us to create this file (or similar):

apiVersion: apps/v1

kind: Deployment

metadata:

name: tutum-deployment

labels:

app: tutum

spec:

replicas: 3

selector:

matchLabels:

app: tutum

template:

metadata:

labels:

app: tutum

spec:

containers:

- name: tutum

image: tutum/hello-world

ports:

- containerPort: 80

Now that we’ve got the application configured, we can always add additional PodSpec like what we did in the previous Pod lab. For now, let’s deploy the application:

kubectl create -f ./resources/tutum-full.yaml

Who can help me validate that the Deployment was successful?

- Check Pod status

- Make a request against the webserver

At this point, you can make Deployments as simple or advanced as you want.

Configuring NGiNX

Now that we’ve covered Manifest files, its time to tackle some real applications.

Form groups of 2-3 people, and you will put together a Manifest file to help us configure an application. Here’s what you need to do:

- Create a Manifest file:

touch ./resources/nginx-self.yaml - Fill in the required configuration information to run your Deployment

- Run the Deployment and make sure it works properly

Here is what you’ll need to put in your Pod (in addition to other requirements, like apiVersion):

- Name the Deployment

- Configure labels for the Deployment

- Have the RS maintain 5 Pods

- Use the nginx-alpine image

- Listen on port 80

- Configure environment variables user=admin, password=root, host=katacoda

- Configure resource limits: 1 CPU core, 256 MB of RAM

Once you’ve created the Manifest file, save it, and create the Deployment: kubectl create -f ./resources/nginx-self.yaml

Next, to prove it is working correctly, open up a shell and view the environment variables, and CURL the welcome page from your host. After that, get the logs to make sure everything is working properly. Finally, open up a shell on the Pod, and find out what processes are running inside.

HINT: you might need to install additional software.

Bonus Task: update the image used by the Pod to alpine:latest , apply the configuration change, what happens?

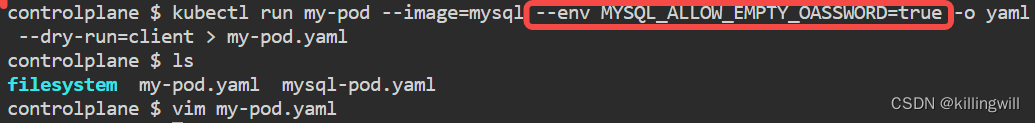

Configuring Minecraft

Next, we will deploy software many of probably has heard of, the Minecraft server. The Minecraft server is free to run, and many people have made businesses out of hosting other people’s servers. We’ll do this with Kubernetes.

The configuration is relatively simple. Create your base file: touch ./resources/minecraft.yaml

Now, you need to configure the following values, in addition to everything else necessary to create a Deployment:

- replicase = 1

- image = itzg/minecraft-server

- environment variables: EULA=“true”

- container port = 25565

- volume: Pod local = /data , use an emptyDir for the actual storage

There are many more scaffolding requirements for this Deployment, such as apiVersion . Refer back to your notes, and you may need to check out what you’ve previously done in the Pod lab. You can find old files that you’ve previously worked on in the /old/ directory on this host.

Once you’ve deployed it, it should be pretty easy to verify that everything is working correctly.

Deploying applications is really easy with Kubernetes. If any of you have softare running on a server in your home, I can almost guarantee someone is currently maintaining a Deployment Manifest for it on GitHub.

Rolling Updates and Rollbacks

Now that we’ve gotten a good taste of creating our own Deployments, its time to use the rolling update and rollback features.

First, let’s all start off with a fully configured NGiNX Deployment, located at ./resources/nginx.yaml

Here are the file contents:

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 5

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.7.9

ports:

- containerPort: 80

For our ReplicaSet, we can configure a strategy that defines how to safely perform a rolling update.

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

This strategy utilizes Rolling Updates. With rolling updates, Kubernetes will spin up a new Pod, and when it is ready, tear down an old Pod. The maxSurge refers to the total number of Pods that can be active at any given time. If maxSurge = 6 and replicas = 5, that means 1 new Pod (6 - 5) can be created at a time for the rolling update. maxUnavailable is the total number (or percentage) of Pods that can be unavailable at a time.

Here is what our Manifest looks like after integrating this:

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 5

selector:

matchLabels:

app: nginx

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.7.9

ports:

- containerPort: 80

Now, let’s apply this configuration change: kubectl apply -f ./resources/nginx.yaml

Now that the application is deployed, lets update the Manifest to use a different image: nginx:alpine . Now apply the changes.

kubectl get pods --watch

We can see that the Pods are being updated one at a time. If we look at the Deployment events, we can see this as well:

kubectl describe deployment nginx-deployment

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 1m deployment-controller Scaled up replica set nginx-deployment-555958bc44 to 1

Normal ScalingReplicaSet 1m deployment-controller Scaled down replica set nginx-deployment-67594d6bf6 to 3

Normal ScalingReplicaSet 1m deployment-controller Scaled up replica set nginx-deployment-555958bc44 to 2

Normal ScalingReplicaSet 1m deployment-controller Scaled down replica set nginx-deployment-67594d6bf6 to 2

Normal ScalingReplicaSet 1m deployment-controller Scaled up replica set nginx-deployment-555958bc44 to 3

Normal ScalingReplicaSet 1m deployment-controller Scaled down replica set nginx-deployment-67594d6bf6 to 1

Normal ScalingReplicaSet 1m deployment-controller Scaled up replica set nginx-deployment-555958bc44 to 4

Normal ScalingReplicaSet 1m deployment-controller Scaled down replica set nginx-deployment-67594d6bf6 to 0

We can see that the Deployment scaled up RS for the new Pods, and then scaled down the old RS. These actions were done one at a time, as specified by our RollingUpdate configuration.

We can now get our Deployment rollout history:

kubectl rollout history deployment/nginx-deployment

We can jump back a version:

kubectl rollout undo deployment.v1.apps/nginx-deployment

Or we can jump back to a specific version:

kubectl rollout undo deployment.v1.apps/nginx-deployment --to-revision=X , where X is the version you want to rollback to

05. Jobs, Init Containers and Cron Jobs

This scenario provides an overview of Jobs, Init Containers and CronJobs in Kubernetes.

Jobs resources create one or more pods and ensures that all of them successfully terminate.

There are two types of jobs:

- Non-parallel Job: A Job which creates only one Pod (which is re-created if the Pod terminates unsuccessfully), and which is completed when the Pod terminates successfully.

- Parallel jobs with a completion count: A Job that is completed when a certain number of Pods terminate successfully. You specify the desired number of completions using the completions field.

Cron Jobs create a job object, they are useful for creating periodic and recurring tasks, e.g running backups or sending emails.

Init Containers are regular containers within a pod that run before the app container and they also satisfy the following statements:

- They can run setup scripts not present in an app container - e.g prepopulate some data, waiting until a specific service is up and running and etc.

- A pod can have one or more init containers apart from app containers

- Init containers always run to completation

- Each one must complete successfully before the next one is started

- The application container won’t run if any of the configured init containers will not finish the execution successfully

Jobs

Job resources are used to facilitate the execution of a batch job. Through Job resources, Kubernetes also supports parallel jobs which will finish executing when a specific number of successful completions is reached.

Therefore with Job resources, we can run work items such as frames to be rendered, files to be transcoded, ranges of keys in a NoSQL database to scan, and so on.

Have a look at Jobs Api reference to see how to build a job resource in Kubernetes.

Pods created by jobs are not automatically deleted. Keeping the pods around allows you to view the logs of completed jobs in order to check for potential errors. If you want to remove them, you need to do that manually.

Create Countdown Job

Take a look at the file job.yaml .

apiVersion: batch/v1

kind: Job

metadata:

name: countdown

spec:

template:

spec:

containers:

- name: countdown

image: bash

command: ["/bin/sh", "-c"]

args:

- for i in 9 8 7 6 5 4 3 2 1 ; do echo $i ; done &&

echo Perfect!

restartPolicy: OnFailure

This example creates a job which runs a bash command to count down from 10 to 1.

Notice that the field spec.restartPolicy allow only two values: “OnFailure” or “Never”. For further information read here

Note: There are situations where you want to fail a job after a number of retries. To do so, use

spec.backoffLimitwhich, by defauly, is set 6. You can usespec.activeDeadlineSecondsto limit the execution time in case you want to manage the duration of a specific job. If the execution reaches this deadline, the Job and all of its Pods are terminated.

Create the countdown job:

kubectl apply -f /manifests/job.yaml

Job status

Check the status of the job:

kubectl get jobs

Job Logs

In order to see the job’s logs we need to get the name of the Job in question:

kubectl get pods -o 'jsonpath={.items[0].metadata.name}'; echo

And then execute the following command to get the logs:

kubectl logs `kubectl get pods -o 'jsonpath={.items[0].metadata.name}'`

You will get a result like:

9 8 7 6 5 4 3 2 1 Perfect!

Delete Job

kubectl delete -f /manifests/job.yaml

or

kubectl delete job countdown

Parallel Jobs

To create a parallel job we can use spec.parallelism to set how many pods we want to run in parallel and spec.completions to set how many job completitions we would like to achieve.

Create Countdown Parallel Job

Inspect the file jobs-parallels.yaml .

apiVersion: batch/v1

kind: Job

metadata:

name: countdown

spec:

completions: 8

parallelism: 2

template:

spec:

containers:

- name: countdown

image: bash

command: ["/bin/sh", "-c"]

args:

- for i in 9 8 7 6 5 4 3 2 1 ; do echo $i ; done &&

echo Perfect!

restartPolicy: OnFailure

This is the same countdown job we used in the previous scenario but we have added spec.parallelism and spec.completions parameters.

The job will run 2 pods in parallel until it reaches 8 completions successfully.

Create countdown parallel job:

kubectl apply -f /manifests/jobs-parallels.yaml

Job status

Wait for a few seconds to get the 8 completions and then check the status of the job:

kubectl get jobs

You should see a similar result to the following, but if not, wait for a few more seconds and check again:

NAME DESIRED SUCCESSFUL AGE

countdown 8 8 16s

This job was executed successfully 8 time by running 2 jobs in parallel.

Job Logs

In order to see the job’s logs, we need to get the job name:

kubectl get pods -o 'jsonpath={.items[0].metadata.name}'; echo

And then execute the following command to get the logs:

kubectl logs `kubectl get pods -o 'jsonpath={.items[0].metadata.name}'`

You will get a result like:

9 8 7 6 5 4 3 2 1 Perfect!

Delete Job

kubectl delete -f /manifests/jobs-parallels.yaml

or

kubectl delete job countdown

Cron Jobs

Written in a Cron format, a Cron Job resource runs a job periodically on a given schedule. These are useful for creating periodic and recurring tasks, e.g running backups or sending emails.

Create Hello Cron Job

Take a look at the file cronjob.yaml . This example create a job every minute which prints the current time and a hello message.

apiVersion: batch/v1

kind: CronJob

metadata:

name: hello

spec:

schedule: "*/1 * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: hello

image: busybox

args:

- /bin/sh

- -c

- date; echo Hello from the Kubernetes cluster

restartPolicy: OnFailure

kubectl apply -f /manifests/cronjob.yaml

Cron Job status

Check the status of the cronjob:

kubectl get cronjob hello

Immediatly after creating a cron job, the LAST-SCHEDULE column will have no value ( ). This indicates that the CronJob hasn’t run yet.

master $ kubectl get cronjob hello

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello */1 * * * * False 0 <none> 8s

Once the LAST-SCHEDULE column gets a value, it indicates that the CronJobs is now scheduled to run:

kubectl get cronjob --watch

Check the cron job again, you should see that the cronjob has been scheduled at the time specified in LAST-SCHEDULE :

kubectl get cronjob hello

Cron Job Logs

In order to see the job’s logs, we need to know the pod’s name:

kubectl get pod -o 'jsonpath={.items[0].metadata.name}'; echo

And then:

# kubectl logs <pod-name>

kubectl logs `kubectl get pod -o 'jsonpath={.items[0].metadata.name}'`

# kubectl logs cronjob/<cronjob-name>:

kubectl log cronjob/`kubectl get cronjonb -o 'jsonpath={.items[0].metadata.name}'`

Delete Cron Job

kubectl delete cronjob hello

Init Container

An Init Container is a container which is executed before the application container is started. Init-containers are usually used for deploying utilities or execute scripts which are not loaded and executed in the application container image.

Create a Pod with an init container

Take a look at the file init-container.yaml .

apiVersion: v1

kind: Pod

metadata:

name: happypanda

spec:

containers:

- name: busybox

image: busybox:latest

command: ["/bin/sh", "-c"]

args: ["cat /opt/workdir/helloworld && sleep 3600"]

volumeMounts:

- name: workdir

mountPath: /opt/workdir

initContainers:

- name: init-container

image: busybox

command:

- sh

- -c

- 'echo "The app is running" > /opt/workdir/helloworld'

volumeMounts:

- mountPath: /opt/workdir

name: workdir

volumes:

- name: workdir

emptyDir: {}

This example runs an init-container which creates a helloworld file in a volume. The application pod will be scheduled if the helloworld file exist at a specific path and the pod can access it.

Create the init container:

kubectl apply -f /manifests/init-container.yaml

It could take some time until the Init container finishes the execution successfully and the application container is scheduled to run.

Pod status

The Init container will take some time until it creates the file so you might have to check the status of the pod a couple of times:

kubectl get pods

If the pod is running, it means that the file was created succesfully and the pod can read it. We are going to manually check that the file is at the specified path and it has the correct content:

kubectl exec -ti happypanda -- cat /opt/workdir/helloworld

You should see a result like:

The app is running

Delete Pod

kubectl delete -f /manifests/init-container.yaml

or

kubectl delete pod happypanda

06. Manage DaemonSets

Overview

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. As nodes are added to the cluster, Pods are added to them. As nodes are removed from the cluster, those Pods are evicted. Deleting a DaemonSet will clean up the Pods it created.

Some typical uses of a DaemonSet are:

- running a cluster storage daemon, such as glusterd and ceph , on each node.

- running a logs collection daemon on every node, such as fluentd or logstash .

- running a node monitoring daemon on every node, such as Prometheus Node Exporter (node_exporter ), collectd , Datadog agent, New Relic agent, or Ganglia gmond .

In a simple case, one DaemonSet, covering all nodes, would be used for each type of daemon. A more complex setup might use multiple DaemonSets for a single type of daemon, but with different flags and/or different memory and cpu constraints for different hardware types.

Create a DaemonSet

In this scenario, we’re going to create an nginx DaemonSet. Initially, we’ll run this on our worker nodes (node01), but then we will manipulate the DaemonSet to get it to run on the master node too.

nginx DaemonSet

In your terminal, you’ll see a file named nginx-daemonset.yaml . This is the DaemonSet which we will be using to run nginx across both of our nodes.

First, let’s create all the prerequisites needed for this DaemonSet to run:

kubectl create -f nginx-ds-prereqs.yaml

---

kind: Namespace

apiVersion: v1

metadata:

name: development

labels:

name: development

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nginx-svc-acct

namespace: development

labels:

name: nginx-svc-acct

Now we’ve created the namespace (and other prerequisites), let’s inspect the manifest for the nginx DaemonSet:

cat nginx-daemonset.yaml; echo

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: nginx

namespace: development

labels:

app: nginx

name: nginx

spec:

selector:

matchLabels:

app: nginx

name: nginx

template:

metadata:

labels:

app: nginx

name: nginx

spec:

serviceAccountName: nginx-svc-acct

containers:

- image: katacoda/docker-http-server:latest

name: nginx

ports:

- name: http

containerPort: 80

As you can see, we’re running a basic DaemonSet - in the development namespace - which exposes port 80 inside the container.

Create it:

kubectl create -f nginx-daemonset.yaml

Now check the status of the DaemonSet:

kubectl get daemonsets -n development

Accessing nginx

Now that we’ve created our nginx DaemonSet, let’s see what host it’s running on:

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].spec.nodeName}'; echo

Notice that it’s running on node01 and not master . By default, Kubernetes won’t schedule any workload to master nodes unless they’re tainted. Essentially, what this means is that workload has to be specifically set to be ran on master nodes.

It’s not best practice to run ‘normal’ workload on master nodes, as it’s where etcd and other crucial Kubernetes components reside. However, it’s acceptable to run workload such as log collection and node monitoring daemons on master nodes as you want to understand what’s happening on those nodes.

Testing the Webserver

We want to get the IP address for the pod so we can test that it’s working, now that we know which node it’s running on:

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].status.podIP}'; echo

Curl it:

curl `kubectl get po -n development -l app=nginx -o 'jsonpath={.items[0].status.podIP}'`

You should see a result like:

This request was processed by host: nginx-8n2qj

Updating a DaemonSet (Rolling Update)

As mentioned in the previous chapter, workload isn’t scheduled to master nodes unless specificaly tainted. In this scenario, we want to run the nginx DaemonSet across both master and node01 .

We need to update the DaemonSet, so we’re going to use the nginx-daemonset-tolerations.yaml file to replace the manifest.

First, let’s see what we added to the -tolerations.yaml file:

cat nginx-daemonset-tolerations.yaml; echo

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: nginx

namespace: development

labels:

app: nginx

name: nginx

spec:

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

name: nginx

spec:

serviceAccountName: nginx-svc-acct

containers:

- image: katacoda/docker-http-server:latest

name: nginx

ports:

- name: http

containerPort: 80

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

As you can see, we’ve added the following to the spec section:

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

This is what manifests need to be tainted with in order to be ran on master nodes. Proceed to update the DaemonSet:

kubectl replace -f nginx-daemonset-tolerations.yaml

Now check to see if an additional pod has been created. Remember - a DaemonSet schedules a pod to every node, so there should be two pods created:

kubectl get po -n development -l app=nginx -o wide

If there’s two pods - great. That means that the tolerations have worked and we are now running across two nodes.

Accessing the pod on the master node

Find the pod IP address for the newly created pod on the master node:

kubectl get po -n development -l app=nginx -o 'jsonpath={.items[1].status.podIP}'; echo

Notice that it’s different to the IP address that we curl’ed before.

Now curl the new pod’s IP address:

curl `kubectl get po -n development -l app=nginx -o 'jsonpath={.items[1].status.podIP}'`

You should see a similar result to the one in the previous chapter:

This request was processed by host: nginx-njq9h

Deleting a DaemonSet

Clean up our workspace:

kubectl delete daemonset nginx -n development

Alternatively, we could use the short hand, which achieves the same result:

kubectl delete ds nginx -n development

Success - you’ve deleted the DaemonSet. Check for pods:

kubectl get pods -n development

Great! You’re all done.

07. Manage Services

A Service is an abstraction in kubernetes that allows you to connect to pods, it provides two main functionalities service-discovery and load-balancing.

Some typical uses of a Service are:

- provide an endpoint to connect to an application, such as an nginx webserver

- create a load-balancer that will distribute traffic to pods

- create an external endpoint to a service outside of the cluster for example an RDS database

There are multiple types of services:

NodePortthat exposes a port on all the nodesLoadBalancerthat create a loadbalancer depending on your environmentClusterIPwhich creates a dedicated IP which can usually be only access inside of the cluster

Launch Deployment

First things first, lets create a deployment that we will use to learn the various service types.

To do so run:

kubectl create -f nginx-deployment.yml

# nginx-deployment.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

labels:

app: nginx

name: nginx

spec:

selector:

matchLabels:

app: nginx

name: nginx

template:

metadata:

labels:

app: nginx

name: nginx

spec:

containers:

- image: katacoda/docker-http-server:latest

name: nginx

ports:

- name: http

containerPort: 80

and make sure all is healthy:

kubectl get deploy

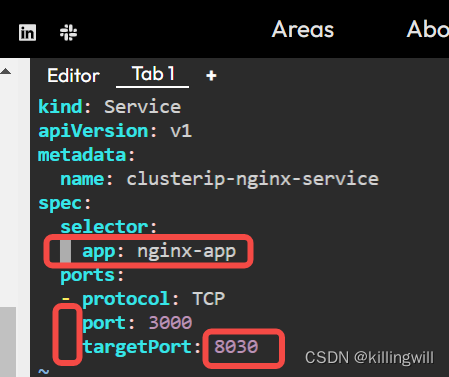

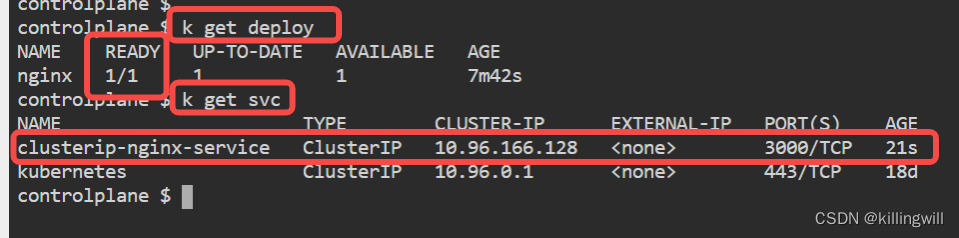

ClusterIP Service

Now that we have a working deployment, lets expose it to the cluster so that other deployments can access it too.

kubectl create -f clusterip-service.yml

# clusterip-service.yml

kind: Service

apiVersion: v1

metadata:

name: clusterip-nginx-service

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 80

targetPort: 80

We can check if it was created with:

kubectl get svc -o wide

or if we want more information:

kubectl describe svc clusterip-nginx-service

NodePort Service

What if we wanted to expose our service outside of the cluster? This is where NodePort comes in. NodePort is one the most often utilized service types in kubernetes.

Lets create one:

kubectl create -f nodeport-service.yml

# nodeport-service.yml

kind: Service

apiVersion: v1

metadata:

name: nodeport-nginx-service

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 80

targetPort: 80

type: NodePort

We can check if it was created with:

kubectl get svc -o wide

or if we want more information:

kubectl describe svc nodeport-nginx-service

We can now access our service with:

curl http://<NODEPORT-IP>

LoadBalancer Service

What if we wanted a single point of entry for our service from the oustide? For that we need a LoadBalancer type of service. If you are running on any of the major cloud providers it will be freely available for you, but if you are on-prem or in this case katacoda, then you need to make this functionality available.

Lets make katacoda LoadBalancer friendly:

kubectl create -f cloudprovider.yml

# cloudprovider.yml

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-keepalived-vip

namespace: kube-system

spec:

selector:

matchLabels:

name: kube-keepalived-vip

template:

metadata:

labels:

name: kube-keepalived-vip

spec:

hostNetwork: true

containers:

- image: gcr.io/google_containers/kube-keepalived-vip:0.9

name: kube-keepalived-vip

imagePullPolicy: Always

securityContext:

privileged: true

volumeMounts:

- mountPath: /lib/modules

name: modules

readOnly: true

- mountPath: /dev

name: dev

# use downward API

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

# to use unicast

args:

- --services-configmap=kube-system/vip-configmap

# unicast uses the ip of the nodes instead of multicast

# this is useful if running in cloud providers (like AWS)

#- --use-unicast=true

volumes:

- name: modules

hostPath:

path: /lib/modules

- name: dev

hostPath:

path: /dev

nodeSelector:

# type: worker # adjust this to match your worker nodes

---

## We also create an empty ConfigMap to hold our config

apiVersion: v1

kind: ConfigMap

metadata:

name: vip-configmap

namespace: kube-system

data:

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: keepalived-cloud-provider

name: keepalived-cloud-provider

namespace: kube-system

spec:

replicas: 1

revisionHistoryLimit: 2

selector:

matchLabels:

app: keepalived-cloud-provider

strategy:

type: RollingUpdate

template:

metadata:

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "op

labels:

app: keepalived-cloud-provider

spec:

containers:

- name: keepalived-cloud-provider

image: quay.io/munnerz/keepalived-cloud-provider:0.0.1

imagePullPolicy: IfNotPresent

env:

- name: KEEPALIVED_NAMESPACE

value: kube-system

- name: KEEPALIVED_CONFIG_MAP

value: vip-configmap

- name: KEEPALIVED_SERVICE_CIDR

value: 10.10.0.0/26 # pick a CIDR that is explicitly reserved for keepalive

volumeMounts:

- name: certs

mountPath: /etc/ssl/certs

resources:

requests:

cpu: 200m

livenessProbe:

httpGet:

path: /healthz

port: 10252

host: 127.0.0.1

initialDelaySeconds: 15

timeoutSeconds: 15

failureThreshold: 8

volumes:

- name: certs

hostPath:

path: /etc/ssl/certs

Once we have that we can create our service:

kubectl create -f loadbalancer-service.yml

# loadbalancer-service.yml

kind: Service

apiVersion: v1

metadata:

name: lb-nginx-service

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 80

targetPort: 80

type: LoadBalancer

We can check if it was created with:

kubectl get svc -o wide

or if we want more information:

kubectl describe svc lb-nginx-service

We can now access our service with:

curl http://<EXTERNAL-IP>

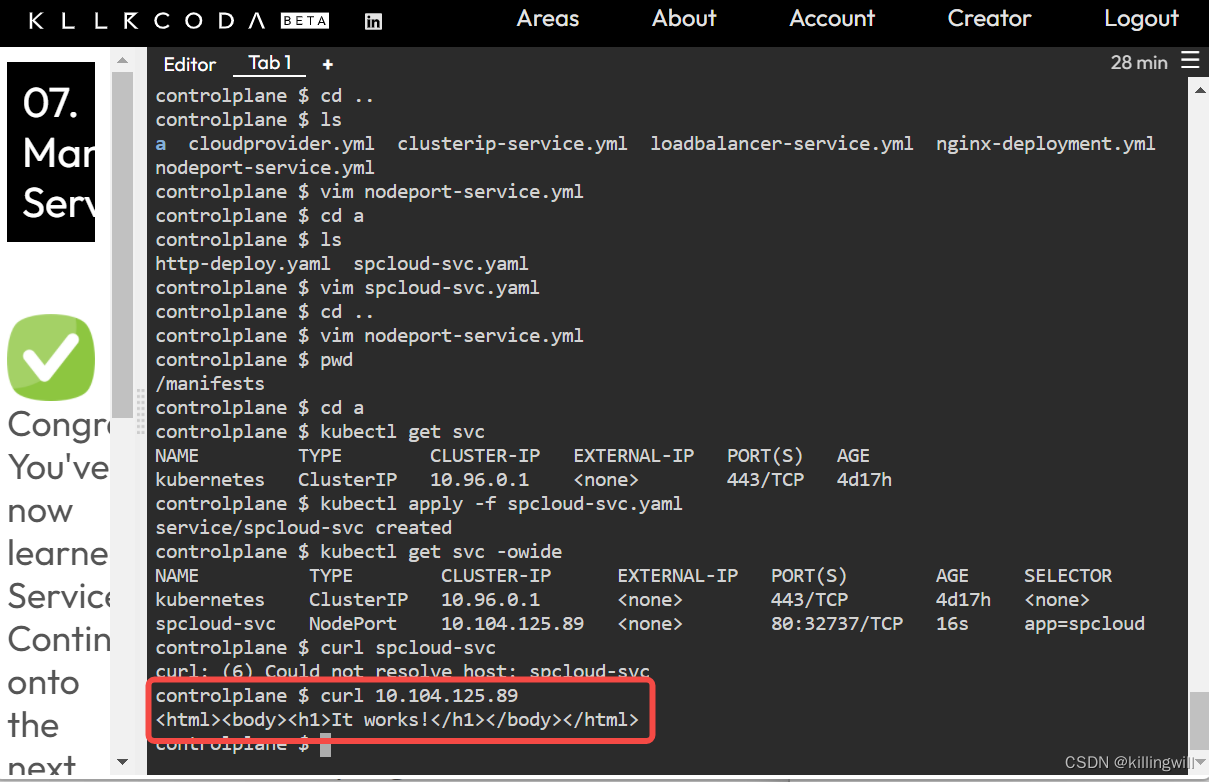

Exercise

Create a deployment in the default namespace with the httpd image, name the deployment as spcloud and expose it as a NodePort service named spcloud-svc .

When you runcurl node01:<NODE_PORT>, you should receive a responseIt works!

mkdir a

cd a

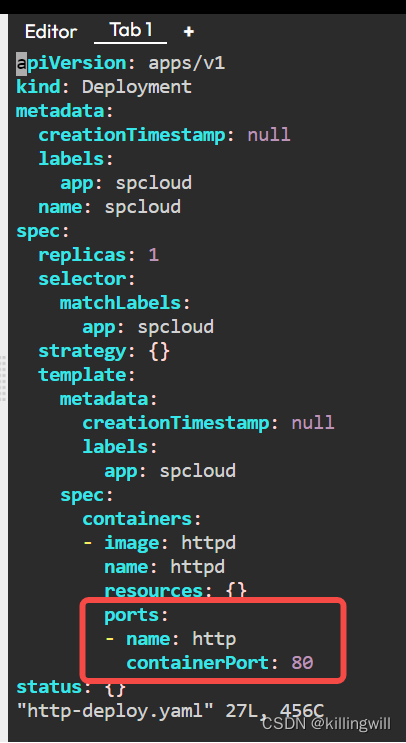

使用-oyaml以及--dry-run=client快速创建deploy的yaml文件:

kubectl create deploy spcloud --image=httpd -o yaml --dry-run=client > httpd-deploy.yaml

vim httpd-deploy.yaml

修改http-deploy.yaml文件,增加如下容器开放的80端口:

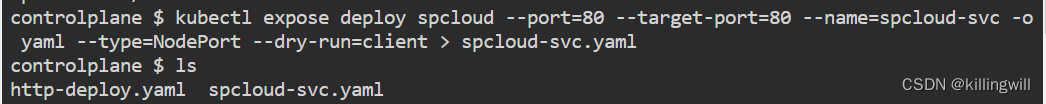

#根据已有deploy快速创建service,并将其生成为yaml文件形式:

kubectl apply -f httpd-deploy.yaml

kubectl get deploy

kubectl expose deploy <deployname> --port=80 --target-port=80 --name=<svc-name> --type=NodePort -o yaml --dry-run=client > spcloud-svc.yaml

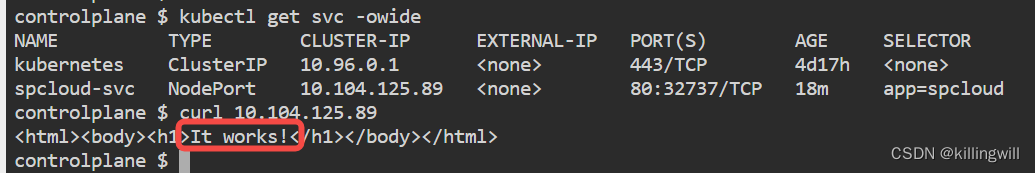

检查service配置文件spcloud-svc.yaml符合题意要求后:

kubectl apply -f spcloud-svc.yaml

获取svc的ip

kubectl get svc -owide

curl <ip>

08. Manage Ingress

Traditionally, you would create a LoadBalancer service for each public system you want to expose. This can get rather expensive, very quickly. Ingress gives you a way to route requests to services based on the request host or path, centralizing a number of services into a single entrypoint (or in this case, load balancer).

Ingress Resources

Ingress is split up into two main pieces. The first is an Ingress resource, which defines how you want requests routed to the backing services.

For example, a definition that defines an Ingress to handle requests for www.mysite.com and forums.mysite.com and routes them to the Kubernetes services named website and forums respectively would look like:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: my-ingress

spec:

rules:

- host: www.mysite.com

http:

paths:

- backend:

serviceName: website

servicePort: 80

- host: forums.mysite.com

http:

paths:

- path:

backend:

serviceName: forums

servicePort: 80

Ingress Controllers

Here is where things seem to get confusing, though. Ingress on its own doesn’t really do anything. You need something to listen to the Kubernetes API for Ingress resources and then handle requests that match them. This is where the second piece to the puzzle comes in — the Ingress Controller.

Ingress Controllers can technically be any system capable of reverse proxying, but the most common is Nginx. A full example Nginx Ingress Controller (and LoadBalancer service) is as follows. Please note that if you are not on a provider that supports LoadBalancer services (ie. bare-metal), you can create a NodePort Service instead and point to your nodes with an alternative solution that fills that role — a reverse proxy capable of routing requests to the exposed NodePort for the Ingress Controller on each of your nodes.

Creating the Controller

First, inspect the file:

cat ing-controller.yaml; echo

# ing-controller.yaml

kind: Service

apiVersion: v1

metadata:

name: ingress-nginx

spec:

type: LoadBalancer

selector:

app: ingress-nginx

ports:

- name: http

port: 80

targetPort: http

- name: https

port: 443

targetPort: https

---

kind: Deployment

apiVersion: apps/v1

metadata:

name: ingress-nginx

spec:

selector:

matchLabels:

app: ingress-nginx

spec:

terminationGracePeriodSeconds: 60

containers:

- image: gcr.io/google_containers/nginx-ingress-controller:0.8.3

name: ingress-nginx

imagePullPolicy: Always

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

livenessProbe:

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 30

timeoutSeconds: 5

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/nginx-default-backend

Now create it:

kubectl create -f ing-controller.yaml

This is essentially an Nginx image that monitors Ingress resources for requested routes to serve. One callout you may have noticed is that it specifies a --default-backend-service as a startup argument, passing in nginx-default-backend. This is wanting a service that can simply handle returning a 404 response for requests that the Ingress Controller is unable to match to an Ingress rule. Let’s create that as well with the specified name:

kubectl create -f ing-backend.yaml

# ing-backend.yaml

kind: Service

apiVersion: v1

metadata:

name: nginx-default-backend

spec:

ports:

- port: 80

targetPort: http

selector:

app: nginx-default-backend

---

kind: Deployment

apiVersion: apps/v1

metadata:

name: nginx-default-backend

spec:

selector:

matchLabels:

app: nginx-default-backend

replicas: 1

template:

metadata:

labels:

app: nginx-default-backend

spec:

selector:

matchLabels:

app: nginx-default-backend

replicas: 1

template:

metadata:

labels:

app: nginx-default-backend

spec:

terminationGracePeriodSeconds: 60

containers:

- name: default-http-backend

image: gcr.io/google_containers/defaultbackend:1.0

livenessProbe:

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 30

timeoutSeconds: 5

resources:

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi

ports:

- name: http

containerPort: 8080

protocol: TCP

I said Ingress is made up of two main components and we introduced three new things. This is because the default backend isn’t really a piece of Ingress itself, but rather is something that the Nginx Ingress Controller requires. Other Ingress Controllers won’t necessarily have this component.

Wiring it Up

Assuming you’ve created the Ingress Controller above with the dependent default backend, your Ingress resources should be handled by the LoadBalancer created with the Ingress Controller service. Of course, you would need services named website and forums to route to in the above example.

As a quick test, you can deploy the following instead.

kubectl create -f demo-ing.yaml

# demo-ing.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: demo-ingress

spec:

rules:

- http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx

port:

number: 80

---

apiVersion: v1

kind: Service

metadata:

name: nginx

spec:

ports:

- port: 80

targetPort: 80

selector:

app: nginx

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

spec:

selector:

matchLabels:

app: nginx

replicas: 1

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: echoserver

image: nginx

ports:

- containerPort: 80

To test things out, you need to get your Ingress Controller entrypoint.

For LoadBalancer services that will be:

kubectl get service ingress-nginx -o wide

For NodePort services, you can find the exposed port with:

kubectl describe service ingress-nginx

09. Persistent Volumes

We’ll learn the basics of how to manage persistent storage, and how to supply this storage to running Pods.

In general, our microservices are stateless, so they won’t need any persistent storage. However, microservices are almost never truly stateless, and this can be important. In addition, you might be running a Database in your Kubernetes cluster, and want to provide it Kubernetes-managed persistent storage.

For more information, see the Kubernetes documentation.

Local Volumes

To start off, let’s create a local emptyDir volume for a Pod. An emptyDir volume has the same lifecycle as the Pod. If the container(s) in the Pod restart or ccrash, the volume will live on and persist data. However, if the Pod is removed, then the volume will be removed as well.

First, let’s look at a simple Pod Manifest:

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

emptyDir: {}

In this Manifest file, we create a Pod and provide a container specification to run nginx. In this scenario, we are creating a local volume for nginx. It will be mounted inside of the nginx container at /data/nginx .

On the host system, Kubernetes will provide an empty directory for storage.

Next, let’s launch this Pod, and store some data:

kubectl create -f ./resources/nginx-local.yaml

# nginx-local.ymal

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

emptyDir: {}

We can see that the Pod was started:

kubectl get pods --watch

Once it has successfully started, let’s go into the nginx container and drop a file.

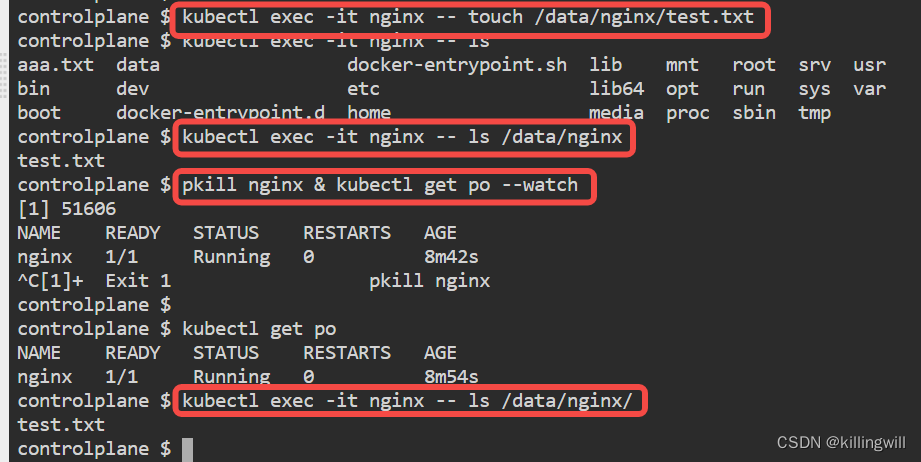

kubectl exec -it nginx -- touch /data/nginx/test.txt

Now, let’s force the nginx container in the Pod to restart. At this point, the Kubelet will destroy that container, and start a new one.

pkill nginx & kubectl get pods --watch

Now, we can open up another bash session into the container, and we’ll find that our file is still there:

kubectl exec nginx -- ls /data/nginx/

Creating a PersistentVolume

PersistentVolumes abstract the low-level details of a storage device, and provide a high-level API to provide such storage to Pods.

PersistentVolumes are storage inside of your cluster that has been provisioned by your administrator. Their lifecycle is external to your Pods or other objects.

There are many different types of PersistentVolumes that can be used with Kubernetes. As an example, you can use a local filesystem, NFS, and there are plugins for cloud vendor storage solutions like EBS.

We specify PersistentVolumes via a Manifest file:

apiVersion: v1

kind: PersistentVolume

metadata:

name: local-pv

spec:

capacity:

storage: 3Gi

volumeMode: Filesystem

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Delete

storageClassName: local-storage

hostPath:

path: "/mnt/data"

This describes a single PersistentVolume. It is mounted to /mnt/data on a node. It is of type Filesystem, with 3 GB of storage. (hostPath are only appropriate for testing in single node environments)

We can create this PersistentVolume:

kubectl create -f ./resources/pv-local.yaml

# pv-local.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: local-pv

spec:

capacity:

storage: 3Gi

volumeMode: Filesystem

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Delete

storageClassName: local-storage

hostPath:

path: "/mnt/data"

We can then view it with:

kubectl get pv

We can get even more information with:

kubectl describe pv local-pv

If we want to swap out the PersistentVolume type, we can configure it for NFS or EBS storage by making a few tweaks to the file. For more information, please see the PersistentVolume documentation.

Creating a PersistentVolumeClaim

Now that we have a PersistentVolume, let’s make a PersistentVolumeClaim to provide storage to a Pod. PersistentVolumeClaims enable you to request a certain amount of storage from a PersistentVolume, and reserve it for your Pod.

The following is a PersistentVolumeClaim:

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nginx-pvc

spec:

# Notice the storage-class name matches the storage class in the PV we made in the previous step.

storageClassName: local-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

This PersistentVolumeClaim is requesting 10 GB of storage from a local Filesystem PersistentVolume. When a Pod uses this Claim, Kubernetes will attempt to satisfy the claim by enumerating all PersistentVolumes, and matching the requirements in this Claim to what is present in the cluster.

If we were to match this Claim to PersistentVolume, it would succeed, because we have a PersistentVolume of type Filesystem with 100 GB of storage.

Let’s create the PersistentVolumeClaim:

kubectl create -f ./resources/pv-claim.yaml

# pv-claim.ymal

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nginx-pvc

spec:

# Notice the storage-class name matches the storage class in the PV we made in the

storageClassName: local-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

and wait until the resource is available:

kubectl get pvc --watch

We can also use label selectors to aid in matching Claims with PersistentVolumes.

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nginx-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

selector:

matchLabels:

env: dev

This Claim is identical to the previous one, but it will only be matched with PersistentVolumes that have the label env: dev . You can use this to have more control over which Claims bind to a particular PersistentVolume.

Adding Storage to Pods

Now that we have PersistentVolumes and a PersistentVolumeClaim, we can provide the Claim to a Pod, and Kubernetes will provision storage.

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

persistentVolumeClaim:

claimName: nginx-pvc

This is very similar to the first Pod that we initially created with local storage. Now, we have basically changed what provides the Storage with the addition of those bottom two lines. To deploy our pod, execute the following:

kubectl create -f ./resources/nginx-persistent.yaml

# nginx-persistent.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-persist

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

persistentVolumeClaim:

claimName: nginx-pvc

~

We can see that the Pod was created, and that the Claim was fulfilled:

kubectl get pods --watch

kubectl get pvc

Clean Up

Delete all Kubernetes resources

kubectl delete -f ./resources

practise

# pv-local.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: local-pv

spec:

capacity:

storage: 3Gi

volumeMode: FileSystem

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Delete

storageClassName: local-storage

hostPath:

path: "/mnt/data"

---

# pv-claim.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nginx-pvc

spec:

# notice the pvc storageClassName matches the pv storageClassName

storageClassName: local-storage

accessMode:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

# nginx-persistent.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-persitent

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

persistentVolumeClaim:

claimName: nginx-pvc

---

# nginx-local.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nginx-data

mountPath: /data/nginx

volumes:

- name: nginx-data

emptyDir: {}

kubectl exec -it <podname> -- <command you want execute>

pkill <podname> & kubectl get po --watch

# delete all k8s resources:

kubectl delete -f ./resources

10. ConfigMaps

ConfigMaps are a way to decouple configuration from application source code and Kubernetes Pods / Deployment files. If you are familiar with 12-Factor App principles, then this addresses factor 3: “Store config in the environment.”

ConfigMaps allow you to independently store data that your application needs in a Kubernetes object. ConfigMaps are decoupled from Pods and Deployments. In addition, ConfigMaps can be consumed by multiple Pods and Deployments, which can be extremely useful. As an example, if you have multiple microservies that need access to the same configuration parameter, all it takes is a single ConfigMap. This used to be accomplished with a Config-Server microservice, or supplying each microservice with the same environment variable, which made updates difficult.

We will go through the process of creating ConfigMaps from literal values, files, and directories. We’ll supply these values to Pods as environment variables, and finally create a simple Redis Deployment configured via ConfigMaps.

Create ConfigMaps from Literal Values

ConfigMaps are Kubernetes objects that store configuration external to source code. Creating a ConfigMap is easy:

kubectl create configmap <configmap-name> <data>

The <data> used to populate the ConfigMap can be from multiple different formats. For testing purposes, the easiest way to supply this is with literals.

Literals refers to data you enter in on the command line. Here’s the simplest way to create a ConfigMap:

kubectl create configmap my-config --from-literal=course="Kubernetes Fundamentals"

Now that we’ve created the ConfigMap, we can view it with:

kubectl get configmaps

The output should look like this:

NAME DATA AGE

my-config 1 20s

We can inspect the contents with:

kubectl describe configmap my-config

We can get an even better view with:

kubectl get configmap my-config -o yaml

You can also provide multiple values to a ConfigMap:

kubectl create configmap multi-config --from-literal=name.first=David --from-literal=name.last=Widen --from-literal=age=28 --from-literal=hometown="Glen Cove"

And get a better view with

kubectl get configmap multi-config -o yaml

Create ConfigMaps from Files

You can also create ConfigMaps from from a file. Your file should have a set of key=value upairs, one per line. Creating a ConfigMap from such files is easy:

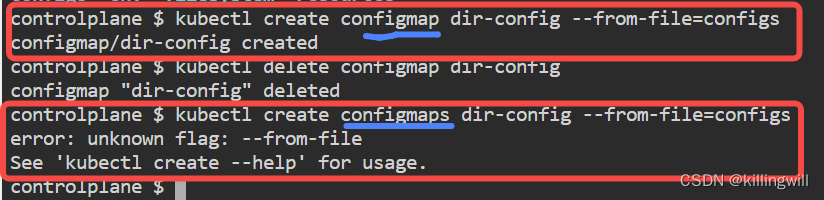

kubectl create configmap <name> --from-file=<path-to-file>

To view a sample file to use with ConfigMaps, execute:

cat configs/game.properties

Execute the following to create a ConfigMap from that configuration file for a hypothetical video game’s configuration:

kubectl create configmap game-config --from-file=configs/game.properties

Now that we’ve created the ConfigMap, we can view it with:

kubectl get configmaps

The output should look like this:

NAME DATA AGE

game-config 4 20s

We can inspect the contents with:

kubectl describe configmap game-config

We can get an even better view with:

kubectl get configmap game-config -o yaml

You can also provide multiple values to a ConfigMap:

Creating ConfigMaps from a single file is very useful, because it lets you quickly convert multiple key=value pairs into data that Kubernetes objects can ingest. In addition, you can use env-files to provide more verbose source for developers.

# I'm an env-file

# Comments start with #

# Blank lines are ignored

# All other lines should be key=val

# Any quotes you include will become part of the value

name.first=David

name.last=Widen

age="28"

We can create ConfigMaps from env-files very similarly to how we did above, just swap --from-file with --from-env-file .

kubectl create configmap person-config --from-env-file=env/person.env

And, view the ConfigMap:

kubectl get configmap person-config -o yaml

Create ConfigMaps from Directories

You can also create a ConfigMap from a directory. This is very similar to creating them from files. It can be very useful, as it allows you to separate out configuration into multiple directories, and then you can create an individual ConfigMp for each directory, and then quickly swap out configuration.

First, let’s clear out our ConfigMaps:

kubectl delete configmap my-config

kubectl delete configmap multi-config

kubectl delete configmap game-config

kubectl delete configmap person-config