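Introduction to kubeadm

1. Put simply, kubeadm, like minikube, is a tool for setting up a Kubernetes environment.

The difference: minikube sets up a single-machine Kubernetes environment,

while kubeadm sets up a multi-node Kubernetes cluster and is the one more commonly used.

2. For detailed usage, refer to the address below (available in both Chinese and English).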

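Setting up a Kubernetes cluster with kubeadm

First, prepare three CentOS machines.

1. The three machines must be able to ping one another.

2. The three machines must be on the same network segment, within a single network.

3. For setting the machines up, refer to the address below.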
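The "same network segment" requirement can be checked with a little shell arithmetic. This is only a sketch: the function names are made up for illustration, the addresses are the ones used for the nodes later in this post, and the /24 prefix length is an assumption (the post never states the netmask).

```shell
ip_to_int() {
  # Split a dotted quad on "." and fold the four octets into one integer.
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

same_subnet() {
  # $1, $2: IPv4 addresses; $3: prefix length, e.g. 24 (assumed here).
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

if same_subnet 192.168.1.111 192.168.1.122 24; then
  echo "same segment"
else
  echo "different segments"
fi
```

Two addresses share a segment when they are equal after masking off the host bits, which is all the comparison does.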

Update the packages on all three machines:

yum -y update

Then install the dependencies (in the session below, jq is reported as not available from the configured repositories and is skipped):

[root@manager-node ~]# yum install -y conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

Package ipset-7.1-1.el7.x86_64 already installed and latest version

No package jq available.

Package curl-7.29.0-54.el7_7.1.x86_64 already installed and latest version

Package iptables-1.4.21-33.el7.x86_64 already installed and latest version

Package libseccomp-2.3.1-3.el7.x86_64 already installed and latest version

Resolving Dependencies

--> Running transaction check

---> Package conntrack-tools.x86_64 0:1.4.4-5.el7_7.2 will be installed

--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

--> Processing Dependency: libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

--> Processing Dependency: libnetfilter_queue.so.1()(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

--> Processing Dependency: libnetfilter_cttimeout.so.1()(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

--> Processing Dependency: libnetfilter_cthelper.so.0()(64bit) for package: conntrack-tools-1.4.4-5.el7_7.2.x86_64

---> Package ipvsadm.x86_64 0:1.27-7.el7 will be installed

---> Package sysstat.x86_64 0:10.1.5-18.el7 will be installed

--> Processing Dependency: libsensors.so.4()(64bit) for package: sysstat-10.1.5-18.el7.x86_64

--> Running transaction check

---> Package libnetfilter_cthelper.x86_64 0:1.0.0-10.el7_7.1 will be installed

---> Package libnetfilter_cttimeout.x86_64 0:1.0.0-6.el7_7.1 will be installed

---> Package libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 will be installed

---> Package lm_sensors-libs.x86_64 0:3.4.0-8.20160601gitf9185e5.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================================================================================================================================

Package Arch Version Repository Size

===================================================================================================================================================================================================================

Installing:

conntrack-tools x86_64 1.4.4-5.el7_7.2 updates 187 k

ipvsadm x86_64 1.27-7.el7 base 45 k

sysstat x86_64 10.1.5-18.el7 base 315 k

Installing for dependencies:

libnetfilter_cthelper x86_64 1.0.0-10.el7_7.1 updates 18 k

libnetfilter_cttimeout x86_64 1.0.0-6.el7_7.1 updates 18 k

libnetfilter_queue x86_64 1.0.2-2.el7_2 base 23 k

lm_sensors-libs x86_64 3.4.0-8.20160601gitf9185e5.el7 base 42 k

Transaction Summary

===================================================================================================================================================================================================================

Install 3 Packages (+4 Dependent packages)

Total download size: 647 k

Installed size: 1.9 M

Downloading packages:

(1/7): ipvsadm-1.27-7.el7.x86_64.rpm | 45 kB 00:00:00

(2/7): lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7.x86_64.rpm | 42 kB 00:00:00

(3/7): libnetfilter_cthelper-1.0.0-10.el7_7.1.x86_64.rpm | 18 kB 00:00:00

(4/7): libnetfilter_cttimeout-1.0.0-6.el7_7.1.x86_64.rpm | 18 kB 00:00:00

(5/7): sysstat-10.1.5-18.el7.x86_64.rpm | 315 kB 00:00:00

(6/7): libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm | 23 kB 00:00:00

(7/7): conntrack-tools-1.4.4-5.el7_7.2.x86_64.rpm | 187 kB 00:00:00

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 721 kB/s | 647 kB 00:00:00

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : libnetfilter_cttimeout-1.0.0-6.el7_7.1.x86_64 1/7

Installing : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7.x86_64 2/7

Installing : libnetfilter_queue-1.0.2-2.el7_2.x86_64 3/7

Installing : libnetfilter_cthelper-1.0.0-10.el7_7.1.x86_64 4/7

Installing : conntrack-tools-1.4.4-5.el7_7.2.x86_64 5/7

Installing : sysstat-10.1.5-18.el7.x86_64 6/7

Installing : ipvsadm-1.27-7.el7.x86_64 7/7

Verifying : libnetfilter_cthelper-1.0.0-10.el7_7.1.x86_64 1/7

Verifying : conntrack-tools-1.4.4-5.el7_7.2.x86_64 2/7

Verifying : libnetfilter_queue-1.0.2-2.el7_2.x86_64 3/7

Verifying : ipvsadm-1.27-7.el7.x86_64 4/7

Verifying : sysstat-10.1.5-18.el7.x86_64 5/7

Verifying : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7.x86_64 6/7

Verifying : libnetfilter_cttimeout-1.0.0-6.el7_7.1.x86_64 7/7

Installed:

conntrack-tools.x86_64 0:1.4.4-5.el7_7.2 ipvsadm.x86_64 0:1.27-7.el7 sysstat.x86_64 0:10.1.5-18.el7

Dependency Installed:

Installing Docker [run on all three machines]

Remove any existing Docker packages

[root@manager-node ~]# sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

-----------------------------------

Install Docker's dependencies

[root@manager-node ~]# sudo yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

Add the Docker repository

[root@manager-node ~]# sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

Loaded plugins: fastestmirror

adding repo from: https://download.docker.com/linux/centos/docker-ce.repo

grabbing file https://download.docker.com/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@manager-node ~]#

-----------------------------------

Install Docker (version 18.09.0 is pinned here)

[vagrant@localhost ~]$ sudo yum install -y docker-ce-18.09.0 docker-ce-cli-18.09.0 containerd.io

That completes the installation.

Start Docker

[root@manager-node ~]# sudo systemctl start docker

Enable Docker at boot

[root@manager-node ~]# sudo systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@manager-node ~]#

Edit the hosts file on all three machines

manager-node

Set the server's hostname to manager-node; this is the name the hosts file entries resolve.

[root@manager-node ~]# hostnamectl set-hostname manager-node

Add the following entries to /etc/hosts

192.168.1.111 manager-node

192.168.1.122 worker01-node

192.168.1.133 worker02-node

worker01-node

Set the server's hostname to worker01-node; this is the name the hosts file entries resolve.

[root@worker01-node ~]# hostnamectl set-hostname worker01-node

[root@worker01-node ~]#

Add the following entries to /etc/hosts

192.168.1.111 manager-node

192.168.1.122 worker01-node

192.168.1.133 worker02-node

worker02-node

Set the server's hostname to worker02-node; this is the name the hosts file entries resolve.

[root@worker02-node ~]# hostnamectl set-hostname worker02-node

Add the following entries to /etc/hosts

192.168.1.111 manager-node

192.168.1.122 worker01-node

192.168.1.133 worker02-node

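Note

1. After editing the hosts file, no further command is needed; the change takes effect immediately,

because the resolver reads the hosts file each time a name is looked up.

Test name resolution (every node should be able to ping every other node by name)

[root@worker02-node ~]# hostnamectl set-hostname worker02-node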

[root@worker02-node ~]# vi /etc/hosts

[root@worker02-node ~]# ping manager-node

PING manager-node (192.168.1.111) 56(84) bytes of data.

64 bytes from manager-node (192.168.1.111): icmp_seq=1 ttl=64 time=1.48 ms

64 bytes from manager-node (192.168.1.111): icmp_seq=2 ttl=64 time=0.609 ms

^Z

[1]+ Stopped ping manager-node

[root@worker02-node ~]#
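Before pinging, the entries themselves can be checked. The sketch below looks names up in a hosts-format file with awk; `lookup_host` is a hypothetical helper name, and it reads a temporary copy rather than the real /etc/hosts so it can be tried anywhere.

```shell
lookup_host() {
  # $1: hosts-format file, $2: hostname. Prints the first matching IP.
  awk -v name="$2" '
    /^[[:space:]]*#/ { next }   # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$1"
}

hosts_file=$(mktemp)
cat > "$hosts_file" <<'EOF'
192.168.1.111 manager-node
192.168.1.122 worker01-node
192.168.1.133 worker02-node
EOF

for node in manager-node worker01-node worker02-node; do
  echo "$node -> $(lookup_host "$hosts_file" "$node")"
done
```

A name that is misspelled in the file simply returns nothing, which makes typos in the entries easy to spot.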

-----------------------------------

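System configuration (run on all three machines; these steps follow the requirements in the official documentation)

Stop and disable the firewall [so that the cluster nodes can reach one another's IPs and ports]

[root@manager-node ~]# systemctl stop firewalld && systemctl disable firewalld

Put SELinux into permissive mode [SELinux is a Linux security module that controls which resources a process may access]

[root@manager-node ~]# setenforce 0

[root@manager-node ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

[root@manager-node ~]#

Turn off swap

1. Swap is similar to virtual memory on Windows: when RAM runs short, the system uses disk space as additional memory.

[root@manager-node ~]# swapoff -a

[root@manager-node ~]# sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab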
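To see what the sed expression does without touching /etc/fstab, it can be run against a throwaway file. The two fstab entries below are made up for illustration; only the sed expression comes from the command above.

```shell
fstab_copy=$(mktemp)
cat > "$fstab_copy" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Same expression as the command above, but writing to a second file
# instead of editing /etc/fstab in place.
sed '/swap/s/^\(.*\)$/#\1/g' "$fstab_copy" > "$fstab_copy.new"
cat "$fstab_copy.new"
```

Every line containing "swap" gets a leading "#", so the swap entry is no longer mounted at boot; other lines pass through unchanged.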

Flush the iptables rules and set the FORWARD policy to ACCEPT

[root@manager-node ~]# iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

Set kernel parameters

Run the following

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

Sample run

[root@manager-node ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@manager-node ~]#

[root@manager-node ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

* Applying /etc/sysctl.conf ...

[root@manager-node ~]#
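In the output above the two bridge settings appear twice, first as 0 from 00-system.conf and later as 1 from k8s.conf, and the later assignment is the one that sticks. The sketch below imitates that with a plain text file; `conf_value` is a hypothetical helper, and it only parses text, it does not query the kernel.

```shell
conf_value() {
  # $1: file of "key = value" lines, $2: key.
  # Prints the value from the last line that sets the key.
  awk -F'=' -v key="$2" '
    { k = $1; gsub(/[[:space:]]/, "", k) }
    k == key { v = $2; gsub(/[[:space:]]/, "", v); found = v }
    END { if (found != "") print found }
  ' "$1"
}

applied=$(mktemp)
cat > "$applied" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
  echo "$key = $(conf_value "$applied" "$key")"
done
```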

-----------------------------------

Installing kubeadm, kubelet and kubectl

kubeadm: the command to bootstrap the cluster.

kubelet: the component that runs on all of the machines in your cluster and does things like starting pods and containers.

kubectl: the command line util to talk to your cluster.

-----------------------------------

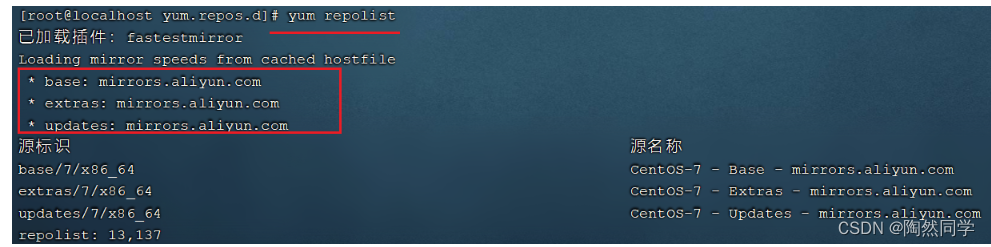

Configure the yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

-----------------------------------

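1. A note on this command: cat copies its standard input to standard output.

<<EOF starts a here-document, which supplies the lines that follow as that input;

the later EOF marks the end of the here-document;

> /etc/yum.repos.d/kubernetes.repo redirects the output into that file,

so the file ends up containing the lines below.

File contents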

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
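One detail of here-documents is worth trying out: with an unquoted delimiter (<<EOF) the shell expands variables in the body, while a quoted delimiter (<<'EOF') writes the body literally. The sketch below writes two small files to a temporary directory; the file names and the [demo] section are made up for illustration.

```shell
out_dir=$(mktemp -d)
name=Kubernetes

# Unquoted delimiter: $name is expanded before the text reaches cat.
cat <<EOF > "$out_dir/expanded.repo"
[demo]
name=$name
EOF

# Quoted delimiter: the body is written exactly as typed.
cat <<'EOF' > "$out_dir/literal.repo"
[demo]
name=$name
EOF

cat "$out_dir/expanded.repo" "$out_dir/literal.repo"
```

The repo file above contains no "$", so either form produces the same result there; the quoted form is the safer habit when the content might.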

-----------------------------------

Installing kubeadm, kubelet and kubectl

List the available kubeadm versions, sorted

[root@manager-node ~]# yum list kubeadm --showduplicates | sort -r

* updates: mirror.bit.edu.cn

Loading mirror speeds from cached hostfile

......

kubeadm.x86_64 1.14.9-0 kubernetes

kubeadm.x86_64 1.14.8-0 kubernetes

kubeadm.x86_64 1.14.7-0 kubernetes

kubeadm.x86_64 1.14.6-0 kubernetes

kubeadm.x86_64 1.14.5-0 kubernetes

kubeadm.x86_64 1.14.4-0 kubernetes

....

Available Packages

[root@manager-node ~]#
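A caveat on `sort -r`: it compares text, not version numbers, so a two-digit patch release sorts below a one-digit one. The sketch below uses a short illustrative list (not the full repository listing) and assumes a `sort` that supports `-V`, as GNU coreutils does.

```shell
versions='1.14.9-0
1.14.10-0
1.14.0-0'

echo "text order, reversed:"
printf '%s\n' "$versions" | sort -r

echo "version order, reversed:"
printf '%s\n' "$versions" | sort -rV

# The first line of the version-aware listing is the newest release.
newest=$(printf '%s\n' "$versions" | sort -rV | head -n 1)
echo "newest: $newest"
```

For this walkthrough the exact version is pinned anyway, so the ordering only matters when picking the latest patch release by eye.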

-----------------------------------

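Install version 1.14.0 of all three packages. In the session below the first attempt fails because /etc/yum.repos.d/kubernetes.repo had not yet been created on this machine; once the file is written, the same command succeeds. The Docker and kubelet commands that appear in between were run before the packages were installed, which is why they fail.

[root@manager-node ~]# yum install -y kubeadm-1.14.0-0 kubelet-1.14.0-0 kubectl-1.14.0-0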

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

No package kubeadm-1.14.0-0 available.

No package kubelet-1.14.0-0 available.

No package kubectl-1.14.0-0 available.

Error: Nothing to do

[root@manager-node ~]#

[root@manager-node ~]# cat /etc/yum.repos.d/kubernetes.repo

cat: /etc/yum.repos.d/kubernetes.repo: No such file or directory

[root@manager-node ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

> http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@manager-node ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@manager-node ~]#


[root@manager-node ~]# vi /etc/docker/daemon.json

[root@manager-node ~]# systemctl restart docker

[root@manager-node ~]# sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

sed: can't read /etc/systemd/system/kubelet.service.d/10-kubeadm.conf: No such file or directory

[root@manager-node ~]# sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

sed: can't read /etc/systemd/system/kubelet.service.d/10-kubeadm.conf: No such file or directory

[root@manager-node ~]# systemctl enable kubelet && systemctl start kubelet

Failed to execute operation: No such file or directory

[root@manager-node ~]# yum install -y kubeadm-1.14.0-0 kubelet-1.14.0-0 kubectl-1.14.0-0

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: mirror.bit.edu.cn

* updates: mirror.bit.edu.cn

kubernetes | 1.4 kB 00:00:00

kubernetes/primary | 61 kB 00:00:00

kubernetes 442/442

Resolving Dependencies

--> Running transaction check

---> Package kubeadm.x86_64 0:1.14.0-0 will be installed

--> Processing Dependency: kubernetes-cni >= 0.7.5 for package: kubeadm-1.14.0-0.x86_64

--> Processing Dependency: cri-tools >= 1.11.0 for package: kubeadm-1.14.0-0.x86_64

---> Package kubectl.x86_64 0:1.14.0-0 will be installed

---> Package kubelet.x86_64 0:1.14.0-0 will be installed

--> Processing Dependency: socat for package: kubelet-1.14.0-0.x86_64

--> Running transaction check

---> Package cri-tools.x86_64 0:1.13.0-0 will be installed

---> Package kubernetes-cni.x86_64 0:0.7.5-0 will be installed

---> Package socat.x86_64 0:1.7.3.2-2.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================================================================================================================================

Package Arch Version Repository Size

===================================================================================================================================================================================================================

Installing:

kubeadm x86_64 1.14.0-0 kubernetes 8.7 M

kubectl x86_64 1.14.0-0 kubernetes 9.5 M

kubelet x86_64 1.14.0-0 kubernetes 23 M

Installing for dependencies:

cri-tools x86_64 1.13.0-0 kubernetes 5.1 M

kubernetes-cni x86_64 0.7.5-0 kubernetes 10 M

socat x86_64 1.7.3.2-2.el7 base 290 k

Transaction Summary

===================================================================================================================================================================================================================

Install 3 Packages (+3 Dependent packages)

Total download size: 57 M

Installed size: 258 M

Downloading packages:

(1/6): fea2c041b42bef6e4de4ee45eee4456236f2feb3d66572ac310f857676fe9598-kubeadm-1.14.0-0.x86_64.rpm | 8.7 MB 00:00:40

(2/6): 14bfe6e75a9efc8eca3f638eb22c7e2ce759c67f95b43b16fae4ebabde1549f3-cri-tools-1.13.0-0.x86_64.rpm | 5.1 MB 00:00:45

(3/6): 2b52e839216dfc620bd1429cdb87d08d00516eaa75597ad4491a9c1e7db3c392-kubectl-1.14.0-0.x86_64.rpm | 9.5 MB 00:00:25

(4/6): socat-1.7.3.2-2.el7.x86_64.rpm | 290 kB 00:00:10

(5/6): 6089961a11403e579c547532462e16b1bb1f97ec539e4671c4c15f377c427c18-kubelet-1.14.0-0.x86_64.rpm | 23 MB 00:02:31

(6/6): 548a0dcd865c16a50980420ddfa5fbccb8b59621179798e6dc905c9bf8af3b34-kubernetes-cni-0.7.5-0.x86_64.rpm | 10 MB 00:02:16

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 287 kB/s | 57 MB 00:03:22

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : socat-1.7.3.2-2.el7.x86_64 1/6

Installing : kubernetes-cni-0.7.5-0.x86_64 2/6

Installing : kubelet-1.14.0-0.x86_64 3/6

Installing : kubectl-1.14.0-0.x86_64 4/6

Installing : cri-tools-1.13.0-0.x86_64 5/6

Installing : kubeadm-1.14.0-0.x86_64 6/6

Verifying : kubelet-1.14.0-0.x86_64 1/6

Verifying : kubeadm-1.14.0-0.x86_64 2/6

Verifying : cri-tools-1.13.0-0.x86_64 3/6

Verifying : kubectl-1.14.0-0.x86_64 4/6

Verifying : kubernetes-cni-0.7.5-0.x86_64 5/6

Verifying : socat-1.7.3.2-2.el7.x86_64 6/6

Installed:

kubeadm.x86_64 0:1.14.0-0 kubectl.x86_64 0:1.14.0-0 kubelet.x86_64 0:1.14.0-0

Dependency Installed:

cri-tools.x86_64 0:1.13.0-0 kubernetes-cni.x86_64 0:0.7.5-0 socat.x86_64 0:1.7.3.2-2.el7

Complete!

[root@manager-node ~]#

-----------------------------------

Use the same cgroup driver for Docker and Kubernetes

[root@worker01-node ~]# vi /etc/docker/daemon.json

with the following content

{
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Restart Docker

[root@worker01-node ~]# systemctl restart docker
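To confirm which driver the file configures, the value can be pulled out with sed. This is a text-matching sketch, not a JSON parser, and `cgroup_driver` is a hypothetical helper; it works on a temporary copy with the same content as above.

```shell
cgroup_driver() {
  # $1: path to a daemon.json-style file. Prints the driver name, if any.
  sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$1"
}

daemon_json=$(mktemp)
cat > "$daemon_json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

echo "docker cgroup driver: $(cgroup_driver "$daemon_json")"
```

On a live host, `docker info` also reports the driver actually in use after the restart.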

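kubelet settings: the "No such file or directory" error below is expected, because the drop-in file does not exist at this path on this system, so the command changes nothing. Note that the substitution itself would switch the kubelet to cgroupfs, which would not match the systemd driver configured for Docker above.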

[root@manager-node ~]# sed -i "s/cgroup-driver=systemd/cgroup-driver=cgroupfs/g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

sed: can't read /etc/systemd/system/kubelet.service.d/10-kubeadm.conf: No such file or directory

[root@manager-node ~]#

-----------------------------------

Enable kubelet at boot and start it

[root@manager-node ~]# systemctl enable kubelet && systemctl start kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@manager-node ~]#


-----------------------------------

Pull the images and push them to your own registry

First, list the images that kubeadm requires

[root@manager-node ~]# kubeadm config images list

I1229 08:30:24.617466 4852 version.go:96] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I1229 08:30:24.617556 4852 version.go:97] falling back to the local client version: v1.14.0

k8s.gcr.io/kube-apiserver:v1.14.0

k8s.gcr.io/kube-controller-manager:v1.14.0

k8s.gcr.io/kube-scheduler:v1.14.0

k8s.gcr.io/kube-proxy:v1.14.0

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

[root@manager-node ~]#
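The pull script that follows uses the image names without the k8s.gcr.io/ prefix. As a sketch, the prefix can be stripped from the listing with sed; the sample text is copied from the output above (three of the seven lines), and `short_names` is a hypothetical helper name.

```shell
image_list='k8s.gcr.io/kube-apiserver:v1.14.0
k8s.gcr.io/pause:3.1
k8s.gcr.io/coredns:1.3.1'

short_names() {
  # Reads image references on stdin and drops everything up to the last "/".
  sed 's|.*/||'
}

printf '%s\n' "$image_list" | short_names
```

This leaves name:tag pairs such as pause:3.1, which can then be combined with any registry prefix.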

-----------------------------------

Pulling the images

Create the script (saved as kubeadm.sh, the name used when it is run below)

#!/bin/bash
# Pull each control-plane image from the Aliyun mirror, retag it under
# k8s.gcr.io so that kubeadm finds it locally, then drop the mirror tag.
set -e

KUBE_VERSION=v1.14.0
KUBE_PAUSE_VERSION=3.1
ETCD_VERSION=3.3.10
CORE_DNS_VERSION=1.3.1

GCR_URL=k8s.gcr.io
ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/google_containers

images=(kube-proxy:${KUBE_VERSION}
kube-scheduler:${KUBE_VERSION}
kube-controller-manager:${KUBE_VERSION}
kube-apiserver:${KUBE_VERSION}
pause:${KUBE_PAUSE_VERSION}
etcd:${ETCD_VERSION}
coredns:${CORE_DNS_VERSION})

for imageName in "${images[@]}" ; do
  docker pull "$ALIYUN_URL/$imageName"
  docker tag "$ALIYUN_URL/$imageName" "$GCR_URL/$imageName"
  docker rmi "$ALIYUN_URL/$imageName"
done
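Before running the real thing, it can help to print what the loop would do. The sketch below is a dry run: `plan_retag` is a hypothetical helper that only echoes the three docker commands per image and never calls docker. The registry values are the ones from the script above.

```shell
SRC_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers
DST_REGISTRY=k8s.gcr.io

plan_retag() {
  # Prints, for every image name given, the three docker commands the
  # script would run. Nothing is executed.
  for image in "$@"; do
    echo "docker pull $SRC_REGISTRY/$image"
    echo "docker tag $SRC_REGISTRY/$image $DST_REGISTRY/$image"
    echo "docker rmi $SRC_REGISTRY/$image"
  done
}

plan_retag kube-proxy:v1.14.0 pause:3.1
```

The retag step is what matters: after it, the image is present locally under the k8s.gcr.io name that kubeadm looks for.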

-----------------------------------

Run the script above (on CentOS, sh is bash, so the array syntax in the script works)

[root@manager-node kubeadm]# sh kubeadm.sh

v1.14.0: Pulling from google_containers/kube-proxy

346aee5ea5bc: Pull complete

1e695dec1fee: Pull complete

9ce77f082c19: Pull complete

Digest: sha256:a704064100b363856afa4cee160a51948b9ac49bbc34ba97caeb7928055e9de1

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy@sha256:a704064100b363856afa4cee160a51948b9ac49bbc34ba97caeb7928055e9de1

v1.14.0: Pulling from google_containers/kube-scheduler

346aee5ea5bc: Already exists

25d09f49ddd0: Pull complete

Digest: sha256:0484d3f811282a124e60a48de8f19f91913bac4d0ba0805d2ed259ea3b691a5e

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler@sha256:0484d3f811282a124e60a48de8f19f91913bac4d0ba0805d2ed259ea3b691a5e

v1.14.0: Pulling from google_containers/kube-controller-manager

346aee5ea5bc: Already exists

fb9302cbe084: Pull complete

Digest: sha256:09c62c11cdfe8dc43e0314174271ca434329c7991d6db5ef7c41a95da399cbf8

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager@sha256:09c62c11cdfe8dc43e0314174271ca434329c7991d6db5ef7c41a95da399cbf8

v1.14.0: Pulling from google_containers/kube-apiserver

346aee5ea5bc: Already exists

a1448280d5df: Pull complete

Digest: sha256:ebfb9018e345697e85d7adc4664c9340570bca33fff126e158264a791c6a5708

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver@sha256:ebfb9018e345697e85d7adc4664c9340570bca33fff126e158264a791c6a5708

3.1: Pulling from google_containers/pause

cf9202429979: Pull complete

Digest: sha256:759c3f0f6493093a9043cc813092290af69029699ade0e3dbe024e968fcb7cca

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/pause@sha256:759c3f0f6493093a9043cc813092290af69029699ade0e3dbe024e968fcb7cca

3.3.10: Pulling from google_containers/etcd

90e01955edcd: Pull complete

6369547c492e: Pull complete

bd2b173236d3: Pull complete

Digest: sha256:240bd81c2f54873804363665c5d1a9b8e06ec5c63cfc181e026ddec1d81585bb

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd@sha256:240bd81c2f54873804363665c5d1a9b8e06ec5c63cfc181e026ddec1d81585bb

1.3.1: Pulling from google_containers/coredns

e0daa8927b68: Pull complete

3928e47de029: Pull complete

Digest: sha256:638adb0319813f2479ba3642bbe37136db8cf363b48fb3eb7dc8db634d8d5a5b

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns@sha256:638adb0319813f2479ba3642bbe37136db8cf363b48fb3eb7dc8db634d8d5a5b

[root@manager-node kubeadm]#

-----------------------------------

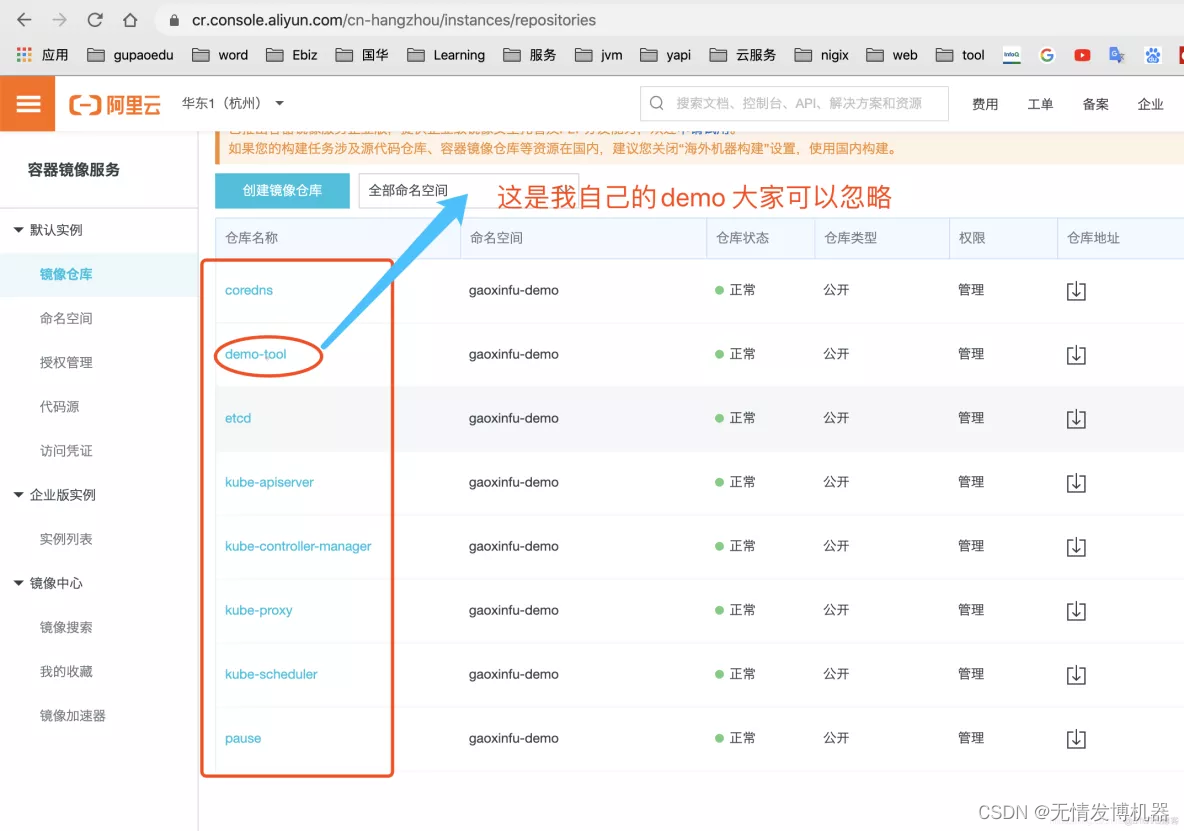

Pushing the images to your own registry (this only needs to be run on one machine)

First log in to the registry (I use an Aliyun registry here)

[root@manager-node kubeadm]# docker login --username=高新富20180421 registry.cn-hangzhou.aliyuncs.com

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@manager-node kubeadm]#

-----------------------------------

Pushing

Create the push script kubeadm-push-aliyun.sh

#!/bin/bash
# Tag each local k8s.gcr.io image for the personal Aliyun namespace,
# push it, then remove the temporary tag.
set -e

KUBE_VERSION=v1.14.0
KUBE_PAUSE_VERSION=3.1
ETCD_VERSION=3.3.10
CORE_DNS_VERSION=1.3.1

GCR_URL=k8s.gcr.io
ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo

images=(kube-proxy:${KUBE_VERSION}
kube-scheduler:${KUBE_VERSION}
kube-controller-manager:${KUBE_VERSION}
kube-apiserver:${KUBE_VERSION}
pause:${KUBE_PAUSE_VERSION}
etcd:${ETCD_VERSION}
coredns:${CORE_DNS_VERSION})

for imageName in "${images[@]}" ; do
  docker tag "$GCR_URL/$imageName" "$ALIYUN_URL/$imageName"
  docker push "$ALIYUN_URL/$imageName"
  docker rmi "$ALIYUN_URL/$imageName"
done

-----------------------------------

ALIYUN_URL=registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo

Here the images are pushed to my own Aliyun namespace, gaoxinfu-demo.
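Since the push target has to include a namespace, a quick sanity check on ALIYUN_URL can catch a missing one before anything is pushed. This is a sketch with a hypothetical helper, `has_namespace`, that only inspects the string shape "host/namespace"; it does not contact the registry.

```shell
has_namespace() {
  # $1: registry prefix, expected to look like "host/namespace".
  case "$1" in
    */*/*) return 1 ;;   # more than one "/"
    ?*/?*) return 0 ;;   # exactly one "/", both sides non-empty
    *)     return 1 ;;   # no "/" at all, or an empty side
  esac
}

for url in registry.cn-hangzhou.aliyuncs.com \
           registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo; do
  if has_namespace "$url"; then
    echo "ok: $url"
  else
    echo "missing namespace: $url"
  fi
done
```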

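Run kubeadm-push-aliyun.sh. In the session below, the first run is denied because the repository path lacks the namespace (registry.cn-hangzhou.aliyuncs.com/kube-proxy); after ALIYUN_URL is corrected in the script, the push succeeds.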

[root@manager-node kubeadm]# sh kubeadm-push-aliyun.sh

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/kube-proxy]

49ffb7ee5526: Preparing

0b8d2e946c93: Preparing

5ba3be777c2d: Preparing

denied: requested access to the resource is denied

[root@worker01-node kubeadm]# vi kubeadm-push-aliyun.sh

[root@worker01-node kubeadm]# sh kubeadm-push-aliyun.sh

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-proxy]

49ffb7ee5526: Pushed

0b8d2e946c93: Pushed

5ba3be777c2d: Pushed

v1.14.0: digest: sha256:a704064100b363856afa4cee160a51948b9ac49bbc34ba97caeb7928055e9de1 size: 951

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-proxy:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-proxy@sha256:a704064100b363856afa4cee160a51948b9ac49bbc34ba97caeb7928055e9de1

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-scheduler]

f46bd0cdf014: Mounted from google_containers/kube-scheduler

5ba3be777c2d: Mounted from gaoxinfu-demo/kube-proxy

v1.14.0: digest: sha256:0484d3f811282a124e60a48de8f19f91913bac4d0ba0805d2ed259ea3b691a5e size: 741

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-scheduler:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-scheduler@sha256:0484d3f811282a124e60a48de8f19f91913bac4d0ba0805d2ed259ea3b691a5e

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-controller-manager]

e54a9b2ca97a: Mounted from google_containers/kube-controller-manager

5ba3be777c2d: Mounted from gaoxinfu-demo/kube-scheduler

v1.14.0: digest: sha256:09c62c11cdfe8dc43e0314174271ca434329c7991d6db5ef7c41a95da399cbf8 size: 741

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-controller-manager:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-controller-manager@sha256:09c62c11cdfe8dc43e0314174271ca434329c7991d6db5ef7c41a95da399cbf8

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-apiserver]

ed0630bf1e64: Mounted from google_containers/kube-apiserver

5ba3be777c2d: Mounted from gaoxinfu-demo/kube-controller-manager

v1.14.0: digest: sha256:ebfb9018e345697e85d7adc4664c9340570bca33fff126e158264a791c6a5708 size: 741

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-apiserver:v1.14.0

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/kube-apiserver@sha256:ebfb9018e345697e85d7adc4664c9340570bca33fff126e158264a791c6a5708

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/pause]

e17133b79956: Mounted from google_containers/pause

3.1: digest: sha256:759c3f0f6493093a9043cc813092290af69029699ade0e3dbe024e968fcb7cca size: 527

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/pause:3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/pause@sha256:759c3f0f6493093a9043cc813092290af69029699ade0e3dbe024e968fcb7cca

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/etcd]

6fbfb277289f: Mounted from google_containers/etcd

30796113fb51: Mounted from google_containers/etcd

8a788232037e: Mounted from google_containers/etcd

3.3.10: digest: sha256:240bd81c2f54873804363665c5d1a9b8e06ec5c63cfc181e026ddec1d81585bb size: 950

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/etcd:3.3.10

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/etcd@sha256:240bd81c2f54873804363665c5d1a9b8e06ec5c63cfc181e026ddec1d81585bb

The push refers to repository [registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/coredns]

c6a5fc8a3f01: Mounted from google_containers/coredns

fb61a074724d: Mounted from google_containers/coredns

1.3.1: digest: sha256:638adb0319813f2479ba3642bbe37136db8cf363b48fb3eb7dc8db634d8d5a5b size: 739

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/coredns:1.3.1

Untagged: registry.cn-hangzhou.aliyuncs.com/gaoxinfu-demo/coredns@sha256:638adb0319813f2479ba3642bbe37136db8cf363b48fb3eb7dc8db634d8d5a5b

[root@worker01-node kubeadm]#

-----------------------------------

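Once this finishes, check that your Aliyun image registry now contains these images.

Initializing the master node with kubeadm init

kubeadm reset: undoing an initialization

Use this command if you have already initialized a node and want to undo it, or abandon the setup and start over.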

[root@manager-node ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W1229 09:23:34.757252 7056 reset.go:234] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

[root@manager-node ~]#

-----------------------------------

Initializing with kubeadm init

kubeadm init --kubernetes-version=1.14.0 --apiserver-advertise-address=192.168.1.111 --pod-network-cidr=10.244.0.0/16

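Parameters

1. --apiserver-advertise-address=192.168.1.111: the IP here is the master node's internal IP; in my setup manager-node is 192.168.1.111.

2. --kubernetes-version=1.14.0: pins the control plane to the same version as the packages installed above.

3. --pod-network-cidr=10.244.0.0/16: the address range from which pod IPs are allocated.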
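Because the advertise address differs per environment, it can be convenient to keep the three values in variables and assemble the command from them. The sketch below only prints the command line; the variable and function names are made up for illustration, and the values are the ones used in this post.

```shell
K8S_VERSION=1.14.0
MASTER_IP=192.168.1.111    # internal IP of manager-node in this post
POD_CIDR=10.244.0.0/16

kubeadm_init_cmd() {
  # Prints the command line only; nothing is executed.
  printf 'kubeadm init --kubernetes-version=%s --apiserver-advertise-address=%s --pod-network-cidr=%s\n' \
    "$K8S_VERSION" "$MASTER_IP" "$POD_CIDR"
}

kubeadm_init_cmd
```

Printing first makes it easy to review the flags before running the real command on the master node.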

Sample run

[root@manager-node ~]# kubeadm init --kubernetes-version=1.14.0 --apiserver-advertise-address=192.168.1.111 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.14.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [manager-node kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.111]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [manager-node localhost] and IPs [192.168.1.111 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [manager-node localhost] and IPs [192.168.1.111 127.0.0.1 ::1]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 15.506013 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node manager-node as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node manager-node as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 3wgo6s.s8gm0tum1vy4a89f

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.111:6443 --token 3wgo6s.s8gm0tum1vy4a89f \

--discovery-token-ca-cert-hash sha256:f2b7372e6745ac4e4b121b76e594b6c172730e9d76043fd307909e525c58cb9a

[root@manager-node ~]#

-----------------------------------

Notes

1. Save the last command in the output; the worker nodes will need it later to join the cluster on the master node:

kubeadm join 192.168.1.111:6443 --token 3wgo6s.s8gm0tum1vy4a89f \

--discovery-token-ca-cert-hash sha256:f2b7372e6745ac4e4b121b76e594b6c172730e9d76043fd307909e525c58cb9a
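If the output of kubeadm init was saved to a file, the join command can be recovered from it. The sketch below assumes the two-line layout shown above (a line ending in a backslash followed by one continuation line); the helper name extract_join_cmd and the sample token and hash are made up for illustration. If the output is lost, running kubeadm token create --print-join-command on the master should print a fresh join command; bootstrap tokens expire after 24 hours by default.

```shell
# Hypothetical helper: print the "kubeadm join ..." command from a saved
# kubeadm init log as a single line, merging backslash continuation lines.
extract_join_cmd() {
  awk '
    /kubeadm join/ { found = 1 }
    found {
      line = $0
      cont = sub(/\\[ \t]*$/, "", line)        # 1 if the line ended with a backslash
      gsub(/^[ \t]+|[ \t]+$/, "", line)        # trim surrounding whitespace
      out = out (out ? " " : "") line
      if (!cont) { print out; exit }
    }
  ' "$1"
}

# Example with a shortened, made-up log (token and hash are placeholders).
log=$(mktemp)
cat > "$log" <<'LOG'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.111:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0000
LOG
extract_join_cmd "$log"
rm -f "$log"
```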

-----------------------------------

Following the hints printed by init above, run the commands below on the master node

[root@manager-node ~]# mkdir -p $HOME/.kube

[root@manager-node ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@manager-node ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@manager-node ~]#

-----------------------------------

Verify the installation

[root@manager-node ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-fb8b8dccf-6hvcz 0/1 Pending 0 9m8s

coredns-fb8b8dccf-x8xxc 0/1 Pending 0 9m8s

etcd-manager-node 1/1 Running 0 8m30s

kube-apiserver-manager-node 1/1 Running 0 8m12s

kube-controller-manager-manager-node 1/1 Running 0 8m21s

kube-proxy-rvddm 1/1 Running 0 9m9s

kube-scheduler-manager-node 1/1 Running 0 8m2s

[root@manager-node ~]#

-----------------------------------

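Notes

1. The output above shows that etcd, the controller manager, the scheduler and the other components have all been installed and are running as pods.

2. Note that coredns has not started (its pods are Pending); a network plugin must be installed first (see the Calico installation below).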
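To spot pods such as the Pending coredns ones without reading the whole table, the output can be filtered. A minimal sketch, assuming the five-column layout shown above with STATUS in the third column; the helper name not_running_pods is hypothetical.

```shell
# Hypothetical helper: read `kubectl get pods -n kube-system` output on stdin
# and print the name and status of every pod whose STATUS is not Running.
not_running_pods() {
  awk 'NR > 1 && NF >= 3 && $3 != "Running" { print $1, $3 }'
}

# Example with rows copied from the listing above.
not_running_pods <<'OUT'
NAME READY STATUS RESTARTS AGE
coredns-fb8b8dccf-6hvcz 0/1 Pending 0 9m8s
etcd-manager-node 1/1 Running 0 8m30s
OUT
# prints: coredns-fb8b8dccf-6hvcz Pending

# Live use on the master: kubectl get pods -n kube-system | not_running_pods
```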

Health check

[root@manager-node ~]# curl -k https://localhost:6443/healthz

ok[root@manager-node ~]#
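Right after init the API server may need a moment before it answers, so the check can be retried. A minimal sketch, assuming the endpoint prints exactly ok as in the transcript above; the helper name wait_for_ok and the retry values are hypothetical.

```shell
# Hypothetical helper: run a command repeatedly until it prints exactly "ok".
# usage: wait_for_ok ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for_ok() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if [ "$("$@" 2>/dev/null)" = "ok" ]; then
      return 0            # the command answered "ok"
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1                # gave up after ATTEMPTS tries
}

# Demonstration with a stand-in command instead of a real API server.
wait_for_ok 3 0 echo ok && echo "healthy"

# Live use on the master:
#   wait_for_ok 30 2 curl -sk https://localhost:6443/healthz
```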

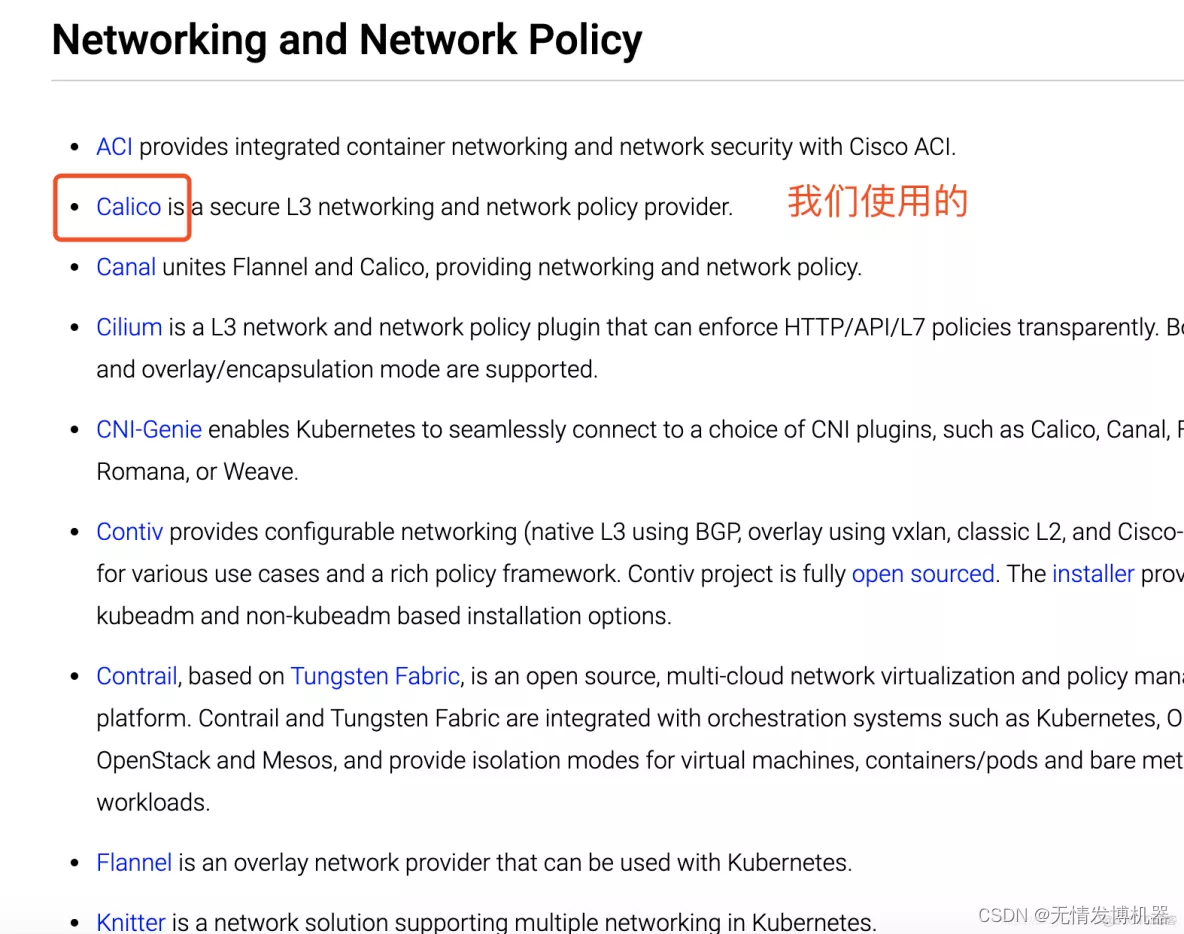

Deploying the Calico network plugin

List of network plugin options

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Installing Calico

Calico documentation

https://docs.projectcalico.org/v3.9/getting-started/kubernetes/

[root@manager-node ~]# kubectl apply -f https://docs.projectcalico.org/v3.9/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

[root@manager-node ~]#

-----------------------------------

kubectl get pods [check whether the installation succeeded]

kubectl get pods --all-namespaces -w [watch the pods in all namespaces]

[root@manager-node ~]# kubectl get pods --all-namespaces -w

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-594b6978c5-7lxqz 1/1 Running 1 14h

kube-system calico-node-82rx7 1/1 Running 1 14h

kube-system coredns-fb8b8dccf-6hvcz 1/1 Running 1 14h

kube-system coredns-fb8b8dccf-x8xxc 1/1 Running 1 14h

kube-system etcd-manager-node 1/1 Running 1 14h

kube-system kube-apiserver-manager-node 1/1 Running 1 14h

kube-system kube-controller-manager-manager-node 1/1 Running 1 14h

kube-system kube-proxy-rvddm 1/1 Running 1 14h

kube-system kube-scheduler-manager-node

-----------------------------------

kubectl get pods -n kube-system [list the Kubernetes system pods]

[root@manager-node demo]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-594b6978c5-7lxqz 1/1 Running 1 16h

calico-node-82rx7 1/1 Running 1 16h

calico-node-xrqf8 1/1 Running 0 104m

calico-node-z86gh 1/1 Running 0 104m

coredns-fb8b8dccf-6hvcz 1/1 Running 1 16h

coredns-fb8b8dccf-x8xxc 1/1 Running 1 16h

etcd-manager-node 1/1 Running 1 16h

kube-apiserver-manager-node 1/1 Running 1 16h

kube-controller-manager-manager-node 1/1 Running 2 16h

kube-proxy-5q9b5 1/1 Running 0 104m

kube-proxy-pw6r7 1/1 Running 0 104m

kube-proxy-rvddm 1/1 Running 1 16h

kube-scheduler-manager-node 1/1 Running 3 16h

[root@manager-node demo]#

-----------------------------------

kubeadm join [join the worker nodes to the cluster]

The command below is the one that kubeadm init printed on success; it must be run on each worker node

kubeadm join 192.168.1.111:6443 --token 3wgo6s.s8gm0tum1vy4a89f \

--discovery-token-ca-cert-hash sha256:f2b7372e6745ac4e4b121b76e594b6c172730e9d76043fd307909e525c58cb9a

Run on worker01-node

[root@worker01-node ~]# kubeadm join 192.168.1.111:6443 --token 3wgo6s.s8gm0tum1vy4a89f \

> --discovery-token-ca-cert-hash sha256:f2b7372e6745ac4e4b121b76e594b6c172730e9d76043fd307909e525c58cb9a

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@worker01-node ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@worker01-node ~]#

-----------------------------------

Run on worker02-node

[root@worker02-node ~]# kubeadm join 192.168.1.111:6443 --token 3wgo6s.s8gm0tum1vy4a89f \

> --discovery-token-ca-cert-hash sha256:f2b7372e6745ac4e4b121b76e594b6c172730e9d76043fd307909e525c58cb9a

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@worker02-node ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@worker02-node ~]#

-----------------------------------

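On manager-node (the master), check whether the worker nodes have joined. The workers have no kubeconfig, so kubectl there falls back to localhost:8080, which is why the kubectl get nodes attempts above were refused.

kubectl get nodes [list the nodes]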

[root@manager-node ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

manager-node Ready master 15h v1.14.0

worker01-node Ready <none> 87s v1.14.0

worker02-node NotReady <none> 83s v1.14.0

[root@manager-node ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

manager-node Ready master 15h v1.14.0

worker01-node Ready <none> 5m21s v1.14.0

worker02-node Ready <none> 5m17s v1.14.0

[root@manager-node ~]#

-----------------------------------

As shown above:

1. worker02-node changes from NotReady to Ready, and the cluster now has three nodes.
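Instead of rerunning the command and reading the table by eye, the number of Ready nodes can be counted from the output. A minimal sketch, assuming STATUS is the second column as in the listings above; the helper name ready_count is hypothetical, and a status such as Ready,SchedulingDisabled is deliberately not counted as Ready.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# "<ready>/<total>", counting only nodes whose STATUS is exactly "Ready".
ready_count() {
  awk 'NR > 1 && NF >= 2 { total++; if ($2 == "Ready") ready++ }
       END { printf "%d/%d\n", ready, total }'
}

# Example with the first listing above, taken while worker02-node was NotReady.
ready_count <<'OUT'
NAME STATUS ROLES AGE VERSION
manager-node Ready master 15h v1.14.0
worker01-node Ready <none> 87s v1.14.0
worker02-node NotReady <none> 83s v1.14.0
OUT
# prints: 2/3

# Live use on the master: kubectl get nodes | ready_count
```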

kubectl get pods -o wide [show detailed pod information]

[root@manager-node demo]# kubectl get pods -o wide

The cluster setup is now complete.

Example: running nginx pods on the cluster

Create a demo directory on manager-node

[root@manager-node ~]# pwd

/root

[root@manager-node ~]# mkdir demo

Create the nginx pod configuration file

Creation script

cat > pod_nginx_kubeadm.yaml <<EOF
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx
  labels:
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      name: nginx
      labels:
        tier: frontend
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

-----------------------------------

Sample run

[root@manager-node demo]# cat > pod_nginx_kubeadm.yaml <<EOF
> apiVersion: apps/v1
> kind: ReplicaSet
> metadata:
>   name: nginx
>   labels:
>     tier: frontend
> spec:
>   replicas: 3
>   selector:
>     matchLabels:
>       tier: frontend
>   template:
>     metadata:
>       name: nginx
>       labels:
>         tier: frontend
>     spec:
>       containers:
>       - name: nginx
>         image: nginx
>         ports:
>         - containerPort: 80
> EOF

[root@manager-node demo]# ls -la

total 4

drwxr-xr-x. 2 root root 36 Jan 2 04:25 .

dr-xr-x---. 7 root root 243 Jan 2 04:24 ..

-rw-r--r--. 1 root root 353 Jan 2 04:25 pod_nginx_kubeadm.yaml

[root@manager-node demo]#

-----------------------------------

kubectl apply -f pod_nginx_kubeadm.yaml [apply the configuration to start the pods]

[root@manager-node demo]# kubectl apply -f pod_nginx_kubeadm.yaml

replicaset.apps/nginx created

[root@manager-node demo]#

Checking with kubectl get pods

kubectl get pods # show the current status

kubectl get pods -w # watch status changes in real time

kubectl describe pod nginx

[root@manager-node demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-9t4qw 0/1 ContainerCreating 0 34s

nginx-h49g8 0/1 ContainerCreating 0 34s

nginx-v6njt 0/1 ContainerCreating 0 34s

-----------------------------------

kubectl get pods [list the pods]

The status above is ContainerCreating; after a while it changes to Running

[root@manager-node demo]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-9t4qw 1/1 Running 0 9m2s

nginx-h49g8 1/1 Running 0 9m2s

nginx-v6njt 1/1 Running 0 9m2s

[root@manager-node demo]#

-----------------------------------

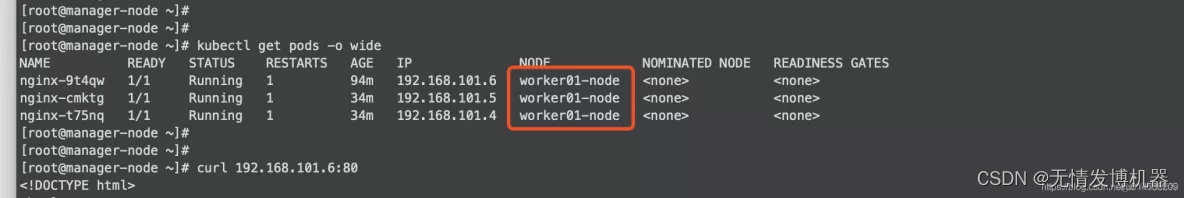

kubectl get pods -o wide [show pod details]

[root@manager-node ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-9t4qw 1/1 Running 1 91m 192.168.101.6 worker01-node <none> <none>

nginx-cmktg 1/1 Running 1 30m 192.168.101.5 worker01-node <none> <none>

nginx-t75nq 1/1 Running 1 30m 192.168.101.4 worker01-node <none> <none>

[root@manager-node ~]#

-----------------------------------

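1. After the ReplicaSet is created from the master node, the scheduler places all three pods on worker01-node in this run, and each pod has its own IP.

2. Note that pod IPs are assigned by the Calico plugin installed earlier. The 192.168.101.x addresses appear to come from Calico's own IP pool (192.168.0.0/16 by default in the v3.9 manifest) rather than from the 10.244.0.0/16 passed to kubeadm init as --pod-network-cidr.

3. The pod IPs above are 192.168.101.6, 192.168.101.5 and 192.168.101.4.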
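How the pods are spread over the nodes can be summarized from the wide output. A minimal sketch, assuming the v1.14 column layout shown above, where NODE is the seventh column and RESTARTS is a single number; the helper name pods_per_node is hypothetical.

```shell
# Hypothetical helper: read `kubectl get pods -o wide` output on stdin and
# print "<node> <pod count>" for every node, sorted by node name.
pods_per_node() {
  awk 'NR > 1 && NF >= 7 { count[$7]++ }
       END { for (n in count) print n, count[n] }' | sort
}

# Example with the listing above.
pods_per_node <<'OUT'
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-9t4qw 1/1 Running 1 91m 192.168.101.6 worker01-node <none> <none>
nginx-cmktg 1/1 Running 1 30m 192.168.101.5 worker01-node <none> <none>
nginx-t75nq 1/1 Running 1 30m 192.168.101.4 worker01-node <none> <none>
OUT
# prints: worker01-node 3

# Live use on the master: kubectl get pods -o wide | pods_per_node
```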

Verify the nginx pods

[root@manager-node ~]#

[root@manager-node ~]# curl 192.168.101.6:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@manager-node ~]#

-----------------------------------

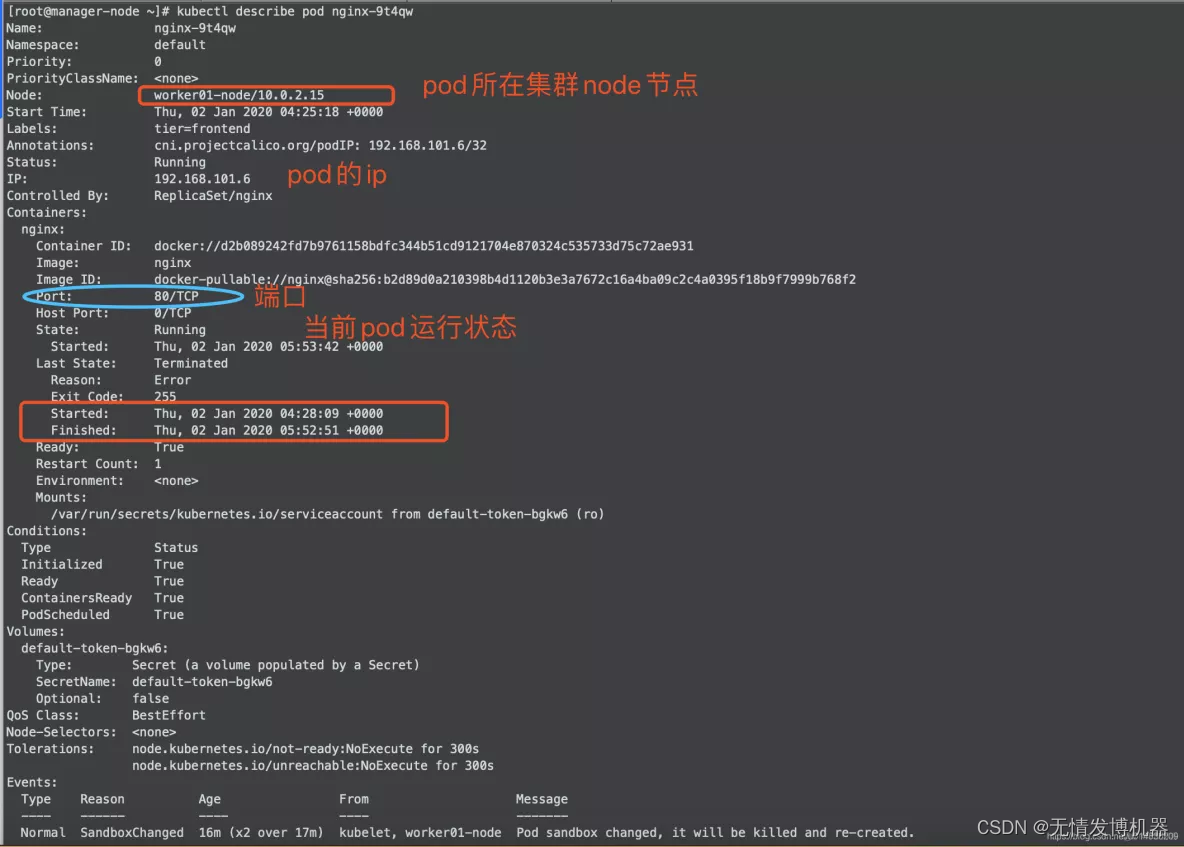

[root@manager-node ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-9t4qw 1/1 Running 1 104m 192.168.101.6 worker01-node <none> <none>

nginx-cmktg 1/1 Running 1 44m 192.168.101.5 worker01-node <none> <none>

nginx-t75nq 1/1 Running 1 44m 192.168.101.4 worker01-node <none> <none>

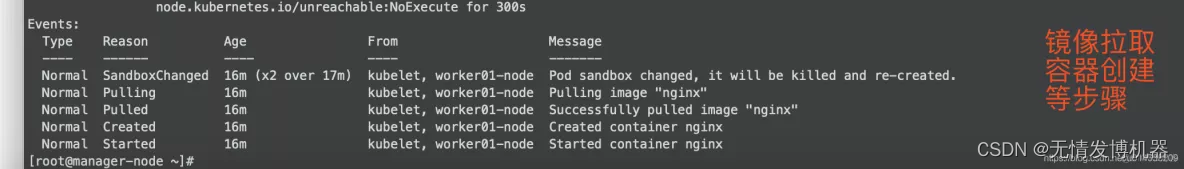

[root@manager-node ~]# kubectl describe pod nginx-9t4qw

## See the screenshot below

-----------------------------------

Delete the pods

kubectl delete -f pod_nginx_kubeadm.yaml

What kubeadm init does, step by step

/*

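01-Run a series of preflight checks to confirm that this machine can host Kubernetes

02-Generate the certificates Kubernetes needs to serve requests, stored in the directory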

/etc/kubernetes/pki/*

03-Generate the kubeconfig files that the other components need to access kube-apiserver

ls /etc/kubernetes/

admin.conf controller-manager.conf kubelet.conf scheduler.conf

04-Generate the pod manifests for the master components

ls /etc/kubernetes/manifests/*.yaml

kube-apiserver.yaml

kube-controller-manager.yaml

kube-scheduler.yaml

05-Generate the pod YAML file for etcd

ls /etc/kubernetes/manifests/*.yaml

kube-apiserver.yaml

kube-controller-manager.yaml

kube-scheduler.yaml

etcd.yaml

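06-As soon as these YAML files appear in /etc/kubernetes/manifests/, the directory watched by the kubelet, the kubelet automatically creates the pods they define, i.e. the containers of the master components. Once those containers have started, kubeadm polls the health-check URL localhost:6443/healthz and waits until the master components are fully up

07-Generate a bootstrap token for the cluster

08-Save important master-node information such as ca.crt in etcd as a ConfigMap (the cluster-info ConfigMap in the kube-public namespace, according to the init log), for later use when deploying worker nodes

09-Finally, install the default add-ons; kube-proxy and DNS are the two add-ons Kubernetes requires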

-----------------------------------
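The files named in steps 03 to 05 can be checked in one pass after init. A minimal sketch: the helper name check_kubeadm_files is hypothetical, and the file list is simply the one from the steps above, relative to /etc/kubernetes.

```shell
# Hypothetical helper: report which of the files listed in steps 03-05 exist
# under ROOT (default /etc/kubernetes). Returns non-zero if any is missing.
check_kubeadm_files() {
  root=${1:-/etc/kubernetes}
  missing=0
  for f in admin.conf controller-manager.conf kubelet.conf scheduler.conf \
           manifests/kube-apiserver.yaml manifests/kube-controller-manager.yaml \
           manifests/kube-scheduler.yaml manifests/etcd.yaml; do
    if [ -e "$root/$f" ]; then
      echo "ok      $root/$f"
    else
      echo "missing $root/$f"
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}

# On the master: check_kubeadm_files /etc/kubernetes
```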