Overview

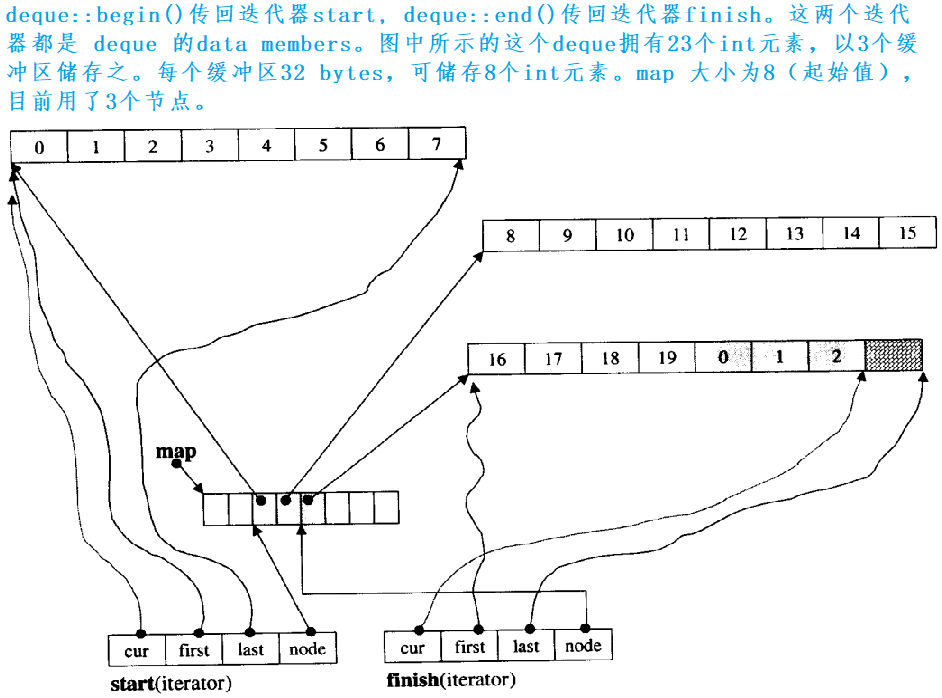

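Most people know that surfaceflinger's job is graphics composition, that is, compositing the layers of a frame. Its rough structure is as follows:

- Each Android app hands its rendered content to the surfaceflinger process, using a surface as the carrier and AIDL interfaces (Binder IPC) as the transport.

- The composition engine inside surfaceflinger negotiates with the HWC: which layers the HWC can display directly, and which layers surfaceflinger must first composite into a single layer before handing it to the HWC.

- Once surfaceflinger and the HWC have agreed on the composition strategy, surfaceflinger passes both the composited layer and the directly displayed layers to the HWC, which then drives the hardware to show them on screen.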
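To make the negotiation result concrete, here is a minimal sketch of how a frame's layers end up split into two groups. Everything in it is hypothetical: `LayerSketch`, `CompositionPlan`, and `planComposition` are illustration names, not SurfaceFlinger or HWC types, and the `hwcCanCompose` flag simply stands in for whatever the real negotiation decides.

```cpp
#include <string>
#include <vector>

// Hypothetical layer description, not a real SurfaceFlinger/HWC type.
struct LayerSketch {
    std::string name;
    bool hwcCanCompose;  // assumed outcome of the negotiation with the HWC
};

// Result of the split: who composes which layer.
struct CompositionPlan {
    std::vector<std::string> deviceLayers;  // displayed directly by the HWC
    std::vector<std::string> clientLayers;  // composited by surfaceflinger first

    // A merged layer is only needed when something was left to surfaceflinger.
    bool needsClientTarget() const { return !clientLayers.empty(); }
};

// Sort every layer into one of the two groups.
CompositionPlan planComposition(const std::vector<LayerSketch>& layers) {
    CompositionPlan plan;
    for (const LayerSketch& layer : layers) {
        if (layer.hwcCanCompose) {
            plan.deviceLayers.push_back(layer.name);
        } else {
            plan.clientLayers.push_back(layer.name);
        }
    }
    return plan;
}
```

For example, with two layers the HWC accepts and one it rejects, the plan holds two device layers, one client layer, and `needsClientTarget()` returns true.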

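This series digs into every aspect of surfaceflinger and tries to answer the following questions:

- What triggers surfaceflinger's composition work? What does the process's message model look like? What drives the loop that performs composition?

- What is a surface? How does a surface relate to graphics buffers?

- What represents a surface inside the surfaceflinger process? How do the apps' graphics buffers get passed over?

- How does graphics data flow inside the surfaceflinger process?

- Since buffers cross process boundaries, how are buffer production and consumption synchronized? What on earth is a Fence?

- What does Vsync mean? What is it for?

This article reads the source code to answer the first question.

The main function of the surfaceflinger process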

int main(int, char**) {

signal(SIGPIPE, SIG_IGN);

hardware::configureRpcThreadpool(1 /* maxThreads */,false /* callerWillJoin */);

startGraphicsAllocatorService();

// When SF is launched in its own process, limit the number of

// binder threads to 4.

ProcessState::self()->setThreadPoolMaxThreadCount(4);

// start the thread pool

sp<ProcessState> ps(ProcessState::self());

ps->startThreadPool();

// instantiate surfaceflinger

sp<SurfaceFlinger> flinger = surfaceflinger::createSurfaceFlinger();

setpriority(PRIO_PROCESS, 0, PRIORITY_URGENT_DISPLAY);

set_sched_policy(0, SP_FOREGROUND);

// Put most SurfaceFlinger threads in the system-background cpuset

// Keeps us from unnecessarily using big cores

// Do this after the binder thread pool init

if (cpusets_enabled()) set_cpuset_policy(0, SP_SYSTEM);

// initialize before clients can connect

flinger->init();

// publish surface flinger

sp<IServiceManager> sm(defaultServiceManager());

sm->addService(String16(SurfaceFlinger::getServiceName()), flinger, false,

IServiceManager::DUMP_FLAG_PRIORITY_CRITICAL | IServiceManager::DUMP_FLAG_PROTO);

startDisplayService(); // dependency on SF getting registered above

if (SurfaceFlinger::setSchedFifo(true) != NO_ERROR) {

ALOGW("Couldn't set to SCHED_FIFO: %s", strerror(errno));

}

// run surface flinger in this thread

flinger->run();

return 0;

}

The code above boils down to three calls:

int main(int, char**) {

..............................;

sp<SurfaceFlinger> flinger = surfaceflinger::createSurfaceFlinger();

..............................;

flinger->init();

..............................;

flinger->run();

return 0;

}

Let's look at what each of these three functions does.

surfaceflinger::createSurfaceFlinger

sp<SurfaceFlinger> createSurfaceFlinger() {

static DefaultFactory factory;

return new SurfaceFlinger(factory);

}

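SurfaceFlinger::init

This mainly initializes submodules such as mCompositionEngine, the displays, and RenderEngine. It does not seem to have much to do with message passing, so we set it aside for now without digging deeper.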

void SurfaceFlinger::init() {

ALOGI( "SurfaceFlinger's main thread ready to run. "

"Initializing graphics H/W...");

Mutex::Autolock _l(mStateLock);

mCompositionEngine->setRenderEngine(renderengine::RenderEngine::create(

renderengine::RenderEngineCreationArgs::Builder()

.setPixelFormat(static_cast<int32_t>(defaultCompositionPixelFormat))

.setImageCacheSize(maxFrameBufferAcquiredBuffers)

.setUseColorManagerment(useColorManagement)

.setEnableProtectedContext(enable_protected_contents(false))

.setPrecacheToneMapperShaderOnly(false)

.setSupportsBackgroundBlur(mSupportsBlur)

.setContextPriority(useContextPriority

? renderengine::RenderEngine::ContextPriority::HIGH

: renderengine::RenderEngine::ContextPriority::MEDIUM)

.build()));

mCompositionEngine->setTimeStats(mTimeStats);

LOG_ALWAYS_FATAL_IF(mVrFlingerRequestsDisplay,

"Starting with vr flinger active is not currently supported.");

mCompositionEngine->setHwComposer(getFactory().createHWComposer(getBE().mHwcServiceName));

mCompositionEngine->getHwComposer().setConfiguration(this, getBE().mComposerSequenceId);

// Process any initial hotplug and resulting display changes.

processDisplayHotplugEventsLocked();

const auto display = getDefaultDisplayDeviceLocked();

LOG_ALWAYS_FATAL_IF(!display, "Missing internal display after registering composer callback.");

LOG_ALWAYS_FATAL_IF(!getHwComposer().isConnected(*display->getId()),

"Internal display is disconnected.");

................................................................;

// initialize our drawing state

mDrawingState = mCurrentState;

// set initial conditions (e.g. unblank default device)

initializeDisplays();

char primeShaderCache[PROPERTY_VALUE_MAX];

property_get("service.sf.prime_shader_cache", primeShaderCache, "1");

if (atoi(primeShaderCache)) {

getRenderEngine().primeCache();

}

// Inform native graphics APIs whether the present timestamp is supported:

const bool presentFenceReliable =

!getHwComposer().hasCapability(hal::Capability::PRESENT_FENCE_IS_NOT_RELIABLE);

mStartPropertySetThread = getFactory().createStartPropertySetThread(presentFenceReliable);

if (mStartPropertySetThread->Start() != NO_ERROR) {

ALOGE("Run StartPropertySetThread failed!");

}

ALOGV("Done initializing");

}

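SurfaceFlinger::run

An infinite loop, ha. This should be the loop this article is looking for. The thing to focus on is which Message mEventQueue is waiting for, and where that Message comes from.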

void SurfaceFlinger::run() {

while (true) {

mEventQueue->waitMessage();

}

}

Creation of std::unique_ptr<MessageQueue> mEventQueue

SurfaceFlinger::SurfaceFlinger(Factory& factory, SkipInitializationTag)

: mFactory(factory),

mInterceptor(mFactory.createSurfaceInterceptor(this)),

mTimeStats(std::make_shared<impl::TimeStats>()),

mFrameTracer(std::make_unique<FrameTracer>()),

mEventQueue(mFactory.createMessageQueue()),

mCompositionEngine(mFactory.createCompositionEngine()),

mInternalDisplayDensity(getDensityFromProperty("ro.sf.lcd_density", true)),

mEmulatedDisplayDensity(getDensityFromProperty("qemu.sf.lcd_density", false)) {}

std::unique_ptr<MessageQueue> DefaultFactory::createMessageQueue() {

return std::make_unique<android::impl::MessageQueue>();

}

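Definition of MessageQueue

Notice that the MessageQueue class also defines a nested Handler class. We do not yet know what the member variables and functions of these classes do, so let's start from waitMessage() and find out.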

class MessageQueue final : public android::MessageQueue {

class Handler : public MessageHandler {

enum { eventMaskInvalidate = 0x1, eventMaskRefresh = 0x2, eventMaskTransaction = 0x4 };

MessageQueue& mQueue;

int32_t mEventMask;

std::atomic<nsecs_t> mExpectedVSyncTime;

public:

explicit Handler(MessageQueue& queue) : mQueue(queue), mEventMask(0) {}

virtual void handleMessage(const Message& message);

void dispatchRefresh();

void dispatchInvalidate(nsecs_t expectedVSyncTimestamp);

};

friend class Handler;

sp<SurfaceFlinger> mFlinger;

sp<Looper> mLooper;

sp<EventThreadConnection> mEvents;

gui::BitTube mEventTube;

sp<Handler> mHandler;

static int cb_eventReceiver(int fd, int events, void* data);

int eventReceiver(int fd, int events);

public:

~MessageQueue() override = default;

void init(const sp<SurfaceFlinger>& flinger) override;

void setEventConnection(const sp<EventThreadConnection>& connection) override;

void waitMessage() override;

void postMessage(sp<MessageHandler>&&) override;

// sends INVALIDATE message at next VSYNC

void invalidate() override;

// sends REFRESH message at next VSYNC

void refresh() override;

};

MessageQueue

Source analysis of waitMessage

void MessageQueue::waitMessage() {

do {

IPCThreadState::self()->flushCommands();

int32_t ret = mLooper->pollOnce(-1);

switch (ret) {

case Looper::POLL_WAKE:

case Looper::POLL_CALLBACK:

continue;

case Looper::POLL_ERROR:

ALOGE("Looper::POLL_ERROR");

continue;

case Looper::POLL_TIMEOUT:

// timeout (should not happen)

continue;

default:

// should not happen

ALOGE("Looper::pollOnce() returned unknown status %d", ret);

continue;

}

} while (true);

}

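So the things to focus on are IPCThreadState and mLooper.

IPCThreadState belongs to Binder. Judging from the code, flushCommands() pushes any commands still pending in the outgoing buffer (mOut) to the Binder driver before the thread blocks in pollOnce. Let's set it aside for now and come back to it later.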

void IPCThreadState::flushCommands()

{

if (mProcess->mDriverFD < 0)

return;

talkWithDriver(false);

if (mOut.dataSize() > 0) {

talkWithDriver(false);

}

if (mOut.dataSize() > 0) {

ALOGW("mOut.dataSize() > 0 after flushCommands()");

}

}

Now let's look at the implementation of mLooper.

class Looper : public RefBase {

protected:

virtual ~Looper();

public:

Looper(bool allowNonCallbacks);

bool getAllowNonCallbacks() const;

int pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData);

inline int pollOnce(int timeoutMillis) {

return pollOnce(timeoutMillis, nullptr, nullptr, nullptr);

}

int pollAll(int timeoutMillis, int* outFd, int* outEvents, void** outData);

inline int pollAll(int timeoutMillis) {

return pollAll(timeoutMillis, nullptr, nullptr, nullptr);

}

void wake();

int addFd(int fd, int ident, int events, Looper_callbackFunc callback, void* data);

int addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data);

int removeFd(int fd);

void sendMessage(const sp<MessageHandler>& handler, const Message& message);

void sendMessageDelayed(nsecs_t uptimeDelay, const sp<MessageHandler>& handler,

const Message& message);

void sendMessageAtTime(nsecs_t uptime, const sp<MessageHandler>& handler,

const Message& message);

void removeMessages(const sp<MessageHandler>& handler);

void removeMessages(const sp<MessageHandler>& handler, int what);

bool isPolling() const;

static sp<Looper> prepare(int opts);

static void setForThread(const sp<Looper>& looper);

static sp<Looper> getForThread();

private:

struct Request {

int fd;

int ident;

int events;

int seq;

sp<LooperCallback> callback;

void* data;

void initEventItem(struct epoll_event* eventItem) const;

};

struct Response {

int events;

Request request;

};

struct MessageEnvelope {

MessageEnvelope() : uptime(0) { }

MessageEnvelope(nsecs_t u, const sp<MessageHandler> h,

const Message& m) : uptime(u), handler(h), message(m) {

}

nsecs_t uptime;

sp<MessageHandler> handler;

Message message;

};

const bool mAllowNonCallbacks; // immutable

android::base::unique_fd mWakeEventFd; // immutable

Mutex mLock;

Vector<MessageEnvelope> mMessageEnvelopes; // guarded by mLock

bool mSendingMessage; // guarded by mLock

// Whether we are currently waiting for work. Not protected by a lock,

// any use of it is racy anyway.

volatile bool mPolling;

android::base::unique_fd mEpollFd; // guarded by mLock but only modified on the looper thread

bool mEpollRebuildRequired; // guarded by mLock

// Locked list of file descriptor monitoring requests.

KeyedVector<int, Request> mRequests; // guarded by mLock

int mNextRequestSeq;

// This state is only used privately by pollOnce and does not require a lock since

// it runs on a single thread.

Vector<Response> mResponses;

size_t mResponseIndex;

nsecs_t mNextMessageUptime; // set to LLONG_MAX when none

int pollInner(int timeoutMillis);

int removeFd(int fd, int seq);

void awoken();

void pushResponse(int events, const Request& request);

void rebuildEpollLocked();

void scheduleEpollRebuildLocked();

static void initTLSKey();

static void threadDestructor(void *st);

static void initEpollEvent(struct epoll_event* eventItem);

};

First, what does pollOnce(-1) actually do?

int pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData);

inline int pollOnce(int timeoutMillis) {

return pollOnce(timeoutMillis, nullptr, nullptr, nullptr);

}

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {

int result = 0;

for (;;) {

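// outFd, outEvents and outData are all null here, so this while loop needs little attention.

// If mResponses holds an entry with ident >= 0, its ident is returned (mResponseIndex advances as the list is scanned); otherwise we fall through to pollInner below.

// The key question is where the items in mResponses get pushed.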

while (mResponseIndex < mResponses.size()) {

const Response& response = mResponses.itemAt(mResponseIndex++);

int ident = response.request.ident;

if (ident >= 0) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

if (outFd != nullptr) *outFd = fd;

if (outEvents != nullptr) *outEvents = events;

if (outData != nullptr) *outData = data;

return ident;

}

}

if (result != 0) {

if (outFd != nullptr) *outFd = 0;

if (outEvents != nullptr) *outEvents = 0;

if (outData != nullptr) *outData = nullptr;

return result;

}

// This is the implementation to focus on

result = pollInner(timeoutMillis);

}

}

What does pollInner do?

int Looper::pollInner(int timeoutMillis) {

// Work out the timeoutMillis to pass to epoll_wait

if (timeoutMillis != 0 && mNextMessageUptime != LLONG_MAX) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

int messageTimeoutMillis = toMillisecondTimeoutDelay(now, mNextMessageUptime);

if (messageTimeoutMillis >= 0

&& (timeoutMillis < 0 || messageTimeoutMillis < timeoutMillis)) {

timeoutMillis = messageTimeoutMillis;

}

}

// Poll.

int result = POLL_WAKE;

mResponses.clear(); // Clear mResponses. Whoa, so this is where mResponses gets pushed and cleared

mResponseIndex = 0;

mPolling = true;

struct epoll_event eventItems[EPOLL_MAX_EVENTS];

// epoll_wait blocks until an event arrives; which file descriptors epoll monitors is examined later

int eventCount = epoll_wait(mEpollFd.get(), eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

mPolling = false;

mLock.lock();

...........................;

for (int i = 0; i < eventCount; i++) {

int fd = eventItems[i].data.fd;

uint32_t epollEvents = eventItems[i].events;

if (fd == mWakeEventFd.get()) {

............................;

} else {

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex >= 0) {

int events = 0;

if (epollEvents & EPOLLIN) events |= EVENT_INPUT;

if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;

if (epollEvents & EPOLLERR) events |= EVENT_ERROR;

if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;

// Push the event reported by epoll into mResponses

pushResponse(events, mRequests.valueAt(requestIndex));

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on fd %d that is "

"no longer registered.", epollEvents, fd);

}

}

}

Done:

mNextMessageUptime = LLONG_MAX;

// Process the messages in mMessageEnvelopes; where these messages come from is still unknown at this point

while (mMessageEnvelopes.size() != 0) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

const MessageEnvelope& messageEnvelope = mMessageEnvelopes.itemAt(0);

if (messageEnvelope.uptime <= now) {

{ // obtain handler

sp<MessageHandler> handler = messageEnvelope.handler;

Message message = messageEnvelope.message;

mMessageEnvelopes.removeAt(0);

mSendingMessage = true;

mLock.unlock();

handler->handleMessage(message); // the function that deserves close analysis

} // release handler

mLock.lock();

mSendingMessage = false;

result = POLL_CALLBACK;

} else {

// The last message left at the head of the queue determines the next wakeup time.

mNextMessageUptime = messageEnvelope.uptime;

break;

}

}

mLock.unlock();

// Handle the events reported by epoll by invoking the callback registered for each one; when those callbacks were registered is still unknown at this point

for (size_t i = 0; i < mResponses.size(); i++) {

Response& response = mResponses.editItemAt(i);

if (response.request.ident == POLL_CALLBACK) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

int callbackResult = response.request.callback->handleEvent(fd, events, data);

if (callbackResult == 0) {

removeFd(fd, response.request.seq);

}

response.request.callback.clear();

result = POLL_CALLBACK;

}

}

return result;

}

From the code above, the main logic of pollInner is:

- Wait for events with epoll_wait, wrap each event in a Response and push it into mResponses.

- Process the messages in mMessageEnvelopes that are due:

sp<MessageHandler> handler = messageEnvelope.handler;

Message message = messageEnvelope.message;

handler->handleMessage(message);

- Process every response in mResponses that has a callback:

response.request.callback->handleEvent(fd, events, data)
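The three steps above can be sketched as a tiny Linux event loop. This is only an illustration under stated assumptions, not the libutils implementation: `MiniLooper`, `post`, and the task queue are hypothetical names, the sketch is single-threaded (no mLock), messages carry no timestamps, and fd callbacks are never unregistered.

```cpp
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

#include <cstdint>
#include <deque>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// MiniLooper is a hypothetical, single-threaded stand-in for Looper.
class MiniLooper {
public:
    using FdCallback = std::function<void(int fd)>;
    using Task = std::function<void()>;

    MiniLooper()
          : mEpollFd(epoll_create1(EPOLL_CLOEXEC)),
            mWakeFd(eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC)) {
        addToEpoll(mWakeFd);
    }
    ~MiniLooper() {
        close(mWakeFd);
        close(mEpollFd);
    }

    // Plays the role of addFd with a callback.
    void addFd(int fd, FdCallback callback) {
        mCallbacks[fd] = std::move(callback);
        addToEpoll(fd);
    }

    // Plays the role of sendMessage: queue a task, then wake epoll_wait.
    void post(Task task) {
        mTasks.push_back(std::move(task));
        uint64_t one = 1;
        ssize_t ignored = write(mWakeFd, &one, sizeof(one));
        (void)ignored;
    }

    // One pollInner-like pass; returns how many handlers ran.
    int pollOnce(int timeoutMillis) {
        epoll_event events[16];
        int count = epoll_wait(mEpollFd, events, 16, timeoutMillis);

        // Step 1: turn ready fds into pending responses.
        std::vector<int> responses;
        for (int i = 0; i < count; i++) {
            int fd = events[i].data.fd;
            if (fd == mWakeFd) {
                uint64_t counter;  // drain the wake counter
                ssize_t ignored = read(mWakeFd, &counter, sizeof(counter));
                (void)ignored;
            } else if (mCallbacks.count(fd) != 0) {
                responses.push_back(fd);
            }
        }

        // Step 2: run the queued tasks (the "message envelopes").
        int handled = 0;
        while (!mTasks.empty()) {
            Task task = std::move(mTasks.front());
            mTasks.pop_front();
            task();
            handled++;
        }

        // Step 3: run the callbacks of the fds that became ready.
        for (int fd : responses) {
            mCallbacks[fd](fd);
            handled++;
        }
        return handled;
    }

private:
    void addToEpoll(int fd) {
        epoll_event item{};
        item.events = EPOLLIN;
        item.data.fd = fd;
        epoll_ctl(mEpollFd, EPOLL_CTL_ADD, fd, &item);
    }

    int mEpollFd;
    int mWakeFd;
    std::deque<Task> mTasks;
    std::map<int, FdCallback> mCallbacks;
};
```

If a task is posted and a registered pipe becomes readable before `pollOnce` is called, a single pass runs the task first and the fd callback second, the same order in which pollInner handles messages and responses.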

That raises new questions:

- Where do the messages in mMessageEnvelopes come from?

- When are the monitored fds added to epoll?

void MessageQueue::setEventConnection(const sp<EventThreadConnection>& connection) {

if (mEventTube.getFd() >= 0) {

mLooper->removeFd(mEventTube.getFd());

}

mEvents = connection;

mEvents->stealReceiveChannel(&mEventTube);

// Add mEventTube's fd to epoll; MessageQueue::cb_eventReceiver is the callback run when epoll reports an event on it

mLooper->addFd(mEventTube.getFd(), 0, Looper::EVENT_INPUT, MessageQueue::cb_eventReceiver,

this);

}

// Answer found: this is when the monitored fd gets added to epoll

int Looper::addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data) {

....................;

{ // acquire lock

AutoMutex _l(mLock);

Request request;

request.fd = fd;

request.ident = ident;

request.events = events;

request.seq = mNextRequestSeq++;

request.callback = callback;

request.data = data;

struct epoll_event eventItem;

request.initEventItem(&eventItem);

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex < 0) {

int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, fd, &eventItem);

mRequests.add(fd, request);

} else {

........................;

}

} // release lock

return 1;

}

int MessageQueue::cb_eventReceiver(int fd, int events, void* data) {

MessageQueue* queue = reinterpret_cast<MessageQueue*>(data);

// Forward to MessageQueue's eventReceiver member function

return queue->eventReceiver(fd, events);

}

int MessageQueue::eventReceiver(int /*fd*/, int /*events*/) {

ssize_t n;

DisplayEventReceiver::Event buffer[8];

// Once epoll reports activity on mEventTube, read the events from it and handle them

while ((n = DisplayEventReceiver::getEvents(&mEventTube, buffer, 8)) > 0) {

for (int i = 0; i < n; i++) {

if (buffer[i].header.type == DisplayEventReceiver::DISPLAY_EVENT_VSYNC) {

mHandler->dispatchInvalidate(buffer[i].vsync.expectedVSyncTimestamp);

break;

}

}

}

return 1;

}
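The draining pattern in eventReceiver can be tried out in isolation. The sketch below is an assumption-laden stand-in: a non-blocking pipe replaces mEventTube, and `EventSketch`, `kVsync`, `kHotplug`, and `drainEvents` are hypothetical names rather than DisplayEventReceiver APIs. It keeps the two details worth noticing in the original: events are read in batches of up to 8, and the `break` means only the first VSYNC in each batch is dispatched.

```cpp
#include <fcntl.h>
#include <unistd.h>

#include <cstddef>
#include <cstdint>

// Hypothetical fixed-size event record, standing in for DisplayEventReceiver::Event.
struct EventSketch {
    uint32_t type;
    int64_t expectedVsyncTime;
};

constexpr uint32_t kVsync = 1;    // hypothetical type tags
constexpr uint32_t kHotplug = 2;

// Reads from a non-blocking fd in batches of up to 8 events until it is empty.
// For each batch, only the first VSYNC is "dispatched" (its timestamp is stored
// in *lastVsyncTime). Returns the number of dispatches.
int drainEvents(int fd, int64_t* lastVsyncTime) {
    EventSketch buffer[8];
    int dispatched = 0;
    ssize_t bytes;
    while ((bytes = read(fd, buffer, sizeof(buffer))) > 0) {
        size_t n = static_cast<size_t>(bytes) / sizeof(EventSketch);
        for (size_t i = 0; i < n; i++) {
            if (buffer[i].type == kVsync) {
                *lastVsyncTime = buffer[i].expectedVsyncTime;
                dispatched++;
                break;  // ignore the remaining events of this batch
            }
        }
    }
    return dispatched;
}
```

Writing a hotplug event followed by two VSYNC events in one go and then calling `drainEvents` dispatches once, with the timestamp of the first VSYNC.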

void MessageQueue::Handler::dispatchInvalidate(nsecs_t expectedVSyncTimestamp) {

if ((android_atomic_or(eventMaskInvalidate, &mEventMask) & eventMaskInvalidate) == 0) {

mExpectedVSyncTime = expectedVSyncTimestamp;

// After a VSYNC event is read from mEventTube, first post a MessageQueue::INVALIDATE message to the Looper

mQueue.mLooper->sendMessage(this, Message(MessageQueue::INVALIDATE));

}

}
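The `android_atomic_or` check in dispatchInvalidate coalesces requests: a message is posted only when the invalidate bit was not already set. A minimal sketch of the same idea with `std::atomic` follows. `InvalidateCoalescer`, `request`, and `handled` are hypothetical names, and the assumption that the handler clears the bit when it processes the message is mine, since handleMessage is not shown above.

```cpp
#include <atomic>
#include <cstdint>

// Hypothetical helper showing the "set a bit, post only on the 0 -> 1 edge" idea.
class InvalidateCoalescer {
public:
    static constexpr int32_t kInvalidate = 0x1;

    // Returns true when the caller should post an INVALIDATE message,
    // i.e. when the bit was clear before this call. fetch_or returns the
    // previous value, like android_atomic_or does.
    bool request() {
        return (mEventMask.fetch_or(kInvalidate) & kInvalidate) == 0;
    }

    // Assumed handler side: clear the bit so the next request posts again.
    void handled() { mEventMask.fetch_and(~kInvalidate); }

private:
    std::atomic<int32_t> mEventMask{0};
};
```

Two back-to-back calls to `request()` yield true and then false, so a burst of VSYNC events produces a single pending message until `handled()` runs.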

void Looper::sendMessage(const sp<MessageHandler>& handler, const Message& message) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

sendMessageAtTime(now, handler, message);

}

void Looper::sendMessageDelayed(nsecs_t uptimeDelay, const sp<MessageHandler>& handler,

const Message& message) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

sendMessageAtTime(now + uptimeDelay, handler, message);

}

void Looper::sendMessageAtTime(nsecs_t uptime, const sp<MessageHandler>& handler,

const Message& message) {

size_t i = 0;

{ // acquire lock

AutoMutex _l(mLock);

size_t messageCount = mMessageEnvelopes.size();

while (i < messageCount && uptime >= mMessageEnvelopes.itemAt(i).uptime) {

i += 1;

}

MessageEnvelope messageEnvelope(uptime, handler, message);

// Answer found: this is where the messages in mMessageEnvelopes come from

mMessageEnvelopes.insertAt(messageEnvelope, i, 1);

if (mSendingMessage) {

return;

}

} // release lock

if (i == 0) {

wake();

}

}
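The insertion logic of sendMessageAtTime keeps the queue sorted by delivery time, keeps FIFO order among messages with the same time, and only wakes the poll when the new message lands at the head. Here is a small sketch of that rule on a plain `std::vector`. `EnvelopeSketch` and `enqueueAtTime` are hypothetical names, locking is left out, and the mSendingMessage early return of the original is omitted.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for MessageEnvelope.
struct EnvelopeSketch {
    int64_t uptime;    // delivery time
    std::string what;  // payload, for illustration only
};

// Inserts the message so that the queue stays sorted by uptime; messages with
// equal uptime keep their arrival order. Returns true when the message became
// the new head, which is the case where the looper would need a wake().
bool enqueueAtTime(std::vector<EnvelopeSketch>& queue, int64_t uptime,
                   const std::string& what) {
    size_t i = 0;
    while (i < queue.size() && uptime >= queue[i].uptime) {
        i += 1;
    }
    queue.insert(queue.begin() + static_cast<std::ptrdiff_t>(i),
                 EnvelopeSketch{uptime, what});
    return i == 0;
}
```

Only a new head changes the earliest wakeup time, which is why the original calls wake() just for `i == 0`.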

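With those questions answered, two new ones come up:

- When is MessageQueue::setEventConnection(…) called, and by whom?

- What on earth is mEventTube?

The next article continues the deep dive, and will then draw a diagram…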