Nearest neighbor

Simply put, each pixel is replicated along the x direction and along the y direction.
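A minimal NumPy sketch of this idea on a toy 2×2 array (the array and variable names are made up for illustration). Replicating rows and then columns gives the same result as having output index `i` read source index `i // scale`.

```python
import numpy as np

a = np.array([[1, 2],
              [3, 4]])
scale = 2

# Copy every row `scale` times (y direction), then every column `scale` times (x direction)
up = a.repeat(scale, axis=0).repeat(scale, axis=1)
print(up)
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]

# Same thing via indexing: output index i reads source index i // scale
rows = np.arange(a.shape[0] * scale) // scale   # [0 0 1 1]
cols = np.arange(a.shape[1] * scale) // scale   # [0 0 1 1]
up_idx = a[np.ix_(rows, cols)]
```

The index form is what `nearest1` in the listing does; the `repeat` form corresponds to `nearest2`.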

```python
#!/usr/bin/env python
# _*_ coding:utf-8 _*_
import numpy as np
import torch
import cv2
from torch import nn


def numpy2tensor(x: np.ndarray) -> torch.Tensor:
    """
    (H,W) -> (1, 1, H, W)
    (H,W,C) -> (1, C, H, W)
    """
    if x.ndim == 2:
        x = x[:, :, None]
    else:
        x = cv2.cvtColor(x, cv2.COLOR_BGR2RGB)
    return torch.from_numpy(x.transpose((2, 0, 1))).contiguous().unsqueeze(0)


def tensor2numpy(x: torch.Tensor) -> np.ndarray:
    """
    (B,C,H,W) -> (H, W, C)
    (B,1,H,W) -> (H, W)
    """
    x = x.clone().detach()
    x = x.to(torch.device('cpu'))
    x = x.squeeze(0)
    # x = x.mul_(255).add_(0.5).clamp_(0, 255)
    x = x.permute(1, 2, 0).type(torch.uint8).numpy()
    if x.shape[-1] == 3:
        return cv2.cvtColor(x, cv2.COLOR_RGB2BGR)
    return np.squeeze(x, axis=2)


def nearest1(x: np.ndarray, upscale: int) -> np.ndarray:
    """Fancy indexing: output index i reads source index i // upscale."""
    if 2 == x.ndim:
        x = x[:, :, None]
    x = x.transpose((2, 0, 1))
    c, h, w = x.shape
    h_index = np.arange(h * upscale) // upscale
    w_index = np.arange(w * upscale) // upscale
    x = x[:, :, w_index]  # replicate along x (columns)
    x = x[:, h_index, :]  # replicate along y (rows)
    x = x.transpose((1, 2, 0))
    if 1 == c:
        return np.squeeze(x, -1)
    return x


def nearest2(x: np.ndarray, upscale: int) -> np.ndarray:
    """np.repeat on inserted singleton axes, then reshape."""
    if 2 == x.ndim:
        x = x[:, :, None]
    x = x.transpose((2, 0, 1))
    c, h, w = x.shape
    x = x[:, :, None, :, None].repeat(upscale, axis=-1).repeat(upscale, axis=2).reshape(c, h * upscale, w * upscale)
    x = x.transpose((1, 2, 0))
    if 1 == c:
        return np.squeeze(x, -1)
    return x


def nearest3(x: torch.Tensor, upscale: int) -> torch.Tensor:
    """expand() on inserted singleton dims, then reshape; x is (B, C, H, W)."""
    return x[:, :, :, None, :, None].expand(-1, -1, -1, upscale, -1, upscale).reshape(
        x.size(0), x.size(1), x.size(2) * upscale, x.size(3) * upscale)


if __name__ == '__main__':
    torch.use_deterministic_algorithms(True)
    # torch.backends.cudnn.enabled = False
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True

    scale = 2
    img = cv2.imread('lena.png')
    h, w, _ = img.shape
    new_h = h * scale
    new_w = w * scale
    tensor = numpy2tensor(img)
    m = nn.Upsample(scale_factor=scale, mode='nearest')

    result = [
        nearest1(img, scale),
        nearest2(img, scale),
        cv2.resize(img, (new_w, new_h), interpolation=cv2.INTER_NEAREST),
        tensor2numpy(nearest3(tensor, scale)),
        tensor2numpy(m(tensor)),
        tensor2numpy(m(tensor.to(torch.device('cuda:0')))),  # needs a CUDA device
    ]

    # Compare each result with the previous one
    for i in range(1, len(result)):
        print(np.allclose(result[i], result[i - 1]))
```

Bilinear interpolation

1D linear interpolation

Given two points $\left(x_1,y_1\right)$ and $\left(x_2,y_2\right)$ and a value $x$, what is the corresponding $y$ on the line through them?

$$
\begin{aligned}
\frac{y - y_1}{x - x_1} &= \frac{y_2 - y_1}{x_2 - x_1}\\
y &= y_1 + \frac{y_2 - y_1}{x_2 - x_1}\left(x - x_1\right)\\
y &= \frac{x_2-x}{x_2 - x_1}y_1 + \frac{x-x_1}{x_2 - x_1}y_2
\end{aligned}
$$

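In other words, $y$ is a weighted sum of the two endpoint values, where the weight of each endpoint is proportional to the distance from $x$ to the other endpoint.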
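As a quick sanity check of the weighted-sum form, a small Python sketch (`lerp` is a hypothetical helper name here, not taken from any library):

```python
def lerp(x1, y1, x2, y2, x):
    """Linear interpolation through (x1, y1) and (x2, y2), written as a weighted sum."""
    w1 = (x2 - x) / (x2 - x1)  # weight of y1: distance from x to the other endpoint x2
    w2 = (x - x1) / (x2 - x1)  # weight of y2: distance from x to the other endpoint x1
    return w1 * y1 + w2 * y2


print(lerp(0, 10, 4, 30, 1))  # 15.0 (= 0.75 * 10 + 0.25 * 30)
```

At $x = x_1$ the weights are $(1, 0)$ and at $x = x_2$ they are $(0, 1)$, so the line passes through both points.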

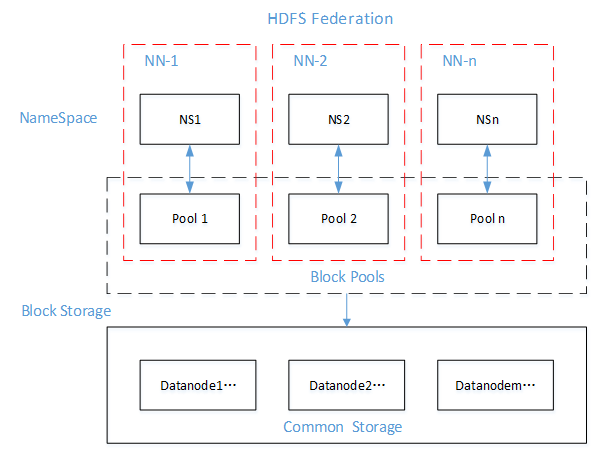

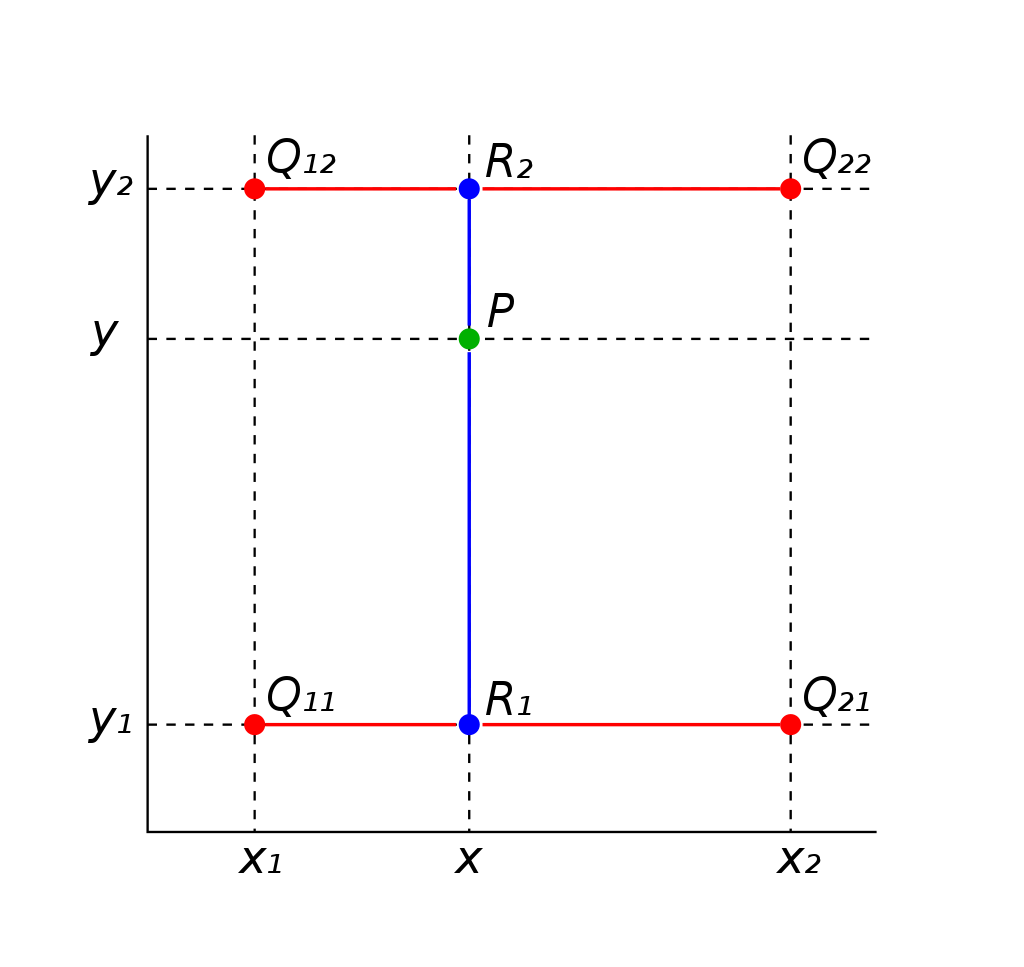

The 2D case is similar: first interpolate along the $x$ direction, then along the $y$ direction.

$$
\begin{aligned}
f\left(x,y_1\right) &= \frac{x_2-x}{x_2 - x_1}f\left(Q_{11}\right) + \frac{x-x_1}{x_2 - x_1}f\left(Q_{21}\right)\\
f\left(x,y_2\right) &= \frac{x_2-x}{x_2 - x_1}f\left(Q_{12}\right) + \frac{x-x_1}{x_2 - x_1}f\left(Q_{22}\right)
\end{aligned}
$$

where $f\left(Q_{ij}\right) = f\left(x_i,y_j\right)$. Interpolating these two values along $y$ then gives

$$
\begin{aligned}
f\left(x,y\right) &= \frac{y_2 - y}{y_2 - y_1}f\left(x,y_1\right) + \frac{y-y_1}{y_2 - y_1}f\left(x,y_2\right)\\
&= \frac{y_2 - y}{y_2 - y_1}\left(\frac{x_2-x}{x_2 - x_1}f\left(Q_{11}\right) + \frac{x-x_1}{x_2 - x_1}f\left(Q_{21}\right)\right) + \frac{y-y_1}{y_2 - y_1}\left(\frac{x_2-x}{x_2 - x_1}f\left(Q_{12}\right) + \frac{x-x_1}{x_2 - x_1}f\left(Q_{22}\right)\right)\\
&= \frac{1}{\left(y_2 - y_1\right)\left(x_2 - x_1\right)}\left(f\left(Q_{11}\right)\left(x_2 - x\right)\left(y_2 - y\right) + f\left(Q_{21}\right)\left(x-x_1\right)\left(y_2-y\right) + f\left(Q_{12}\right)\left(x_2-x\right)\left(y-y_1\right) + f\left(Q_{22}\right)\left(x-x_1\right)\left(y-y_1\right)\right)\\
&= \frac{1}{\left(y_2 - y_1\right)\left(x_2 - x_1\right)}\begin{pmatrix} x_2-x\\ x-x_1 \end{pmatrix}^T\begin{pmatrix} f\left(Q_{11}\right)&f\left(Q_{12}\right)\\ f\left(Q_{21}\right)&f\left(Q_{22}\right) \end{pmatrix}\begin{pmatrix} y_2-y\\ y-y_1 \end{pmatrix}
\end{aligned}
$$
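The expanded four-term form and the matrix form above can be checked numerically with a short NumPy sketch. `bilinear_point` is a hypothetical helper; it assumes `q[i, j]` stores $f(Q_{i+1,j+1})$, so rows follow $x$ and columns follow $y$, as in the matrix above.

```python
import numpy as np


def bilinear_point(q, x1, x2, y1, y2, x, y):
    """Hypothetical helper: evaluate f(x, y) from the four corner values.

    q[i, j] holds f(Q_{i+1, j+1}) = f(x_{i+1}, y_{j+1}).
    Returns (expanded four-term form, matrix form).
    """
    denom = (y2 - y1) * (x2 - x1)
    expanded = (q[0, 0] * (x2 - x) * (y2 - y)      # f(Q11)
                + q[1, 0] * (x - x1) * (y2 - y)    # f(Q21)
                + q[0, 1] * (x2 - x) * (y - y1)    # f(Q12)
                + q[1, 1] * (x - x1) * (y - y1)) / denom  # f(Q22)
    ax = np.array([x2 - x, x - x1])  # left vector: x weights
    by = np.array([y2 - y, y - y1])  # right vector: y weights
    matrix = ax @ q @ by / denom
    return expanded, matrix


q = np.array([[10.0, 20.0],
              [30.0, 40.0]])
print(bilinear_point(q, 0, 1, 0, 1, 0.25, 0.5))  # both forms give 20.0
```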

It is easy to verify that interpolating along $y$ first and then along $x$ gives the same result.
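A small sketch of that check, assuming the cell has been normalized so that `tx` $=(x-x_1)/(x_2-x_1)$ and `ty` $=(y-y_1)/(y_2-y_1)$; the helper names are hypothetical.

```python
import random


def lerp(a, b, t):
    """Linear interpolation between a (t = 0) and b (t = 1)."""
    return (1 - t) * a + t * b


def x_then_y(q, tx, ty):
    """q[i][j] = f(Q_{i+1, j+1}); interpolate along x first, then y."""
    f_y1 = lerp(q[0][0], q[1][0], tx)  # f(x, y1) from Q11, Q21
    f_y2 = lerp(q[0][1], q[1][1], tx)  # f(x, y2) from Q12, Q22
    return lerp(f_y1, f_y2, ty)


def y_then_x(q, tx, ty):
    """Same corners, but interpolate along y first, then x."""
    f_x1 = lerp(q[0][0], q[0][1], ty)  # f(x1, y) from Q11, Q12
    f_x2 = lerp(q[1][0], q[1][1], ty)  # f(x2, y) from Q21, Q22
    return lerp(f_x1, f_x2, tx)


q = [[10.0, 20.0], [30.0, 40.0]]
print(x_then_y(q, 0.25, 0.5), y_then_x(q, 0.25, 0.5))  # 20.0 20.0

# Random check: both orders agree up to floating-point rounding
for _ in range(1000):
    qr = [[random.random() for _ in range(2)] for _ in range(2)]
    tx, ty = random.random(), random.random()
    assert abs(x_then_y(qr, tx, ty) - y_then_x(qr, tx, ty)) < 1e-12
```

Both orders expand to the same four products of weights and corner values, which is why they agree.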

pytorch align_corners=False

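Suppose a $\left(3,3\right)$ image is upscaled to $\left(9,9\right)$.

The center of the original image is pixel $\left(1,1\right)$, and the center of the upscaled image is pixel $\left(4,4\right)$.

But then $\frac{4}{1}\neq \frac{3}{1}$: the ratio between the two centers is 4, not the scale factor 3, so simply dividing the output coordinate by the scale ($4/3 \approx 1.33$) does not land on the original center.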

In practice, the source coordinate is computed as

$$
src = \left(dst + 0.5\right)\frac{1}{scale}-0.5 = \left(dst + 0.5\right)\frac{src_{size}}{dst_{size}}-0.5
$$

That is, the index is shifted by 0.5 to the pixel center before scaling and shifted back afterwards. For the example above, $\left(4 + 0.5\right)/3 - 0.5 = 1$, so the centers line up.
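A NumPy sketch comparing the naive mapping $src = dst / scale$ with the half-pixel mapping for the $3 \to 9$ example (function names are hypothetical). Note that the half-pixel mapping gives negative coordinates at the left edge, e.g. $dst = 0 \mapsto -1/3$, which is why $src$ has to be clipped.

```python
import numpy as np


def src_naive(src_size, dst_size):
    """Naive mapping: src = dst / scale = dst * src_size / dst_size."""
    dst = np.arange(dst_size)
    return dst * src_size / dst_size


def src_half_pixel(src_size, dst_size):
    """Half-pixel mapping: src = (dst + 0.5) * src_size / dst_size - 0.5."""
    dst = np.arange(dst_size)
    return (dst + 0.5) * src_size / dst_size - 0.5


# 3 -> 9 example: output center index 4
print(src_naive(3, 9)[4])       # about 1.333, misses the source center 1
print(src_half_pixel(3, 9)[4])  # 1.0, hits the source center
print(src_half_pixel(3, 9)[0])  # about -0.333, negative near the edge
```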

A few other details:

1. After $src$ is computed, it must be clipped to $\left[0,size-1\right]$ (for example, $dst = 0$ yields a negative $src$).
2. $x_2 - x_1 = y_2 - y_1 = 1$, so the denominator $\left(x_2 - x_1\right)\left(y_2 - y_1\right)$ in the formula equals 1.
3. $x_1,y_1$ must be clipped to $\left[0,size-2\right]$ so that $x_2,y_2$ do not go out of bounds.
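The three details combined for a single axis, as a 1D sketch. The helper names are hypothetical, and the code assumes `src_size >= 2` so that `size - 2` is a valid index.

```python
import numpy as np


def linear_indices(src_size, dst_size):
    """Hypothetical helper for one axis (half-pixel mapping).

    Returns (x1, x2, t) such that out[i] = (1 - t[i]) * a[x1[i]] + t[i] * a[x2[i]].
    Assumes src_size >= 2.
    """
    src = (np.arange(dst_size) + 0.5) * src_size / dst_size - 0.5
    src = np.clip(src, 0, src_size - 1)                        # detail 1
    x1 = np.clip(np.floor(src), 0, src_size - 2).astype(int)  # detail 3
    x2 = x1 + 1                                                # detail 2: x2 - x1 == 1
    t = src - x1                                               # = (x - x1) / (x2 - x1)
    return x1, x2, t


def resize_1d(a, dst_size):
    """Linearly resample a 1D sequence to dst_size samples."""
    a = np.asarray(a, dtype=float)
    x1, x2, t = linear_indices(len(a), dst_size)
    return (1 - t) * a[x1] + t * a[x2]


print(resize_1d([0, 10, 20], 6))  # values: 0, 2.5, 7.5, 12.5, 17.5, 20
```

At the last output pixel, $src$ is clipped to 2 and $x_1$ to 1, so $x_2 = 2$ stays in range and the weight $t = 1$ puts everything on the last source value.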

```python
#!/usr/bin/env python
# _*_ coding:utf-8 _*_
import numpy as np
import torch
import cv2
from torch import nn


def numpy2tensor(x: np.ndarray) -> torch.FloatTensor:
    """
    (H,W) -> (1, 1, H, W)
    (H,W,C) -> (1, C, H, W)
    """
    if x.ndim == 2:
        x = x[..., None]
    else:
        x = cv2.cvtColor(x, cv2.COLOR_BGR2RGB)
    return torch.from_numpy(x.transpose((2, 0, 1))).contiguous().unsqueeze(0).float()


def tensor2numpy(x: torch.FloatTensor) -> np.ndarray:
    """
    (B,C,H,W) -> (H, W, C)
    (B,1,H,W) -> (H, W)
    """
    x = x.clone().detach()
    x = x.to(torch.device('cpu'))
    x = x.squeeze(0)
    x = x.clamp_(0, 255)
    # x = x.permute(1, 2, 0).type(torch.uint8).numpy()
    x = x.permute(1, 2, 0).numpy()
    if x.shape[-1] == 3:
        return cv2.cvtColor(x, cv2.COLOR_RGB2BGR)
    return np.squeeze(x, axis=2)


def bilinear1(img: np.ndarray, upscale: int, align_corners=False) -> np.ndarray:
    """NumPy, explicit loop over output pixels; x indexes rows, y indexes columns."""
    if 2 == img.ndim:
        img = img[..., None]
    h, w, c = img.shape
    new_h, new_w = h * upscale, w * upscale
    result = np.zeros((new_h, new_w, c), dtype=float)
    for i in range(new_h):
        for j in range(new_w):
            if not align_corners:
                # half-pixel mapping, clipped to [0, size-1]
                x = np.clip((i + 0.5) * h / new_h - 0.5, 0, h - 1)
                y = np.clip((j + 0.5) * w / new_w - 0.5, 0, w - 1)
                # keep x1, y1 in [0, size-2] so that x2, y2 stay in range
                x1 = min(int(np.floor(x)), h - 2)
                y1 = min(int(np.floor(y)), w - 2)
                x2 = x1 + 1
                y2 = y1 + 1
            else:
                # corner-aligned mapping
                x = np.clip(i * (h - 1) / (new_h - 1), 0, h - 1)
                y = np.clip(j * (w - 1) / (new_w - 1), 0, w - 1)
                x1 = int(np.floor(x))
                y1 = int(np.floor(y))
                x2 = int(np.ceil(x))
                y2 = int(np.ceil(y))
            q11 = img[x1, y1]
            q12 = img[x1, y2]
            q21 = img[x2, y1]
            q22 = img[x2, y2]
            # u, v: weights of the x1 row / y1 column (neighbours are 1 apart, so no denominator)
            u = x2 - x
            v = y2 - y
            _1_u = 1 - u
            _1_v = 1 - v
            result[i, j] = (q11 * u * v + q21 * _1_u * v + q12 * u * _1_v + q22 * _1_u * _1_v)
    if 1 == c:
        return result.squeeze(-1)
    return result


def bilinear2(img: np.ndarray, upscale: int, align_corners=False) -> np.ndarray:
    """Vectorized NumPy version of bilinear1."""
    if 2 == img.ndim:
        img = img[..., None]
    h, w, c = img.shape
    new_h, new_w = h * upscale, w * upscale
    if not align_corners:
        x = np.clip((np.arange(new_h) + 0.5) * h / new_h - 0.5, 0, h - 1)
        y = np.clip((np.arange(new_w) + 0.5) * w / new_w - 0.5, 0, w - 1)
        x = np.repeat(x[:, None], new_w, axis=1)
        y = np.repeat(y[None, :], new_h, axis=0)
        x1 = np.clip(np.floor(x), 0, h - 2).astype(int)
        y1 = np.clip(np.floor(y), 0, w - 2).astype(int)
        x2 = x1 + 1
        y2 = y1 + 1
    else:
        x = np.clip(np.arange(new_h) * (h - 1) / (new_h - 1), 0, h - 1)
        y = np.clip(np.arange(new_w) * (w - 1) / (new_w - 1), 0, w - 1)
        x = np.repeat(x[:, None], new_w, axis=1)
        y = np.repeat(y[None, :], new_h, axis=0)
        x1 = np.floor(x).astype(int)
        y1 = np.floor(y).astype(int)
        x2 = np.ceil(x).astype(int)
        y2 = np.ceil(y).astype(int)
    # gather the four neighbours, each of shape (new_h, new_w, c)
    q11 = img[x1, y1]
    q12 = img[x1, y2]
    q21 = img[x2, y1]
    q22 = img[x2, y2]
    u = (x2 - x)[..., None]
    v = (y2 - y)[..., None]
    _1_u = 1 - u
    _1_v = 1 - v
    w11 = u * v
    w12 = u * _1_v
    w21 = _1_u * v
    w22 = _1_u * _1_v
    result = q11 * w11 + q12 * w12 + q21 * w21 + q22 * w22
    if 1 == c:
        return result.squeeze(-1)
    return result


def bilinear3(img: torch.FloatTensor, upscale: int, align_corners=False) -> torch.FloatTensor:
    """PyTorch, explicit loop over output pixels; img is (B, C, H, W)."""
    B, c, h, w = img.shape
    new_h, new_w = h * upscale, w * upscale
    result = torch.zeros(B, c, new_h, new_w, dtype=torch.float)
    for i in range(new_h):
        for j in range(new_w):
            if not align_corners:
                x = np.clip((i + 0.5) * h / new_h - 0.5, 0, h - 1)
                y = np.clip((j + 0.5) * w / new_w - 0.5, 0, w - 1)
                x1 = min(int(np.floor(x)), h - 2)
                y1 = min(int(np.floor(y)), w - 2)
                x2 = x1 + 1
                y2 = y1 + 1
            else:
                x = np.clip(i * (h - 1) / (new_h - 1), 0, h - 1)
                y = np.clip(j * (w - 1) / (new_w - 1), 0, w - 1)
                x1 = int(np.floor(x))
                y1 = int(np.floor(y))
                x2 = int(np.ceil(x))
                y2 = int(np.ceil(y))
            q11 = img[..., x1, y1]
            q12 = img[..., x1, y2]
            q21 = img[..., x2, y1]
            q22 = img[..., x2, y2]
            u = x2 - x
            v = y2 - y
            _1_u = 1 - u
            _1_v = 1 - v
            result[..., i, j] = (q11 * u * v + q21 * _1_u * v + q12 * u * _1_v + q22 * _1_u * _1_v)
    return result


def bilinear4(img: torch.FloatTensor, upscale: int, align_corners=False) -> torch.FloatTensor:
    """Vectorized PyTorch version; coordinates are computed in float64."""
    B, c, h, w = img.shape
    new_h, new_w = h * upscale, w * upscale
    if not align_corners:
        x = torch.clip((torch.arange(new_h, dtype=torch.double) + 0.5) * h / new_h - 0.5, 0, h - 1)
        y = torch.clip((torch.arange(new_w, dtype=torch.double) + 0.5) * w / new_w - 0.5, 0, w - 1)
        x = x[:, None].expand(-1, new_w)
        y = y[None, :].expand(new_h, -1)
        x1 = torch.clip(torch.floor(x), 0, h - 2).long()
        y1 = torch.clip(torch.floor(y), 0, w - 2).long()
        x2 = x1 + 1
        y2 = y1 + 1
    else:
        x = torch.clip(torch.arange(new_h, dtype=torch.double) * (h - 1) / (new_h - 1), 0, h - 1)
        y = torch.clip(torch.arange(new_w, dtype=torch.double) * (w - 1) / (new_w - 1), 0, w - 1)
        x = x[:, None].expand(-1, new_w)
        y = y[None, :].expand(new_h, -1)
        x1 = torch.floor(x).long()
        y1 = torch.floor(y).long()
        x2 = torch.ceil(x).long()
        y2 = torch.ceil(y).long()
    # advanced indexing: each q has shape (B, C, new_h, new_w)
    q11 = img[..., x1, y1]
    q12 = img[..., x1, y2]
    q21 = img[..., x2, y1]
    q22 = img[..., x2, y2]
    u = (x2 - x)[None, None, ...]
    v = (y2 - y)[None, None, ...]
    _1_u = 1 - u
    _1_v = 1 - v
    w11 = u * v
    w12 = u * _1_v
    w21 = _1_u * v
    w22 = _1_u * _1_v
    result = q11 * w11 + q12 * w12 + q21 * w21 + q22 * w22
    return result.float()


if __name__ == '__main__':
    torch.use_deterministic_algorithms(True)
    # torch.backends.cudnn.enabled = False
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True

    x = cv2.imread('lena.png')
    h, w = x.shape[:2]
    scale = 2
    new_h = h * scale
    new_w = w * scale
    tensor = numpy2tensor(x)
    m1 = nn.Upsample(scale_factor=scale, mode='bilinear', align_corners=True)
    m2 = nn.Upsample(scale_factor=scale, mode='bilinear', align_corners=False)

    # align_corners_true = [
    #     tensor2numpy(m1(tensor)),
    #     bilinear1(x, scale, align_corners=True),
    #     bilinear2(x, scale, align_corners=True),
    #     tensor2numpy(bilinear3(tensor, scale, align_corners=True)),
    #     tensor2numpy(bilinear4(tensor, scale, align_corners=True)),
    #     tensor2numpy(m1(tensor.to(torch.device('cuda:0')))),
    #     cv2.resize(x, (new_w, new_h), interpolation=cv2.INTER_LINEAR)
    # ]
    # # 0==5 0!=1 0!=6 1==2==3==4 1!=6
    # for i in range(1, len(align_corners_true)):
    #     print(np.allclose(align_corners_true[i], align_corners_true[i - 1]))
    #
    # print()
    # print()

    align_corners_false = [
        tensor2numpy(m2(tensor)),
        bilinear1(x, scale, align_corners=False),
        bilinear2(x, scale, align_corners=False),
        tensor2numpy(bilinear3(tensor, scale, align_corners=False)),
        tensor2numpy(bilinear4(tensor, scale, align_corners=False)),
        tensor2numpy(m2(tensor.to(torch.device('cuda:0')))),  # needs a CUDA device
        cv2.resize(x, (new_w, new_h), interpolation=cv2.INTER_LINEAR)
    ]
    # Everything except OpenCV matches
    for i in range(1, len(align_corners_false)):
        print(np.allclose(align_corners_false[i], align_corners_false[i - 1]))
```

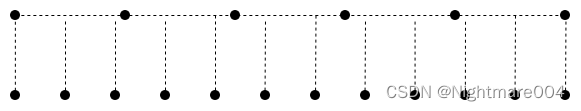

pytorch align_corners=True

In the figure above, the top row is the original image and the bottom row is the interpolated image.

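Here 6 pixels are interpolated to 12. This is equivalent to treating the original as having 5 intervals between adjacent pixel centers and the result as having 11, with the first and last pixels of both aligned.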

The coordinate mapping is

$$
src = dst\times\frac{src_{size} -1}{dst_{size} - 1}
$$
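A sketch of this corner-aligned mapping for the 6 → 12 example. The helper name is hypothetical, and the `dst_size == 1` guard is an added assumption, since the formula divides by $dst_{size}-1$.

```python
import numpy as np


def src_align_corners(src_size, dst_size):
    """Hypothetical helper: src = dst * (src_size - 1) / (dst_size - 1)."""
    if dst_size == 1:  # assumption: avoid division by zero for a single output pixel
        return np.zeros(1)
    dst = np.arange(dst_size)
    return dst * (src_size - 1) / (dst_size - 1)


coords = src_align_corners(6, 12)
print(coords[0], coords[-1])  # 0.0 5.0: first and last pixel centers line up
print(coords[1])              # step between output pixels is 5/11 of a source pixel
```

Unlike the half-pixel mapping, this never produces coordinates outside $[0, src_{size}-1]$, but the centers of the two images generally do not correspond.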

This code still has a problem: judging from the comment in the `__main__` block below, with `align_corners=True` the hand-written versions (indices 1 to 4) agree with each other but not with `nn.Upsample` (index 0). The imports, `numpy2tensor`, `tensor2numpy`, and `bilinear1` to `bilinear4` are identical to the previous listing; only the `__main__` block changes, now enabling the `align_corners=True` comparison:

```python
if __name__ == '__main__':
    torch.use_deterministic_algorithms(True)
    # torch.backends.cudnn.enabled = False
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True

    x = cv2.imread('lena.png')
    h, w = x.shape[:2]
    scale = 2
    new_h = h * scale
    new_w = w * scale
    tensor = numpy2tensor(x)
    m1 = nn.Upsample(scale_factor=scale, mode='bilinear', align_corners=True)
    m2 = nn.Upsample(scale_factor=scale, mode='bilinear', align_corners=False)

    align_corners_true = [
        tensor2numpy(m1(tensor)),                                       # 0
        bilinear1(x, scale, align_corners=True),                        # 1
        bilinear2(x, scale, align_corners=True),                        # 2
        tensor2numpy(bilinear3(tensor, scale, align_corners=True)),     # 3
        tensor2numpy(bilinear4(tensor, scale, align_corners=True)),     # 4
        tensor2numpy(m1(tensor.to(torch.device('cuda:0')))),            # 5, needs a CUDA device
        cv2.resize(x, (new_w, new_h), interpolation=cv2.INTER_LINEAR),  # 6
    ]
    # Observed: 0==5 0!=1 0!=6 1==2==3==4 1!=6
    for i in range(1, len(align_corners_true)):
        print(np.allclose(align_corners_true[i], align_corners_true[i - 1]))

    print()
    print()

    align_corners_false = [
        tensor2numpy(m2(tensor)),
        bilinear1(x, scale, align_corners=False),
        bilinear2(x, scale, align_corners=False),
        tensor2numpy(bilinear3(tensor, scale, align_corners=False)),
        tensor2numpy(bilinear4(tensor, scale, align_corners=False)),
        tensor2numpy(m2(tensor.to(torch.device('cuda:0')))),  # needs a CUDA device
        cv2.resize(x, (new_w, new_h), interpolation=cv2.INTER_LINEAR)
    ]
    # Everything except OpenCV matches
    for i in range(1, len(align_corners_false)):
        print(np.allclose(align_corners_false[i], align_corners_false[i - 1]))
```

References

https://zhuanlan.zhihu.com/p/141681355