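The earlier article on the ffplay source code showed that int stream_component_open(VideoState *is, int stream_index) is the entry point that creates and initializes the decoder. This article starts from that entry point and looks at how a decoder is structured inside FFmpeg.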

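As before, let's start with a few questions and read the source with them in mind. It makes the reading much more efficient: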

- How are the internal modules of an FFmpeg decoder related to each other?
- How is the right decoder (h264, vp9, etc.) matched?
- How does data flow through the decoder, and what drives it?

stream_component_open

```c
static int stream_component_open(VideoState *is, int stream_index)
{
    AVFormatContext *ic = is->ic;
    AVCodecContext *avctx;
    const AVCodec *codec;
    ......................;

    avctx = avcodec_alloc_context3(NULL);

    avcodec_parameters_to_context(avctx, ic->streams[stream_index]->codecpar);
    avctx->pkt_timebase = ic->streams[stream_index]->time_base;

    codec = avcodec_find_decoder(avctx->codec_id);
    avctx->codec_id = codec->id;
    .......................;

    if ((ret = avcodec_open2(avctx, codec, &opts)) < 0)
        goto fail;

    is->eof = 0;
    ic->streams[stream_index]->discard = AVDISCARD_DEFAULT;

    switch (avctx->codec_type) {
    case AVMEDIA_TYPE_AUDIO:
        if ((ret = decoder_init(&is->auddec, avctx, &is->audioq, is->continue_read_thread)) < 0)
            goto fail;
        if ((ret = decoder_start(&is->auddec, audio_thread, "audio_decoder", is)) < 0)
            goto out;
        ........................;
        break;
    case AVMEDIA_TYPE_VIDEO:
        if ((ret = decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread)) < 0)
            goto fail;
        if ((ret = decoder_start(&is->viddec, video_thread, "video_decoder", is)) < 0)
            goto out;
        is->queue_attachments_req = 1;
        break;
    case AVMEDIA_TYPE_SUBTITLE:
        if ((ret = decoder_init(&is->subdec, avctx, &is->subtitleq, is->continue_read_thread)) < 0)
            goto fail;
        if ((ret = decoder_start(&is->subdec, subtitle_thread, "subtitle_decoder", is)) < 0)
            goto out;
        break;
    default:
        break;
    }
    goto out;

    ..................;

    return ret;
}
```

The code above has been trimmed down to the key parts. Now let's go through each of them in detail:

avcodec_alloc_context3

The following line creates an AVCodecContext:

```c
AVCodecContext *avctx = avcodec_alloc_context3(NULL);
```

We will see later where this pointer ends up.

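```c
AVCodecContext *avcodec_alloc_context3(const AVCodec *codec)
{
    AVCodecContext *avctx = av_malloc(sizeof(AVCodecContext));

    if (!avctx)
        return NULL;

    // Fill in the defaults; here codec is NULL
    if (init_context_defaults(avctx, codec) < 0) {
        av_free(avctx);
        return NULL;
    }

    return avctx;
}
```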

```c
// Not analyzed in depth for now; the code is listed here for reference
static int init_context_defaults(AVCodecContext *s, const AVCodec *codec)
{
    const FFCodec *const codec2 = ffcodec(codec);
    int flags = 0;

    memset(s, 0, sizeof(AVCodecContext));

    s->av_class = &av_codec_context_class;
    s->codec_type = codec ? codec->type : AVMEDIA_TYPE_UNKNOWN;
    if (codec) {
        s->codec = codec;
        s->codec_id = codec->id;
    }

    if (s->codec_type == AVMEDIA_TYPE_AUDIO)
        flags = AV_OPT_FLAG_AUDIO_PARAM;
    else if (s->codec_type == AVMEDIA_TYPE_VIDEO)
        flags = AV_OPT_FLAG_VIDEO_PARAM;
    else if (s->codec_type == AVMEDIA_TYPE_SUBTITLE)
        flags = AV_OPT_FLAG_SUBTITLE_PARAM;
    av_opt_set_defaults2(s, flags, flags);

    av_channel_layout_uninit(&s->ch_layout);

    s->time_base           = (AVRational){0, 1};
    s->framerate           = (AVRational){0, 1};
    s->pkt_timebase        = (AVRational){0, 1};
    s->get_buffer2         = avcodec_default_get_buffer2;
    s->get_format          = avcodec_default_get_format;
    s->get_encode_buffer   = avcodec_default_get_encode_buffer;
    s->execute             = avcodec_default_execute;
    s->execute2            = avcodec_default_execute2;
    s->sample_aspect_ratio = (AVRational){0, 1};
    s->ch_layout.order     = AV_CHANNEL_ORDER_UNSPEC;
    s->pix_fmt             = AV_PIX_FMT_NONE;
    s->sw_pix_fmt          = AV_PIX_FMT_NONE;
    s->sample_fmt          = AV_SAMPLE_FMT_NONE;

    s->reordered_opaque    = AV_NOPTS_VALUE;
    if (codec && codec2->priv_data_size) {
        s->priv_data = av_mallocz(codec2->priv_data_size);
        if (!s->priv_data)
            return AVERROR(ENOMEM);
        if (codec->priv_class) {
            *(const AVClass **)s->priv_data = codec->priv_class;
            av_opt_set_defaults(s->priv_data);
        }
    }
    if (codec && codec2->defaults) {
        int ret;
        const FFCodecDefault *d = codec2->defaults;
        while (d->key) {
            ret = av_opt_set(s, d->key, d->value, 0);
            av_assert0(ret >= 0);
            d++;
        }
    }
    return 0;
}
```
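init_context_defaults() ends by walking codec2->defaults, which is a table of key/value strings terminated by an entry whose key is NULL. It applies each pair with av_opt_set(). The sketch below shows that sentinel-terminated table pattern in plain C. MiniCtx, MiniDefault and mini_opt_set() are hypothetical stand-ins, not FFmpeg API, and the toy setter only understands two integer keys. FFmpeg asserts when a default fails to apply, whereas this sketch returns an error.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a codec context with a couple of options. */
typedef struct MiniCtx {
    int thread_count;
    int refs;
} MiniCtx;

/* Same shape as FFCodecDefault: a key/value pair of strings. */
typedef struct MiniDefault {
    const char *key;
    const char *value;
} MiniDefault;

/* Hypothetical setter playing the role of av_opt_set():
 * 0 on success, -1 for an unknown key. */
static int mini_opt_set(MiniCtx *s, const char *key, const char *value)
{
    if (strcmp(key, "threads") == 0) {
        s->thread_count = atoi(value);
        return 0;
    }
    if (strcmp(key, "refs") == 0) {
        s->refs = atoi(value);
        return 0;
    }
    return -1;
}

/* Walk the table until the sentinel entry whose key is NULL. */
static int apply_defaults(MiniCtx *s, const MiniDefault *d)
{
    for (; d && d->key; d++) {
        int ret = mini_opt_set(s, d->key, d->value);
        if (ret < 0)
            return ret;   /* FFmpeg uses av_assert0() here instead */
    }
    return 0;
}

static const MiniDefault example_defaults[] = {
    { "threads", "4" },
    { "refs",    "1" },
    { NULL, NULL },       /* sentinel terminating the table */
};
```

A new default only needs a new row in the table, and the loop never needs to know the table's length.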

avcodec_parameters_to_context

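```c
avcodec_parameters_to_context(avctx, ic->streams[stream_index]->codecpar);
```

This function copies the stream information that the demuxer extracted (the stream's codecpar) into the corresponding fields of the AVCodecContext.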

```c
int avcodec_parameters_to_context(AVCodecContext *codec,
                                  const AVCodecParameters *par)
{
    int ret;

    codec->codec_type            = par->codec_type;
    codec->codec_id              = par->codec_id;
    codec->codec_tag             = par->codec_tag;
    codec->bit_rate              = par->bit_rate;
    codec->bits_per_coded_sample = par->bits_per_coded_sample;
    codec->bits_per_raw_sample   = par->bits_per_raw_sample;
    codec->profile               = par->profile;
    codec->level                 = par->level;

    switch (par->codec_type) {
    case AVMEDIA_TYPE_VIDEO:
        codec->pix_fmt                = par->format;
        codec->width                  = par->width;
        codec->height                 = par->height;
        codec->field_order            = par->field_order;
        codec->color_range            = par->color_range;
        codec->color_primaries        = par->color_primaries;
        codec->color_trc              = par->color_trc;
        codec->colorspace             = par->color_space;
        codec->chroma_sample_location = par->chroma_location;
        codec->sample_aspect_ratio    = par->sample_aspect_ratio;
        codec->has_b_frames           = par->video_delay;
        break;
    case AVMEDIA_TYPE_AUDIO:
        codec->sample_fmt       = par->format;
        ...................................;
        codec->sample_rate      = par->sample_rate;
        codec->block_align      = par->block_align;
        codec->frame_size       = par->frame_size;
        codec->delay            =
        codec->initial_padding  = par->initial_padding;
        codec->trailing_padding = par->trailing_padding;
        codec->seek_preroll     = par->seek_preroll;
        break;
    case AVMEDIA_TYPE_SUBTITLE:
        codec->width  = par->width;
        codec->height = par->height;
        break;
    }

    if (par->extradata) {
        av_freep(&codec->extradata);
        codec->extradata = av_mallocz(par->extradata_size + AV_INPUT_BUFFER_PADDING_SIZE);
        if (!codec->extradata)
            return AVERROR(ENOMEM);
        memcpy(codec->extradata, par->extradata, par->extradata_size);
        codec->extradata_size = par->extradata_size;
    }

    return 0;
}
```
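The extradata branch above allocates par->extradata_size + AV_INPUT_BUFFER_PADDING_SIZE zeroed bytes but copies only the payload. The tail of the buffer is therefore guaranteed to be zero, which lets bitstream readers safely read a little past the end. Here is a minimal sketch of that padded copy. It assumes a padding of 64 bytes, the value of AV_INPUT_BUFFER_PADDING_SIZE in recent FFmpeg releases (check the header of your version). set_extradata() and MINI_PADDING are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Assumption: mirrors AV_INPUT_BUFFER_PADDING_SIZE (64 in recent FFmpeg). */
#define MINI_PADDING 64

/* Replace *extradata with a padded copy of src, like the extradata branch of
 * avcodec_parameters_to_context(). Returns 0 on success, -1 on OOM. */
static int set_extradata(unsigned char **extradata, int *extradata_size,
                         const unsigned char *src, int size)
{
    free(*extradata);              /* like av_freep(&codec->extradata) */
    *extradata = NULL;
    *extradata_size = 0;

    /* calloc zeroes everything, including the padding (like av_mallocz) */
    unsigned char *buf = calloc(1, (size_t)size + MINI_PADDING);
    if (!buf)
        return -1;                 /* FFmpeg returns AVERROR(ENOMEM) */

    memcpy(buf, src, (size_t)size);
    *extradata = buf;
    *extradata_size = size;        /* the padding is not counted in the size */
    return 0;
}
```

The recorded size excludes the padding, exactly as extradata_size does, so consumers never treat the zero bytes as payload.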

avcodec_find_decoder

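```c
const AVCodec *codec = avcodec_find_decoder(avctx->codec_id);
```

This uses the codec_id obtained from the demuxer to find the matching codec, that is, the decoder. Each struct AVCodec is actually the public part of a struct FFCodec, because FFCodec embeds AVCodec as its first member p. FFCodec also provides the decode(…) callback that performs the actual decoding.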

```c
typedef struct AVCodec {
    const char *name;
    const char *long_name;
    enum AVMediaType type;
    enum AVCodecID id;
    ...................;
} AVCodec;

typedef struct FFCodec {
    AVCodec p;
    int (*decode)(struct AVCodecContext *avctx, void *outdata,
                  int *got_frame_ptr, struct AVPacket *avpkt);
    ..................;
} FFCodec;
```
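AVCodec p is the first member of FFCodec, so a pointer to the public AVCodec also points to the enclosing FFCodec. Converting one into the other is therefore just a cast, which is what FFmpeg's internal ffcodec() helper does. The sketch below reproduces the pattern with hypothetical PubCodec/PrivCodec types. It relies on the C rule that a pointer to a struct, suitably converted, points to its first member, and vice versa.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical public part, playing the role of AVCodec. */
typedef struct PubCodec {
    const char *name;
    int id;
} PubCodec;

/* Hypothetical private wrapper, playing the role of FFCodec.
 * The public struct MUST be the first member for the cast below to be valid. */
typedef struct PrivCodec {
    PubCodec p;
    int (*decode)(int in, int *out);
} PrivCodec;

/* Equivalent of ffcodec(): recover the wrapper from the public pointer. */
static const PrivCodec *priv_of(const PubCodec *codec)
{
    return (const PrivCodec *)codec;
}

static int double_it(int in, int *out)
{
    *out = 2 * in;
    return 0;
}

static const PrivCodec demo_codec = {
    .p.name = "demo",
    .p.id   = 42,
    .decode = double_it,
};

/* Library users only ever receive the public part. */
static const PubCodec *public_handle(void)
{
    return &demo_codec.p;
}
```

This split lets the public header expose only the AVCodec fields, while internal code reaches the callbacks through the cast.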

Next, let's look at how avcodec_find_decoder is implemented:

```c
const AVCodec *avcodec_find_decoder(enum AVCodecID id)
{
    return find_codec(id, av_codec_is_decoder);
}

static const AVCodec *find_codec(enum AVCodecID id, int (*x)(const AVCodec *))
{
    const AVCodec *p, *experimental = NULL;
    void *i = 0;

    id = remap_deprecated_codec_id(id);

    // Walk all registered codecs, keep only decoders, and match on codec_id
    while ((p = av_codec_iterate(&i))) {
        if (!x(p))
            continue;
        if (p->id == id) {
            if (p->capabilities & AV_CODEC_CAP_EXPERIMENTAL && !experimental) {
                experimental = p;
            } else
                return p;
        }
    }

    return experimental;
}

const AVCodec *av_codec_iterate(void **opaque)
{
    uintptr_t i = (uintptr_t)*opaque;
    // codec_list is a global array whose contents are determined by the
    // build configuration (configure), for example:
    // static const FFCodec * const codec_list[] = {
    //     &ff_h264_decoder,
    //     &ff_theora_decoder,
    //     &ff_vp3_decoder,
    //     &ff_vp8_decoder,
    //     &ff_aac_decoder,
    //     &ff_flac_decoder,
    //     &ff_mp3_decoder,
    //     &ff_vorbis_decoder,
    //     &ff_pcm_alaw_decoder,
    //     &ff_pcm_f32le_decoder,
    //     &ff_pcm_mulaw_decoder,
    //     &ff_pcm_s16be_decoder,
    //     &ff_pcm_s16le_decoder,
    //     &ff_pcm_s24be_decoder,
    //     &ff_pcm_s24le_decoder,
    //     &ff_pcm_s32le_decoder,
    //     &ff_pcm_u8_decoder,
    //     &ff_libopus_decoder,
    //     NULL };
    const FFCodec *c = codec_list[i];

    ff_thread_once(&av_codec_static_init, av_codec_init_static);

    if (c) {
        *opaque = (void*)(i + 1);
        return &c->p;
    }
    return NULL;
}
```
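Together, find_codec() and av_codec_iterate() implement a linear search over a NULL-terminated array. The search uses an opaque void * cursor and prefers codecs that are not experimental. Below is a self-contained sketch of the same logic. The MiniCodec type, the ID values and the list contents are made up for illustration, and the experimental flag stands in for AV_CODEC_CAP_EXPERIMENTAL.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { MINI_ID_H264 = 1, MINI_ID_VP9 = 2, MINI_ID_AAC = 3 };

typedef struct MiniCodec {
    const char *name;
    int id;
    int is_decoder;
    int experimental;   /* stands in for AV_CODEC_CAP_EXPERIMENTAL */
} MiniCodec;

static const MiniCodec h264_enc = { "h264_enc", MINI_ID_H264, 0, 0 };
static const MiniCodec h264_dec = { "h264",     MINI_ID_H264, 1, 0 };
static const MiniCodec vp9_exp  = { "vp9_exp",  MINI_ID_VP9,  1, 1 };
static const MiniCodec vp9_dec  = { "vp9",      MINI_ID_VP9,  1, 0 };
static const MiniCodec aac_exp  = { "aac_exp",  MINI_ID_AAC,  1, 1 };

/* NULL-terminated list, in the role of the generated codec_list[]. */
static const MiniCodec *const mini_codec_list[] = {
    &h264_enc, &h264_dec, &vp9_exp, &vp9_dec, &aac_exp, NULL
};

/* Same opaque-cursor trick as av_codec_iterate(): the index travels
 * through a void * so callers never see the list itself. */
static const MiniCodec *mini_iterate(void **opaque)
{
    uintptr_t i = (uintptr_t)*opaque;
    const MiniCodec *c = mini_codec_list[i];
    if (c)
        *opaque = (void *)(i + 1);
    return c;
}

static int is_decoder(const MiniCodec *c) { return c->is_decoder; }

/* Mirrors find_codec(): a non-experimental match wins immediately,
 * otherwise the first experimental match is returned at the end. */
static const MiniCodec *mini_find(int id, int (*pred)(const MiniCodec *))
{
    const MiniCodec *p, *experimental = NULL;
    void *i = NULL;

    while ((p = mini_iterate(&i))) {
        if (!pred(p) || p->id != id)
            continue;
        if (p->experimental && !experimental)
            experimental = p;
        else
            return p;
    }
    return experimental;
}
```

The sketch keeps a quirk of the original loop. The first experimental match is only remembered, and any later match (even a second experimental one) ends the search.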

Let's look at how the h264 decoder is defined:

```c
const FFCodec ff_h264_decoder = {
    .p.name                = "h264",
    .p.long_name           = NULL_IF_CONFIG_SMALL("H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),
    .p.type                = AVMEDIA_TYPE_VIDEO,
    .p.id                  = AV_CODEC_ID_H264,
    .priv_data_size        = sizeof(H264Context),
    .init                  = h264_decode_init,
    .close                 = h264_decode_end,
    .decode                = h264_decode_frame,
    ..............................;
    .flush                 = h264_decode_flush,
    .update_thread_context = ONLY_IF_THREADS_ENABLED(ff_h264_update_thread_context),
    .update_thread_context_for_user = ONLY_IF_THREADS_ENABLED(ff_h264_update_thread_context_for_user),
    .p.profiles            = NULL_IF_CONFIG_SMALL(ff_h264_profiles),
    .p.priv_class          = &h264_class,
};
```

ff_h264_decoder sets its codec id to AV_CODEC_ID_H264. The AVCodecID values for every codec are defined in ffmpeg\libavcodec\codec_id.h.
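ff_h264_decoder is essentially a table of function pointers filled in with designated initializers. Generic code only ever calls .init, .decode and .close, and it sizes the codec's private context from .priv_data_size. The sketch below shows that dispatch with a toy decoder. MiniDecoder, Ctx, CounterPriv and the helpers are hypothetical, and the "decoding" is only a placeholder.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct Ctx Ctx;

/* Hypothetical codec descriptor in the style of FFCodec. */
typedef struct MiniDecoder {
    const char *name;
    size_t priv_data_size;
    int  (*init)(Ctx *ctx);
    int  (*decode)(Ctx *ctx, int pkt, int *frame, int *got_frame);
    void (*close)(Ctx *ctx);
} MiniDecoder;

struct Ctx {
    const MiniDecoder *codec;
    void *priv_data;          /* codec-specific state, like H264Context */
};

/* Private state of the toy decoder: counts decoded packets. */
typedef struct CounterPriv {
    int decoded;
} CounterPriv;

static int counter_init(Ctx *ctx)
{
    ((CounterPriv *)ctx->priv_data)->decoded = 0;
    return 0;
}

static int counter_decode(Ctx *ctx, int pkt, int *frame, int *got_frame)
{
    CounterPriv *s = ctx->priv_data;
    s->decoded++;
    *frame = pkt + 1000;      /* placeholder for real decoding */
    *got_frame = 1;
    return 0;
}

static void counter_close(Ctx *ctx) { (void)ctx; }

static const MiniDecoder counter_decoder = {
    .name           = "counter",
    .priv_data_size = sizeof(CounterPriv),
    .init           = counter_init,
    .decode         = counter_decode,
    .close          = counter_close,
};

/* Generic open: allocate priv_data_size zeroed bytes, then call init. */
static int mini_open(Ctx *ctx, const MiniDecoder *codec)
{
    ctx->codec = codec;
    ctx->priv_data = calloc(1, codec->priv_data_size);
    if (!ctx->priv_data)
        return -1;
    return codec->init ? codec->init(ctx) : 0;
}

/* Generic close: call the codec's close, then free its private state. */
static void mini_close(Ctx *ctx)
{
    if (ctx->codec && ctx->codec->close)
        ctx->codec->close(ctx);
    free(ctx->priv_data);
    ctx->priv_data = NULL;
}
```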

avcodec_open2

```c
int attribute_align_arg avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options)
{
    int ret = 0;
    AVCodecInternal *avci;
    const FFCodec *codec2;
    ........................;

    codec2 = ffcodec(codec);
    ...................;

    // Store the matched AVCodec in AVCodecContext.codec
    avctx->codec = codec;
    ...........................;

    avci = av_mallocz(sizeof(*avci));
    // Store the newly allocated AVCodecInternal in AVCodecContext.internal;
    // all of the later decoding work depends on AVCodecInternal
    avctx->internal = avci;

    avci->buffer_frame   = av_frame_alloc();
    avci->buffer_pkt     = av_packet_alloc();
    avci->es.in_frame    = av_frame_alloc();
    avci->in_pkt         = av_packet_alloc();
    avci->last_pkt_props = av_packet_alloc();
    avci->pkt_props      = av_fifo_alloc2(1, sizeof(*avci->last_pkt_props), AV_FIFO_FLAG_AUTO_GROW);
    avci->skip_samples_multiplier = 1;

    // The block below should look familiar: avctx->priv_data is the context
    // of the concrete decoder. For example:
    // const FFCodec ff_h264_decoder = {
    //     .p.name         = "h264",
    //     .p.long_name    = NULL_IF_CONFIG_SMALL("H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),
    //     .p.type         = AVMEDIA_TYPE_VIDEO,
    //     .p.id           = AV_CODEC_ID_H264,
    //     .priv_data_size = sizeof(H264Context),
    //     .............................;
    // }
    // For ff_h264_decoder, what gets allocated here is a struct H264Context
    if (codec2->priv_data_size > 0) {
        if (!avctx->priv_data) {
            avctx->priv_data = av_mallocz(codec2->priv_data_size);
            if (!avctx->priv_data) {
                ret = AVERROR(ENOMEM);
                goto free_and_end;
            }
            if (codec->priv_class) {
                *(const AVClass **)avctx->priv_data = codec->priv_class;
                av_opt_set_defaults(avctx->priv_data);
            }
        }
        if (codec->priv_class && (ret = av_opt_set_dict(avctx->priv_data, options)) < 0)
            goto free_and_end;
    } else {
        avctx->priv_data = NULL;
    }
    .......................................;

    // Continue initializing AVCodecContext fields. An important step here is
    // creating and initializing a filter: the AVBSFContext *bsf in AVCodecInternal
    ff_decode_preinit(avctx);
    .........................................;
}
```
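Before calling av_opt_set_defaults(), avcodec_open2() writes codec->priv_class into the first bytes of priv_data. This works only because every codec's private context begins with a const AVClass * field (H264Context, for instance, starts with a const AVClass *class member). Generic option code can therefore find the class of any priv_data without knowing its type. The sketch below models the idea with a hypothetical MiniClass that lists int options by byte offset. It is a simplified stand-in for the AVOption machinery, not its real layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical option descriptor: an int field located by byte offset. */
typedef struct MiniOption {
    const char *name;
    size_t offset;
    int default_val;
} MiniOption;

/* Hypothetical class, playing the role of AVClass. */
typedef struct MiniClass {
    const char *class_name;
    const MiniOption *options;   /* terminated by an entry with name == NULL */
} MiniClass;

/* A codec private context: the class pointer MUST be the first field. */
typedef struct ToyPriv {
    const MiniClass *cls;
    int is_avc;
    int nal_length_size;
} ToyPriv;

static const MiniOption toy_options[] = {
    { "is_avc",          offsetof(ToyPriv, is_avc),          0 },
    { "nal_length_size", offsetof(ToyPriv, nal_length_size), 4 },
    { NULL, 0, 0 },
};

static const MiniClass toy_class = { "toy", toy_options };

/* Generic code: knows nothing about ToyPriv, only that the first field of
 * obj is a class pointer (the idea behind av_opt_set_defaults()). */
static void mini_set_defaults(void *obj)
{
    const MiniClass *cls = *(const MiniClass **)obj;
    for (const MiniOption *o = cls->options; o->name; o++)
        *(int *)((char *)obj + o->offset) = o->default_val;
}

/* The allocation step from avcodec_open2(): zeroed buffer, class pointer
 * stored in the first field, then defaults applied. */
static void *mini_alloc_priv(size_t size, const MiniClass *cls)
{
    void *priv = calloc(1, size);
    if (!priv)
        return NULL;
    *(const MiniClass **)priv = cls;
    mini_set_defaults(priv);
    return priv;
}
```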

decoder_init & decoder_start

```c
// Store the AVCodecContext allocated earlier in Decoder.avctx
static int decoder_init(Decoder *d, AVCodecContext *avctx, PacketQueue *queue, SDL_cond *empty_queue_cond) {
    memset(d, 0, sizeof(Decoder));
    d->pkt = av_packet_alloc();
    if (!d->pkt)
        return AVERROR(ENOMEM);
    d->avctx = avctx;
    d->queue = queue;
    d->empty_queue_cond = empty_queue_cond;
    d->start_pts = AV_NOPTS_VALUE;
    d->pkt_serial = -1;
    return 0;
}

// Create the thread that does the decoding, e.g. video_thread
static int decoder_start(Decoder *d, int (*fn)(void *), const char *thread_name, void* arg)
{
    packet_queue_start(d->queue);
    d->decoder_tid = SDL_CreateThread(fn, thread_name, arg);
    if (!d->decoder_tid) {
        av_log(NULL, AV_LOG_ERROR, "SDL_CreateThread(): %s\n", SDL_GetError());
        return AVERROR(ENOMEM);
    }
    return 0;
}
```
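Put simply, decoder_start() starts the packet queue and then spawns a thread that runs fn(arg). ffplay uses SDL_CreateThread(). The sketch below swaps in POSIX threads so it runs without SDL. MiniDecoderState, mini_video_thread() and the helpers are hypothetical. In ffplay, the matching wait (SDL_WaitThread) happens in decoder_abort().

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical, trimmed-down version of ffplay's Decoder. */
typedef struct MiniDecoderState {
    pthread_t tid;
    int frames_decoded;
} MiniDecoderState;

/* Thread entry in the style of video_thread(): receives an opaque arg. */
static void *mini_video_thread(void *arg)
{
    MiniDecoderState *d = arg;
    for (int i = 0; i < 3; i++)
        d->frames_decoded++;      /* stand-in for the real decode loop */
    return NULL;
}

/* Counterpart of decoder_start(): spawn the worker, 0 on success. */
static int mini_decoder_start(MiniDecoderState *d, void *(*fn)(void *))
{
    d->frames_decoded = 0;
    return pthread_create(&d->tid, NULL, fn, d) == 0 ? 0 : -1;
}

/* Counterpart of the wait done when the decoder is torn down. */
static void mini_decoder_join(MiniDecoderState *d)
{
    pthread_join(d->tid, NULL);
}
```

The signatures differ from ffplay's because SDL thread functions return int, while pthread entry points return void *.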

stream_component_open summary

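- Allocate an AVCodecContext.
- Copy the information from the demuxer stream's codecpar into the corresponding AVCodecContext fields.
- Iterate over all decoders, match the right one by the codec_id from the demuxer, and store it in AVCodecContext.codec.
- Allocate and initialize AVCodecContext.internal.
- Start a separate thread that performs the actual decoding.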

Decode flow analysis

Below, the decode flow is analyzed using the video_thread source, with the focus on how data moves.

```c
static int video_thread(void *arg)
{
    VideoState *is = arg;
    AVFrame *frame = av_frame_alloc();
    double pts;
    double duration;
    int ret;
    AVRational tb = is->video_st->time_base;
    AVRational frame_rate = av_guess_frame_rate(is->ic, is->video_st, NULL);
    ......................................;

    for (;;) {
        // Get a decoded frame; this is the function to focus on
        ret = get_video_frame(is, frame);
        if (ret < 0)
            goto the_end;
        if (!ret)
            continue;

        duration = (frame_rate.num && frame_rate.den ? av_q2d((AVRational){frame_rate.den, frame_rate.num}) : 0);
        pts = (frame->pts == AV_NOPTS_VALUE) ? NAN : frame->pts * av_q2d(tb);
        // Put the frame into a queue so the display module can show it
        ret = queue_picture(is, frame, pts, duration, frame->pkt_pos, is->viddec.pkt_serial);
        av_frame_unref(frame);
        .......................;
    }
    .......................;

    return 0;
}
```
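The two timing lines in video_thread do two conversions. They turn frame->pts from stream time_base ticks into seconds, and they take the frame duration as the inverse of the guessed frame rate. Here is a worked sketch of that arithmetic. With a 90 kHz time base, pts 180000 is 2.0 s, and a 30000/1001 frame rate gives 1001/30000 ≈ 0.0334 s per frame. Rational, q2d() and MINI_NOPTS are local stand-ins for AVRational, av_q2d() and AV_NOPTS_VALUE.

```c
#include <assert.h>
#include <math.h>
#include <stdint.h>

/* Same layout as AVRational, redefined so no FFmpeg headers are needed. */
typedef struct Rational { int num, den; } Rational;

/* Equivalent of av_q2d(). */
static double q2d(Rational a) { return a.num / (double)a.den; }

/* Stand-in for AV_NOPTS_VALUE, which FFmpeg defines as INT64_MIN. */
#define MINI_NOPTS INT64_MIN

/* pts in seconds, computed as in video_thread(). */
static double frame_pts_seconds(int64_t pts, Rational tb)
{
    return pts == MINI_NOPTS ? NAN : pts * q2d(tb);
}

/* Per-frame duration: inverse of the frame rate, or 0 if it is unknown. */
static double frame_duration(Rational frame_rate)
{
    return (frame_rate.num && frame_rate.den)
        ? q2d((Rational){ frame_rate.den, frame_rate.num })
        : 0;
}
```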

```c
static int get_video_frame(VideoState *is, AVFrame *frame)
{
    int got_picture;

    if ((got_picture = decoder_decode_frame(&is->viddec, frame, NULL)) < 0)
        return -1;
    ..................................;

    return got_picture;
}

static int decoder_decode_frame(Decoder *d, AVFrame *frame, AVSubtitle *sub) {
    int ret = AVERROR(EAGAIN);

    for (;;) {
        // serial and pkt_serial are related to seeking: a seek flushes the queue
        // and bumps its serial, so packets still carrying the old serial are
        // dropped below. Without a seek, the two stay equal.
        if (d->queue->serial == d->pkt_serial) {
            do {
                switch (d->avctx->codec_type) {
                case AVMEDIA_TYPE_VIDEO:
                    // First try to fetch a decoded frame. If none is ready,
                    // ret == AVERROR(EAGAIN) and this inner do-while loop ends
                    ret = avcodec_receive_frame(d->avctx, frame);
                    .............................;
                    break;
                case AVMEDIA_TYPE_AUDIO:
                    ....................;
                    break;
                }
                if (ret >= 0)
                    return 1;
            } while (ret != AVERROR(EAGAIN));
        }

        do {
            if (d->packet_pending) {
                d->packet_pending = 0;
            } else {
                int old_serial = d->pkt_serial;
                // Fetch a packet from the queue filled by the demuxer
                if (packet_queue_get(d->queue, d->pkt, 1, &d->pkt_serial) < 0)
                    return -1;
                ....................;
            }
            if (d->queue->serial == d->pkt_serial)
                break;
            av_packet_unref(d->pkt);
        } while (1);

        if (d->avctx->codec_type == AVMEDIA_TYPE_SUBTITLE) {
            .........................;
        } else {
            // Send the demuxed packet to the decoder
            if (avcodec_send_packet(d->avctx, d->pkt) == AVERROR(EAGAIN)) {
                av_log(d->avctx, AV_LOG_ERROR, "Receive_frame and send_packet both returned EAGAIN, which is an API violation.\n");
                d->packet_pending = 1;
            } else {
                av_packet_unref(d->pkt);
            }
        }
    }
}
```
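decoder_decode_frame() follows the standard send/receive loop. It drains frames until the decoder reports EAGAIN, then feeds it exactly one packet and tries again. The sketch below runs that loop against a hypothetical one-slot decoder, MiniCodecCtx. Its send fails with EAGAIN while a packet is pending, and its receive fails with EAGAIN while nothing is pending. It is a toy model of the contract, not FFmpeg's implementation, and MINI_EAGAIN only stands in for AVERROR(EAGAIN).

```c
#include <assert.h>

#define MINI_EAGAIN (-11)   /* stands in for AVERROR(EAGAIN) */

/* Hypothetical decoder holding at most one undecoded packet. */
typedef struct MiniCodecCtx {
    int has_pkt;   /* 1 while a sent packet has not been decoded yet */
    int pkt;
} MiniCodecCtx;

static int mini_send_packet(MiniCodecCtx *c, int pkt)
{
    if (c->has_pkt)
        return MINI_EAGAIN;      /* caller must receive a frame first */
    c->pkt = pkt;
    c->has_pkt = 1;
    return 0;
}

static int mini_receive_frame(MiniCodecCtx *c, int *frame)
{
    if (!c->has_pkt)
        return MINI_EAGAIN;      /* caller must send more input first */
    *frame = c->pkt * 10;        /* placeholder for real decoding */
    c->has_pkt = 0;
    return 0;
}

/* Same shape as ffplay's decoder_decode_frame(): returns 1 with a frame,
 * 0 when the input runs out, or a negative error. */
static int mini_decode_frame(MiniCodecCtx *c, const int *pkts, int n,
                             int *next, int *frame)
{
    for (;;) {
        /* 1. Drain: try to get a frame out first. */
        int ret = mini_receive_frame(c, frame);
        if (ret >= 0)
            return 1;
        if (ret != MINI_EAGAIN)
            return ret;

        /* 2. Refill: take the next packet (packet_queue_get() in ffplay). */
        if (*next >= n)
            return 0;            /* real code would flush and drain here */

        /* 3. Send it, advancing only once the decoder accepted it. Both
         * sides returning EAGAIN is an API violation; ffplay logs it and
         * keeps the packet pending instead of returning. */
        ret = mini_send_packet(c, pkts[*next]);
        if (ret == MINI_EAGAIN)
            return ret;
        (*next)++;
    }
}
```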

From the code above, the core decode steps are:

- packet_queue_get(…): fetch a demuxed packet to decode.
- avcodec_send_packet(…): feed that packet into the decoder.
- avcodec_receive_frame(…): retrieve the decoded data from the decoder.

packet_queue_get

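```c
Decoder *d;
packet_queue_get(d->queue, d->pkt, 1, &d->pkt_serial);
```

d->queue is actually the demuxer's output queue. Taking video as an example, this is where it gets assigned:

```c
decoder_init(&is->viddec, avctx, &is->videoq, is->continue_read_thread);
```

So d->queue is is->videoq.

packet_queue_get takes one AVPacket out of the PacketQueue. When block is set and the queue is empty, it waits for the demuxer to add one. We won't go deep into the details.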

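```c
static int packet_queue_get(PacketQueue *q, AVPacket *pkt, int block, int *serial)
{
    MyAVPacketList pkt1;
    int ret;

    SDL_LockMutex(q->mutex);

    for (;;) {
        if (q->abort_request) {
            ret = -1;
            break;
        }

        if (av_fifo_read(q->pkt_list, &pkt1, 1) >= 0) {
            q->nb_packets--;
            q->size -= pkt1.pkt->size + sizeof(pkt1);
            q->duration -= pkt1.pkt->duration;
            av_packet_move_ref(pkt, pkt1.pkt);
            if (serial)
                *serial = pkt1.serial;
            av_packet_free(&pkt1.pkt);
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        } else {
            // Queue is empty: wait until the demuxer puts a new packet
            SDL_CondWait(q->cond, q->mutex);
        }
    }
    SDL_UnlockMutex(q->mutex);
    return ret;
}
```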
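packet_queue_get() is the consumer side of a classic mutex/condition-variable queue. It returns 1 when a packet was taken, 0 when the queue is empty and block is 0, and -1 after an abort. The sketch below reproduces that contract with POSIX threads and a fixed-size ring buffer, whereas ffplay uses SDL mutexes and a growable AVFifo. It also models the serial that ffplay's packet_queue_flush() increments, which is how decoder_decode_frame() recognizes packets queued before a seek. All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>

#define MINI_QCAP 16

/* A "packet" is an int payload plus the serial it was queued under. */
typedef struct MiniPkt { int data; int serial; } MiniPkt;

typedef struct MiniQueue {
    MiniPkt items[MINI_QCAP];
    int head, count;
    int serial;            /* bumped on flush, like PacketQueue.serial */
    int abort_request;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} MiniQueue;

static void mq_init(MiniQueue *q)
{
    q->head = q->count = q->serial = q->abort_request = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side (the demuxer). Fails when full; ffplay's fifo grows instead. */
static int mq_put(MiniQueue *q, int data)
{
    pthread_mutex_lock(&q->mutex);
    if (q->count == MINI_QCAP) {
        pthread_mutex_unlock(&q->mutex);
        return -1;
    }
    q->items[(q->head + q->count) % MINI_QCAP] = (MiniPkt){ data, q->serial };
    q->count++;
    pthread_cond_signal(&q->cond);    /* wake a blocked mq_get() */
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Like packet_queue_flush(): drop everything and start a new serial. */
static void mq_flush(MiniQueue *q)
{
    pthread_mutex_lock(&q->mutex);
    q->head = q->count = 0;
    q->serial++;
    pthread_mutex_unlock(&q->mutex);
}

/* Same contract as packet_queue_get(): 1 = got one, 0 = empty, -1 = aborted. */
static int mq_get(MiniQueue *q, MiniPkt *out, int block)
{
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        if (q->abort_request) { ret = -1; break; }
        if (q->count > 0) {
            *out = q->items[q->head];
            q->head = (q->head + 1) % MINI_QCAP;
            q->count--;
            ret = 1;
            break;
        }
        if (!block) { ret = 0; break; }
        pthread_cond_wait(&q->cond, &q->mutex);   /* releases mutex while waiting */
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}
```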

avcodec_send_packet

```c
int attribute_align_arg avcodec_send_packet(AVCodecContext *avctx, const AVPacket *avpkt)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;
    ........................;

    // Clear buffer_pkt
    av_packet_unref(avci->buffer_pkt);
    if (avpkt && (avpkt->data || avpkt->side_data_elems)) {
        // Make buffer_pkt a new reference to avpkt: all properties are copied,
        // and the data is shared (copied only if avpkt is not reference-counted)
        ret = av_packet_ref(avci->buffer_pkt, avpkt);
        if (ret < 0)
            return ret;
    }

    // Hand the packet to the bsf; analyzed in more detail below
    ret = av_bsf_send_packet(avci->bsf, avci->buffer_pkt);
    if (ret < 0) {
        av_packet_unref(avci->buffer_pkt);
        return ret;
    }

    if (!avci->buffer_frame->buf[0]) {
        // Eagerly try to decode into buffer_frame;
        // analyzed later together with avcodec_receive_frame
        ret = decode_receive_frame_internal(avctx, avci->buffer_frame);
        if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
            return ret;
    }

    return 0;
}

int av_bsf_send_packet(AVBSFContext *ctx, AVPacket *pkt)
{
    FFBSFContext *const bsfi = ffbsfcontext(ctx);
    int ret;
    ..............................;

    // If pkt is not reference-counted, copy pkt->data into a newly allocated pkt->buf
    ret = av_packet_make_refcounted(pkt);
    if (ret < 0)
        return ret;

    // Move pkt into bsfi->buffer_pkt; pkt is left empty
    av_packet_move_ref(bsfi->buffer_pkt, pkt);

    return 0;
}
```
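Both av_packet_ref() and av_packet_move_ref() appear above, and the difference matters. The move leaves the source packet empty, so ownership of the data passes to the bitstream filter's buffer_pkt. In the full av_bsf_send_packet() (elided above), a new packet is refused with AVERROR(EAGAIN) while buffer_pkt still holds one. Here is a sketch of that one-slot mailbox with move semantics. MiniPacket, MiniBsf and the helpers are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MINI_EAGAIN (-11)   /* stands in for AVERROR(EAGAIN) */

/* Hypothetical packet that owns a heap buffer. */
typedef struct MiniPacket {
    unsigned char *data;
    int size;
} MiniPacket;

/* Like av_packet_move_ref(): dst (assumed empty) takes ownership,
 * src is reset to an empty packet. */
static void pkt_move_ref(MiniPacket *dst, MiniPacket *src)
{
    *dst = *src;
    src->data = NULL;
    src->size = 0;
}

static int pkt_is_empty(const MiniPacket *p) { return p->data == NULL; }

/* One-slot mailbox standing in for FFBSFContext.buffer_pkt. */
typedef struct MiniBsf { MiniPacket buffer_pkt; } MiniBsf;

static int bsf_send(MiniBsf *bsf, MiniPacket *pkt)
{
    if (!pkt_is_empty(&bsf->buffer_pkt))
        return MINI_EAGAIN;           /* previous packet not consumed yet */
    pkt_move_ref(&bsf->buffer_pkt, pkt);
    return 0;
}

/* Pass-through "filter": hand the buffered packet back out. */
static int bsf_receive(MiniBsf *bsf, MiniPacket *out)
{
    if (pkt_is_empty(&bsf->buffer_pkt))
        return MINI_EAGAIN;           /* nothing buffered */
    pkt_move_ref(out, &bsf->buffer_pkt);
    return 0;
}
```

No bytes are copied in either direction. Only the pointer changes hands, which is why the caller's packet reads as empty after a successful send.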

avcodec_receive_frame

```c
int attribute_align_arg avcodec_receive_frame(AVCodecContext *avctx, AVFrame *frame)
{
    AVCodecInternal *avci = avctx->internal;
    int ret, changed;

    if (avci->buffer_frame->buf[0]) {
        av_frame_move_ref(frame, avci->buffer_frame);
    } else {
        // Let's look at this function first
        ret = decode_receive_frame_internal(avctx, frame);
        if (ret < 0)
            return ret;
    }

    if (avctx->codec_type == AVMEDIA_TYPE_VIDEO) {
        ret = apply_cropping(avctx, frame);
        if (ret < 0) {
            av_frame_unref(frame);
            return ret;
        }
    }

    avctx->frame_number++;

    if (avctx->flags & AV_CODEC_FLAG_DROPCHANGED) {
        ..........................;
    }

    return 0;
}
```

```c
static int decode_receive_frame_internal(AVCodecContext *avctx, AVFrame *frame)
{
    AVCodecInternal *avci = avctx->internal;
    const FFCodec *const codec = ffcodec(avctx->codec);
    ........................;

    // Most codecs currently do not implement codec->receive_frame,
    // so we focus on decode_simple_receive_frame
    if (codec->receive_frame) {
        ret = codec->receive_frame(avctx, frame);
        if (ret != AVERROR(EAGAIN))
            av_packet_unref(avci->last_pkt_props);
    } else
        ret = decode_simple_receive_frame(avctx, frame);
    .............................;

    return ret;
}

static int decode_simple_receive_frame(AVCodecContext *avctx, AVFrame *frame)
{
    int ret;
    int64_t discarded_samples = 0;

    // Keep decoding until the frame actually holds data
    while (!frame->buf[0]) {
        ret = decode_simple_internal(avctx, frame, &discarded_samples);
        ........................;
    }

    return 0;
}

static inline int decode_simple_internal(AVCodecContext *avctx, AVFrame *frame, int64_t *discarded_samples)
{
    AVCodecInternal *avci = avctx->internal;
    AVPacket *const pkt = avci->in_pkt;
    const FFCodec *const codec = ffcodec(avctx->codec);
    int got_frame, actual_got_frame;
    int ret;

    if (!pkt->data && !avci->draining) {
        av_packet_unref(pkt);
        // As shown above, avcodec_send_packet puts the data into FFBSFContext's
        // buffer_pkt. The call below runs the bitstream filter and then takes
        // buffer_pkt out; its implementation is shown further down
        ret = ff_decode_get_packet(avctx, pkt);
        if (ret < 0 && ret != AVERROR_EOF)
            return ret;
    }
    ..........................................;

    got_frame = 0;

    if (HAVE_THREADS && avctx->active_thread_type & FF_THREAD_FRAME) {
        ret = ff_thread_decode_frame(avctx, frame, &got_frame, pkt);
    } else {
        // Call the concrete decoder to do the decoding
        ret = codec->decode(avctx, frame, &got_frame, pkt);
        ..................;
    }
    .............................;

    return ret < 0 ? ret : 0;
}
```

```c
int ff_decode_get_packet(AVCodecContext *avctx, AVPacket *pkt)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;

    ret = av_bsf_receive_packet(avci->bsf, pkt);
    ..................;
}

int av_bsf_receive_packet(AVBSFContext *ctx, AVPacket *pkt)
{
    // Calls the filter callback of the concrete FFBitStreamFilter
    return ff_bsf(ctx->filter)->filter(ctx, pkt);
}
```

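```c
// Take ff_h264_metadata_bsf as an example of how a filter callback is implemented.
// Which filter a decoder actually gets is decided by the bsfs field of its FFCodec;
// when a decoder declares none, FFmpeg falls back to the pass-through "null"
// filter, which simply hands out the buffered packet.
const FFBitStreamFilter ff_h264_metadata_bsf = {
    .p.name         = "h264_metadata",
    .p.codec_ids    = h264_metadata_codec_ids,
    .p.priv_class   = &h264_metadata_class,
    .priv_data_size = sizeof(H264MetadataContext),
    .init           = &h264_metadata_init,
    .close          = &ff_cbs_bsf_generic_close,
    .filter         = &ff_cbs_bsf_generic_filter,
};

int ff_cbs_bsf_generic_filter(AVBSFContext *bsf, AVPacket *pkt)
{
    CBSBSFContext *ctx = bsf->priv_data;
    CodedBitstreamFragment *frag = &ctx->fragment;
    int err;

    err = ff_bsf_get_packet_ref(bsf, pkt);
    if (err < 0)
        return err;
    ........................;

    return err;
}

int ff_bsf_get_packet_ref(AVBSFContext *ctx, AVPacket *pkt)
{
    FFBSFContext *const bsfi = ffbsfcontext(ctx);

    if (bsfi->eof)
        return AVERROR_EOF;

    // Nothing buffered yet: the caller gets AVERROR(EAGAIN)
    if (IS_EMPTY(bsfi->buffer_pkt))
        return AVERROR(EAGAIN);

    // Take buffer_pkt out: ownership moves to pkt and buffer_pkt is left empty
    av_packet_move_ref(pkt, bsfi->buffer_pkt);

    return 0;
}
```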
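Putting the receive side together gives the following chain. avcodec_send_packet() parks a packet in the bsf and eagerly tries to decode into buffer_frame. avcodec_receive_frame() returns buffer_frame if it holds a frame. Otherwise it loops in decode_simple_receive_frame(), pulling packets through the filter callback and calling codec->decode, until a frame comes out or the filter reports EAGAIN. The sketch below models that chain with plain ints. It assumes a pass-through filter (like the null bsf) and a toy decoder that needs two packets per frame to imitate decoder delay. Every name in it is hypothetical.

```c
#include <assert.h>

#define PULL_EAGAIN (-11)   /* stands in for AVERROR(EAGAIN) */

typedef struct PullCtx PullCtx;

struct PullCtx {
    int bsf_has_pkt, bsf_pkt;              /* like FFBSFContext.buffer_pkt */
    int (*filter)(PullCtx *c, int *pkt);   /* like FFBitStreamFilter.filter */
    int acc, acc_count;                    /* toy decoder state */
    int has_buffer_frame, buffer_frame;    /* like AVCodecInternal.buffer_frame */
};

/* Pass-through filter, in the role of ff_bsf_get_packet_ref(). */
static int null_filter(PullCtx *c, int *pkt)
{
    if (!c->bsf_has_pkt)
        return PULL_EAGAIN;
    *pkt = c->bsf_pkt;
    c->bsf_has_pkt = 0;
    return 0;
}

/* Toy codec->decode(): sums two packets into one frame. */
static void toy_decode(PullCtx *c, int pkt, int *frame, int *got_frame)
{
    c->acc += pkt;
    c->acc_count++;
    *got_frame = (c->acc_count == 2);
    if (*got_frame) {
        *frame = c->acc;
        c->acc = c->acc_count = 0;
    }
}

/* decode_simple_receive_frame(): pull packets until a frame appears
 * or the filter runs dry (EAGAIN goes back to the caller). */
static int pull_receive_internal(PullCtx *c, int *frame)
{
    int got = 0;
    while (!got) {
        int pkt;
        int ret = c->filter(c, &pkt);      /* ff_decode_get_packet() */
        if (ret < 0)
            return ret;
        toy_decode(c, pkt, frame, &got);
    }
    return 0;
}

/* avcodec_send_packet(): park the packet in the filter, then eagerly
 * decode into buffer_frame if that slot is free. */
static int pull_send_packet(PullCtx *c, int pkt)
{
    if (c->bsf_has_pkt)
        return PULL_EAGAIN;                /* av_bsf_send_packet() refuses */
    c->bsf_pkt = pkt;
    c->bsf_has_pkt = 1;
    if (!c->has_buffer_frame && pull_receive_internal(c, &c->buffer_frame) == 0)
        c->has_buffer_frame = 1;
    return 0;
}

/* avcodec_receive_frame(): hand out buffer_frame first, else decode now. */
static int pull_receive_frame(PullCtx *c, int *frame)
{
    if (c->has_buffer_frame) {
        *frame = c->buffer_frame;
        c->has_buffer_frame = 0;
        return 0;
    }
    return pull_receive_internal(c, frame);
}
```

Because of the decoder delay, a receive can legitimately return EAGAIN right after a successful send. That is exactly the case ffplay's loop handles by fetching another packet.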

Summary

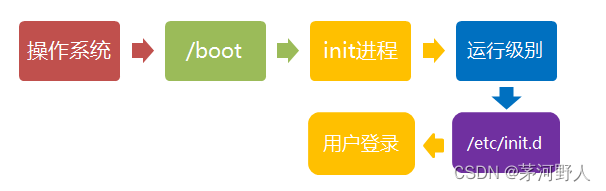

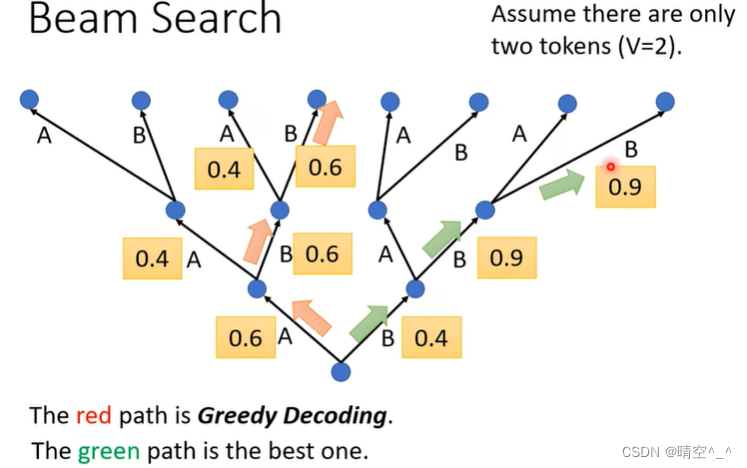

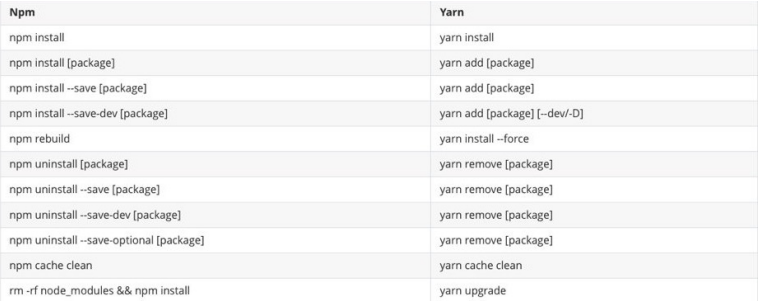

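- How are the internal modules of an FFmpeg decoder related to each other?

  See the diagrams above for the detailed relationships.

- How is the right decoder (h264, vp9, etc.) matched?

  The demuxer determines the codec of each stream, and the decoder is then matched by that codec's codec id.

- How does data flow through the decoder, and what drives it?

  avcodec_send_packet() stores the not-yet-decoded data in the filter (bsf) module. avcodec_receive_frame() then runs it through the decoder and returns the decoded frame. In ffplay, the decode thread drives this by alternating the two calls in decoder_decode_frame().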