CVPR-2018

Singh B, Davis L S. An analysis of scale invariance in object detection snip[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2018: 3578-3587.

https://github.com/bharatsingh430/snip?tab=readme-ov-file

Table of Contents

- 1、Background and Motivation

- 2、Related Work

- 3、Advantages / Contributions

- 4、Method

- 4.1、Image Classification at Multiple Scales

- 4.2 Data Variation or Correct Scale?

- 4.3 Object Detection on an Image Pyramid

- 5、Experiments

- 5.1、Datasets

- 5.2、Evaluation

- 6、Conclusion(own) / Future work / Reference

1、Background and Motivation

CNNs have surpassed human-level performance on image classification, but object detection still has a long way to go.

large scale variation across object instances, and especially, the challenge of detecting very small objects stands out as one of the factors behind the difference in performance.

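On COCO, about 50% of object instances have a scale (relative to the image) below roughly 10%.

Between the 10th and 90th percentile of instances sorted by size, the scale differs by up to about 20 times, so scale variation is very large.

To alleviate the problems arising from scale variation and small object instances, the following approaches have been proposed: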

- features from shallow(er) layers are combined with deeper layers for detecting small objects

- dilated/deformable convolution for detecting large objects

- independent predictions at layers of different resolutions are used to capture object instances of different scales

- context

- multi-scale training

- inference is performed on multiple scales, and the results are then merged with NMS
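The last item in the list, multi-scale inference followed by an NMS merge, can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the function names `iou_one_to_many` and `merge_multiscale`, the `[x1, y1, x2, y2, score]` layout, and the 0.5 IoU threshold are all assumptions made for the example.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def merge_multiscale(dets_per_scale, scales, iou_thresh=0.5):
    """Map detections from each resized image back to original coordinates,
    then run greedy NMS over the pooled set.

    dets_per_scale: list of (N_i, 5) arrays [x1, y1, x2, y2, score]
    scales: resize factor that was applied to the image for each entry
    """
    pooled = []
    for dets, s in zip(dets_per_scale, scales):
        dets = np.asarray(dets, dtype=float).copy()
        dets[:, :4] /= s  # undo the resize
        pooled.append(dets)
    dets = np.concatenate(pooled, axis=0)
    dets = dets[np.argsort(-dets[:, 4])]  # highest score first

    keep = []
    while len(dets) > 0:
        keep.append(dets[0])
        if len(dets) == 1:
            break
        ious = iou_one_to_many(dets[0, :4], dets[1:, :4])
        dets = dets[1:][ious <= iou_thresh]  # drop boxes that overlap the kept one
    return np.stack(keep)

# Hypothetical detections: the same object found at scale 1.0 and 2.0, plus one extra box.
dets_1x = np.array([[10, 10, 50, 50, 0.9]])
dets_2x = np.array([[20, 20, 100, 100, 0.8], [200, 200, 300, 300, 0.7]])
merged = merge_multiscale([dets_1x, dets_2x], scales=[1.0, 2.0])
```

Here the duplicate found at the second scale is suppressed, and the extra box survives in original-image coordinates.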

The authors propose Scale Normalization for Image Pyramids (SNIP): only back-propagate gradients for RoIs/anchors that have a resolution close to that of the pre-trained CNN.

2、Related Work

The related work is organized around the existing approaches to handling large scale variation.

3、Advantages / Contributions

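Proposes SNIP to address the scale problem in object detection and improve the detection of small objects. To reduce the domain shift caused by the difference in object scale between pre-training and detector training, gradients are back-propagated only for RoIs whose size matches the scale of the data the pre-trained model was trained on. Borrowing from multi-scale training, an image pyramid is used to handle objects of different sizes in the dataset.

Achieves SOTA on the COCO dataset.

Won Best Student Entry in the COCO 2017 challenge.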

4、Method

The authors first run a few small experiments to lay out their understanding of the scale-variation problem.

4.1、Image Classification at Multiple Scales

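(1)Naïve Multi-Scale Inference

At inference time, images are first down-sampled to 48x48, 64x64, 80x80, 96x96 and 128x128, then up-sampled to 224x224 and fed to the network (CNN-B).

This experiment simulates how a mismatch between training resolution and test resolution affects the model.

Testing at different resolutions: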

testing on resolutions on which the network was not trained is clearly sub-optimal
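The down-sample then up-sample protocol above can be sketched with NumPy alone. This is only an illustration: nearest-neighbour resizing is used here to avoid extra dependencies (the interpolation actually used in the paper is not stated in these notes), and `resize_nearest` and `simulate_low_res` are hypothetical helper names.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an (H, W, C) array."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return img[rows[:, None], cols[None, :]]

def simulate_low_res(img, low_res, net_input=224):
    """Down-sample to low_res x low_res, then up-sample back to the network input size."""
    small = resize_nearest(img, low_res, low_res)
    return resize_nearest(small, net_input, net_input)

# Hypothetical 224x224 RGB image in which every pixel value is unique.
img = np.arange(224 * 224 * 3).reshape(224, 224, 3)
test_inputs = {r: simulate_low_res(img, r) for r in (48, 64, 80, 96, 128)}
```

Each entry of `test_inputs` has the 224x224 shape the network expects but carries only as much detail as the low-resolution version, which is the train/test mismatch the experiment probes.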

(2)Resolution Specific Classifiers

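Networks trained on ImageNet take 224x224 inputs, and their first layer typically uses a stride of 2 followed by a max pooling layer with stride 2 to reduce computation.

When the input gets smaller, the architecture has to change accordingly, for example for 48x48 and 96x96 inputs.

For 48x48 images, the authors use a stride of 1 and 3x3 convolutions in the first layer and train CNN-S.

For a 96x96 input resolution, they use a kernel of size 5x5 and a stride of 2.

Compared with CNN-B, the improvement is clear when testing at the matching training resolution (it is tempting to pre-train classification networks with different architectures for low resolution images and use them for object detection for low resolution objects).

This experiment simulates the case where the training and test resolutions match.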
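To see why the first layer has to change for small inputs, it helps to work through the standard output-size formula for convolution and pooling, `floor((size + 2*pad - kernel) / stride) + 1`. The sketch below does so under assumptions that are not in the notes: a ResNet-style stem (7x7 conv with padding 3, then a 3x3 max pool with padding 1) for the 224x224 baseline, and padding 1 and 2 for the 3x3 and 5x5 first layers.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a conv or pooling layer (floor convention)."""
    return (size + 2 * pad - kernel) // stride + 1

# Assumed baseline stem: 7x7 conv, stride 2, then 3x3 max pool, stride 2.
baseline_224 = conv_out(conv_out(224, 7, 2, 3), 3, 2, 1)

# The same stem applied directly to a 48x48 input leaves very little resolution.
baseline_48 = conv_out(conv_out(48, 7, 2, 3), 3, 2, 1)

# First layers described above (padding values are assumptions).
cnn_s_48 = conv_out(48, 3, 1, 1)  # 3x3 conv, stride 1
cnn_s_96 = conv_out(96, 5, 2, 2)  # 5x5 conv, stride 2

print(baseline_224, baseline_48, cnn_s_48, cnn_s_96)
```

Under these assumptions the unchanged stem shrinks a 48x48 image to a 12x12 feature map, whereas the modified first layers keep a 48x48 map for both the 48x48 and 96x96 inputs, much closer to the 56x56 map the baseline produces from a 224x224 image.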

(3)Fine-tuning High-Resolution Classifiers

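CNN-B-FT is a 224x224 network trained on high-resolution images and then fine-tuned on low-resolution images up-sampled to 224x224. The improvement is clear.

instead of reducing the stride by 2, it is better to up-sample images 2 times and then fine-tune the network pre-trained on high-resolution images

A model trained on high-resolution images can also extract useful features from up-sampled low-resolution images.

Core idea: test the way you train. If training is dominated by small objects, test on small objects; if it is dominated by large objects, test on large objects.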

4.2 Data Variation or Correct Scale?

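The first column of Table 1 corresponds to Figure 5.2, the second column to Figure 5.1, and the fourth column (MST) to Figure 5.3. Validation images are always 1400x2000.

$800_{all}$ denotes training at a resolution of 800x1400.

$1400_{all}$ denotes training at a resolution of 1400x2000.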

(1)Training at different resolutions

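The third column of Table 1 up-samples the input to 1400 for the benefit of small objects and gives the best result. This agrees with the ImageNet experiments above: enlarging small objects improves test performance. But why is it only marginally better than the second column?

The authors' explanation: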

too big to be correctly classified,

blows up the medium-to-large objects which degrades performance

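P.S. Simply enlarging the image is not a solution in itself; something more flexible is needed.

(2)Scale specific detectors

The first column of Table 1 also enlarges the images with small objects in mind, yet it performs worse than the second column. Why?

The authors' explanation: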

ignoring medium-to-large objects (about 30% of the total object instances) that hurt performance more than it helped by eliminating extreme scale objects.

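This makes sense: small objects are numerous but hard to train on, so the gain there is limited, while this setup makes accuracy on medium and large objects drop sharply.

(3)Multi-Scale Training (MST)

Overall the results are acceptable, but not as good as expected.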

degraded by extremely small and large objects

it is important to train a detector with appropriately scaled objects while capturing as much variation across the objects as possible.

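This again confirms that raising the resolution is an effective way to handle small objects. Is there something more effective?

That is, a better way to make training match testing.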

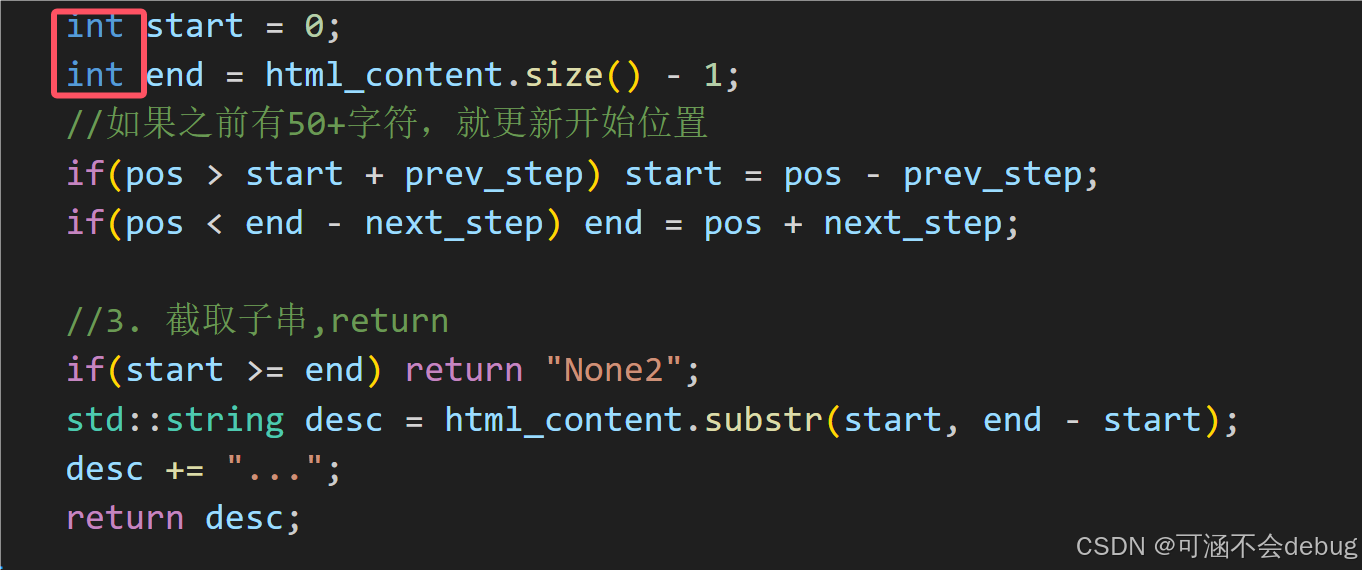

4.3 Object Detection on an Image Pyramid

Scale Normalization for Image Pyramids (SNIP)

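Anchors that overlap too much with an invalid GT box are discarded.

The detector follows the Faster R-CNN framework: backbone + RPN + RCN.

those anchors which have an overlap greater than 0.3 with an invalid ground truth box are excluded during training (i.e. their gradients are set to zero).

Large objects are not back-propagated in high-resolution images, objects that are too small or too large are not back-propagated in medium-resolution images, and small objects are not back-propagated in low-resolution images. This better reduces the domain shift relative to the scale space of pre-training and improves accuracy (reducing the domain-shift in the scale-space).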
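The selection rule described above can be sketched for a single pyramid level as follows. This is an illustration, not the released implementation: `pairwise_iou` and `snip_masks` are hypothetical helpers, box size is measured as the square root of the box area, all boxes are assumed to be in the coordinates of the resized image, and the valid range `(0, 160)` is a placeholder rather than a setting from the paper. Only the 0.3 overlap threshold comes from the quoted text.

```python
import numpy as np

def pairwise_iou(a, b):
    """IoU matrix of shape (len(a), len(b)) for boxes in (x1, y1, x2, y2) format."""
    a = a[:, None, :]
    b = b[None, :, :]
    x1 = np.maximum(a[..., 0], b[..., 0])
    y1 = np.maximum(a[..., 1], b[..., 1])
    x2 = np.minimum(a[..., 2], b[..., 2])
    y2 = np.minimum(a[..., 3], b[..., 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area_a + area_b - inter)

def snip_masks(gt_boxes, anchors, valid_range, invalid_iou=0.3):
    """Return (valid_gt, keep_anchor) boolean masks for one pyramid level.

    valid_gt[i]    : GT box i falls inside the valid size range at this resolution
    keep_anchor[j] : anchor j does not overlap any invalid GT box by more than invalid_iou
    """
    lo, hi = valid_range
    side = np.sqrt((gt_boxes[:, 2] - gt_boxes[:, 0]) * (gt_boxes[:, 3] - gt_boxes[:, 1]))
    valid_gt = (side >= lo) & (side <= hi)

    keep_anchor = np.ones(len(anchors), dtype=bool)
    invalid = gt_boxes[~valid_gt]
    if len(invalid) > 0:
        # Anchors overlapping an invalid GT are excluded, i.e. they get zero gradient.
        keep_anchor = pairwise_iou(anchors, invalid).max(axis=1) <= invalid_iou
    return valid_gt, keep_anchor

# Hypothetical high-resolution level: one small GT (valid) and one very large GT (invalid).
gt = np.array([[0, 0, 40, 40], [200, 200, 1000, 1000]], dtype=float)
anchors = np.array([[0, 0, 40, 40],
                    [200, 200, 1000, 1000],
                    [1500, 1500, 1600, 1600]], dtype=float)
valid_gt, keep_anchor = snip_masks(gt, anchors, valid_range=(0, 160))
```

In a training loop, the loss terms of anchors with `keep_anchor == False` would be masked out, and only GT boxes with `valid_gt == True` would be used as targets at this resolution.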

5、Experiments

5.1、Datasets

COCO

5.2、Evaluation

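In this table, AR and the AP on small objects improve noticeably.

Recall that in Faster R-CNN, an anchor is positive only when its overlap with a GT box is greater than 0.7.

When all overlaps are below 0.7, the anchor with the maximum overlap is assigned as the positive sample (If there does not exist a matching anchor, RPN assigns the anchor with the maximum overlap with ground truth bounding box as positive.)

Statistics show that:

for more than 40% of the ground truth boxes, an anchor which has an overlap less than 0.5 is assigned as a positive

In other words, for many GT boxes the assigned anchor overlaps by less than 50%, so the positives are of low quality.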
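The assignment rule and the statistic above can be reproduced on toy data as follows. This is a sketch of the rule as described in these notes, not the actual RPN code: `pairwise_iou` and `rpn_positive_anchors` are hypothetical helpers, and the boxes are invented for the example.

```python
import numpy as np

def pairwise_iou(a, b):
    """IoU matrix of shape (len(a), len(b)) for boxes in (x1, y1, x2, y2) format."""
    a = a[:, None, :]
    b = b[None, :, :]
    x1 = np.maximum(a[..., 0], b[..., 0])
    y1 = np.maximum(a[..., 1], b[..., 1])
    x2 = np.minimum(a[..., 2], b[..., 2])
    y2 = np.minimum(a[..., 3], b[..., 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area_a + area_b - inter)

def rpn_positive_anchors(gt_boxes, anchors, pos_iou=0.7):
    """Mark anchors as positive and report the best overlap achieved for each GT box."""
    iou = pairwise_iou(anchors, gt_boxes)        # shape (num_anchors, num_gt)
    positive = (iou > pos_iou).any(axis=1)       # rule 1: overlap above the threshold
    best_anchor = iou.argmax(axis=0)             # best anchor index for each GT
    best_iou = iou.max(axis=0)
    unmatched = best_iou <= pos_iou              # GTs with no anchor above the threshold
    positive[best_anchor[unmatched]] = True      # rule 2: fall back to the max-overlap anchor
    return positive, best_iou

# Hypothetical data: the second GT is much smaller than its closest anchor.
anchors = np.array([[0, 0, 10, 10], [20, 20, 40, 40]], dtype=float)
gt = np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float)
positive, best_iou = rpn_positive_anchors(gt, anchors)

# Fraction of GT boxes whose assigned positive anchor overlaps by less than 0.5.
frac_low_quality = float((best_iou < 0.5).mean())
```

In this toy case the second GT is matched to an anchor with IoU 0.25, the kind of low-quality positive the statistic refers to.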

SNIP's design alleviates this: because some ground truth boxes are marked invalid, some of the low-quality anchors are avoided, since the GT boxes themselves are dropped.

a stronger classification network like DPN-92

6、Conclusion(own) / Future work / Reference

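- Reference: 在小目标检测上另辟蹊径的SNIP (SNIP: a different take on small-object detection)

- training the detector on similar scale object instances as the pre-trained classification networks helps to reduce the domain shift for the pre-trained classification network.

- Personal impression: the paper's line of argument is not very clear. The key method section is thin, the experiments are not very thorough, and details are scattered, so overall the reasoning does not flow well and consecutive sentences often jump around.

- A drawback of multi-scale training: extremely large or extremely small objects interfere with it.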