[Book introduction] 《Spark SQL大数据分析快速上手》 (Spark SQL Big Data Analysis Quick Start) - CSDN Blog

《Spark SQL大数据分析快速上手》 [Summary, reviews, preview] - JD Books

Big Data and Data Analysis - 夏天又到了's blog - CSDN Blog

Steps for setting up a fully distributed Hadoop environment - CSDN Blog (see this post for installing the prerequisite environment)

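Pseudo-distributed mode also runs on a single host, so we reuse the CentOS7-201 virtual machine configured in Section 2.2. Pseudo-distributed mode starts two Spark processes, Master and Worker; once they are running, Spark's status can be viewed on port 8080. The installation requires adding a worker node to the configuration file SPARK_HOME/conf/workers (plus setting JAVA_HOME in spark-env.sh), then starting the Spark cluster with start-all.sh in the SPARK_HOME/sbin directory. The complete steps for installing Spark in pseudo-distributed mode are as follows: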

Step (1) Configure passwordless SSH login.

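start-all.sh starts the Worker process remotely over SSH, so passwordless SSH login must be configured from the host that runs start-all.sh to each worker node (skip this if it has already been configured):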

$ ssh-keygen -t rsa

$ ssh-copy-id server201

Step (2) Modify the configuration files.
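This step consists of two small file edits, described below: JAVA_HOME in spark-env.sh and the host list in workers. Spark reads both files from SPARK_HOME/conf. When the setup has to be repeated, the edits can be scripted rather than made in vim, and it is worth checking first that step (1) actually worked. A minimal bash sketch; the helper names are hypothetical and the paths are the ones used in this post:

```shell
#!/usr/bin/env bash
# Sketch only: scripted version of the step (1) check and the step (2) edits.
# Assumed (not from the original post): bash, and the hypothetical helper names below.

# ssh_is_passwordless HOST
# Succeeds only if HOST accepts key-based login; BatchMode=yes makes ssh
# fail instead of prompting for a password.
ssh_is_passwordless() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# prepend_line_once FILE LINE
# Inserts LINE at the top of FILE (creating FILE if needed),
# unless FILE already contains exactly that line.
prepend_line_once() {
  local file=$1 line=$2 tmp
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  printf '%s\n' "$line" > "$tmp"
  if [ -f "$file" ]; then
    cat "$file" >> "$tmp"
  fi
  cat "$tmp" > "$file"   # rewrite in place so FILE keeps its permissions
  rm -f "$tmp"
}

# append_line_once FILE LINE
# Appends LINE to FILE (creating FILE if needed) unless it is already present.
append_line_once() {
  local file=$1 line=$2
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    return 0
  fi
  # add a newline first if FILE does not already end with one
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}

# Example run with the paths used in this post (uncomment to apply):
# SPARK_HOME=/app/spark-3.3.1
# ssh_is_passwordless server201 || echo "step (1) is not complete" >&2
# prepend_line_once "$SPARK_HOME/conf/spark-env.sh" 'export JAVA_HOME=/usr/java/jdk1.8.0-361'
# append_line_once "$SPARK_HOME/conf/workers" 'server201'
```

Both file helpers are idempotent, so rerunning the script does not duplicate lines. If workers was copied from workers.template, it may already contain localhost; removing that line leaves server201 as the only worker host.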

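Add the JAVA_HOME environment variable to spark-env.sh (placing it at the very top is fine). A fresh Spark installation ships only conf/spark-env.sh.template, so this command creates the file: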

$ vim /app/spark-3.3.1/conf/spark-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0-361

Then edit the workers configuration file:

$ vim /app/spark-3.3.1/conf/workers

server201

Step (3) Run start-all.sh to start Spark:

$ /app/spark-3.3.1/sbin/start-all.sh

Once startup completes, two processes are running, Master and Worker:

[hadoop@server201 sbin]$ jps

2128 Worker

2228 Jps

2044 Master

The startup log shows that the Web UI is available on port 8080:

$ cat /app/spark-3.3.1/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-server201.out

21/03/22 22:03:47 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

21/03/22 22:03:47 INFO Master: Starting Spark master at spark://server201:7077

21/03/22 22:03:47 INFO Master: Running Spark version 3.3.1

21/03/22 22:03:47 INFO Utils: Successfully started service 'MasterUI' on port 8080.

21/03/22 22:03:47 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://server201:8080

21/03/22 22:03:47 INFO Master: I have been elected leader! New state: ALIVE

21/03/22 22:03:50 INFO Master: Registering worker 192.168.56.201:34907 with 2 cores, 2.7 GiB RAM
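Two lines in this log matter most: the spark:// URL that workers and spark-shell connect to, and the Web UI address. A small bash sketch that pulls both out of the .out file with sed; the function names are hypothetical, and the patterns assume the log lines have exactly the format shown above:

```shell
#!/usr/bin/env bash
# Sketch: extract connection details from a Spark standalone Master log.
# Hypothetical helpers; the patterns match the log lines shown above.

# master_url_from_log LOGFILE  -> e.g. spark://server201:7077
master_url_from_log() {
  sed -n 's/.*Starting Spark master at \(spark:\/\/[^ ]*\).*/\1/p' "$1" | tail -n 1
}

# master_web_ui_from_log LOGFILE  -> e.g. http://server201:8080
master_web_ui_from_log() {
  sed -n 's/.*MasterWebUI: .*started at \(http[^ ]*\).*/\1/p' "$1" | tail -n 1
}

# Example (log path from this post):
# log=/app/spark-3.3.1/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-server201.out
# echo "connect with: spark-shell --master $(master_url_from_log "$log")"
# echo "Web UI:       $(master_web_ui_from_log "$log")"
```

The trailing tail -n 1 keeps only the last match, in case a log file records more than one startup.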

Step (4) Check the port usage again with netstat:

[hadoop@server201 sbin]$ netstat -nap | grep java

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp6 0 0 :::8080 :::* LISTEN 2044/java

tcp6 0 0 :::8081 :::* LISTEN 2128/java

tcp6 0 0 192.168.56.201:34907 :::* LISTEN 2128/java

tcp6 0 0 192.168.56.201:7077 :::* LISTEN 2044/java

tcp6 0 0 192.168.56.201:53630 192.168.56.201:7077 ESTABLISHED 2128/java

tcp6 0 0 192.168.56.201:7077 192.168.56.201:53630 ESTABLISHED 2044/java

unix 2 [ ] STREAM CONNECTED 53247 2044/java

unix 2 [ ] STREAM CONNECTED 55327 2128/java

unix 2 [ ] STREAM CONNECTED 54703 2044/java

unix 2 [ ] STREAM CONNECTED 54699 2128/java

[hadoop@server201 sbin]$ jps

2128 Worker

2243 Jps

2044 Master

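Matching the PIDs with the jps output: the Master (PID 2044) listens on port 7077, which the Worker and client applications connect to, and on port 8080 for its Web UI. The Worker (PID 2128) listens on port 8081 for its own Web UI and on a randomly assigned port (34907 here, the address it registered with in the log above). The ESTABLISHED pair between ports 53630 and 7077 is the Worker's connection to the Master.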
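Because netstat reports only PIDs, telling Master ports from Worker ports means cross-referencing jps by hand. A bash/awk sketch that joins the two outputs; the function name is hypothetical, and it assumes the netstat -nap column layout shown above (local address in field 4, state in field 6, PID/Program in field 7):

```shell
#!/usr/bin/env bash
# Sketch: label each listening TCP port with the JVM name reported by jps.
# spark_listen_ports JPS_FILE NETSTAT_FILE   (hypothetical helper)
spark_listen_ports() {
  awk '
    NR == FNR { name[$1] = $2; next }          # 1st file: jps lines "PID Name"
    $1 ~ /^tcp/ && $6 == "LISTEN" {            # 2nd file: netstat -nap lines
      split($7, p, "/")                        # "2044/java" -> p[1] = "2044"
      n = split($4, a, ":")                    # ":::8080" or "192.168.56.201:7077"
      who = (p[1] in name) ? name[p[1]] : p[1] # fall back to the bare PID
      print who, a[n]                          # e.g. "Master 7077"
    }' "$1" "$2"
}

# Example on the VM (2>/dev/null discards netstat's notice about non-owned processes):
# spark_listen_ports <(jps) <(netstat -nap 2>/dev/null)
```

With the jps and netstat output above, this prints Master 8080, Worker 8081, Worker 34907 and Master 7077, one per line. The NR == FNR idiom assumes the first input (jps) is not empty, which holds because jps always lists itself.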

Step (5) Check port 8080.

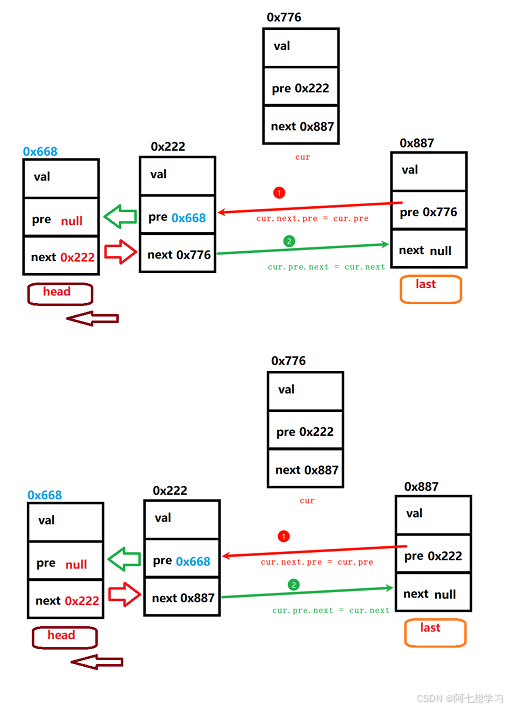

In a browser on the host machine, open http://192.168.56.201:8080 to view Spark's running status, as shown in Figure 2-7.

Figure 2-7 Spark's running status


Step (6) Test whether the cluster works.

Use the Spark Shell again, this time passing the master address spark://server201:7077 with --master so that it runs on this cluster:

$ spark-shell --master spark://server201:7077

Then repeat the WordCount test from Section 2.4.1.

(1) Read the file:

scala> val file = sc.textFile("file:///app/hadoop-3.2.3/NOTICE.txt");

file: org.apache.spark.rdd.RDD[String] = file:///app/hadoop-3.2.3/NOTICE.txt MapPartitionsRDD[1] at textFile at <console>:1

(2) Split each line into individual words on whitespace:

scala> val words = file.flatMap(_.split("\\s+"));

words: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[2] at flatMap at <console>:1

(3) Map each word to a pair with an initial count of 1:

scala> val kv = words.map((_,1));

kv: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[3] at map at <console>:1

(4) Sum the counts for each key:

scala> val wordCount = kv.reduceByKey(_+_);

wordCount: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at <console>:1

(5) Print the results, separating each word from its count with a tab:

scala> wordCount.collect.foreach(kv=>println(kv._1+"\t"+kv._2));

this 2

is 1

how 1

into 2

something 1

hive. 2

file 1

And 1

process 1

you 2

about 1

wordcount 1
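To cross-check the Spark result, the same count can be computed with standard Unix tools. This is a swapped-in technique, not Spark: tr plays the role of flatMap(_.split("\\s+")), and sort | uniq -c plays the role of map((_,1)) followed by reduceByKey(_+_). One difference to expect: Java's split("\\s+") yields an empty-string token for empty lines and for lines that begin with whitespace, so the Spark output may contain an extra entry for the empty word, which this version drops. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: word count with standard Unix tools, for comparison with the Spark WordCount.
# word_counts FILE   (hypothetical helper) prints "word<TAB>count" lines.
word_counts() {
  tr -s '[:space:]' '\n' < "$1" |  # one word per line; whitespace runs collapse
    grep -v '^$' |                 # drop the empty line left by leading whitespace
    LC_ALL=C sort |                # bring identical words together
    uniq -c |                      # "      2 this"
    awk '{ print $2 "\t" $1 }'     # same layout as the Scala println
}

# Example with the file read in (1):
# word_counts /app/hadoop-3.2.3/NOTICE.txt
```

This output is sorted by word, while collect returns the pairs in no particular order, so sort both before comparing them.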

If you check the background processes while the job is running, you will find two additional processes:

[hadoop@server201 ~]$ jps

12897 Worker

13811 SparkSubmit

13896 CoarseGrainedExecutorBackend

12825 Master

14108 Jps

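SparkSubmit is the client process (it hosts the spark-shell driver) and corresponds to the Running Application entry in the Web UI; CoarseGrainedExecutorBackend is the executor process, which receives and runs tasks.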
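The jps checks in step (3) and here can be made scriptable. The sketch below (hypothetical function name, bash assumed) reads jps output on stdin and prints every expected process name that is missing, so empty output means everything expected is running:

```shell
#!/usr/bin/env bash
# Sketch: report which expected JVM processes are absent from jps output.
# missing_processes NAME...   (hypothetical helper; reads jps output on stdin)
missing_processes() {
  local jps_out name
  jps_out=$(cat)
  for name in "$@"; do
    # jps prints "PID MainClassName"; compare the second field exactly
    printf '%s\n' "$jps_out" |
      awk -v want="$name" '$2 == want { found = 1 } END { exit !found }' ||
      printf '%s\n' "$name"
  done
}

# After step (3):             jps | missing_processes Master Worker
# While spark-shell is open:  jps | missing_processes Master Worker SparkSubmit CoarseGrainedExecutorBackend
```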
