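- 🍨 This post is a study log from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊

Preface

- This post uses a VGG16 model for classification and reaches a best validation accuracy of 98.75%. After modifying the fully connected layers of VGG16, accuracy reached 0.9969 and the parameter count of the fully connected layers dropped by about 78.07%.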

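1. API Notes

VGG16 Overview

Strengths and weaknesses of VGG:

- Strengths

The VGG architecture is very simple: the whole network uses the same convolution kernel size (3x3) and the same max-pooling size (2x2).

- Weaknesses

1) Training takes a long time and tuning is difficult. 2) The model needs a lot of storage, which makes deployment hard. For example, the VGG-16 weight file is over 500 MB, which makes it impractical to install on embedded systems.

The optimization later in this post targets these weaknesses.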
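The file size quoted above follows from the parameter count. As a rough sketch, assuming the standard 1000-class ImageNet VGG-16 with 138,357,544 parameters stored as 32-bit floats (both are assumptions, not figures from this post), the raw weights come to a little over 500 MB:

```python
# Rough size estimate for a weight file, assuming float32 storage.
# The parameter count is for the standard 1000-class ImageNet VGG-16 (an assumption);
# the 4-class model built later in this post has a different count.
VGG16_IMAGENET_PARAMS = 138_357_544
BYTES_PER_FLOAT32 = 4


def weight_size_mib(num_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Return the raw size of the weights in MiB, ignoring file-format overhead."""
    return num_params * bytes_per_param / (1024 ** 2)


size_mib = weight_size_mib(VGG16_IMAGENET_PARAMS)
print(f"{size_mib:.1f} MiB")
```

The same helper can be applied to any parameter count reported by `model.summary()`.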

VGG architecture diagram (drawn in PowerPoint):

API Notes

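🚄 Optimization

- shuffle(): shuffles the data. For a detailed introduction to this function, see https://zhuanlan.zhihu.com/p/42417456

- prefetch(): prefetches data to speed up training. While the GPU runs the current step, the CPU preprocesses the next batch, so the producer and the consumer overlap and training is more efficient. See Case 1 in this column: https://yxzbk.blog.csdn.net/article/details/142862154

- cache(): caches the dataset in memory so that later epochs run faster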
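The three methods above are usually chained. The following is a minimal sketch on a toy dataset of ten integers (the toy data, buffer size, batch size, and seed are illustrative assumptions, not values from this post); it shows that shuffling changes the order but never loses elements:

```python
import tensorflow as tf

# Toy dataset: the integers 0..9 stand in for images
toy_ds = tf.data.Dataset.range(10)

pipeline = (
    toy_ds
    .cache()                          # keep elements in memory after the first pass
    .shuffle(buffer_size=10, seed=0)  # buffer covers the whole dataset, so the shuffle is uniform
    .batch(4)
    .prefetch(tf.data.AUTOTUNE)       # prepare the next batch while the current one is consumed
)

batches = [batch.numpy().tolist() for batch in pipeline]
flat = sorted(value for batch in batches for value in batch)
print(batches)
```

Placing `cache()` before `shuffle()` means the cached data is reshuffled on every epoch instead of freezing one shuffled order.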

💂 Pixel normalization

Map the pixel values into [0, 1]. The code is:

# Normalization layer

normalization_layer = layers.experimental.preprocessing.Rescaling(1.0 / 255)

# Normalize the training and validation sets

train_ds = train_ds.map(lambda x, y : (normalization_layer(x), y))

val_ds = val_ds.map(lambda x, y : (normalization_layer(x), y))
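To see what the rescaling computes, here is a NumPy sketch of the same arithmetic (the sample pixel values are made up for illustration):

```python
import numpy as np

# Hypothetical 8-bit pixel values, stored as float32 like the loaded image batches
pixels = np.array([[0.0, 64.0, 128.0, 255.0]], dtype=np.float32)

# Multiply by 1/255, which is the scale factor passed to the Rescaling layer
scaled = pixels * (1.0 / 255)

print(scaled.min(), scaled.max())
```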

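💛 Loss function

The last fully connected layer in this post uses softmax and the labels are integer class indices, so the loss function is SparseCategoricalCrossentropy.

Notes on SparseCategoricalCrossentropy:

The from_logits parameter:

- Boolean; the default is False.

- When True, the function assumes the predictions are raw logits that have not passed through an activation function. If the last layer of the model has no softmax activation (it returns logits), set from_logits to True.

- When False, the function assumes the predictions are already a probability distribution produced by softmax.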
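The two settings describe the same loss computed from different inputs. Below is a simplified NumPy sketch (it omits the numerical clipping that Keras applies, and the logits and labels are made-up values) showing that passing logits with from_logits=True gives the same result as passing softmax probabilities with from_logits=False:

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def sparse_categorical_crossentropy(y_true, y_pred, from_logits=False):
    """Mean negative log-probability of the true class; y_true holds integer labels."""
    probs = softmax(y_pred) if from_logits else y_pred
    picked = probs[np.arange(len(y_true)), y_true]
    return float(-np.log(picked).mean())


# Two made-up samples over four classes
logits = np.array([[2.0, 0.5, 0.1, -1.0],
                   [0.0, 3.0, 0.2, 0.1]])
labels = np.array([0, 1])

loss_from_logits = sparse_categorical_crossentropy(labels, logits, from_logits=True)
loss_from_probs = sparse_categorical_crossentropy(labels, softmax(logits), from_logits=False)
print(loss_from_logits, loss_from_probs)
```

Mixing the two up (for example, a softmax output layer combined with from_logits=True) applies softmax twice and produces a different, incorrect loss value.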

2. Case Study

1. Data Processing

1. Import libraries

import tensorflow as tf

from tensorflow.keras import models, layers, datasets

import matplotlib.pyplot as plt

import numpy as np

# Check for GPU support

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    gpu0 = gpus[0]
    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpu0, True)
    tf.config.set_visible_devices([gpu0], "GPU")

gpus

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

2. Inspect the data directory and get the class names

Data layout: under data/, each class is stored in its own subdirectory

import os, pathlib

data_dir = './data/'

data_dir = pathlib.Path(data_dir)

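# List the entries under data_dir; sort them so the index order matches the
# alphanumeric label order used by image_dataset_from_directory

classnames = sorted(os.listdir(data_dir))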

classnames

['Dark', 'Green', 'Light', 'Medium']
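The order of this list matters because `image_dataset_from_directory` assigns integer labels to the class subdirectories in alphanumeric order unless `class_names` is passed explicitly. A small sketch of that mapping, using a temporary directory as a stand-in for `./data/` (the helper `class_index_map` is hypothetical, not part of TensorFlow):

```python
import os
import tempfile
from pathlib import Path


def class_index_map(data_dir):
    """Map each class subdirectory name to its integer label (alphanumeric order)."""
    names = sorted(p.name for p in Path(data_dir).iterdir() if p.is_dir())
    return {name: idx for idx, name in enumerate(names)}


# Hypothetical demo directory mirroring the layout described in this post
with tempfile.TemporaryDirectory() as tmp:
    for name in ["Medium", "Dark", "Light", "Green"]:
        os.makedirs(os.path.join(tmp, name))
    mapping = class_index_map(tmp)

print(mapping)
```

After the dataset is created, `train_ds.class_names` gives the same list without a separate directory scan.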

3. Load the data and split the dataset

# training set : validation set = 8 : 2

batch_size = 32

img_width, img_height = 224, 224

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

'./data/',

validation_split = 0.2,

batch_size=batch_size,

image_size = (img_width, img_height),

shuffle = True,

subset='training',

seed=42

)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

'./data/',

validation_split = 0.2,

batch_size=batch_size,

image_size = (img_width, img_height),

shuffle = True,

subset='validation',

seed=42

)

Found 1200 files belonging to 4 classes.

Using 960 files for training.

Found 1200 files belonging to 4 classes.

Using 240 files for validation.
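The numbers in this output can be checked by hand. The sketch below reproduces the split sizes and derives the number of batches per epoch, assuming the validation size is the truncated product of the file count and `validation_split`:

```python
import math

total_files = 1200
validation_split = 0.2
batch_size = 32

# Assumed rule: validation size is the truncated product, the rest is training data
val_files = int(total_files * validation_split)
train_files = total_files - val_files

# The last batch may be smaller, hence the ceiling
train_steps = math.ceil(train_files / batch_size)
val_steps = math.ceil(val_files / batch_size)

print(train_files, val_files, train_steps, val_steps)
```

The 30 training steps match the `30/30` progress counter in the training log later in this post.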

# Inspect the data format

for X, y in train_ds.take(1):
    print("[N, H, W, C]", X.shape)
    print("labels: ", y)
    break

[N, H, W, C] (32, 224, 224, 3)

labels: tf.Tensor([0 0 2 3 1 1 1 3 0 1 2 2 2 1 0 2 0 2 1 0 0 1 2 1 3 2 2 2 1 0 2 3], shape=(32,), dtype=int32)

# Inspect the raw pixel values

imgs, labelss = next(iter(train_ds))  # fetch one batch

first = imgs[0]

print(first.shape)

print(np.min(first), np.max(first))

(224, 224, 3)

0.0 255.0

4. Display a batch of images

plt.figure(figsize=(20, 10))

for images, labels in train_ds.take(1):
    for i in range(20):
        plt.subplot(5, 10, i + 1)  # grid of 5 rows x 10 columns
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(classnames[labels[i]])
        plt.axis('off')

plt.show()

5. Configure the dataset and normalize the data

The pipeline below chains the cache(), shuffle(), and prefetch() methods described in the API notes, then applies the pixel normalization.

# Speed up the input pipeline

# The names look verbose, but this code is fairly boilerplate

AUTOTUNE = tf.data.experimental.AUTOTUNE

# Cache, shuffle (training set only), and prefetch

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

# Normalization layer

normalization_layer = layers.experimental.preprocessing.Rescaling(1.0 / 255)

# Normalize the training and validation sets

train_ds = train_ds.map(lambda x, y : (normalization_layer(x), y))

val_ds = val_ds.map(lambda x, y : (normalization_layer(x), y))

# Inspect the normalized data

image_batch, label_batch = next(iter(val_ds))

# Take the first image

first_image = image_batch[0]

# Print the minimum and maximum pixel values

print(np.min(first_image), np.max(first_image))

print(image_batch.shape)

print(first_image.shape)

0.0 1.0

(32, 224, 224, 3)

(224, 224, 3)

2024-11-08 18:37:15.334784: W tensorflow/core/kernels/data/cache_dataset_ops.cc:856] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead.

2. Building the VGG16 Network by Hand

def VGG16(class_num, input_shape):
    inputs = layers.Input(input_shape)

    # 1st block
    x = layers.Conv2D(64, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(inputs)
    x = layers.Conv2D(64, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2))(x)

    # 2nd block
    x = layers.Conv2D(128, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(128, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2))(x)

    # 3rd block
    x = layers.Conv2D(256, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(256, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(256, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2))(x)

    # 4th block
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2))(x)

    # 5th block
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.Conv2D(512, kernel_size=(3, 3), activation='relu', strides=(1, 1), padding='same')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2))(x)

    # Fully connected layers (these are the layers modified in the optimization section)
    x = layers.Flatten()(x)
    x = layers.Dense(4096, activation='relu')(x)
    x = layers.Dense(4096, activation='relu')(x)

    # The last layer uses the softmax activation
    out_shape = layers.Dense(class_num, activation='softmax')(x)

    # Create the model
    model = models.Model(inputs=inputs, outputs=out_shape)

    return model

model = VGG16(len(classnames), (img_width, img_height, 3))

model.summary()

Model: "model"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | [(None, 224, 224, 3)] | 0 |
| conv2d (Conv2D) | (None, 224, 224, 64) | 1792 |
| conv2d_1 (Conv2D) | (None, 224, 224, 64) | 36928 |
| max_pooling2d (MaxPooling2D) | (None, 112, 112, 64) | 0 |
| conv2d_2 (Conv2D) | (None, 112, 112, 128) | 73856 |
| conv2d_3 (Conv2D) | (None, 112, 112, 128) | 147584 |
| max_pooling2d_1 (MaxPooling2D) | (None, 56, 56, 128) | 0 |
| conv2d_4 (Conv2D) | (None, 56, 56, 256) | 295168 |
| conv2d_5 (Conv2D) | (None, 56, 56, 256) | 590080 |
| conv2d_6 (Conv2D) | (None, 56, 56, 256) | 590080 |
| max_pooling2d_2 (MaxPooling2D) | (None, 28, 28, 256) | 0 |
| conv2d_7 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| conv2d_8 (Conv2D) | (None, 28, 28, 512) | 2359808 |
| conv2d_9 (Conv2D) | (None, 28, 28, 512) | 2359808 |
| max_pooling2d_3 (MaxPooling2D) | (None, 14, 14, 512) | 0 |
| conv2d_10 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| conv2d_11 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| conv2d_12 (Conv2D) | (None, 14, 14, 512) | 2359808 |
| max_pooling2d_4 (MaxPooling2D) | (None, 7, 7, 512) | 0 |
| flatten (Flatten) | (None, 25088) | 0 |
| dense (Dense) | (None, 4096) | 102764544 |
| dense_1 (Dense) | (None, 4096) | 16781312 |
| dense_2 (Dense) | (None, 4) | 16388 |

Total params: 134,276,932

Trainable params: 134,276,932

Non-trainable params: 0
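The parameter counts in the summary can be reproduced by hand. A small sketch, assuming the usual formulas (a 3x3 convolution has `(3 * 3 * in_channels + 1) * out_channels` parameters and a Dense layer has `(in_units + 1) * out_units`, where the `+ 1` is the bias); the helper names are hypothetical:

```python
def conv2d_params(kernel, in_ch, out_ch):
    """Weights plus one bias per output channel for a square-kernel convolution."""
    return (kernel * kernel * in_ch + 1) * out_ch


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a fully connected layer."""
    return (in_units + 1) * out_units


# Output channels of the 13 convolution layers, in order
conv_channels = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]

in_ch = 3  # RGB input
conv_total = 0
for out_ch in conv_channels:
    conv_total += conv2d_params(3, in_ch, out_ch)
    in_ch = out_ch

flatten_units = 7 * 7 * 512  # spatial size after five 2x2 poolings of a 224x224 input
dense_total = (
    dense_params(flatten_units, 4096)
    + dense_params(4096, 4096)
    + dense_params(4096, 4)
)

total = conv_total + dense_total
print(conv_total, dense_total, total)
```

Most of the parameters sit in the fully connected layers, which is why the optimization in this post focuses on them.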

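3. Model Training

1. Set hyperparameters

learn_rate = 1e-4

# Exponentially decaying learning-rate schedule
lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    learn_rate,
    decay_steps=20,
    decay_rate=0.95,
    staircase=True
)

# Optimizer. Note: this run uses the constant learn_rate;
# pass learning_rate=lr_schedule instead to enable the decay schedule.
opt = tf.keras.optimizers.Adam(learning_rate=learn_rate)

# Compile the model
model.compile(
    optimizer=opt,
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
    metrics=['accuracy']
)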
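To get a feel for what the ExponentialDecay schedule defined above would do if it were passed to the optimizer, here is a plain-Python sketch of the staircase formula, `initial_lr * decay_rate ** floor(step / decay_steps)`. The function name is hypothetical, and the 600-step horizon assumes 30 steps per epoch over 20 epochs:

```python
def staircase_exponential_decay(step, initial_lr=1e-4, decay_steps=20, decay_rate=0.95):
    """Learning rate at a given optimizer step with staircase exponential decay."""
    return initial_lr * decay_rate ** (step // decay_steps)


lr_start = staircase_exponential_decay(0)
lr_before_first_drop = staircase_exponential_decay(19)
lr_after_first_drop = staircase_exponential_decay(20)
lr_end = staircase_exponential_decay(600)  # 30 steps/epoch * 20 epochs (assumed)

print(lr_start, lr_after_first_drop, lr_end)
```

With these settings the rate drops by 5% every 20 steps, so by the end of training it would be roughly a fifth of its starting value.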

2. Train the model

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

# Number of training epochs
epochs = 20

# Early stopping (with patience equal to epochs, it cannot trigger in this run)
earlystopper = EarlyStopping(monitor='val_accuracy',
                             min_delta=0.001,
                             patience=20,
                             verbose=1)

# Save the weights of the best model
checkpointer = ModelCheckpoint('best_model.h5',
                               monitor='val_accuracy',
                               verbose=1,
                               save_best_only=True,
                               save_weights_only=True)

history = model.fit(
    x=train_ds,
    validation_data=val_ds,
    epochs=epochs,
    verbose=1,
    callbacks=[earlystopper, checkpointer]
)

Epoch 1/20

2024-11-08 18:37:27.650111: I tensorflow/stream_executor/cuda/cuda_dnn.cc:384] Loaded cuDNN version 8101

2024-11-08 18:37:31.754452: I tensorflow/stream_executor/cuda/cuda_blas.cc:1786] TensorFloat-32 will be used for the matrix multiplication. This will only be logged once.

30/30 [==============================] - ETA: 0s - loss: 1.3401 - accuracy: 0.3094

Epoch 1: val_accuracy improved from -inf to 0.55833, saving model to best_model.h5

30/30 [==============================] - 17s 255ms/step - loss: 1.3401 - accuracy: 0.3094 - val_loss: 0.9073 - val_accuracy: 0.5583

Epoch 2/20

30/30 [==============================] - ETA: 0s - loss: 0.9208 - accuracy: 0.5406

Epoch 2: val_accuracy improved from 0.55833 to 0.63333, saving model to best_model.h5

30/30 [==============================] - 7s 223ms/step - loss: 0.9208 - accuracy: 0.5406 - val_loss: 0.6053 - val_accuracy: 0.6333

Epoch 3/20

30/30 [==============================] - ETA: 0s - loss: 0.6325 - accuracy: 0.6594

Epoch 3: val_accuracy did not improve from 0.63333

30/30 [==============================] - 4s 128ms/step - loss: 0.6325 - accuracy: 0.6594 - val_loss: 0.7538 - val_accuracy: 0.5542

Epoch 4/20

30/30 [==============================] - ETA: 0s - loss: 0.5219 - accuracy: 0.7115

Epoch 4: val_accuracy improved from 0.63333 to 0.82083, saving model to best_model.h5

30/30 [==============================] - 7s 246ms/step - loss: 0.5219 - accuracy: 0.7115 - val_loss: 0.4044 - val_accuracy: 0.8208

Epoch 5/20

30/30 [==============================] - ETA: 0s - loss: 0.3322 - accuracy: 0.8771

Epoch 5: val_accuracy improved from 0.82083 to 0.86667, saving model to best_model.h5

30/30 [==============================] - 7s 238ms/step - loss: 0.3322 - accuracy: 0.8771 - val_loss: 0.3286 - val_accuracy: 0.8667

Epoch 6/20

30/30 [==============================] - ETA: 0s - loss: 0.1433 - accuracy: 0.9573

Epoch 6: val_accuracy improved from 0.86667 to 0.95417, saving model to best_model.h5

30/30 [==============================] - 7s 230ms/step - loss: 0.1433 - accuracy: 0.9573 - val_loss: 0.1310 - val_accuracy: 0.9542

Epoch 7/20

30/30 [==============================] - ETA: 0s - loss: 0.0982 - accuracy: 0.9594

Epoch 7: val_accuracy improved from 0.95417 to 0.97917, saving model to best_model.h5

30/30 [==============================] - 7s 233ms/step - loss: 0.0982 - accuracy: 0.9594 - val_loss: 0.0739 - val_accuracy: 0.9792

Epoch 8/20

30/30 [==============================] - ETA: 0s - loss: 0.0630 - accuracy: 0.9802

Epoch 8: val_accuracy did not improve from 0.97917

30/30 [==============================] - 4s 127ms/step - loss: 0.0630 - accuracy: 0.9802 - val_loss: 0.2461 - val_accuracy: 0.9250

Epoch 9/20

30/30 [==============================] - ETA: 0s - loss: 0.1089 - accuracy: 0.9625

Epoch 9: val_accuracy improved from 0.97917 to 0.98333, saving model to best_model.h5

30/30 [==============================] - 6s 217ms/step - loss: 0.1089 - accuracy: 0.9625 - val_loss: 0.0717 - val_accuracy: 0.9833

Epoch 10/20

30/30 [==============================] - ETA: 0s - loss: 0.0392 - accuracy: 0.9885

Epoch 10: val_accuracy did not improve from 0.98333

30/30 [==============================] - 4s 126ms/step - loss: 0.0392 - accuracy: 0.9885 - val_loss: 0.0901 - val_accuracy: 0.9708

Epoch 11/20

30/30 [==============================] - ETA: 0s - loss: 0.0297 - accuracy: 0.9854

Epoch 11: val_accuracy improved from 0.98333 to 0.98750, saving model to best_model.h5

30/30 [==============================] - 7s 232ms/step - loss: 0.0297 - accuracy: 0.9854 - val_loss: 0.0629 - val_accuracy: 0.9875

Epoch 12/20

30/30 [==============================] - ETA: 0s - loss: 0.0331 - accuracy: 0.9885

Epoch 12: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 127ms/step - loss: 0.0331 - accuracy: 0.9885 - val_loss: 0.0384 - val_accuracy: 0.9875

Epoch 13/20

30/30 [==============================] - ETA: 0s - loss: 0.1043 - accuracy: 0.9708

Epoch 13: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 128ms/step - loss: 0.1043 - accuracy: 0.9708 - val_loss: 0.0445 - val_accuracy: 0.9833

Epoch 14/20

30/30 [==============================] - ETA: 0s - loss: 0.0352 - accuracy: 0.9833

Epoch 14: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 134ms/step - loss: 0.0352 - accuracy: 0.9833 - val_loss: 0.1387 - val_accuracy: 0.9500

Epoch 15/20

30/30 [==============================] - ETA: 0s - loss: 0.1128 - accuracy: 0.9594

Epoch 15: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 128ms/step - loss: 0.1128 - accuracy: 0.9594 - val_loss: 0.4397 - val_accuracy: 0.8125

Epoch 16/20

30/30 [==============================] - ETA: 0s - loss: 0.0949 - accuracy: 0.9646

Epoch 16: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 130ms/step - loss: 0.0949 - accuracy: 0.9646 - val_loss: 0.1068 - val_accuracy: 0.9500

Epoch 17/20

30/30 [==============================] - ETA: 0s - loss: 0.0618 - accuracy: 0.9781

Epoch 17: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 128ms/step - loss: 0.0618 - accuracy: 0.9781 - val_loss: 0.1663 - val_accuracy: 0.9292

Epoch 18/20

30/30 [==============================] - ETA: 0s - loss: 0.0351 - accuracy: 0.9854

Epoch 18: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 128ms/step - loss: 0.0351 - accuracy: 0.9854 - val_loss: 0.0687 - val_accuracy: 0.9792

Epoch 19/20

30/30 [==============================] - ETA: 0s - loss: 0.0609 - accuracy: 0.9781

Epoch 19: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 128ms/step - loss: 0.0609 - accuracy: 0.9781 - val_loss: 0.0963 - val_accuracy: 0.9708

Epoch 20/20

30/30 [==============================] - ETA: 0s - loss: 0.0263 - accuracy: 0.9896

Epoch 20: val_accuracy did not improve from 0.98750

30/30 [==============================] - 4s 127ms/step - loss: 0.0263 - accuracy: 0.9896 - val_loss: 0.2104 - val_accuracy: 0.9458

- Best result: val_accuracy = 0.98750, first reached at epoch 11
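Because patience=20 equals the number of epochs, early stopping cannot trigger in this run. The following is a simplified sketch of the patience rule (not the actual Keras implementation; `stop_epoch` is a hypothetical helper), replayed on the val_accuracy values from the log above to show where a smaller patience would have stopped:

```python
def stop_epoch(values, patience, min_delta=0.001):
    """Return the 1-based epoch at which training would stop, or None if it never stops.

    An epoch counts as an improvement only if it beats the best value by more than min_delta.
    """
    best = float("-inf")
    wait = 0
    for epoch, value in enumerate(values, start=1):
        if value - min_delta > best:
            best = value
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# val_accuracy per epoch, copied from the training log
val_acc = [0.5583, 0.6333, 0.5542, 0.8208, 0.8667, 0.9542, 0.9792, 0.9250, 0.9833, 0.9708,
           0.9875, 0.9875, 0.9833, 0.9500, 0.8125, 0.9500, 0.9292, 0.9792, 0.9708, 0.9458]

stop_with_patience_5 = stop_epoch(val_acc, patience=5)
stop_with_patience_20 = stop_epoch(val_acc, patience=20)
print(stop_with_patience_5, stop_with_patience_20)
```

Under this rule a patience of 5 would end training five epochs after the best epoch, while a patience of 20 lets all 20 epochs run.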

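4. Results

# Collect the training and validation loss and accuracy

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

# Use the number of epochs actually run, in case early stopping ended training sooner
epochs_range = range(len(acc))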

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

3. Optimization

Optimize the fully connected layers by reducing their number of neurons:

# Before

x = layers.Flatten()(x)

x = layers.Dense(4096, activation='relu')(x)

x = layers.Dense(4096, activation='relu')(x)

# The last layer uses the softmax activation

out_shape = layers.Dense(class_num, activation='softmax')(x)

# After

x = layers.Flatten()(x)

x = layers.Dense(1024, activation='relu')(x)

x = layers.Dense(512, activation='relu')(x)

# The last layer uses the softmax activation

out_shape = layers.Dense(class_num, activation='softmax')(x)

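Result after the change: loss: 0.0166 - accuracy: 0.9969. Accuracy improves by less than one percentage point over the baseline run (best validation accuracy 0.9875, best training accuracy 0.9896), while the number of parameters in the fully connected layers drops substantially.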

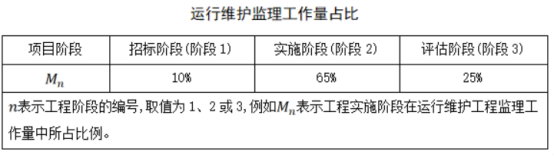

Fully connected parameter count before the change

- First Dense layer: input 25088, output 4096. Parameters: \( (25088 + 1) \times 4096 = 102764544 \)

- Second Dense layer: input 4096, output 4096. Parameters: \( (4096 + 1) \times 4096 = 16781312 \)

- Output layer: input 4096, output 4. Parameters: \( (4096 + 1) \times 4 = 16388 \)

Total: \( 102764544 + 16781312 + 16388 = 119562244 \)

Fully connected parameter count after the change

- First Dense layer: input 25088, output 1024. Parameters: \( (25088 + 1) \times 1024 = 25691136 \)

- Second Dense layer: input 1024, output 512. Parameters: \( (1024 + 1) \times 512 = 524800 \)

- Output layer: input 512, output 4. Parameters: \( (512 + 1) \times 4 = 2052 \)

Total: \( 25691136 + 524800 + 2052 = 26217988 \)

Percentage reduction

Parameters removed:

\( 119562244 - 26217988 = 93344256 \)

Percentage removed:

\( \frac{93344256}{119562244} \times 100\% \approx 78.07\% \)

So the change reduces the parameter count of the fully connected layers, used here as a proxy for computation, by about 78.07%.
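The comparison can be recomputed in a few lines. This is a minimal sketch (the helper `dense_stack_params` is hypothetical) that sums `(inputs + 1) * outputs` over each stack of Dense layers, where the `+ 1` accounts for the bias, and reports the relative reduction:

```python
def dense_stack_params(widths):
    """Total parameters of consecutive Dense layers; widths starts with the input size."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(widths, widths[1:]))


# Flatten output (25088) followed by the Dense layer widths of each variant
before = dense_stack_params([25088, 4096, 4096, 4])
after = dense_stack_params([25088, 1024, 512, 4])

reduction_pct = (before - after) / before * 100
print(f"{reduction_pct:.2f}%")
```

Nearly all of the saving comes from the first Dense layer, because its input is the 25088-wide flattened feature map.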
