Today's focus is getting more familiar with TensorRT-LLM, working through it alongside the theory.

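As the talk referenced at the end of these notes shows, TensorRT-LLM is a further layer of packaging on top of TensorRT. It adds inference acceleration features such as request batching and quantization.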

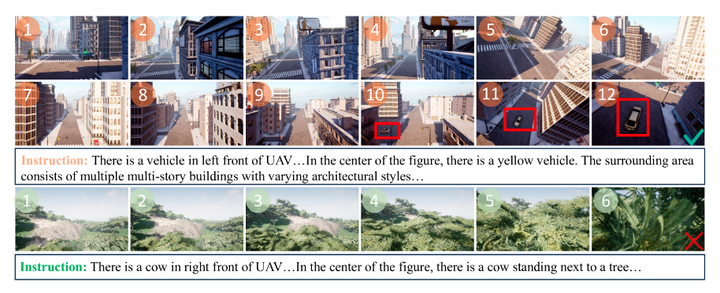

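The figure below gives a clearer view of the TensorRT-LLM workflow: weight conversion, engine building, then inference and evaluation. In short, it comes down to three steps: convert the weights, build the engine, and run inference.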

If you would rather not read the figure, there is an AI-generated summary of it in the appendix.
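The three steps can be sketched as command lines assembled in Python. This is only a rough sketch: `BuildConfig` and the three helper functions are hypothetical names made up for illustration, the directory names are placeholders, and the flags follow the example scripts shipped with TensorRT-LLM, where they can differ between models and releases. Nothing is executed here; the functions only return argument lists.

```python
from dataclasses import dataclass


@dataclass
class BuildConfig:
    """Hypothetical settings container for this sketch (not a TensorRT-LLM class)."""
    model_dir: str            # framework checkpoint, e.g. a Hugging Face directory
    ckpt_dir: str             # where the TRT-LLM checkpoint is written
    engine_dir: str           # where the TensorRT engine is written
    tp_size: int = 1
    pp_size: int = 1
    max_batch_size: int = 8
    max_input_len: int = 1024


def convert_cmd(cfg: BuildConfig) -> list[str]:
    """Step 1: convert framework weights into a TRT-LLM checkpoint."""
    return [
        "python", "convert_checkpoint.py",
        "--model_dir", cfg.model_dir,
        "--output_dir", cfg.ckpt_dir,
        "--dtype", "float16",
        "--tp_size", str(cfg.tp_size),
        "--pp_size", str(cfg.pp_size),
    ]


def build_cmd(cfg: BuildConfig) -> list[str]:
    """Step 2: build an optimized TensorRT engine from the checkpoint."""
    return [
        "trtllm-build",
        "--checkpoint_dir", cfg.ckpt_dir,
        "--output_dir", cfg.engine_dir,
        "--max_batch_size", str(cfg.max_batch_size),
        "--max_input_len", str(cfg.max_input_len),
    ]


def run_cmd(cfg: BuildConfig, tokenizer_dir: str) -> list[str]:
    """Step 3: run inference against the built engine."""
    return [
        "python", "run.py",
        "--engine_dir", cfg.engine_dir,
        "--tokenizer_dir", tokenizer_dir,
        "--max_output_len", "64",
    ]


if __name__ == "__main__":
    cfg = BuildConfig("hf_model", "trt_ckpt", "trt_engine", tp_size=2)
    for cmd in (convert_cmd(cfg), build_cmd(cfg), run_cmd(cfg, "hf_model")):
        print(" ".join(cmd))
```

Passing one of these lists to `subprocess.run` would carry out the step, assuming the scripts are on the path of the installed release.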

The figure below also lays out the full TRT-LLM inference flow nicely.

Multi-GPU parallelism

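Notably, TRT-LLM was designed with multi-GPU deployment in mind. The tp-size parameter controls the degree of tensor parallelism, and pp-size controls the degree of pipeline parallelism.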
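To see how the two parameters interact, here is a minimal sketch in plain Python. It assumes the total number of GPUs is the product of the two sizes and that ranks are laid out with tensor-parallel neighbors adjacent. The function names are hypothetical, and the exact rank ordering inside TensorRT-LLM may differ.

```python
def rank_layout(tp_size: int, pp_size: int) -> dict[int, tuple[int, int]]:
    """Map each global rank to (pp_rank, tp_rank).

    Assumed layout for this sketch: ranks within one pipeline stage are
    consecutive, so tp_rank varies fastest.
    """
    world_size = tp_size * pp_size
    return {rank: (rank // tp_size, rank % tp_size) for rank in range(world_size)}


def layers_for_stage(num_layers: int, pp_size: int, pp_rank: int) -> range:
    """Pipeline parallelism: each stage owns a contiguous block of layers."""
    if num_layers % pp_size != 0:
        raise ValueError("this sketch assumes num_layers is divisible by pp_size")
    per_stage = num_layers // pp_size
    return range(pp_rank * per_stage, (pp_rank + 1) * per_stage)


def columns_for_tp_rank(hidden_size: int, tp_size: int, tp_rank: int) -> range:
    """Tensor parallelism: each rank owns a slice of a weight matrix's columns."""
    if hidden_size % tp_size != 0:
        raise ValueError("this sketch assumes hidden_size is divisible by tp_size")
    per_rank = hidden_size // tp_size
    return range(tp_rank * per_rank, (tp_rank + 1) * per_rank)


if __name__ == "__main__":
    layout = rank_layout(tp_size=2, pp_size=2)
    for rank, (pp_rank, tp_rank) in layout.items():
        layers = layers_for_stage(32, 2, pp_rank)
        cols = columns_for_tp_rank(4096, 2, tp_rank)
        print(f"rank {rank}: layers {layers.start}-{layers.stop - 1}, "
              f"columns {cols.start}-{cols.stop - 1}")
```

With tp-size 2 and pp-size 2, four GPUs are needed: each pipeline stage holds half of the layers, and within a stage each GPU holds half of every split weight matrix.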

Pipeline parallelism

Quantization

Weight & activation quantization
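As a rough illustration of what quantizing a tensor means, the sketch below applies symmetric per-tensor INT8 quantization to a handful of made-up weights and measures the round-trip error. This is a generic textbook scheme chosen for simplicity, not the specific method TRT-LLM uses, and the function names are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: x is approximated by scale * q,
    with q an integer in [-127, 127]."""
    absmax = max(abs(v) for v in values)
    scale = absmax / 127.0 if absmax > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floating-point values from the integers."""
    return [scale * x for x in q]


if __name__ == "__main__":
    weights = [0.02, -0.5, 0.31, 1.27, -0.9]   # made-up example values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("scale:", scale)
    print("max round-trip error:", max_err)
```

The round-trip error of this scheme is bounded by half the scale, so a single large outlier in the tensor inflates the error for every other value. That is one reason finer-grained scaling or floating-point formats such as FP8 can preserve accuracy better.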

KV cache quantization

Impact of quantization on accuracy

As the figure below shows, quantizing to FP8 keeps accuracy relatively high.

Performance tuning

On the performance tuning side, TRT-LLM also uses a strategy similar to continuous batching in vLLM (TensorRT-LLM calls it in-flight batching).
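The benefit of continuous batching can be seen in a toy simulation. The sketch below counts decoding steps for static batching, where a batch runs until its longest request finishes, and for continuous batching, where finished requests leave and waiting requests join after every step. It is a deliberately simplified model: each request is just a number of output tokens, prefill cost and memory limits are ignored, and the function names are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Each fixed batch occupies the GPU until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: list[int], batch_size: int) -> int:
    """After every decoding step, finished requests free their slot
    and waiting requests are admitted immediately."""
    waiting = deque(lengths)
    running: list[int] = []   # tokens still to generate per active request
    steps = 0
    while waiting or running:
        # Fill free slots from the waiting queue.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decoding step: every active request produces one token,
        # and requests with nothing left to generate are dropped.
        running = [r - 1 for r in running if r - 1 > 0]
        steps += 1
    return steps


if __name__ == "__main__":
    output_lengths = [2, 8, 3, 7]   # made-up output lengths in tokens
    print("static:", static_batching_steps(output_lengths, batch_size=2))
    print("continuous:", continuous_batching_steps(output_lengths, batch_size=2))
```

For output lengths [2, 8, 3, 7] and a batch size of 2, the static schedule takes 15 steps and the continuous one takes 12, because the slot freed by the short request is reused right away instead of idling.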

Appendix

The image describes an overview of the TensorRT-LLM (Large Language Model) workflow. Here's a summary of the key steps and elements involved:

1. Input Models:

- Various external models from frameworks like **HuggingFace**, **NeMo**, **AMMO**, and **Jax** can be used as inputs.

2. TRT-LLM Checkpoint:

- These external models are converted into a format defined by TRT-LLM using scripts like **convert_checkpoint.py** or **quantize.py**.

- This conversion fixes several key parameters for the later stages, including:

  - Quantization method

  - Parallelization method

  - And more...

3. TRT-LLM Engines:

- After converting to the checkpoint format, the **trtllm-build** command is used to further convert and optimize the checkpoint into **TensorRT Engines**.

- During this step, important inference parameters are set, such as:

  - Max batch size

  - Max input length

  - Max output length

  - Max beam width

  - Plugin configuration

  - And others...

- Most of the automatic optimizations occur at this stage.

4. Application Development:

- Using C++/Python APIs, developers can build applications with these optimized engines.

- TensorRT-LLM comes with several built-in tools to help with secondary development:

  - **summarize.py** for text summarization

  - **mmlu.py** for accuracy testing

  - **run.py** for a dry run to verify the model

  - **benchmark** for benchmarking

- The runtime options include:

  - **Temperature** (for sampling)

  - **Top K** (for top K sampling)

  - **Top P** (for nucleus sampling)
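The three runtime options above can be illustrated with a small standard-library sampler. This is a generic sketch of temperature, top-K, and top-P (nucleus) sampling, not TensorRT-LLM's implementation. The function name `sample_token` is hypothetical, and real implementations differ in details such as the order of the filters and whether probabilities are renormalized between them.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=0, top_p=1.0, rng=random):
    """Pick one token index from raw logits.

    temperature: > 0; values below 1 sharpen the distribution, above 1 flatten it.
    top_k:       keep only the k most likely tokens (0 disables the filter).
    top_p:       keep the smallest set of tokens whose cumulative
                 probability reaches top_p (1.0 disables the filter).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")

    # Temperature scaling followed by a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Candidate token indices, most likely first.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Top-K filter.
    if top_k > 0:
        order = order[:top_k]

    # Top-P filter: shortest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # random.choices normalizes the weights of the surviving tokens.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    logits = [1.0, 3.0, 2.0, 0.5]   # made-up scores for a 4-token vocabulary
    rng = random.Random(0)
    print([sample_token(logits, temperature=0.8, top_k=3, top_p=0.9, rng=rng)
           for _ in range(10)])
```

Setting `top_k=1` reduces this to greedy decoding, since only the most likely token survives the filter.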

This workflow outlines how to integrate and optimize models for efficient inference with TensorRT-LLM and leverage its tools for application development and performance testing.

Reference: NVIDIA AI 加速精讲堂: TensorRT-LLM 应用与部署 (video on bilibili)