I recently started working with CUDA programming, so here are some notes.

1 Task: sum the integers in [0, 100)

In Python this would be:

total = 0

for i in range(100):

    total += i

print(total)  # 4950

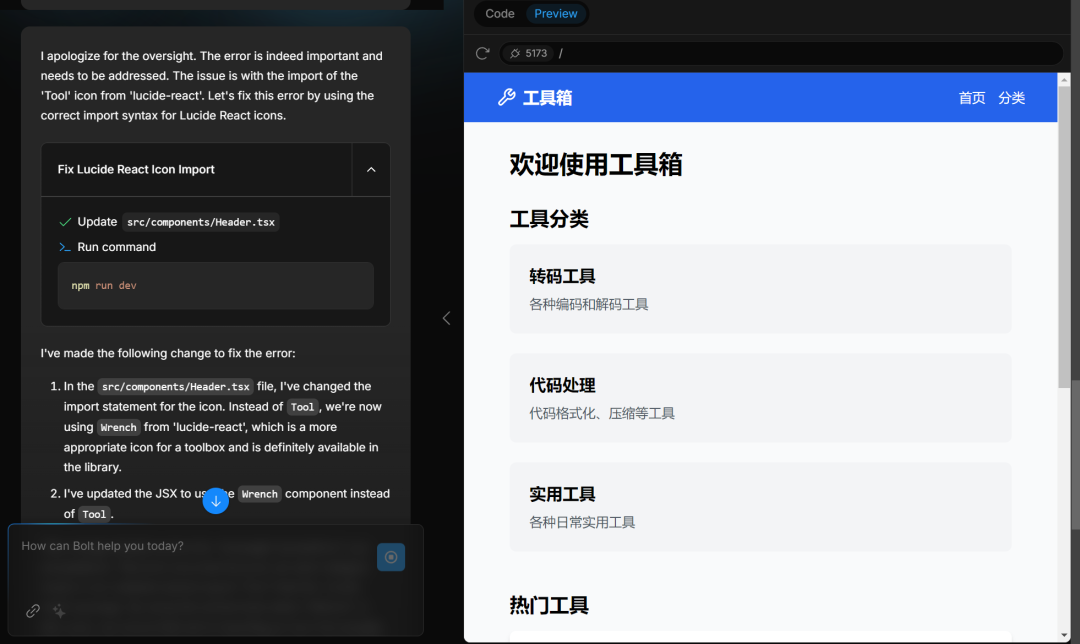
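As a sanity check for the GPU result later on, the sum of the integers in [0, 100) also has a closed form:

```latex
\sum_{i=0}^{99} i = \frac{99 \cdot 100}{2} = 4950
```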

2 Some basic CUDA concepts

In the one-dimensional case, the layout looks roughly like this:

(1 grid × 2 blocks × 4 threads per block)
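To make the layout concrete, the sketch below launches that same shape (2 blocks of 4 threads) and has every thread write its global index, computed as blockIdx.x * blockDim.x + threadIdx.x. The kernel name record_global_index and the host helper global_indices are made up for illustration.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Each thread stores its own global index at that position in out.
__global__ void record_global_index(int* out) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    out[tid] = tid;
}

// Hypothetical host helper: launches num_blocks x block_size threads and
// returns what they wrote. Returns an empty vector on any CUDA error.
std::vector<int> global_indices(int num_blocks, int block_size) {
    const int total = num_blocks * block_size;
    std::vector<int> host(total, -1);

    int* dev_out = nullptr;
    if (cudaMalloc(&dev_out, total * sizeof(int)) != cudaSuccess) {
        return {};
    }

    record_global_index<<<num_blocks, block_size>>>(dev_out);

    // cudaMemcpy waits for the kernel to finish before copying.
    cudaError_t err = cudaMemcpy(host.data(), dev_out, total * sizeof(int),
                                 cudaMemcpyDeviceToHost);
    cudaFree(dev_out);
    if (err != cudaSuccess) {
        return {};
    }
    return host;
}

int main() {
    const int num_blocks = 2;   // blocks in the grid
    const int block_size = 4;   // threads per block
    std::vector<int> idx = global_indices(num_blocks, block_size);
    for (int i = 0; i < static_cast<int>(idx.size()); ++i) {
        printf("block %d, thread %d -> global index %d\n",
               i / block_size, i % block_size, idx[i]);
    }
    return 0;
}
```

For example, thread 1 of block 1 lands on global index 1 * 4 + 1 = 5.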

3 Straight to the code

I split the 100 elements across 20 blocks, with 5 threads per block.

int num_blocks = 20;

int block_size = data_len / num_blocks; // 100 / 20 = 5

sum_kernel<<<num_blocks, block_size>>>(sum, dev_c, data_len); // launch the kernel on the GPU
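The division 100 / 20 happens to be exact. When the element count is not a multiple of the block size, a common pattern is to fix the block size, round the block count up, and let the kernel skip out-of-range indices. The sketch below assumes a hypothetical helper blocks_for, a kernel named sum_kernel_guarded, and a block size of 32; none of these are in the original code.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Hypothetical helper: blocks needed to cover n elements (ceiling division).
int blocks_for(int n, int block_size) {
    return (n + block_size - 1) / block_size;
}

__global__ void sum_kernel_guarded(int* sum, const int* data, int n) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    if (tid < n) {                  // threads past the end of the array do nothing
        atomicAdd(sum, data[tid]);
    }
}

// Sums 0..n-1 on the GPU (n must be positive). Returns -1 on any CUDA error.
int gpu_sum_range(int n, int block_size) {
    std::vector<int> host(n);
    for (int i = 0; i < n; ++i) host[i] = i;

    int* dev_data = nullptr;
    int* dev_sum = nullptr;
    int result = -1;
    if (cudaMalloc(&dev_data, n * sizeof(int)) == cudaSuccess &&
        cudaMalloc(&dev_sum, sizeof(int)) == cudaSuccess &&
        cudaMemcpy(dev_data, host.data(), n * sizeof(int),
                   cudaMemcpyHostToDevice) == cudaSuccess &&
        cudaMemset(dev_sum, 0, sizeof(int)) == cudaSuccess) {
        sum_kernel_guarded<<<blocks_for(n, block_size), block_size>>>(dev_sum, dev_data, n);
        if (cudaMemcpy(&result, dev_sum, sizeof(int),
                       cudaMemcpyDeviceToHost) != cudaSuccess) {
            result = -1;
        }
    }
    cudaFree(dev_data);             // cudaFree on a null pointer is a no-op
    cudaFree(dev_sum);
    return result;
}

int main() {
    const int n = 100;
    const int block_size = 32;      // assumed value, not from the original post
    printf("blocks needed: %d\n", blocks_for(n, block_size));
    printf("sum = %d\n", gpu_sum_range(n, block_size));
    return 0;
}
```

With 100 elements and 32 threads per block, four blocks are launched (128 threads), and the last 28 threads fail the tid < n check and exit without touching memory.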

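The kernel does the addition. Each thread first computes its global index:

int tid = blockIdx.x * blockDim.x + threadIdx.x; // blockDim.x = 5

Then it adds its element with an atomic add. atomicAdd performs the read-modify-write as one indivisible operation, much like taking a lock on that address, so concurrent updates from different threads are not lost.

atomicAdd(sum, data[tid]);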
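To see why the atomic matters, the following sketch counts n threads twice: once with atomicAdd and once with a plain increment. The kernel names racy_count and atomic_count, the helper run_count, and the sizes are assumptions chosen for illustration. The plain version contains a data race on purpose, so its result is not guaranteed and may differ between runs and GPUs.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Intentionally wrong: an unsynchronized read-modify-write, so updates
// from different threads can overwrite each other.
__global__ void racy_count(int* counter, int n) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    if (tid < n) {
        *counter += 1;
    }
}

// Correct: each increment is applied as one indivisible operation.
__global__ void atomic_count(int* counter, int n) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    if (tid < n) {
        atomicAdd(counter, 1);
    }
}

// Hypothetical helper: runs one of the kernels with n threads and returns
// the final counter value, or -1 on any CUDA error.
int run_count(bool use_atomic, int n) {
    int* dev_counter = nullptr;
    int result = -1;
    if (cudaMalloc(&dev_counter, sizeof(int)) != cudaSuccess) {
        return -1;
    }
    if (cudaMemset(dev_counter, 0, sizeof(int)) == cudaSuccess) {
        const int block_size = 256;
        const int num_blocks = (n + block_size - 1) / block_size;
        if (use_atomic) {
            atomic_count<<<num_blocks, block_size>>>(dev_counter, n);
        } else {
            racy_count<<<num_blocks, block_size>>>(dev_counter, n);
        }
        if (cudaMemcpy(&result, dev_counter, sizeof(int),
                       cudaMemcpyDeviceToHost) != cudaSuccess) {
            result = -1;
        }
    }
    cudaFree(dev_counter);
    return result;
}

int main() {
    const int n = 1 << 16;
    printf("expected        : %d\n", n);
    printf("with atomicAdd  : %d\n", run_count(true, n));
    printf("without atomics : %d (data race, value not guaranteed)\n",
           run_count(false, n));
    return 0;
}
```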

3 Complete code

#include <stdio.h>

#include <stdlib.h>

#include <cuda_runtime.h>

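__global__ void sum_kernel(int* sum, const int* data, int n) {

    // Global index of this thread across the whole grid.
    int tid = blockIdx.x * blockDim.x + threadIdx.x;

    // Total number of threads in the grid (only printed here).
    int stride = gridDim.x * blockDim.x;

    printf("stride=%d, blockIdx.x blockDim.x threadIdx.x [%d, %d, %d] \n", stride,blockIdx.x,blockDim.x,threadIdx.x);

    // Skip threads that fall past the end of the array.
    if (tid < n) {

        atomicAdd(sum, data[tid]);

        // *sum is read without synchronization, so the printed value is only a snapshot.
        printf("data[%d] = %d sum in kernel: %d\n",tid,data[tid],*sum);

    }

}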

int main() {

    const int data_len = 100;

    int* dev_c = nullptr;  // input data on the device

    int* sum = nullptr;    // single-int accumulator on the device

    cudaError_t cudaStatus;

    cudaStatus = cudaSetDevice(0);

    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "Failed to select GPU 0; is a CUDA-capable GPU available?\n");
        return 1;
    }

    // The accumulator needs room for one int only.
    if (cudaMalloc((void**)&dev_c, data_len * sizeof(int)) != cudaSuccess ||
        cudaMalloc((void**)&sum, sizeof(int)) != cudaSuccess) {
        fprintf(stderr, "cudaMalloc failed\n");
        return 1;
    }

    // cudaMalloc does not zero memory, so clear the accumulator before adding to it.
    cudaMemset(sum, 0, sizeof(int));

    int data_cpu[data_len];

    for (int i = 0; i < data_len; ++i) {
        data_cpu[i] = i;
    }

    cudaStatus = cudaMemcpy(dev_c, data_cpu, sizeof(int) * data_len, cudaMemcpyHostToDevice);

    if (cudaStatus != cudaSuccess) {
        fprintf(stderr, "Failed to copy data to dev_c\n");
        return 1;
    }

    int num_blocks = 20;

    int block_size = data_len / num_blocks;  // 100 / 20 = 5 threads per block

    sum_kernel<<<num_blocks, block_size>>>(sum, dev_c, data_len);

    int result = 0;

    // This copy waits for the kernel to finish before reading sum.
    cudaMemcpy(&result, sum, sizeof(int), cudaMemcpyDeviceToHost);

    printf("sum = %d\n", result);

    cudaFree(dev_c);

    cudaFree(sum);

    return 0;

}
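The kernel above computes stride but only prints it. One common use for that value is a grid-stride loop, which lets a grid of fixed size cover an array of any length and needs only one atomicAdd per thread. This is a sketch of that pattern, not the original author's method; the names sum_kernel_strided and gpu_sum_strided and the launch shape of 4 blocks × 8 threads are assumptions.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

__global__ void sum_kernel_strided(int* sum, const int* data, int n) {
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    int stride = gridDim.x * blockDim.x;   // total threads in the grid

    // Each thread handles elements tid, tid + stride, tid + 2 * stride, ...
    int local = 0;
    for (int i = tid; i < n; i += stride) {
        local += data[i];
    }
    atomicAdd(sum, local);                 // one atomic per thread
}

// Hypothetical host helper: sums a non-empty vector on the GPU.
// Returns -1 on any CUDA error.
int gpu_sum_strided(const std::vector<int>& host) {
    const int n = static_cast<int>(host.size());
    int* dev_data = nullptr;
    int* dev_sum = nullptr;
    int result = -1;
    if (cudaMalloc(&dev_data, n * sizeof(int)) == cudaSuccess &&
        cudaMalloc(&dev_sum, sizeof(int)) == cudaSuccess &&
        cudaMemcpy(dev_data, host.data(), n * sizeof(int),
                   cudaMemcpyHostToDevice) == cudaSuccess &&
        cudaMemset(dev_sum, 0, sizeof(int)) == cudaSuccess) {
        // 4 blocks of 8 threads = 32 threads, fewer than the 100 elements.
        sum_kernel_strided<<<4, 8>>>(dev_sum, dev_data, n);
        if (cudaMemcpy(&result, dev_sum, sizeof(int),
                       cudaMemcpyDeviceToHost) != cudaSuccess) {
            result = -1;
        }
    }
    cudaFree(dev_data);                    // cudaFree on a null pointer is a no-op
    cudaFree(dev_sum);
    return result;
}

int main() {
    std::vector<int> data(100);
    for (int i = 0; i < 100; ++i) data[i] = i;
    printf("sum = %d\n", gpu_sum_strided(data));
    return 0;
}
```

With 32 threads and 100 elements, thread 0 adds elements 0, 32, 64 and 96 locally, so the global counter sees 32 atomic updates instead of 100.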

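4 Output

Blocks are not scheduled in any fixed order, so the order of the lines can differ from run to run. The "sum in kernel" values are unsynchronized snapshots of the running total; only the final sum line is deterministic.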

stride=100, blockIdx.x blockDim.x threadIdx.x [6, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [6, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [6, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [6, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [6, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [18, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [18, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [18, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [18, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [18, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [2, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [2, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [2, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [2, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [2, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [9, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [9, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [9, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [9, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [9, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [8, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [8, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [8, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [8, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [8, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [14, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [14, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [14, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [14, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [14, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [17, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [17, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [17, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [17, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [17, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [11, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [11, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [11, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [11, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [11, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [5, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [5, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [5, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [5, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [5, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [3, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [3, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [3, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [3, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [3, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [15, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [15, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [15, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [15, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [15, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [0, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [0, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [0, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [0, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [0, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [12, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [12, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [12, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [12, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [12, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [7, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [7, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [7, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [7, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [7, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [19, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [19, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [19, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [19, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [19, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [10, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [10, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [10, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [10, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [10, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [4, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [4, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [4, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [4, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [4, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [16, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [16, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [16, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [16, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [16, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [1, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [1, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [1, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [1, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [1, 5, 4]

stride=100, blockIdx.x blockDim.x threadIdx.x [13, 5, 0]

stride=100, blockIdx.x blockDim.x threadIdx.x [13, 5, 1]

stride=100, blockIdx.x blockDim.x threadIdx.x [13, 5, 2]

stride=100, blockIdx.x blockDim.x threadIdx.x [13, 5, 3]

stride=100, blockIdx.x blockDim.x threadIdx.x [13, 5, 4]

data[25] = 25 sum in kernel: 135

data[26] = 26 sum in kernel: 135

data[27] = 27 sum in kernel: 135

data[28] = 28 sum in kernel: 135

data[29] = 29 sum in kernel: 135

data[40] = 40 sum in kernel: 345

data[41] = 41 sum in kernel: 345

data[42] = 42 sum in kernel: 345

data[43] = 43 sum in kernel: 345

data[44] = 44 sum in kernel: 345

data[45] = 45 sum in kernel: 740

data[46] = 46 sum in kernel: 740

data[47] = 47 sum in kernel: 740

data[48] = 48 sum in kernel: 740

data[49] = 49 sum in kernel: 740

data[30] = 30 sum in kernel: 740

data[31] = 31 sum in kernel: 740

data[32] = 32 sum in kernel: 740

data[33] = 33 sum in kernel: 740

data[34] = 34 sum in kernel: 740

data[50] = 50 sum in kernel: 1110

data[51] = 51 sum in kernel: 1110

data[52] = 52 sum in kernel: 1110

data[53] = 53 sum in kernel: 1110

data[54] = 54 sum in kernel: 1110

data[85] = 85 sum in kernel: 1545

data[86] = 86 sum in kernel: 1545

data[87] = 87 sum in kernel: 1545

data[88] = 88 sum in kernel: 1545

data[89] = 89 sum in kernel: 1545

data[55] = 55 sum in kernel: 1830

data[56] = 56 sum in kernel: 1830

data[57] = 57 sum in kernel: 1830

data[58] = 58 sum in kernel: 1830

data[59] = 59 sum in kernel: 1830

data[20] = 20 sum in kernel: 1110

data[21] = 21 sum in kernel: 1110

data[22] = 22 sum in kernel: 1110

data[23] = 23 sum in kernel: 1110

data[24] = 24 sum in kernel: 1110

data[90] = 90 sum in kernel: 2290

data[91] = 91 sum in kernel: 2290

data[92] = 92 sum in kernel: 2290

data[93] = 93 sum in kernel: 2290

data[94] = 94 sum in kernel: 2290

data[10] = 10 sum in kernel: 3155

data[11] = 11 sum in kernel: 3155

data[12] = 12 sum in kernel: 3155

data[13] = 13 sum in kernel: 3155

data[14] = 14 sum in kernel: 3155

data[15] = 15 sum in kernel: 3155

data[16] = 16 sum in kernel: 3155

data[17] = 17 sum in kernel: 3155

data[18] = 18 sum in kernel: 3155

data[19] = 19 sum in kernel: 3155

data[60] = 60 sum in kernel: 3155

data[61] = 61 sum in kernel: 3155

data[62] = 62 sum in kernel: 3155

data[63] = 63 sum in kernel: 3155

data[64] = 64 sum in kernel: 3155

data[80] = 80 sum in kernel: 2700

data[81] = 81 sum in kernel: 2700

data[82] = 82 sum in kernel: 2700

data[83] = 83 sum in kernel: 2700

data[84] = 84 sum in kernel: 2700

data[95] = 95 sum in kernel: 3675

data[96] = 96 sum in kernel: 3675

data[97] = 97 sum in kernel: 3675

data[98] = 98 sum in kernel: 3675

data[99] = 99 sum in kernel: 3675

data[5] = 5 sum in kernel: 3190

data[6] = 6 sum in kernel: 3190

data[7] = 7 sum in kernel: 3190

data[8] = 8 sum in kernel: 3190

data[9] = 9 sum in kernel: 3190

data[70] = 70 sum in kernel: 4035

data[71] = 71 sum in kernel: 4035

data[72] = 72 sum in kernel: 4035

data[73] = 73 sum in kernel: 4035

data[74] = 74 sum in kernel: 4035

data[75] = 75 sum in kernel: 4615

data[76] = 76 sum in kernel: 4615

data[77] = 77 sum in kernel: 4615

data[78] = 78 sum in kernel: 4615

data[79] = 79 sum in kernel: 4615

data[0] = 0 sum in kernel: 4615

data[1] = 1 sum in kernel: 4615

data[2] = 2 sum in kernel: 4615

data[3] = 3 sum in kernel: 4615

data[4] = 4 sum in kernel: 4615

data[35] = 35 sum in kernel: 4615

data[36] = 36 sum in kernel: 4615

data[37] = 37 sum in kernel: 4615

data[38] = 38 sum in kernel: 4615

data[39] = 39 sum in kernel: 4615

data[65] = 65 sum in kernel: 4950

data[66] = 66 sum in kernel: 4950

data[67] = 67 sum in kernel: 4950

data[68] = 68 sum in kernel: 4950

data[69] = 69 sum in kernel: 4950

sum = 4950
