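For a single image, it is enough to pass it through convolutional and fully connected layers to get the classification result directly.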

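Video is different. Early works took one of two routes:

- Extract some key frames, pass each frame through the network, and merge the results at the end.
- Stack the frames and feed them into the network together, fusing them inside the network via early fusion or late fusion.

None of these worked particularly well. They could not beat hand-crafted features combined with classical machine learning.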

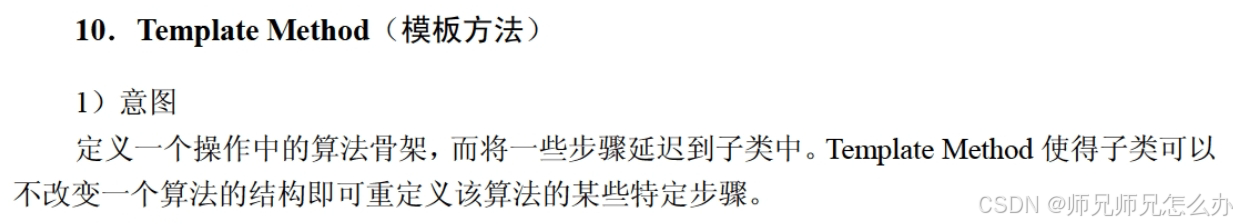

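The authors argue that convolution performed poorly in these early works because it is good at learning local features but not at learning the motion dynamics in a video. If the network is bad at learning this, the fix is to stop asking it to. Instead, add a module that extracts the dynamics explicitly: here a motion extractor, namely optical flow. The ConvNet then only has to learn the mapping from the input optical flow to the final action class. Learning such a mapping between inputs and outputs is exactly what neural networks are good at.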

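The authors call the upper ConvNet in the figure the spatial stream ConvNet and the lower one the temporal stream ConvNet. The two streams' class probabilities are combined by a weighted average to give the final prediction.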
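
A minimal sketch of this averaging-based late fusion. It assumes each stream outputs one softmax vector per video. The logits below are made-up placeholders, not results from the paper. The weight `w_spatial` is a hypothetical parameter, and `w_spatial = 0.5` gives a plain average.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def late_fusion_average(spatial_probs, temporal_probs, w_spatial=0.5):
    """Weighted average of the two streams' class probabilities.

    w_spatial is a hypothetical knob; 0.5 corresponds to a plain average.
    """
    spatial_probs = np.asarray(spatial_probs, dtype=float)
    temporal_probs = np.asarray(temporal_probs, dtype=float)
    return w_spatial * spatial_probs + (1.0 - w_spatial) * temporal_probs

# Placeholder logits for a 3-class problem (illustrative only).
spatial = softmax(np.array([2.0, 1.0, 0.1]))    # spatial stream favours class 0
temporal = softmax(np.array([0.5, 2.5, 0.2]))   # temporal stream favours class 1
fused = late_fusion_average(spatial, temporal)
predicted_class = int(np.argmax(fused))
```

Because both inputs are probability vectors and the weights sum to 1, the fused vector is again a probability distribution. In this toy case the confident temporal stream tips the decision to class 1.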

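The left image overlays two consecutive frames. The background does not change and only the person moves, so the extracted optical flow is nonzero only on the person and black everywhere else. This is how motion is captured. Brighter regions indicate larger motion.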
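
The "brighter means larger motion" reading can be reproduced by mapping the per-pixel displacement magnitude to grayscale. This is a sketch under stated assumptions: the flow field `(dx, dy)` is already computed, and normalising to the frame's maximum is our own choice. Many flow visualisations instead use HSV colour coding for direction.

```python
import numpy as np

def flow_magnitude_image(dx: np.ndarray, dy: np.ndarray) -> np.ndarray:
    """Map a dense flow field to an 8-bit grayscale image.

    Brightness is proportional to the displacement magnitude, so static
    background (zero flow) is black and the fastest-moving pixels are white.
    """
    magnitude = np.sqrt(dx.astype(float) ** 2 + dy.astype(float) ** 2)
    peak = magnitude.max()
    if peak == 0:  # completely static scene: all black
        return np.zeros(magnitude.shape, dtype=np.uint8)
    return np.round(255.0 * magnitude / peak).astype(np.uint8)

# Toy 4x4 field: only a 2x2 "person" region moves, the background is static.
dx = np.zeros((4, 4))
dy = np.zeros((4, 4))
dx[1:3, 1:3] = 3.0
dy[1:3, 1:3] = 4.0   # magnitude 5 inside the moving region
img = flow_magnitude_image(dx, dy)
```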

Abstract

We investigate architectures of discriminatively trained deep Convolutional Networks (ConvNets) for action recognition in video. The challenge is to capture the complementary information on appearance from still frames and motion between frames. We also aim to generalise the best performing hand-crafted features within a data-driven learning framework. Our contribution is three-fold. First, we propose a two-stream ConvNet architecture which incorporates spatial and temporal networks. Second, we demonstrate that a ConvNet trained on multi-frame dense optical flow is able to achieve very good performance in spite of limited training data. Finally, we show that multi-task learning, applied to two different action classification datasets, can be used to increase the amount of training data and improve the performance on both. Our architecture is trained and evaluated on the standard video actions benchmarks of UCF-101 and HMDB-51, where it is competitive with the state of the art. It also exceeds by a large margin previous attempts to use deep nets for video classification.

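Summary:

The difficulty of the task lies in capturing two kinds of information. The first is static appearance, such as object shape, size, colour and the scene. The second is dynamic motion.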

Introduction

Recognition of human actions in videos is a challenging task which has received a significant amount of attention in the research community [11, 14, 17, 26]. Compared to still image classification, the temporal component of videos provides an additional (and important) clue for recognition, as a number of actions can be reliably recognised based on the motion information. Additionally, video provides natural data augmentation (jittering) for single image (video frame) classification.

In this work, we aim at extending deep Convolutional Networks (ConvNets) [19], a state-of-the-art still image representation [15], to action recognition in video data. This task has recently been addressed in [14] by using stacked video frames as input to the network, but the results were significantly worse than those of the best hand-crafted shallow representations [20, 26]. We investigate a different architecture based on two separate recognition streams (spatial and temporal), which are then combined by late fusion. The spatial stream performs action recognition from still video frames, whilst the temporal stream is trained to recognise action from motion in the form of dense optical flow. Both streams are implemented as ConvNets. Decoupling the spatial and temporal nets also allows us to exploit the availability of large amounts of annotated image data by pre-training the spatial net on the ImageNet challenge dataset [1]. Our proposed architecture is related to the two-streams hypothesis [9], according to which the human visual cortex contains two pathways: the ventral stream (which performs object recognition) and the dorsal stream (which recognises motion); though we do not investigate this connection any further here.

The rest of the paper is organised as follows. In Sect. 1.1 we review the related work on action recognition using both shallow and deep architectures. In Sect. 2 we introduce the two-stream architecture and specify the Spatial ConvNet. Sect. 3 introduces the Temporal ConvNet and in particular how it generalizes the previous architectures reviewed in Sect. 1.1. A multi-task learning framework is developed in Sect. 4 in order to allow effortless combination of training data over multiple datasets. Implementation details are given in Sect. 5, and the performance is evaluated in Sect. 6 and compared to the state of the art. Our experiments on two challenging datasets (UCF-101 [24] and HMDB-51 [16]) show that the two recognition streams are complementary, and our deep architecture significantly outperforms that of [14] and is competitive with the state of the art shallow representations [20, 21, 26] in spite of being trained on relatively small datasets.

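Summary:

The frames of a video act as a more natural, and better, form of data augmentation than artificially augmenting a single image.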

Related work

Video recognition research has been largely driven by the advances in image recognition methods, which were often adapted and extended to deal with video data. A large family of video action recognition methods is based on shallow high-dimensional encodings of local spatio-temporal features. For instance, the algorithm of [17] consists in detecting sparse spatio-temporal interest points, which are then described using local spatio-temporal features: Histogram of Oriented Gradients (HOG) [7] and Histogram of Optical Flow (HOF). The features are then encoded into the Bag Of Features (BoF) representation, which is pooled over several spatio-temporal grids (similarly to spatial pyramid pooling) and combined with an SVM classifier. In a later work [28], it was shown that dense sampling of local features outperforms sparse interest points.

Instead of computing local video features over spatio-temporal cuboids, state-of-the-art shallow video representations [20, 21, 26] make use of dense point trajectories. The approach, first introduced in [29], consists in adjusting local descriptor support regions, so that they follow dense trajectories, computed using optical flow. The best performance in the trajectory-based pipeline was achieved by the Motion Boundary Histogram (MBH) [8], which is a gradient-based feature, separately computed on the horizontal and vertical components of optical flow. A combination of several features was shown to further boost the accuracy. Recent improvements of trajectory-based hand-crafted representations include compensation of global (camera) motion [10, 16, 26], and the use of the Fisher vector encoding [22] (in [26]) or its deeper variant [23] (in [21]).

There has also been a number of attempts to develop a deep architecture for video recognition. In the majority of these works, the input to the network is a stack of consecutive video frames, so the model is expected to implicitly learn spatio-temporal motion-dependent features in the first layers, which can be a difficult task. In [11], an HMAX architecture for video recognition was proposed with pre-defined spatio-temporal filters in the first layer. Later, it was combined [16] with a spatial HMAX model, thus forming spatial (ventral-like) and temporal (dorsal-like) recognition streams. Unlike our work, however, the streams were implemented as hand-crafted and rather shallow (3 layer) HMAX models. In [4, 18, 25], a convolutional RBM and ISA were used for unsupervised learning of spatio-temporal features, which were then plugged into a discriminative model for action classification. Discriminative end-to-end learning of video ConvNets has been addressed in [12] and, more recently, in [14], who compared several ConvNet architectures for action recognition. Training was carried out on a very large Sports-1M dataset, comprising 1.1M YouTube videos of sports activities. Interestingly, [14] found that a network, operating on individual video frames, performs similarly to the networks, whose input is a stack of frames. This might indicate that the learnt spatio-temporal features do not capture the motion well. The learnt representation, fine-tuned on the UCF-101 dataset, turned out to be 20% less accurate than hand-crafted state-of-the-art trajectory-based representation [20, 27].

Our temporal stream ConvNet operates on multiple-frame dense optical flow, which is typically computed in an energy minimisation framework by solving for a displacement field (typically at multiple image scales). We used a popular method of [2], which formulates the energy based on constancy assumptions for intensity and its gradient, as well as smoothness of the displacement field. Recently, [30] proposed an image patch matching scheme, which is reminiscent of deep ConvNets, but does not incorporate learning.
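
The MBH descriptor mentioned above applies a HOG-style orientation histogram to the spatial gradients of each optical flow component separately. The sketch below is a heavily simplified, single-cell version. The single cell and the 8 orientation bins are assumptions for illustration; the real descriptor [8] pools over a grid of cells in a volume around each trajectory. The example also shows why MBH suppresses constant motion such as a camera pan: uniform flow has zero spatial gradient.

```python
import numpy as np

def orientation_histogram(channel: np.ndarray, n_bins: int = 8) -> np.ndarray:
    """Magnitude-weighted histogram of gradient orientations of one 2-D map."""
    gy, gx = np.gradient(channel.astype(float))   # d/drow (y), d/dcol (x)
    magnitude = np.hypot(gx, gy)
    angle = np.mod(np.arctan2(gy, gx), 2 * np.pi)  # orientation in [0, 2*pi)
    bins = np.minimum((angle / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    return np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=n_bins)

def mbh_descriptor(dx: np.ndarray, dy: np.ndarray, n_bins: int = 8) -> np.ndarray:
    """Simplified MBH: HOG-like histograms of the flow's x and y components.

    MBHx comes from the horizontal flow dx, MBHy from the vertical flow dy;
    the two are concatenated and L2-normalised.
    """
    mbh_x = orientation_histogram(dx, n_bins)
    mbh_y = orientation_histogram(dy, n_bins)
    desc = np.concatenate([mbh_x, mbh_y])
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc

# Uniform flow (e.g. a camera pan): no motion boundaries, zero descriptor.
uniform = mbh_descriptor(np.full((8, 8), 2.0), np.zeros((8, 8)))
# Right half moves, left half is static: a vertical motion boundary.
edge_dx = np.zeros((8, 8))
edge_dx[:, 4:] = 2.0
edge = mbh_descriptor(edge_dx, np.zeros((8, 8)))
```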

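
For reference, the optical flow method of [2] (Brox et al.), cited in the related work, minimises an energy of roughly the following two-frame form. This is a sketch of that method's published formulation, with notation chosen here:

- $I_1, I_2$ are consecutive frames.
- $\mathbf{d} = (u, v)$ is the displacement field.
- $\gamma$ weights the gradient-constancy term.
- $\alpha$ weights the smoothness term.
- $\Psi(s^2) = \sqrt{s^2 + \epsilon^2}$ is a robust penalty.

The first integral encodes the constancy assumptions for intensity and its gradient. The second encodes the smoothness of the displacement field. Minimisation is done coarse-to-fine over multiple image scales.

$$
E(u, v) = \int_\Omega \Psi\!\left(\lvert I_2(\mathbf{x}+\mathbf{d}) - I_1(\mathbf{x})\rvert^2 + \gamma\,\lvert \nabla I_2(\mathbf{x}+\mathbf{d}) - \nabla I_1(\mathbf{x})\rvert^2\right) d\mathbf{x} \;+\; \alpha \int_\Omega \Psi\!\left(\lvert \nabla u\rvert^2 + \lvert \nabla v\rvert^2\right) d\mathbf{x}
$$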

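Summary:

The local spatio-temporal feature learning of early works evolved into today's 3D networks, while the methods based on optical flow trajectories evolved into today's two-stream networks.

The so-called early fusion and late fusion approaches used before did not really extract temporal information. This is consistent with [14]'s finding that a single-frame network performs about as well as networks fed with stacked frames.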

Two-stream architecture for video recognition

Video can naturally be decomposed into spatial and temporal components. The spatial part, in the form of individual frame appearance, carries information about scenes and objects depicted in the video. The temporal part, in the form of motion across the frames, conveys the movement of the observer (the camera) and the objects. We devise our video recognition architecture accordingly, dividing it into two streams, as shown in Fig. 1. Each stream is implemented using a deep ConvNet, softmax scores of which are combined by late fusion. We consider two fusion methods: averaging and training a multi-class linear SVM [6] on stacked L2-normalised softmax scores as features.
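
The second fusion option above builds a feature vector per video from both streams' softmax scores. A minimal sketch of that feature construction, assuming the scores are arrays of shape `(n_videos, n_classes)`; the score values are placeholders. The function names are hypothetical. Training the SVM itself is left out, and one possible tool would be scikit-learn's `sklearn.svm.LinearSVC`, whose `fit(X, y)` trains a linear SVM (one-vs-rest by default).

```python
import numpy as np

def l2_normalise(v: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row (last axis) to unit L2 norm."""
    norm = np.linalg.norm(v, axis=-1, keepdims=True)
    return v / np.maximum(norm, eps)

def svm_fusion_features(spatial_scores, temporal_scores) -> np.ndarray:
    """Stack L2-normalised softmax scores of the two streams.

    Inputs: (n_videos, n_classes) each.
    Output: (n_videos, 2 * n_classes), the input features for a linear SVM.
    """
    s = l2_normalise(np.asarray(spatial_scores, dtype=float))
    t = l2_normalise(np.asarray(temporal_scores, dtype=float))
    return np.concatenate([s, t], axis=-1)

# Placeholder softmax scores for 2 videos and 3 classes (illustrative only).
spatial = np.array([[0.6, 0.3, 0.1], [0.2, 0.2, 0.6]])
temporal = np.array([[0.1, 0.8, 0.1], [0.3, 0.4, 0.3]])
features = svm_fusion_features(spatial, temporal)
```

Normalising each stream separately before stacking keeps one stream's scores from dominating the other purely because of scale.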

Spatial stream ConvNet operates on individual video frames, effectively performing action recognition from still images. The static appearance by itself is a useful clue, since some actions are strongly associated with particular objects. In fact, as will be shown in Sect. 6, action classification from still frames (the spatial recognition stream) is fairly competitive on its own. Since a spatial ConvNet is essentially an image classification architecture, we can build upon the recent advances in large-scale image recognition methods [15], and pre-train the network on a large image classification dataset, such as the ImageNet challenge dataset. The details are presented in Sect. 5. Next, we describe the temporal stream ConvNet, which exploits motion and significantly improves accuracy.

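Summary:

The two streams are fused in one of two ways. Either take a weighted average of their outputs, or use the post-softmax scores as features and train an SVM on them for classification.

The spatial stream ConvNet takes individual video frames as input.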

Optical flow ConvNets

ConvNet input configurations

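Panels of the figure:

- (a), (b): a pair of consecutive frames in which the person draws an arrow, moving the hand from back to front.
- (c): a visualisation of the optical flow, showing the direction of the person's movement.
- (d): the horizontal component of the flow displacement.
- (e): the vertical component of the flow displacement.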

An optical flow map has depth 2: one channel for the horizontal displacement and one for the vertical displacement.

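Number of optical flow maps = total number of frames - 1, since there is one map per pair of consecutive frames.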
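
The two facts above fix the array shapes: two channels per flow field, and one field per pair of consecutive frames. A sketch, assuming frames are stored as a numpy array. `compute_flow` is a hypothetical placeholder that returns zeros. In practice it would be replaced by a real dense flow routine, such as the method of [2] or OpenCV's `cv2.calcOpticalFlowFarneback` (a different algorithm).

```python
import numpy as np

def compute_flow(frame_a: np.ndarray, frame_b: np.ndarray) -> np.ndarray:
    """Hypothetical placeholder for a dense optical flow method.

    Returns an (H, W, 2) array: channel 0 = horizontal displacement dx,
    channel 1 = vertical displacement dy. This stub returns all zeros.
    """
    h, w = frame_a.shape[:2]
    return np.zeros((h, w, 2), dtype=np.float32)

def video_to_flows(frames: np.ndarray) -> np.ndarray:
    """One flow field per pair of consecutive frames.

    frames: (N, H, W) or (N, H, W, C)  ->  flows: (N - 1, H, W, 2)
    """
    return np.stack([compute_flow(frames[t], frames[t + 1])
                     for t in range(len(frames) - 1)])

video = np.random.rand(25, 64, 48)      # 25 toy grayscale frames
flows = video_to_flows(video)           # 24 flow fields
dx, dy = flows[..., 0], flows[..., 1]   # horizontal / vertical components
```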

The input to the temporal stream ConvNet is a stack of several consecutive optical flow maps.
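
A minimal sketch of this simple stacking ("optical flow stacking"). It assumes flows are stored as `(T, H, W, 2)` as in a typical pipeline. Following the paper's formulation, the `L` consecutive fields starting at frame `tau` are interleaved into `2L` channels: dx and dy of the first field, then dx and dy of the second, and so on. Every pixel is read at the same fixed location in all fields. The value of `L` is a free parameter here.

```python
import numpy as np

def stack_flows(flows: np.ndarray, tau: int, L: int) -> np.ndarray:
    """Optical flow stacking: L consecutive flow fields -> (H, W, 2L) volume.

    flows: (T, H, W, 2) with channel 0 = dx, channel 1 = dy.
    0-based channel 2k holds dx of field tau+k, channel 2k+1 holds dy,
    all sampled at the same pixel (u, v).
    """
    if tau < 0 or tau + L > len(flows):
        raise ValueError("not enough flow fields after tau")
    window = flows[tau:tau + L]                          # (L, H, W, 2)
    h, w = window.shape[1:3]
    # (L, H, W, 2) -> (H, W, L, 2) -> (H, W, 2L), giving dx/dy interleaving
    return window.transpose(1, 2, 0, 3).reshape(h, w, 2 * L)

flows = np.random.rand(24, 8, 8, 2)
volume = stack_flows(flows, tau=3, L=10)   # input volume for the temporal ConvNet
```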

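The left panel shows simple optical flow stacking; the right panel shows stacking along the optical flow trajectories. Counter-intuitively, the experiments show that the simpler left variant works better.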
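
Trajectory stacking, by contrast, does not read every flow field at the fixed starting pixel. Each successive field is sampled at the point reached by following the flow from that pixel. A sketch under our own simplifying assumptions: trajectory positions are rounded to the nearest pixel and clipped at the image border, where interpolation would also be a valid choice.

```python
import numpy as np

def trajectory_stack(flows: np.ndarray, tau: int, L: int) -> np.ndarray:
    """Trajectory stacking: sample each flow field along the motion trajectory.

    flows: (T, H, W, 2), channel 0 = dx (along columns), 1 = dy (along rows).
    Start at p_1 = (u, v); p_k = p_{k-1} + d_{tau+k-2}(p_{k-1}).
    The k-th channel pair holds d_{tau+k-1}(p_k).
    Positions are rounded to the nearest pixel and clipped (an assumption).
    """
    if tau < 0 or tau + L > len(flows):
        raise ValueError("not enough flow fields after tau")
    _, h, w, _ = flows.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    pos_r, pos_c = rows.astype(float), cols.astype(float)   # p_1 = (u, v)
    out = np.empty((h, w, 2 * L), dtype=flows.dtype)
    for k in range(L):
        r = np.clip(np.rint(pos_r), 0, h - 1).astype(int)
        c = np.clip(np.rint(pos_c), 0, w - 1).astype(int)
        d = flows[tau + k][r, c]      # flow of field tau+k sampled at p_k: (H, W, 2)
        out[..., 2 * k] = d[..., 0]
        out[..., 2 * k + 1] = d[..., 1]
        pos_c = pos_c + d[..., 0]     # move along the trajectory
        pos_r = pos_r + d[..., 1]
    return out

flows = np.zeros((2, 1, 4, 2))
flows[0, :, :, 0] = 1.0                       # field 0: everything moves 1 px right
flows[1, 0, :, 0] = [10.0, 20.0, 30.0, 40.0]  # field 1: dx varies by column
traj = trajectory_stack(flows, tau=0, L=2)
```

With simple stacking, channel 2 would read field 1 at the original columns, giving `[10, 20, 30, 40]`. Trajectory stacking reads it one pixel to the right, where the points have moved, and the last column is clipped at the border.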

The authors also experimented with bidirectional optical flow.
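
One way to build a bidirectional input while keeping `2L` channels is to stack `L/2` forward fields after frame `tau` and `L/2` backward fields before it. A sketch under hypothetical conventions, labelled as assumptions in the code:

- `fwd[t]` is the flow from frame t to frame t+1.
- `bwd[t]` is the flow from frame t+1 back to frame t.
- Forward fields are placed before backward ones.

```python
import numpy as np

def bidirectional_stack(fwd: np.ndarray, bwd: np.ndarray,
                        tau: int, L: int) -> np.ndarray:
    """Stack L/2 forward and L/2 backward flow fields into (H, W, 2L).

    Assumed (hypothetical) conventions, both arrays of shape (T, H, W, 2):
      fwd[t] = flow from frame t to frame t+1,
      bwd[t] = flow from frame t+1 back to frame t.
    Forward fields for frames tau..tau+L/2 come first, then backward fields
    for frames tau-L/2..tau, each interleaved as dx, dy.
    """
    if L % 2:
        raise ValueError("L must be even")
    half = L // 2
    if tau - half < 0 or tau + half > len(fwd):
        raise ValueError("window falls outside the video")
    window = np.concatenate([fwd[tau:tau + half], bwd[tau - half:tau]])  # (L, H, W, 2)
    h, w = window.shape[1:3]
    return window.transpose(1, 2, 0, 3).reshape(h, w, 2 * L)

fwd = np.ones((24, 8, 8, 2))    # toy forward flows
bwd = -np.ones((24, 8, 8, 2))   # toy backward flows
vol = bidirectional_stack(fwd, bwd, tau=10, L=10)
```

The channel count stays at `2L`, the same as unidirectional stacking, so the temporal ConvNet's first layer does not need to change.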

Conclusions and directions for improvement

We proposed a deep video classification model with competitive performance, which incorporates separate spatial and temporal recognition streams based on ConvNets. Currently it appears that training a temporal ConvNet on optical flow (as here) is significantly better than training on raw stacked frames [14]. The latter is probably too challenging, and might require architectural changes (for example, a combination with the deep matching approach of [30]). Despite using optical flow as input, our temporal model does not require significant hand-crafting, since the flow is computed using a method based on the generic assumptions of constancy and smoothness.

As we have shown, extra training data is beneficial for our temporal ConvNet, so we are planning to train it on large video datasets, such as the recently released collection of [14]. This, however, poses a significant challenge on its own due to the gigantic amount of training data (multiple TBs).

There still remain some essential ingredients of the state-of-the-art shallow representation [26], which are missed in our current architecture. The most prominent one is local feature pooling over spatio-temporal tubes, centered at the trajectories. Even though the input (2) captures the optical flow along the trajectories, the spatial pooling in our network does not take the trajectories into account. Another potential area of improvement is explicit handling of camera motion, which in our case is compensated by mean displacement subtraction.
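
Mean displacement subtraction can be sketched in a few lines. A global camera pan adds roughly the same vector to every pixel, so subtracting each flow field's mean vector (separately for dx and dy) removes most of it. The toy field below is made up for illustration.

```python
import numpy as np

def subtract_mean_flow(flow: np.ndarray) -> np.ndarray:
    """Crude camera-motion compensation: remove the field's mean displacement.

    flow: (H, W, 2). The mean is taken over all pixels, separately for the
    dx and dy channels, and subtracted from every pixel.
    """
    return flow - flow.mean(axis=(0, 1), keepdims=True)

# Camera pans by (+2, 0) everywhere; a 2x2 object additionally moves by (+3, +1).
flow = np.zeros((4, 4, 2))
flow[..., 0] = 2.0
flow[1:3, 1:3] += np.array([3.0, 1.0])
compensated = subtract_mean_flow(flow)
```

The background does not become exactly zero, because the moving object also shifts the mean. Here the background ends up at (-0.75, -0.25) and the object at (2.25, 0.75). This crudeness is why explicit camera-motion handling is listed above as a possible improvement.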
