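1. Background and preparation

This follows on from the previous post, "[Image Search Code Implementation] - Dog Image Search Example", which ended with a list of things that could be improved. One of them was training the network on your own dataset instead of relying only on a pretrained model, and that is what this post does.

Fine-tuning a resnet18 network on 11 dog breeds:

1. Data preparation

[Dataset] 11 dog breeds, 1089 images in total

Link: Baidu Netdisk link

Extraction code: qlrt

2. Splitting the dataset

The images are split into train and val at an 8:2 ratio. The splitting code is as follows: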

```python
#!/usr/bin/env python
# -*- coding: UTF-8 -*-
'''
@Project :ImageRec
@File    :split_data.py
@IDE     :PyCharm
@Author  :菜菜2024
@Date    :2024/9/30
'''
import os
import shutil
import random


def split_images_into_train_test(source_directory, train_directory, val_directory, train_ratio=0.8):
    """
    Split the images in the source folder into a training set and a validation set
    at the given ratio, and copy them into the train and val folders respectively.
    """
    # Make sure the train and val directories exist; create them if they do not
    os.makedirs(train_directory, exist_ok=True)
    os.makedirs(val_directory, exist_ok=True)

    # List all image files in the source folder
    image_files = [f for f in os.listdir(source_directory) if os.path.isfile(os.path.join(source_directory, f))]
    image_files = [f for f in image_files if f.lower().endswith(('.png', '.jpg', '.jpeg', '.gif', '.bmp'))]

    # Shuffle the list of image files
    random.shuffle(image_files)

    total_images = len(image_files)
    train_images_count = int(train_ratio * total_images)

    # Copy each image into the corresponding folder
    for i, image_file in enumerate(image_files):
        source_path = os.path.join(source_directory, image_file)
        if i < train_images_count:
            dest_path = os.path.join(train_directory, image_file)
        else:
            dest_path = os.path.join(val_directory, image_file)
        shutil.copy2(source_path, dest_path)
        print(f"Copied {image_file} to {os.path.dirname(dest_path)}")


if __name__ == '__main__':
    source_directory = "E:\\xxx\\datas\\imgs"
    train_directory = "E:\\xxx\\datas\\pet_dog\\train"
    val_directory = "E:\\xxx\\datas\\pet_dog\\val"

    # Each subfolder of source_directory holds one breed; split every breed separately
    file_list = os.listdir(source_directory)
    for file in file_list:
        source = os.path.join(source_directory, file)
        if not os.path.isdir(source):
            continue  # skip stray files at the top level
        val = os.path.join(val_directory, file)
        train = os.path.join(train_directory, file)
        split_images_into_train_test(source, train, val)
```

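Final result:

The directory structure under train and val is the same (one subfolder per breed), as shown in the figure below; only the number of images differs.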
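One way to check that the split came out close to 8:2 for every breed is to count the images in each subfolder. The sketch below is not part of the original scripts; the helper names `count_images_per_class` and `summarize_split` are hypothetical, and it assumes the train/val layout produced by split_data.py (one subfolder per breed).

```python
import os

# Same extensions that split_data.py treats as images
IMAGE_EXTENSIONS = ('.png', '.jpg', '.jpeg', '.gif', '.bmp')


def count_images_per_class(split_directory):
    """Return {class_name: image_count} for one split folder (train or val)."""
    counts = {}
    for class_name in sorted(os.listdir(split_directory)):
        class_path = os.path.join(split_directory, class_name)
        if not os.path.isdir(class_path):
            continue  # ignore stray files next to the class folders
        counts[class_name] = sum(
            1 for f in os.listdir(class_path)
            if f.lower().endswith(IMAGE_EXTENSIONS)
        )
    return counts


def summarize_split(train_directory, val_directory):
    """Return {class_name: (train_count, val_count, train_fraction)}."""
    train_counts = count_images_per_class(train_directory)
    val_counts = count_images_per_class(val_directory)
    summary = {}
    for class_name in sorted(set(train_counts) | set(val_counts)):
        n_train = train_counts.get(class_name, 0)
        n_val = val_counts.get(class_name, 0)
        total = n_train + n_val
        fraction = n_train / total if total else 0.0
        summary[class_name] = (n_train, n_val, fraction)
    return summary
```

Calling `summarize_split(train_directory, val_directory)` with the two paths from split_data.py returns one tuple per breed. Because the script uses `int(train_ratio * total_images)`, the train fraction can fall slightly below 0.8 for breeds whose image count is not a multiple of 5.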

2. Code implementation

```python
#!/usr/bin/env python
# -*- coding: UTF-8 -*-
'''
@Project :ImageRec
@File    :train.py
@IDE     :PyCharm
@Author  :菜菜2024
@Date    :2024/9/30
'''
import torch
import os
import torch.optim as optim
from torch.optim import lr_scheduler
from torchvision import datasets, transforms, models
from torch.utils.data import DataLoader
import argparse


def train_model(model, dataloaders, criterion, optimizer, scheduler, num_epochs=5):
    """
    Args:
        model: torch.nn.Module - the model instance to train.
        dataloaders: dict - data loaders for the training and validation sets,
            e.g. {'train': train_loader, 'val': val_loader}.
        criterion: nn.Module - the function used to compute the loss.
        optimizer: torch.optim.Optimizer - the optimizer that updates the model parameters.
        scheduler: torch.optim.lr_scheduler._LRScheduler - the learning-rate scheduler.
        num_epochs: int - total number of training epochs.
    """
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)

    for epoch in range(num_epochs):
        print(f"Epoch {epoch + 1}/{num_epochs}")

        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # put the model in training mode
            else:
                model.eval()  # put the model in evaluation mode

            running_loss = 0.0
            running_corrects = 0
            i = 0

            for inputs, labels in dataloaders[phase]:
                i += 1
                inputs, labels = inputs.to(device), labels.to(device)

                # Forward pass (gradients are tracked only in the training phase)
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    loss = criterion(outputs, labels)
                    if i % 10 == 0:
                        print(f"{phase} Loss: {loss.item():.4f}")
                    _, preds = torch.max(outputs, 1)

                    # Backward pass and optimization (training phase only)
                    if phase == 'train':
                        optimizer.zero_grad()
                        loss.backward()
                        optimizer.step()

                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)
            print(f"{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}")

    print("Training complete.")

    # After training, save the model's state dict (which holds the weights)
    os.makedirs('./weights', exist_ok=True)  # torch.save fails if the folder is missing
    torch.save(model.state_dict(), './weights/resnet18_dog.pth')


def main():
    # Create the argument parser
    parser = argparse.ArgumentParser(description='Train resnet18 on your own dataset')

    # Add arguments (raw string so the backslashes in the Windows path are kept literally)
    parser.add_argument('--data_dir', type=str, default=r"E:\HWR_files\datas\pet_dog",
                        help='Path to the dataset directory')
    parser.add_argument('--batch_size', type=int, default=16, help='Input batch size for training (default: 16)')
    parser.add_argument('--num_workers', type=int, default=2, help='Number of workers for data loading (default: 2)')
    parser.add_argument('--learning_rate', type=float, default=0.001, help='Learning rate (default: 0.001)')
    parser.add_argument('--num_epochs', type=int, default=5, help='Number of epochs to train (default: 5)')
    args = parser.parse_args()

    # Data preprocessing and loading
    data_transforms = {
        'train': transforms.Compose([
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        'val': transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
    }

    image_datasets = {x: datasets.ImageFolder(os.path.join(args.data_dir, x), data_transforms[x])
                      for x in ['train', 'val']}
    dataloaders_dict = {
        x: DataLoader(image_datasets[x], batch_size=args.batch_size, shuffle=True, num_workers=args.num_workers)
        for x in ['train', 'val']}

    # Use the ResNet18 model with ImageNet weights.
    # On torchvision >= 0.13 the equivalent call is weights=models.ResNet18_Weights.DEFAULT.
    model = models.resnet18(pretrained=True)

    # Load previously saved weights
    # model.load_state_dict(torch.load('./weights/resnet18_dog.pth'))

    num_features = model.fc.in_features
    # Replace the final fully connected layer so it outputs one score per class
    model.fc = torch.nn.Linear(num_features, len(image_datasets['train'].classes))

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = model.to(device)

    # Define the loss function, optimizer, and learning-rate scheduler
    criterion = torch.nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=args.learning_rate, momentum=0.9)
    scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

    # Train the model
    train_model(model, dataloaders_dict, criterion, optimizer, scheduler, args.num_epochs)


if __name__ == '__main__':
    main()
```
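A side note on the scheduler settings: with `StepLR(optimizer, step_size=7, gamma=0.1)` the learning rate only drops after seven epochs, so with the default `--num_epochs` of 5 it stays at 0.001 for the whole run. The following is a minimal pure-Python sketch of that schedule. The helper `step_lr_schedule` is hypothetical (it is not a PyTorch function), and it assumes `scheduler.step()` is called once per training epoch, as in `train_model`.

```python
def step_lr_schedule(base_lr, step_size, gamma, num_epochs):
    """Learning rate used in each epoch under a StepLR-style decay.

    Epochs are 0-indexed; the rate is multiplied by gamma once every
    step_size epochs.
    """
    return [base_lr * gamma ** (epoch // step_size) for epoch in range(num_epochs)]


if __name__ == "__main__":
    # Compare the default 5-epoch run with a longer, hypothetical 15-epoch run
    for num_epochs in (5, 15):
        rates = step_lr_schedule(base_lr=0.001, step_size=7, gamma=0.1, num_epochs=num_epochs)
        distinct = sorted(set(rates), reverse=True)
        print(f"{num_epochs} epochs -> {len(distinct)} distinct learning rate(s)")
```

If the decay is meant to take effect, either train for more than seven epochs or pick a smaller `step_size`.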

3. Testing the code

```python
#!/usr/bin/env python
# -*- coding: UTF-8 -*-
'''
@Project :ImageRec
@File    :test.py
@IDE     :PyCharm
@Author  :菜菜2024
@Date    :2024/9/30
'''
import torch
from torch.utils.data import DataLoader
from torchvision import datasets, transforms, models


def test_model(weights_path, val_root, batch_size=4):
    """
    Evaluate the model on the validation set.

    Args:
    - weights_path: str, path to the trained model weights file
    - val_root: str, root directory of the validation dataset
    - batch_size: int, batch size used when loading data
    """
    # Set up the preprocessing (same as the 'val' transform used in training)
    transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

    # Load the validation set
    val_dataset = datasets.ImageFolder(root=val_root, transform=transform)
    val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False, num_workers=2)

    # Initialize the model and load the weights
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Replace the final fully connected layer so num_classes matches the actual number of classes
    model = models.resnet18()
    num_features = model.fc.in_features
    model.fc = torch.nn.Linear(num_features, len(val_dataset.classes))
    model.load_state_dict(torch.load(weights_path, map_location=device))
    model.to(device)
    model.eval()  # put the model in evaluation mode

    # Test loop
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in val_loader:
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    # Compute the accuracy and print the result
    accuracy = 100 * correct / total
    print(f'Accuracy on validation set: {accuracy}%')


if __name__ == '__main__':
    weights_path = './weights/resnet18_dog.pth'
    val_root = "E:\\xxx\\datas\\pet_dog\\val"
    test_model(weights_path, val_root)
```

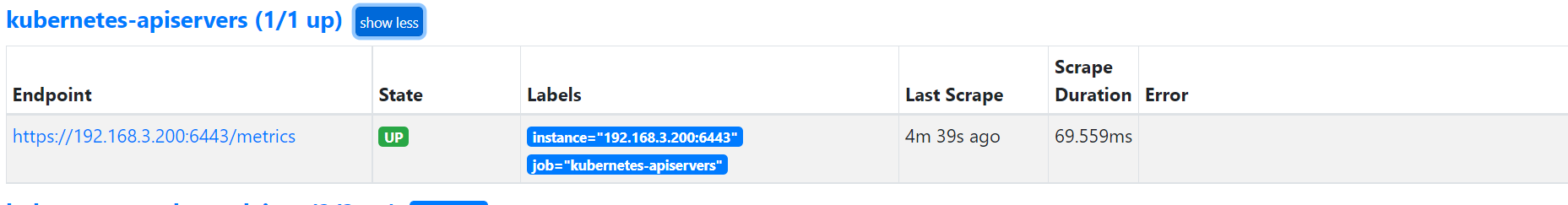

Result:

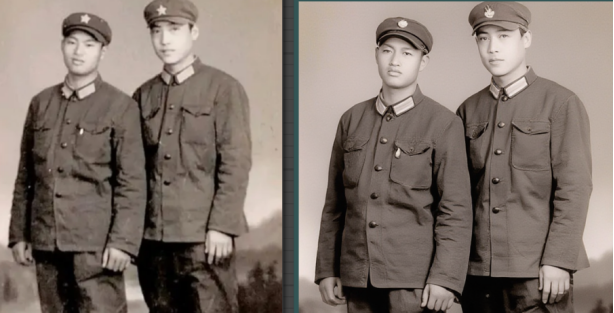
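The test script reports a single overall accuracy. To see which breeds get confused, one option is a per-class breakdown. The sketch below is a hypothetical helper, not part of the original test.py. It assumes you collect `labels.tolist()` and `predicted.tolist()` from every batch in the test loop and pass `val_dataset.classes` as the class names.

```python
from collections import defaultdict


def per_class_accuracy(labels, predictions, class_names):
    """Return {class_name: accuracy} for every class that appears in labels.

    labels and predictions are equal-length sequences of integer class indices.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, prediction in zip(labels, predictions):
        total[label] += 1
        if label == prediction:
            correct[label] += 1
    return {class_names[index]: correct[index] / total[index] for index in sorted(total)}
```

A breed with a much lower score than the rest is a natural place to look for mislabeled or too few training images.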

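4. Comparing the results

Picking up the image retrieval from the previous post: "[Image Search Code Implementation] - Dog Image Search Example"

Let's see whether the matches get any more accurate.

With the pretrained resnet18:

Way off: the top three matches are all Chihuahuas.

Now with the fine-tuned resnet18:

Compared with the code in the previous post, two things change: how the model is loaded, and the number of outputs of the last layer, which becomes the number of classes (11 here).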

Wow! A clear improvement!

Not bad at all. Next time I'll try faiss.