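0. Introduction

In defect detection, defective samples are extremely rare in real-world data: sometimes only one defect shows up among thousands or even tens of thousands of items. For this reason, earlier models are trained only on normal samples and learn the distribution of normal data. At test time, a threshold then has to be set by hand for each item category to separate normal from anomalous instances, which is impractical in a real production environment.

Large vision-language models (LVLMs) such as MiniGPT-4 and LLaVA have shown strong image understanding and achieve remarkable performance on a wide range of vision tasks. So can large models be applied to industrial defect detection? AnomalyGPT explores this question in depth.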

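1. AnomalyGPT

Existing approaches to defect detection fall into two main categories: reconstruction-based and feature-embedding-based. Reconstruction-based methods reconstruct an anomalous sample into its normal counterpart and detect anomalies from the reconstruction error. Feature-embedding-based methods model the feature embeddings of normal samples, then decide whether a test sample is anomalous from the distance between its embedding and a bank of normal embeddings. When faced with new data, however, both kinds of method have to be retrained on a large amount of data, so they cannot meet the needs of real industrial defect detection.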
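To make the feature-embedding idea concrete, here is a minimal sketch in plain Python. It is not taken from AnomalyGPT or any specific method; the function names, the toy two-dimensional embeddings, and the threshold value are all made up for illustration. It scores a test embedding by its distance to the nearest embedding in a bank of normal samples, and shows where the hand-picked threshold enters.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def anomaly_score(test_embedding, normal_bank):
    """Distance from the test embedding to its nearest normal embedding."""
    return min(euclidean(test_embedding, ref) for ref in normal_bank)


# Toy "bank" of normal embeddings (illustrative values only).
normal_bank = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]

# A hand-picked threshold: this is the manual step that earlier methods need.
threshold = 1.0

for sample in ([0.1, 0.0], [3.0, 4.0]):
    score = anomaly_score(sample, normal_bank)
    label = "anomalous" if score > threshold else "normal"
    print(sample, round(score, 3), label)
```

The threshold is the weak point: a value that works for one product category generally has to be re-tuned for another.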

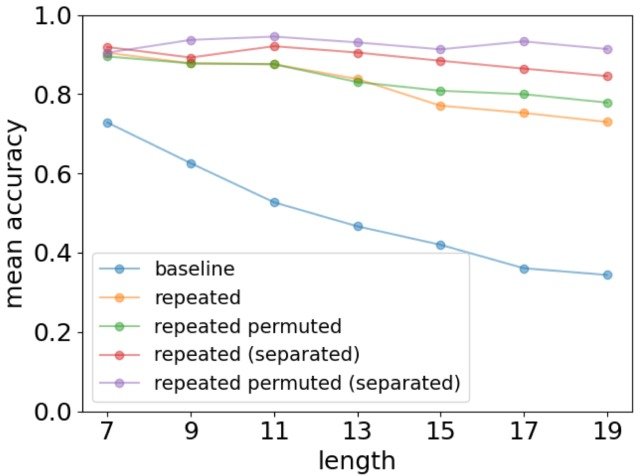

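The authors of the AnomalyGPT paper propose a new solution: they are the first to apply a large vision-language model to industrial anomaly detection, which resulted in the AnomalyGPT model. The model detects both the presence of an anomaly (classification) and its location (localization) without a manually set threshold. AnomalyGPT can also provide information about the image and supports interactive use, so users can ask follow-up questions based on their needs and the answers they receive. In addition, AnomalyGPT can do in-context learning from a small number of normal samples (no defective samples required), which lets it adapt quickly to previously unseen objects.

The main contributions of AnomalyGPT are:

- It is the first application of a large vision-language model to industrial anomaly detection;

- It can output a defect mask;

- It supports multi-turn dialogue;

- It needs only a small amount of data to generalize to new data.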

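2. Environment Setup

2.1 GPU Environment

Deploying AnomalyGPT locally requires GPU acceleration, and the GPU needs at least 8 GB of VRAM. My setup here is Windows 10 with an RTX 3090 Ti (24 GB VRAM), CUDA 11.8, and cuDNN 8.9.

2.2 Creating the Environment

# Create and activate the conda environment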

conda create -n agpt python=3.10

conda activate agpt

2.3 Downloading the Source Code

git clone https://github.com/CASIA-IVA-Lab/AnomalyGPT.git

2.4 Installing Dependencies

2.4.1 PyTorch

PyTorch is best installed separately; pick the build that matches your CUDA version:

conda install pytorch==2.0.0 torchvision==0.15.0 torchaudio==2.0.0 pytorch-cuda=11.8 -c pytorch -c nvidia
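After installing, it can be worth confirming that PyTorch sees the GPU and that the card has at least 8 GB of memory, as this guide requires. The sketch below is one way to check; the helper names `meets_vram_requirement` and `describe_gpu` are made up here, and the script simply reports a message if torch is not installed or CUDA is unavailable.

```python
import importlib.util

MIN_VRAM_GIB = 8  # minimum stated in this guide


def meets_vram_requirement(total_bytes, min_gib=MIN_VRAM_GIB):
    """Return True if the given memory size (in bytes) is at least min_gib GiB."""
    return total_bytes >= min_gib * 1024**3


def describe_gpu():
    """Summarize GPU 0 as seen by PyTorch, or explain why that is not possible."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed in this environment"
    import torch

    if not torch.cuda.is_available():
        return "torch is installed, but CUDA is not available"
    props = torch.cuda.get_device_properties(0)
    ok = meets_vram_requirement(props.total_memory)
    gib = props.total_memory / 1024**3
    return f"{props.name}: {gib:.1f} GiB, CUDA {torch.version.cuda}, enough VRAM: {ok}"


if __name__ == "__main__":
    print(describe_gpu())
```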

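2.4.2 Installing DeepSpeed

The official environment installs the deepspeed library by default (it supports training with the sat library). It is not required for inference, and some versions fail to install on certain Windows setups. Version 0.3.16 can be used here: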

pip install deepspeed==0.3.16

2.4.3 Installing the Remaining Dependencies from requirements.txt

Open requirements.txt in the source tree, delete the torch and deepspeed entries, then install:

pip install -r requirements.txt
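If you would rather not edit requirements.txt by hand, the filtering step can be sketched in a few lines of Python. The function name `strip_packages` and the sample lines are made up for illustration; they are not the actual contents of the repository's file. The match is on the exact package name, so `torchvision` is kept unless you add it to `drop`.

```python
import re


def strip_packages(lines, drop=("torch", "deepspeed")):
    """Return the requirement lines whose package name is not in `drop`."""
    drop_set = {name.lower() for name in drop}
    kept = []
    for line in lines:
        # The package name is the leading run of name characters on the line;
        # comments and option lines (e.g. "-r other.txt") do not match.
        match = re.match(r"\s*([A-Za-z0-9][A-Za-z0-9._-]*)", line)
        name = match.group(1).lower() if match else None
        if name in drop_set:
            continue
        kept.append(line)
    return kept


# Illustrative input only, not the real requirements.txt.
sample = ["torch==1.13.1\n", "torchvision==0.14.1\n", "deepspeed==0.9.2\n", "# comment\n", "numpy\n"]
print("".join(strip_packages(sample)), end="")
```

To apply it to the real file, read the lines with `open("requirements.txt").readlines()` and write the result to a new file, then pass that file to `pip install -r`.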

3. Downloading and Merging the Models

3.1 ImageBind Model

Download the model from https://dl.fbaipublicfiles.com/imagebind/imagebind_huge.pth and place it in the following directory:

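3.2 Merging the Models

The model used here is obtained by merging the LLaMA weights with the Vicuna delta weights.

3.2.1 Downloading the LLaMA 7B Model

The 7B model can be downloaded from the official LLaMA release. I have also mirrored it on Baidu Netdisk, shared as: LLaMA

Link: https://pan.baidu.com/s/1syklVFou4r252PxcCaZY7w Extraction code: 5ffx. Download only 7B and tokenizer.model, then put tokenizer.model into the 7B folder.

Then create a LLaMA directory under the AnomalyGPT root and copy the 7B directory into it: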
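The conversion script used in the next step reads `params.json`, `consolidated.00.pth` (for the single-shard 7B model), and `tokenizer.model` from its input directory. A quick check like the following can catch a misplaced file before the long conversion starts. This is only a sketch: the helper name `missing_llama_files` is made up, and the `LLaMA/7B` path follows the layout described above.

```python
import os

# Files the conversion script reads from its --input_dir for the 7B model.
REQUIRED_FILES = ("params.json", "consolidated.00.pth", "tokenizer.model")


def missing_llama_files(input_dir, required=REQUIRED_FILES):
    """Return the required file names that are not present in input_dir."""
    return [name for name in required if not os.path.isfile(os.path.join(input_dir, name))]


if __name__ == "__main__":
    missing = missing_llama_files(os.path.join("LLaMA", "7B"))
    print("missing files:", missing if missing else "none")
```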

3.2.2 Converting to Hugging Face Format

- Install protobuf

pip install protobuf==3.20

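- Convert the model

You can follow the official documentation to convert the model:

Alternatively, copy the code below into a file named convert_llama_weights_to_hf.py (the name used by the command after the listing) and use it to convert the model: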

import argparse
import gc
import json
import os
import shutil
import warnings
from typing import List

import torch
from transformers import GenerationConfig, LlamaConfig, LlamaForCausalLM, LlamaTokenizer, PreTrainedTokenizerFast
from transformers.convert_slow_tokenizer import TikTokenConverter

try:
    from transformers import LlamaTokenizerFast
except ImportError as e:
    warnings.warn(e)
    warnings.warn(
        "The converted tokenizer will be the `slow` tokenizer. To use the fast, update your `tokenizers` library and re-run the tokenizer conversion"
    )
    LlamaTokenizerFast = None

NUM_SHARDS = {
    "7B": 1,
    "8B": 1,
    "8Bf": 1,
    "7Bf": 1,
    "13B": 2,
    "13Bf": 2,
    "34B": 4,
    "30B": 4,
    "65B": 8,
    "70B": 8,
    "70Bf": 8,
    "405B": 8,
    "405B-MP16": 16,
}

CONTEXT_LENGTH_FOR_VERSION = {"3.1": 131072, "3": 8192, "2": 4096, "1": 2048}

def compute_intermediate_size(n, ffn_dim_multiplier=1, multiple_of=256):
    return multiple_of * ((int(ffn_dim_multiplier * int(8 * n / 3)) + multiple_of - 1) // multiple_of)

def read_json(path):
    with open(path, "r") as f:
        return json.load(f)

def write_json(text, path):
    with open(path, "w") as f:
        json.dump(text, f)

def write_model(
    model_path,
    input_base_path,
    model_size=None,
    safe_serialization=True,
    llama_version="1",
    vocab_size=None,
    num_shards=None,
    instruct=False,
):
    os.makedirs(model_path, exist_ok=True)
    tmp_model_path = os.path.join(model_path, "tmp")
    os.makedirs(tmp_model_path, exist_ok=True)

    params = read_json(os.path.join(input_base_path, "params.json"))
    num_shards = NUM_SHARDS[model_size] if num_shards is None else num_shards
    params = params.get("model", params)
    n_layers = params["n_layers"]
    n_heads = params["n_heads"]
    n_heads_per_shard = n_heads // num_shards
    dim = params["dim"]
    dims_per_head = dim // n_heads
    base = params.get("rope_theta", 10000.0)
    inv_freq = 1.0 / (base ** (torch.arange(0, dims_per_head, 2).float() / dims_per_head))
    if base > 10000.0 and float(llama_version) < 3:
        max_position_embeddings = 16384
    else:
        max_position_embeddings = CONTEXT_LENGTH_FOR_VERSION[llama_version]

    if params.get("n_kv_heads", None) is not None:
        num_key_value_heads = params["n_kv_heads"]  # for GQA / MQA
        num_key_value_heads_per_shard = num_key_value_heads // num_shards
        key_value_dim = dims_per_head * num_key_value_heads
    else:  # compatibility with other checkpoints
        num_key_value_heads = n_heads
        num_key_value_heads_per_shard = n_heads_per_shard
        key_value_dim = dim

    # permute for sliced rotary
    def permute(w, n_heads, dim1=dim, dim2=dim):
        return w.view(n_heads, dim1 // n_heads // 2, 2, dim2).transpose(1, 2).reshape(dim1, dim2)

    print(f"Fetching all parameters from the checkpoint at {input_base_path}.")
    # Load weights
    if num_shards == 1:
        # Not sharded
        # (The sharded implementation would also work, but this is simpler.)
        loaded = torch.load(os.path.join(input_base_path, "consolidated.00.pth"), map_location="cpu")
    else:
        # Sharded
        checkpoint_list = sorted([file for file in os.listdir(input_base_path) if file.endswith(".pth")])
        print("Loading in order:", checkpoint_list)
        loaded = [torch.load(os.path.join(input_base_path, file), map_location="cpu") for file in checkpoint_list]
    param_count = 0
    index_dict = {"weight_map": {}}
    for layer_i in range(n_layers):
        filename = f"pytorch_model-{layer_i + 1}-of-{n_layers + 1}.bin"
        if num_shards == 1:
            # Unsharded
            state_dict = {
                f"model.layers.{layer_i}.self_attn.q_proj.weight": permute(
                    loaded[f"layers.{layer_i}.attention.wq.weight"], n_heads=n_heads
                ),
                f"model.layers.{layer_i}.self_attn.k_proj.weight": permute(
                    loaded[f"layers.{layer_i}.attention.wk.weight"],
                    n_heads=num_key_value_heads,
                    dim1=key_value_dim,
                ),
                f"model.layers.{layer_i}.self_attn.v_proj.weight": loaded[f"layers.{layer_i}.attention.wv.weight"],
                f"model.layers.{layer_i}.self_attn.o_proj.weight": loaded[f"layers.{layer_i}.attention.wo.weight"],
                f"model.layers.{layer_i}.mlp.gate_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w1.weight"],
                f"model.layers.{layer_i}.mlp.down_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w2.weight"],
                f"model.layers.{layer_i}.mlp.up_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w3.weight"],
                f"model.layers.{layer_i}.input_layernorm.weight": loaded[f"layers.{layer_i}.attention_norm.weight"],
                f"model.layers.{layer_i}.post_attention_layernorm.weight": loaded[f"layers.{layer_i}.ffn_norm.weight"],
            }
        else:
            # Sharded
            # Note that attention.w{q,k,v,o}, feed_fordward.w[1,2,3], attention_norm.weight and ffn_norm.weight share
            # the same storage object, saving attention_norm and ffn_norm will save other weights too, which is
            # redundant as other weights will be stitched from multiple shards. To avoid that, they are cloned.
            state_dict = {
                f"model.layers.{layer_i}.input_layernorm.weight": loaded[0][
                    f"layers.{layer_i}.attention_norm.weight"
                ].clone(),
                f"model.layers.{layer_i}.post_attention_layernorm.weight": loaded[0][
                    f"layers.{layer_i}.ffn_norm.weight"
                ].clone(),
            }
            state_dict[f"model.layers.{layer_i}.self_attn.q_proj.weight"] = permute(
                torch.cat(
                    [
                        loaded[i][f"layers.{layer_i}.attention.wq.weight"].view(n_heads_per_shard, dims_per_head, dim)
                        for i in range(len(loaded))
                    ],
                    dim=0,
                ).reshape(dim, dim),
                n_heads=n_heads,
            )
            state_dict[f"model.layers.{layer_i}.self_attn.k_proj.weight"] = permute(
                torch.cat(
                    [
                        loaded[i][f"layers.{layer_i}.attention.wk.weight"].view(
                            num_key_value_heads_per_shard, dims_per_head, dim
                        )
                        for i in range(len(loaded))
                    ],
                    dim=0,
                ).reshape(key_value_dim, dim),
                num_key_value_heads,
                key_value_dim,
                dim,
            )
            state_dict[f"model.layers.{layer_i}.self_attn.v_proj.weight"] = torch.cat(
                [
                    loaded[i][f"layers.{layer_i}.attention.wv.weight"].view(
                        num_key_value_heads_per_shard, dims_per_head, dim
                    )
                    for i in range(len(loaded))
                ],
                dim=0,
            ).reshape(key_value_dim, dim)
            state_dict[f"model.layers.{layer_i}.self_attn.o_proj.weight"] = torch.cat(
                [loaded[i][f"layers.{layer_i}.attention.wo.weight"] for i in range(len(loaded))], dim=1
            )
            state_dict[f"model.layers.{layer_i}.mlp.gate_proj.weight"] = torch.cat(
                [loaded[i][f"layers.{layer_i}.feed_forward.w1.weight"] for i in range(len(loaded))], dim=0
            )
            state_dict[f"model.layers.{layer_i}.mlp.down_proj.weight"] = torch.cat(
                [loaded[i][f"layers.{layer_i}.feed_forward.w2.weight"] for i in range(len(loaded))], dim=1
            )
            state_dict[f"model.layers.{layer_i}.mlp.up_proj.weight"] = torch.cat(
                [loaded[i][f"layers.{layer_i}.feed_forward.w3.weight"] for i in range(len(loaded))], dim=0
            )

        state_dict[f"model.layers.{layer_i}.self_attn.rotary_emb.inv_freq"] = inv_freq
        for k, v in state_dict.items():
            index_dict["weight_map"][k] = filename
            param_count += v.numel()
        torch.save(state_dict, os.path.join(tmp_model_path, filename))

    filename = f"pytorch_model-{n_layers + 1}-of-{n_layers + 1}.bin"
    if num_shards == 1:
        # Unsharded
        state_dict = {
            "model.embed_tokens.weight": loaded["tok_embeddings.weight"],
            "model.norm.weight": loaded["norm.weight"],
            "lm_head.weight": loaded["output.weight"],
        }
    else:
        concat_dim = 0 if llama_version in ["3", "3.1"] else 1
        state_dict = {
            "model.norm.weight": loaded[0]["norm.weight"],
            "model.embed_tokens.weight": torch.cat(
                [loaded[i]["tok_embeddings.weight"] for i in range(len(loaded))], dim=concat_dim
            ),
            "lm_head.weight": torch.cat([loaded[i]["output.weight"] for i in range(len(loaded))], dim=0),
        }

    for k, v in state_dict.items():
        index_dict["weight_map"][k] = filename
        param_count += v.numel()
    torch.save(state_dict, os.path.join(tmp_model_path, filename))

    # Write configs
    index_dict["metadata"] = {"total_size": param_count * 2}
    write_json(index_dict, os.path.join(tmp_model_path, "pytorch_model.bin.index.json"))
    ffn_dim_multiplier = params["ffn_dim_multiplier"] if "ffn_dim_multiplier" in params else 1
    multiple_of = params["multiple_of"] if "multiple_of" in params else 256

    if llama_version in ["3", "3.1"]:
        bos_token_id = 128000
        if instruct:
            eos_token_id = [128001, 128008, 128009]
        else:
            eos_token_id = 128001
    else:
        bos_token_id = 1
        eos_token_id = 2

    config = LlamaConfig(
        hidden_size=dim,
        intermediate_size=compute_intermediate_size(dim, ffn_dim_multiplier, multiple_of),
        num_attention_heads=params["n_heads"],
        num_hidden_layers=params["n_layers"],
        rms_norm_eps=params["norm_eps"],
        num_key_value_heads=num_key_value_heads,
        vocab_size=vocab_size,
        rope_theta=base,
        max_position_embeddings=max_position_embeddings,
        bos_token_id=bos_token_id,
        eos_token_id=eos_token_id,
    )
    config.save_pretrained(tmp_model_path)

    if instruct:
        generation_config = GenerationConfig(
            do_sample=True,
            temperature=0.6,
            top_p=0.9,
            bos_token_id=bos_token_id,
            eos_token_id=eos_token_id,
        )
        generation_config.save_pretrained(tmp_model_path)

    # Make space so we can load the model properly now.
    del state_dict
    del loaded
    gc.collect()

    print("Loading the checkpoint in a Llama model.")
    model = LlamaForCausalLM.from_pretrained(tmp_model_path, torch_dtype=torch.bfloat16, low_cpu_mem_usage=True)
    # Avoid saving this as part of the config.
    del model.config._name_or_path
    model.config.torch_dtype = torch.float16
    print("Saving in the Transformers format.")
    model.save_pretrained(model_path, safe_serialization=safe_serialization)
    shutil.rmtree(tmp_model_path, ignore_errors=True)

class Llama3Converter(TikTokenConverter):
    def __init__(self, vocab_file, special_tokens=None, instruct=False, model_max_length=None, **kwargs):
        super().__init__(vocab_file, additional_special_tokens=special_tokens, **kwargs)
        tokenizer = self.converted()
        chat_template = (
            "{% set loop_messages = messages %}"
            "{% for message in loop_messages %}"
            "{% set content = '<|start_header_id|>' + message['role'] + '<|end_header_id|>\n\n'+ message['content'] | trim + '<|eot_id|>' %}"
            "{% if loop.index0 == 0 %}"
            "{% set content = bos_token + content %}"
            "{% endif %}"
            "{{ content }}"
            "{% endfor %}"
            "{{ '<|start_header_id|>assistant<|end_header_id|>\n\n' }}"
        )
        self.tokenizer = PreTrainedTokenizerFast(
            tokenizer_object=tokenizer,
            bos_token="<|begin_of_text|>",
            eos_token="<|end_of_text|>" if not instruct else "<|eot_id|>",
            chat_template=chat_template if instruct else None,
            model_input_names=["input_ids", "attention_mask"],
            model_max_length=model_max_length,
        )

def write_tokenizer(tokenizer_path, input_tokenizer_path, llama_version="2", special_tokens=None, instruct=False):
    tokenizer_class = LlamaTokenizer if LlamaTokenizerFast is None else LlamaTokenizerFast
    if llama_version in ["3", "3.1"]:
        tokenizer = Llama3Converter(
            input_tokenizer_path, special_tokens, instruct, model_max_length=CONTEXT_LENGTH_FOR_VERSION[llama_version]
        ).tokenizer
    else:
        tokenizer = tokenizer_class(input_tokenizer_path)
    print(f"Saving a {tokenizer_class.__name__} to {tokenizer_path}.")
    tokenizer.save_pretrained(tokenizer_path)
    return tokenizer

DEFAULT_LLAMA_SPECIAL_TOKENS = {
    "3": [
        "<|begin_of_text|>",
        "<|end_of_text|>",
        "<|reserved_special_token_0|>",
        "<|reserved_special_token_1|>",
        "<|reserved_special_token_2|>",
        "<|reserved_special_token_3|>",
        "<|start_header_id|>",
        "<|end_header_id|>",
        "<|reserved_special_token_4|>",
        "<|eot_id|>",  # end of turn
    ]
    + [f"<|reserved_special_token_{i}|>" for i in range(5, 256 - 5)],
    "3.1": [
        "<|begin_of_text|>",
        "<|end_of_text|>",
        "<|reserved_special_token_0|>",
        "<|reserved_special_token_1|>",
        "<|finetune_right_pad_id|>",
        "<|reserved_special_token_2|>",
        "<|start_header_id|>",
        "<|end_header_id|>",
        "<|eom_id|>",  # end of message
        "<|eot_id|>",  # end of turn
        "<|python_tag|>",
    ]
    + [f"<|reserved_special_token_{i}|>" for i in range(3, 256 - 8)],
}

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--input_dir",
        help="Location of LLaMA weights, which contains tokenizer.model and model folders",
    )
    parser.add_argument(
        "--model_size",
        default=None,
        help="'f' Deprecated in favor of `num_shards`: models correspond to the finetuned versions, and are specific to the Llama2 official release. For more details on Llama2, checkout the original repo: https://huggingface.co/meta-llama",
    )
    parser.add_argument(
        "--output_dir",
        help="Location to write HF model and tokenizer",
    )
    parser.add_argument(
        "--safe_serialization", default=True, type=bool, help="Whether or not to save using `safetensors`."
    )
    # Different Llama versions used different default values for max_position_embeddings, hence the need to be able to specify which version is being used.
    parser.add_argument(
        "--llama_version",
        choices=["1", "2", "3", "3.1"],
        default="1",
        type=str,
        help="Version of the Llama model to convert. Currently supports Llama1 and Llama2. Controls the context size",
    )
    parser.add_argument(
        "--num_shards",
        default=None,
        type=int,
        help="The number of individual shards used for the model. Does not have to be the same as the number of consolidated_xx.pth",
    )
    parser.add_argument(
        "--special_tokens",
        default=None,
        type=List[str],
        help="The list of special tokens that should be added to the model.",
    )
    parser.add_argument(
        "--instruct",
        default=False,
        type=bool,
        help="Whether the model is an instruct model or not. Will affect special tokens for llama 3.1.",
    )
    args = parser.parse_args()
    if args.model_size is None and args.num_shards is None:
        raise ValueError("You have to set at least `num_shards` if you are not giving the `model_size`")
    if args.special_tokens is None:
        # no special tokens by default
        args.special_tokens = DEFAULT_LLAMA_SPECIAL_TOKENS.get(str(args.llama_version), [])

    spm_path = os.path.join(args.input_dir, "tokenizer.model")
    vocab_size = len(
        write_tokenizer(
            args.output_dir,
            spm_path,
            llama_version=args.llama_version,
            special_tokens=args.special_tokens,
            instruct=args.instruct,
        )
    )
    if args.model_size != "tokenizer_only":
        write_model(
            model_path=args.output_dir,
            input_base_path=args.input_dir,
            model_size=args.model_size,
            safe_serialization=args.safe_serialization,
            llama_version=args.llama_version,
            vocab_size=vocab_size,
            num_shards=args.num_shards,
            instruct=args.instruct,
        )

if __name__ == "__main__":
    main()
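One step in the script that is easy to skim past is `compute_intermediate_size`, which derives the feed-forward width from the hidden size by taking 8/3 of it and rounding up to a multiple of `multiple_of`. The worked example below copies that function from the listing and evaluates it for a hidden size of 4096 with `multiple_of=256`, the values assumed here for the 7B model's params.json (check your own file).

```python
def compute_intermediate_size(n, ffn_dim_multiplier=1, multiple_of=256):
    # Same formula as in the conversion script: scale 8n/3, then round up
    # to the next multiple of `multiple_of`.
    return multiple_of * ((int(ffn_dim_multiplier * int(8 * n / 3)) + multiple_of - 1) // multiple_of)


dim = 4096  # assumed hidden size for the 7B model
raw = int(8 * dim / 3)  # 10922, before rounding up
size = compute_intermediate_size(dim)  # 43 * 256 = 11008
print(raw, size)  # prints "10922 11008"
```

The resulting 11008 is the `intermediate_size` that ends up in the generated config.json.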

Then run:

python convert_llama_weights_to_hf.py --input_dir llama/7B --model_size 7B --output_dir llama/7Bhuggingface

You may see the following error:

from transformers.convert_slow_tokenizer import TikTokenConverter

ImportError: cannot import name 'TikTokenConverter' from 'transformers.convert_slow_tokenizer' (C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\transformers\convert_slow_tokenizer.py)

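Solution: the installed transformers version is too old to provide TikTokenConverter. Either install transformers in editable mode (this presumably assumes you are inside a source checkout of the transformers repository):

pip install -e .

or upgrade the installed package:

pip install --upgrade transformers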
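Before re-running the conversion, you can check whether the installed transformers now exposes the class named in the error. The helper below is a generic sketch (the name `module_has_attr` is made up); it returns False both when the module cannot be imported and when the attribute is missing.

```python
import importlib


def module_has_attr(module_name, attr_name):
    """Return True if module_name can be imported and defines attr_name."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return False
    return hasattr(module, attr_name)


if __name__ == "__main__":
    ok = module_has_attr("transformers.convert_slow_tokenizer", "TikTokenConverter")
    print("TikTokenConverter available:", ok)
```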

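A new 7Bhuggingface directory appears under LLaMA, with the following file structure:

3.2.3 Getting the Vicuna Delta Weights

Get the model from https://huggingface.co/lmsys/vicuna-7b-delta-v0/tree/main:

Then create the corresponding directory under LLaMA and put the model in it: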

3.2.4 Merging LLaMA and the Vicuna Delta

- Install FastChat

pip install fschat

You may get the following error:

Collecting wavedrom (from markdown2[all]->fschat==0.2.1)

Downloading http://172.16.2.230:8501/packages/be/71/6739e3abac630540aaeaaece4584c39f88b5f8658ce6ca517efec455e3de/wavedrom-2.0.3.post3.tar.gz (137 kB)

Preparing metadata (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [48 lines of output]

C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\__init__.py:94: _DeprecatedInstaller: setuptools.installer and fetch_build_eggs are deprecated.

!!

********************************************************************************

Requirements should be satisfied by a PEP 517 installer.

If you are using pip, you can try `pip install --use-pep517`.

********************************************************************************

!!

dist.fetch_build_eggs(dist.setup_requires)

WARNING: The repository located at 172.16.2.230 is not a trusted or secure host and is being ignored. If this repository is available via HTTPS we recommend you use HTTPS instead, otherwise you may silence this warning and allow it anyway with '--trusted-host 172.16.2.230'.

ERROR: Could not find a version that satisfies the requirement setuptools_scm (from versions: none)

ERROR: No matching distribution found for setuptools_scm

Traceback (most recent call last):

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\installer.py", line 102, in _fetch_build_egg_no_warn

subprocess.check_call(cmd)

File "C:\Users\Easyai\.conda\envs\agpt\lib\subprocess.py", line 369, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command '['C:\\Users\\Easyai\\.conda\\envs\\agpt\\python.exe', '-m', 'pip', '--disable-pip-version-check', 'wheel', '--no-deps', '-w', 'd:\\temp\\tmpjryrv_kd', '--quiet', 'setuptools_scm']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "D:\temp\pip-install-6achpvqg\wavedrom_e8564a73a10342d7801b8a35deab645d\setup.py", line 28, in <module>

setup(

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\__init__.py", line 116, in setup

_install_setup_requires(attrs)

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\__init__.py", line 89, in _install_setup_requires

_fetch_build_eggs(dist)

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\__init__.py", line 94, in _fetch_build_eggs

dist.fetch_build_eggs(dist.setup_requires)

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\dist.py", line 617, in fetch_build_eggs

return _fetch_build_eggs(self, requires)

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\installer.py", line 39, in _fetch_build_eggs

resolved_dists = pkg_resources.working_set.resolve(

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\pkg_resources\__init__.py", line 897, in resolve

dist = self._resolve_dist(

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\pkg_resources\__init__.py", line 933, in _resolve_dist

dist = best[req.key] = env.best_match(

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\pkg_resources\__init__.py", line 1271, in best_match

return self.obtain(req, installer)

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\pkg_resources\__init__.py", line 1307, in obtain

return installer(requirement) if installer else None

File "C:\Users\Easyai\.conda\envs\agpt\lib\site-packages\setuptools\installer.py", line 104, in _fetch_build_egg_no_warn

raise DistutilsError(str(e)) from e

distutils.errors.DistutilsError: Command '['C:\\Users\\Easyai\\.conda\\envs\\agpt\\python.exe', '-m', 'pip', '--disable-pip-version-check', 'wheel', '--no-deps', '-w', 'd:\\temp\\tmpjryrv_kd', '--quiet', 'setuptools_scm']' returned non-zero exit status 1.

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

Solution:

Step 1:

pip install setuptools_scm

Step 2: install wavedrom from the Tsinghua mirror

pip install wavedrom -i https://pypi.tuna.tsinghua.edu.cn/simple

Then install FastChat:

pip install fschat==0.1.10

- Merge the models

python -m fastchat.model.apply_delta --base llama/7Bhuggingface --target pretrained_ckpt/vicuna_ckpt/7b_v0 --delta llama/vicuna-7b-v0-delta

Note that the target path is the folder where the merged model is written, i.e. AnomalyGPT/pretrained_ckpt/vicuna_ckpt/7b_v0.
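Conceptually, applying a delta means adding each delta tensor to the base tensor of the same name. The toy sketch below shows that idea with plain Python lists standing in for tensors. It is an illustration only, not FastChat's code, and the function name and parameter names in the example are made up.

```python
def apply_delta(base, delta):
    """Return merged weights: for every parameter name, base + delta element-wise."""
    merged = {}
    for name, delta_values in delta.items():
        if name not in base:
            raise KeyError(f"parameter {name!r} is missing from the base weights")
        base_values = base[name]
        if len(base_values) != len(delta_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [b + d for b, d in zip(base_values, delta_values)]
    return merged


# Toy weights (illustrative values only).
base = {"layer.weight": [1.0, 2.0]}
delta = {"layer.weight": [0.5, -1.0]}
print(apply_delta(base, delta))  # {'layer.weight': [1.5, 1.0]}
```

This also explains why the base must be the converted LLaMA 7B weights from the previous step: the delta is only meaningful relative to that exact base.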

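3.3 Getting the AnomalyGPT Delta Weights

3.3.1 Delta Weights

Download the delta weights from the link given on the official GitHub page. Download link: https://huggingface.co/openllmplayground/pandagpt_7b_max_len_1024/tree/main

Put the downloaded model in the following directory:

3.3.2 AnomalyGPT Delta Weights

Create the following three directories under AnomalyGPT/code, then download the corresponding model weights from the official GitHub page and put them there:

Make sure each directory gets the matching model: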

4. Running the Project

4.1 Demo Code

The demo with a web UI lives in the code directory. Switch to code and run web_demo.py; you may need to install gradio first:

pip install gradio==3.50.0

Run the demo:

python web_demo.py
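The model takes a while to load, so the page may not respond right away. A small check like the one below tells you whether anything is listening yet. It is a sketch with a made-up helper name, and it assumes the demo serves on 127.0.0.1:7860, the address used in this guide.

```python
import socket


def is_port_open(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("web demo reachable:", is_port_open())
```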

4.2 Testing

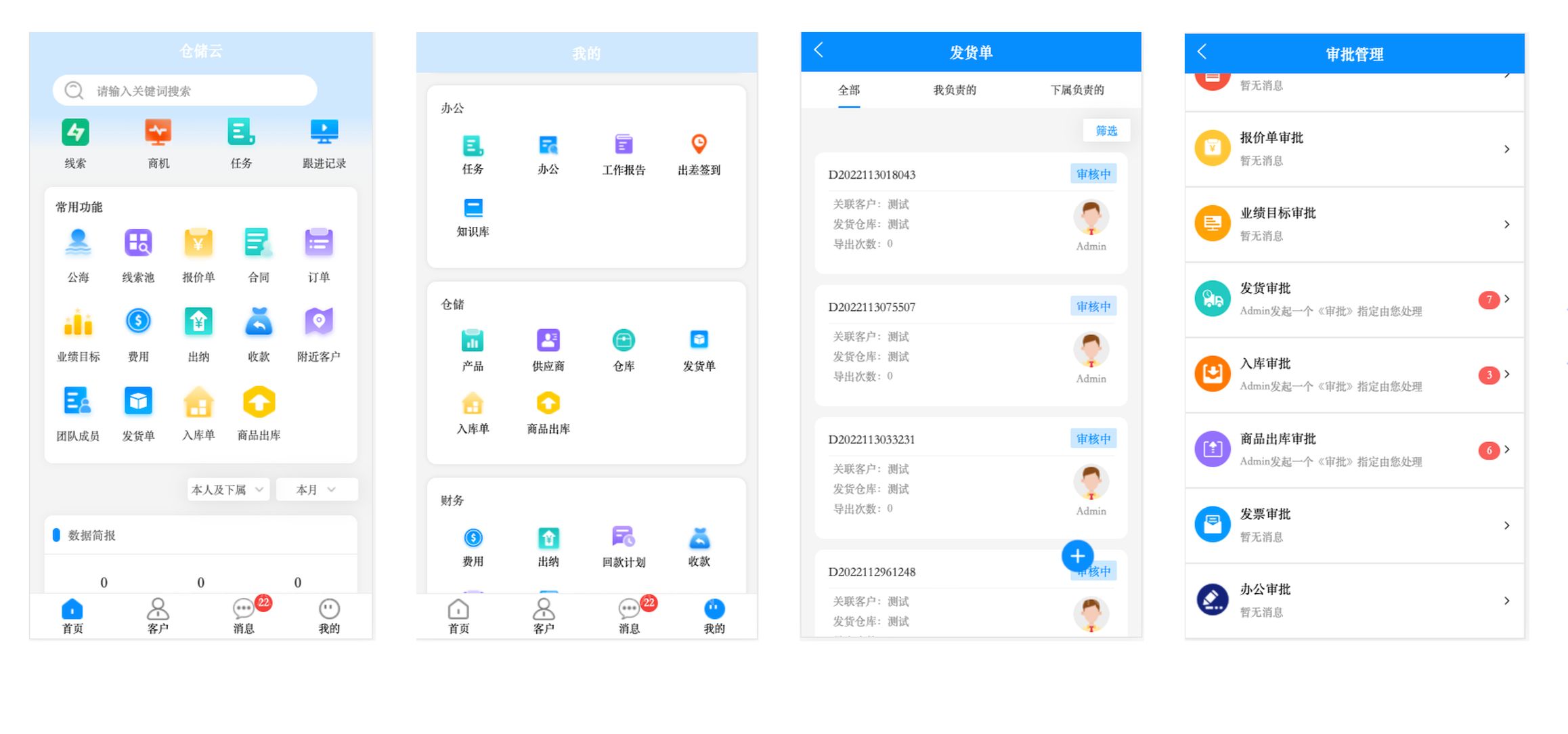

Open http://127.0.0.1:7860, upload an image, and interact in Chinese or English. The results look like this:

Image with a defect:

Image without a defect: