Error message

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like jinaai/jina-bert-implementation is not the path to a directory containing a file named configuration_bert.py.

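In short, the error says that huggingface.co could not be reached, that no cached copy was found, and that configuration_bert.py is missing from the jinaai/jina-bert-implementation path.

There are plenty of fixes online, but they all felt too complicated to me.

Here is my own approach. It is very simple, and I hope it helps.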

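Solution

The error above appears when using the jinaai/jina-embeddings-v2-base-zh embedding model.

We therefore also need to download the jinaai/jina-bert-implementation model, which holds the configuration_bert.py file named in the error.

Download from Hugging Face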

# Load the model directly from the Hugging Face Hub
from transformers import AutoTokenizer, AutoModel

model_name = "jinaai/jina-embeddings-v2-base-zh"

# cache_dir="./" stores the downloaded files under the current directory
tokenizer = AutoTokenizer.from_pretrained(model_name, cache_dir="./", trust_remote_code=True)
model = AutoModel.from_pretrained(model_name, cache_dir="./", trust_remote_code=True)

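If your connection is poor, download from ModelScope (魔搭), a model hub hosted in China

jina-bert-implementation model download

jina-embeddings-v2-base-zh model download

I recommend downloading the files manually: the repositories contain many extra files, so fetching everything is slow.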

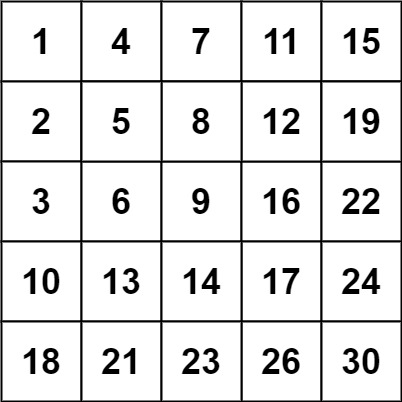
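If you would rather script the Hugging Face download than click through the pages, the sketch below fetches only the files this post ends up needing. It assumes the `huggingface_hub` package is installed and that the file names match the minimal layout listed in this post. `build_download_plan` and `download_minimal` are hypothetical helper names, not part of any library.

```python
from pathlib import Path

# File lists copied from the minimal layout described in this post.
IMPL_REPO = "jinaai/jina-bert-implementation"
IMPL_FILES = ["configuration_bert.py", "modeling_bert.py"]

MODEL_REPO = "jinaai/jina-embeddings-v2-base-zh"
MODEL_FILES = [
    "config.json", "merges.txt", "model.safetensors",
    "special_tokens_map.json", "tokenizer_config.json",
    "tokenizer.json", "vocab.json",
]


def build_download_plan(root):
    """Return (repo_id, filename, target_dir) tuples for every needed file."""
    root = Path(root)
    plan = []
    for repo_id, files in ((IMPL_REPO, IMPL_FILES), (MODEL_REPO, MODEL_FILES)):
        # Each repository gets its own folder, named after the repo.
        target_dir = root / repo_id.split("/")[-1]
        plan.extend((repo_id, name, target_dir) for name in files)
    return plan


def download_minimal(root="/home/jinaai"):
    """Fetch each planned file; needs network access and huggingface_hub."""
    from huggingface_hub import hf_hub_download  # imported lazily on purpose

    for repo_id, filename, target_dir in build_download_plan(root):
        hf_hub_download(repo_id=repo_id, filename=filename, local_dir=target_dir)
```

Calling `download_minimal("/home/jinaai")` on a machine that can reach huggingface.co should produce the two folders used in the rest of this post; the resulting directory can then be copied to the offline machine.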

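Once the download has finished by either method, tidy up the files. The minimum set of files for the two models is as follows:

I keep them under /home/jinaai/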

/home/jinaai/
├── jina-bert-implementation
│   ├── configuration_bert.py
│   └── modeling_bert.py
└── jina-embeddings-v2-base-zh
    ├── config.json
    ├── merges.txt
    ├── model.safetensors
    ├── special_tokens_map.json
    ├── tokenizer_config.json
    ├── tokenizer.json
    └── vocab.json
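Before moving on, it can help to confirm that nothing from the layout above is missing. This is a small sketch using only the standard library; `REQUIRED` and `missing_files` are hypothetical names introduced here for illustration.

```python
from pathlib import Path

# Minimal files per folder, as listed in the layout above.
REQUIRED = {
    "jina-bert-implementation": ["configuration_bert.py", "modeling_bert.py"],
    "jina-embeddings-v2-base-zh": [
        "config.json", "merges.txt", "model.safetensors",
        "special_tokens_map.json", "tokenizer_config.json",
        "tokenizer.json", "vocab.json",
    ],
}


def missing_files(root):
    """Return 'folder/file' entries that are absent under root."""
    root = Path(root)
    return [
        f"{folder}/{name}"
        for folder, names in REQUIRED.items()
        for name in names
        if not (root / folder / name).is_file()
    ]
```

For example, `print(missing_files("/home/jinaai") or "all files present")` lists whatever still needs to be copied over.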

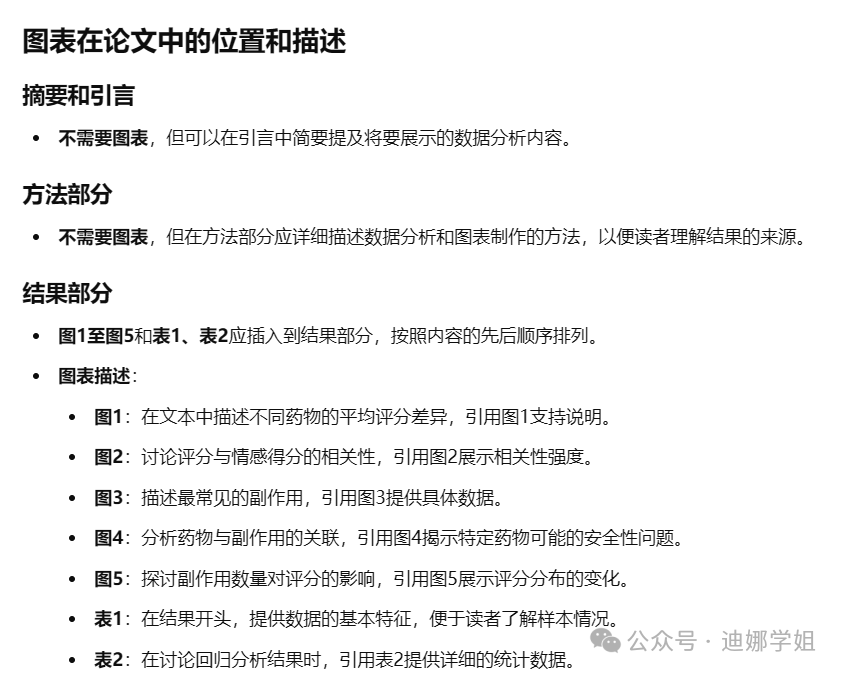

Edit the config.json of the jina-embeddings-v2-base-zh model: replace the paths in the red box with the actual path of the jina-bert-implementation model.
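The same edit can be scripted. The sketch below assumes that the paths in question live in the `auto_map` section of config.json and look like `jinaai/jina-bert-implementation--configuration_bert.JinaBertConfig`; check your own file first, since that format is an assumption here. `localize_auto_map` and `patch_config_file` are hypothetical helper names.

```python
import json
from pathlib import Path

REMOTE_REPO = "jinaai/jina-bert-implementation"


def localize_auto_map(config, local_impl_dir):
    """Return a copy of config whose auto_map points at local_impl_dir.

    Assumes auto_map values are strings that start with the remote repo id;
    anything else is left untouched.
    """
    patched = dict(config)
    if "auto_map" in config:
        patched["auto_map"] = {
            key: (
                value.replace(REMOTE_REPO, str(local_impl_dir), 1)
                if isinstance(value, str) and value.startswith(REMOTE_REPO)
                else value
            )
            for key, value in config["auto_map"].items()
        }
    return patched


def patch_config_file(config_path, local_impl_dir):
    """Rewrite config.json in place, keeping a .bak copy of the original."""
    config_path = Path(config_path)
    original = config_path.read_text(encoding="utf-8")
    backup = config_path.with_name(config_path.name + ".bak")
    backup.write_text(original, encoding="utf-8")
    patched = localize_auto_map(json.loads(original), local_impl_dir)
    config_path.write_text(
        json.dumps(patched, indent=2, ensure_ascii=False), encoding="utf-8"
    )
```

With the layout used in this post, the call would be `patch_config_file("/home/jinaai/jina-embeddings-v2-base-zh/config.json", "/home/jinaai/jina-bert-implementation")`. Running it twice is harmless, because already-localized values no longer start with the remote repo id.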

Test whether it works

import torch
from numpy.linalg import norm
from transformers import AutoModel

if __name__ == "__main__":
    # Local directory of the embedding model
    path = "/home/jinaai/jina-embeddings-v2-base-zh"

    # Cosine similarity between two embedding vectors
    cos_sim = lambda a, b: (a @ b.T) / (norm(a) * norm(b))

    model = AutoModel.from_pretrained(path, trust_remote_code=True, torch_dtype=torch.bfloat16)
    embeddings = model.encode(['How is the weather today?', '今天天气怎么样?'])
    print(cos_sim(embeddings[0], embeddings[1]))
    # Output: 0.7868529
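To double-check that the model really loads from disk and never tries to reach huggingface.co, one option is to force offline mode before importing transformers. This is a sketch under the assumption that your installed versions honor the `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` environment variables; `load_offline` is a hypothetical helper name.

```python
import os

# These must be set before transformers / huggingface_hub are imported.
os.environ["HF_HUB_OFFLINE"] = "1"
os.environ["TRANSFORMERS_OFFLINE"] = "1"


def load_offline(path="/home/jinaai/jina-embeddings-v2-base-zh"):
    """Load the local model with network access to the Hub disabled."""
    from transformers import AutoModel  # imported after the env vars are set

    return AutoModel.from_pretrained(path, trust_remote_code=True)
```

If `load_offline()` returns a model without raising, config.json is pointing at the local jina-bert-implementation folder as intended.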

That resolves the error "OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like jinaai/jina-bert-implementation is not the path to a directory containing a file named configuration_bert.py." Good luck!