论文网址:Polarized Graph Neural Networks | Proceedings of the ACM Web Conference 2022

英文是纯手打的!论文原文的summarizing and paraphrasing。可能会出现难以避免的拼写错误和语法错误,若有发现欢迎评论指正!文章偏向于笔记,谨慎食用

目录

1. 省流版

1.1. 心得

2. 论文逐段精读

2.1. Abstract

2.2. Introduction

2.3. Related work

2.4. Preliminaries

2.4.1. Problem formulation

2.4.2. Homophily and heteriophily in graphs

2.4.3. Anonymous walks

2.5. Method

2.5.1. Measurement of similarities and dissmilarities

2.5.2. Polarization on the hyper-spherical space

2.5.3. Spherical classification loss

2.6. Experiment

2.6.1. Experimental setup

2.6.2. Node classification on different types of networks

2.6.3. Model analysis

2.6.4. Visualization

2.7. Conclusion

3. Reference

1. 省流版

1.1. 心得

(1)也是比较容易理解的文章,但是没有代码还是比较sad

2. 论文逐段精读

2.1. Abstract

①AGAIN~Message passing is only suit for homogeneous dissemination

②They proposed polargraph Neural Network (Polar-GNN)

2.2. Introduction

①The edge generation difference between homophilic graph and hererophilic graph:

②Heterophilic information prompts polorization(酱紫的话岂不是一个人看到相似的观点也是促进,看到不相似的也是促进,那好的坏的都当好的了?其实这个很难评,有些人是看到讨厌的东西更坚定自己,有些人反而慢慢接受了呢?计算机应该不能完全仿照什么什么心理学观点吧,这种东西没有一定的)

③Chanllenges: mining similar and dissimilar unlabeled nodes, discriminatively aggregating message

2.3. Related work

①Introducing homophily, heterophily, spectral and contrastive learning methods

2.4. Preliminaries

2.4.1. Problem formulation

①Graph representations ,

is node and

is edge

②Each node is associated with a feature vector

③Each node belongs to a label or class

④For this semi-supervised learning, only few nodes are labeled

2.4.2. Homophily and heteriophily in graphs

①Definition 1: the edge homophily rartio:

where denotes the intra-class edges

②Homophily ratio: similar nodes , dissimilar nodes

2.4.3. Anonymous walks

①Anonymous walks is similar to random work but deletes the specific identity of nodes:

2.5. Method

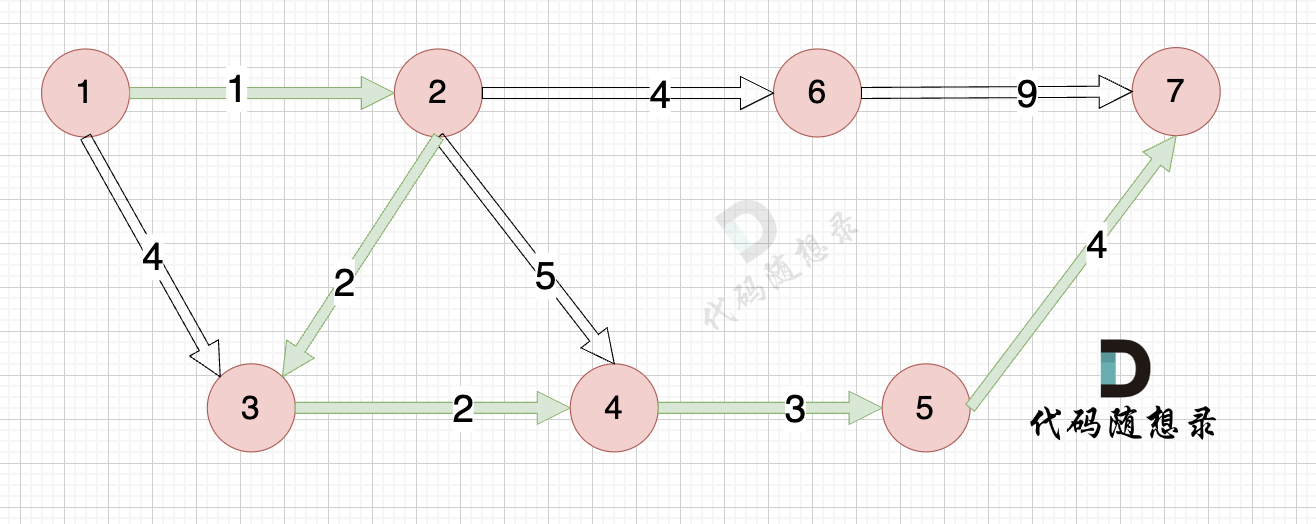

①Overall framework:

2.5.1. Measurement of similarities and dissmilarities

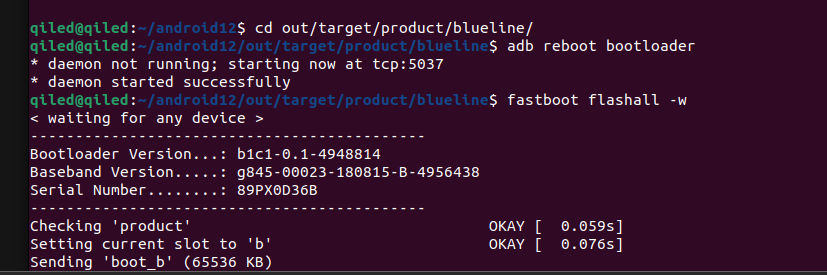

①Exampling GAT:

②They introduced negative weights for dissimilar node pairs:

where denotes the Hadamard product,

denotes a mapping function

, they chose cosine similarity

③To record the structure of nodes, they samples anonymous walks sequence with length for each node

, the distribution of the sequence

is regarded to the structure of node

④The structural similarity:

⑤Aggregated weights:

2.5.2. Polarization on the hyper-spherical space

①Neighbors utilization:

②Number of neighbors affects the feature

③The authors have demonstrated that for a specific node representation, during a gradient descent update, if its norm is relatively large, then the change in its direction will be comparatively small.

④They project embeddings to hyper-sphere, they designe a polarized objective function:

minimizing the distance between the initial and target states for stability, minimizing the distance between identified similar node pairs, and conversely, maximizing the distance between dissimilar node pairs

⑤Alogorithm of Polar GNN:

2.5.3. Spherical classification loss

①Cross entropy loss in hyper-sphere:

②By fixing and

by

Norm, they transform the cross entropy to:

where denotes the angle between

and

③They add an addictive angular penalty :

④The following figure shows the effect of the penalty term on distance approximation:

2.6. Experiment

2.6.1. Experimental setup

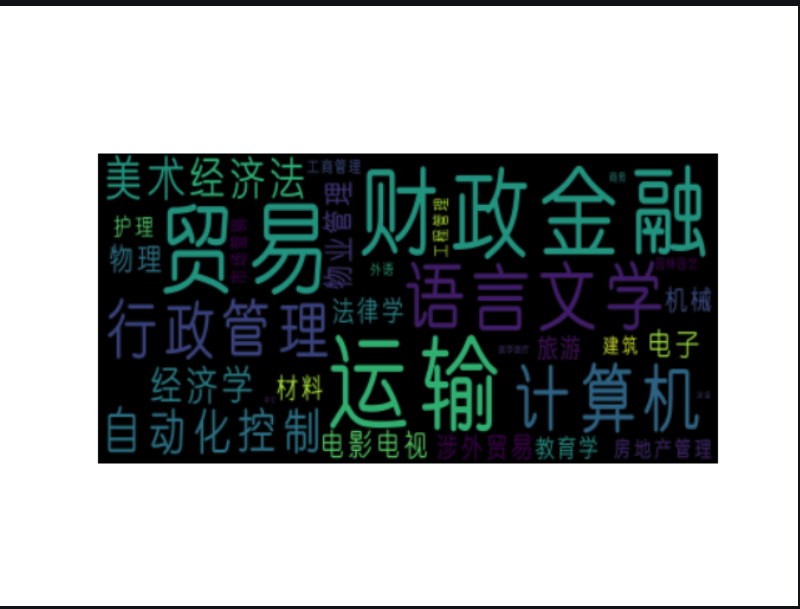

(1)Dataset

①Homophily datasets: Cora, Pubmed, Photo and Facebook

②Heterophily datasets: Chameleon, Squirrel, Actor and Twitch

(2)Baselines

①GCN and GraphSAGE

②GAT and APPNP

③Geom-GCN

④FAGCN and GPRGNN

(3)Settings

①Training: 20 nodes per class for training

②Testing: 1000 nodes

③Validation: another 500 nodes

④Epoch: 500

2.6.2. Node classification on different types of networks

①Model performance:

2.6.3. Model analysis

(1)Ablation studies

①Module ablation study on Chameleon, with

| Polar-GNN-Feat | only incorporates node features when calculating the aggregation weights |

| Polar-GNN-Struct | only uses topological structure when calculating weights |

| Polar-GNN-NoNorm | Polar-GNN variant without normalization to a hyper-sphere |

they got

②Penalty ablation:

(2)Alleviating over-smooth

①GNN layer ablation:

2.6.4. Visualization

(1)Aggregation weights

①Weight aggregation on Chameleon:

(2)Node embeddings-how negative weights help

①Node embedding on Chameleon:

2.7. Conclusion

The polarization is similar to constrained optimization

3. Reference

Fang, Z. et al. (2022) 'Polarized Graph Neural Networks', WWW. doi: https://doi.org/10.1145/3485447.3512187