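Follow me on CSDN: https://spike.blog.csdn.net/

Article URL: https://spike.blog.csdn.net/article/details/142528967

Disclaimer: this article draws on personal knowledge and public sources and is intended for academic exchange only. Discussion is welcome; reposting is not permitted.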

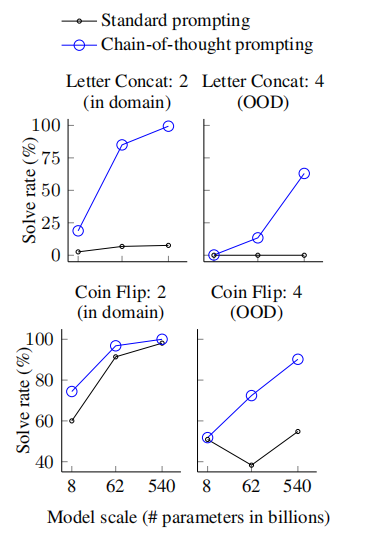

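vLLM is an inference and serving engine for large language models (LLMs). Its optimizations include high serving throughput, efficient memory management, continuous batching of requests, optimized CUDA kernels, and support for quantization methods such as GPTQ and AWQ. FlashAttention is an optimized attention implementation that reduces memory accesses and restructures the computation, which significantly speeds up LLM inference.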

GitHub:

- FlashAttention: https://github.com/Dao-AILab/flash-attention

- Transformers: https://github.com/huggingface/transformers

- vLLM: https://github.com/vllm-project/vllm

1. Configuring vLLM

Prepare the Qwen2-VL models, both 7B and 72B:

modelscope --token [your token] download --model Qwen/Qwen2-VL-7B-Instruct

modelscope --token [your token] download --model Qwen/Qwen2-VL-72B-Instruct-GPTQ-Int4

Note: Qwen2-VL does not yet support GGUF conversion, so it cannot be served with Ollama.

Install vLLM:

pip install vllm==0.6.1 -i https://pypi.tuna.tsinghua.edu.cn/simple

Reference: vLLM - Using VLMs

Note: at the time of writing (2024-09-26), the latest Transformers release does not support Qwen2-VL, so a pinned commit has to be installed instead:
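Because this setup depends on an exact vLLM release, it can help to confirm the installed version before loading a model. The following is a minimal sketch that uses only the standard library; `check_pins` and its `get_version` parameter are illustrative names introduced here, not part of vLLM or Transformers.

```python
from importlib import metadata


def check_pins(pins, get_version=metadata.version):
    """Return {package: (expected, installed)} for every pin that does not match.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for package, expected in pins.items():
        try:
            installed = get_version(package)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[package] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    # The vLLM pin comes from the install command above.
    for name, (want, have) in check_pins({"vllm": "0.6.1"}).items():
        print(f"{name}: expected {want}, found {have}")
```

An empty result means every pinned package matches. A package installed from a git commit reports whatever version string that commit declares, so this check is only meaningful for released versions.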

pip install git+https://github.com/huggingface/transformers.git@21fac7abba2a37fae86106f87fcf9974fd1e3830

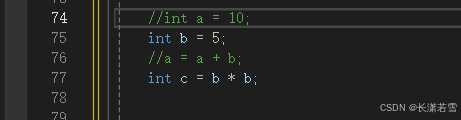

The Transformers commit (21fac7abba2a37fae86106f87fcf9974fd1e3830) mainly updates Qwen2-VL:

commit 21fac7abba2a37fae86106f87fcf9974fd1e3830 (HEAD)

Author: Shijie <821898965@qq.com>

Date: Fri Sep 6 00:19:30 2024 +0800

simple align qwen2vl kv_seq_len calculation with qwen2 (#33161)

* qwen2vl_align_kv_seqlen_to_qwen2

* flash att test

* [run-slow] qwen2_vl

* [run-slow] qwen2_vl fix OOM

* [run-slow] qwen2_vl

* Update tests/models/qwen2_vl/test_modeling_qwen2_vl.py

Co-authored-by: Raushan Turganbay <raushan.turganbay@alumni.nu.edu.kz>

* Update tests/models/qwen2_vl/test_modeling_qwen2_vl.py

Co-authored-by: Raushan Turganbay <raushan.turganbay@alumni.nu.edu.kz>

* code quality

---------

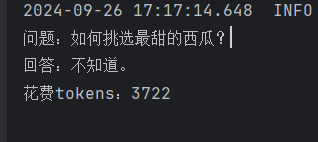

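The vLLM vision-language test code is shown below:

- Use SamplingParams to set the maximum number of output tokens.

- Note that the image token differs between models: Qwen2-VL uses <|image_pad|>, while InternVL2-2B uses <image>.

That is: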

from vllm import LLM, SamplingParams
from PIL import Image
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "1"


def main():
    # Qwen2-VL
    llm = LLM(model="llm/Qwen/Qwen2-VL-7B-Instruct/")
    prompt = "USER: <|image_pad|>\nWhat is the content of this image?\nASSISTANT:"

    # InternVL2-2B
    # llm = LLM(model="llm/InternVL2-2B/", trust_remote_code=True)
    # Refer to the HuggingFace repo for the correct format to use
    # prompt = "USER: <image>\nWhat is the content of this image?\nASSISTANT:"

    # Set the maximum number of output tokens
    sampling_params = SamplingParams(max_tokens=8172)

    # Load the image using PIL.Image
    image = Image.open("llm/img_test.jpg")

    # Single prompt inference
    outputs = llm.generate({
        "prompt": prompt,
        "multi_modal_data": {"image": image},
    }, sampling_params)

    for o in outputs:
        generated_text = o.outputs[0].text
        print(generated_text)


if __name__ == '__main__':
    main()

Output from Qwen2-VL:

The image shows a person standing on a road near a sidewalk. The person is dressed in a light-colored outfit consisting of a short-sleeved blouse and a skirt. The background features greenery, including trees and some buildings, with a few cars parked along the street. The overall scene appears to be a sunny day with good visibility.
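Since the image token depends on the model, one way to avoid hard-coding it in each prompt is to look it up by model family. This is a minimal sketch: the two tokens come from this article, while `IMAGE_TOKENS` and `build_prompt` are illustrative names and the USER/ASSISTANT layout simply mirrors the test prompt above.

```python
# Image placeholder tokens named in this article; other models may differ.
IMAGE_TOKENS = {
    "Qwen2-VL": "<|image_pad|>",
    "InternVL2-2B": "<image>",
}


def build_prompt(model_family, question):
    """Build the USER/ASSISTANT test prompt with the model's image token."""
    try:
        image_token = IMAGE_TOKENS[model_family]
    except KeyError:
        raise ValueError(f"unknown model family: {model_family!r}") from None
    return f"USER: {image_token}\n{question}\nASSISTANT:"


if __name__ == "__main__":
    print(build_prompt("Qwen2-VL", "What is the content of this image?"))
```

Failing loudly on an unknown model family is deliberate: a silently wrong placeholder only surfaces later as an assertion error inside the model's input processor.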

BugFix1:

File "miniconda3/envs/torch-llm/lib/python3.9/site-packages/vllm/transformers_utils/configs/__init__.py", line 13, in <module>

from vllm.transformers_utils.configs.mllama import MllamaConfig

File "miniconda3/envs/torch-llm/lib/python3.9/site-packages/vllm/transformers_utils/configs/mllama.py", line 1, in <module>

from transformers.models.mllama import configuration_mllama as mllama_hf_config

ModuleNotFoundError: No module named 'transformers.models.mllama'

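Cause: vllm/transformers_utils/configs/mllama.py was added in vLLM 0.6.2, and it imports transformers.models.mllama, which the installed Transformers version does not provide. Fix: downgrade vLLM to 0.6.1: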

pip install vllm==0.6.1 -i https://pypi.tuna.tsinghua.edu.cn/simple
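To tell in advance whether an installed Transformers build will trigger this error, one can test for the submodule directly. The sketch below relies only on `importlib.util.find_spec` from the standard library; `has_module` is an illustrative helper name.

```python
from importlib import util


def has_module(dotted_name):
    """Return True if `dotted_name` can be located.

    Parent packages are imported during the lookup, but the target
    submodule itself is not executed.
    """
    try:
        return util.find_spec(dotted_name) is not None
    except ModuleNotFoundError:
        # Raised when a parent package (e.g. `transformers`) is itself missing.
        return False


if __name__ == "__main__":
    print(has_module("transformers.models.mllama"))
```

If this prints False, a vLLM release that imports `transformers.models.mllama` at start-up will fail with the traceback shown above.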

BugFix2:

[rank0]: File "miniconda3/envs/torch-llm/lib/python3.9/site-packages/vllm/inputs/registry.py", line 256, in process_input

[rank0]: return processor(InputContext(model_config), inputs)

[rank0]: File "miniconda3/envs/torch-llm/lib/python3.9/site-packages/vllm/model_executor/models/qwen2_vl.py", line 770, in input_processor_for_qwen2_vl

[rank0]: assert len(image_indices) == len(image_inputs)

[rank0]: AssertionError

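Cause: in vllm/model_executor/models/qwen2_vl.py, hf_config.image_token_id does not match the image token used in the prompt (<image>), so the number of image placeholders found differs from the number of images and the assertion fails. The relevant code, with debug prints added: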

prompt_token_ids = llm_inputs.get("prompt_token_ids", None)
if prompt_token_ids is None:
    prompt = llm_inputs["prompt"]
    prompt_token_ids = processor.tokenizer(
        prompt,
        padding=True,
        return_tensors=None,
    )["input_ids"]

print(f"[Info] decode prompt: \n{processor.decode(prompt_token_ids)}\n")
print(f"[Info] decode image_token_id (151655): {processor.decode([151655])}")

# Expand image pad tokens.
if image_inputs is not None:
    image_indices = [
        idx for idx, token in enumerate(prompt_token_ids)
        if token == hf_config.image_token_id
    ]
    print(f"[Info] hf_config.image_token_id: {hf_config.image_token_id}, prompt_token_ids: {prompt_token_ids}")
    image_inputs = make_batched_images(image_inputs)
    print(f"[Info] image_indices: {len(image_indices)} and image_inputs: {len(image_inputs)}")
    assert len(image_indices) == len(image_inputs)

The analysis shows that the image token for Qwen2-VL is <|image_pad|>, not <image>; replacing the token in the prompt fixes the error.

Output:

[Info] decode prompt:

USER: <|image_pad|>

What is the content of this image?

ASSISTANT:

[Info] decode image_token_id (151655): <|image_pad|>

[Info] hf_config.image_token_id: 151655, prompt_token_ids: [6448, 25, 220, 151655, 198, 3838, 374, 279, 2213, 315, 419, 2168, 5267, 4939, 3846, 2821, 25]

[Info] image_indices: 1 and image_inputs: 1
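The check that failed can be reproduced outside vLLM to make the mismatch easier to see. The following is a simplified sketch of the same comparison, not vLLM's actual implementation; the function names are illustrative, and the token IDs are copied from the debug output above.

```python
def find_image_token_positions(prompt_token_ids, image_token_id):
    """Return the indices at which the image placeholder token occurs."""
    return [i for i, tok in enumerate(prompt_token_ids) if tok == image_token_id]


def check_image_placeholders(prompt_token_ids, image_token_id, num_images):
    """Raise ValueError unless there is exactly one placeholder per image."""
    positions = find_image_token_positions(prompt_token_ids, image_token_id)
    if len(positions) != num_images:
        raise ValueError(
            f"found {len(positions)} image placeholder(s) for {num_images} image(s)"
        )
    return positions


if __name__ == "__main__":
    # Token IDs from the debug output above; 151655 decodes to <|image_pad|>.
    ids = [6448, 25, 220, 151655, 198, 3838, 374, 279, 2213, 315,
           419, 2168, 5267, 4939, 3846, 2821, 25]
    print(check_image_placeholders(ids, 151655, num_images=1))
```

A prompt written with a token that does not tokenize to ID 151655 yields zero positions, which corresponds to the bare AssertionError in the traceback.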

2. Configuring FlashAttention

FlashAttention speeds up LLM inference. To set it up, first install the Python packages it depends on:

pip install packaging

pip install ninja

Check that ninja works:

ninja --version # 1.11.1.git.kitware.jobserver-1

echo $? # 0
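The same check can be scripted, which is convenient inside a setup script. This is a minimal sketch using only the standard library; `tool_exit_code` is an illustrative helper name.

```python
import shutil
import subprocess


def tool_exit_code(command):
    """Run `command` (a list of arguments) and return its exit code.

    Returns None when the executable cannot be found on PATH.
    """
    if shutil.which(command[0]) is None:
        return None
    return subprocess.run(command, capture_output=True).returncode


if __name__ == "__main__":
    code = tool_exit_code(["ninja", "--version"])
    print("ninja not found" if code is None else f"ninja exit code: {code}")
```

An exit code of 0 matches the `echo $?` result above; None or a non-zero code suggests reinstalling ninja before attempting the build.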

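Ninja is a build system similar to Make; its build-file syntax is simpler and more concise than a Makefile's.

Installing flash-attn directly with pip is not recommended; a source install is preferable because the process is easier to control. The direct install, which requires patience, is: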

pip install flash-attn --no-build-isolation

# log

Building wheels for collected packages: flash-attn

Building wheel for flash-attn (setup.py) ... |

Check the Python, CUDA, and PyTorch versions:

python --version # Python 3.9.19

nvidia-smi # CUDA Version: 12.0

python

import torch

print(torch.__version__) # 2.4.0+cu121

print(torch.cuda.is_available())

exit()
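The PyTorch version string carries the CUDA version the wheel was built against, which is worth reading off before compiling a CUDA extension. The sketch below parses that string; `parse_torch_version` is an illustrative name, and it assumes the usual `cuXYZ` suffix convention in which the last digit is the minor version.

```python
import re


def parse_torch_version(version):
    """Split a string like '2.4.0+cu121' into (release, cuda_version).

    cuda_version is None when the local part is not of the form 'cu<digits>'
    (for example a CPU-only build).
    """
    release, _, local = version.partition("+")
    # Assumption: the final digit is the CUDA minor version (cu121 -> 12.1).
    match = re.fullmatch(r"cu(\d+)(\d)", local)
    cuda = f"{match.group(1)}.{match.group(2)}" if match else None
    return release, cuda


if __name__ == "__main__":
    print(parse_torch_version("2.4.0+cu121"))
```

For the environment above this yields release 2.4.0 built against CUDA 12.1.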

Installing directly from source is recommended:

git clone git@github.com:Dao-AILab/flash-attention.git

cd flash-attention

python setup.py install

The full build consists of 85 steps, so be patient:

Using envvar MAX_JOBS (64) as the number of workers...

[1/85] c++ -MMD -MF ...

# ...

Using miniconda3/envs/torch-llm/lib/python3.9/site-packages

Finished processing dependencies for flash-attn==2.6.3
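Because the build is long, it can be useful to track progress from a saved log. The following sketch extracts the latest `[done/total]` counter from log lines shaped like the ones above; `build_progress` is an illustrative name, and the line format is assumed to match this log.

```python
import re

# Matches progress prefixes such as "[1/85]" at the start of a line.
_STEP = re.compile(r"^\[(\d+)/(\d+)\]")


def build_progress(log_lines):
    """Return (done, total) from the last progress line, or None if there is none."""
    latest = None
    for line in log_lines:
        match = _STEP.match(line)
        if match:
            latest = (int(match.group(1)), int(match.group(2)))
    return latest


if __name__ == "__main__":
    sample = [
        "Using envvar MAX_JOBS (64) as the number of workers...",
        "[1/85] c++ -MMD -MF ...",
    ]
    print(build_progress(sample))
```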