Adding NMS to the end of YOLOv8 and similar models, following RT-DETR (RT-DETR: DETRs Beat YOLOs on Real-time Object Detection), and converting RT-DETR to ONNX and TensorRT

Steps:

1. The NVIDIA driver, CUDA, and cuDNN versions depend on one another, so make sure they match (https://blog.csdn.net/qq_41246375/article/details/133926272?spm=1001.2101.3001.6650.7&utm_medium=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7ERate-7-133926272-blog-120109778.235%5Ev43%5Epc_blog_bottom_relevance_base9&depth_1-utm_source=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7ERate-7-133926272-blog-120109778.235%5Ev43%5Epc_blog_bottom_relevance_base9&utm_relevant_index=13)

2. Download TensorRT, paying attention to which CUDA version it is built for (https://developer.nvidia.com/nvidia-tensorrt-8x-download)

My own approach is to download the .deb package directly:

sudo dpkg -i nv-tensorrt-local-repo-ubuntu2004-8.6.1-cuda-11.8_1.0-1_amd64.deb

sudo cp /var/nv-tensorrt-local-repo-ubuntu2004-8.6.1-cuda-11.8/nv-tensorrt-local-D7BB1B18-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get install tensorrt

dpkg-query -W tensorrt  # check the installation result

The above is only a brief outline of installing CUDA, cuDNN, and TensorRT. For the details of CUDA and cuDNN, see this video.

Video link: https://www.bilibili.com/video/BV1KL411S7hw/?spm_id_from=333.880.my_history.page.click
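To double-check that the installed versions line up, the output of `nvcc --version` and `dpkg-query -W tensorrt` can be parsed from Python. This is a minimal sketch, not part of the original setup: the helper names are hypothetical, and the regular expressions assume the usual output formats (a `release 11.8` fragment from nvcc and a `tensorrt<TAB>8.6.1...` line from dpkg-query).

```python
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Return the CUDA release (e.g. '11.8') found in `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", text)
    return match.group(1) if match else None


def parse_tensorrt_version(text):
    """Return 'major.minor.patch' from `dpkg-query -W tensorrt` output, or None."""
    match = re.search(r"tensorrt\s+(\d+\.\d+\.\d+)", text)
    return match.group(1) if match else None


def run(cmd):
    """Run a command and return its stdout, or '' if the tool is missing or fails."""
    if shutil.which(cmd[0]) is None:
        return ""
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stdout if result.returncode == 0 else ""


if __name__ == "__main__":
    # Prints None for a tool that is not installed or not on PATH.
    print("CUDA toolkit:", parse_nvcc_release(run(["nvcc", "--version"])))
    print("TensorRT:", parse_tensorrt_version(run(["dpkg-query", "-W", "tensorrt"])))
```

If the CUDA release printed here differs from the `cuda-11.8` in the TensorRT package name, the two were probably installed for different CUDA versions.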

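3. The main part: converting the model to ONNX and then to TensorRT

3.1 Converting YOLOv8 to ONNX

Although ultralytics (https://github.com/ultralytics/ultralytics?tab=readme-ov-file) documents ONNX export in detail, its export does not add NMS.

Following the original RT-DETR paper (RT-DETR: DETRs Beat YOLOs on Real-time Object Detection), I describe how to add NMS.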
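For readers who want a reminder of what the NMS step does, here is a minimal pure-Python sketch of greedy, single-class NMS. It is only an illustration, not the implementation inserted by utils.py; the parameter names and defaults mirror the command-line arguments of yolov8_onnx.py (`score_threshold`, `iou_threshold`, `max_output_boxes`), and the sample boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_threshold=0.001, iou_threshold=0.7, max_output_boxes=300):
    """Greedy single-class NMS; returns indices of kept boxes, best score first."""
    # Drop low-score boxes, then visit the rest from highest to lowest score.
    order = sorted(
        (i for i, s in enumerate(scores) if s > score_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    for i in order:
        if len(keep) >= max_output_boxes:
            break
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Made-up example: the second box overlaps the first with IoU 0.81 and is suppressed.
boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)
```

Because YOLOv8 needs this step after the network while RT-DETR does not, a fair speed comparison has to include it in the exported model.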

Copy the two files yolov8_onnx.py and utils.py from (https://github.com/lyuwenyu/RT-DETR/tree/main/benchmark) into the official YOLOv8 repository [https://github.com/ultralytics/ultralytics?tab=readme-ov-file].

The official yolov8_onnx.py source:

'''by lyuwenyu
'''

import torch
import torchvision

import numpy as np
import onnxruntime as ort

from utils import yolo_insert_nms


class YOLOv8(torch.nn.Module):
    def __init__(self, name) -> None:
        super().__init__()
        from ultralytics import YOLO
        # Load a model
        # build a new model from scratch
        # model = YOLO(f'{name}.yaml')

        # load a pretrained model (recommended for training)
        model = YOLO(f'{name}.pt')
        self.model = model.model

    def forward(self, x):
        '''https://github.com/ultralytics/ultralytics/blob/main/ultralytics/nn/tasks.py#L216
        '''
        pred: torch.Tensor = self.model(x)[0] # n 84 8400,
        pred = pred.permute(0, 2, 1)
        boxes, scores = pred.split([4, 80], dim=-1)
        boxes = torchvision.ops.box_convert(boxes, in_fmt='cxcywh', out_fmt='xyxy')

        return boxes, scores


def export_onnx(name='yolov8n'):
    '''export onnx
    '''
    m = YOLOv8(name)

    x = torch.rand(1, 3, 640, 640)
    dynamic_axes = {
        'image': {0: '-1'}
    }
    torch.onnx.export(m, x, f'{name}.onnx',
                      input_names=['image'],
                      output_names=['boxes', 'scores'],
                      opset_version=13,
                      dynamic_axes=dynamic_axes)

    data = np.random.rand(1, 3, 640, 640).astype(np.float32)
    sess = ort.InferenceSession(f'{name}.onnx')
    _ = sess.run(output_names=None, input_feed={'image': data})


if __name__ == '__main__':

    import argparse
    parser = argparse.ArgumentParser()
    parser.add_argument('--name', type=str, default='YOLOv8x')
    parser.add_argument('--score_threshold', type=float, default=0.001)
    parser.add_argument('--iou_threshold', type=float, default=0.7)
    parser.add_argument('--max_output_boxes', type=int, default=300)
    args = parser.parse_args()

    export_onnx(name=args.name)

    yolo_insert_nms(path=f'{args.name}.onnx',
                    score_threshold=args.score_threshold,
                    iou_threshold=args.iou_threshold,
                    max_output_boxes=args.max_output_boxes, )
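In `forward`, the call to `torchvision.ops.box_convert` turns each box from center format (cx, cy, w, h) into corner format (x1, y1, x2, y2) before NMS is applied. The arithmetic for a single box can be sketched in plain Python as follows; the function name is hypothetical and the numbers are only an illustration.

```python
def cxcywh_to_xyxy(box):
    """Convert one box from (center x, center y, width, height) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# A 4x6 box centered at (10, 10) spans x from 8 to 12 and y from 7 to 13.
corners = cxcywh_to_xyxy((10, 10, 4, 6))
print(corners)
```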

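However, when I ran it on my own dataset, it raised an error. According to the message, the failure occurs when split divides the tensor pred into two parts: split_with_sizes expects split_sizes to sum exactly to the tensor's size along the given dimension, but the last dimension of the input is 5 while the split_sizes passed are [4, 80]. The last dimension holds 4 box coordinates plus one score per class, so a model trained on a single class gives 5, not the 84 of an 80-class COCO model.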
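The size constraint can be illustrated without PyTorch. The sketch below uses hypothetical helper names and works on plain Python lists: it derives the split sizes from the length of the last dimension and reproduces the same kind of failure when the sizes do not add up.

```python
def split_sizes(last_dim, box_dims=4):
    """Return [box_dims, num_classes] for predictions whose last dimension is last_dim."""
    num_classes = last_dim - box_dims
    if num_classes < 1:
        raise ValueError(f"last dimension {last_dim} leaves no room for class scores")
    return [box_dims, num_classes]


def split_row(row, sizes):
    """Split one prediction row into consecutive chunks; sizes must sum to len(row)."""
    if sum(sizes) != len(row):
        raise ValueError(f"sizes {sizes} must sum exactly to {len(row)}")
    chunks, start = [], 0
    for size in sizes:
        chunks.append(row[start:start + size])
        start += size
    return chunks


row = [320.0, 240.0, 50.0, 80.0, 0.9]           # cx, cy, w, h, one class score
box, scores = split_row(row, split_sizes(len(row)))
print(split_sizes(84), split_sizes(5), box, scores)
```

With a one-class model the sizes come out as [4, 1], while the COCO default of [4, 80] only fits a last dimension of 84.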

I corrected the source as follows:

'''by lyuwenyu
'''

import torch
import torchvision

import numpy as np
import onnxruntime as ort

from utils import yolo_insert_nms


class YOLOv8(torch.nn.Module):
    def __init__(self, name) -> None:
        super().__init__()
        from ultralytics import YOLO
        model = YOLO(f'{name}.pt')
        self.model = model.model

    def forward(self, x):
        '''https://github.com/ultralytics/ultralytics/blob/main/ultralytics/nn/tasks.py#L216
        '''
        # Shape is (n, 4 + num_classes, 8400): 84 channels for COCO, 5 for my one-class dataset
        pred: torch.Tensor = self.model(x)[0]
        pred = pred.permute(0, 2, 1)
        print(f'Pred shape: {pred.shape}')  # print the shape of pred
        # Split sizes must match pred's last dimension: 4 box values + 1 class score
        boxes, scores = pred.split([4, 1], dim=-1)
        boxes = torchvision.ops.box_convert(boxes, in_fmt='cxcywh', out_fmt='xyxy')

        return boxes, scores


def export_onnx(name='yolov8n'):
    '''export onnx
    '''
    m = YOLOv8(name)

    x = torch.rand(1, 3, 640, 640)
    dynamic_axes = {
        'image': {0: '-1'}
    }
    torch.onnx.export(m, x, f'{name}.onnx',
                      input_names=['image'],
                      output_names=['boxes', 'scores'],
                      opset_version=13,
                      dynamic_axes=dynamic_axes)

    data = np.random.rand(1, 3, 640, 640).astype(np.float32)
    sess = ort.InferenceSession(f'{name}.onnx')
    _ = sess.run(output_names=None, input_feed={'image': data})


if __name__ == '__main__':

    import argparse
    parser = argparse.ArgumentParser()
    parser.add_argument('--name', type=str, default=r'YOLOv8x')
    parser.add_argument('--score_threshold', type=float, default=0.001)
    parser.add_argument('--iou_threshold', type=float, default=0.7)
    parser.add_argument('--max_output_boxes', type=int, default=300)
    args = parser.parse_args()

    export_onnx(name=args.name)

    yolo_insert_nms(path=f'{args.name}.onnx',
                    score_threshold=args.score_threshold,
                    iou_threshold=args.iou_threshold,
                    max_output_boxes=args.max_output_boxes, )

With my own data, the original script failed with the following error:

C:\Users\29269\Desktop\RTDETR-20240802\ultralytics\nn\modules\head.py:49: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  elif self.dynamic or self.shape != shape:
C:\Users\29269\Desktop\RTDETR-20240802\ultralytics\utils\tal.py:254: TracerWarning: Iterating over a tensor might cause the trace to be incorrect. Passing a tensor of different shape won't change the number of iterations executed (and might lead to errors or silently give incorrect results).
  for i, stride in enumerate(strides):
Traceback (most recent call last):
  File "C:\Users\29269\Desktop\RTDETR-20240802\yolov8_onnx.py", line 66, in <module>
    export_onnx(name=args.name)
  File "C:\Users\29269\Desktop\RTDETR-20240802\yolov8_onnx.py", line 45, in export_onnx
    torch.onnx.export(m, x, f'{name}.onnx',
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\onnx\utils.py", line 506, in export
    _export(
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\onnx\utils.py", line 1548, in _export
    graph, params_dict, torch_out = _model_to_graph(
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\onnx\utils.py", line 1113, in _model_to_graph
    graph, params, torch_out, module = _create_jit_graph(model, args)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\onnx\utils.py", line 989, in _create_jit_graph
    graph, torch_out = _trace_and_get_graph_from_model(model, args)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\onnx\utils.py", line 893, in _trace_and_get_graph_from_model
    trace_graph, torch_out, inputs_states = torch.jit._get_trace_graph(
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\jit\_trace.py", line 1268, in _get_trace_graph
============== Diagnostic Run torch.onnx.export version 2.0.1+cpu ==============
verbose: False, log level: Level.ERROR
======================= 0 NONE 0 NOTE 0 WARNING 0 ERROR ========================
    outs = ONNXTracedModule(f, strict, _force_outplace, return_inputs, _return_inputs_states)(*args, **kwargs)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\jit\_trace.py", line 127, in forward
    graph, out = torch._C._create_graph_by_tracing(
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\jit\_trace.py", line 118, in wrapper
    outs.append(self.inner(*trace_inputs))
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\nn\modules\module.py", line 1488, in _slow_forward
    result = self.forward(*input, **kwargs)
  File "C:\Users\29269\Desktop\RTDETR-20240802\yolov8_onnx.py", line 29, in forward
    boxes, scores = pred.split([4, 80], dim=-1)
  File "D:\software\Anaconda3\envs\yolov8\lib\site-packages\torch\_tensor.py", line 803, in split
    return torch._VF.split_with_sizes(self, split_size, dim)
RuntimeError: split_with_sizes expects split_sizes to sum exactly to 5 (input tensor's size at dimension -1), but got split_sizes=[4, 80]

That was the error output.

Running the command below generates two files, YOLOv8x.onnx and yolo_w_nms.onnx. yolo_w_nms.onnx has NMS added; YOLOv8x.onnx does not.

python yolov8_onnx.py

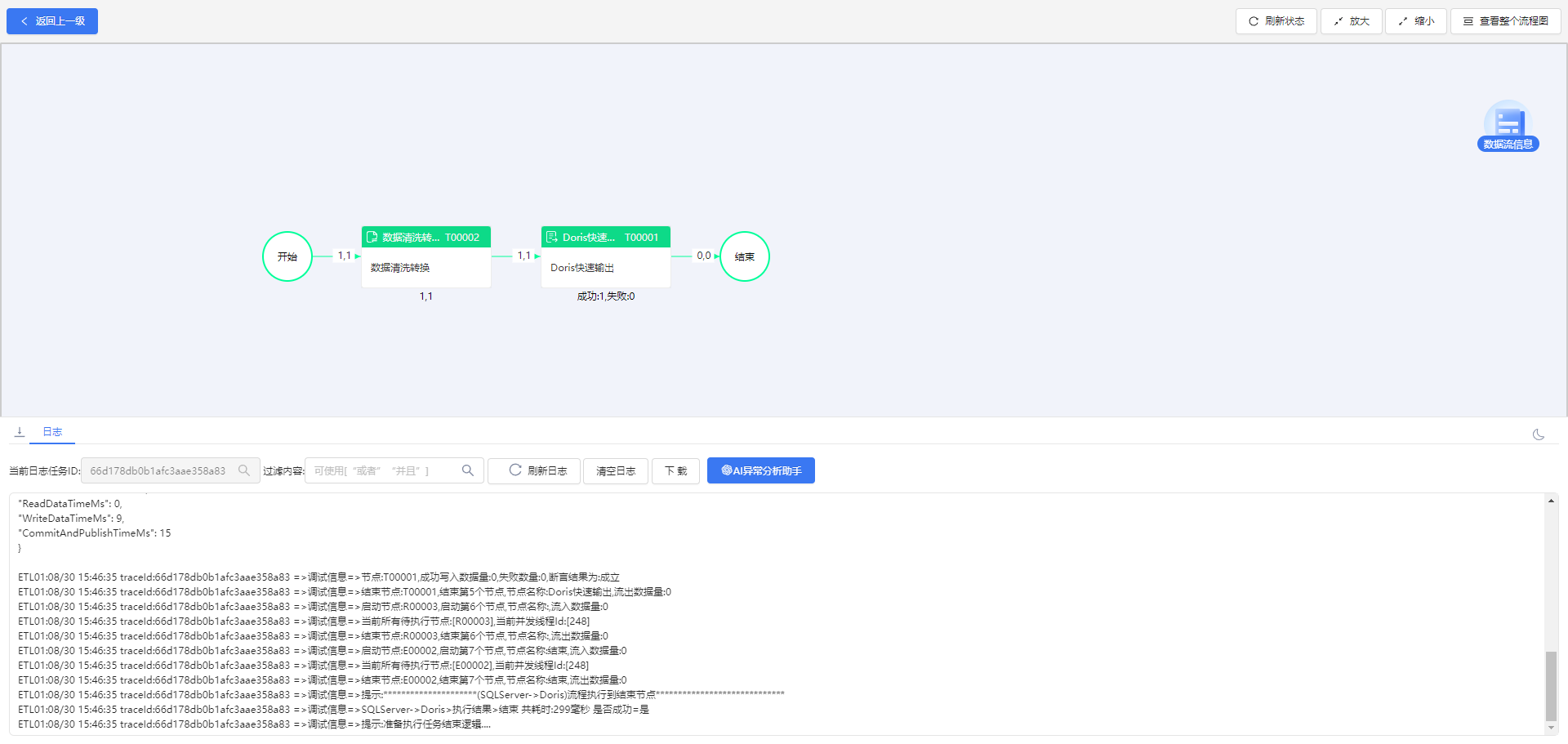

To check whether NMS was added, open the model in Netron. The figure below shows the graph with NMS added.
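As an alternative to inspecting the graph visually in Netron, the node types can be listed with the `onnx` package. This is a rough sketch under assumptions: the helper names are hypothetical, and the set of op names is a guess at what an NMS node may be called (the standard ONNX `NonMaxSuppression` op, or the TensorRT `EfficientNMS_TRT` plugin op), so adjust it to whatever utils.py actually inserts.

```python
# Op types that are assumed here to indicate an NMS node in the graph.
NMS_OP_TYPES = {"NonMaxSuppression", "EfficientNMS_TRT"}


def load_op_types(path):
    """Return the op type of every node in an ONNX file."""
    import onnx  # imported lazily so find_nms_ops works without onnx installed

    model = onnx.load(path)
    return [node.op_type for node in model.graph.node]


def find_nms_ops(op_types):
    """Return the sorted NMS-like op types present in a list of op types."""
    return sorted(set(op_types) & NMS_OP_TYPES)


if __name__ == "__main__":
    import sys

    # Usage: python check_nms.py YOLOv8x.onnx yolo_w_nms.onnx
    for path in sys.argv[1:]:
        print(path, find_nms_ops(load_op_types(path)) or "no NMS node found")
```

Run against both exported files, this should report an NMS node only for yolo_w_nms.onnx.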

Converting to TensorRT:

trtexec --onnx=./yolo_w_nms.onnx --saveEngine=yolo_w_nms.engine --buildOnly --fp16
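When several models have to be converted, the same command can be assembled and run from Python. The following is a small sketch with hypothetical helper names; it uses only the trtexec flags shown above and assumes `trtexec` is on PATH (on some installs it sits under /usr/src/tensorrt/bin and has to be added manually).

```python
import shutil
import subprocess


def trtexec_build_cmd(onnx_path, engine_path, fp16=True):
    """Assemble the trtexec command line that builds an engine from an ONNX file."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}", "--buildOnly"]
    if fp16:
        cmd.append("--fp16")
    return cmd


def build_engine(onnx_path, engine_path, fp16=True):
    """Run trtexec; raises if trtexec is missing or the build fails."""
    if shutil.which("trtexec") is None:
        raise FileNotFoundError("trtexec not found on PATH")
    subprocess.run(trtexec_build_cmd(onnx_path, engine_path, fp16), check=True)


# Only prints the command; call build_engine(...) to actually run it.
print(" ".join(trtexec_build_cmd("./yolo_w_nms.onnx", "yolo_w_nms.engine")))
```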

Measuring FPS:

trtexec --loadEngine=yolo_w_nms.engine --fp16 --avgRuns=100

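Parameters:

- --loadEngine=/path/to/your_model.engine: the TensorRT engine file to load.

- --fp16: run inference in FP16 precision (if your model supports it).

- --avgRuns=100: average over 100 runs to get a stable FPS value.

After running trtexec --loadEngine=yolo_w_nms.engine --fp16 --avgRuns=100, find the mean of GPU Compute Time in the output (in milliseconds) and divide 1000 by it to get the FPS.

As shown in the figure below, the mean is 2.00663 ms, so FPS = 1000 / 2.00663 ≈ 498.35.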
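The same calculation can be scripted. In this sketch the function names are hypothetical, and the regular expression assumes that the trtexec summary contains a `GPU Compute Time: ... mean = <value> ms` fragment; the sample line is abbreviated to the one value quoted above.

```python
import re


def fps_from_mean_ms(mean_ms):
    """Convert a mean latency in milliseconds into frames per second."""
    if mean_ms <= 0:
        raise ValueError("mean latency must be positive")
    return 1000.0 / mean_ms


def parse_gpu_compute_mean_ms(log_text):
    """Extract the mean GPU Compute Time (ms) from trtexec output, or None."""
    match = re.search(r"GPU Compute Time:.*?mean = ([0-9.]+) ms", log_text)
    return float(match.group(1)) if match else None


# Abbreviated sample line; a real trtexec summary also reports min, max, median, etc.
sample = "GPU Compute Time: mean = 2.00663 ms"
mean_ms = parse_gpu_compute_mean_ms(sample)
fps = fps_from_mean_ms(mean_ms)
print(f"{fps:.2f} FPS")
```

Since this figure is derived from GPU compute time only, it does not include host-side preprocessing or data transfer.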

4. For RT-DETR models, you can still export to ONNX with the official ultralytics package, then convert to TensorRT and measure FPS as described above.

import warnings
warnings.filterwarnings('ignore')
from ultralytics import RTDETR

# pip packages: onnx onnxsim onnxruntime onnxruntime-gpu
# Official documentation of the export arguments: https://docs.ultralytics.com/modes/export/#usage-examples

if __name__ == '__main__':
    model = RTDETR(r"R18best.pt")
    model.export(format='onnx', simplify=True)

References:

- https://blog.csdn.net/qq_41246375/article/details/133926272?spm=1001.2101.3001.6650.7&utm_medium=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7ERate-7-133926272-blog-120109778.235%5Ev43%5Epc_blog_bottom_relevance_base9&depth_1-utm_source=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7ERate-7-133926272-blog-120109778.235%5Ev43%5Epc_blog_bottom_relevance_base9&utm_relevant_index=13

- https://blog.csdn.net/java1314777/article/details/134629701

- https://blog.csdn.net/qq_41246375/article/details/115597025