神经网络 - 非线性激活

使用到的pytorch网站:

- Padding Layers(对输入图像进行填充的各种方式)

几乎用不到,nn.ZeroPad2d(在输入tensor数据类型周围用0填充)

nn.ConstantPad2d(用常数填充)

在 Conv2d 中可以实现,故不常用 - Non-linear Activations (weighted sum, nonlinearity)

- Non-linear Activations (other)

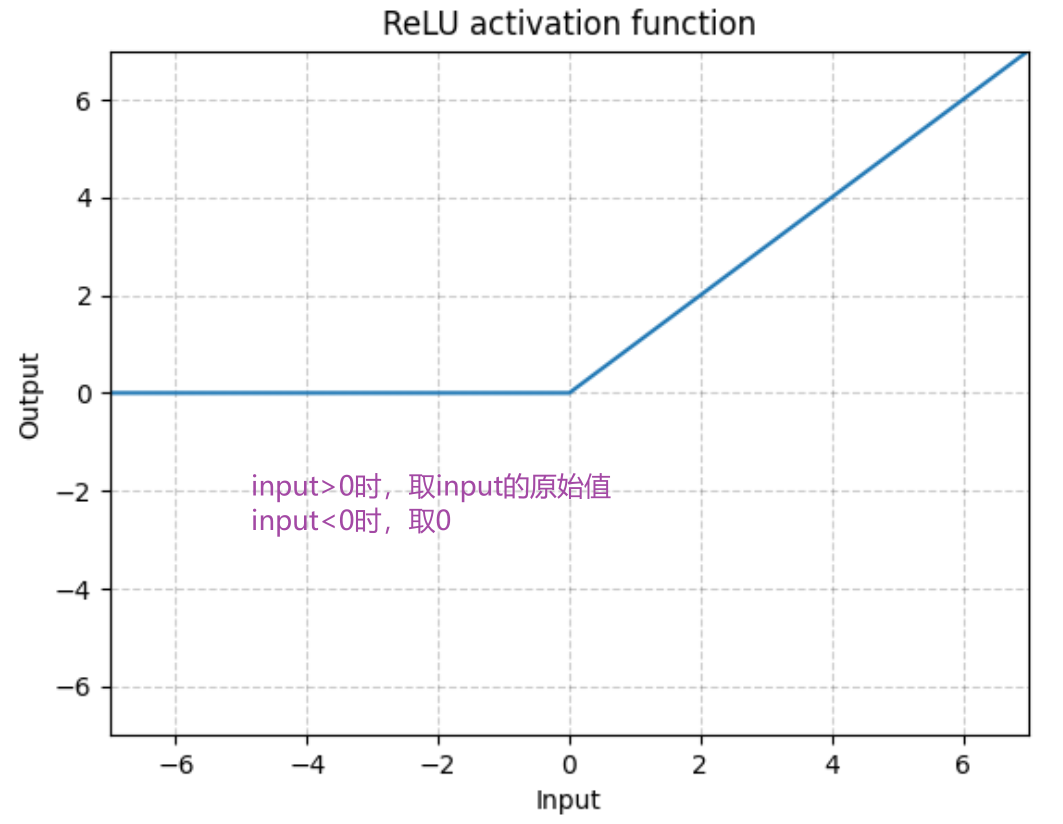

1.最常见的非线性激活:RELU

ReLU — PyTorch 1.10 documentation

输入:(N,*) N 为 batch_size,*不限制可以是任意

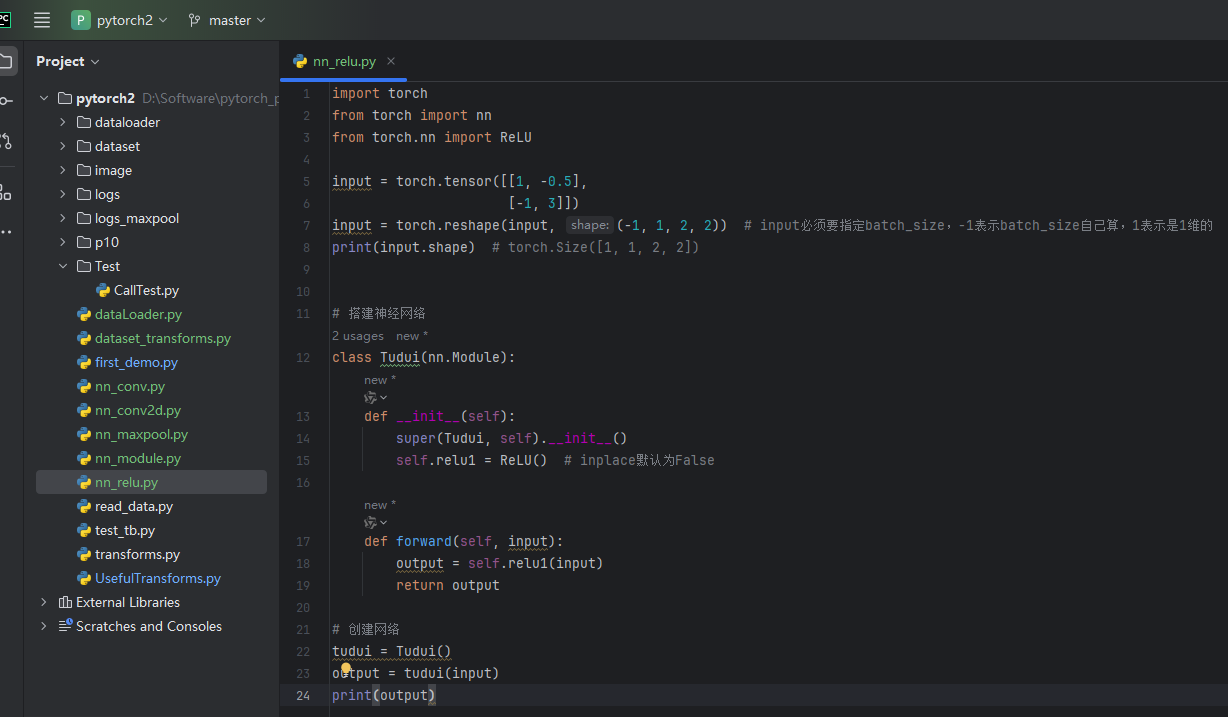

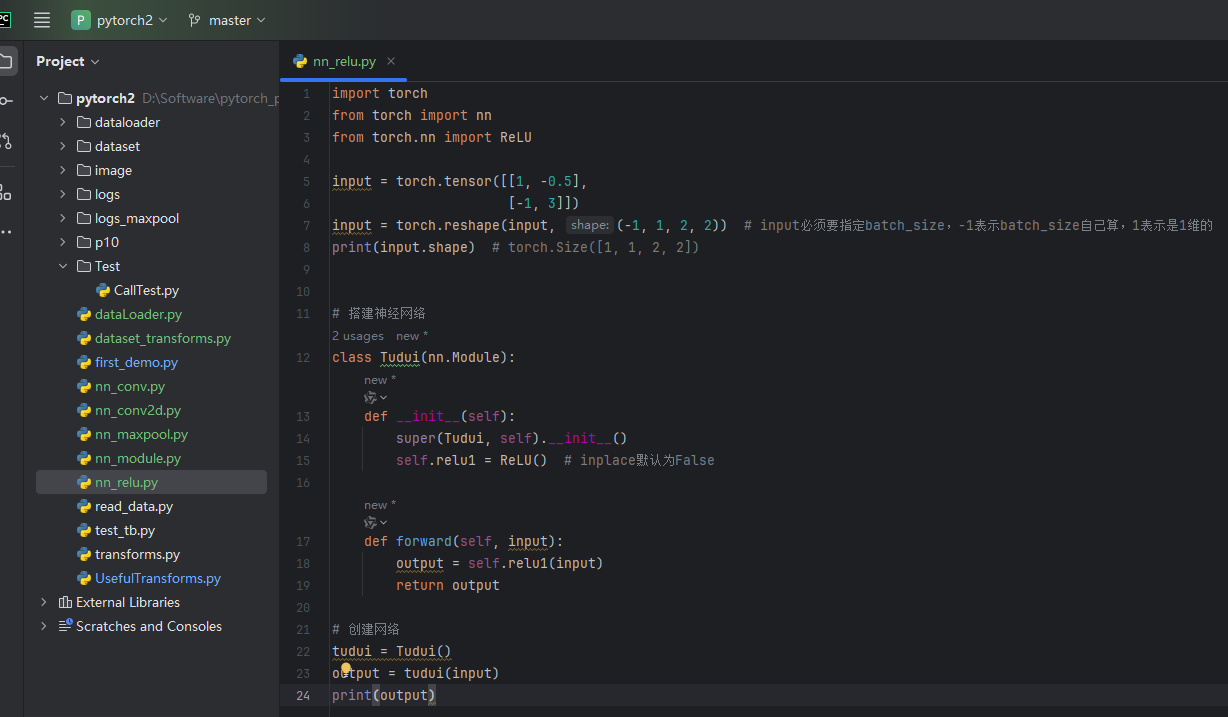

代码举例:RELU

import torch

from torch import nn

from torch.nn import ReLU

input = torch.tensor([[1,-0.5],

[-1,3]])

input = torch.reshape(input,(-1,1,2,2)) #input必须要指定batch_size,-1表示batch_size自己算,1表示是1维的

print(input.shape) #torch.Size([1, 1, 2, 2])

# 搭建神经网络

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.relu1 = ReLU() #inplace默认为False

def forward(self,input):

output = self.relu1(input)

return output

# 创建网络

tudui = Tudui()

output = tudui(input)

print(output)

运行结果:

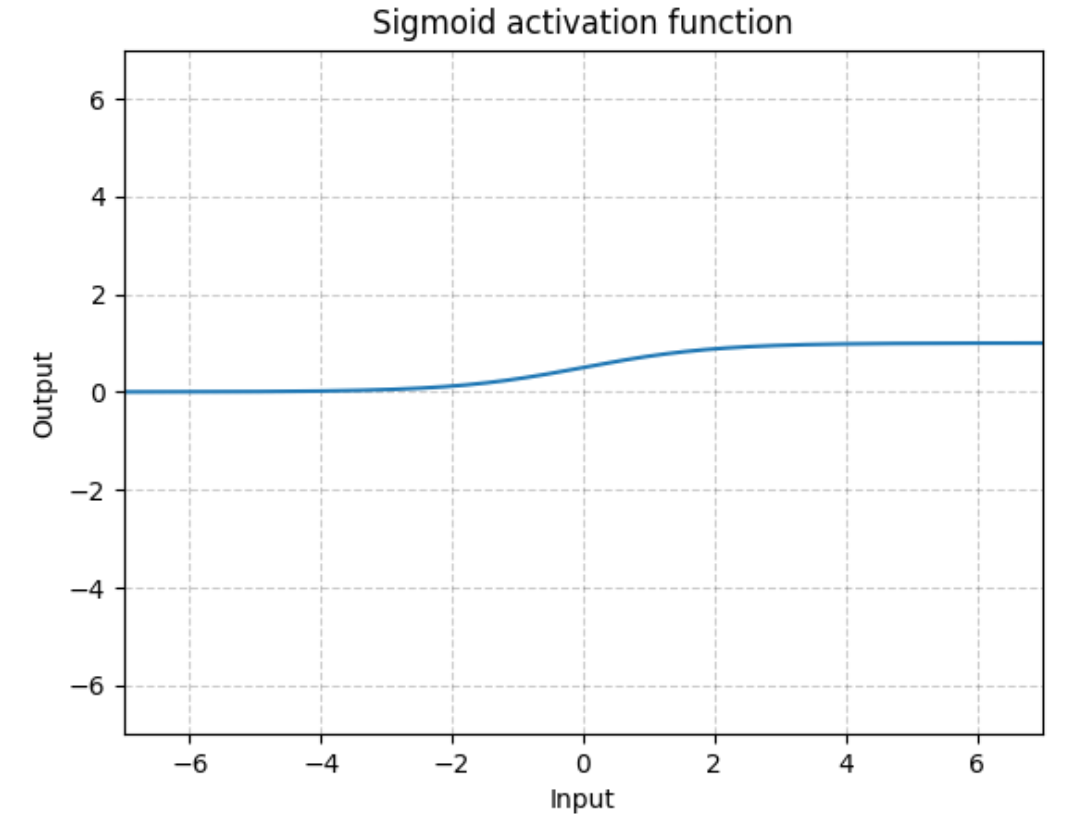

2.Sigmoid

Sigmoid — PyTorch 1.10 documentation

输入:(N,*) N 为 batch_size,*不限制

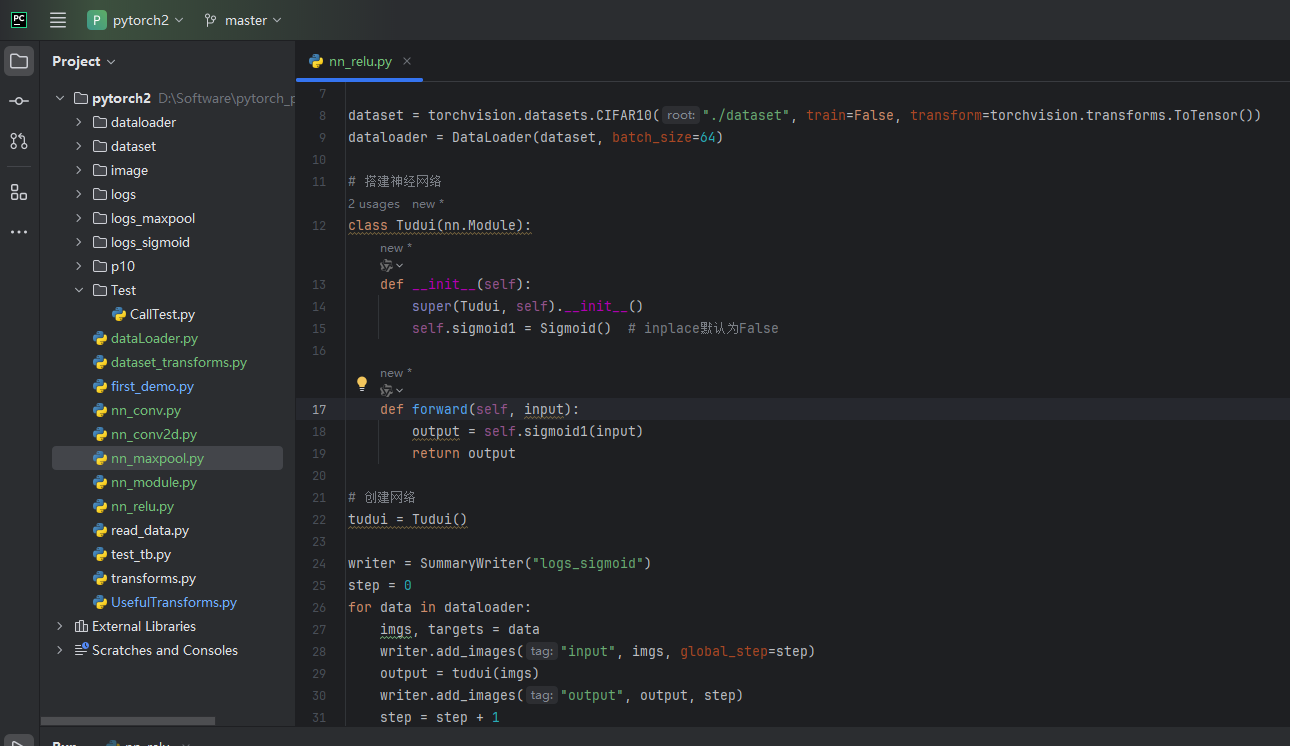

代码举例:Sigmoid(数据集CIFAR10)

import torch

import torchvision.datasets

from torch import nn

from torch.nn import Sigmoid

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data",train=False,download=True,transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset,batch_size=64)

# 搭建神经网络

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.sigmoid1 = Sigmoid() #inplace默认为False

def forward(self,input):

output = self.sigmoid1(input)

return output

# 创建网络

tudui = Tudui()

writer = SummaryWriter("../logs_sigmoid")

step = 0

for data in dataloader:

imgs,targets = data

writer.add_images("input",imgs,global_step=step)

output = tudui(imgs)

writer.add_images("output",output,step)

step = step + 1

writer.close()

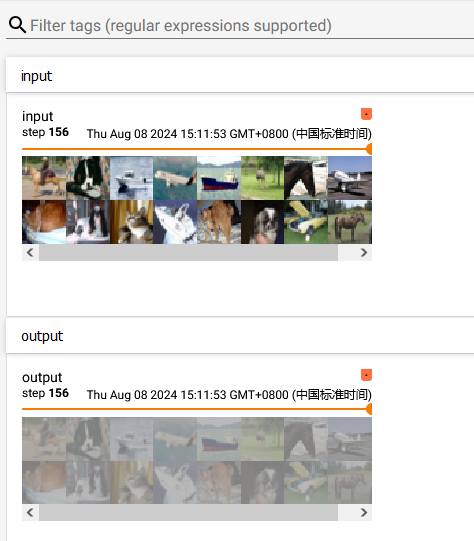

运行后在 terminal 里输入:

tensorboard --logdir=logs_sigmoid

打开网址:

关于inplace

tensorboard --logdir=logs_sigmoid

打开网址:

[外链图片转存中...(img-9qQDN4dS-1724861715512)]

### 关于inplace

![[羊城杯 2024] Crypto](https://i-blog.csdnimg.cn/direct/4c7a8ff361bb451981699fcc3287f43d.png)