NLP-transformer Learning: (5) Model Training and Prediction

Building on NLP-transformer Learning (2, 3, 4), this post goes one step further with transformers and tries out one of its models, BERT.

Contents

- 1 Supplement to the previous post: what is BERT

- 2 Calling and using the RBT model

- 2.1 Data preparation

- 2.2 Creating the dataset and reading the CSV

- 2.3 Setting up training

- 2.4 Model prediction

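1 Supplement to the previous post: what is BERT

BERT: Bidirectional Encoder Representations from Transformers

BERT is a pre-trained language representation model. Rather than pre-training with a traditional unidirectional language model, or with a shallow concatenation of two unidirectional language models, it uses a masked language model (MLM) objective, which lets it learn deep bidirectional language representations. The BERT paper reported new state-of-the-art results on 11 NLP (Natural Language Processing) tasks.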
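To make the MLM idea concrete, here is a minimal sketch of the masking step in plain Python. It is a simplification and not BERT's actual pre-processing code: the function name `mask_tokens` and the parameters `mask_prob` and `seed` are made up for illustration, and every selected token is replaced by `[MASK]`, whereas the BERT paper selects about 15% of the tokens and replaces only 80% of those with `[MASK]` (10% get a random token, 10% stay unchanged).

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide each token with probability mask_prob (illustrative helper).

    Returns the masked sequence and a list of labels: the original token
    at masked positions, None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)  # the model must predict this token
            labels.append(tok)
        else:
            masked.append(tok)         # visible context, no loss here
            labels.append(None)
    return masked, labels


# Character-level tokens, as a rough stand-in for a Chinese BERT tokenizer.
tokens = list("这家酒店的早餐很好吃")
masked, labels = mask_tokens(tokens, mask_prob=0.3)
print(masked)
print(labels)
```

Because the model sees the tokens on both sides of each `[MASK]`, predicting the hidden token forces it to use left and right context together, which is where the "bidirectional" in the name comes from.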

Recommended reading:

https://blog.csdn.net/SMith7412/article/details/88755019

Put simply, BERT is built by stacking Transformer encoder layers.

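2 Calling and using the RBT model

2.1 Data preparation

Download the dataset from: https://github.com/SophonPlus/ChineseNlpCorpus

The code below uses the file ChnSentiCorp_htl_all.csv from it.

2.2 Creating the dataset and reading the CSV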

from transformers import AutoTokenizer, AutoModelForSequenceClassification  # tokenizer and model for text classification
import pandas as pd  # used to read the CSV data
import torch
from torch.utils.data import Dataset, DataLoader
# from torch.utils.data import random_split
# My torch version is 1.12.0; fractional lengths in random_split need at least 1.13,
# so I added my own random_split to utils.py.
import utils as ut


class TxtDataset(Dataset):
    # the `-> None` return annotation is only there for readability
    def __init__(self) -> None:
        super().__init__()
        # read the data
        self.data = pd.read_csv("./01-Getting_Started/04-model/ChnSentiCorp_htl_all.csv")
        # drop rows with missing values
        self.data = self.data.dropna()

    def __getitem__(self, index):  # return one (review, label) pair
        return self.data.iloc[index]["review"], self.data.iloc[index]["label"]

    def __len__(self):  # return the size of the dataset
        return len(self.data)


if __name__ == "__main__":
    txtdataset = TxtDataset()
    for i in range(5):
        # print the sentence with its label; 1 means a positive review
        print(txtdataset[i])
    print("len txtdataset:" + str(len(txtdataset)))

    # The list gives proportions: here the training set is 0.9 and the
    # validation set is 0.1. The proportions must sum to 1.0.
    trainset, validset = ut.random_split(txtdataset, [.9, .1], generator=torch.Generator().manual_seed(42))
    print("len trainset:" + str(len(trainset)))
    print("len validset:" + str(len(validset)))
    for i in range(10):
        print(trainset[i])

    tokenizer = AutoTokenizer.from_pretrained("./rbt3")

运行结果:

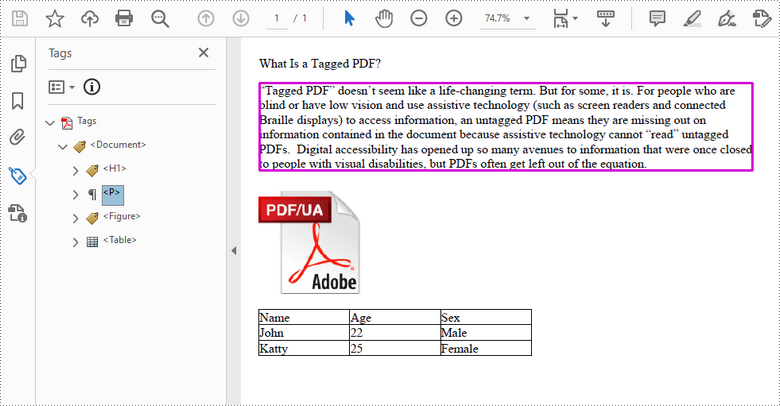

可以看到数据被正常读取,length可以读到,并且数据集中有结尾为1的postive评价和为0的negtive评价
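The article does not show the `random_split` added to utils.py, so the following is only a sketch of how a fractional split could work, not the author's implementation. The helper names `fractions_to_lengths` and `split_indices` are hypothetical. Each fraction is floored to a count, and the leftover items are handed out one at a time, starting with the first split.

```python
import math
import random


def fractions_to_lengths(total, fractions):
    """Turn proportions such as [0.9, 0.1] into integer split sizes."""
    if not math.isclose(sum(fractions), 1.0):
        raise ValueError("fractions must sum to 1")
    lengths = [math.floor(total * f) for f in fractions]
    # Flooring can leave a few items unassigned; distribute them round-robin.
    remainder = total - sum(lengths)
    for i in range(remainder):
        lengths[i % len(lengths)] += 1
    return lengths


def split_indices(total, fractions, seed=42):
    """Shuffle the indices 0..total-1 and cut them into consecutive splits."""
    lengths = fractions_to_lengths(total, fractions)
    indices = list(range(total))
    random.Random(seed).shuffle(indices)
    parts, start = [], 0
    for n in lengths:
        parts.append(indices[start:start + n])
        start += n
    return parts


train_idx, valid_idx = split_indices(10, [0.9, 0.1])
print(len(train_idx), len(valid_idx))
```

With a torch dataset, each index list could then be wrapped in `torch.utils.data.Subset(dataset, indices)` to get objects that behave like the `trainset` and `validset` used here.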

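2.3 Setting up training

Below is the training code. Note that this is transfer learning (fine-tuning a pre-trained model), so the learning rate can be small.

At the start of every training step, the optimizer's gradients must be zeroed.

Combining the earlier sections with the dataset built above, we can write the following.

Code: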

from transformers import AutoTokenizer, AutoModelForSequenceClassification  # tokenizer and model for text classification
import pandas as pd  # used to read the CSV data
import torch
from torch.optim import Adam  # Adam optimizer used for training
from torch.utils.data import Dataset, DataLoader
# from torch.utils.data import random_split
# My torch version is 1.12.0; fractional lengths in random_split need at least 1.13,
# so I added my own random_split to utils.py.
import utils as ut


class TxtDataset(Dataset):
    # the `-> None` return annotation is only there for readability
    def __init__(self) -> None:
        super().__init__()
        # read the data
        self.data = pd.read_csv("/home/mex/Desktop/learn_transformer/mexwayne_transformers_NLP/01-Getting_Started/04-model/ChnSentiCorp_htl_all.csv")
        # drop rows with missing values
        self.data = self.data.dropna()

    def __getitem__(self, index):  # return one (review, label) pair
        return self.data.iloc[index]["review"], self.data.iloc[index]["label"]

    def __len__(self):  # return the size of the dataset
        return len(self.data)


# During training the DataLoader groups samples into batches, and each batch
# has to be packed into tensors, so we need a collate function that gathers
# the texts and the labels.
def collate_fun(batch):
    texts, labels = [], []
    for item in batch:
        texts.append(item[0])
        labels.append(item[1])
    # texts longer than max_length are truncated and shorter ones are padded,
    # so every sample in the batch has the same length
    inputs = tokenizer(texts, max_length=128, padding="max_length", truncation=True, return_tensors="pt")
    # when "labels" is part of the inputs, the model computes the loss itself
    inputs["labels"] = torch.tensor(labels)
    return inputs


def evaluate():
    model.eval()
    acc_num = 0
    with torch.inference_mode():
        for batch in validloader:
            if torch.cuda.is_available():
                batch = {k: v.cuda() for k, v in batch.items()}
            output = model(**batch)  # run the validation batch through the model
            pred = torch.argmax(output.logits, dim=-1)
            # the comparison yields booleans; convert to float before summing
            acc_num += (pred.long() == batch["labels"].long()).float().sum()
    return acc_num / len(validset)  # accuracy = correct predictions / validation set size


def train(epoch=3, log_step=100):
    global_step = 0
    for ep in range(epoch):
        model.train()  # switch the model to training mode
        for batch in trainloader:
            if torch.cuda.is_available():
                batch = {k: v.cuda() for k, v in batch.items()}
            optimizer.zero_grad()  # clear the gradients left over from the previous step
            output = model(**batch)  # ** unpacks the dict into keyword arguments
            print(output)  # debug output: prints the full model output on every step
            output.loss.backward()
            optimizer.step()  # update the model parameters
            if global_step % log_step == 0:
                print(f"ep: {ep}, global_step: {global_step}, loss: {output.loss.item()}")
            global_step += 1  # count the training steps
        acc = evaluate()  # check the accuracy after each epoch
        print(f"ep: {ep}, acc: {acc}")


if __name__ == "__main__":
    txtdataset = TxtDataset()
    for i in range(5):
        # print the sentence with its label; 1 means a positive review
        print(txtdataset[i])
    print("len txtdataset:" + str(len(txtdataset)))

    # The list gives proportions: here the training set is 0.9 and the
    # validation set is 0.1. The proportions must sum to 1.0.
    trainset, validset = ut.random_split(txtdataset, [.9, .1], generator=torch.Generator().manual_seed(42))
    print("len trainset:" + str(len(trainset)))
    print("len validset:" + str(len(validset)))
    for i in range(10):
        print(trainset[i])

    tokenizer = AutoTokenizer.from_pretrained("hfl/rbt3")
    trainloader = DataLoader(trainset, batch_size=32, shuffle=True, collate_fn=collate_fun)
    validloader = DataLoader(validset, batch_size=64, shuffle=False, collate_fn=collate_fun)
    print(type(trainloader))

    model = AutoModelForSequenceClassification.from_pretrained("hfl/rbt3")
    if torch.cuda.is_available():  # move the model to the GPU when one is available
        model = model.cuda()
    optimizer = Adam(model.parameters(), lr=2e-5)
    train()

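Because this is transfer learning, only a few epochs are needed (3 here), and with a batch size of 32 each epoch takes relatively few steps, so training finishes quickly.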
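The `max_length=128, padding="max_length", truncation=True` arguments in `collate_fun` make every sample the same length so a batch can be stacked into one tensor. The idea can be sketched in plain Python as follows. This is a simplified illustration, not the tokenizer's real implementation: `pad_or_truncate` is a hypothetical helper, the token ids are made up, `pad_id=0` is an assumption, and a real BERT tokenizer also keeps the closing special token when it truncates.

```python
def pad_or_truncate(token_ids, max_length, pad_id=0):
    """Cut a sequence to max_length or right-pad it, and build its attention mask."""
    ids = token_ids[:max_length]        # truncation: drop everything past max_length
    attention_mask = [1] * len(ids)     # 1 marks a real token
    pad_count = max_length - len(ids)
    ids = ids + [pad_id] * pad_count    # padding: fill up to max_length
    attention_mask = attention_mask + [0] * pad_count  # 0 marks padding
    return ids, attention_mask


# Two made-up sequences of different lengths, forced to length 5.
batch = [[101, 2769, 102], [101, 2769, 4263, 872, 102, 7, 8]]
for ids in batch:
    print(pad_or_truncate(ids, max_length=5))
```

The attention mask is what lets the model ignore the padded positions, which is why the tokenizer returns it alongside `input_ids`.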

2.4 Model prediction

The model trained in 2.3 can now be used for prediction.

Code:

# (earlier code omitted)
from torch.optim import Adam  # Adam optimizer used for training

model = AutoModelForSequenceClassification.from_pretrained("hfl/rbt3")
if torch.cuda.is_available():  # move the model to the GPU when one is available
    model = model.cuda()
optimizer = Adam(model.parameters(), lr=2e-5)
train()

sen = "我觉得这家羊肉泡馍馆子不错,做的羊肉很好吃!"
id2_label = {0: "差评!", 1: "好评!"}  # 0: negative review, 1: positive review
model.eval()
with torch.inference_mode():
    inputs = tokenizer(sen, return_tensors="pt")
    if torch.cuda.is_available():  # keep the inputs on the same device as the model
        inputs = {k: v.cuda() for k, v in inputs.items()}
    logits = model(**inputs).logits
    pred = torch.argmax(logits, dim=-1)
    print(f"输入:{sen}\n模型预测结果:{id2_label.get(pred.item())}")

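A separate note on logits:

(1) Raw, unactivated scores: logits are one of the outputs of the model's forward pass and represent the model's score for each class. They have not been passed through an activation function such as softmax or sigmoid, so they can be any real number (positive, negative, or zero).

(2) The core output in classification: in a classification task the logits are the key output, because applying the activation function turns them into a probability distribution. The largest probability normally corresponds to the model's final predicted class.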
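The relationship between logits and probabilities can be sketched in a few lines of plain Python. The logit values below are made up for illustration and are not output from the trained model. Since softmax preserves the ordering of the scores, taking the argmax of the logits directly (as the prediction code does) picks the same class as taking the argmax of the probabilities.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [-1.2, 2.3]  # made-up scores for class 0 (negative) and class 1 (positive)
probs = softmax(logits)

pred_from_logits = max(range(len(logits)), key=lambda i: logits[i])
pred_from_probs = max(range(len(probs)), key=lambda i: probs[i])

print(probs)
print(pred_from_logits, pred_from_probs)
```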

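This gives the prediction result:

I used a rather niche dish, yangrou paomo (羊肉泡馍), together with the slang keyword 牛逼 ("awesome") as the review, and the result matches what I expected.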