>- **🍨 This post is a study log from the [🔗365-Day Deep Learning Training Camp]**

>- **🍖 Original author: [K同学啊]**

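This week's tasks:

1. Write the PyTorch equivalent of the TensorFlow code in this post.

2. Understand the residual structure.

3. (Open exploration) Can the residual module be integrated into C3?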

I. Theoretical Background

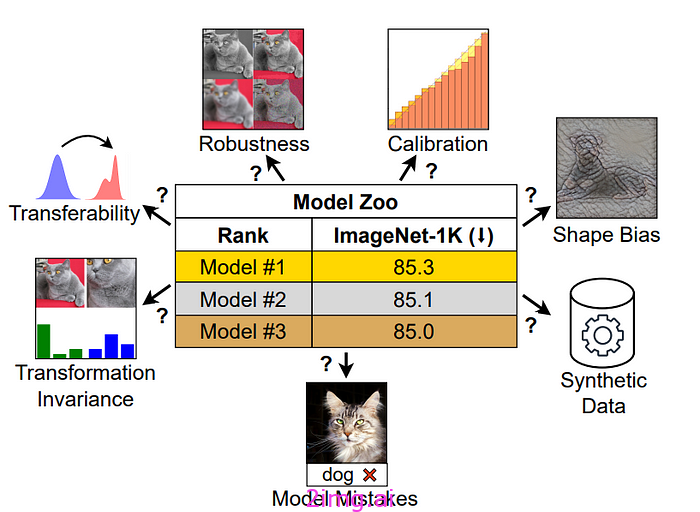

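1. Evolution of CNN architectures

The illustration above, borrowed from the web, lists several milestone convolutional neural networks. A network's performance can be judged along two axes: recognition accuracy and complexity (amount of computation). From the figure we can see:

(1) AlexNet, proposed by Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton, is the classic CNN that won the 2012 ImageNet image classification competition. It was one of the first deep CNNs to succeed on large-scale image classification, and it used the ReLU activation, local response normalization, data augmentation and Dropout.

(2) VGG-16 and VGG-19 are built by stacking many convolution and pooling layers. Their performance was good for the time, but their computational cost is very large. VGG-16 divides the deep network into several groups; each group stacks a different number of Conv-ReLU layers and ends with a MaxPool layer that shrinks the feature map.

(3) GoogLeNet (i.e., Inception V1) introduced parallel convolution branches, each using a different kernel size. It was followed by V2, V3, V4 and others, forming a family of architectures.

(4) ResNet, with variants such as V1, V2 and ResNeXt, introduced identity mapping and modular blocks with shortcut paths, and can easily be scaled from 18 to 1001 layers (the numbers in ResNet-18, ResNet-34, ResNet-50 and ResNet-101 give the number of weighted layers).

(5) DenseNet is a convolutional network characterized by reuse of earlier features, direct connections between layers, and a recursively extendable structure.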

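2. Origin of residual networks

The deep residual network (ResNet) was proposed by Kaiming He et al. in 2015. Because it is both simple and practical, much subsequent research has been built on ResNet-50 or ResNet-101.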

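ResNet mainly addresses the "degradation" problem that appears as deep convolutional networks get deeper. In an ordinary CNN, the first problem caused by increasing depth is vanishing/exploding gradients, which was largely solved after Ioffe and Szegedy proposed Batch Normalization (BN). BN normalizes the output of each layer, so gradients keep a stable magnitude as they propagate backward layer by layer instead of becoming too small or too large. However, the authors found that even with BN, deeper networks still did not converge easily, and they identified a second problem, accuracy degradation: once depth reaches a certain level, accuracy saturates and then drops rapidly. This drop is caused neither by vanishing gradients nor by overfitting; rather, the network is so complex that unconstrained, "free-range" training alone struggles to reach the desired error rate.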
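
To make "BN normalizes each layer's output" concrete, here is a minimal plain-Python sketch of what BN does to one channel during training: subtract the batch mean, divide by the batch standard deviation, then apply a learned scale (gamma) and shift (beta). The default `epsilon=1e-3` is an assumption mirroring Keras' `BatchNormalization` default; the function name is illustrative. At inference Keras uses moving averages of the mean and variance instead, and those moving statistics are the non-trainable parameters reported later by `model.summary()`.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, epsilon=1e-3):
    """Training-mode batch normalization of one channel across a batch.

    gamma and beta are the learned scale and shift; epsilon avoids division by zero.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + epsilon) + beta for v in values]


# One channel's activations for a batch of 4 images...
activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
# ...come out with mean ~0 and variance ~1, whatever their original scale.
print([round(v, 3) for v in normalized])
```

Multiplying the inputs by 10 gives almost the same output, which is why activations stay in a stable range however the previous layer scales them.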

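Accuracy degradation is not caused by the expressive power of the architecture itself, but by current training methods falling short. Widely used optimizers, whether SGD, RMSProp or Adam, cannot reach the theoretically optimal solution once the network becomes very deep.

The authors argue that, with a suitable architecture, a deeper network should perform no worse than a shallower one. The argument is simple: take a network A and append a few layers to form a new network B. If the added layers merely perform an identity mapping on A's output, i.e., A's output passes through the new layers unchanged and becomes B's output, then A and B have the same error rate. Hence the deepened network need not be worse than the original one.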

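Figure 1: Residual block

Kaiming He proposed a residual structure to realize this identity mapping (Figure 1): besides the normal output of the convolutional layers, the block has a branch that connects the input directly to the output, and the two are added element-wise to form the final output. As a formula, $H(x) = F(x) + x$, where $x$ is the input, $F(x)$ is the output of the convolutional branch, and $H(x)$ is the output of the whole block. If all parameters in the $F(x)$ branch are 0, $H(x)$ is exactly an identity mapping.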
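
A toy plain-Python sketch of that last claim, assuming dense layers stand in for the convolutions and vectors are plain lists (both simplifications for brevity; all names are illustrative). It follows the block's structure, F(x) = W2·ReLU(W1·x) followed by ReLU(F(x) + x). With every weight set to 0 the residual unit passes x through unchanged (x is non-negative here, as it would be coming out of the previous unit's ReLU), whereas the same two layers without the shortcut output all zeros.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, t) for t in v]


def linear(weights, v):
    """Dense layer without bias; weights is a list of rows."""
    return [sum(w * t for w, t in zip(row, v)) for row in weights]


def plain_unit(x, w1, w2):
    """Two stacked layers with no shortcut: ReLU(W2 · ReLU(W1 · x))."""
    return relu(linear(w2, relu(linear(w1, x))))


def residual_unit(x, w1, w2):
    """Same two layers plus the shortcut: ReLU(F(x) + x)."""
    f = linear(w2, relu(linear(w1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])


x = [0.5, 1.0, 2.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_unit(x, zeros, zeros))  # identity: F(x) = 0, so x comes back
print(plain_unit(x, zeros, zeros))     # the plain stack loses x entirely
```

The plain stack would have to learn the identity through its weights; the residual unit gets it for free when its weights are zero.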

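The residual structure builds the identity mapping in explicitly, so the block can converge toward an identity mapping and the error rate does not get worse as depth grows. This reflects a general rule for designing complex networks: if a structure can reach the desired result with simple hand-set parameters, it can easily converge to that result through training.

Figure 2: Two kinds of residual units

The unit on the left of Figure 2 is ResNet's two-layer residual unit. It contains two 3x3 convolutions with the same number of output channels and is only used in shallower ResNets (ResNet-18/34); deeper networks mainly use the three-layer residual unit. The three-layer unit, also called the bottleneck, first reduces the channel dimension with a 1x1 convolution, then applies a 3x3 convolution, and finally restores the original dimension with another 1x1 convolution. When the input and output dimensions differ, the input is passed through a linear projection to match dimensions before the addition. The three-layer unit needs fewer parameters than stacking 3x3 convolutions at full width, which makes deeper models practical; combining these units yields classic architectures such as ResNet-50 and ResNet-101.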
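
Parameter counts make the bottleneck's savings concrete. The plain-Python sketch below (helper names are hypothetical) first compares, without biases, a two-layer unit on 64 channels with a bottleneck unit on 256 channels, the pairing shown in Fig. 5 of the paper, plus a two-layer unit operating directly on 256 channels. It then applies the same counting to the full ResNet-50 layout built in section V, assuming every Conv2D has a bias (the Keras default used there) and each BatchNormalization layer holds 4 values per channel.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights of a k x k convolution, plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def bn_params(c):
    """BatchNormalization: gamma and beta (trainable), moving mean and variance (not)."""
    return 4 * c


# Bias-free comparison of the two unit types.
basic_64 = 2 * conv_params(3, 64, 64, bias=False)
bottleneck_256 = (conv_params(1, 256, 64, bias=False)
                  + conv_params(3, 64, 64, bias=False)
                  + conv_params(1, 64, 256, bias=False))
basic_256 = 2 * conv_params(3, 256, 256, bias=False)
print(basic_64, bottleneck_256, basic_256)  # 73728 69632 1179648


def bottleneck_params(c_in, filters, projection):
    """One bottleneck block with biases and BN; projection=True adds the 1x1 shortcut."""
    f1, f2, f3 = filters
    total = (conv_params(1, c_in, f1) + bn_params(f1)
             + conv_params(3, f1, f2) + bn_params(f2)
             + conv_params(1, f2, f3) + bn_params(f3))
    if projection:
        total += conv_params(1, c_in, f3) + bn_params(f3)
    return total


def resnet50_params(classes=1000):
    total = conv_params(7, 3, 64) + bn_params(64)  # stem: conv1 + bn_conv1
    c_in = 64
    # (filters, number of blocks) per stage; the first block of each stage is a conv block
    for filters, n_blocks in [((64, 64, 256), 3), ((128, 128, 512), 4),
                              ((256, 256, 1024), 6), ((512, 512, 2048), 3)]:
        for i in range(n_blocks):
            total += bottleneck_params(c_in, filters, projection=(i == 0))
            c_in = filters[2]
    return total + c_in * classes + classes  # fc1000 Dense layer


print(resnet50_params())  # 25636712
```

The bottleneck on 256 channels costs slightly less than the basic unit on 64 channels and about 17 times less than a basic unit on 256 channels, and the full count matches the `Total params: 25636712` line printed by `model.summary()` in section V.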

For more detail, see Kaiming He's paper: Deep Residual Learning for Image Recognition.pdf

II. Preliminary Work

🚀 My environment:

- Language: Python 3.11.7

- Editor: Jupyter Notebook

- Deep learning framework: TensorFlow 2.13.0

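1. Set up the CPU (a GPU can also be used)

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    # Allocate GPU memory on demand instead of reserving it all at start-up
    tf.config.experimental.set_memory_growth(gpus[0], True)
    # Make only the first GPU visible to TensorFlow
    tf.config.set_visible_devices([gpus[0]], "GPU")
```

2. Import the data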

```python
import pathlib

data_dir = r"D:\THE MNIST DATABASE\J系列\J1\bird_photos"
data_dir = pathlib.Path(data_dir)
```

3. View the data

```python
image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)
```

Output:

Total number of images: 565

III. Data Preprocessing

| Folder | Number of images |
|---|---|
| Bananaquit | 166 |
| Black Skimmer | 111 |
| Black Throated Bushtiti | 122 |
| Cockatoo | 166 |
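
With `validation_split=0.2` and `batch_size=8` (the settings used when loading below), these counts determine how many files land in each subset and how many steps each epoch takes. A small sketch, assuming Keras gives the validation subset `int(validation_split * n_files)` files, a rule that reproduces the 452/113 split and the `57/57` steps shown in the logs later; `split_sizes` is an illustrative name.

```python
import math

# Images per class folder, from the table above
counts = {"Bananaquit": 166, "Black Skimmer": 111,
          "Black Throated Bushtiti": 122, "Cockatoo": 166}


def split_sizes(n_files, validation_split):
    """Assumed rule: the validation subset gets int(validation_split * n_files) files."""
    n_val = int(validation_split * n_files)
    return n_files - n_val, n_val


total = sum(counts.values())
n_train, n_val = split_sizes(total, validation_split=0.2)
batch_size = 8
print(total, n_train, n_val)                                            # 565 452 113
print(math.ceil(n_train / batch_size), math.ceil(n_val / batch_size))  # 57 15
```

The last training batch holds only 4 images (452 = 56 × 8 + 4), which is why an epoch has 57 steps rather than 56.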

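1. Load the data

Load the training set:

```python
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(224, 224),
    batch_size=8,
)
```

At this point an error I had never run into before appeared:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xfb in position 27: invalid start byte

After a long search I found that it was caused by the Chinese characters in the path. Looking back at my earlier projects, none of them had used a non-English path. Of the fixes I found online, the simplest was to rename the path to English, which saves time and effort. So I went back, changed the path to D:\THE MNIST DATABASE\J-series\J1\bird_photos, and re-ran the cell, which printed:

Found 565 files belonging to 4 classes.

Using 452 files for training.

Load the validation set: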

```python
val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(224, 224),
    batch_size=8,
)
```

Output:

Found 565 files belonging to 4 classes.

Using 113 files for validation.

View the class names:

```python
class_names = train_ds.class_names
print(class_names)
```

Output:

['Bananaquit', 'Black Skimmer', 'Black Throated Bushtiti', 'Cockatoo']

2. Visualize the data

```python
import matplotlib.pyplot as plt

plt.rcParams['font.sans-serif'] = ['SimHei']  # render Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # render minus signs correctly

plt.figure(figsize=(10, 5))
plt.suptitle("OreoCC的案例")  # "OreoCC's example"

# Show the first 8 images of one training batch with their class names
for images, labels in train_ds.take(1):
    for i in range(8):
        ax = plt.subplot(2, 4, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]])
        plt.axis("off")
```

Output:

View a single image:

```python
plt.imshow(images[1].numpy().astype("uint8"))
```

Output:

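3. Check the data again

```python
for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break
```

Output:

(8, 224, 224, 3)

(8,)

image_batch is a tensor of shape (8, 224, 224, 3): a batch of 8 images, each 224×224×3 (the last dimension is the RGB color channels).

labels_batch is a tensor of shape (8,) holding the labels of those 8 images.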

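4. Configure the dataset

shuffle(): shuffles the data; see https://zhuanlan.zhihu.com/p/42417456 for a detailed explanation of this function.

prefetch(): prefetches data to speed up execution; it is explained in my earlier posts.

cache(): caches the dataset in memory to speed up execution.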
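
`shuffle(1000)` in the code below works with a fixed-size buffer: tf.data fills a buffer with `buffer_size` elements, emits a randomly chosen one, and refills the slot with the next input element. A plain-Python sketch of that idea (`buffered_shuffle` is an illustrative name, not a TensorFlow API). Note that `train_ds` is already batched by `image_dataset_from_directory`, so its elements are the 57 batches, not individual images; since 1000 exceeds 57, the buffer holds everything and the batch order is fully shuffled each epoch.

```python
import random


def buffered_shuffle(items, buffer_size, seed=None):
    """Sketch of a fixed-size shuffle buffer, the idea behind Dataset.shuffle()."""
    rng = random.Random(seed)
    buffer, out = [], []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Buffer full: emit a randomly chosen element to make room
            out.append(buffer.pop(rng.randrange(len(buffer))))
    while buffer:  # input exhausted: drain the rest in random order
        out.append(buffer.pop(rng.randrange(len(buffer))))
    return out


batches = list(range(57))  # stand-ins for the 57 training batches
shuffled = buffered_shuffle(batches, buffer_size=1000, seed=0)
print(shuffled[:10])
```

With `buffer_size=1` the output keeps the input order, which shows why a buffer much smaller than the dataset gives only a local shuffle.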

```python
AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)
```

IV. Introduction to Residual Networks (ResNet)

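1. What problem do residual networks solve?

Residual networks were designed to solve the network degradation problem that arises when a neural network has too many hidden layers. Degradation means that as the number of hidden layers grows, the network's accuracy saturates and then drops sharply, and this drop is not caused by overfitting.

Extension: the "two dark clouds" over deep neural networks

- Vanishing/exploding gradients

  Simply put, when the network is too deep, training has difficulty converging. This problem can be effectively controlled with normalized initialization and intermediate normalization layers.

- Network degradation

  As depth increases, the network's performance first improves until it saturates, then drops rapidly; this degradation is not caused by overfitting.

Vanishing gradients: as depth increases, gradients shrink sharply. During backpropagation the gradient gradually decays toward 0, so the parameters can no longer learn. The last few layers can still change, but the early layers (the hidden neurons close to the input) stay nearly fixed, so the network effectively degenerates into a shallow model and cannot learn well. This is largely caused by saturating activation functions.

Exploding gradients: the opposite of vanishing gradients. During backpropagation the gradients become too large for the model to converge, and the hidden neurons near the input layer receive extremely large updates.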
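
The vanishing effect, and why an identity shortcut counters it, shows up in a scalar toy: backpropagation multiplies one local derivative per layer. For a plain layer h = f(x) the factor is f'(x); for a residual layer h = x + f(x) it is 1 + f'(x). The sketch assumes each local derivative is a small random number in [-0.1, 0.1] (a stand-in for small weights), so it illustrates the mechanism rather than measuring any real network; `backward_gain` is an illustrative name.

```python
import random


def backward_gain(local_grads, residual):
    """Product of per-layer derivatives along the backward path (scalar toy).

    Plain layer:    h = f(x)      -> dh/dx = f'(x)
    Residual layer: h = x + f(x)  -> dh/dx = 1 + f'(x)
    """
    gain = 1.0
    for g in local_grads:
        gain *= (1.0 + g) if residual else g
    return gain


rng = random.Random(0)
# Assumption: every layer's local derivative is small, as with small initial weights
local_grads = [rng.uniform(-0.1, 0.1) for _ in range(50)]

plain = backward_gain(local_grads, residual=False)
res = backward_gain(local_grads, residual=True)
print(f"50 plain layers:    |gradient factor| = {abs(plain):.3e}")
print(f"50 residual layers: gradient factor   = {res:.3f}")
```

The plain product is at most 0.1^50 in magnitude, while every residual factor lies in [0.9, 1.1], so the residual product cannot fall below 0.9^50 ≈ 0.005: the "+1" from the shortcut keeps the gradient from collapsing.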

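Network degradation: as layers are added, training accuracy gradually saturates; adding still more layers makes training accuracy drop, and this drop is not caused by overfitting. In fact, the layers appended to the deeper model are not identity mappings but nonlinear layers, so the degradation problem also suggests that approximating an identity mapping with several nonlinear layers can be difficult. See the figure below:

As the figure above shows, the 56-layer network performs worse than the 20-layer network on both the training set and the test set. (Note: this is not overfitting, which means performing well on the training set but poorly on the test set.) This shows that simply increasing depth can make a network degrade, possibly losing the features learned by earlier layers.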

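2. Paper analysis

You can dig deeper with Kaiming He's well-known paper:

Deep Residual Learning for Image Recognition

Problems addressed by the paper:

- Deep networks are hard to train: as depth increases, training becomes harder; gradients may vanish or explode, making the network difficult to converge.

- Accuracy saturates and then degrades with depth: even when existing techniques (such as normalized initialization and intermediate normalization layers) solve the gradient problem, accuracy saturates and then degrades rapidly as depth increases. This is called the degradation problem.

The paper's solution:

- Residual learning framework: the paper proposes a new framework, residual learning, which simplifies training by having layers learn residual functions with reference to the layer inputs instead of learning unreferenced functions.

- Shortcut connections: the network includes shortcut connections that skip one or more layers; their outputs are added to the outputs of the stacked layers, which lets the network learn the residual between input and output.

- Residual blocks: each block consists of two or more convolutional layers plus a shortcut connection, so that if the optimal function is close to an identity mapping, the solver can find it more easily.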

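Denote the desired underlying mapping as $H(x)$.

Let the stacked nonlinear layers fit another mapping, $F(x) := H(x) - x$.

The original mapping is then recast as $F(x) + x$.

The $+\,x$ term, an identity mapping, is implemented with shortcut connections.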
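
Written out, the block and its backward pass look as follows. The gradient line is a standard chain-rule step (not quoted from the paper) and ignores the ReLU applied after the addition; the second line is the projection form the paper uses when the dimensions of $x$ and $F(x)$ differ.

$$
H(x) = F(x) + x,
\qquad
\frac{\partial \mathcal{L}}{\partial x}
= \frac{\partial \mathcal{L}}{\partial H}\left(\frac{\partial F}{\partial x} + I\right)
$$

$$
H(x) = F(x) + W_s\,x \qquad \text{(projection shortcut)}
$$

The identity term $I$ means the upstream gradient $\partial \mathcal{L}/\partial H$ reaches $x$ directly even when $\partial F/\partial x$ is tiny. $W_s$ corresponds to the 1x1 shortcut convolution in `conv_block` in section V.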

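Results:

- Easier optimization: residual networks (ResNets) are easier to optimize than plain networks and gain accuracy as depth increases.

- Higher accuracy: on ImageNet, residual networks reach a depth of 152 layers while still having lower complexity than VGG nets, and they won first place in the ILSVRC 2015 classification task.

- Success on multiple tasks: beyond image classification, residual networks gave a 28% relative improvement on COCO object detection and won first place in several tracks of the ILSVRC & COCO 2015 competitions.

Through extensive experiments, the paper validates the residual learning framework and demonstrates the strong performance of deep residual networks on multiple visual recognition tasks.

If you don't have time to read the original paper, this article is a good summary: ResNet:《Deep Residual Learning for Image Recognition》 - 知乎 (zhihu.com)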

3. Introduction to ResNet-50

ResNet-50 is built from two basic blocks, called the Conv Block and the Identity Block.

Conv Block structure:
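
The key difference between the two blocks is the shape: an Identity Block must return exactly its input shape so that `layers.add()` can sum the branch and the shortcut, while a Conv Block can downsample and widen, so its shortcut needs its own 1x1 convolution. A plain-Python sketch tracking the spatial size through the ResNet-50 built in section V; `out_size` is a hypothetical helper using the standard formula floor((n + 2p - k) / s) + 1 for "valid" layers, and the 3x3 convolutions are skipped because they use `padding='same'` with stride 1.

```python
def out_size(size, kernel, stride, pad=0):
    """Side length after a 'valid' conv/pool layer with explicit zero padding."""
    return (size + 2 * pad - kernel) // stride + 1


shapes = []
size = out_size(224, 7, 2, pad=3)  # ZeroPadding2D((3, 3)) + 7x7 conv, stride 2
shapes.append(("conv1", size, 64))
size = out_size(size, 3, 2)        # MaxPooling2D((3, 3), strides=(2, 2))
shapes.append(("max_pool", size, 64))

# Only the conv_block opening each stage changes the size: its first 1x1 conv
# and its 1x1 shortcut conv carry the stride (1 for stage 2, 2 afterwards).
for stage, stride, channels in [(2, 1, 256), (3, 2, 512), (4, 2, 1024), (5, 2, 2048)]:
    size = out_size(size, 1, stride)
    shapes.append((f"stage{stage}", size, channels))

size = out_size(size, 7, 7)        # AveragePooling2D((7, 7))
shapes.append(("avg_pool", size, 2048))

for name, s, c in shapes:
    print(f"{name:9s} {s}x{s}x{c}")
```

These sizes (112, 55, 55, 28, 14, 7, 1) match the output shapes in the model summary of section V.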

V. Building the ResNet-50 Model

Following the three figures above, let's build the ResNet-50 model:

```python
from keras import layers
from keras.layers import Input, Activation, BatchNormalization, Flatten
from keras.layers import Dense, Conv2D, MaxPooling2D, ZeroPadding2D, AveragePooling2D
from keras.models import Model


def identity_block(input_tensor, kernel_size, filters, stage, block):
    """Bottleneck block whose shortcut is the unchanged input (shapes must match)."""
    filters1, filters2, filters3 = filters
    name_base = str(stage) + block + '_identity_block_'

    x = Conv2D(filters1, (1, 1), name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    x = layers.add([x, input_tensor], name=name_base + 'add')
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x


# BN layers are used throughout the residual network. Apart from the single
# MaxPooling layer in the stem, MaxPooling is not used to shrink feature maps;
# instead, the first layer of the conv_block opening each stage uses
# strides=(2, 2) to downsample (stage 2 uses strides=(1, 1)).
# Compared with MaxPooling this reduces the number of convolution operations,
# which suits high-resolution inputs.
# At the end of each block, layers.add() implements H(x) = F(x) + x.
def conv_block(input_tensor, kernel_size, filters, stage, block, strides=(2, 2)):
    """Bottleneck block with a 1x1 projection shortcut (can change size and channels)."""
    filters1, filters2, filters3 = filters
    res_name_base = str(stage) + block + '_conv_block_res_'
    name_base = str(stage) + block + '_conv_block_'

    x = Conv2D(filters1, (1, 1), strides=strides, name=name_base + 'conv1')(input_tensor)
    x = BatchNormalization(name=name_base + 'bn1')(x)
    x = Activation('relu', name=name_base + 'relu1')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)
    x = BatchNormalization(name=name_base + 'bn2')(x)
    x = Activation('relu', name=name_base + 'relu2')(x)

    x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)
    x = BatchNormalization(name=name_base + 'bn3')(x)

    # Projection shortcut so the two branches have the same shape before adding
    shortcut = Conv2D(filters3, (1, 1), strides=strides, name=res_name_base + 'conv')(input_tensor)
    shortcut = BatchNormalization(name=res_name_base + 'bn')(shortcut)

    x = layers.add([x, shortcut], name=name_base + 'add')
    x = Activation('relu', name=name_base + 'relu4')(x)
    return x


def ResNet50(input_shape=(224, 224, 3), classes=1000):
    img_input = Input(shape=input_shape)

    # Stem: 7x7 conv with stride 2, then 3x3 max pooling with stride 2
    x = ZeroPadding2D((3, 3))(img_input)
    x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1')(x)
    x = BatchNormalization(name='bn_conv1')(x)
    x = Activation('relu')(x)
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    # Stage 2: 3 blocks, no extra downsampling
    x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1))
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b')
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c')

    # Stage 3: 4 blocks
    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d')

    # Stage 4: 6 blocks
    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f')

    # Stage 5: 3 blocks
    x = conv_block(x, 3, [512, 512, 2048], stage=5, block='a')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='b')
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='c')

    # Classifier head: 7x7 average pooling -> 2048-d vector -> softmax
    x = AveragePooling2D((7, 7), name='avg_pool')(x)
    x = Flatten()(x)
    x = Dense(classes, activation='softmax', name='fc1000')(x)

    model = Model(img_input, x, name='resnet50')

    # Load the pretrained weights. The .h5 file holds ImageNet weights including
    # the 1000-way fc1000 layer, so classes must stay 1000 for the shapes to match.
    model.load_weights(r"D:\THE MNIST DATABASE\J-series\resnet50_weights_tf_dim_ordering_tf_kernels.h5")
    return model


model = ResNet50()
model.summary()
```

Output:

Model: "resnet50"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_8 (InputLayer) [(None, 224, 224, 3)] 0 []

zero_padding2d_7 (ZeroPadd (None, 230, 230, 3) 0 ['input_8[0][0]']

ing2D)

conv1 (Conv2D) (None, 112, 112, 64) 9472 ['zero_padding2d_7[0][0]']

bn_conv1 (BatchNormalizati (None, 112, 112, 64) 256 ['conv1[0][0]']

on)

activation_7 (Activation) (None, 112, 112, 64) 0 ['bn_conv1[0][0]']

max_pooling2d_7 (MaxPoolin (None, 55, 55, 64) 0 ['activation_7[0][0]']

g2D)

2a_conv_block_conv1 (Conv2 (None, 55, 55, 64) 4160 ['max_pooling2d_7[0][0]']

D)

2a_conv_block_bn1 (BatchNo (None, 55, 55, 64) 256 ['2a_conv_block_conv1[0][0]']

rmalization)

2a_conv_block_relu1 (Activ (None, 55, 55, 64) 0 ['2a_conv_block_bn1[0][0]']

ation)

2a_conv_block_conv2 (Conv2 (None, 55, 55, 64) 36928 ['2a_conv_block_relu1[0][0]']

D)

2a_conv_block_bn2 (BatchNo (None, 55, 55, 64) 256 ['2a_conv_block_conv2[0][0]']

rmalization)

2a_conv_block_relu2 (Activ (None, 55, 55, 64) 0 ['2a_conv_block_bn2[0][0]']

ation)

2a_conv_block_conv3 (Conv2 (None, 55, 55, 256) 16640 ['2a_conv_block_relu2[0][0]']

D)

2a_conv_block_res_conv (Co (None, 55, 55, 256) 16640 ['max_pooling2d_7[0][0]']

nv2D)

2a_conv_block_bn3 (BatchNo (None, 55, 55, 256) 1024 ['2a_conv_block_conv3[0][0]']

rmalization)

2a_conv_block_res_bn (Batc (None, 55, 55, 256) 1024 ['2a_conv_block_res_conv[0][0]

hNormalization) ']

2a_conv_block_add (Add) (None, 55, 55, 256) 0 ['2a_conv_block_bn3[0][0]',

'2a_conv_block_res_bn[0][0]']

2a_conv_block_relu4 (Activ (None, 55, 55, 256) 0 ['2a_conv_block_add[0][0]']

ation)

2b_identity_block_conv1 (C (None, 55, 55, 64) 16448 ['2a_conv_block_relu4[0][0]']

onv2D)

2b_identity_block_bn1 (Bat (None, 55, 55, 64) 256 ['2b_identity_block_conv1[0][0

chNormalization) ]']

2b_identity_block_relu1 (A (None, 55, 55, 64) 0 ['2b_identity_block_bn1[0][0]'

ctivation) ]

2b_identity_block_conv2 (C (None, 55, 55, 64) 36928 ['2b_identity_block_relu1[0][0

onv2D) ]']

2b_identity_block_bn2 (Bat (None, 55, 55, 64) 256 ['2b_identity_block_conv2[0][0

chNormalization) ]']

2b_identity_block_relu2 (A (None, 55, 55, 64) 0 ['2b_identity_block_bn2[0][0]'

ctivation) ]

2b_identity_block_conv3 (C (None, 55, 55, 256) 16640 ['2b_identity_block_relu2[0][0

onv2D) ]']

2b_identity_block_bn3 (Bat (None, 55, 55, 256) 1024 ['2b_identity_block_conv3[0][0

chNormalization) ]']

2b_identity_block_add (Add (None, 55, 55, 256) 0 ['2b_identity_block_bn3[0][0]'

) , '2a_conv_block_relu4[0][0]']

2b_identity_block_relu4 (A (None, 55, 55, 256) 0 ['2b_identity_block_add[0][0]'

ctivation) ]

2c_identity_block_conv1 (C (None, 55, 55, 64) 16448 ['2b_identity_block_relu4[0][0

onv2D) ]']

2c_identity_block_bn1 (Bat (None, 55, 55, 64) 256 ['2c_identity_block_conv1[0][0

chNormalization) ]']

2c_identity_block_relu1 (A (None, 55, 55, 64) 0 ['2c_identity_block_bn1[0][0]'

ctivation) ]

2c_identity_block_conv2 (C (None, 55, 55, 64) 36928 ['2c_identity_block_relu1[0][0

onv2D) ]']

2c_identity_block_bn2 (Bat (None, 55, 55, 64) 256 ['2c_identity_block_conv2[0][0

chNormalization) ]']

2c_identity_block_relu2 (A (None, 55, 55, 64) 0 ['2c_identity_block_bn2[0][0]'

ctivation) ]

2c_identity_block_conv3 (C (None, 55, 55, 256) 16640 ['2c_identity_block_relu2[0][0

onv2D) ]']

2c_identity_block_bn3 (Bat (None, 55, 55, 256) 1024 ['2c_identity_block_conv3[0][0

chNormalization) ]']

2c_identity_block_add (Add (None, 55, 55, 256) 0 ['2c_identity_block_bn3[0][0]'

) , '2b_identity_block_relu4[0][

0]']

2c_identity_block_relu4 (A (None, 55, 55, 256) 0 ['2c_identity_block_add[0][0]'

ctivation) ]

3a_conv_block_conv1 (Conv2 (None, 28, 28, 128) 32896 ['2c_identity_block_relu4[0][0

D) ]']

3a_conv_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3a_conv_block_conv1[0][0]']

rmalization)

3a_conv_block_relu1 (Activ (None, 28, 28, 128) 0 ['3a_conv_block_bn1[0][0]']

ation)

3a_conv_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3a_conv_block_relu1[0][0]']

D)

3a_conv_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3a_conv_block_conv2[0][0]']

rmalization)

3a_conv_block_relu2 (Activ (None, 28, 28, 128) 0 ['3a_conv_block_bn2[0][0]']

ation)

3a_conv_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3a_conv_block_relu2[0][0]']

D)

3a_conv_block_res_conv (Co (None, 28, 28, 512) 131584 ['2c_identity_block_relu4[0][0

nv2D) ]']

3a_conv_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3a_conv_block_conv3[0][0]']

rmalization)

3a_conv_block_res_bn (Batc (None, 28, 28, 512) 2048 ['3a_conv_block_res_conv[0][0]

hNormalization) ']

3a_conv_block_add (Add) (None, 28, 28, 512) 0 ['3a_conv_block_bn3[0][0]',

'3a_conv_block_res_bn[0][0]']

3a_conv_block_relu4 (Activ (None, 28, 28, 512) 0 ['3a_conv_block_add[0][0]']

ation)

3b_identity_block_conv1 (C (None, 28, 28, 128) 65664 ['3a_conv_block_relu4[0][0]']

onv2D)

3b_identity_block_bn1 (Bat (None, 28, 28, 128) 512 ['3b_identity_block_conv1[0][0

chNormalization) ]']

3b_identity_block_relu1 (A (None, 28, 28, 128) 0 ['3b_identity_block_bn1[0][0]'

ctivation) ]

3b_identity_block_conv2 (C (None, 28, 28, 128) 147584 ['3b_identity_block_relu1[0][0

onv2D) ]']

3b_identity_block_bn2 (Bat (None, 28, 28, 128) 512 ['3b_identity_block_conv2[0][0

chNormalization) ]']

3b_identity_block_relu2 (A (None, 28, 28, 128) 0 ['3b_identity_block_bn2[0][0]'

ctivation) ]

3b_identity_block_conv3 (C (None, 28, 28, 512) 66048 ['3b_identity_block_relu2[0][0

onv2D) ]']

3b_identity_block_bn3 (Bat (None, 28, 28, 512) 2048 ['3b_identity_block_conv3[0][0

chNormalization) ]']

3b_identity_block_add (Add (None, 28, 28, 512) 0 ['3b_identity_block_bn3[0][0]'

) , '3a_conv_block_relu4[0][0]']

3b_identity_block_relu4 (A (None, 28, 28, 512) 0 ['3b_identity_block_add[0][0]'

ctivation) ]

3c_identity_block_conv1 (C (None, 28, 28, 128) 65664 ['3b_identity_block_relu4[0][0

onv2D) ]']

3c_identity_block_bn1 (Bat (None, 28, 28, 128) 512 ['3c_identity_block_conv1[0][0

chNormalization) ]']

3c_identity_block_relu1 (A (None, 28, 28, 128) 0 ['3c_identity_block_bn1[0][0]'

ctivation) ]

3c_identity_block_conv2 (C (None, 28, 28, 128) 147584 ['3c_identity_block_relu1[0][0

onv2D) ]']

3c_identity_block_bn2 (Bat (None, 28, 28, 128) 512 ['3c_identity_block_conv2[0][0

chNormalization) ]']

3c_identity_block_relu2 (A (None, 28, 28, 128) 0 ['3c_identity_block_bn2[0][0]'

ctivation) ]

3c_identity_block_conv3 (C (None, 28, 28, 512) 66048 ['3c_identity_block_relu2[0][0

onv2D) ]']

3c_identity_block_bn3 (Bat (None, 28, 28, 512) 2048 ['3c_identity_block_conv3[0][0

chNormalization) ]']

3c_identity_block_add (Add (None, 28, 28, 512) 0 ['3c_identity_block_bn3[0][0]'

) , '3b_identity_block_relu4[0][

0]']

3c_identity_block_relu4 (A (None, 28, 28, 512) 0 ['3c_identity_block_add[0][0]'

ctivation) ]

3d_identity_block_conv1 (C (None, 28, 28, 128) 65664 ['3c_identity_block_relu4[0][0

onv2D) ]']

3d_identity_block_bn1 (Bat (None, 28, 28, 128) 512 ['3d_identity_block_conv1[0][0

chNormalization) ]']

3d_identity_block_relu1 (A (None, 28, 28, 128) 0 ['3d_identity_block_bn1[0][0]'

ctivation) ]

3d_identity_block_conv2 (C (None, 28, 28, 128) 147584 ['3d_identity_block_relu1[0][0

onv2D) ]']

3d_identity_block_bn2 (Bat (None, 28, 28, 128) 512 ['3d_identity_block_conv2[0][0

chNormalization) ]']

3d_identity_block_relu2 (A (None, 28, 28, 128) 0 ['3d_identity_block_bn2[0][0]'

ctivation) ]

3d_identity_block_conv3 (C (None, 28, 28, 512) 66048 ['3d_identity_block_relu2[0][0

onv2D) ]']

3d_identity_block_bn3 (Bat (None, 28, 28, 512) 2048 ['3d_identity_block_conv3[0][0

chNormalization) ]']

3d_identity_block_add (Add (None, 28, 28, 512) 0 ['3d_identity_block_bn3[0][0]'

) , '3c_identity_block_relu4[0][

0]']

3d_identity_block_relu4 (A (None, 28, 28, 512) 0 ['3d_identity_block_add[0][0]'

ctivation) ]

4a_conv_block_conv1 (Conv2 (None, 14, 14, 256) 131328 ['3d_identity_block_relu4[0][0

D) ]']

4a_conv_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4a_conv_block_conv1[0][0]']

rmalization)

4a_conv_block_relu1 (Activ (None, 14, 14, 256) 0 ['4a_conv_block_bn1[0][0]']

ation)

4a_conv_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4a_conv_block_relu1[0][0]']

D)

4a_conv_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4a_conv_block_conv2[0][0]']

rmalization)

4a_conv_block_relu2 (Activ (None, 14, 14, 256) 0 ['4a_conv_block_bn2[0][0]']

ation)

4a_conv_block_conv3 (Conv2 (None, 14, 14, 1024) 263168 ['4a_conv_block_relu2[0][0]']

D)

4a_conv_block_res_conv (Co (None, 14, 14, 1024) 525312 ['3d_identity_block_relu4[0][0

nv2D) ]']

4a_conv_block_bn3 (BatchNo (None, 14, 14, 1024) 4096 ['4a_conv_block_conv3[0][0]']

rmalization)

4a_conv_block_res_bn (Batc (None, 14, 14, 1024) 4096 ['4a_conv_block_res_conv[0][0]

hNormalization) ']

4a_conv_block_add (Add) (None, 14, 14, 1024) 0 ['4a_conv_block_bn3[0][0]',

'4a_conv_block_res_bn[0][0]']

4a_conv_block_relu4 (Activ (None, 14, 14, 1024) 0 ['4a_conv_block_add[0][0]']

ation)

4b_identity_block_conv1 (C (None, 14, 14, 256) 262400 ['4a_conv_block_relu4[0][0]']

onv2D)

4b_identity_block_bn1 (Bat (None, 14, 14, 256) 1024 ['4b_identity_block_conv1[0][0

chNormalization) ]']

4b_identity_block_relu1 (A (None, 14, 14, 256) 0 ['4b_identity_block_bn1[0][0]'

ctivation) ]

4b_identity_block_conv2 (C (None, 14, 14, 256) 590080 ['4b_identity_block_relu1[0][0

onv2D) ]']

4b_identity_block_bn2 (Bat (None, 14, 14, 256) 1024 ['4b_identity_block_conv2[0][0

chNormalization) ]']

4b_identity_block_relu2 (A (None, 14, 14, 256) 0 ['4b_identity_block_bn2[0][0]'

ctivation) ]

4b_identity_block_conv3 (C (None, 14, 14, 1024) 263168 ['4b_identity_block_relu2[0][0

onv2D) ]']

4b_identity_block_bn3 (Bat (None, 14, 14, 1024) 4096 ['4b_identity_block_conv3[0][0

chNormalization) ]']

4b_identity_block_add (Add (None, 14, 14, 1024) 0 ['4b_identity_block_bn3[0][0]'

) , '4a_conv_block_relu4[0][0]']

4b_identity_block_relu4 (A (None, 14, 14, 1024) 0 ['4b_identity_block_add[0][0]'

ctivation) ]

4c_identity_block_conv1 (C (None, 14, 14, 256) 262400 ['4b_identity_block_relu4[0][0

onv2D) ]']

4c_identity_block_bn1 (Bat (None, 14, 14, 256) 1024 ['4c_identity_block_conv1[0][0

chNormalization) ]']

4c_identity_block_relu1 (A (None, 14, 14, 256) 0 ['4c_identity_block_bn1[0][0]'

ctivation) ]

4c_identity_block_conv2 (C (None, 14, 14, 256) 590080 ['4c_identity_block_relu1[0][0

onv2D) ]']

4c_identity_block_bn2 (Bat (None, 14, 14, 256) 1024 ['4c_identity_block_conv2[0][0

chNormalization) ]']

4c_identity_block_relu2 (A (None, 14, 14, 256) 0 ['4c_identity_block_bn2[0][0]'

ctivation) ]

4c_identity_block_conv3 (C (None, 14, 14, 1024) 263168 ['4c_identity_block_relu2[0][0

onv2D) ]']

4c_identity_block_bn3 (Bat (None, 14, 14, 1024) 4096 ['4c_identity_block_conv3[0][0

chNormalization) ]']

4c_identity_block_add (Add (None, 14, 14, 1024) 0 ['4c_identity_block_bn3[0][0]'

) , '4b_identity_block_relu4[0][

0]']

4c_identity_block_relu4 (A (None, 14, 14, 1024) 0 ['4c_identity_block_add[0][0]'

ctivation) ]

4d_identity_block_conv1 (C (None, 14, 14, 256) 262400 ['4c_identity_block_relu4[0][0

onv2D) ]']

4d_identity_block_bn1 (Bat (None, 14, 14, 256) 1024 ['4d_identity_block_conv1[0][0

chNormalization) ]']

4d_identity_block_relu1 (A (None, 14, 14, 256) 0 ['4d_identity_block_bn1[0][0]'

ctivation) ]

4d_identity_block_conv2 (C (None, 14, 14, 256) 590080 ['4d_identity_block_relu1[0][0

onv2D) ]']

4d_identity_block_bn2 (Bat (None, 14, 14, 256) 1024 ['4d_identity_block_conv2[0][0

chNormalization) ]']

4d_identity_block_relu2 (A (None, 14, 14, 256) 0 ['4d_identity_block_bn2[0][0]'

ctivation) ]

4d_identity_block_conv3 (C (None, 14, 14, 1024) 263168 ['4d_identity_block_relu2[0][0

onv2D) ]']

4d_identity_block_bn3 (Bat (None, 14, 14, 1024) 4096 ['4d_identity_block_conv3[0][0

chNormalization) ]']

4d_identity_block_add (Add (None, 14, 14, 1024) 0 ['4d_identity_block_bn3[0][0]'

) , '4c_identity_block_relu4[0][

0]']

4d_identity_block_relu4 (A (None, 14, 14, 1024) 0 ['4d_identity_block_add[0][0]'

ctivation) ]

4e_identity_block_conv1 (C (None, 14, 14, 256) 262400 ['4d_identity_block_relu4[0][0

onv2D) ]']

4e_identity_block_bn1 (Bat (None, 14, 14, 256) 1024 ['4e_identity_block_conv1[0][0

chNormalization) ]']

4e_identity_block_relu1 (A (None, 14, 14, 256) 0 ['4e_identity_block_bn1[0][0]'

ctivation) ]

4e_identity_block_conv2 (C (None, 14, 14, 256) 590080 ['4e_identity_block_relu1[0][0

onv2D) ]']

4e_identity_block_bn2 (Bat (None, 14, 14, 256) 1024 ['4e_identity_block_conv2[0][0

chNormalization) ]']

4e_identity_block_relu2 (A (None, 14, 14, 256) 0 ['4e_identity_block_bn2[0][0]'

ctivation) ]

4e_identity_block_conv3 (C (None, 14, 14, 1024) 263168 ['4e_identity_block_relu2[0][0

onv2D) ]']

4e_identity_block_bn3 (Bat (None, 14, 14, 1024) 4096 ['4e_identity_block_conv3[0][0

chNormalization) ]']

4e_identity_block_add (Add (None, 14, 14, 1024) 0 ['4e_identity_block_bn3[0][0]'

) , '4d_identity_block_relu4[0][

0]']

4e_identity_block_relu4 (A (None, 14, 14, 1024) 0 ['4e_identity_block_add[0][0]'

ctivation) ]

4f_identity_block_conv1 (C (None, 14, 14, 256) 262400 ['4e_identity_block_relu4[0][0

onv2D) ]']

4f_identity_block_bn1 (Bat (None, 14, 14, 256) 1024 ['4f_identity_block_conv1[0][0

chNormalization) ]']

4f_identity_block_relu1 (A (None, 14, 14, 256) 0 ['4f_identity_block_bn1[0][0]'

ctivation) ]

4f_identity_block_conv2 (C (None, 14, 14, 256) 590080 ['4f_identity_block_relu1[0][0

onv2D) ]']

4f_identity_block_bn2 (Bat (None, 14, 14, 256) 1024 ['4f_identity_block_conv2[0][0

chNormalization) ]']

4f_identity_block_relu2 (A (None, 14, 14, 256) 0 ['4f_identity_block_bn2[0][0]'

ctivation) ]

4f_identity_block_conv3 (C (None, 14, 14, 1024) 263168 ['4f_identity_block_relu2[0][0

onv2D) ]']

4f_identity_block_bn3 (Bat (None, 14, 14, 1024) 4096 ['4f_identity_block_conv3[0][0

chNormalization) ]']

4f_identity_block_add (Add (None, 14, 14, 1024) 0 ['4f_identity_block_bn3[0][0]'

) , '4e_identity_block_relu4[0][

0]']

4f_identity_block_relu4 (A (None, 14, 14, 1024) 0 ['4f_identity_block_add[0][0]'

ctivation) ]

5a_conv_block_conv1 (Conv2 (None, 7, 7, 512) 524800 ['4f_identity_block_relu4[0][0

D) ]']

5a_conv_block_bn1 (BatchNo (None, 7, 7, 512) 2048 ['5a_conv_block_conv1[0][0]']

rmalization)

5a_conv_block_relu1 (Activ (None, 7, 7, 512) 0 ['5a_conv_block_bn1[0][0]']

ation)

5a_conv_block_conv2 (Conv2 (None, 7, 7, 512) 2359808 ['5a_conv_block_relu1[0][0]']

D)

5a_conv_block_bn2 (BatchNo (None, 7, 7, 512) 2048 ['5a_conv_block_conv2[0][0]']

rmalization)

5a_conv_block_relu2 (Activ (None, 7, 7, 512) 0 ['5a_conv_block_bn2[0][0]']

ation)

5a_conv_block_conv3 (Conv2 (None, 7, 7, 2048) 1050624 ['5a_conv_block_relu2[0][0]']

D)

5a_conv_block_res_conv (Co (None, 7, 7, 2048) 2099200 ['4f_identity_block_relu4[0][0

nv2D) ]']

5a_conv_block_bn3 (BatchNo (None, 7, 7, 2048) 8192 ['5a_conv_block_conv3[0][0]']

rmalization)

5a_conv_block_res_bn (Batc (None, 7, 7, 2048) 8192 ['5a_conv_block_res_conv[0][0]

hNormalization) ']

5a_conv_block_add (Add) (None, 7, 7, 2048) 0 ['5a_conv_block_bn3[0][0]',

'5a_conv_block_res_bn[0][0]']

5a_conv_block_relu4 (Activ (None, 7, 7, 2048) 0 ['5a_conv_block_add[0][0]']

ation)

5b_identity_block_conv1 (C (None, 7, 7, 512) 1049088 ['5a_conv_block_relu4[0][0]']

onv2D)

5b_identity_block_bn1 (Bat (None, 7, 7, 512) 2048 ['5b_identity_block_conv1[0][0

chNormalization) ]']

5b_identity_block_relu1 (A (None, 7, 7, 512) 0 ['5b_identity_block_bn1[0][0]'

ctivation) ]

5b_identity_block_conv2 (C (None, 7, 7, 512) 2359808 ['5b_identity_block_relu1[0][0

onv2D) ]']

5b_identity_block_bn2 (Bat (None, 7, 7, 512) 2048 ['5b_identity_block_conv2[0][0

chNormalization) ]']

5b_identity_block_relu2 (A (None, 7, 7, 512) 0 ['5b_identity_block_bn2[0][0]'

ctivation) ]

5b_identity_block_conv3 (C (None, 7, 7, 2048) 1050624 ['5b_identity_block_relu2[0][0

onv2D) ]']

5b_identity_block_bn3 (Bat (None, 7, 7, 2048) 8192 ['5b_identity_block_conv3[0][0

chNormalization) ]']

5b_identity_block_add (Add (None, 7, 7, 2048) 0 ['5b_identity_block_bn3[0][0]'

) , '5a_conv_block_relu4[0][0]']

5b_identity_block_relu4 (A (None, 7, 7, 2048) 0 ['5b_identity_block_add[0][0]'

ctivation) ]

5c_identity_block_conv1 (C (None, 7, 7, 512) 1049088 ['5b_identity_block_relu4[0][0

onv2D) ]']

5c_identity_block_bn1 (Bat (None, 7, 7, 512) 2048 ['5c_identity_block_conv1[0][0

chNormalization) ]']

5c_identity_block_relu1 (A (None, 7, 7, 512) 0 ['5c_identity_block_bn1[0][0]'

ctivation) ]

5c_identity_block_conv2 (C (None, 7, 7, 512) 2359808 ['5c_identity_block_relu1[0][0

onv2D) ]']

5c_identity_block_bn2 (Bat (None, 7, 7, 512) 2048 ['5c_identity_block_conv2[0][0

chNormalization) ]']

5c_identity_block_relu2 (A (None, 7, 7, 512) 0 ['5c_identity_block_bn2[0][0]'

ctivation) ]

5c_identity_block_conv3 (C (None, 7, 7, 2048) 1050624 ['5c_identity_block_relu2[0][0

onv2D) ]']

5c_identity_block_bn3 (Bat (None, 7, 7, 2048) 8192 ['5c_identity_block_conv3[0][0

chNormalization) ]']

5c_identity_block_add (Add (None, 7, 7, 2048) 0 ['5c_identity_block_bn3[0][0]'

) , '5b_identity_block_relu4[0][

0]']

5c_identity_block_relu4 (A (None, 7, 7, 2048) 0 ['5c_identity_block_add[0][0]'

ctivation) ]

avg_pool (AveragePooling2D (None, 1, 1, 2048) 0 ['5c_identity_block_relu4[0][0

) ]']

flatten_6 (Flatten) (None, 2048) 0 ['avg_pool[0][0]']

fc1000 (Dense) (None, 1000) 2049000 ['flatten_6[0][0]']

==================================================================================================

Total params: 25636712 (97.80 MB)

Trainable params: 25583592 (97.59 MB)

Non-trainable params: 53120 (207.50 KB)

__________________________________________________________________________________________________

VI. Compilation

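Before training, a few more settings are added in the model's compile step:

Loss function (loss): measures how far the model's predictions are from the true labels during training; training tries to minimize it.

Optimizer (optimizer): determines how the model is updated based on the data it sees and its loss function.

Metrics (metrics): used to monitor the training and testing steps. The example below uses accuracy, the fraction of images that are correctly classified.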
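
`sparse_categorical_crossentropy`, used in the compile call below, takes integer labels (0 to 3 here) instead of one-hot vectors. Per sample it is the negative log of the probability the model assigns to the true class, and Keras averages it over the batch by default. A plain-Python sketch of both the loss and the accuracy metric; the probabilities are made up for illustration. Because this post's Dense layer has 1000 outputs, the labels 0 to 3 simply index the first four of them.

```python
import math


def sparse_categorical_crossentropy(probs, label):
    """Loss for one sample: -log of the probability assigned to the true class."""
    return -math.log(probs[label])


def accuracy(batch_probs, labels):
    """Fraction of samples whose highest-probability class equals the label."""
    hits = sum(max(range(len(p)), key=p.__getitem__) == y
               for p, y in zip(batch_probs, labels))
    return hits / len(labels)


# Hypothetical softmax outputs for two images over the 4 bird classes
batch_probs = [[0.70, 0.10, 0.10, 0.10],  # correct: predicts 0, label is 0
               [0.25, 0.40, 0.20, 0.15]]  # wrong: predicts 1, label is 3
labels = [0, 3]

losses = [sparse_categorical_crossentropy(p, y) for p, y in zip(batch_probs, labels)]
print(sum(losses) / len(losses), accuracy(batch_probs, labels))
```

The wrong prediction dominates the loss (-log 0.15 ≈ 1.90 versus -log 0.70 ≈ 0.36), while accuracy only counts it as one miss out of two.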

```python
# Set the optimizer; the learning rate has been changed here
opt = tf.keras.optimizers.Adam(learning_rate=1e-7)

model.compile(optimizer=opt,
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

VII. Training the Model

```python
epochs = 10

history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=epochs,
)
```

Output:

Epoch 1/10

57/57 [==============================] - 141s 2s/step - loss: 1.4295 - accuracy: 0.6858 - val_loss: 568.1717 - val_accuracy: 0.2655

Epoch 2/10

57/57 [==============================] - 127s 2s/step - loss: 0.5009 - accuracy: 0.8518 - val_loss: 15.5711 - val_accuracy: 0.2566

Epoch 3/10

57/57 [==============================] - 122s 2s/step - loss: 0.2234 - accuracy: 0.9159 - val_loss: 10.4333 - val_accuracy: 0.2566

Epoch 4/10

57/57 [==============================] - 121s 2s/step - loss: 0.1847 - accuracy: 0.9226 - val_loss: 1.3618 - val_accuracy: 0.7699

Epoch 5/10

57/57 [==============================] - 142s 2s/step - loss: 0.0681 - accuracy: 0.9757 - val_loss: 0.3556 - val_accuracy: 0.8938

Epoch 6/10

57/57 [==============================] - 125s 2s/step - loss: 0.0431 - accuracy: 0.9934 - val_loss: 0.1768 - val_accuracy: 0.9469

Epoch 7/10

57/57 [==============================] - 127s 2s/step - loss: 0.0094 - accuracy: 0.9978 - val_loss: 0.1479 - val_accuracy: 0.9735

Epoch 8/10

57/57 [==============================] - 125s 2s/step - loss: 0.0012 - accuracy: 1.0000 - val_loss: 0.1206 - val_accuracy: 0.9823

Epoch 9/10

57/57 [==============================] - 128s 2s/step - loss: 6.5304e-04 - accuracy: 1.0000 - val_loss: 0.1136 - val_accuracy: 0.9823

Epoch 10/10

57/57 [==============================] - 121s 2s/step - loss: 4.9506e-04 - accuracy: 1.0000 - val_loss: 0.1131 - val_accuracy: 0.9823

VIII. Model Evaluation

```python
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs_range = range(epochs)

plt.figure(figsize=(12, 4))
plt.suptitle("OreoCC")

# Left: training vs. validation accuracy per epoch
plt.subplot(1, 2, 1)
plt.plot(epochs_range, acc, label='Training Accuracy')
plt.plot(epochs_range, val_acc, label='Validation Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

# Right: training vs. validation loss per epoch
plt.subplot(1, 2, 2)
plt.plot(epochs_range, loss, label='Training Loss')
plt.plot(epochs_range, val_loss, label='Validation Loss')
plt.legend(loc='upper right')
plt.title('Training and Validation Loss')
plt.show()
```

Output:

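IX. Prediction

```python
import numpy as np

# Show the trained model's predictions on one validation batch
plt.figure(figsize=(10, 5))
plt.suptitle("OreoCC")

for images, labels in val_ds.take(1):
    for i in range(8):
        ax = plt.subplot(2, 4, i + 1)
        # Display the image
        plt.imshow(images[i].numpy().astype("uint8"))
        # The model expects a batch, so add a leading batch dimension
        img_array = tf.expand_dims(images[i], 0)
        # Predict the bird species in the image
        predictions = model.predict(img_array, verbose=0)
        # fc1000 has 1000 outputs; only the first len(class_names) are our classes
        plt.title(class_names[np.argmax(predictions[0][:len(class_names)])])
        plt.axis("off")
```

Output: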

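X. Reflections

I learned where residual networks come from and how they are built, built a ResNet-50 model in TensorFlow, and trained and validated it on the bird dataset.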