1. Overview

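At its core, GraphRAG has an LLM build a knowledge graph. When a question is asked, it retrieves the relevant parts of that graph and inserts them into the prompt as supplementary knowledge. Could the same idea be applied to hierarchical multi-label text classification (HMTC)?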

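Admittedly, this sounds far-fetched at first. GraphRAG was proposed for global questions such as query-focused summarization (QFS), which should have nothing to do with HMTC.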

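But consider a real HMTC problem solved by asking an LLM for the classification directly, instead of by a traditional algorithm. Adding domain knowledge that helps with classification to the prompt should improve accuracy to some extent. For an HMTC problem with a very large label set, however, the full body of domain knowledge may far exceed the LLM's context window. If GraphRAG integrates that knowledge and only the parts relevant to the current item are retrieved at query time, the prompt stays within the context window and the classification results can still improve.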
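A minimal sketch of the idea in the paragraph above: rank the available knowledge snippets by relevance to the book being classified and keep only as many as fit a budget. Word overlap and word counts are stand-ins (assumptions) for GraphRAG's graph-based retrieval and for real token counting, and `select_knowledge` / `build_prompt` are hypothetical names.

```python
import re


def tokenize(text: str) -> set:
    """Lower-case word set; a crude stand-in for real tokenization or embeddings."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_knowledge(item: str, snippets: list, budget_words: int) -> list:
    """Greedily keep the most item-relevant snippets that fit the word budget."""
    item_words = tokenize(item)

    def overlap(snippet: str) -> int:
        return len(item_words & tokenize(snippet))

    chosen, used = [], 0
    for snippet in sorted(snippets, key=overlap, reverse=True):
        if overlap(snippet) == 0:
            break  # sorted by overlap, so every remaining snippet is irrelevant
        cost = len(snippet.split())  # word count as a rough proxy for tokens
        if used + cost <= budget_words:
            chosen.append(snippet)
            used += cost
    return chosen


def build_prompt(item: str, knowledge: list) -> str:
    """Put only the selected knowledge in front of the classification request."""
    context = "\n".join(f"- {k}" for k in knowledge)
    return f"Background knowledge:\n{context}\n\nClassify this book:\n{item}"


snippets = [
    "A western novel is set on the American frontier with cowboys and outlaws.",
    "Cookbooks contain recipes and cooking methods.",
    "Memoirs recount the author's own life.",
]
item = "Skye Fargo rides into a frontier town run by outlaws."
picked = select_knowledge(item, snippets, budget_words=20)
print(build_prompt(item, picked))
```

In GraphRAG the ranking comes from the entities and relationships in the graph rather than from word overlap, but the point is the same: the prompt grows with what is relevant to the item, not with the size of the whole knowledge base.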

In theory this seems workable, so let's run an experiment.

2. Experiments

2.1 Setup

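LLMs: Llama-3.1 8B-Instruct and 70B-Instruct

Advantage: a 128k-token context window

Datasets: DBpedia classes (standard HTC) and Blurb Genre Collection (BGC, standard HMTC)

We chose Llama-3.1 8B-Instruct as the main model: it is small enough for repeated tuning, while the stronger 70B model can be considered for real projects. Llama-3.1 8B's 128k-token context window is also unusually long for a model of its size, which suits feeding in a large amount of domain knowledge.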

Next, a brief introduction to the two datasets.

DBpedia is a very common dataset; an example record looks like this:

"Liu Chao-shiuan (Chinese: 劉兆玄; pinyin: Liú Zhàoxuán; born May 10, 1943) is a Taiwanese educator and politician. He is a former president of the National Tsing Hua University (1987–1993) and Soochow University (2004–2008) and a former Premier of the Republic of China (2008–2009).",Agent,Politician,PrimeMinister

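The label hierarchy is documented on the official DBpedia site, and the dataset can be downloaded from Kaggle.

This is clearly an HTC problem rather than a multi-label one: every sample has exactly one label at each of the three levels (here Agent > Politician > PrimeMinister).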
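A small sketch of reading such a row, assuming the Kaggle CSV layout `text,l1,l2,l3` (one label per level). The text field is shortened here. Each row yields exactly one root-to-leaf path, which is why DBpedia is a single-path HTC task.

```python
import csv
import io

# One record in the assumed "text,l1,l2,l3" layout (text shortened)
row_text = (
    '"Liu Chao-shiuan (Chinese: 劉兆玄; born May 10, 1943) is a Taiwanese '
    'educator and politician.",Agent,Politician,PrimeMinister'
)

# csv handles the commas inside the quoted text field
text, l1, l2, l3 = next(csv.reader(io.StringIO(row_text)))
label_path = [l1, l2, l3]  # exactly one label per level
print(label_path)  # ['Agent', 'Politician', 'PrimeMinister']
```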

BGC is a book classification dataset; an example record looks like this:

<book date="2018-08-18" xml:lang="en">

<title>Trailsman #267: California Casualties</title>

<body>Fargo tangles with a terrible town tyrant!Skye Fargo has seen more than his fair share of hangings. So when he ambles into a midnight neck-stretching party on the California coastline, he knows something ain’t right at the end of the rope. When he saves the doomed man, all hell breaks loose, with even more people dead, and Fargo riding the rough side of a vicious town boss—the mannerly Del Manning. There’s more going on than meets the Trailsman’s eagle eye, though, when some concerned “citizens” hire Fargo to help take down Manning by hook or crook. But no sooner does Fargo get involved than he’s accused of a cold-blooded murder, and must ride for his life with a big bounty on his head—and every local gun looking to blow it clean off…</body>

<copyright>(c) Penguin Random House</copyright>

<metadata>

<topics>

<d0>Fiction</d0><d1>Western Fiction</d1>

</topics>

<author>Jon Sharpe</author>

<published>Jan 06, 2004 </published>

<page_num> 176 Pages</page_num>

<isbn>9781101165843</isbn>

<url>https://www.penguinrandomhouse.com/books/291719/trailsman-267-california-casualties-by-jon-sharpe/</url>

</metadata>

</book>

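The label hierarchy is included in the files downloaded from the official site and is 4 levels deep. Moreover, a gold label path does not have to end at a leaf node, which makes this a harder HMTC task.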
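Because gold label paths have different lengths and need not reach a leaf (the example above is `Fiction` > `Western Fiction`, two levels), it helps to be able to measure the tree and recover a label's ancestor path. A minimal sketch over a small excerpt of the hierarchy that appears in the prompts of section 3.2; `depth` and `ancestors` are hypothetical helper names.

```python
from typing import Optional

# Small excerpt of the BGC hierarchy (nested dicts, leaves map to {})
hierarchy = {
    "Nonfiction": {
        "History": {
            "Military History": {"World War II Military History": {}},
        },
        "Politics": {"Domestic Politics": {}, "World Politics": {}},
    },
    "Fiction": {"Western Fiction": {}},
}


def depth(tree: dict) -> int:
    """Number of levels in `tree`; an empty dict has depth 0."""
    return 0 if not tree else 1 + max(depth(child) for child in tree.values())


def ancestors(tree: dict, label: str, path: tuple = ()) -> Optional[tuple]:
    """Return the root-to-label path, or None if the label is not in the tree."""
    for name, child in tree.items():
        if name == label:
            return path + (name,)
        found = ancestors(child, label, path + (name,))
        if found is not None:
            return found
    return None


print(depth(hierarchy))  # 4
print(ancestors(hierarchy, "World War II Military History"))
```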

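Since the title carries "(1)", this post covers only one experiment; the other is left for the next installment. Both experiments were originally meant to run GraphRAG on a locally hosted LLM, but the server has been busy with no free GPUs lately, so I switched to the Kimi API instead. Since I am paying out of my own pocket, only 100 samples were processed. o(╥﹏╥)o

Part (2) will explain in detail how to run GraphRAG with a local LLM.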

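2.2 Methodology

Method 1 (for BGC): a self-generated knowledge base.

For each classification target, the LLM itself generates the material to be learned. Problems in that material are corrected by hand, and the result is turned into a knowledge graph that guides subsequent classification.

Example prompt: How can you tell from a book's title and body (blurb) whether it belongs to the Biography & Memoir category?

If the blurb indicates that a book belongs to Biography & Memoir, how can you determine whether it belongs to one or more of Arts & Entertainment Biographies & Memoirs, Political Figure Biographies & Memoirs, Historical Figure Biographies & Memoirs, and Literary Figure Biographies & Memoirs?

- You are an expert in book classification. Your task is to use your own knowledge to answer the question as thoroughly and completely as possible; the answers will be used to build a local knowledge base.

- If a question can be answered step by step, please do so. Ideally, include several high-quality examples.

- Please include all necessary information so that the knowledge can later be retrieved directly from the local store.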
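The two questions above follow one pattern per node of the label tree, so they can be generated from the hierarchy instead of written by hand. A minimal sketch; the wording mirrors the English question list used in section 3.3, and `make_questions` is a hypothetical name.

```python
def make_questions(tree: dict) -> list:
    """Two questions per non-leaf node: membership, then subcategory choice."""
    questions = []
    for name, children in tree.items():
        if not children:
            continue  # leaves are covered by their parent's subcategory question
        questions.append(
            f'How can you determine if a book belongs to the "{name}" '
            "category based on its title and body?"
        )
        options = ", ".join(f'"{child}"' for child in children)
        questions.append(
            f'If the title and the body of the book suggest that the book belongs to the "{name}" '
            "category, how can you further determine whether it specifically falls into one "
            f"or more of the following subcategories: {options}?"
        )
        questions.extend(make_questions(children))  # recurse into deeper levels
    return questions


tree = {"Biography & Memoir": {
    "Political Figure Biographies & Memoirs": {},
    "Literary Figure Biographies & Memoirs": {},
}}
for q in make_questions(tree):
    print(q)
```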

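Rationale: CoT can improve an LLM's classification results, but with such a large tree of labels, CoT in the prompt easily exceeds the context limit, and splitting it into multiple calls wastes resources. If instead the whole CoT process is indexed as an external knowledge base, the cost is paid only once, when the index is built. Afterwards, each query can complete the CoT in a single call, which becomes comparatively economical when there are many items to classify.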
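The cost argument is an amortization. If indexing costs C once, in-prompt CoT costs c_cot per item, and a single GraphRAG query costs c_query per item, the knowledge-base route is cheaper once the number of items N exceeds C / (c_cot - c_query). A small sketch of that break-even point; the token numbers are made up purely to show the arithmetic.

```python
import math


def break_even_items(index_cost: float, cot_cost_per_item: float,
                     query_cost_per_item: float) -> float:
    """Number of items N at which index_cost + N*query == N*cot."""
    saving = cot_cost_per_item - query_cost_per_item
    if saving <= 0:
        return math.inf  # the knowledge base never pays for itself
    return index_cost / saving


# Hypothetical token counts, for illustration only
n = break_even_items(index_cost=2_000_000, cot_cost_per_item=30_000,
                     query_cost_per_item=10_000)
print(n)  # 100.0
```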

Method 2 (for DBpedia classes): for selected categories, use Wikipedia's explanation of the topic or other specialized books as the knowledge base.

https://mappings.dbpedia.org/server/ontology/classes/

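Architecture: architectural structure

Knowledge base: A Brief History of Architecture; books on classifying architecture (none could be found, unfortunately)

Species: species (excluding humans)

Knowledge base: tree of life; earth party; Evolution, Classification & Diversity: Essential Biology Self-Teaching Guide (Essential Biology Self-Teaching Guides); On the Origin of Species; How Zoologists Organize Things: The Art of Classification

Current SOTA (source code available, but it is evaluated on WOS, which is relatively easy):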

GitHub - Rooooyy/HILL: Official implementation for NAACL 2024 paper "HILL: Hierarchy-aware Information Lossless Contrastive Learning for Hierarchical Text Classification".

3. Implementation

Only the implementation of Method 1 is described here.

3.1 Cleaning the BGC data

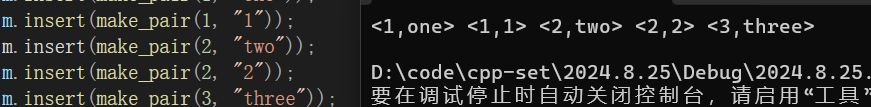

The code below uses regular expressions to extract each book's title, body, and labels, and numbers the records in order.

import csv
import re

# Read the raw text file
with open('/blurbgenrecollectionen/BlurbGenreCollection_EN_test.txt', 'r', encoding='utf-8') as file:
    text_data = file.read()

# Extract each <book>...</book> record with a regular expression
books = re.findall(r'<book.*?>.*?</book>', text_data, re.DOTALL)

# Open a CSV file for writing
with open('books.csv', 'w', newline='', encoding='utf-8') as csvfile:
    # Define the column names
    fieldnames = ['Number', 'Title', 'Body', 'Topics']
    writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
    # Write the header row
    writer.writeheader()
    # Extract the fields of each book
    for i, book in enumerate(books, start=1):
        title = re.search(r'<title>(.*?)</title>', book, re.DOTALL).group(1)
        body = re.search(r'<body>(.*?)</body>', book, re.DOTALL).group(1)
        # <d0>, <d1>, ... hold the labels from the top level downwards
        topics = re.findall(r'<d\d>(.*?)</d\d>', book)
        # Write one CSV row
        writer.writerow({
            'Number': i,
            'Title': title,
            'Body': body,
            'Topics': ', '.join(topics)
        })

print("CSV file generated successfully.")

Since the actual experiment cannot use all of the data, here is a simple script that keeps only the first n records.

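import csv

n = 2

# Path of the original CSV file (left empty here; point it at the CSV produced above)
input_file = ''

# Path of the new CSV file
output_file = 'books_top%s.csv' % n

# Read the original CSV file
with open(input_file, 'r', encoding='utf-8') as infile:
    reader = csv.reader(infile)
    # Read all rows
    rows = list(reader)

# Keep the header row plus the first n data rows
top_rows = rows[:n + 1]

# Write them to the new CSV file
with open(output_file, 'w', newline='', encoding='utf-8') as outfile:
    writer = csv.writer(outfile)
    writer.writerows(top_rows)

print(f"The first {n} records have been saved to {output_file}")

3.2 Getting the LLM to understand hierarchical labels, querying it in batch, and returning answers in a standard format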

With the data ready, the next step is calling the LLM. Kimi's official example looks like this:

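from openai import OpenAI

client = OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://api.moonshot.cn/v1",
)

completion = client.chat.completions.create(
    model="moonshot-v1-8k",
    messages=[
        {"role": "system", "content": "You are Kimi, an AI assistant provided by Moonshot AI. You are better at conversations in Chinese and English. You provide users with safe, helpful, and accurate answers, and you refuse to answer any questions involving terrorism, racial discrimination, pornography, or violence. Moonshot AI is a proper noun and must not be translated into other languages."},
        {"role": "user", "content": "Hi, my name is Li Lei. What is 1+1?"}
    ],
    temperature=0.3,
)

print(completion.choices[0].message.content)

Based on this, I wrote a batch-query script. Because it is easy to exceed the TPM (tokens per minute) limit, a fixed delay is inserted between requests.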

import csv
import time

from openai import OpenAI

from prompt import prompt_system, prompt_user

# Initialize the client with your API key
client = OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://api.moonshot.cn/v1",
)

def classify_book(title, body):
    completion = client.chat.completions.create(
        model="moonshot-v1-8k",
        messages=[
            {"role": "system", "content": prompt_system},
            {"role": "user", "content": prompt_user.format(Title=title, Body=body)}
        ]
    )
    return completion.choices[0].message.content

# Input and output CSV files
input_file = '/Users/xueyaowan/Documents/HIT-AI小组/GraphRAG-text-classification/books_top100.csv'
output_file = 'classified_books.csv'

# Delay between requests, in seconds
delay_between_requests = 2

with open(input_file, mode='r', encoding='utf-8') as infile, open(output_file, mode='w', encoding='utf-8', newline='') as outfile:
    reader = csv.DictReader(infile)
    fieldnames = reader.fieldnames + ['Predicted Topics']
    writer = csv.DictWriter(outfile, fieldnames=fieldnames)
    writer.writeheader()
    for row in reader:
        title = row['Title']
        body = row['Body']
        # The model returns one comma-separated line; drop surrounding whitespace
        predicted_topics = classify_book(title, body).strip()
        row['Predicted Topics'] = predicted_topics
        writer.writerow(row)
        # Wait before the next request to stay under the rate limit
        time.sleep(delay_between_requests)

print("The book categorization has been completed, and the results have been written into the file named 'classified_books.csv'.")

The prompts used are shown below.

prompt_system = """

You are an expert in book classification with deep knowledge across various genres and categories. Your task is to classify a book based on its title and body text. The classification system is hierarchical, with multiple levels of categories. The book may belong to one or more categories, and if a specific leaf node category is not appropriate, the book may be classified at any level of the hierarchy. Please return all relevant classifications for the book.

### Hierarchical Classification System ###

hierarchical_tags = {

"Nonfiction": {

"Biography & Memoir": {

"Arts & Entertainment Biographies & Memoirs": {},

"Political Figure Biographies & Memoirs": {},

"Historical Figure Biographies & Memoirs": {},

"Literary Figure Biographies & Memoirs": {}

},

"Cooking": {

"Regional & Ethnic Cooking": {},

"Cooking Methods": {},

"Food Memoir & Travel": {},

"Baking & Desserts": {},

"Wine & Beverage": {}

},

"Arts & Entertainment": {

"Art": {},

"Design": {},

"Film": {},

"Music": {},

"Performing Arts": {},

"Photography": {},

"Writing": {}

},

"Business": {

"Economics": {},

"Management": {},

"Marketing": {}

},

"Crafts, Home & Garden": {

"Crafts & Hobbies": {},

"Home & Garden": {},

"Weddings": {}

},

"Games": {},

"Health & Fitness": {

"Alternative Therapies": {},

"Diet & Nutrition": {},

"Exercise": {},

"Health & Reference": {}

},

"History": {

"Military History": {

"World War II Military History": {},

"World War I Military History": {},

"1950 – Present Military History": {}

},

"U.S. History": {

"21st Century U.S. History": {},

"20th Century U.S. History": {},

"19th Century U.S. History": {},

"Civil War Period": {},

"Colonial/Revolutionary Period": {},

"Native American History": {}

},

"World History": {

"African World History": {},

"Ancient World History": {},

"Asian World History": {},

"European World History": {},

"Latin American World History": {},

"Middle Eastern World History": {},

"North American World History": {}

}

},

"Parenting": {},

"Pets": {},

"Politics": {

"Domestic Politics": {},

"World Politics": {}

},

"Popular Science": {

"Science": {},

"Technology": {}

},

"Psychology": {},

"Reference": {

"Language": {},

"Test Preparation": {}

},

"Religion & Philosophy": {

"Religion": {},

"Philosophy": {}

},

"Self-Improvement": {

"Beauty": {},

"Inspiration & Motivation": {},

"Personal Finance": {},

"Personal Growth": {}

},

"Sports": {},

"Travel": {

"Travel: Africa": {},

"Travel: Asia": {},

"Travel: Australia & Oceania": {},

"Travel: Caribbean & Mexico": {},

"Travel: Central & South America": {},

"Travel: Europe": {},

"Travel: Middle East": {},

"Specialty Travel": {},

"Travel Writing": {},

"Travel: USA & Canada": {}

}

},

"Fiction": {

"Fantasy": {

"Contemporary Fantasy": {},

"Epic Fantasy": {},

"Fairy Tales": {},

"Urban Fantasy": {}

},

"Gothic & Horror": {},

"Graphic Novels & Manga": {},

"Historical Fiction": {},

"Literary Fiction": {},

"Military Fiction": {},

"Mystery & Suspense": {

"Cozy Mysteries": {},

"Crime Mysteries": {},

"Espionage Mysteries": {},

"Noir Mysteries": {},

"Suspense & Thriller": {}

},

"Paranormal Fiction": {},

"Romance": {

"Contemporary Romance": {},

"Erotica": {},

"Historical Romance": {},

"New Adult Romance": {},

"Paranormal Romance": {},

"Regency Romance": {},

"Suspense Romance": {},

"Western Romance": {}

},

"Science Fiction": {

"Cyber Punk": {},

"Military Science Fiction": {},

"Space Opera": {}

},

"Spiritual Fiction": {},

"Western Fiction": {},

"Women’s Fiction": {}

},

"Classics": {

"Fiction Classics": {},

"Literary Collections": {},

"Literary Criticism": {},

"Nonfiction Classics": {}

},

"Children’s Books": {

"Step Into Reading": {},

"Children’s Middle Grade Books": {

"Children’s Middle Grade Mystery & Detective Books": {},

"Children’s Middle Grade Action & Adventure Books": {},

"Children’s Middle Grade Fantasy & Magical Books": {},

"Children’s Middle Grade Historical Books": {},

"Children’s Middle Grade Sports Books": {}

},

"Children’s Activity & Novelty Books": {},

"Children’s Board Books": {},

"Children’s Boxed Sets": {},

"Children’s Chapter Books": {},

"Childrens Media Tie-In Books": {},

"Children’s Picture Books": {}

},

"Teen & Young Adult": {

"Teen & Young Adult Action & Adventure": {},

"Teen & Young Adult Mystery & Suspense": {},

"Teen & Young Adult Fantasy Fiction": {},

"Teen & Young Adult Nonfiction": {},

"Teen & Young Adult Fiction": {},

"Teen & Young Adult Social Issues": {},

"Teen & Young Adult Historical Fiction": {},

"Teen & Young Adult Romance": {},

"Teen & Young Adult Science Fiction": {}

},

"Religion & Philosophy": {

"Bibles": {}

},

"Humor": {},

"Poetry": {}

}

### Instructions ###

Classify the book into the most appropriate categories from the provided hierarchical classification system. If the book belongs to multiple categories, list all relevant categories. If no specific leaf category is a perfect match, assign the book to the most appropriate higher-level category.

### Output Format ###

- Return the list of categories as a single line of comma-separated values, **each value including the tag and its parent tags**.

### Examples ###

Example1:

- Title: The End of the Suburbs

- Body: "The government in the past created one American Dream at the expense of almost all others: the dream of a house, a lawn, a picket fence, two children, and a car. But there is no single American Dream anymore.”For nearly 70 years, the suburbs were as American as apple pie. As the middle class ballooned and single-family homes and cars became more affordable, we flocked to pre-fabricated communities in the suburbs, a place where open air and solitude offered a retreat from our dense, polluted cities. Before long, success became synonymous with a private home in a bedroom community complete with a yard, a two-car garage and a commute to the office, and subdivisions quickly blanketed our landscape.But in recent years things have started to change. An epic housing crisis revealed existing problems with this unique pattern of development, while the steady pull of long-simmering economic, societal and demographic forces has culminated in a Perfect Storm that has led to a profound shift in the way we desire to live.In The End of the Suburbs journalist Leigh Gallagher traces the rise and fall of American suburbia from the stately railroad suburbs that sprung up outside American cities in the 19th and early 20th centuries to current-day sprawling exurbs where residents spend as much as four hours each day commuting. Along the way she shows why suburbia was unsustainable from the start and explores the hundreds of new, alternative communities that are springing up around the country and promise to reshape our way of life for the better.Not all suburbs are going to vanish, of course, but Gallagher’s research and reporting show the trends are undeniable. Consider some of the forces at work: • The nuclear family is no more: Our marriage and birth rates are steadily declining, while the single-person households are on the rise. Thus, the good schools and family-friendly lifestyle the suburbs promised are increasingly unnecessary. 
• We want out of our cars: As the price of oil continues to rise, the hours long commutes forced on us by sprawl have become unaffordable for many. Meanwhile, today’s younger generation has expressed a perplexing indifference toward cars and driving. Both shifts have fueled demand for denser, pedestrian-friendly communities. • Cities are booming. Once abandoned by the wealthy, cities are experiencing a renaissance, especially among younger generations and families with young children. At the same time, suburbs across the country have had to confront never-before-seen rates of poverty and crime.Blending powerful data with vivid on the ground reporting, Gallagher introduces us to a fascinating cast of characters, including the charismatic leader of the anti-sprawl movement; a mild-mannered Minnesotan who quit his job to convince the world that the suburbs are a financial Ponzi scheme; and the disaffected residents of suburbia, like the teacher whose punishing commute entailed leaving home at 4 a.m. and sleeping under her desk in her classroom.Along the way, she explains why understanding the shifts taking place is imperative to any discussion about the future of our housing landscape and of our society itself—and why that future will bring us stronger, healthier, happier and more diverse communities for everyone."

- Output: Domestic Politics, Nonfiction, Politics

Example2:

- Title: First Aid Fast for Babies and Children

- Body: "An indispensable guide for all parents and caretakers that covers a wide range of childhood emergencies.From anaphylaxis to burns to severe bleeding and bruising, First Aid Fast for Babies and Children offers clear advice, and step-by-step photographs show you what to do.This revised and updated fifth edition abides by all the latest health guidelines and makes first aid less daunting by giving more prominence to essential actions. Quick reference symbols at the end of every sequence indicate whether to seek medical advice, take your child to the hospital, or call an ambulance, and question-and-answer sections provide additional easy-to-access information. It even includes helpful advice on child safety in and around the home.First Aid Fast for Babies and Children gives you all the direction you need to deal with an injury or first aid emergency and keep your baby or child safe."

- Output: Nonfiction, Parenting, Health & Fitness

"""

prompt_user = """

### Real Data ###

- Title: {Title}

- Body: "{Body}"

- Output:

"""

An example of the output (columns: Number, Title, Body, Topics, Predicted Topics):

1,Teenage Mutant Ninja Turtles: The Box Set Volume 1,"TMNT co-creator Kevin Eastman and writer Tom Waltz guide readers through a ground-breaking new origin and into epic tales of courage, loyalty and family as the Turtles and their allies battle for survival against enemies old and new in the dangerous streets and sewers of New York City. Includes TMNT volumes #1–5, which collects the first 20 issues of the ongoing series.","Fiction, Graphic Novels & Manga","Fiction, Graphic Novels & Manga, Teen & Young Adult Fiction"
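The predicted line in this example mixes labels from two branches, and nothing forces the model to use only listed labels or to include parent tags. A minimal post-processing sketch (an assumption of ours, not part of the original pipeline) that drops unknown labels and adds every ancestor, so predictions have the same shape as the BGC gold labels, which list each level (`<d0>`, `<d1>`, ...). Labels that themselves contain a comma, such as "Crafts, Home & Garden", are re-joined before lookup. The hierarchy excerpt and function names are hypothetical.

```python
def parent_map(tree: dict, parent=None, out=None) -> dict:
    """Map each label to its parent (None for top-level labels)."""
    out = {} if out is None else out
    for name, child in tree.items():
        out.setdefault(name, parent)  # keep the first parent if a name repeats
        parent_map(child, name, out)
    return out


def split_labels(raw: str, known: set) -> list:
    """Split on commas, re-joining 'a, b' when that is itself a known label."""
    parts = [p.strip() for p in raw.split(",")]
    labels, i = [], 0
    while i < len(parts):
        joined = f"{parts[i]}, {parts[i + 1]}" if i + 1 < len(parts) else None
        if joined in known:
            labels.append(joined)
            i += 2
        else:
            labels.append(parts[i])
            i += 1
    return labels


def normalize(raw: str, parents: dict) -> list:
    """Drop labels outside the hierarchy and add all ancestors of the rest."""
    result = set()
    for label in split_labels(raw, set(parents)):
        while label in parents:  # unknown labels never enter the loop
            result.add(label)
            label = parents[label]  # becomes None after a top-level label
    return sorted(result)


hierarchy = {
    "Fiction": {"Graphic Novels & Manga": {}, "Western Fiction": {}},
    "Teen & Young Adult": {"Teen & Young Adult Fiction": {}},
    "Nonfiction": {"Crafts, Home & Garden": {"Home & Garden": {}}},
}
parents = parent_map(hierarchy)
print(normalize("Graphic Novels & Manga, Teen & Young Adult Fiction, Comics", parents))
```

Whether adding ancestors is desirable depends on how the gold labels are encoded; an output of "Unknown" normalizes to an empty list.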

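3.3 Building the knowledge base and generating the knowledge graph with GraphRAG

Since the knowledge base is self-generated, this step again calls the LLM repeatedly. The code is as follows: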

from openai import OpenAI

from prompt import prompt_system_2
# The list of questions to ask
from questions import questions_list

# Initialize the client with your API key
client = OpenAI(
    api_key="YOUR_API_KEY",
    base_url="https://api.moonshot.cn/v1",
)

def ask_question(question):
    completion = client.chat.completions.create(
        model="moonshot-v1-8k",
        messages=[
            {"role": "system", "content": prompt_system_2},
            {"role": "user", "content": question}
        ],
    )
    return completion.choices[0].message.content

# Write every question and its answer to a text file
output_file = "classification_guidelines.txt"

with open(output_file, "w", encoding="utf-8") as file:
    for i, question in enumerate(questions_list, start=1):
        print(f"Asking question {i}/{len(questions_list)}: {question}")
        response = ask_question(question)
        # Write the question and its corresponding answer to the file
        file.write(f"Question {i}: {question}\n")
        file.write(f"Answer {i}:\n{response}\n")
        file.write("\n" + "=" * 50 + "\n\n")  # Separator between question-answer pairs

print(f"All responses have been written to {output_file}")
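The script above sends its questions back to back, and the classification scripts rely on a fixed 2-second delay; both can still run into the provider's rate limit. A generic retry-with-exponential-backoff sketch in plain Python, with no assumptions about the API itself; `call_with_backoff` is a hypothetical helper.

```python
import random
import time


def call_with_backoff(fn, *args, max_retries=5, base_delay=1.0,
                      retry_on=(Exception,), sleep=time.sleep, **kwargs):
    """Call fn(*args, **kwargs), retrying on `retry_on` with exponential backoff.

    The wait before retry k (0-based) is base_delay * 2**k plus up to
    base_delay seconds of random jitter; the last failure is re-raised.
    """
    for attempt in range(max_retries + 1):
        try:
            return fn(*args, **kwargs)
        except retry_on:
            if attempt == max_retries:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))


# Demo with a fake request that fails twice before succeeding
attempts = []


def flaky_request():
    attempts.append(1)
    if len(attempts) < 3:
        raise RuntimeError("rate limited")
    return "ok"


print(call_with_backoff(flaky_request, base_delay=0.01))
```

With the OpenAI Python SDK v1, wrapping the call would look like `call_with_backoff(ask_question, question, retry_on=(RateLimitError,))` after `from openai import RateLimitError`; the `OpenAI(...)` client also accepts a `max_retries` argument for its own built-in retries.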

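In the future the questions themselves could also be generated automatically, similar to prompt tuning, but here they are listed by hand. Below are some of the questions; this is not the full list, but the remaining ones are easy to infer.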

questions_content = """"What is the step-by-step process for classifying a book into categories based on its title and body text? Please outline each step, from analyzing the book’s body text to assigning it to a primary category and any relevant subcategories."

"When a book’s content seems to fit into multiple categories or is ambiguous, what are the recommended strategies for determining the most appropriate classification? Include any guidelines or decision-making frameworks that can help."

"How can you determine if a book belongs to the "Nonfiction" category based on its title and body?"

"If the title and the body of the book suggests that the book belongs to the "Nonfiction" category, how can you further determine whether it specifically falls into one or more of the following subcategories: "Biography & Memoir", "Cooking", "Arts & Entertainment", "Business", "Crafts, Home & Garden","Games","Health & Fitness","History","Parenting","Pets","Politics","Popular Science","Psychology","Reference","Religion & Philosophy","Self-Improvement","Sports",or "Travel" ?"

"How can you determine if a book belongs to the "Biography & Memoir" category based on its title and body?"

"If the title and the body of the book suggests that the book belongs to the "Biography & Memoir" category, how can you further determine whether it specifically falls into one or more of the following subcategories: "Arts & Entertainment Biographies & Memoirs" ,"Political Figure Biographies & Memoirs" ,"Historical Figure Biographies & Memoirs" ,or "Literary Figure Biographies & Memoirs"?"

"""

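# One question per non-empty line, with the surrounding quotes removed
questions_list = [q.strip().strip('"') for q in questions_content.splitlines() if q.strip()]

The prompt used is shown below; it is simply the English version of the Method 1 prompt above.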

prompt_system_2 = """

- You are an expert in the field of book classification. Your task is to answer questions as thoroughly and completely as possible. The responses will be used to build a local knowledge base.

- If a question can be answered in steps, please break it down and provide a step-by-step answer. It is preferable to include multiple high-quality examples.

- Please include all necessary information to ensure that the knowledge can be directly accessed locally later.

"""

3.4 Batch querying with GraphRAG local search

This part is more involved, so let's go through it in detail.

import csv
import re
import subprocess
import sys
import time

from prompt import prompt_user_2

def classify_book(title, body):
    # Build the classification question
    question = prompt_user_2.format(Title=title, Body=body)
    # Pass the command as an argument list without a shell, so quotes in the
    # title or body cannot break the command line
    command = [sys.executable, '-m', 'graphrag.query',
               '--root', './ragtest', '--method', 'local', question]
    # Run the command and capture its output
    result = subprocess.run(command, capture_output=True, text=True)
    # Return the raw stdout
    return result.stdout

# Input and output CSV files
input_file = 'books_top100.csv'
output_file = 'classified_books_graphrag.csv'

# Delay between requests, in seconds
delay_between_requests = 2

with open(input_file, mode='r', encoding='utf-8') as infile, open(output_file, mode='w', encoding='utf-8', newline='') as outfile:
    reader = csv.DictReader(infile)
    fieldnames = reader.fieldnames + ['Predicted Topics']
    writer = csv.DictWriter(outfile, fieldnames=fieldnames)
    writer.writeheader()
    for i, row in enumerate(reader, start=1):
        title = row['Title']
        body = row['Body']
        # Call classify_book, then pull the answer out of the CLI output
        output = classify_book(title, body)
        match = re.search(r'Local Search Response:\s*(.*)', output)
        if match:
            predicted_topics = match.group(1).strip()
        else:
            predicted_topics = 'Unknown'
        # Write the result to the CSV file
        row['Predicted Topics'] = predicted_topics
        writer.writerow(row)
        # Print progress, then wait before the next request
        print(i)
        time.sleep(delay_between_requests)

print("The book categorization has been completed, and the results have been written into the file named 'classified_books_graphrag.csv'.")

My first, straightforward idea was to call GraphRAG's own local query program. But the terminal command run through subprocess.run() could not take such a long prompt, so only the book's title and blurb are passed in as the query. The full instruction prompt was moved into the GraphRAG source code instead: following the query entry point, locate /graphrag/query/structured_search/local_search/system_prompt.py under the GraphRAG installation path.

The path above comes from this import in search.py:

from graphrag.query.structured_search.local_search.system_prompt import (
    LOCAL_SEARCH_SYSTEM_PROMPT,
)

The modified prompt:

prompt_system_3 = """

You are an expert in book classification with deep knowledge across various genres and categories. Your task is to classify a book based on its title and body text. The classification system is hierarchical, with multiple levels of categories. The book may belong to one or more categories, and if a specific leaf node category is not appropriate, the book may be classified at any level of the hierarchy. Please return all relevant classifications for the book.

### Hierarchical Classification System ###

hierarchical_tags = {{

"Nonfiction": {{

"Biography & Memoir": {{

"Arts & Entertainment Biographies & Memoirs": {{}},

"Political Figure Biographies & Memoirs": {{}},

"Historical Figure Biographies & Memoirs": {{}},

"Literary Figure Biographies & Memoirs": {{}}

}},

"Cooking": {{

"Regional & Ethnic Cooking": {{}},

"Cooking Methods": {{}},

"Food Memoir & Travel": {{}},

"Baking & Desserts": {{}},

"Wine & Beverage": {{}}

}},

"Arts & Entertainment": {{

"Art": {{}},

"Design": {{}},

"Film": {{}},

"Music": {{}},

"Performing Arts": {{}},

"Photography": {{}},

"Writing": {{}}

}},

"Business": {{

"Economics": {{}},

"Management": {{}},

"Marketing": {{}}

}},

"Crafts, Home & Garden": {{

"Crafts & Hobbies": {{}},

"Home & Garden": {{}},

"Weddings": {{}}

}},

"Games": {{}},

"Health & Fitness": {{

"Alternative Therapies": {{}},

"Diet & Nutrition": {{}},

"Exercise": {{}},

"Health & Reference": {{}}

}},

"History": {{

"Military History": {{

"World War II Military History": {{}},

"World War I Military History": {{}},

"1950 – Present Military History": {{}}

}},

"U.S. History": {{

"21st Century U.S. History": {{}},

"20th Century U.S. History": {{}},

"19th Century U.S. History": {{}},

"Civil War Period": {{}},

"Colonial/Revolutionary Period": {{}},

"Native American History": {{}}

}},

"World History": {{

"African World History": {{}},

"Ancient World History": {{}},

"Asian World History": {{}},

"European World History": {{}},

"Latin American World History": {{}},

"Middle Eastern World History": {{}},

"North American World History": {{}}

}}

}},

"Parenting": {{}},

"Pets": {{}},

"Politics": {{

"Domestic Politics": {{}},

"World Politics": {{}}

}},

"Popular Science": {{

"Science": {{}},

"Technology": {{}}

}},

"Psychology": {{}},

"Reference": {{

"Language": {{}},

"Test Preparation": {{}}

}},

"Religion & Philosophy": {{

"Religion": {{}},

"Philosophy": {{}}

}},

"Self-Improvement": {{

"Beauty": {{}},

"Inspiration & Motivation": {{}},

"Personal Finance": {{}},

"Personal Growth": {{}}

}},

"Sports": {{}},

"Travel": {{

"Travel: Africa": {{}},

"Travel: Asia": {{}},

"Travel: Australia & Oceania": {{}},

"Travel: Caribbean & Mexico": {{}},

"Travel: Central & South America": {{}},

"Travel: Europe": {{}},

"Travel: Middle East": {{}},

"Specialty Travel": {{}},

"Travel Writing": {{}},

"Travel: USA & Canada": {{}}

}}

}},

"Fiction": {{

"Fantasy": {{

"Contemporary Fantasy": {{}},

"Epic Fantasy": {{}},

"Fairy Tales": {{}},

"Urban Fantasy": {{}}

}},

"Gothic & Horror": {{}},

"Graphic Novels & Manga": {{}},

"Historical Fiction": {{}},

"Literary Fiction": {{}},

"Military Fiction": {{}},

"Mystery & Suspense": {{

"Cozy Mysteries": {{}},

"Crime Mysteries": {{}},

"Espionage Mysteries": {{}},

"Noir Mysteries": {{}},

"Suspense & Thriller": {{}}

}},

"Paranormal Fiction": {{}},

"Romance": {{

"Contemporary Romance": {{}},

"Erotica": {{}},

"Historical Romance": {{}},

"New Adult Romance": {{}},

"Paranormal Romance": {{}},

"Regency Romance": {{}},

"Suspense Romance": {{}},

"Western Romance": {{}}

}},

"Science Fiction": {{

"Cyber Punk": {{}},

"Military Science Fiction": {{}},

"Space Opera": {{}}

}},

"Spiritual Fiction": {{}},

"Western Fiction": {{}},

"Women’s Fiction": {{}}

}},

"Classics": {{

"Fiction Classics": {{}},

"Literary Collections": {{}},

"Literary Criticism": {{}},

"Nonfiction Classics": {{}}

}},

"Children’s Books": {{

"Step Into Reading": {{}},

"Children’s Middle Grade Books": {{

"Children’s Middle Grade Mystery & Detective Books": {{}},

"Children’s Middle Grade Action & Adventure Books": {{}},

"Children’s Middle Grade Fantasy & Magical Books": {{}},

"Children’s Middle Grade Historical Books": {{}},

"Children’s Middle Grade Sports Books": {{}}

}},

"Children’s Activity & Novelty Books": {{}},

"Children’s Board Books": {{}},

"Children’s Boxed Sets": {{}},

"Children’s Chapter Books": {{}},

"Childrens Media Tie-In Books": {{}},

"Children’s Picture Books": {{}}

}},

"Teen & Young Adult": {{

"Teen & Young Adult Action & Adventure": {{}},

"Teen & Young Adult Mystery & Suspense": {{}},

"Teen & Young Adult Fantasy Fiction": {{}},

"Teen & Young Adult Nonfiction": {{}},

"Teen & Young Adult Fiction": {{}},

"Teen & Young Adult Social Issues": {{}},

"Teen & Young Adult Historical Fiction": {{}},

"Teen & Young Adult Romance": {{}},

"Teen & Young Adult Science Fiction": {{}}

}},

"Religion & Philosophy": {{

"Bibles": {{}}

}},

"Humor": {{}},

"Poetry": {{}}

}}

### IMPORTANT INSTRUCTIONS ###

**Do not introduce any new or unlisted categories**. You must only use the categories provided in the hierarchical classification system above. The classification results should strictly adhere to this list.

### Instructions ###

Classify the book into the most appropriate categories from the provided hierarchical classification system. If the book belongs to multiple categories, list all relevant categories. If no specific leaf category is a perfect match, assign the book to the most appropriate higher-level category.

### Output Format ###

Return the list of categories as a single line of comma-separated values, **each value including the tag and its parent tags**.

### Examples ###

Example1:

Title: The End of the Suburbs

Body: "The government in the past created one American Dream at the expense of almost all others: the dream of a house, a lawn, a picket fence, two children, and a car. But there is no single American Dream anymore.”For nearly 70 years, the suburbs were as American as apple pie. As the middle class ballooned and single-family homes and cars became more affordable, we flocked to pre-fabricated communities in the suburbs, a place where open air and solitude offered a retreat from our dense, polluted cities. Before long, success became synonymous with a private home in a bedroom community complete with a yard, a two-car garage and a commute to the office, and subdivisions quickly blanketed our landscape.But in recent years things have started to change. An epic housing crisis revealed existing problems with this unique pattern of development, while the steady pull of long-simmering economic, societal and demographic forces has culminated in a Perfect Storm that has led to a profound shift in the way we desire to live.In The End of the Suburbs journalist Leigh Gallagher traces the rise and fall of American suburbia from the stately railroad suburbs that sprung up outside American cities in the 19th and early 20th centuries to current-day sprawling exurbs where residents spend as much as four hours each day commuting. Along the way she shows why suburbia was unsustainable from the start and explores the hundreds of new, alternative communities that are springing up around the country and promise to reshape our way of life for the better.Not all suburbs are going to vanish, of course, but Gallagher’s research and reporting show the trends are undeniable. Consider some of the forces at work: • The nuclear family is no more: Our marriage and birth rates are steadily declining, while the single-person households are on the rise. Thus, the good schools and family-friendly lifestyle the suburbs promised are increasingly unnecessary. 
• We want out of our cars: As the price of oil continues to rise, the hours long commutes forced on us by sprawl have become unaffordable for many. Meanwhile, today’s younger generation has expressed a perplexing indifference toward cars and driving. Both shifts have fueled demand for denser, pedestrian-friendly communities. • Cities are booming. Once abandoned by the wealthy, cities are experiencing a renaissance, especially among younger generations and families with young children. At the same time, suburbs across the country have had to confront never-before-seen rates of poverty and crime.Blending powerful data with vivid on the ground reporting, Gallagher introduces us to a fascinating cast of characters, including the charismatic leader of the anti-sprawl movement; a mild-mannered Minnesotan who quit his job to convince the world that the suburbs are a financial Ponzi scheme; and the disaffected residents of suburbia, like the teacher whose punishing commute entailed leaving home at 4 a.m. and sleeping under her desk in her classroom.Along the way, she explains why understanding the shifts taking place is imperative to any discussion about the future of our housing landscape and of our society itself—and why that future will bring us stronger, healthier, happier and more diverse communities for everyone."

Output: Domestic Politics, Nonfiction, Politics

Example2:

Title: First Aid Fast for Babies and Children

Body: "An indispensable guide for all parents and caretakers that covers a wide range of childhood emergencies.From anaphylaxis to burns to severe bleeding and bruising, First Aid Fast for Babies and Children offers clear advice, and step-by-step photographs show you what to do.This revised and updated fifth edition abides by all the latest health guidelines and makes first aid less daunting by giving more prominence to essential actions. Quick reference symbols at the end of every sequence indicate whether to seek medical advice, take your child to the hospital, or call an ambulance, and question-and-answer sections provide additional easy-to-access information. It even includes helpful advice on child safety in and around the home.First Aid Fast for Babies and Children gives you all the direction you need to deal with an injury or first aid emergency and keep your baby or child safe."

Output: Nonfiction, Parenting, Health & Fitness

"""

prompt_user_2 = "### Real Data ###Title: {Title} Body: {Body} Output: "

3.5 Computing accuracy and instance-based F1

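With the predictions in hand, and since the goal here is only to check whether the approach works, I use just subset accuracy (a sample counts as correct only if its predicted label set matches the gold set exactly) and flat F1 as the metrics, without hierarchical HMTC metrics (mainly because the classes would first have to be rewritten in the 0/1 encoding that my earlier evaluation program expects, and I was being lazy).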
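Instance-based (example-based) F1 computes F1 between each sample's gold and predicted label sets and then averages over samples; scikit-learn exposes the same per-sample averaging as `f1_score(..., average='samples')`. Below is a minimal pure-Python sketch that works directly on label sets. The function names are hypothetical, and scoring a sample whose gold and predicted sets are both empty as 1.0 is an assumption (scikit-learn controls that case through its `zero_division` parameter).

```python
def instance_f1(true_set, pred_set):
    """F1 between one sample's gold and predicted label sets."""
    if not true_set and not pred_set:
        return 1.0  # assumption: two empty sets count as a perfect match
    overlap = len(true_set & pred_set)
    # With P = |Y ∩ Ŷ| / |Ŷ| and R = |Y ∩ Ŷ| / |Y|, F1 simplifies to 2|Y ∩ Ŷ| / (|Y| + |Ŷ|)
    return 2 * overlap / (len(true_set) + len(pred_set))


def instance_based_f1(true_sets, pred_sets):
    """Average the per-sample F1 over all samples."""
    scores = [instance_f1(t, p) for t, p in zip(true_sets, pred_sets)]
    return sum(scores) / len(scores)


true_sets = [{"Fiction", "Western Fiction"}, {"Nonfiction", "Parenting"}]
pred_sets = [{"Fiction", "Western Fiction"}, {"Nonfiction", "Health & Fitness"}]
print(f"Instance-based F1: {instance_based_f1(true_sets, pred_sets):.2f}")
```

Here the first sample matches exactly (F1 = 1.0) and the second shares one of two labels (F1 = 0.5), so the average is 0.75. Unlike subset accuracy, the partially correct second sample still earns credit.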

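```python
import csv

from sklearn.metrics import accuracy_score, f1_score

true_topics_list = []
predicted_topics_list = []

input_file = 'classified_books_graphrag.csv'

# Read the CSV file: each row stores the gold topics and the LLM-predicted topics
# as comma-separated strings
with open(input_file, mode='r', newline='', encoding='utf-8') as file:
    reader = csv.DictReader(file)
    for row in reader:
        # Strip whitespace so "Fiction" and " Fiction" are treated as the same label
        true_topics = {t.strip() for t in row['Topics'].split(',') if t.strip()}
        predicted_topics = {t.strip() for t in row['Predicted Topics'].split(',') if t.strip()}
        true_topics_list.append(true_topics)
        predicted_topics_list.append(predicted_topics)

# Collect every label that appears in either the gold or the predicted sets;
# sorting fixes the column order of the binary matrix
all_labels = sorted(set(label for labels in true_topics_list + predicted_topics_list for label in labels))

# Convert each label set into a 0/1 indicator vector
true_binary = []
pred_binary = []
for true_set, pred_set in zip(true_topics_list, predicted_topics_list):
    true_binary.append([1 if label in true_set else 0 for label in all_labels])
    pred_binary.append([1 if label in pred_set else 0 for label in all_labels])

# On multilabel indicator input, accuracy_score is subset accuracy (exact match of the whole set)
accuracy = accuracy_score(true_binary, pred_binary)

# Flat F1 scores; zero_division=0 scores labels with no support as 0 without warnings
micro_f1 = f1_score(true_binary, pred_binary, average='micro', zero_division=0)
macro_f1 = f1_score(true_binary, pred_binary, average='macro', zero_division=0)

print(f"Accuracy: {accuracy:.2%}")
print(f"Micro-F1 Score: {micro_f1:.2f}")
print(f"Macro-F1 Score: {macro_f1:.2f}")
```

4. Experimental results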

LLM answering directly:

Accuracy: 10.00%

Micro-F1 Score: 0.61

Macro-F1 Score: 0.37

GraphRAG-assisted answering:

Accuracy: 15.00%

Micro-F1 Score: 0.62

Macro-F1 Score: 0.43

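Even on just 100 samples the improvement is already fairly noticeable: subset accuracy rises from 10% to 15% (15 versus 10 exact matches) and macro-F1 from 0.37 to 0.43, while micro-F1 barely changes (0.61 to 0.62).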

5. Related papers

Finally, here are two papers that also use LLMs to tackle hierarchical text classification.

5.1 "TELEClass: Taxonomy Enrichment and LLM-Enhanced Hierarchical Text Classification with Minimal Supervision"

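5.1.1 LLM-Enhanced Core Class Annotation

This step uses a large language model (LLM) to improve how each document's core classes are selected:

- LLM-generated key terms: for each class, the LLM generates a set of related key terms, enriching the original taxonomy.

- Structure-aware candidate core class selection: a similarity score between classes and documents is defined, and a top-down tree search picks the candidate classes most similar to the document.

- LLM-based core class selection: the LLM chooses each document's initial set of core classes from the candidates.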
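The structure-aware candidate selection can be pictured as a beam search down the taxonomy. The sketch below is a simplification, not the paper's exact procedure: the toy taxonomy, the word-overlap `similarity` (standing in for the paper's class-document similarity score), and the beam width `k` are all hypothetical. `class_terms` plays the role of the LLM-generated key terms.

```python
def similarity(doc_words, class_terms):
    """Hypothetical stand-in score: fraction of a class's key terms that occur in the document."""
    if not class_terms:
        return 0.0
    return len(doc_words & class_terms) / len(class_terms)


def top_down_candidates(doc, taxonomy, class_terms, root="ROOT", k=2):
    """Walk the taxonomy level by level, keeping the k most similar classes at each level."""
    doc_words = set(doc.lower().split())
    frontier = [root]
    candidates = []
    while frontier:
        # Only the children of classes kept at the previous level are considered
        children = [c for parent in frontier for c in taxonomy.get(parent, [])]
        if not children:
            break
        children.sort(key=lambda c: similarity(doc_words, class_terms[c]), reverse=True)
        frontier = children[:k]
        candidates.extend(frontier)
    return candidates


taxonomy = {
    "ROOT": ["Fiction", "Nonfiction"],
    "Fiction": ["Western Fiction", "Romance"],
    "Nonfiction": ["Parenting", "Politics"],
}
class_terms = {
    "Fiction": {"novel", "hero", "town"},
    "Nonfiction": {"guide", "advice", "data"},
    "Western Fiction": {"cowboy", "rope", "town", "bounty"},
    "Romance": {"love", "heart"},
    "Parenting": {"child", "baby", "parents"},
    "Politics": {"government", "policy"},
}
doc = "Fargo rides into town with a bounty on his head and a rope waiting"
print(top_down_candidates(doc, taxonomy, class_terms, k=1))
```

With `k=1` the search follows a single branch (Fiction, then Western Fiction); a larger `k` keeps more branches open, which matters for multi-label documents.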

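5.1.2 Corpus-Based Taxonomy Enrichment

In this step, the classes are further enriched with terms mined from the text corpus:

- Collect relevant documents: for each class, gather the documents that belong to that class or to one of its subclasses.

- Select class-indicative terms based on three factors:

  - Popularity: how frequently the term occurs in the class's relevant documents.

  - Distinctiveness: the term is uncommon in the sibling classes.

  - Semantic similarity: how semantically close the term is to the class name.

- Phrase mining: a tool such as AutoPhrase mines high-quality single-word and multi-word phrases from the corpus as candidate terms.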
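The three term-selection factors can be folded into one score. A minimal sketch, assuming popularity is the log document frequency within the class, distinctiveness is the class's share of the term's document frequency among itself and its siblings, semantic similarity is a precomputed number, and the three are combined by geometric mean. These formulas are illustrative choices, not necessarily the ones in the paper.

```python
import math


def doc_freq(term, docs):
    """Number of documents (each a set of words) that contain the term."""
    return sum(1 for d in docs if term in d)


def term_score(term, cls, class_docs, siblings, sem_sim):
    """Combine popularity, distinctiveness and semantic similarity for one candidate term."""
    df_in_class = doc_freq(term, class_docs[cls])
    # Popularity: how often the term appears in the class's own documents
    popularity = math.log(1 + df_in_class)
    # Distinctiveness: share of the term's document frequency held by this class vs. its siblings
    total = df_in_class + sum(doc_freq(term, class_docs[s]) for s in siblings)
    distinctiveness = df_in_class / total if total else 0.0
    # Semantic similarity to the class name, assumed precomputed (e.g. from embeddings)
    similarity = sem_sim.get((term, cls), 0.0)
    # Geometric mean: a term has to do reasonably well on all three factors
    return (popularity * distinctiveness * similarity) ** (1 / 3)


class_docs = {
    "Western Fiction": [{"cowboy", "town", "bounty"}, {"cowboy", "rope"}],
    "Romance": [{"love", "town"}, {"heart", "love"}],
}
sem_sim = {("cowboy", "Western Fiction"): 0.8, ("town", "Western Fiction"): 0.3}
for term in ["cowboy", "town"]:
    print(term, round(term_score(term, "Western Fiction", class_docs, ["Romance"], sem_sim), 3))
```

"cowboy" outranks "town" because it is frequent in Western Fiction, absent from the sibling Romance, and close to the class name, while "town" is shared with Romance.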

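5.1.3 Core Class Refinement with Enriched Taxonomy

The enriched class terms are then used to refine each document's core classes:

- Document and class embeddings: a pre-trained Sentence Transformer model encodes each entire document.

- Class representation: a class is represented by the average embedding of the documents that mention at least one of its indicative terms.

- Document-class matching score: the cosine similarity between the document and class representations gives the matching score, and differences between these similarities are used to identify the document's core classes.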
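The refinement step boils down to averaging embeddings and comparing cosine similarities. A pure-Python sketch, assuming the document embeddings are already computed (the paper uses a Sentence Transformer; here they are tiny hand-made vectors) and assuming that "similarity differences" means cutting the ranked scores at their largest drop, which is a simplification.

```python
import math


def mean_vector(vectors):
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def class_representation(cls_terms, docs, doc_vecs):
    """Average embedding of the documents that mention at least one class-indicative term."""
    hits = [vec for doc, vec in zip(docs, doc_vecs) if cls_terms & set(doc.lower().split())]
    return mean_vector(hits) if hits else None


def core_classes(doc_vec, class_reps):
    """Rank classes by cosine similarity and keep those above the largest gap in the ranking."""
    ranked = sorted(
        ((cosine(doc_vec, rep), c) for c, rep in class_reps.items() if rep is not None),
        reverse=True,
    )
    if len(ranked) < 2:
        return [c for _, c in ranked]
    gaps = [ranked[i][0] - ranked[i + 1][0] for i in range(len(ranked) - 1)]
    cut = gaps.index(max(gaps)) + 1
    return [c for _, c in ranked[:cut]]


docs = ["cowboy rides to town", "a bounty on the cowboy", "love and heart", "first aid for your baby"]
doc_vecs = [[1.0, 0.1, 0.0], [0.9, 0.0, 0.1], [0.0, 1.0, 0.0], [0.0, 0.1, 1.0]]
terms = {"Western Fiction": {"cowboy", "bounty"}, "Romance": {"love"}, "Parenting": {"baby"}}
class_reps = {c: class_representation(t, docs, doc_vecs) for c, t in terms.items()}
print(core_classes([0.95, 0.05, 0.05], class_reps))
```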

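5.1.4 Text Classifier Training with Path-Based Data Augmentation

The last step trains a text classifier using the refined core classes plus data augmentation:

- Path-based document generation: the LLM generates pseudo-documents from paths in the taxonomy, so that every class has at least a few positive documents.

- Classifier architecture: a simple text-matching network made of a document encoder initialized from pre-trained BERT-base and a log-bilinear matching network.

- Training: the classifier is trained on the refined core classes together with the generated pseudo-documents, using the standard binary cross-entropy loss.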
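Path-based augmentation starts by enumerating the root-to-leaf paths of the taxonomy and turning each path into a generation prompt. A sketch, assuming a dict-of-children taxonomy; the prompt wording in `path_prompt` is hypothetical and the actual LLM call is left out.

```python
def root_to_leaf_paths(taxonomy, node="ROOT", prefix=None):
    """Yield every path from the root's children down to a leaf class."""
    prefix = prefix or []
    children = taxonomy.get(node, [])
    if not children:
        yield prefix
        return
    for child in children:
        yield from root_to_leaf_paths(taxonomy, child, prefix + [child])


def path_prompt(path):
    """Hypothetical prompt asking an LLM for a pseudo-document that fits every class on the path."""
    return (
        "Write a short book blurb that belongs to the category path "
        + " -> ".join(path)
        + ". Mention details typical of each level."
    )


taxonomy = {
    "ROOT": ["Fiction", "Nonfiction"],
    "Fiction": ["Western Fiction", "Romance"],
    "Nonfiction": ["Parenting"],
}
for path in root_to_leaf_paths(taxonomy):
    print(path_prompt(path))
```

Because one pseudo-document is requested per leaf path, even a class with no real training documents gets positives; presumably each generated text is labeled with every class on its path.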

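TELEClass's novelty lies in combining the generative ability of LLMs with information from the text corpus to enrich the taxonomy, and in proposing an effective data augmentation strategy that tackles class imbalance in hierarchical text classification. As a result, TELEClass can train a strong hierarchical text classifier when the only supervision available is the class names.

Does this sound a little familiar? It has a lot in common with the idea behind this experiment: it also uses the LLM's generative ability to build a more detailed knowledge tree of classification labels. The difference is that it then uses this tree to train a classifier, instead of having the LLM return the answer directly.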

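5.2 "Retrieval-style In-Context Learning for Few-shot Hierarchical Text Classification"

5.2.1 Retrieval-style in-context learning framework

The framework proposed in the paper uses large language models (LLMs) for in-context learning, combined with a retrieval database that finds demonstrations relevant to the input text, to help generate hierarchical labels.

5.2.1.1 Building the retrieval database

- A pre-trained language model serves as the indexer and produces an index vector for each training sample.

- The index vectors are learned through continued training with three objectives: masked language modeling (MLM), hierarchical classification (CLS), and the newly proposed divergent contrastive learning (DCL).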
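Once the indexer has produced index vectors, retrieving demonstrations is a nearest-neighbour lookup. A sketch, assuming the vectors are already computed (toy numbers here) and using cosine similarity with a hypothetical `top_k` parameter; neither the paper's indexer nor any vector-search library is modeled.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_demonstrations(query_vec, database, top_k=2):
    """Return the top_k (text, labels) training samples whose index vectors are closest to the query."""
    scored = sorted(database, key=lambda item: cosine(query_vec, item["vec"]), reverse=True)
    return [(item["text"], item["labels"]) for item in scored[:top_k]]


database = [
    {"text": "A cowboy hunts a bounty", "labels": ["Fiction", "Western Fiction"], "vec": [0.9, 0.1]},
    {"text": "First aid for toddlers", "labels": ["Nonfiction", "Parenting"], "vec": [0.1, 0.9]},
    {"text": "Outlaws on the frontier", "labels": ["Fiction", "Western Fiction"], "vec": [0.8, 0.3]},
]
for text, labels in retrieve_demonstrations([1.0, 0.2], database):
    print(text, "->", ", ".join(labels))
```

The retrieved (text, labels) pairs are what would be placed in the prompt as demonstrations, much like the fixed Example1/Example2 blocks in this experiment's prompt, except that they are chosen per input.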

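5.2.1.2 Iterative strategy

- Rather than supplying a large number of multi-level HTC labels in the context at once, the paper proposes an iterative strategy that infers labels one level at a time, which greatly reduces the number of candidate labels.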
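The iterative strategy can be sketched as a loop that only ever shows the model the children of the label chosen at the previous level. The `choose` callback stands in for the LLM call and is hypothetical; here a keyword rule (`keyword_choose`, also hypothetical) plays that role so the sketch runs offline.

```python
def infer_path(text, taxonomy, choose, root="ROOT"):
    """Predict one label per level, offering only the children of the previous choice as candidates."""
    path = []
    node = root
    while taxonomy.get(node):
        candidates = taxonomy[node]
        # In the paper's setting this would be an LLM prompt listing only these candidates
        node = choose(text, candidates)
        if node not in candidates:
            break  # guard against answers outside the candidate list
        path.append(node)
    return path


def keyword_choose(text, candidates):
    """Offline stand-in for an LLM: pick the candidate whose keywords overlap the text most."""
    keywords = {
        "Fiction": {"hero", "town", "murder"},
        "Nonfiction": {"guide", "advice"},
        "Western Fiction": {"bounty", "rope", "town"},
        "Romance": {"love"},
        "Parenting": {"child", "baby"},
    }
    words = set(text.lower().split())
    return max(candidates, key=lambda c: len(words & keywords.get(c, set())))


taxonomy = {
    "ROOT": ["Fiction", "Nonfiction"],
    "Fiction": ["Western Fiction", "Romance"],
    "Nonfiction": ["Parenting"],
}
print(infer_path("A town tyrant and a bounty on his head", taxonomy, keyword_choose))
```

This sketch follows a single path; for a multi-label dataset such as BGC, `choose` would have to return several labels per level and the loop would branch on each of them.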

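5.2.2 Key components

5.2.2.1 Index vector representation

- Prompt templates are defined to obtain a representation for each HTC level; the prompt is concatenated with the original input text to form a new text.

- A pre-trained language model produces hidden-state vectors that serve as label-aware representations.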
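One way to picture the per-level index text: a template with one masked slot per level is concatenated with the input, and the hidden state at each slot would serve as that level's label-aware vector. The template wording and the `[MASK]` token are assumptions for illustration, whitespace splitting stands in for a real tokenizer, and running the language model itself is omitted.

```python
def build_index_text(text, num_levels, mask_token="[MASK]"):
    """Concatenate a hypothetical per-level prompt template with the original input text."""
    template = " ".join(f"Level {i + 1} label is {mask_token} ;" for i in range(num_levels))
    return f"{template} {text}"


def mask_positions(tokens, mask_token="[MASK]"):
    """Indices of the mask slots whose hidden states would be read out, one per level."""
    return [i for i, tok in enumerate(tokens) if tok == mask_token]


text = "Fargo tangles with a terrible town tyrant!"
index_text = build_index_text(text, num_levels=2)
print(index_text)
print(mask_positions(index_text.split()))
```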

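5.2.2.2 Label descriptions

- The LLM generates descriptions of label paths, reducing errors caused by insufficient label information.

5.2.2.3 Hierarchical indexer training

- The indexer is trained with the MLM, CLS, and DCL objectives:

  - MLM: predict the words behind the masked tokens in the input.

  - CLS: predict the HTC labels from each level's index vector.

  - DCL: use label text to select positive and negative samples, and apply contrastive learning so that index vectors of similar labels move closer together and those of dissimilar labels move apart.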
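DCL is a contrastive objective; the paper's exact way of choosing positives and negatives from label text is not reproduced here. As a generic illustration, the sketch computes a standard InfoNCE-style loss for one anchor: it is small when the anchor's index vector is closer to its positive than to its negatives. The vectors and the temperature `tau` are made up.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def info_nce(anchor, positive, negatives, tau=0.1):
    """-log( exp(sim(a, p) / tau) / sum over p and all negatives n of exp(sim(a, x) / tau) )."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


anchor = [1.0, 0.0]
close_positive = [0.9, 0.1]
far_negatives = [[0.0, 1.0], [-1.0, 0.2]]
print(round(info_nce(anchor, close_positive, far_negatives), 4))  # well-separated: small loss
print(round(info_nce(anchor, [0.0, 1.0], [[0.9, 0.1]]), 4))      # positive farther than negative: large loss
```

Minimizing this loss over many anchors is what pulls index vectors of similar labels together and pushes dissimilar ones apart.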

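This one is even more interesting: it even has a retrieval database, so in principle it is very close to this experiment. The training set serves as the database over which index vectors are learned. Here too, however, a separate component, the indexer, has to be trained, and the LLM only generates labels with the retrieved demonstrations as context.

This suggests another possible research direction: combining the knowledge graph generated by GraphRAG with a graph neural network (GNN) classifier for inference.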