1. Purpose

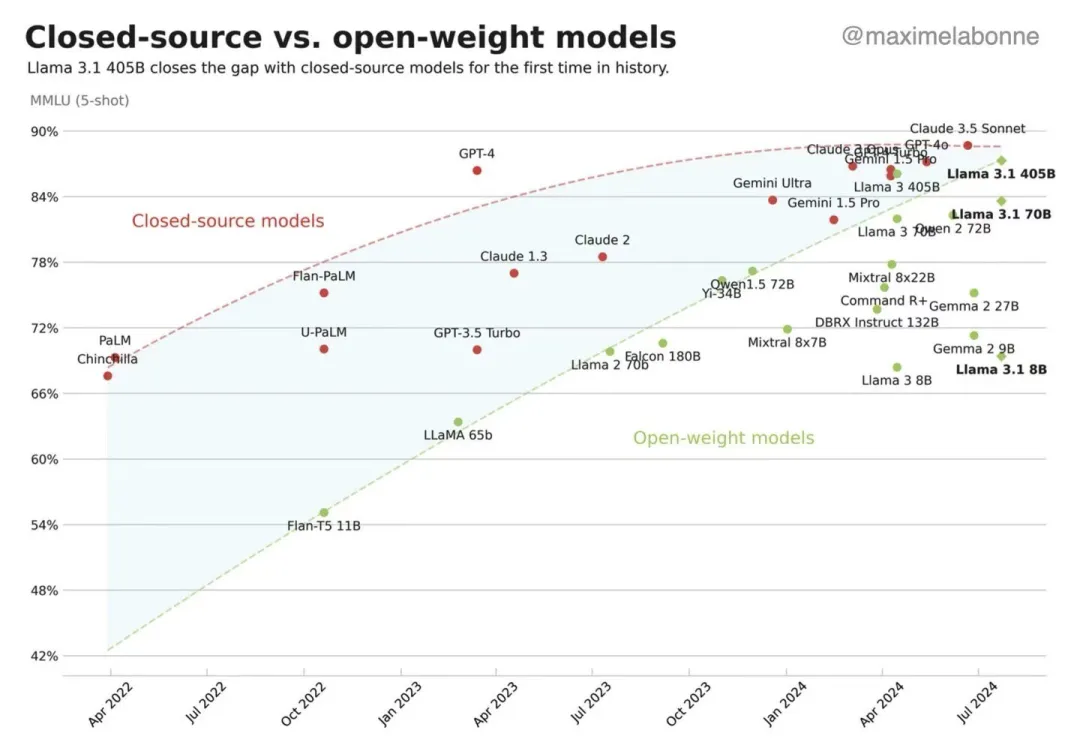

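Unsupervised representation learning has been highly successful in natural language processing, because language tasks have discrete signal spaces (words, sub-word units, etc.) that make it easy to build tokenized dictionaries. Images, by contrast, are continuous, high-dimensional signals, so building such a dictionary is harder.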

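Existing unsupervised visual representation learning methods can be viewed as building dynamic dictionaries: the "keys" are sampled from the data (images or patches) and are represented by an encoder network.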

The dictionary should satisfy two conditions: it should be large, and its keys should stay consistent as they evolve during training.

2. Method

Momentum Contrast (MoCo)

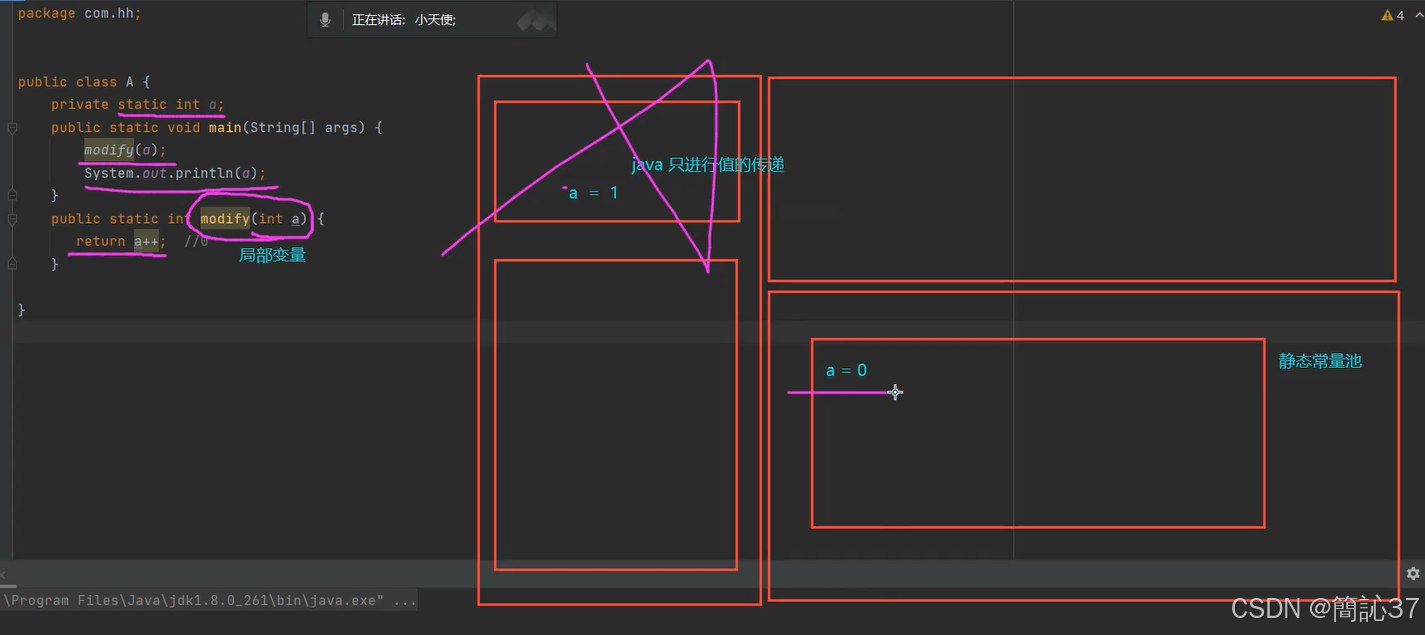

1)contrastive learning

dictionary look-up

-> loss: InfoNCE

-> momentum

the dictionary is dynamic: the keys are randomly sampled, and the key encoder evolves during training
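To make the dictionary look-up concrete, here is a minimal sketch of the InfoNCE loss for a single query, written in plain Python. It treats the look-up as a (1 + K)-way classification in which the positive key is the correct class. The function name `info_nce`, the list-based vectors, and the default temperature are illustrative assumptions, not code from the paper.

```python
import math


def info_nce(q, k_pos, negatives, temperature=0.07):
    """InfoNCE loss for one query vector.

    q, k_pos: lists of floats (query and its positive key).
    negatives: list of K key vectors drawn from the dictionary.
    temperature: illustrative default; treat it as a tunable hyper-parameter.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    # Logit 0 is the positive pair; the remaining K logits are negatives.
    logits = [dot(q, k_pos) / temperature]
    logits += [dot(q, k) / temperature for k in negatives]

    # Numerically stable log-sum-exp over all 1 + K logits.
    top = max(logits)
    log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))

    # -log softmax probability of the positive key.
    return log_denominator - logits[0]
```

The loss is low when the query is similar to its positive key and dissimilar to every other key in the dictionary.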

2)dictionary as a queue

-> large: decouple the dictionary size (can be set as a hyper-parameter) from the mini-batch size

-> consistent: the encoded representations of the current mini-batch are enqueued, and the oldest are dequeued.

The dictionary keys come from the preceding several mini-batches and are encoded by a slowly progressing key encoder, implemented as a momentum-based moving average of the query encoder.
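The queue behaviour described above can be sketched with a bounded `collections.deque`. The class name `KeyQueue` and its methods are hypothetical, and the keys are plain Python objects rather than tensors; the point is only that the dictionary size is set by `capacity`, independent of the mini-batch size.

```python
from collections import deque


class KeyQueue:
    """Fixed-capacity FIFO dictionary of encoded keys (illustrative sketch)."""

    def __init__(self, capacity):
        # A deque with maxlen silently discards the oldest items when full.
        self.keys = deque(maxlen=capacity)

    def enqueue(self, batch_keys):
        # Append the current mini-batch; the oldest keys fall off the left end.
        self.keys.extend(batch_keys)

    def negatives(self):
        # Snapshot of the dictionary, oldest key first.
        return list(self.keys)


queue = KeyQueue(capacity=4)
queue.enqueue(["k0", "k1"])
queue.enqueue(["k2", "k3"])
queue.enqueue(["k4", "k5"])  # "k0" and "k1" are dequeued here
print(queue.negatives())     # ['k2', 'k3', 'k4', 'k5']
```

Dropping the oldest mini-batch first is convenient because those keys were produced by the most outdated encoder.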

3)momentum update

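$$\theta_k \leftarrow m\,\theta_k + (1 - m)\,\theta_q$$

where $\theta_q$ and $\theta_k$ are the parameters of the query encoder and the key encoder, and $m \in [0, 1)$ is the momentum coefficient.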

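-> Only the query encoder's parameters $\theta_q$ are updated by back-propagation

-> Although the keys in different mini-batches are encoded by different encoders, the differences among these encoders are small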
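As a small illustration of this step, the key encoder's parameters move toward the query encoder's parameters as an exponential moving average, θ_k ← m·θ_k + (1 − m)·θ_q. The sketch below uses flat lists of floats in place of real network weights; the function name `momentum_update` and the default value of `m` are assumptions for illustration.

```python
def momentum_update(key_params, query_params, m=0.999):
    """Return key-encoder parameters after one momentum step.

    key_params, query_params: equal-length lists of floats standing in
    for the two encoders' weights (a simplification for illustration).
    m: momentum coefficient in [0, 1); larger values mean slower change.
    """
    if len(key_params) != len(query_params):
        raise ValueError("encoders must have the same number of parameters")
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


theta_k = [1.0, 0.0]
theta_q = [0.0, 1.0]
# With a large m, the key encoder changes only slightly per step.
theta_k = momentum_update(theta_k, theta_q, m=0.9)
```

No gradient flows into the key encoder here; it only follows the query encoder, which is why keys from neighbouring mini-batches remain comparable.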

4)pretext task

instance discrimination: a query matches a key if they are encoded views (e.g. different crops) of the same image

5)shuffling BN

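Perform BN on the samples independently for each GPU. For the key encoder, shuffle the sample order of the mini-batch before distributing it among GPUs and shuffle back after encoding, so that intra-batch communication among samples (through shared BN statistics) cannot leak information about which key matches which query.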
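The shuffle, split, encode, and unshuffle order can be sketched on a single machine as follows. This is only a simulation under stated assumptions: `encode_on_gpu` is a hypothetical callable standing in for a key encoder whose BN statistics are computed per GPU, and the "GPUs" are just consecutive chunks of a list.

```python
import random


def shuffled_key_encode(batch, num_gpus, encode_on_gpu, rng=random):
    """Encode keys with a per-GPU shuffle, then restore the original order.

    batch: list of samples for the key encoder.
    encode_on_gpu: hypothetical function mapping a chunk (list) to a list
        of encoded keys of the same length.
    rng: object with a shuffle() method, e.g. random.Random(seed).
    """
    n = len(batch)
    if n % num_gpus != 0:
        raise ValueError("batch size must be divisible by num_gpus")

    # Random permutation of sample positions.
    perm = list(range(n))
    rng.shuffle(perm)
    shuffled = [batch[i] for i in perm]

    # Each simulated GPU encodes its own chunk independently.
    per_gpu = n // num_gpus
    encoded_shuffled = []
    for g in range(num_gpus):
        chunk = shuffled[g * per_gpu:(g + 1) * per_gpu]
        encoded_shuffled.extend(encode_on_gpu(chunk))

    # Undo the shuffle so key i lines up with query i again.
    encoded = [None] * n
    for pos, original_index in enumerate(perm):
        encoded[original_index] = encoded_shuffled[pos]
    return encoded
```

Because the query batch keeps its original order while the key batch is shuffled, a query and its positive key are normalized with statistics from different subsets of samples.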
