The previous article introduced the paper Stream-K: Work-centric Parallel Decomposition for Dense Matrix-Matrix Multiplication on the GPU. This article analyzes the Stream-K implementation in CUTLASS.

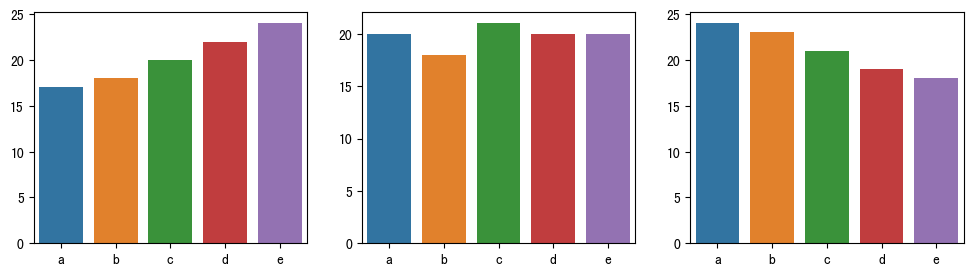

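cutlass's examples/47_ampere_gemm_universal_streamk demonstrates the GEMM Stream-K algorithm on the Ampere architecture. It compares the performance of a plain GEMM and of the Split-K algorithm against Stream-K. The code is organized as follows:

- At the Device level, GemmUniversal supports GEMM, Split-K and Stream-K through a single interface. Most of its implementation lives in its base class GemmUniversalBase.

- At the Kernel level, GemmUniversal implements GEMM and Split-K, and GemmUniversalStreamk implements Stream-K:

  - The two share many components and configurations through DefaultGemmUniversal, DefaultGemm, Gemm and so on. A complete Gemm kernel type is built, but only its components are used.

  - The generic kernel template Kernel2 calls GemmUniversal::invoke or GemmUniversalStreamk::invoke. The main work happens in GemmUniversal::run_with_swizzle and GemmUniversalStreamk::gemm, respectively.

- At the Threadblock level, there are again two branches, GemmIdentityThreadblockSwizzle and ThreadblockSwizzleStreamK:

  - The mainloop is a 4-stage MmaMultistage, and the iterators for the A and B matrices are PredicatedTileAccessIterator.

  - The Epilogue inherits from both EpilogueBase and EpilogueBaseStreamK, and DefaultEpilogueTensorOp specifies PredicatedTileIterator as the output iterator.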
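A host-only C++ analogy helps picture the Kernel2 indirection. A single entry point is templated on the kernel class. It creates that class's SharedStorage and forwards to the class's static invoke. As a result, the same launch path serves both kernel::GemmUniversal and kernel::GemmUniversalStreamk. ToyGemmKernel, ToyStreamkKernel and kernel2 are hypothetical names used only for this sketch. The real Kernel2 is a __global__ function whose shared storage lives in dynamic shared memory. The toy invoke functions return strings only so that the dispatch can be checked on the host.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for the data-parallel / Split-K kernel class.
struct ToyGemmKernel {
  struct Params { int tiles; };
  struct SharedStorage { int scratch[4]; };
  // Returns a label so the dispatch path can be checked on the host.
  static std::string invoke(Params const& p, SharedStorage&) {
    return "gemm:" + std::to_string(p.tiles);
  }
};

// Hypothetical stand-in for the Stream-K kernel class.
struct ToyStreamkKernel {
  struct Params { int iters_per_cta; };
  struct SharedStorage { int scratch[8]; };
  static std::string invoke(Params const& p, SharedStorage&) {
    return "streamk:" + std::to_string(p.iters_per_cta);
  }
};

// Generic entry point in the spirit of Kernel2<GemmKernel>: the kernel type
// supplies Params, SharedStorage and a static invoke().
template <typename GemmKernel>
std::string kernel2(typename GemmKernel::Params const& params) {
  typename GemmKernel::SharedStorage shared_storage{};
  return GemmKernel::invoke(params, shared_storage);
}
```

With this pattern, supporting a new kernel only requires a class that provides the same three members. The launch code does not change.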

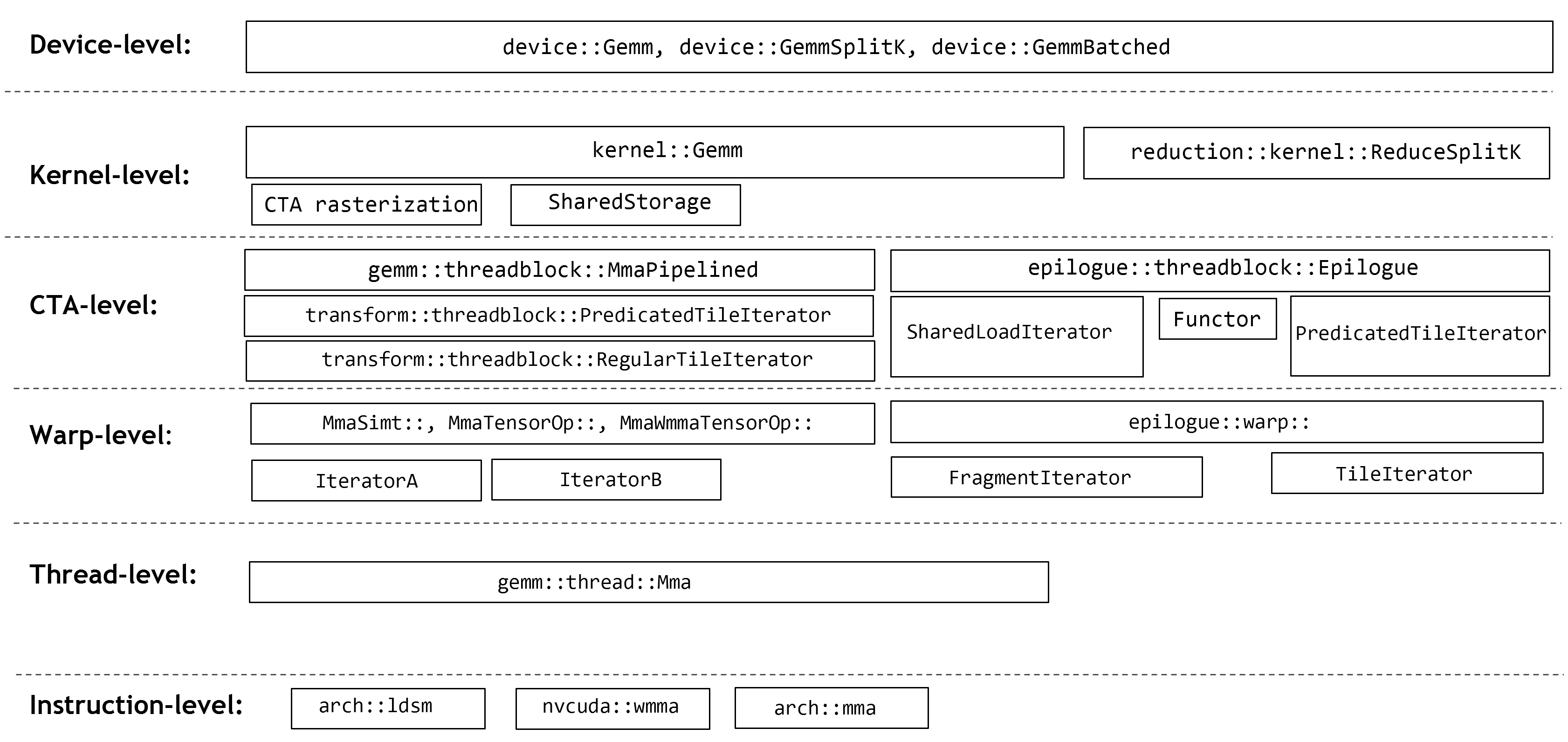

The hierarchy shown in CUTLASS GEMM Components is a useful reference for understanding this structure.

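Notes:

- The cutlass repository currently contains old and new code side by side. This example uses the 2.x API.

- The paper's Data-parallel and Fixed-split decompositions both map to the kGemm mode (Fixed-split is kGemm with more than one K slice). The kGemmSplitKParallel mode corresponds to GemmSplitKParallel.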

ampere_gemm_universal_streamk.cu

Check the CUDA Toolkit version.

```cpp
/// Program entrypoint
int main(int argc, const char **argv)
{
  // CUTLASS must be compiled with CUDA 11.0 Toolkit to run these examples.
  if (!(__CUDACC_VER_MAJOR__ >= 11)) {
    std::cerr << "Ampere Tensor Core operations must be compiled with CUDA 11.0 Toolkit or later." << std::endl;

    // Returning zero so this test passes on older Toolkits. Its actions are no-op.
    return 0;
  }
```

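cudaGetDevice is a CUDA Runtime API call that returns the device currently in use.

cudaGetDeviceProperties returns information about a compute device as a cudaDeviceProp.

Check the device's compute capability. SM80 or higher is required.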

```cpp
  // Current device must have compute capability at least 80
  cudaDeviceProp props;
  int current_device_id;
  CUDA_CHECK(cudaGetDevice(&current_device_id));
  CUDA_CHECK(cudaGetDeviceProperties(&props, current_device_id));
  if (!((props.major * 10 + props.minor) >= 80))
  {
    std::cerr << "Ampere Tensor Core operations must be run on a machine with compute capability at least 80."
              << std::endl;

    // Returning zero so this test passes on older Toolkits. Its actions are no-op.
    return 0;
  }
```
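The compute-capability test above is plain arithmetic on cudaDeviceProp::major and cudaDeviceProp::minor. The sketch below restates it as host-only constexpr helpers so that the encoding and the SM80 threshold are easy to see. sm_version and supports_ampere_tensor_cores are hypothetical names.

```cpp
#include <cassert>

// Encode (major, minor) as major * 10 + minor, as main() does.
constexpr int sm_version(int major, int minor) { return major * 10 + minor; }

// Same threshold as the example: at least SM80.
constexpr bool supports_ampere_tensor_cores(int major, int minor) {
  return sm_version(major, minor) >= 80;
}

static_assert(supports_ampere_tensor_cores(8, 0), "SM80 passes");
static_assert(supports_ampere_tensor_cores(8, 6), "SM86 passes");
static_assert(!supports_ampere_tensor_cores(7, 5), "SM75 is rejected");
```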

Create an Options struct.

Options::parse parses the command-line arguments through a CommandLine struct.

```cpp
  // Parse commandline options
  Options options("ampere_streamk_gemm");
  options.parse(argc, argv);

  if (options.help) {
    options.print_usage(std::cout) << std::endl;
    return 0;
  }

  std::cout <<
    options.iterations << " timing iterations of " <<
    options.problem_size.m() << " x " <<
    options.problem_size.n() << " x " <<
    options.problem_size.k() << " matrix-matrix multiply" << std::endl;

  if (!options.valid()) {
    std::cerr << "Invalid problem." << std::endl;
    return -1;
  }
```

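HostTensor::resize changes the logical size of the tensor.

HostTensor::host_view returns a TensorView object.

TensorFillRandomUniform generates random numbers with std::rand. Its last argument is the number of fractional bits to keep, so passing 0 produces integer values.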

```cpp
  //
  // Initialize GEMM datasets
  //

  // Initialize tensors using CUTLASS helper functions
  options.tensor_a.resize(options.problem_size.mk());       // <- Create matrix A with dimensions M x K
  options.tensor_b.resize(options.problem_size.kn());       // <- Create matrix B with dimensions K x N
  options.tensor_c.resize(options.problem_size.mn());       // <- Create matrix C with dimensions M x N
  options.tensor_d.resize(options.problem_size.mn());       // <- Create matrix D with dimensions M x N used to store output from CUTLASS kernel
  options.tensor_ref_d.resize(options.problem_size.mn());   // <- Create matrix D with dimensions M x N used to store output from reference kernel

  // Fill matrix A on host with uniform-random data [-2, 2]
  cutlass::reference::host::TensorFillRandomUniform(
      options.tensor_a.host_view(),
      1,
      ElementA(2),
      ElementA(-2),
      0);

  // Fill matrix B on host with uniform-random data [-2, 2]
  cutlass::reference::host::TensorFillRandomUniform(
      options.tensor_b.host_view(),
      1,
      ElementB(2),
      ElementB(-2),
      0);

  // Fill matrix C on host with uniform-random data [-2, 2]
  cutlass::reference::host::TensorFillRandomUniform(
      options.tensor_c.host_view(),
      1,
      ElementC(2),
      ElementC(-2),
      0);
```
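The fill can be modelled on the host as follows. Values are drawn uniformly between min and max. When bits is non-negative, everything beyond bits fractional bits is truncated toward zero, so bits = 0 produces integers. fill_uniform_truncated is a hypothetical helper that uses <random> instead of std::rand. Its argument order (max before min) follows the calls above. The exact distribution and rounding of the CUTLASS implementation may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical model of a uniform fill in [min, max) that keeps only `bits`
// fractional bits; bits < 0 keeps full precision.
std::vector<double> fill_uniform_truncated(std::size_t count, uint64_t seed,
                                           double max, double min, int bits) {
  std::mt19937_64 rng(seed);
  std::uniform_real_distribution<double> dist(min, max);
  std::vector<double> out(count);
  for (double& v : out) {
    double r = dist(rng);
    if (bits >= 0) {
      double scale = std::ldexp(1.0, bits);  // 2^bits
      r = std::trunc(r * scale) / scale;     // drop the remaining fraction
    }
    v = r;
  }
  return out;
}
```

Integer-valued inputs keep every product and partial sum of the GEMM exactly representable. This is what makes an exact comparison with the reference result possible later on.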

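HostTensor::sync_device copies the data to the device.

HostTensor::device_ref returns a TensorRef object.

DeviceGemmReference is the reference Gemm. It launches a reference kernel to compute the expected result.

HostTensor::sync_host copies the data back to the host.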

```cpp
  //
  // Compute reference output
  //

  // Copy data from host to GPU
  options.tensor_a.sync_device();
  options.tensor_b.sync_device();
  options.tensor_c.sync_device();

  // Zero-initialize reference output matrix D
  cutlass::reference::host::TensorFill(options.tensor_ref_d.host_view());
  options.tensor_ref_d.sync_device();

  // Create instantiation for device reference gemm kernel
  DeviceGemmReference gemm_reference;

  // Launch device reference gemm kernel
  gemm_reference(
    options.problem_size,
    ElementAccumulator(options.alpha),
    options.tensor_a.device_ref(),
    options.tensor_b.device_ref(),
    ElementAccumulator(options.beta),
    options.tensor_c.device_ref(),
    options.tensor_ref_d.device_ref());

  // Wait for kernels to finish
  CUDA_CHECK(cudaDeviceSynchronize());

  // Copy output data from reference kernel to host for comparison
  options.tensor_ref_d.sync_host();
```

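When options.split_k_factor == 1, Basic-DP is compared against Stream-K.

The run function template runs the kernel instantiated from the given template arguments.

DeviceGemmBasic and DeviceGemmStreamK are both GemmUniversal. The former uses GemmIdentityThreadblockSwizzle, and the latter uses ThreadblockSwizzleStreamK.

Afterwards, options.split_k_factor is incremented.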

```cpp
  //
  // Evaluate CUTLASS kernels
  //

  // Test default operation
  if (options.split_k_factor == 1)
  {
    // Compare basic data-parallel version versus StreamK version using default load-balancing heuristics
    Result basic_dp         = run<DeviceGemmBasic>("Basic data-parallel GEMM", options);
    Result streamk_default  = run<DeviceGemmStreamK>("StreamK GEMM with default load-balancing", options);

    printf(" Speedup vs Basic-DP: %.3f\n", (basic_dp.avg_runtime_ms / streamk_default.avg_runtime_ms));

    // Show that StreamK can emulate basic data-parallel GEMM when we set the number of SMs to load-balance across = 1
    options.avail_sms       = 1;        // Set loadbalancing width to 1 SM (no load balancing)
    Result streamk_dp       = run<DeviceGemmStreamK>("StreamK emulating basic data-parallel GEMM", options);
    options.avail_sms       = -1;       // Reset loadbalancing width to unspecified SMs (i.e., the number of device SMs)

    printf(" Speedup vs Basic-DP: %.3f\n", (basic_dp.avg_runtime_ms / streamk_dp.avg_runtime_ms));

    options.split_k_factor++;     // Increment splitting factor for next evaluation
  }
```

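At this point options.split_k_factor is greater than 1, either because it was given on the command line or because it was incremented above. Basic Split-K is therefore compared against Stream-K emulating Split-K.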

```cpp
  // Show that StreamK can emulate "Split-K" with a tile-splitting factor
  Result basic_splitk = run<DeviceGemmBasic>(
    std::string("Basic split-K GEMM with tile-splitting factor ") + std::to_string(options.split_k_factor),
    options);

  Result streamk_splitk = run<DeviceGemmStreamK>(
    std::string("StreamK emulating Split-K GEMM with tile-splitting factor ") + std::to_string(options.split_k_factor),
    options);

  printf(" Speedup vs Basic-SplitK: %.3f\n", (basic_splitk.avg_runtime_ms / streamk_splitk.avg_runtime_ms));

  return 0;
}
```

run

TensorFill fills a tensor with a scalar element (zero by default).

```cpp
/// Execute a given example GEMM computation
template <typename DeviceGemmT>
Result run(std::string description, Options &options)
{
  // Display test description
  std::cout << std::endl << description << std::endl;

  // Zero-initialize test output matrix D
  cutlass::reference::host::TensorFill(options.tensor_d.host_view());
  options.tensor_d.sync_device();
```

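Create a GemmUniversal object.

args_from_options has two versions, one for DeviceGemmBasic and one for DeviceGemmStreamK. It builds GemmUniversal::Arguments from the Options. At the device level this type is GemmUniversalBase::Arguments, which in turn is the kernel's Arguments type (kernel::GemmUniversal::Arguments or kernel::GemmUniversalStreamk::Arguments).

GemmUniversalBase::get_workspace_size returns the workspace size, in bytes, required by the problem geometry these arguments describe.

allocation is DeviceAllocation. Its constructor calls allocate to reserve device memory.

GemmUniversalBase::can_implement checks whether the grid dimensions exceed their limits and whether the problem shape meets the alignment requirements.

GemmUniversalBase::initialize initializes the parameters.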

```cpp
  // Instantiate CUTLASS kernel depending on templates
  DeviceGemmT device_gemm;

  // Create a structure of gemm kernel arguments suitable for invoking an instance of DeviceGemmT
  auto arguments = args_from_options(device_gemm, options, options.tensor_a, options.tensor_b, options.tensor_c, options.tensor_d);

  // Using the arguments, query for extra workspace required for matrix multiplication computation
  size_t workspace_size = DeviceGemmT::get_workspace_size(arguments);

  // Allocate workspace memory
  cutlass::device_memory::allocation<uint8_t> workspace(workspace_size);

  // Check the problem size is supported or not
  CUTLASS_CHECK(device_gemm.can_implement(arguments));

  // Initialize CUTLASS kernel with arguments and workspace pointer
  CUTLASS_CHECK(device_gemm.initialize(arguments, workspace.get()));
```

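Functional test.

Call the argument-less GemmUniversalBase::operator().

TensorEquals checks that every element of the output equals the reference value exactly. Strict equality is achievable here because A, B and C were filled with integer values. The products and partial sums are small integers that the float accumulator represents exactly, so the CUTLASS kernel and the reference kernel compute the same value regardless of summation order.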

```cpp
  // Correctness / Warmup iteration
  CUTLASS_CHECK(device_gemm());

  // Copy output data from CUTLASS and reference kernel to host for comparison
  options.tensor_d.sync_host();

  // Check if output from CUTLASS kernel and reference kernel are equal or not
  Result result;
  result.passed = cutlass::reference::host::TensorEquals(
    options.tensor_d.host_view(),
    options.tensor_ref_d.host_view());

  std::cout << " Disposition: " << (result.passed ? "Passed" : "Failed") << std::endl;
```
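The order-independence argument can be checked on the host. The sketch accumulates the same integer-valued dot product in float twice, once front-to-back and once back-to-front. The two results are bit-identical because every partial sum stays far below 2^24. dot_forward, dot_reverse and small_integers are hypothetical helpers. Half-precision storage of the operands and of D is not modelled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// Dot product accumulated in float, front to back.
float dot_forward(const std::vector<float>& a, const std::vector<float>& b) {
  float acc = 0.0f;
  for (std::size_t k = 0; k < a.size(); ++k) acc += a[k] * b[k];
  return acc;
}

// Same dot product accumulated back to front: a different reduction order,
// as happens when K is split across thread blocks.
float dot_reverse(const std::vector<float>& a, const std::vector<float>& b) {
  float acc = 0.0f;
  for (std::size_t k = a.size(); k-- > 0;) acc += a[k] * b[k];
  return acc;
}

// Integers in [-2, 2], deterministic for a fixed seed.
std::vector<float> small_integers(std::size_t n, unsigned seed) {
  std::srand(seed);
  std::vector<float> v(n);
  for (float& x : v) x = static_cast<float>(std::rand() % 5 - 2);
  return v;
}
```

With non-integer inputs the two orders would generally round differently. Comparing against a reference would then need a tolerance instead of TensorEquals.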

Performance test.

GpuTimer measures elapsed time with cudaEvent.

gflops is the achieved computational throughput.

```cpp
  // Run profiling loop
  if (options.iterations > 0)
  {
    GpuTimer timer;
    timer.start();
    for (int iter = 0; iter < options.iterations; ++iter) {
      CUTLASS_CHECK(device_gemm());
    }
    timer.stop();

    // Compute average runtime and GFLOPs.
    float elapsed_ms = timer.elapsed_millis();
    result.avg_runtime_ms = double(elapsed_ms) / double(options.iterations);
    result.gflops = options.gflops(result.avg_runtime_ms / 1000.0);

    std::cout << " Avg runtime: " << result.avg_runtime_ms << " ms" << std::endl;
    std::cout << " GFLOPs: " << result.gflops << std::endl;
  }

  if (!result.passed) {
    exit(-1);
  }

  return result;
}
```
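The timing arithmetic in run can be restated as two small functions. The average runtime divides the event-measured total by the iteration count. For throughput, the sketch assumes the usual convention of 2 × M × N × K floating-point operations per GEMM (one multiply and one add per multiply-accumulate); this formula is not copied from Options::gflops. avg_runtime_ms and gemm_gflops are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Average time per launch, given the total elapsed time of the timing loop.
double avg_runtime_ms(float elapsed_ms, int iterations) {
  return double(elapsed_ms) / double(iterations);
}

// GFLOP/s for an M x N x K GEMM, counting one multiply-add as 2 flops.
double gemm_gflops(int64_t m, int64_t n, int64_t k, double runtime_s) {
  double flops = 2.0 * double(m) * double(n) * double(k);
  return flops / 1.0e9 / runtime_s;
}
```

Because the whole loop is timed at once, per-launch overhead is included in the average. Timing a single launch would also be distorted by warm-up effects.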

GemmUniversal

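GemmUniversal is a stateful, reusable GEMM handle. Once initialized for a given GEMM computation (problem geometry and data references), it can be reused across different GEMM problems with the same geometry. After initialization, the problem geometry and the references to workspace memory cannot be updated. The universal GEMM supports serial reduction, parallel reduction, batched-strided and batched-array variants.

Most of the implementation lives in GemmUniversalBase.

DefaultGemmUniversal::GemmKernel is either kernel::GemmUniversal or kernel::GemmUniversalStreamk.

The default EpilogueOutputOp is DefaultGemmConfiguration::EpilogueOutputOp, i.e. LinearCombination.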

```cpp
/*!
  GemmUniversal is a stateful, reusable GEMM handle. Once initialized for a given GEMM computation
  (problem geometry and data references), it can be reused across different GEMM problems having the
  same geometry. (Once initialized, details regarding problem geometry and references to workspace memory
  cannot be updated.)

  The universal GEMM accommodates serial reductions, parallel reductions, batched strided, and
  batched array variants.
*/
template <
  /// Element type for A matrix operand
  typename ElementA_,
  /// Layout type for A matrix operand
  typename LayoutA_,
  /// Element type for B matrix operand
  typename ElementB_,
  /// Layout type for B matrix operand
  typename LayoutB_,
  /// Element type for C and D matrix operands
  typename ElementC_,
  /// Layout type for C and D matrix operands
  typename LayoutC_,
  /// Element type for internal accumulation
  typename ElementAccumulator_ = ElementC_,
  /// Operator class tag
  typename OperatorClass_ = arch::OpClassSimt,
  /// Tag indicating architecture to tune for.  This is the minimum SM that
  /// supports the intended feature. The device kernel can be built
  /// targeting any SM larger than this number.
  typename ArchTag_ = arch::Sm70,
  /// Threadblock-level tile size (concept: GemmShape)
  typename ThreadblockShape_ = typename DefaultGemmConfiguration<
      OperatorClass_, ArchTag_, ElementA_, ElementB_, ElementC_,
      ElementAccumulator_>::ThreadblockShape,
  /// Warp-level tile size (concept: GemmShape)
  typename WarpShape_ = typename DefaultGemmConfiguration<
      OperatorClass_, ArchTag_, ElementA_, ElementB_, ElementC_,
      ElementAccumulator_>::WarpShape,
  /// Instruction-level tile size (concept: GemmShape)
  typename InstructionShape_ = typename DefaultGemmConfiguration<
      OperatorClass_, ArchTag_, ElementA_, ElementB_, ElementC_,
      ElementAccumulator_>::InstructionShape,
  /// Epilogue output operator
  typename EpilogueOutputOp_ = typename DefaultGemmConfiguration<
      OperatorClass_, ArchTag_, ElementA_, ElementB_, ElementC_,
      ElementAccumulator_>::EpilogueOutputOp,
  /// Threadblock-level swizzling operator
  typename ThreadblockSwizzle_ = threadblock::GemmIdentityThreadblockSwizzle<>,
  /// Number of stages used in the pipelined mainloop
  int Stages =
      DefaultGemmConfiguration<OperatorClass_, ArchTag_, ElementA_, ElementB_,
                               ElementC_, ElementAccumulator_>::kStages,
  /// Access granularity of A matrix in units of elements
  int AlignmentA =
      DefaultGemmConfiguration<OperatorClass_, ArchTag_, ElementA_, ElementB_,
                               ElementC_, ElementAccumulator_>::kAlignmentA,
  /// Access granularity of B matrix in units of elements
  int AlignmentB =
      DefaultGemmConfiguration<OperatorClass_, ArchTag_, ElementA_, ElementB_,
                               ElementC_, ElementAccumulator_>::kAlignmentB,
  /// Operation performed by GEMM
  typename Operator_ = typename DefaultGemmConfiguration<
      OperatorClass_, ArchTag_, ElementA_, ElementB_, ElementC_,
      ElementAccumulator_>::Operator,
  /// Complex elementwise transformation on A operand
  ComplexTransform TransformA = ComplexTransform::kNone,
  /// Complex elementwise transformation on B operand
  ComplexTransform TransformB = ComplexTransform::kNone,
  /// Gather operand A by using an index array
  bool GatherA = false,
  /// Gather operand B by using an index array
  bool GatherB = false,
  /// Scatter result D by using an index array
  bool ScatterD = false,
  /// Permute result D
  typename PermuteDLayout_ = layout::NoPermute,
  /// Permute operand A
  typename PermuteALayout_ = layout::NoPermute,
  /// Permute operand B
  typename PermuteBLayout_ = layout::NoPermute
>
class GemmUniversal :
  public GemmUniversalBase<
    typename kernel::DefaultGemmUniversal<
      ElementA_,
      LayoutA_,
      TransformA,
      AlignmentA,
      ElementB_,
      LayoutB_,
      TransformB,
      AlignmentB,
      ElementC_,
      LayoutC_,
      ElementAccumulator_,
      OperatorClass_,
      ArchTag_,
      ThreadblockShape_,
      WarpShape_,
      InstructionShape_,
      EpilogueOutputOp_,
      ThreadblockSwizzle_,
      Stages,
      Operator_,
      SharedMemoryClearOption::kNone,
      GatherA,
      GatherB,
      ScatterD,
      PermuteDLayout_,
      PermuteALayout_,
      PermuteBLayout_
    >::GemmKernel
  > {

 public:

  using ElementAccumulator = ElementAccumulator_;
  using OperatorClass = OperatorClass_;
  using ArchTag = ArchTag_;
  using ThreadblockShape = ThreadblockShape_;
  using WarpShape = WarpShape_;
  using InstructionShape = InstructionShape_;
  using EpilogueOutputOp = EpilogueOutputOp_;
  using ThreadblockSwizzle = ThreadblockSwizzle_;
  using Operator = Operator_;
  using PermuteDLayout = PermuteDLayout_;
  using PermuteALayout = PermuteALayout_;
  using PermuteBLayout = PermuteBLayout_;
  static int const kStages = Stages;
  static int const kAlignmentA = AlignmentA;
  static int const kAlignmentB = AlignmentB;
  static int const kAlignmentC = EpilogueOutputOp::kCount;
  static ComplexTransform const kTransformA = TransformA;
  static ComplexTransform const kTransformB = TransformB;
```

GemmUniversal::GemmKernel is GemmUniversalBase::GemmKernel, i.e. DefaultGemmUniversal::GemmKernel. Which kernel that is depends on the ThreadblockSwizzle template argument passed in.

```cpp
  using Base = GemmUniversalBase<
    typename kernel::DefaultGemmUniversal<
      ElementA_,
      LayoutA_,
      TransformA,
      AlignmentA,
      ElementB_,
      LayoutB_,
      TransformB,
      AlignmentB,
      ElementC_,
      LayoutC_,
      ElementAccumulator_,
      OperatorClass_,
      ArchTag_,
      ThreadblockShape_,
      WarpShape_,
      InstructionShape_,
      EpilogueOutputOp_,
      ThreadblockSwizzle_,
      Stages,
      Operator_,
      SharedMemoryClearOption::kNone,
      GatherA,
      GatherB,
      ScatterD,
      PermuteDLayout_,
      PermuteALayout_,
      PermuteBLayout_
    >::GemmKernel
  >;

  using Arguments = typename Base::Arguments;
  using GemmKernel = typename Base::GemmKernel;
};
```
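The choice between the two kernels is made entirely at compile time from the ThreadblockSwizzle argument. The sketch below isolates that pattern. It assumes the selection depends on whether the swizzle type declares a StreamkFeature member typedef, using a partial specialization that only matches when the typedef exists. All type names in the sketch are hypothetical stand-ins, not CUTLASS classes.

```cpp
#include <cassert>
#include <type_traits>

// Hypothetical swizzles: only the Stream-K one advertises a marker typedef.
struct IdentitySwizzle {};
struct StreamKSwizzle { using StreamkFeature = void; };

// Hypothetical kernels, each reporting which path it implements.
template <class Swizzle> struct DataParallelKernel { static constexpr bool kStreamK = false; };
template <class Swizzle> struct StreamKKernel      { static constexpr bool kStreamK = true;  };

// Primary template: swizzles without StreamkFeature get the ordinary kernel.
template <class Swizzle, class Enable = void>
struct SelectKernel { using type = DataParallelKernel<Swizzle>; };

// Chosen only when Swizzle::StreamkFeature exists (and is void).
template <class Swizzle>
struct SelectKernel<Swizzle, typename Swizzle::StreamkFeature> {
  using type = StreamKKernel<Swizzle>;
};

// Device-level wrapper: one class template, kernel picked from the swizzle.
template <class Swizzle>
struct DeviceGemm {
  using GemmKernel = typename SelectKernel<Swizzle>::type;
};

static_assert(!DeviceGemm<IdentitySwizzle>::GemmKernel::kStreamK, "identity -> data-parallel");
static_assert(DeviceGemm<StreamKSwizzle>::GemmKernel::kStreamK, "Stream-K swizzle -> Stream-K kernel");
```

This design explains why DeviceGemmBasic and DeviceGemmStreamK can be the same device class template: changing one template argument switches the entire kernel.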

GemmUniversalBase

GemmUniversalBase takes its type information from the kernel class, either kernel::GemmUniversal or kernel::GemmUniversalStreamk.

```cpp
template <typename GemmKernel_>
class GemmUniversalBase {
public:

  using GemmKernel = GemmKernel_;

  /// Boolean indicating whether the CudaHostAdapter is enabled
  static bool const kEnableCudaHostAdapter = CUTLASS_ENABLE_CUDA_HOST_ADAPTER;

  using ThreadblockShape = typename GemmKernel::Mma::Shape;

  using ElementA = typename GemmKernel::ElementA;
  using LayoutA = typename GemmKernel::LayoutA;
  using TensorRefA = TensorRef<ElementA const, LayoutA>;
  static ComplexTransform const kTransformA = GemmKernel::kTransformA;

  using ElementB = typename GemmKernel::ElementB;
  using LayoutB = typename GemmKernel::LayoutB;
  using TensorRefB = TensorRef<ElementB const, LayoutB>;
  static ComplexTransform const kTransformB = GemmKernel::kTransformB;

  using ElementC = typename GemmKernel::ElementC;
  using LayoutC = typename GemmKernel::LayoutC;
  using TensorRefC = TensorRef<ElementC const, LayoutC>;
  using TensorRefD = TensorRef<ElementC, LayoutC>;

  /// Numerical accumulation element type
  using ElementAccumulator = typename GemmKernel::Mma::ElementC;

  using EpilogueOutputOp = typename GemmKernel::EpilogueOutputOp;
  using ThreadblockSwizzle = typename GemmKernel::ThreadblockSwizzle;
  using Operator = typename GemmKernel::Operator;
```

Arguments is the type defined by the GemmKernel passed in, i.e. kernel::GemmUniversal::Arguments or kernel::GemmUniversalStreamk::Arguments.

device_ordinal_ is initialized to -1.

```cpp
  /// Argument structure
  using Arguments = typename GemmKernel::Arguments;

  /// Index of the GEMM Kernel within the CudaHostAdapter
  static int32_t const kGemmKernelIndex = 0;

  /// Kernel dynamic shared memory allocation requirement
  /// Update the kernel function's shared memory configuration for the current device
  static constexpr size_t kSharedStorageSize = sizeof(typename GemmKernel::SharedStorage);

protected:

  //
  // Device properties (uniform across all instances of the current thread)
  //

  // Device ordinal
  CUTLASS_THREAD_LOCAL static int device_ordinal_;

  /// Device SM count
  CUTLASS_THREAD_LOCAL static int device_sms_;

  /// Kernel SM occupancy (in thread blocks)
  CUTLASS_THREAD_LOCAL static int sm_occupancy_;
```

GemmUniversalBase::init_device_props

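Initializes device_sms_ and sm_occupancy_, and configures dynamic shared memory.

The static thread-local members are initialized for the thread's current device only when necessary.

In debug builds, CUTLASS_TRACE_HOST prints the file name and line number.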

```cpp
protected:

  /// Initialize static thread-local members for the thread's current device,
  /// if necessary.
  static Status init_device_props()
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::init_device_props()");
```

cudaGetDevice returns the device currently in use.

If the current device has already been initialized, the function returns immediately.

```cpp
    cudaError_t cudart_result;

    // Get current device ordinal
    int current_ordinal;
    cudart_result = cudaGetDevice(&current_ordinal);
    if (cudart_result != cudaSuccess) {
      CUTLASS_TRACE_HOST(" cudaGetDevice() returned error " << cudaGetErrorString(cudart_result));
      return Status::kErrorInternal;
    }

    // Done if matches the current static member
    if (current_ordinal == device_ordinal_) {
      // Already initialized
      return Status::kSuccess;
    }
```

cudaDeviceGetAttribute returns information about the device. Here it queries the number of SMs.

```cpp
    // Update SM count member
    cudart_result = cudaDeviceGetAttribute (&device_sms_, cudaDevAttrMultiProcessorCount, current_ordinal);
    if (cudart_result != cudaSuccess) {
      CUTLASS_TRACE_HOST(" cudaDeviceGetAttribute() returned error " << cudaGetErrorString(cudart_result));
      return Status::kErrorInternal;
    }
```

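cudaFuncSetAttribute sets an attribute of the given function.

If the shared storage requires 48 KB or more, the kernel function's maximum dynamically allocated shared memory size is raised to that amount.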

```cpp
    // If requires more than 48KB: configure for extended, dynamic shared memory
    if constexpr (kSharedStorageSize >= (48 << 10))
    {
      cudart_result = cudaFuncSetAttribute(
        Kernel2<GemmKernel>,
        cudaFuncAttributeMaxDynamicSharedMemorySize,
        kSharedStorageSize);
      if (cudart_result != cudaSuccess) {
        CUTLASS_TRACE_HOST(" cudaFuncSetAttribute() returned error " << cudaGetErrorString(cudart_result));
        return Status::kErrorInternal;
      }
    }
```

cudaOccupancyMaxActiveBlocksPerMultiprocessorWithFlags is a CUDA Runtime API call. It returns the maximum number of thread blocks of this kernel that can be active on one SM at the same time.

```cpp
    // Update SM occupancy member
    cudart_result = cudaOccupancyMaxActiveBlocksPerMultiprocessorWithFlags(
      &sm_occupancy_,
      Kernel2<GemmKernel>,
      GemmKernel::kThreadCount,
      kSharedStorageSize,
      cudaOccupancyDisableCachingOverride);
    if (cudart_result != cudaSuccess) {
      CUTLASS_TRACE_HOST(" cudaOccupancyMaxActiveBlocksPerMultiprocessorWithFlags() returned error " << cudaGetErrorString(cudart_result));
      return Status::kErrorInternal;
    }

    // Update device ordinal member on success
    device_ordinal_ = current_ordinal;

    CUTLASS_TRACE_HOST(" "
      "device_ordinal: (" << device_ordinal_ << "), "
      "device_sms: (" << device_sms_ << "), "
      "sm_occupancy: (" << sm_occupancy_ << ") "
      "smem_size: (" << kSharedStorageSize << ") "
      "GemmKernel::kThreadCount: (" << GemmKernel::kThreadCount << ")");

    return Status::kSuccess;
  }
```
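The caching logic of init_device_props can be modelled on the host without a GPU. The queries run only when the thread's current device differs from the cached ordinal (initially -1), and the ordinal is updated only after the queries succeed. In the sketch, DevicePropsCache, its query_count counter and needs_smem_opt_in are hypothetical. Plain integers stand in for the results of cudaGetDevice and cudaDeviceGetAttribute. The 48 KB rule mirrors the kSharedStorageSize >= (48 << 10) test.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical host-only model of GemmUniversalBase's thread-local cache.
struct DevicePropsCache {
  static thread_local int device_ordinal;  // -1 means "not initialized yet"
  static thread_local int device_sms;
  static thread_local int query_count;     // counts simulated runtime queries

  // `current` plays the role of cudaGetDevice(); `sms_for_device` the role of
  // cudaDeviceGetAttribute(cudaDevAttrMultiProcessorCount).
  static void init(int current, int sms_for_device) {
    if (current == device_ordinal) return;  // already initialized: no queries
    ++query_count;
    device_sms = sms_for_device;
    device_ordinal = current;               // updated only after "success"
  }
};
thread_local int DevicePropsCache::device_ordinal = -1;
thread_local int DevicePropsCache::device_sms = 0;
thread_local int DevicePropsCache::query_count = 0;

// Same threshold as init_device_props: opt in to extended dynamic shared
// memory when the kernel needs at least 48 KB.
constexpr bool needs_smem_opt_in(std::size_t smem_bytes) {
  return smem_bytes >= (48u << 10);
}
```

Because the members are thread-local, each host thread keeps its own cache. A thread that switches devices pays for the queries again.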

Depending on the kernel, this is either GemmUniversal::Params or GemmUniversalStreamk::Params.

```cpp
protected:

  //
  // Instance data members
  //

  /// Kernel parameters
  typename GemmKernel::Params params_;
```

GemmUniversalBase::init_params

Initializes params_.

```cpp
  /// Initialize params member
  Status init_params(Arguments const &args, CudaHostAdapter *cuda_adapter = nullptr)
  {
    int32_t device_sms = 0;
    int32_t sm_occupancy = 0;
```

kEnableCudaHostAdapter takes its value from the macro CUTLASS_ENABLE_CUDA_HOST_ADAPTER, which is not enabled here.

The CudaHostAdapter class has no concrete implementation either.

```cpp
    if constexpr (kEnableCudaHostAdapter) {
      CUTLASS_ASSERT(cuda_adapter);

      //
      // Occupancy query using CudaHostAdapter::query_occupancy().
      //

      if (cuda_adapter) {
        Status status = cuda_adapter->query_occupancy(
          &device_sms,
          &sm_occupancy,
          kGemmKernelIndex,
          GemmKernel::kThreadCount,
          kSharedStorageSize);

        CUTLASS_ASSERT(status == Status::kSuccess);

        if (status != Status::kSuccess) {
          return status;
        }
      }
      else {
        return Status::kErrorInternal;
      }
    }
```

The else branch is therefore taken. It calls GemmUniversalBase::init_device_props to obtain the SM count and the maximum number of active thread blocks per SM.

```cpp
    else {
      CUTLASS_ASSERT(cuda_adapter == nullptr);

      // Initialize static device properties, if necessary
      Status result = init_device_props();

      if (result != Status::kSuccess) {
        return result;
      }

      //
      // Use thread-local static members for occupancy query initialized by call to
      // `init_device_props()`
      //

      device_sms   = device_sms_;
      sm_occupancy = sm_occupancy_;
    }
```

This yields a GemmUniversal::Params or GemmUniversalStreamk::Params object.

```cpp
    // Initialize params member
    params_ = typename GemmKernel::Params(args, device_sms, sm_occupancy);
    return Status::kSuccess;
  }
```

GemmUniversalBase::can_implement

Checks that the grid's y and z extents fit in 16 bits. It then calls the kernel's GemmUniversal::can_implement or GemmUniversalStreamk::can_implement for further checks.

```cpp
public:

  //---------------------------------------------------------------------------------------------
  // Stateless API
  //---------------------------------------------------------------------------------------------

  /// Determines whether the GEMM can execute the given problem.
  static Status can_implement(Arguments const &args, CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::can_implement()");

    dim3 grid = get_grid_shape(args, cuda_adapter);

    if (!(grid.y <= std::numeric_limits<uint16_t>::max() &&
          grid.z <= std::numeric_limits<uint16_t>::max()))
    {
      return Status::kErrorInvalidProblem;
    }

    return GemmKernel::can_implement(args);
  }
```
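The grid check exists because CUDA caps gridDim.y and gridDim.z at 65535, while gridDim.x may be much larger. Below is a host-only restatement of the test. Dim3 is a hypothetical struct standing in for dim3.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Minimal stand-in for CUDA's dim3.
struct Dim3 { uint32_t x, y, z; };

// Same test as GemmUniversalBase::can_implement: y and z must fit in 16 bits.
bool grid_fits(Dim3 grid) {
  return grid.y <= std::numeric_limits<uint16_t>::max() &&
         grid.z <= std::numeric_limits<uint16_t>::max();
}
```

Only y and z are tested, so a very large x extent is accepted while a y extent of 65536 is rejected.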

GemmUniversalBase::get_workspace_size

Returns the workspace size, in bytes, needed by the problem geometry these arguments describe.

```cpp
  /// Returns the workspace size (in bytes) needed for the problem
  /// geometry expressed by these arguments
  static size_t get_workspace_size(Arguments const &args, CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::get_workspace_size()");
```

First, a GemmUniversalBase object is created.

Then GemmUniversalBase::init_params initializes its parameters.

```cpp
    // Initialize parameters from args
    GemmUniversalBase base;
    if (base.init_params(args, cuda_adapter) != Status::kSuccess) {
      return 0;
    }
```

UniversalParamsBase::get_workspace_size or GemmUniversalStreamk::Params::get_workspace_size is called to obtain the size of the global-memory workspace the kernel needs.

```cpp
    // Get size from parameters
    size_t workspace_bytes = base.params_.get_workspace_size();

    CUTLASS_TRACE_HOST(" workspace_bytes: " << workspace_bytes);
    return workspace_bytes;
  }
```

GemmUniversalBase::get_grid_shape

```cpp
  /// Returns the grid extents in thread blocks to launch
  static dim3 get_grid_shape(Arguments const &args, CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::get_grid_shape()");
```

First, a GemmUniversalBase object is created.

Then GemmUniversalBase::init_params initializes its parameters.

```cpp
    // Initialize parameters from args
    GemmUniversalBase base;
    if (base.init_params(args, cuda_adapter) != Status::kSuccess) {
      return dim3(0,0,0);
    }
```

UniversalParamsBase::get_grid_dims or GemmUniversalStreamk::Params::get_grid_dims is called to obtain the grid dimensions.

```cpp
    // Get dims from parameters
    dim3 grid_dims = base.params_.get_grid_dims();

    CUTLASS_TRACE_HOST(
         " tiled_shape: " << base.params_.get_tiled_shape()  << "\n"
      << " grid_dims: {" << grid_dims << "}");

    return grid_dims;
  }
```

GemmUniversalBase::maximum_active_blocks

The logic is similar to that of GemmUniversalBase::init_params.

```cpp
  /// Returns the maximum number of active thread blocks per multiprocessor
  static int maximum_active_blocks(CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::maximum_active_blocks()");

    int32_t device_sms = 0;
    int32_t sm_occupancy = 0;

    if constexpr (kEnableCudaHostAdapter) {
      CUTLASS_ASSERT(cuda_adapter);

      if (cuda_adapter) {
        Status status = cuda_adapter->query_occupancy(
          &device_sms,
          &sm_occupancy,
          kGemmKernelIndex,
          GemmKernel::kThreadCount,
          kSharedStorageSize);

        CUTLASS_ASSERT(status == Status::kSuccess);

        if (status != Status::kSuccess) {
          return -1;
        }
      }
      else {
        return -1;
      }
    }
    else {
      CUTLASS_ASSERT(cuda_adapter == nullptr);
      // Initialize static device properties, if necessary
      if (init_device_props() != Status::kSuccess) {
        return -1;
      }

      sm_occupancy = sm_occupancy_;
    }

    CUTLASS_TRACE_HOST(" max_active_blocks: " << sm_occupancy_);
    return sm_occupancy;
  }
```

GemmUniversalBase::initialize

```cpp
  // Stateful API
  //---------------------------------------------------------------------------------------------

  /// Initializes GEMM state from arguments and workspace memory
  Status initialize(
    Arguments const &args,
    void *workspace = nullptr,
    cudaStream_t stream = nullptr,
    CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::initialize() - workspace "
      << workspace << ", stream: " << (stream ? "non-null" : "null"));
```

GemmUniversalBase::init_params is called to build a GemmUniversal::Params or GemmUniversalStreamk::Params object.

```cpp
    // Initialize parameters from args
    Status result = init_params(args, cuda_adapter);
    if (result != Status::kSuccess) {
      return result;
    }
```

Only in kGemm mode, UniversalParamsBase::init_workspace or GemmUniversalStreamk::Params::init_workspace is called to zero-initialize the workspace.

```cpp
    // Assign and prepare workspace memory
    if (args.mode == GemmUniversalMode::kGemm) {
      return params_.init_workspace(workspace, stream);
    }

    return Status::kSuccess;
  }
```

GemmUniversalBase::update

Calls GemmUniversal::Params::update or GemmUniversalStreamk::Params::update to update the parameters.

```cpp
  /// Lightweight update given a subset of arguments.
  Status update(Arguments const &args)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase()::update()");
    params_.update(args);
    return Status::kSuccess;
  }
```

GemmUniversalBase::run

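The CUTLASS_TRACE_HOST macro is active in debug builds.

GemmUniversal::kThreadCount and GemmUniversalStreamk::kThreadCount are both derived from WarpCount, with 32 threads per warp. WarpCount is MmaBase::WarpCount, which is determined by the ThreadblockShape and WarpShape supplied by the application.

UniversalParamsBase::get_grid_dims or GemmUniversalStreamk::Params::get_grid_dims is called to obtain the grid dimensions.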

```cpp
  /// Runs the kernel using initialized state.
  Status run(cudaStream_t stream = nullptr, CudaHostAdapter *cuda_adapter = nullptr)
  {
    CUTLASS_TRACE_HOST("GemmUniversalBase::run()");

    // Configure grid and block dimensions
    dim3 block(GemmKernel::kThreadCount, 1, 1);
    dim3 grid = params_.get_grid_dims();
```

CUTLASS_ASSERT is an assertion macro.

Kernel2 calls GemmUniversal::invoke or GemmUniversalStreamk::invoke.

The kernel function's parameter is a GemmUniversal::Params or GemmUniversalStreamk::Params object.

```cpp
    // Launch kernel
    CUTLASS_TRACE_HOST(" "
      "grid: (" << grid << "), "
      "block: (" << block << "), "
      "SMEM: (" << kSharedStorageSize << ")");

    if constexpr (kEnableCudaHostAdapter) {
      CUTLASS_ASSERT(cuda_adapter);
      if (cuda_adapter) {
        void* kernel_params[] = {&params_};
        return cuda_adapter->launch(grid, block, kSharedStorageSize, stream, kernel_params, 0);
      }
      else {
        return Status::kErrorInternal;
      }
    }
    else {
      CUTLASS_ASSERT(cuda_adapter == nullptr);

      Kernel2<GemmKernel><<<grid, block, kSharedStorageSize, stream>>>(params_);

      // Query for errors
      cudaError_t result = cudaGetLastError();
      if (result != cudaSuccess) {
        CUTLASS_TRACE_HOST(" grid launch failed with error " << cudaGetErrorString(result));
        return Status::kErrorInternal;
      }
    }

    return Status::kSuccess;
  }
```
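The block size used by run can be computed at compile time, assuming kThreadCount = 32 × WarpCount::kCount, where WarpCount is the threadblock tile divided by the warp tile in each dimension. Shape and WarpLayout below are hypothetical stand-ins for GemmShape and MmaBase::WarpCount. The tile sizes are only examples.

```cpp
#include <cassert>

// Minimal stand-in for GemmShape<M, N, K>.
template <int M, int N, int K>
struct Shape { static constexpr int kM = M, kN = N, kK = K; };

// Warps per threadblock along each dimension, then 32 threads per warp.
template <class ThreadblockShape, class WarpShape>
struct WarpLayout {
  static_assert(ThreadblockShape::kM % WarpShape::kM == 0 &&
                ThreadblockShape::kN % WarpShape::kN == 0 &&
                ThreadblockShape::kK % WarpShape::kK == 0,
                "warp tile must evenly divide the threadblock tile");
  static constexpr int kCount = (ThreadblockShape::kM / WarpShape::kM) *
                                (ThreadblockShape::kN / WarpShape::kN) *
                                (ThreadblockShape::kK / WarpShape::kK);
  static constexpr int kThreadCount = 32 * kCount;
};

// 128x128x32 threadblock with 64x64x32 warps: 2 x 2 x 1 warps = 128 threads.
static_assert(WarpLayout<Shape<128, 128, 32>, Shape<64, 64, 32>>::kThreadCount == 128, "2x2x1 warps");
```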

GemmUniversalBase::operator()

This overloaded operator simply calls GemmUniversalBase::run.

```cpp
  /// Runs the kernel using initialized state.
  Status operator()(cudaStream_t stream = nullptr, CudaHostAdapter *cuda_adapter = nullptr)
  {
    return run(stream, cuda_adapter);
  }
```

GemmUniversalBase::operator()

The overload that takes arguments first calls GemmUniversalBase::initialize and then GemmUniversalBase::run.

```cpp
  /// Runs the kernel using initialized state.
  Status operator()(
    Arguments const &args,
    void *workspace = nullptr,
    cudaStream_t stream = nullptr,
    CudaHostAdapter *cuda_adapter = nullptr)
  {
    Status status = initialize(args, workspace, stream, cuda_adapter);

    if (status == Status::kSuccess) {
      status = run(stream, cuda_adapter);
    }

    return status;
  }
};
```

UniversalParamsBase

```cpp
/// Parameters structure
template <
  typename ThreadblockSwizzle,
  typename ThreadblockShape,
  typename ElementA,
  typename ElementB,
  typename ElementC,
  typename LayoutA,
  typename LayoutB>
struct UniversalParamsBase
{
  //
  // Data members
  //

  GemmCoord problem_size{};
  GemmCoord grid_tiled_shape{};
  int swizzle_log_tile{0};

  GemmUniversalMode mode = cutlass::gemm::GemmUniversalMode::kGemm;
  int batch_count {0};
  int gemm_k_size {0};
  int64_t batch_stride_D {0};

  int *semaphore = nullptr;

  //
  // Host dispatch API
  //

  /// Default constructor
  UniversalParamsBase() = default;
```

UniversalParamsBase::UniversalParamsBase

The constructor calls UniversalParamsBase::init_grid_tiled_shape to compute the tiled grid shape.

```cpp
  /// Constructor
  UniversalParamsBase(
    UniversalArgumentsBase const &args, /// GEMM application arguments
    int device_sms,                     /// Number of SMs on the device
    int sm_occupancy)                   /// Kernel SM occupancy (in thread blocks)
  :
    problem_size(args.problem_size),
    mode(args.mode),
    batch_count(args.batch_count),
    batch_stride_D(args.batch_stride_D),
    semaphore(nullptr)
  {
    init_grid_tiled_shape();
  }
```

UniversalParamsBase::get_workspace_size

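kGemmSplitKParallel mode always needs a workspace that holds one partial D matrix per K slice. That is sizeof(ElementC) × batch_stride_D × grid_tiled_shape.k() bytes, or about M × N × k_slices elements.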

```cpp
  /// Returns the workspace size (in bytes) needed for this problem geometry
  size_t get_workspace_size() const
  {
    size_t workspace_bytes = 0;
    if (mode == GemmUniversalMode::kGemmSplitKParallel)
    {
      // Split-K parallel always requires a temporary workspace
      workspace_bytes =
        sizeof(ElementC) *
        size_t(batch_stride_D) *
        size_t(grid_tiled_shape.k());
    }
```

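For serial split-K (kGemm mode with more than one K partition), the workspace size corresponds to the number of output tiles. Each output tile needs one int semaphore to coordinate its reduction.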

```cpp
    else if (mode == GemmUniversalMode::kGemm && grid_tiled_shape.k() > 1)
    {
      // Serial split-K only requires a temporary workspace if the number of partitions along the
      // GEMM K dimension is greater than one.
      workspace_bytes = sizeof(int) * size_t(grid_tiled_shape.m()) * size_t(grid_tiled_shape.n());
    }

    return workspace_bytes;
  }
```
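The two workspace formulas are easy to check with concrete numbers. The sketch restates get_workspace_size as a free function. Mode and workspace_bytes are hypothetical names. The tile counts passed in play the role of grid_tiled_shape.m(), .n() and .k().

```cpp
#include <cassert>
#include <cstddef>

enum class Mode { kGemm, kGemmSplitKParallel };

// Hypothetical free-function restatement of get_workspace_size().
std::size_t workspace_bytes(Mode mode, std::size_t element_c_bytes,
                            std::size_t batch_stride_d,
                            int tiles_m, int tiles_n, int tiles_k) {
  if (mode == Mode::kGemmSplitKParallel) {
    // One partial-sum D matrix per K slice.
    return element_c_bytes * batch_stride_d * std::size_t(tiles_k);
  }
  if (mode == Mode::kGemm && tiles_k > 1) {
    // Serial split-K: one int semaphore per output tile.
    return sizeof(int) * std::size_t(tiles_m) * std::size_t(tiles_n);
  }
  return 0;  // plain data-parallel GEMM needs no workspace
}
```

Consider a 1024 × 1024 output with 128 × 128 tiles and 4 K slices. Parallel mode needs 2 × 1024 × 1024 × 4 bytes of half-precision partials. Serial mode needs only 8 × 8 int semaphores, which is 256 bytes with a 4-byte int.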

UniversalParamsBase::init_workspace

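UniversalParamsBase::get_workspace_size is called to obtain the size.

cudaMemsetAsync zeroes the synchronization semaphores.

The function assigns and initializes the specified workspace buffer. It assumes the memory allocated to the workspace is at least as large as get_workspace_size().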

```cpp
  /// Assign and initialize the specified workspace buffer.  Assumes
  /// the memory allocated to workspace is at least as large as get_workspace_size().
  Status init_workspace(
    void *workspace,
    cudaStream_t stream = nullptr)
  {
    semaphore = static_cast<int *>(workspace);
    // Zero-initialize entire workspace
    if (semaphore)
    {
      size_t workspace_bytes = get_workspace_size();

      CUTLASS_TRACE_HOST(" Initialize " << workspace_bytes << " workspace bytes");

      cudaError_t result = cudaMemsetAsync(
        semaphore,
        0,
        workspace_bytes,
        stream);

      if (result != cudaSuccess) {
        CUTLASS_TRACE_HOST(" cudaMemsetAsync() returned error " << cudaGetErrorString(result));
        return Status::kErrorInternal;
      }
    }

    return Status::kSuccess;
  }
```

UniversalParamsBase::get_tiled_shape

/// Returns the GEMM volume in thread block tiles

GemmCoord get_tiled_shape() const

{

return grid_tiled_shape;

}

UniversalParamsBase::get_grid_blocks

Returns the total number of thread blocks to launch.

UniversalParamsBase::get_grid_dims returns the grid dimensions.

/// Returns the total number of thread blocks to launch

int get_grid_blocks() const

{

dim3 grid_dims = get_grid_dims();

return grid_dims.x * grid_dims.y * grid_dims.z;

}

UniversalParamsBase::get_grid_dims

GemmIdentityThreadblockSwizzle::get_grid_shape computes the CUDA grid dimensions from grid_tiled_shape, which is expressed in logical tiles.

/// Returns the grid extents in thread blocks to launch

dim3 get_grid_dims() const

{

return ThreadblockSwizzle().get_grid_shape(grid_tiled_shape);

}
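The mapping between the CUDA grid and logical tiles can be sketched on the host. The formulas below reflect my reading of GemmIdentityThreadblockSwizzle in threadblock_swizzle.h and should be treated as an approximation. `Dim3`, `Tile`, `grid_shape` and `tile_offset` are hypothetical names. The sketch shows why some CTAs land outside the problem and must exit early.

```cpp
#include <cassert>

struct Dim3 { int x, y, z; };
struct Tile { int m, n, k; };

// Grid shape (assumed): m tiles are stretched by 2^log_tile along x,
// n tiles are folded by 2^log_tile along y (rounded up), k or batch maps to z.
Dim3 grid_shape(Tile tiled, int log_tile) {
  int tile = 1 << log_tile;
  return {tiled.m * tile, (tiled.n + tile - 1) / tile, tiled.k};
}

// Recover the logical (m, n, k) tile coordinate of a CTA from its block index.
Tile tile_offset(Dim3 block_idx, int log_tile) {
  int mask = (1 << log_tile) - 1;
  return {block_idx.x >> log_tile,
          (block_idx.y << log_tile) + (block_idx.x & mask),
          block_idx.z};
}
```

With 3 x 5 tiles and log_tile = 1, the grid is 6 x 3 = 18 CTAs for 15 tiles. The 3 extra CTAs map to n == 5 and return early.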

UniversalParamsBase::init_grid_tiled_shape

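Calls GemmIdentityThreadblockSwizzle::get_tiled_shape to return the problem shape in units of logical tiles.

GemmIdentityThreadblockSwizzle::get_log_tile computes the best rasterization width, stored as a log2 value in swizzle_log_tile.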

private:

CUTLASS_HOST_DEVICE

void init_grid_tiled_shape() {

// Get GEMM volume in thread block tiles

grid_tiled_shape = ThreadblockSwizzle::get_tiled_shape(

problem_size,

{ThreadblockShape::kM, ThreadblockShape::kN, ThreadblockShape::kK},

batch_count);

swizzle_log_tile = ThreadblockSwizzle::get_log_tile(grid_tiled_shape);

// Determine extent of K-dimension assigned to each block

gemm_k_size = problem_size.k();

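In kGemm and kGemmSplitKParallel modes, the value of grid_tiled_shape.k() is adjusted.

util::is_continous_k_aligned determines whether the K splits additionally need cache-line alignment (cacheline_alignment_needed).

const_max returns the larger of two integers and can be used in constant expressions.

CACHELINE_BYTES is 128. The code is written so that a larger value would also work.

ceil_div is integer division rounded up.

gemm_k_size is the K extent assigned to each CTA: ceil(K / batch_count), rounded up to a multiple of kAlignK. grid_tiled_shape.k() is then recomputed from the problem size as the number of K partitions. Because of the rounding, this number can be smaller than the requested batch_count.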

if (mode == GemmUniversalMode::kGemm || mode == GemmUniversalMode::kGemmSplitKParallel)

{

static const uint32_t CACHELINE_BYTES = 128;

static const size_t element_bytes_a = sizeof(ElementA);

static const size_t element_bytes_b = sizeof(ElementB);

static const size_t cacheline_elements_a = CACHELINE_BYTES / element_bytes_a;

static const size_t cacheline_elements_b = CACHELINE_BYTES / element_bytes_b;

const bool cacheline_alignment_needed =

util::is_continous_k_aligned<LayoutA, LayoutB>(problem_size, cacheline_elements_a, cacheline_elements_b);

int const kAlignK = const_max(

const_max(128 / sizeof_bits<ElementA>::value, 128 / sizeof_bits<ElementB>::value),

cacheline_alignment_needed ? const_max(cacheline_elements_a, cacheline_elements_b) : 1);

gemm_k_size = round_up(ceil_div(problem_size.k(), batch_count), kAlignK);

if (gemm_k_size) {

grid_tiled_shape.k() = ceil_div(problem_size.k(), gemm_k_size);

}

}

}

};
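A host-side sketch of the K-partitioning arithmetic in init_grid_tiled_shape() follows. The rule inside util::is_continous_k_aligned is not reproduced, so its result is passed in as `cacheline_alignment_needed`. The sketch assumes element types of at least one byte, and `split_k` / `KSplit` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>

constexpr int ceil_div(int a, int b) { return (a + b - 1) / b; }
constexpr int round_up(int a, int b) { return ceil_div(a, b) * b; }

struct KSplit { int gemm_k_size; int k_tiles; };

// bits_a / bits_b: element widths in bits (e.g. 16 for half); must be >= 8 here.
KSplit split_k(int problem_k, int split_factor, int bits_a, int bits_b,
               bool cacheline_alignment_needed) {
  const int kCachelineBytes = 128;
  const int cacheline_elems_a = kCachelineBytes / (bits_a / 8);
  const int cacheline_elems_b = kCachelineBytes / (bits_b / 8);
  // Every split starts on a 128-bit boundary, optionally on a full cache line.
  const int align_k = std::max(
      std::max(128 / bits_a, 128 / bits_b),
      cacheline_alignment_needed ? std::max(cacheline_elems_a, cacheline_elems_b) : 1);
  KSplit s{round_up(ceil_div(problem_k, split_factor), align_k), split_factor};
  if (s.gemm_k_size) s.k_tiles = ceil_div(problem_k, s.gemm_k_size);
  return s;
}
```

For K = 256 in half precision with a requested split of 3, 128-bit alignment gives slices of 88 and 3 partitions. Cache-line alignment gives slices of 128, so only 2 partitions remain.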

DefaultGemmUniversal

//

//

// Real-valued GEMM kernels

//

template <

/// Element type for A matrix operand

typename ElementA,

/// Layout type for A matrix operand

typename LayoutA,

/// Access granularity of A matrix in units of elements

int kAlignmentA,

/// Element type for B matrix operand

typename ElementB,

/// Layout type for B matrix operand

typename LayoutB,

/// Access granularity of B matrix in units of elements

int kAlignmentB,

/// Element type for C and D matrix operands

typename ElementC,

/// Layout type for C and D matrix operands

typename LayoutC,

/// Element type for internal accumulation

typename ElementAccumulator,

/// Operator class tag

typename OperatorClass,

/// Tag indicating architecture to tune for

typename ArchTag,

/// Threadblock-level tile size (concept: GemmShape)

typename ThreadblockShape,

/// Warp-level tile size (concept: GemmShape)

typename WarpShape,

/// Instruction-level tile size (concept: GemmShape)

typename InstructionShape,

/// Epilogue output operator

typename EpilogueOutputOp,

/// Threadblock-level swizzling operator

typename ThreadblockSwizzle,

/// Number of stages used in the pipelined mainloop

int Stages,

/// Operation performed by GEMM

typename Operator,

/// Use zfill or predicate for out-of-bound cp.async

SharedMemoryClearOption SharedMemoryClear,

/// Gather operand A by using an index array

bool GatherA,

/// Gather operand B by using an index array

bool GatherB,

/// Scatter result D by using an index array

bool ScatterD,

/// Permute result D

typename PermuteDLayout,

/// Permute operand A

typename PermuteALayout,

/// Permute operand B

typename PermuteBLayout

>

struct DefaultGemmUniversal<

ElementA,

LayoutA,

ComplexTransform::kNone, // transform A

kAlignmentA,

ElementB,

LayoutB,

ComplexTransform::kNone, // transform B

kAlignmentB,

ElementC,

LayoutC,

ElementAccumulator,

OperatorClass,

ArchTag,

ThreadblockShape,

WarpShape,

InstructionShape,

EpilogueOutputOp,

ThreadblockSwizzle,

Stages,

Operator,

SharedMemoryClear,

GatherA,

GatherB,

ScatterD,

PermuteDLayout,

PermuteALayout,

PermuteBLayout,

typename platform::enable_if< ! cutlass::is_complex<ElementAccumulator>::value>::type

> {

DefaultGemmKernel is DefaultGemm::GemmKernel, i.e. Gemm.

DefaultGemmKernel::Mma is Gemm::Mma, i.e. DefaultGemm::Mma, i.e. DefaultMma::ThreadblockMma, i.e. MmaMultistage. MmaMultistage is selected because the application specifies NumStages = 4.

DefaultGemmKernel::Epilogue is Gemm::Epilogue, i.e. DefaultGemm::Epilogue, i.e. DefaultGemm::RegularEpilogue, i.e. DefaultEpilogueTensorOp::Epilogue, i.e. Epilogue.

using DefaultGemmKernel = typename kernel::DefaultGemm<

ElementA,

LayoutA,

kAlignmentA,

ElementB,

LayoutB,

kAlignmentB,

ElementC,

LayoutC,

ElementAccumulator,

OperatorClass,

ArchTag,

ThreadblockShape,

WarpShape,

InstructionShape,

EpilogueOutputOp,

ThreadblockSwizzle,

Stages,

true,

Operator,

SharedMemoryClear,

GatherA,

GatherB,

ScatterD,

PermuteDLayout,

PermuteALayout,

PermuteBLayout

>::GemmKernel;

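SelectBase derives from either GemmUniversal or GemmUniversalStreamk.

The choice is deduced from the ThreadblockSwizzle argument. ThreadblockSwizzleStreamK defines a StreamkFeature member type, so the partial specialization matches. GemmIdentityThreadblockSwizzle does not define one, so the primary template is used.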

/// Universal kernel without StreamkFeature member type

template <class SwizzleT, class Enable = void>

class SelectBase :

public kernel::GemmUniversal<

typename DefaultGemmKernel::Mma,

typename DefaultGemmKernel::Epilogue,

SwizzleT>

{};

/// Universal kernel with StreamkFeature member type

template <class SwizzleT>

class SelectBase<SwizzleT, typename SwizzleT::StreamkFeature> :

public kernel::GemmUniversalStreamk<

typename DefaultGemmKernel::Mma,

typename DefaultGemmKernel::Epilogue,

SwizzleT>

{};

/// Select kernel by ThreadblockSwizzle's support for StreamkFeature

using GemmKernel = SelectBase<ThreadblockSwizzle>;

};
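The SFINAE dispatch in SelectBase can be reduced to a standalone sketch. All types here are hypothetical stand-ins. The sketch assumes the Stream-K swizzle declares `using StreamkFeature = void;`, because the specialization only matches when that type equals the default `Enable = void`.

```cpp
#include <cassert>
#include <string>

// Stand-ins for the two swizzles: only the presence of StreamkFeature matters.
struct IdentitySwizzle {};
struct StreamKSwizzle { using StreamkFeature = void; };

struct DataParallelKernel { static std::string name() { return "GemmUniversal"; } };
struct StreamKKernel { static std::string name() { return "GemmUniversalStreamk"; } };

// Primary template: chosen when SwizzleT has no StreamkFeature member.
template <class SwizzleT, class Enable = void>
struct SelectBase : DataParallelKernel {};

// Partial specialization: typename SwizzleT::StreamkFeature is well-formed
// (and equals void, matching the default argument) only for the Stream-K swizzle.
template <class SwizzleT>
struct SelectBase<SwizzleT, typename SwizzleT::StreamkFeature> : StreamKKernel {};
```

Substitution failure on `IdentitySwizzle::StreamkFeature` silently discards the specialization instead of causing a compile error. This is why a single `DefaultGemmUniversal` can serve both kernels.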

GemmUniversal

Mma::Policy is DefaultMmaCore::MmaPolicy, i.e. DefaultMmaTensorOp::Policy, i.e. MmaTensorOpPolicy.

Mma::Operator is DefaultMmaCore::MmaTensorOp, i.e. DefaultMmaTensorOp::type, i.e. MmaTensorOp.

template <

typename Mma_, ///! Threadblock-scoped matrix multiply-accumulate

typename Epilogue_, ///! Epilogue

typename ThreadblockSwizzle_ ///! Threadblock swizzling function

>

class GemmUniversal<

Mma_,

Epilogue_,

ThreadblockSwizzle_,

void,

// 3.x kernels use the first template argument to define the ProblemShape

// We use this invariant to SFINAE dispatch against either the 2.x API or the 3.x API

cute::enable_if_t<not (cute::is_tuple<Mma_>::value || IsCutlass3ArrayKernel<Mma_>::value)>

> {

public:

using Mma = Mma_;

using Epilogue = Epilogue_;

using EpilogueOutputOp = typename Epilogue::OutputOp;

using ThreadblockSwizzle = ThreadblockSwizzle_;

using ElementA = typename Mma::IteratorA::Element;

using LayoutA = typename Mma::IteratorA::Layout;

using ElementB = typename Mma::IteratorB::Element;

using LayoutB = typename Mma::IteratorB::Layout;

using ElementC = typename Epilogue::OutputTileIterator::Element;

using LayoutC = typename Epilogue::OutputTileIterator::Layout;

static ComplexTransform const kTransformA = Mma::kTransformA;

static ComplexTransform const kTransformB = Mma::kTransformB;

using Operator = typename Mma::Operator;

using OperatorClass = typename Mma::Operator::OperatorClass;

using ThreadblockShape = typename Mma::Shape;

using WarpShape = typename Mma::Operator::Shape;

using InstructionShape = typename Mma::Policy::Operator::InstructionShape;

using ArchTag = typename Mma::ArchTag;

static int const kStages = Mma::kStages;

static int const kAlignmentA = Mma::IteratorA::AccessType::kElements;

static int const kAlignmentB = Mma::IteratorB::AccessType::kElements;

static int const kAlignmentC = Epilogue::OutputTileIterator::kElementsPerAccess;

/// Warp count (concept: GemmShape)

using WarpCount = typename Mma::WarpCount;

static int const kThreadCount = 32 * WarpCount::kCount;

/// Split-K preserves splits that are 128b aligned

static int const kSplitKAlignment = const_max(128 / sizeof_bits<ElementA>::value, 128 / sizeof_bits<ElementB>::value);

GemmUniversal::Arguments

Most of the implementation lives in the base class UniversalArgumentsBase.

//

// Structures

//

/// Argument structure

struct Arguments : UniversalArgumentsBase

{

//

// Data members

//

typename EpilogueOutputOp::Params epilogue;

void const * ptr_A;

void const * ptr_B;

void const * ptr_C;

void * ptr_D;

int64_t batch_stride_A;

int64_t batch_stride_B;

int64_t batch_stride_C;

typename LayoutA::Stride stride_a;

typename LayoutB::Stride stride_b;

typename LayoutC::Stride stride_c;

typename LayoutC::Stride stride_d;

typename LayoutA::Stride::LongIndex lda;

typename LayoutB::Stride::LongIndex ldb;

typename LayoutC::Stride::LongIndex ldc;

typename LayoutC::Stride::LongIndex ldd;

int const * ptr_gather_A_indices;

int const * ptr_gather_B_indices;

int const * ptr_scatter_D_indices;

GemmUniversal::Arguments::Arguments

//

// Methods

//

Arguments():

ptr_A(nullptr), ptr_B(nullptr), ptr_C(nullptr), ptr_D(nullptr),

ptr_gather_A_indices(nullptr),

ptr_gather_B_indices(nullptr),

ptr_scatter_D_indices(nullptr)

{}

GemmUniversal::Arguments::Arguments

/// constructs an arguments structure

Arguments(

GemmUniversalMode mode,

GemmCoord problem_size,

int batch_count,

typename EpilogueOutputOp::Params epilogue,

void const * ptr_A,

void const * ptr_B,

void const * ptr_C,

void * ptr_D,

int64_t batch_stride_A,

int64_t batch_stride_B,

int64_t batch_stride_C,

int64_t batch_stride_D,

typename LayoutA::Stride stride_a,

typename LayoutB::Stride stride_b,

typename LayoutC::Stride stride_c,

typename LayoutC::Stride stride_d,

int const *ptr_gather_A_indices = nullptr,

int const *ptr_gather_B_indices = nullptr,

int const *ptr_scatter_D_indices = nullptr)

:

UniversalArgumentsBase(mode, problem_size, batch_count, batch_stride_D),

epilogue(epilogue),

ptr_A(ptr_A), ptr_B(ptr_B), ptr_C(ptr_C), ptr_D(ptr_D),

batch_stride_A(batch_stride_A), batch_stride_B(batch_stride_B), batch_stride_C(batch_stride_C),

stride_a(stride_a), stride_b(stride_b), stride_c(stride_c), stride_d(stride_d),

ptr_gather_A_indices(ptr_gather_A_indices), ptr_gather_B_indices(ptr_gather_B_indices),

ptr_scatter_D_indices(ptr_scatter_D_indices)

{

lda = 0;

ldb = 0;

ldc = 0;

ldd = 0;

CUTLASS_TRACE_HOST("GemmUniversal::Arguments::Arguments() - problem_size: " << problem_size);

}

GemmUniversal::Arguments::Arguments

/// constructs an arguments structure

Arguments(

GemmUniversalMode mode,

GemmCoord problem_size,

int batch_count,

typename EpilogueOutputOp::Params epilogue,

void const * ptr_A,

void const * ptr_B,

void const * ptr_C,

void * ptr_D,

int64_t batch_stride_A,

int64_t batch_stride_B,

int64_t batch_stride_C,

int64_t batch_stride_D,

typename LayoutA::Stride::LongIndex lda,

typename LayoutB::Stride::LongIndex ldb,

typename LayoutC::Stride::LongIndex ldc,

typename LayoutC::Stride::LongIndex ldd,

int const *ptr_gather_A_indices = nullptr,

int const *ptr_gather_B_indices = nullptr,

int const *ptr_scatter_D_indices = nullptr

):

UniversalArgumentsBase(mode, problem_size, batch_count, batch_stride_D),

epilogue(epilogue),

ptr_A(ptr_A), ptr_B(ptr_B), ptr_C(ptr_C), ptr_D(ptr_D),

batch_stride_A(batch_stride_A), batch_stride_B(batch_stride_B), batch_stride_C(batch_stride_C),

lda(lda), ldb(ldb), ldc(ldc), ldd(ldd),

ptr_gather_A_indices(ptr_gather_A_indices), ptr_gather_B_indices(ptr_gather_B_indices),

ptr_scatter_D_indices(ptr_scatter_D_indices)

{

stride_a = make_Coord(lda);

stride_b = make_Coord(ldb);

stride_c = make_Coord(ldc);

stride_d = make_Coord(ldd);

CUTLASS_TRACE_HOST("GemmUniversal::Arguments::Arguments() - problem_size: " << problem_size);

}

GemmUniversal::Arguments::transposed_problem

/// Returns arguments for the transposed problem

Arguments transposed_problem() const

{

Arguments args(*this);

std::swap(args.problem_size.m(), args.problem_size.n());

std::swap(args.ptr_A, args.ptr_B);

std::swap(args.lda, args.ldb);

std::swap(args.stride_a, args.stride_b);

std::swap(args.batch_stride_A, args.batch_stride_B);

std::swap(args.ptr_gather_A_indices, args.ptr_gather_B_indices);

return args;

}

};
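transposed_problem relies on the identity (AB)^T = B^T A^T. A row-major matrix occupies the same memory as its transpose in column-major order. So a row-major problem can be solved as a column-major one by swapping m with n and A with B. The reference loop below is a hypothetical helper that demonstrates this on small integer matrices.

```cpp
#include <cassert>
#include <vector>

// Reference column-major GEMM: D(m x n) = A(m x k) * B(k x n), leading dims = row counts.
std::vector<int> gemm_col_major(int m, int n, int k,
                                const std::vector<int>& A, const std::vector<int>& B) {
  std::vector<int> D(m * n, 0);
  for (int j = 0; j < n; ++j)
    for (int i = 0; i < m; ++i)
      for (int p = 0; p < k; ++p)
        D[i + j * m] += A[i + p * m] * B[p + j * k];
  return D;
}
```

To compute a row-major D = A * B with A of size 2 x 2 and B of size 2 x 3, call `gemm_col_major(3, 2, 2, B, A)`. This swaps m/n and A/B exactly as transposed_problem does, and the column-major result is D in row-major order.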

GemmUniversal::Params

As with Arguments, most of the implementation lives in the base class UniversalParamsBase.

//

// Structure for precomputing values in host memory and passing to kernels

//

/// Parameters structure

struct Params : UniversalParamsBase<

ThreadblockSwizzle,

ThreadblockShape,

ElementA,

ElementB,

ElementC,

LayoutA,

LayoutB>

{

using ParamsBase = UniversalParamsBase<

ThreadblockSwizzle,

ThreadblockShape,

ElementA,

ElementB,

ElementC,

LayoutA,

LayoutB>;

//

// Data members

//

typename Mma::IteratorA::Params params_A;

typename Mma::IteratorB::Params params_B;

typename Epilogue::OutputTileIterator::Params params_C;

typename Epilogue::OutputTileIterator::Params params_D;

typename EpilogueOutputOp::Params output_op;

void * ptr_A;

void * ptr_B;

void * ptr_C;

void * ptr_D;

int64_t batch_stride_A;

int64_t batch_stride_B;

int64_t batch_stride_C;

int * ptr_gather_A_indices;

int * ptr_gather_B_indices;

int * ptr_scatter_D_indices;

//

// Host dispatch API

//

/// Default constructor

Params() = default;

GemmUniversal::Params::Params

/// Constructor

Params(

Arguments const &args, /// GEMM application arguments

int device_sms, /// Number of SMs on the device

int sm_occupancy) /// Kernel SM occupancy (in thread blocks)

:

ParamsBase(args, device_sms, sm_occupancy),

params_A(args.lda ? make_Coord_with_padding<LayoutA::kStrideRank>(args.lda) : args.stride_a),

params_B(args.ldb ? make_Coord_with_padding<LayoutB::kStrideRank>(args.ldb) : args.stride_b),

params_C(args.ldc ? make_Coord_with_padding<LayoutC::kStrideRank>(args.ldc) : args.stride_c),

params_D(args.ldd ? make_Coord_with_padding<LayoutC::kStrideRank>(args.ldd) : args.stride_d),

output_op(args.epilogue),

ptr_A(const_cast<void *>(args.ptr_A)),

ptr_B(const_cast<void *>(args.ptr_B)),

ptr_C(const_cast<void *>(args.ptr_C)),

ptr_D(args.ptr_D),

batch_stride_A(args.batch_stride_A),

batch_stride_B(args.batch_stride_B),

batch_stride_C(args.batch_stride_C),

ptr_gather_A_indices(const_cast<int *>(args.ptr_gather_A_indices)),

ptr_gather_B_indices(const_cast<int *>(args.ptr_gather_B_indices)),

ptr_scatter_D_indices(const_cast<int *>(args.ptr_scatter_D_indices))

{}

GemmUniversal::Params::update

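Updates the data pointers, batch strides, gather/scatter index pointers and epilogue parameters without recomputing the rest of Params.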

/// Lightweight update given a subset of arguments.

void update(Arguments const &args)

{

CUTLASS_TRACE_HOST("GemmUniversal::Params::update()");

// Update input/output pointers

ptr_A = const_cast<void *>(args.ptr_A);

ptr_B = const_cast<void *>(args.ptr_B);

ptr_C = const_cast<void *>(args.ptr_C);

ptr_D = args.ptr_D;

batch_stride_A = args.batch_stride_A;

batch_stride_B = args.batch_stride_B;

batch_stride_C = args.batch_stride_C;

this->batch_stride_D = args.batch_stride_D;

ptr_gather_A_indices = const_cast<int *>(args.ptr_gather_A_indices);

ptr_gather_B_indices = const_cast<int *>(args.ptr_gather_B_indices);

ptr_scatter_D_indices = const_cast<int *>(args.ptr_scatter_D_indices);

output_op = args.epilogue;

}

};

The mainloop and the epilogue share the same shared memory through a union.

/// Shared memory storage structure

union SharedStorage {

typename Mma::SharedStorage main_loop;

typename Epilogue::SharedStorage epilogue;

};
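The benefit of the union can be shown with a quick size check. The storage structs below are hypothetical stand-ins with invented sizes. Because the mainloop has finished with its buffers before the epilogue uses shared memory, the kernel pays for the larger of the two layouts, not their sum.

```cpp
#include <cassert>

// Hypothetical stand-ins for Mma::SharedStorage and Epilogue::SharedStorage.
struct MainLoopStorage {
  float a_stages[4][64 * 32];  // 4 pipeline stages of an A tile
  float b_stages[4][32 * 64];  // 4 pipeline stages of a B tile
};
struct EpilogueStorage {
  float staging[64 * 64];      // accumulator staging buffer for the epilogue
};

// Both phases alias the same bytes, so the union costs max(sizes).
union SharedStorage {
  MainLoopStorage main_loop;
  EpilogueStorage epilogue;
};
```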

GemmUniversal::can_implement

Checks whether the problem size satisfies the alignment requirements imposed by the layouts of the three matrices A, B and C.

public:

//

// Host dispatch API

//

/// Determines whether kernel satisfies alignment

static Status can_implement(

cutlass::gemm::GemmCoord const & problem_size)

{

CUTLASS_TRACE_HOST("GemmUniversal::can_implement()");

static int const kAlignmentA = (cute::is_same<LayoutA,

layout::ColumnMajorInterleaved<32>>::value)

? 32

: (cute::is_same<LayoutA,

layout::ColumnMajorInterleaved<64>>::value)

? 64

: Mma::IteratorA::AccessType::kElements;

static int const kAlignmentB = (cute::is_same<LayoutB,

layout::RowMajorInterleaved<32>>::value)

? 32

: (cute::is_same<LayoutB,

layout::RowMajorInterleaved<64>>::value)

? 64

: Mma::IteratorB::AccessType::kElements;

static int const kAlignmentC = (cute::is_same<LayoutC,

layout::ColumnMajorInterleaved<32>>::value)

? 32

: (cute::is_same<LayoutC,

layout::ColumnMajorInterleaved<64>>::value)

? 64

: Epilogue::OutputTileIterator::kElementsPerAccess;

bool isAMisaligned = false;

bool isBMisaligned = false;

bool isCMisaligned = false;

if (cute::is_same<LayoutA, layout::RowMajor>::value) {

isAMisaligned = problem_size.k() % kAlignmentA;

} else if (cute::is_same<LayoutA, layout::ColumnMajor>::value) {

isAMisaligned = problem_size.m() % kAlignmentA;

} else if (cute::is_same<LayoutA, layout::ColumnMajorInterleaved<32>>::value

|| cute::is_same<LayoutA, layout::ColumnMajorInterleaved<64>>::value) {

isAMisaligned = problem_size.k() % kAlignmentA;

}

if (cute::is_same<LayoutB, layout::RowMajor>::value) {

isBMisaligned = problem_size.n() % kAlignmentB;

} else if (cute::is_same<LayoutB, layout::ColumnMajor>::value) {

isBMisaligned = problem_size.k() % kAlignmentB;

} else if (cute::is_same<LayoutB, layout::RowMajorInterleaved<32>>::value

|| cute::is_same<LayoutB, layout::RowMajorInterleaved<64>>::value) {

isBMisaligned = problem_size.k() % kAlignmentB;

}

if (cute::is_same<LayoutC, layout::RowMajor>::value) {

isCMisaligned = problem_size.n() % kAlignmentC;

} else if (cute::is_same<LayoutC, layout::ColumnMajor>::value) {

isCMisaligned = problem_size.m() % kAlignmentC;

} else if (cute::is_same<LayoutC, layout::ColumnMajorInterleaved<32>>::value

|| cute::is_same<LayoutC, layout::ColumnMajorInterleaved<64>>::value) {

isCMisaligned = problem_size.n() % kAlignmentC;

}

if (isAMisaligned) {

CUTLASS_TRACE_HOST(" returning kErrorMisalignedOperand for A operand");

return Status::kErrorMisalignedOperand;

}

if (isBMisaligned) {

CUTLASS_TRACE_HOST(" returning kErrorMisalignedOperand for B operand");

return Status::kErrorMisalignedOperand;

}

if (isCMisaligned) {

CUTLASS_TRACE_HOST(" returning kErrorMisalignedOperand for C operand");

return Status::kErrorMisalignedOperand;

}

CUTLASS_TRACE_HOST(" returning kSuccess");

return Status::kSuccess;

}
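For the two plain layouts, the check above reduces to one rule: the contiguous dimension of each operand must be a multiple of its access width. The sketch below covers only RowMajor and ColumnMajor (no interleaved layouts). The enums and the function are hypothetical simplifications, not the CUTLASS signature.

```cpp
#include <cassert>

enum class Layout { kRowMajor, kColumnMajor };
enum class Status { kSuccess, kErrorMisalignedOperand };

// Simplified GemmUniversal::can_implement for RowMajor / ColumnMajor operands.
Status can_implement(int m, int n, int k,
                     Layout a, int align_a, Layout b, int align_b, Layout c, int align_c) {
  bool a_bad = (a == Layout::kRowMajor) ? (k % align_a) : (m % align_a);  // A is m x k
  bool b_bad = (b == Layout::kRowMajor) ? (n % align_b) : (k % align_b);  // B is k x n
  bool c_bad = (c == Layout::kRowMajor) ? (n % align_c) : (m % align_c);  // C is m x n
  return (a_bad || b_bad || c_bad) ? Status::kErrorMisalignedOperand : Status::kSuccess;
}
```

With half-precision operands and 128-bit accesses (8 elements), K = 1020 is rejected for a row-major A because 1020 is not a multiple of 8.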

GemmUniversal::can_implement

static Status can_implement(Arguments const &args) {

return can_implement(args.problem_size);

}

GemmUniversal::invoke

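invoke is a static member function that acts as a factory. It constructs a GemmUniversal and calls GemmUniversal::operator(), which does the actual work.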

public:

//

// Device-only API

//

// Factory invocation

CUTLASS_DEVICE

static void invoke(

Params const &params,

SharedStorage &shared_storage)

{

GemmUniversal op;

op(params, shared_storage);

}

GemmUniversal::operator()

Calls GemmUniversal::run_with_swizzle.

/// Executes one GEMM

CUTLASS_DEVICE

void operator()(Params const &params, SharedStorage &shared_storage) {

ThreadblockSwizzle threadblock_swizzle;

run_with_swizzle(params, shared_storage, threadblock_swizzle);

}

GemmUniversal::run_with_swizzle

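Implementation of the non-Stream-K modes: kGemm, kGemmSplitKParallel, kBatched and kArray.

Calls GemmIdentityThreadblockSwizzle::get_tile_offset to obtain the CTA's swizzled tile coordinate.

CTAs that fall outside the tiled problem return immediately.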

/// Executes one GEMM with an externally-provided swizzling function

CUTLASS_DEVICE

void run_with_swizzle(Params const &params, SharedStorage &shared_storage, ThreadblockSwizzle& threadblock_swizzle) {

cutlass::gemm::GemmCoord threadblock_tile_offset =

threadblock_swizzle.get_tile_offset(params.swizzle_log_tile);

// Early exit if CTA is out of range

if (params.grid_tiled_shape.m() <= threadblock_tile_offset.m() ||

params.grid_tiled_shape.n() <= threadblock_tile_offset.n()) {

return;

}

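offset_k is the starting offset of the current CTA along K.

problem_size_k is the end of the K range processed by the current CTA. It is an end coordinate, not a length.

In kGemm and kGemmSplitKParallel modes, several CTAs share the K dimension.

In kBatched and kArray modes, ptr_A and ptr_B are moved to the current matrix.

Why is a thread synchronization needed here?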

int offset_k = 0;

int problem_size_k = params.problem_size.k();

ElementA *ptr_A = static_cast<ElementA *>(params.ptr_A);

ElementB *ptr_B = static_cast<ElementB *>(params.ptr_B);

//

// Fetch pointers based on mode.

//

if (params.mode == GemmUniversalMode::kGemm ||

params.mode == GemmUniversalMode::kGemmSplitKParallel) {

if (threadblock_tile_offset.k() + 1 < params.grid_tiled_shape.k()) {

problem_size_k = (threadblock_tile_offset.k() + 1) * params.gemm_k_size;

}

offset_k = threadblock_tile_offset.k() * params.gemm_k_size;

}

else if (params.mode == GemmUniversalMode::kBatched) {

ptr_A += threadblock_tile_offset.k() * params.batch_stride_A;

ptr_B += threadblock_tile_offset.k() * params.batch_stride_B;

}

else if (params.mode == GemmUniversalMode::kArray) {

ptr_A = static_cast<ElementA * const *>(params.ptr_A)[threadblock_tile_offset.k()];

ptr_B = static_cast<ElementB * const *>(params.ptr_B)[threadblock_tile_offset.k()];

}

__syncthreads();

Computes the CTA's logical coordinates in the A and B matrices.

MmaMultistage::IteratorA is DefaultMma::IteratorA, i.e. PredicatedTileAccessIterator. IteratorB is the same kind of iterator.

// Compute initial location in logical coordinates

cutlass::MatrixCoord tb_offset_A{

threadblock_tile_offset.m() * Mma::Shape::kM,

offset_k,

};

cutlass::MatrixCoord tb_offset_B{

offset_k,

threadblock_tile_offset.n() * Mma::Shape::kN

};

// Compute position within threadblock

int thread_idx = threadIdx.x;

// Construct iterators to A and B operands

typename Mma::IteratorA iterator_A(

params.params_A,

ptr_A,

{params.problem_size.m(), problem_size_k},

thread_idx,

tb_offset_A,

params.ptr_gather_A_indices);

typename Mma::IteratorB iterator_B(

params.params_B,

ptr_B,

{problem_size_k, params.problem_size.n()},

thread_idx,

tb_offset_B,

params.ptr_gather_B_indices);

canonical_warp_idx_sync returns the warp index.

// Broadcast the warp_id computed by lane 0 to ensure dependent code

// is compiled as warp-uniform.

int warp_idx = canonical_warp_idx_sync();

int lane_idx = threadIdx.x % 32;

gemm_k_iterations is the number of mainloop iterations along K for this CTA.

MmaMultistage executes the main loop.

//

// Main loop

//

// Construct thread-scoped matrix multiply

Mma mma(shared_storage.main_loop, thread_idx, warp_idx, lane_idx);

typename Mma::FragmentC accumulators;

accumulators.clear();

// Compute threadblock-scoped matrix multiply-add

int gemm_k_iterations = (problem_size_k - offset_k + Mma::Shape::kK - 1) / Mma::Shape::kK;

// Compute threadblock-scoped matrix multiply-add

mma(

gemm_k_iterations,

accumulators,

iterator_A,

iterator_B,

accumulators);
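The per-CTA K range used above can be reproduced on the host. The sketch mirrors the kGemm / kGemmSplitKParallel branch. `KRange` and `cta_k_range` are hypothetical names. `end_k` plays the role of problem_size_k, which is an end coordinate rather than a length.

```cpp
#include <cassert>

struct KRange { int offset_k; int end_k; int iterations; };

// CTA k_idx covers [k_idx * gemm_k_size, end_k); the last CTA runs to problem_k.
KRange cta_k_range(int k_idx, int k_tiles, int gemm_k_size, int problem_k, int tile_k) {
  KRange r{k_idx * gemm_k_size, problem_k, 0};
  if (k_idx + 1 < k_tiles) r.end_k = (k_idx + 1) * gemm_k_size;
  // Same formula as gemm_k_iterations; a partial last tile is handled by the
  // predicated iterators, whose extent ends at end_k.
  r.iterations = (r.end_k - r.offset_k + tile_k - 1) / tile_k;
  return r;
}
```

With K = 256 split into slices of 88 and a 32-wide K tile, the three CTAs cover [0, 88), [88, 176) and [176, 256), each in 3 iterations.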

Epilogue

EpilogueOutputOp is Epilogue::OutputOp, i.e. EpilogueOp, i.e. LinearCombination.

//

// Epilogue

//

EpilogueOutputOp output_op(params.output_op);

//

// Masked tile iterators constructed from members

//

threadblock_tile_offset = threadblock_swizzle.get_tile_offset(params.swizzle_log_tile);

//assume identity swizzle

MatrixCoord threadblock_offset(

threadblock_tile_offset.m() * Mma::Shape::kM,

threadblock_tile_offset.n() * Mma::Shape::kN

);

int block_idx = threadblock_tile_offset.m() + threadblock_tile_offset.n() * params.grid_tiled_shape.m();

ElementC *ptr_C = static_cast<ElementC *>(params.ptr_C);

ElementC *ptr_D = static_cast<ElementC *>(params.ptr_D);

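Creates the inter-CTA synchronization semaphore (Semaphore).

In serial split-K (kGemm with grid_tiled_shape.k() > 1), Semaphore::fetch loads the lock value up front without blocking.

LinearCombination::set_k_partition sets beta_ according to this CTA's k index in the reduction. Every CTA except the first uses beta = 1.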

Recall the GEMM formula:

$$D = \alpha AB + \beta C$$

So the first CTA applies the user-supplied beta to the C matrix. Every subsequent CTA loads D from global memory and accumulates its partial sum into it.

Epilogue::OutputTileIterator is DefaultEpilogueTensorOp::OutputTileIterator, i.e. DefaultEpilogueTensorOp::PackedOutputTileIterator, i.e. PredicatedTileIterator.

//

// Fetch pointers based on mode.

//

// Construct the semaphore.

Semaphore semaphore(params.semaphore + block_idx, thread_idx);

if (params.mode == GemmUniversalMode::kGemm) {

// If performing a reduction via split-K, fetch the initial synchronization

if (params.grid_tiled_shape.k() > 1) {

// Fetch the synchronization lock initially but do not block.

semaphore.fetch();

// Indicate which position in a serial reduction the output operator is currently updating

output_op.set_k_partition(threadblock_tile_offset.k(), params.grid_tiled_shape.k());

}

}

else if (params.mode == GemmUniversalMode::kGemmSplitKParallel) {

ptr_D += threadblock_tile_offset.k() * params.batch_stride_D;

}

else if (params.mode == GemmUniversalMode::kBatched) {

ptr_C += threadblock_tile_offset.k() * params.batch_stride_C;

ptr_D += threadblock_tile_offset.k() * params.batch_stride_D;

}

else if (params.mode == GemmUniversalMode::kArray) {

ptr_C = static_cast<ElementC * const *>(params.ptr_C)[threadblock_tile_offset.k()];

ptr_D = static_cast<ElementC * const *>(params.ptr_D)[threadblock_tile_offset.k()];

}

// Tile iterator loading from source tensor.

typename Epilogue::OutputTileIterator iterator_C(

params.params_C,

ptr_C,

params.problem_size.mn(),

thread_idx,

threadblock_offset,

params.ptr_scatter_D_indices

);

// Tile iterator writing to destination tensor.

typename Epilogue::OutputTileIterator iterator_D(

params.params_D,

ptr_D,

params.problem_size.mn(),

thread_idx,

threadblock_offset,

params.ptr_scatter_D_indices

);

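Creates an Epilogue object.

For every CTA other than the first, the source operand is switched from C to D, so the CTA continues from the result written by the previous CTA.

Semaphore::wait blocks until the semaphore equals this CTA's k index, i.e. until all earlier K slices have been written.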

Epilogue epilogue(

shared_storage.epilogue,

thread_idx,

warp_idx,

lane_idx);

// Wait on the semaphore - this latency may have been covered by iterator construction

if (params.mode == GemmUniversalMode::kGemm && params.grid_tiled_shape.k() > 1) {

// For subsequent threadblocks, the source matrix is held in the 'D' tensor.

if (threadblock_tile_offset.k()) {

iterator_C = iterator_D;

}

semaphore.wait(threadblock_tile_offset.k());

}

// Execute the epilogue operator to update the destination tensor.

epilogue(

output_op,

iterator_D,

accumulators,

iterator_C);

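Semaphore::release releases the semaphore. A non-final CTA sets it to k + 1 to hand off to the next slice. The final CTA resets it to 0 so the workspace can be reused by subsequent launches.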

//

// Release the semaphore

//

if (params.mode == GemmUniversalMode::kGemm && params.grid_tiled_shape.k() > 1) {

int lock = 0;

if (params.grid_tiled_shape.k() == threadblock_tile_offset.k() + 1) {

// The final threadblock resets the semaphore for subsequent grids.

lock = 0;

}

else {

// Otherwise, the semaphore is incremented

lock = threadblock_tile_offset.k() + 1;

}

semaphore.release(lock);

}

}

};
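The serial split-K protocol (fetch, wait(k), epilogue with the adjusted beta, release) can be simulated on the host for a single output element. This is an illustration only: `std::thread` stands in for CTAs, `std::atomic<int>` for the workspace semaphore, and `serial_split_k` is a hypothetical function, not the CUTLASS Semaphore class.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// partials[k] is the accumulator produced by the CTA with K index k.
float serial_split_k(const std::vector<float>& partials, float alpha, float beta, float c) {
  const int k_tiles = static_cast<int>(partials.size());
  std::atomic<int> lock{0};  // zeroed, as init_workspace() does with cudaMemsetAsync
  float d = 0.0f;            // the output element in global memory

  std::vector<std::thread> ctas;
  // Launch in reverse order: the semaphore, not launch order, serializes the slices.
  for (int k = k_tiles - 1; k >= 0; --k) {
    ctas.emplace_back([&, k] {
      while (lock.load(std::memory_order_acquire) != k) std::this_thread::yield();  // wait(k)
      // set_k_partition: the first slice reads C with the user's beta;
      // later slices read D (iterator_C = iterator_D) with beta = 1.
      float source = (k == 0) ? c : d;
      float b = (k == 0) ? beta : 1.0f;
      d = alpha * partials[k] + b * source;
      // release: hand off to k + 1, or reset to 0 after the last slice.
      lock.store(k + 1 == k_tiles ? 0 : k + 1, std::memory_order_release);
    });
  }
  for (auto& t : ctas) t.join();
  return d;
}
```

With partials {1, 2, 3}, alpha = 2, beta = 0.5 and C = 10, the result is 2 * 6 + 0.5 * 10 = 17, the same as an unsplit GEMM.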

GemmUniversalStreamk

template <

typename Mma_, ///! Threadblock-scoped matrix multiply-accumulate

typename Epilogue_, ///! Epilogue

typename ThreadblockSwizzle_ ///! Threadblock mapping function

>

struct GemmUniversalStreamk {

public:

//

// Types and constants

//

using Mma = Mma_;

using Epilogue = Epilogue_;

using EpilogueOutputOp = typename Epilogue::OutputOp;

using ThreadblockSwizzle = ThreadblockSwizzle_;

using ElementA = typename Mma::IteratorA::Element;

using LayoutA = typename Mma::IteratorA::Layout;

using ElementB = typename Mma::IteratorB::Element;

using LayoutB = typename Mma::IteratorB::Layout;

using ElementC = typename Epilogue::OutputTileIterator::Element;

using LayoutC = typename Epilogue::OutputTileIterator::Layout;

/// The per-thread tile of raw accumulators

using AccumulatorTile = typename Mma::FragmentC;

static ComplexTransform const kTransformA = Mma::kTransformA;

static ComplexTransform const kTransformB = Mma::kTransformB;

using Operator = typename Mma::Operator;

using OperatorClass = typename Mma::Operator::OperatorClass;

using ThreadblockShape = typename Mma::Shape;

using WarpShape = typename Mma::Operator::Shape;

using InstructionShape = typename Mma::Policy::Operator::InstructionShape;

using ArchTag = typename Mma::ArchTag;

static int const kStages = Mma::kStages;

static int const kAlignmentA = Mma::IteratorA::AccessType::kElements;

static int const kAlignmentB = Mma::IteratorB::AccessType::kElements;

static int const kAlignmentC = Epilogue::OutputTileIterator::kElementsPerAccess;

/// Warp count (concept: GemmShape)

using WarpCount = typename Mma::WarpCount;

static int const kThreadCount = 32 * WarpCount::kCount;

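__NV_STD_MAX is a macro intended for constant expressions. The equivalent C++ functionality requires compiler support for constexpr. Macros of this kind are prefixed with __NV_STD_*.

kWorkspaceBytesPerBlock is the larger of the two requirements: the accumulator tiles of all threads in the CTA (Mma), and Epilogue::kWorkspaceBytesPerBlock.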

/// Workspace bytes per thread block

static size_t const kWorkspaceBytesPerBlock =

__NV_STD_MAX(

kThreadCount * sizeof(AccumulatorTile),

Epilogue::kWorkspaceBytesPerBlock);

/// Block-striped reduction utility

using BlockStripedReduceT = BlockStripedReduce<kThreadCount, AccumulatorTile>;
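On a compiler with C++11 constexpr, a constexpr function does the job of __NV_STD_MAX. The configuration below is hypothetical: 4 warps, a 128 x 128 CTA tile and float accumulators. The epilogue workspace value is invented purely to exercise the max.

```cpp
#include <cassert>
#include <cstddef>

// constexpr replacement for the __NV_STD_MAX macro.
constexpr std::size_t const_max_sz(std::size_t a, std::size_t b) { return a > b ? a : b; }

// Hypothetical configuration (not taken from the example program).
constexpr int kThreadCount = 32 * 4;                                   // 4 warps
constexpr std::size_t kAccumPerThread = 128 * 128 / kThreadCount;      // 128 accumulators
constexpr std::size_t kAccumTileBytes = kAccumPerThread * sizeof(float);
constexpr std::size_t kEpilogueWorkspaceBytes = 16 * 1024;             // invented value

// Per-CTA workspace must hold whichever requirement is larger.
constexpr std::size_t kWorkspaceBytesPerBlock =
    const_max_sz(kThreadCount * kAccumTileBytes, kEpilogueWorkspaceBytes);
```

Here each CTA needs 128 threads * 128 floats * 4 bytes = 64 KiB.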

GemmUniversalStreamk::Arguments

//

// Structures

//

/// Argument structure

struct Arguments {

//

// Data members

//

GemmUniversalMode mode = GemmUniversalMode::kGemm;

GemmCoord problem_size {};

int batch_count {1}; // Either (mode == GemmUniversalMode::kBatched) the batch count, or (mode == GemmUniversalMode::kGemm) the tile-splitting factor

typename EpilogueOutputOp::Params epilogue{};

void const * ptr_A = nullptr;

void const * ptr_B = nullptr;

void const * ptr_C = nullptr;

void * ptr_D = nullptr;

int64_t batch_stride_A{0};

int64_t batch_stride_B{0};

int64_t batch_stride_C{0};

int64_t batch_stride_D{0};

typename LayoutA::Stride stride_a{0};

typename LayoutB::Stride stride_b{0};

typename LayoutC::Stride stride_c{0};

typename LayoutC::Stride stride_d{0};

typename LayoutA::Stride::LongIndex lda{0};

typename LayoutB::Stride::LongIndex ldb{0};

typename LayoutC::Stride::LongIndex ldc{0};

typename LayoutC::Stride::LongIndex ldd{0};

int avail_sms{-1}; /// The number of SMs that StreamK dispatch heuristics will attempt to load-balance across (-1 defaults to device width, 1 implies classic data-parallel scheduling)

//

// Methods

//

/// Default Constructor

Arguments() = default;

GemmUniversalStreamk::Arguments::Arguments

Overload that takes strides as RowMajor::Stride, i.e. a Coord.

/// Constructor

Arguments(

GemmUniversalMode mode,

GemmCoord problem_size,

int batch_split, /// Either (mode == GemmUniversalMode::kBatched) the batch count, or (mode == GemmUniversalMode::kGemm) the tile-splitting factor (1 defaults to StreamK, >1 emulates Split-K)

typename EpilogueOutputOp::Params epilogue,

void const * ptr_A,

void const * ptr_B,

void const * ptr_C,

void * ptr_D,

int64_t batch_stride_A,

int64_t batch_stride_B,

int64_t batch_stride_C,

int64_t batch_stride_D,

typename LayoutA::Stride stride_a,

typename LayoutB::Stride stride_b,

typename LayoutC::Stride stride_c,

typename LayoutC::Stride stride_d,

int avail_sms = -1 /// The number of SMs that StreamK dispatch heuristics will attempt to load-balance across (-1 defaults to device width, 1 implies classic data-parallel scheduling)

):

mode(mode),

problem_size(problem_size),

batch_count(batch_split),

epilogue(epilogue),

ptr_A(ptr_A), ptr_B(ptr_B), ptr_C(ptr_C), ptr_D(ptr_D),

batch_stride_A(batch_stride_A), batch_stride_B(batch_stride_B), batch_stride_C(batch_stride_C), batch_stride_D(batch_stride_D),

stride_a(stride_a), stride_b(stride_b), stride_c(stride_c), stride_d(stride_d), avail_sms(avail_sms)

{

CUTLASS_TRACE_HOST("GemmUniversalStreamk::Arguments::Arguments() - problem_size: " << problem_size);

}

GemmUniversalStreamk::Arguments::Arguments

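Overload that takes leading dimensions as RowMajor::Stride::LongIndex. The constructor body converts them into strides with make_Coord.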

/// Constructor

Arguments(

GemmUniversalMode mode,

GemmCoord problem_size,

int batch_split, /// Either (mode == GemmUniversalMode::kBatched) the batch count, or (mode == GemmUniversalMode::kGemm) the tile-splitting factor (1 defaults to StreamK, >1 emulates Split-K)

typename EpilogueOutputOp::Params epilogue,

void const * ptr_A,

void const * ptr_B,

void const * ptr_C,

void * ptr_D,

int64_t batch_stride_A,

int64_t batch_stride_B,

int64_t batch_stride_C,

int64_t batch_stride_D,

typename LayoutA::Stride::LongIndex lda,

typename LayoutB::Stride::LongIndex ldb,

typename LayoutC::Stride::LongIndex ldc,

typename LayoutC::Stride::LongIndex ldd,

int avail_sms = -1 /// The number of SMs that StreamK dispatch heuristics will attempt to load-balance across (-1 defaults to device width, 1 implies classic data-parallel scheduling)

):

mode(mode),

problem_size(problem_size),

batch_count(batch_split),

epilogue(epilogue),

ptr_A(ptr_A), ptr_B(ptr_B), ptr_C(ptr_C), ptr_D(ptr_D),

batch_stride_A(batch_stride_A), batch_stride_B(batch_stride_B), batch_stride_C(batch_stride_C), batch_stride_D(batch_stride_D),

lda(lda), ldb(ldb), ldc(ldc), ldd(ldd), avail_sms(avail_sms)

{

stride_a = make_Coord(lda);

stride_b = make_Coord(ldb);

stride_c = make_Coord(ldc);

stride_d = make_Coord(ldd);

CUTLASS_TRACE_HOST("GemmUniversalStreamk::Arguments::Arguments() - problem_size: " << problem_size);

}

GemmUniversalStreamk::Arguments::transposed_problem

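Swaps the A and B operands. It exchanges M and N in problem_size and swaps the pointers, leading dimensions, strides and batch strides of A and B. Since C^T = B^T A^T, the result is the equivalent problem that produces the transposed output.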

/// Returns arguments for the transposed problem

Arguments transposed_problem() const

{

Arguments args(*this);

std::swap(args.problem_size.m(), args.problem_size.n());

std::swap(args.ptr_A, args.ptr_B);

std::swap(args.lda, args.ldb);

std::swap(args.stride_a, args.stride_b);

std::swap(args.batch_stride_A, args.batch_stride_B);

return args;

}

};
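Why swapping A and B is enough: a row-major M×N matrix occupies memory exactly like a column-major N×M matrix holding its transpose, and C^T = B^T A^T. A problem can therefore be handed to a kernel with the opposite layout by exchanging the operands, their leading dimensions, and M/N. This is the exchange transposed_problem() performs. In CUTLASS 2.x it is typically triggered by the device-level adapter when the requested output layout does not match the kernel's. The CPU sketch below checks the identity with a naive column-major GEMM. gemm_col_major and gemm_row_major_via_transpose are hypothetical helpers, not CUTLASS APIs.

```cpp
#include <vector>

// Naive column-major GEMM: C(m x n) = A(m x k) * B(k x n).
// Element (r, c) of a column-major matrix X lives at X[r + c * ldx].
void gemm_col_major(int m, int n, int k,
                    const float* A, int lda,
                    const float* B, int ldb,
                    float* C, int ldc) {
  for (int c = 0; c < n; ++c) {
    for (int r = 0; r < m; ++r) {
      float acc = 0.f;
      for (int p = 0; p < k; ++p) acc += A[r + p * lda] * B[p + c * ldb];
      C[r + c * ldc] = acc;
    }
  }
}

// Row-major C(M x N) = A(M x K) * B(K x N), computed with the column-major kernel.
// Viewed as column-major, row-major X is X^T, and C^T = B^T * A^T, so we swap
// the operands, their leading dimensions, and M/N (as transposed_problem() does).
void gemm_row_major_via_transpose(int M, int N, int K,
                                  const float* A, int lda,
                                  const float* B, int ldb,
                                  float* C, int ldc) {
  gemm_col_major(N, M, K, B, ldb, A, lda, C, ldc);
}
```

With row-major A = {1,2,3, 4,5,6} (2×3, lda = 3) and B = {7,8, 9,10, 11,12} (3×2, ldb = 2), the call gemm_row_major_via_transpose(2, 2, 3, A, 3, B, 2, C, 2) fills row-major C with {58, 64, 139, 154}.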

GemmUniversalStreamk::Params

/// Parameters structure

struct Params

{

public:

//

// Data members

//

void * ptr_A = nullptr;

void * ptr_B = nullptr;

typename Mma::IteratorA::Params params_A{};

typename Mma::IteratorB::Params params_B{};

int64_t batch_stride_A{0};

int64_t batch_stride_B{0};

GemmUniversalMode mode = GemmUniversalMode::kGemm;

ThreadblockSwizzle block_mapping{};

void *barrier_workspace = nullptr;

void *partials_workspace = nullptr;

typename EpilogueOutputOp::Params output_op{};

void * ptr_D = nullptr;

void * ptr_C = nullptr;

typename Epilogue::OutputTileIterator::Params params_D{};

typename Epilogue::OutputTileIterator::Params params_C{};

int64_t batch_stride_D{0};

int64_t batch_stride_C{0};

GemmUniversalStreamk::Params::cacheline_align_up

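Defines a function-local static constant CACHELINE_SIZE (128 bytes).

Rounds the given allocation size up to the nearest cache-line boundary, which reduces cache conflicts between workspace regions.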

protected:

//

// Host-only dispatch-utilities

//

/// Pad the given allocation size up to the nearest cache line

static size_t cacheline_align_up(size_t size)

{

static const int CACHELINE_SIZE = 128;

return (size + CACHELINE_SIZE - 1) / CACHELINE_SIZE * CACHELINE_SIZE;

}
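The expression (size + CACHELINE_SIZE - 1) / CACHELINE_SIZE * CACHELINE_SIZE is the usual integer round-up to a multiple. Integer division truncates, so adding CACHELINE_SIZE - 1 first turns truncation into rounding up. The standalone constexpr copy below makes the behavior easy to check at compile time. The name align_up_128 is hypothetical.

```cpp
#include <cstddef>

// Same line size as CACHELINE_SIZE in cacheline_align_up.
constexpr std::size_t kCachelineSize = 128;

// Standalone copy of the round-up used by cacheline_align_up.
constexpr std::size_t align_up_128(std::size_t size) {
  return (size + kCachelineSize - 1) / kCachelineSize * kCachelineSize;
}

static_assert(align_up_128(0) == 0, "zero stays zero");
static_assert(align_up_128(1) == 128, "a partial line rounds up");
static_assert(align_up_128(128) == 128, "an exact multiple is unchanged");
static_assert(align_up_128(129) == 256, "one byte over spills into the next line");
```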

GemmUniversalStreamk::Params::get_barrier_workspace_size

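Computes the workspace size needed for the barrier, i.e. the synchronization flags.

ThreadblockSwizzleStreamK::sk_regions returns the number of SK regions.

ThreadblockSwizzleStreamK::sk_blocks_per_region returns the number of SK CTAs in each region.

For atomic reduction, each SK CTA needs one synchronization flag; for parallel reduction, each reduction CTA needs its own synchronization flag. The number of flags allocated is therefore the larger of the two counts.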

/// Get the workspace size needed for barrier

size_t get_barrier_workspace_size() const

{

// For atomic reduction, each SK-block needs a synchronization flag. For parallel reduction,

// each reduction block needs its own synchronization flag.

int sk_blocks = block_mapping.sk_regions() * block_mapping.sk_blocks_per_region();

int num_flags = fast_max(sk_blocks, block_mapping.reduction_blocks);

return cacheline_align_up(sizeof(typename Barrier::T) * num_flags);

}
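The barrier size is max(SK CTAs, reduction CTAs) flags times the flag size, rounded up to a cache line. The sketch below evaluates it with hypothetical inputs. The default 4-byte flag assumes Barrier::T is int, which should be checked against the CUTLASS version in use. barrier_workspace_bytes is an illustrative name, not a CUTLASS function.

```cpp
#include <algorithm>
#include <cstddef>

// Hypothetical mirror of get_barrier_workspace_size().
// flag_bytes stands in for sizeof(typename Barrier::T) (assumed 4 bytes).
std::size_t barrier_workspace_bytes(int sk_regions, int sk_blocks_per_region,
                                    int reduction_blocks,
                                    std::size_t flag_bytes = 4) {
  const std::size_t kLine = 128;
  int sk_blocks = sk_regions * sk_blocks_per_region;
  // Atomic reduction: one flag per SK CTA; parallel reduction: one per reduction CTA.
  int num_flags = std::max(sk_blocks, reduction_blocks);
  std::size_t bytes = flag_bytes * static_cast<std::size_t>(num_flags);
  return (bytes + kLine - 1) / kLine * kLine;  // same round-up as cacheline_align_up
}
```

For instance, one region of 108 SK CTAs and no reduction CTAs needs 108 × 4 = 432 bytes, which rounds up to 512.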

GemmUniversalStreamk::Params::get_partials_workspace_size

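ThreadblockSwizzleStreamK::sk_regions returns the number of SK regions.

ThreadblockSwizzleStreamK::sk_blocks_per_region returns the number of SK CTAs in each region.

kWorkspaceBytesPerBlock is the space each CTA needs to store its partial accumulation results.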

/// Get the workspace size needed for intermediate partial sums

size_t get_partials_workspace_size() const

{

int sk_blocks = block_mapping.sk_regions() * block_mapping.sk_blocks_per_region();

return cacheline_align_up(kWorkspaceBytesPerBlock * sk_blocks);

}
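Each SK CTA reserves one slot of kWorkspaceBytesPerBlock bytes. To get a feel for the magnitude, the sketch below assumes (hypothetically) that one slot holds a full fp32 accumulator tile of a 128×128 threadblock, i.e. 128 × 128 × 4 = 65536 bytes. The real constant depends on the kernel's tile shape, accumulator type and epilogue. partials_workspace_bytes and kAssumedSlotBytes are illustrative names.

```cpp
#include <cstddef>

// Hypothetical mirror of get_partials_workspace_size().
// bytes_per_block stands in for kWorkspaceBytesPerBlock.
std::size_t partials_workspace_bytes(int sk_regions, int sk_blocks_per_region,
                                     std::size_t bytes_per_block) {
  const std::size_t kLine = 128;
  std::size_t sk_blocks = static_cast<std::size_t>(sk_regions) *
                          static_cast<std::size_t>(sk_blocks_per_region);
  std::size_t bytes = bytes_per_block * sk_blocks;
  return (bytes + kLine - 1) / kLine * kLine;  // same round-up as cacheline_align_up
}

// Assumed slot size: one 128x128 fp32 accumulator tile.
constexpr std::size_t kAssumedSlotBytes = 128 * 128 * 4;  // 65536 bytes
```

Under these assumptions, one region of 108 SK CTAs needs 108 × 65536 = 7077888 bytes (6.75 MiB) of partials workspace. This is far more than the barrier flags, so the partials dominate the total workspace.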

public:

//

// Host dispatch API

//

/// Default constructor

Params() = default;

GemmUniversalStreamk::Params::Params

/// Constructor

Params(

Arguments const &args, /// GEMM application arguments

int device_sms, /// Number of SMs on the device

int sm_occupancy) /// Kernel SM occupancy (in thread blocks)

:

params_A(args.lda ? make_Coord_with_padding<LayoutA::kStrideRank>(args.lda) : args.stride_a),

params_B(args.ldb ? make_Coord_with_padding<LayoutB::kStrideRank>(args.ldb) : args.stride_b),

params_C(args.ldc ? make_Coord_with_padding<LayoutC::kStrideRank>(args.ldc) : args.stride_c),

params_D(args.ldd ? make_Coord_with_padding<LayoutC::kStrideRank>(args.ldd) : args.stride_d),

output_op(args.epilogue),

mode(args.mode),

ptr_A(const_cast<void *>(args.ptr_A)),

ptr_B(const_cast<void *>(args.ptr_B)),

ptr_C(const_cast<void *>(args.ptr_C)),

ptr_D(args.ptr_D),

batch_stride_A(args.batch_stride_A),

batch_stride_B(args.batch_stride_B),

batch_stride_C(args.batch_stride_C),

batch_stride_D(args.batch_stride_D),

barrier_workspace(nullptr),

partials_workspace(nullptr)

{

// Number of SMs to make available for StreamK decomposition

int avail_sms = (args.avail_sms == -1) ?

device_sms :

fast_min(args.avail_sms, device_sms);

Construct a ThreadblockSwizzleStreamK object as the block mapping.

// Initialize the block mapping structure

block_mapping = ThreadblockSwizzle(

args.mode,

args.problem_size,

{ThreadblockShape::kM, ThreadblockShape::kN, ThreadblockShape::kK},

args.batch_count,

sm_occupancy,

device_sms,

avail_sms,

sizeof(ElementA),

sizeof(ElementB),

sizeof(ElementC),

Epilogue::kAccumulatorFragments);

}
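In the initializer list above, a non-zero lda/ldb/ldc/ldd takes priority. Only when it is zero is the corresponding Stride argument used, which lets both Arguments overloads feed the same Params constructor. The sketch below shows the rule in simplified form. HypotheticalArgs and pick_stride are illustrative names, and the stride is reduced to a single int64_t. Real CUTLASS strides are Coord objects of rank kStrideRank, built by make_Coord_with_padding.

```cpp
#include <cstdint>

// Hypothetical, simplified stand-in for the two ways Arguments can carry
// a leading dimension: a scalar ld (LongIndex overload) or a Stride.
struct HypotheticalArgs {
  std::int64_t lda = 0;       // 0 means "not provided"
  std::int64_t stride_a = 0;  // used only when lda == 0
};

// Mirrors params_A(args.lda ? make_Coord_with_padding<...>(args.lda) : args.stride_a)
// for the rank-1 case, where the stride is just the leading dimension.
std::int64_t pick_stride(const HypotheticalArgs& args) {
  return args.lda ? args.lda : args.stride_a;
}
```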

GemmUniversalStreamk::Params::get_workspace_size

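Calls GemmUniversalStreamk::Params::get_barrier_workspace_size and GemmUniversalStreamk::Params::get_partials_workspace_size and returns their sum, i.e. the total workspace size in bytes. Because each part is already padded to a cache line, the total is also a multiple of 128 bytes.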

/// Returns the workspace size (in bytes) needed for these parameters

size_t get_workspace_size() const

{

return

get_barrier_workspace_size() +

get_partials_workspace_size();

}

GemmUniversalStreamk::Params::init_workspace

/// Assign and initialize the specified workspace buffer. Assumes

/// the memory allocated to workspace is at least as large as get_workspace_size().

Status init_workspace(

void *workspace,

cudaStream_t stream = nullptr)

{

uint8_t *ptr = static_cast<uint8_t*>(workspace);